Decision Tree Intuition: From Concept to Application - Analysis Report

Added on 2022/08/21

Report

AI Summary

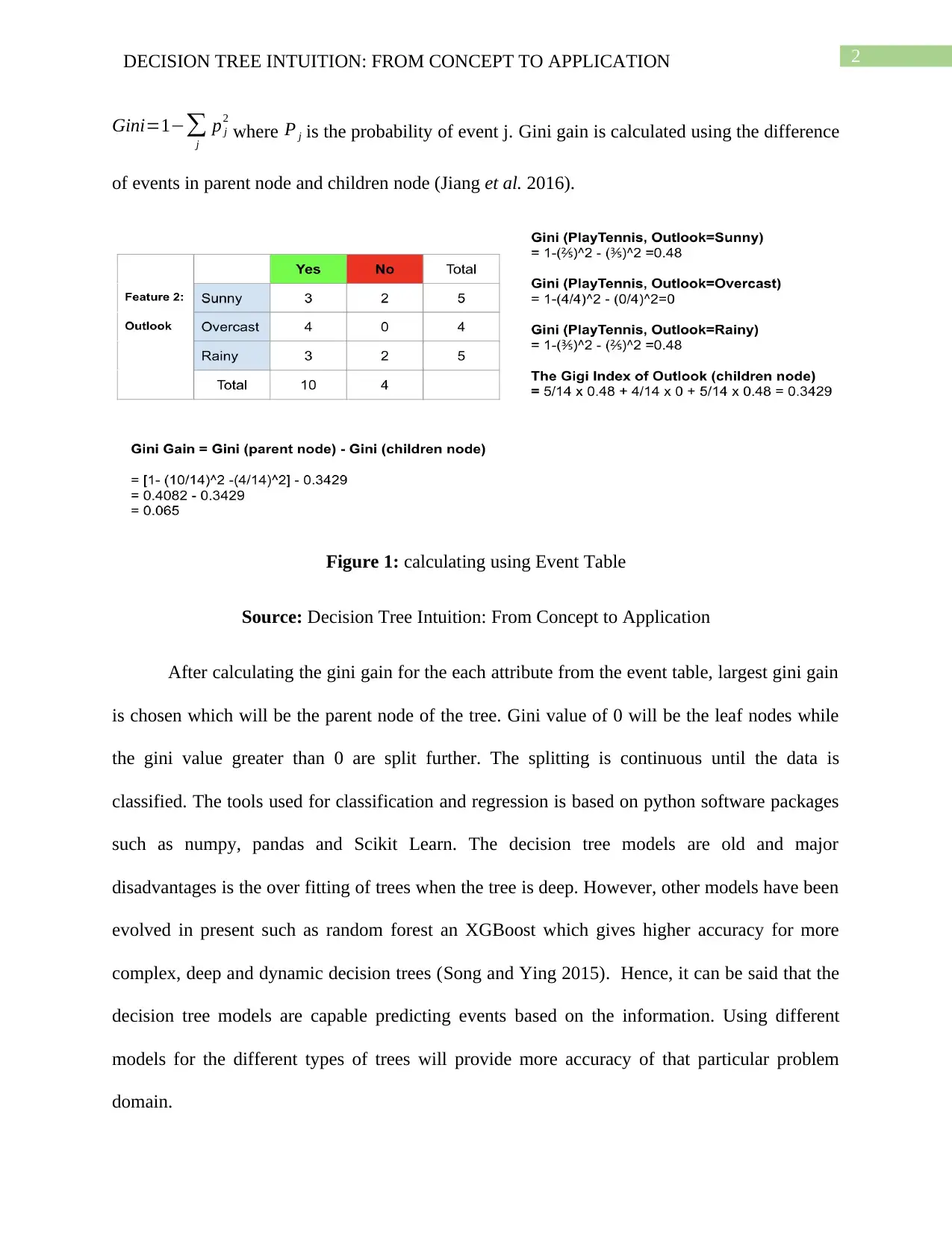

This report provides an overview of decision trees, a machine learning method used for data analysis and prediction. It explains the core concepts, including root nodes, splitting, and leaf nodes, and covers two tree-building algorithms: ID3 (Iterative Dichotomiser 3), which chooses splits using entropy and information gain, and CART (Classification and Regression Trees), which uses the Gini index. The report discusses the advantages and disadvantages of decision tree models, including the risk of overfitting, and notes the development of more advanced tree-based models such as random forests and XGBoost. It also mentions Python packages such as NumPy, pandas, and scikit-learn for implementing these algorithms. Finally, the report emphasizes the importance of selecting a model that suits the specific problem domain in order to improve prediction accuracy.
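The split criteria named in the summary can be made concrete with a small sketch. The code below is an illustration only, not taken from the report: the function names `entropy`, `gini`, and `information_gain` and the toy labels are assumptions chosen for this example. It uses the standard definitions of Shannon entropy, Gini impurity, and information gain as the parent's entropy minus the size-weighted entropy of the child nodes.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def information_gain(parent, children):
    """Entropy of the parent minus the size-weighted entropy of the children."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


# Hypothetical toy data: a perfectly mixed parent node and a split
# that separates the two classes completely.
parent = ["yes", "yes", "no", "no"]
split = [["yes", "yes"], ["no", "no"]]

parent_entropy = entropy(parent)
parent_gini = gini(parent)
gain = information_gain(parent, split)
```

In this toy case the split produces pure child nodes, so the information gain equals the parent's full entropy. An ID3-style learner would prefer the attribute with the highest gain, while a CART-style learner would compare candidate splits by their weighted Gini impurity instead.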
