Deep Neural Networks: Transferability of Features Research Proposal

Added on 2020/04/13

Project

AI Summary

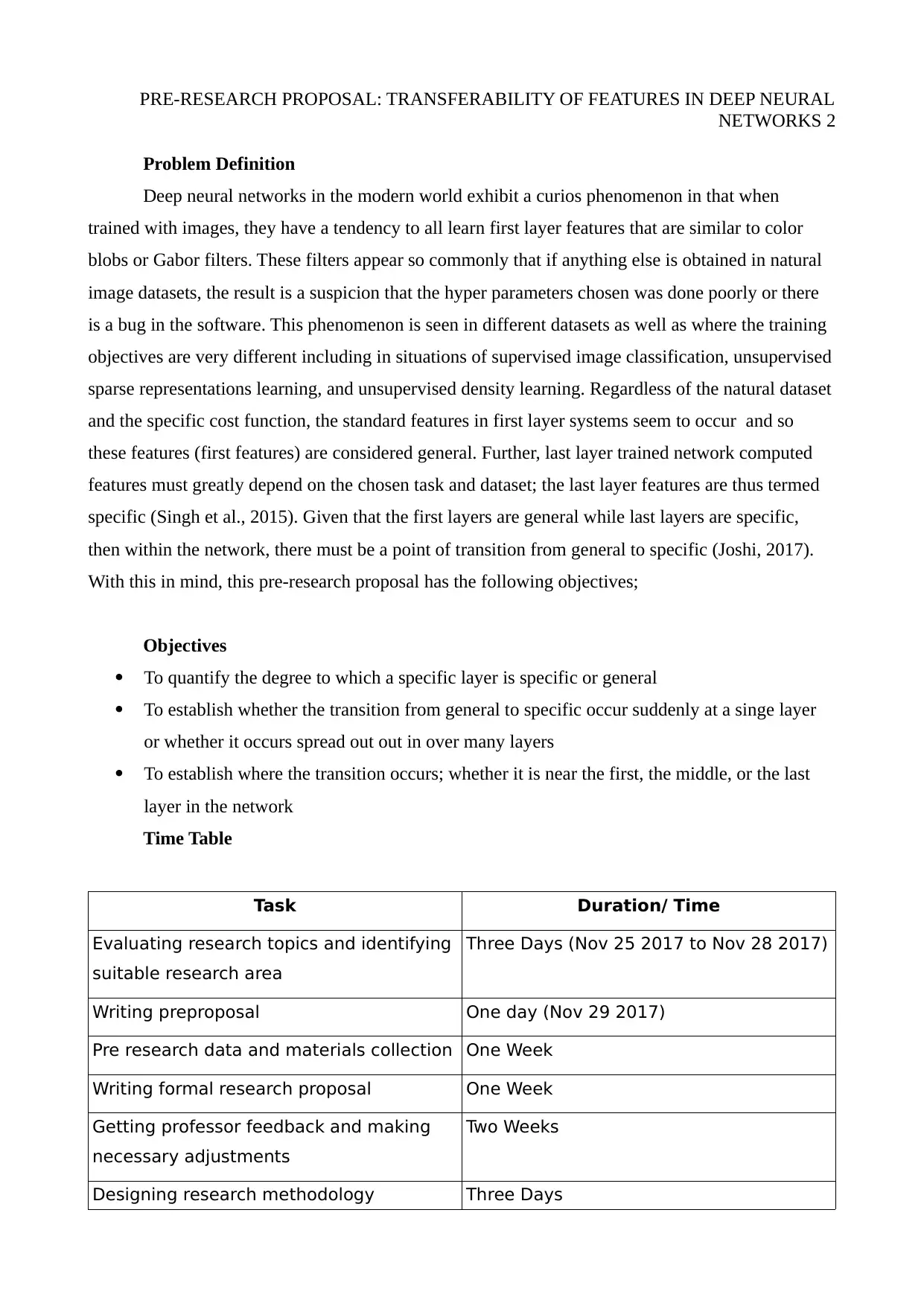

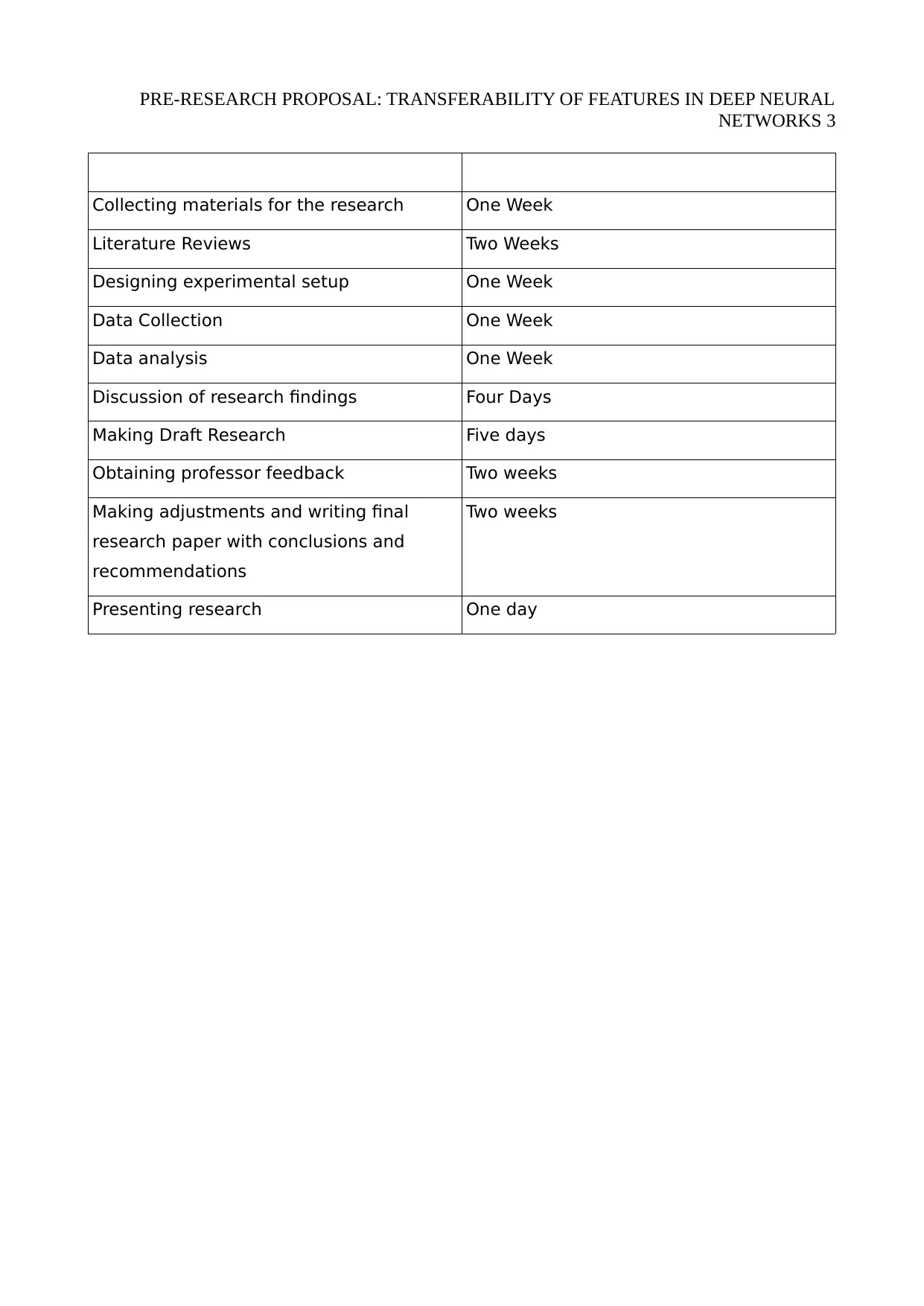

This document presents a pre-research proposal on the transferability of features in deep neural networks. The proposal starts from the observation that deep neural networks trained on images tend to learn similar first-layer features, such as Gabor filters, regardless of the specific dataset or training objective. The research aims to quantify how general or specific each layer of a network is, to determine whether the transition from general to specific features is gradual or abrupt, and to identify where in the network this transition occurs. The proposal includes a detailed timeline covering each stage of the research: evaluating candidate research topics, writing the proposal, designing the methodology, collecting and analyzing data, and writing the final research paper. It draws on relevant literature, including Joshi (2017) and Singh et al. (2015), to ground its background and objectives. The goal is a better understanding of how features are learned and transferred in deep learning models, with implications for network design and optimization.
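One way the layer-by-layer analysis could be made concrete is sketched here in Python. The sketch assumes, as an illustration not stated in the summary, a layer-transfer protocol: the first n layers of a network trained on a source task are copied into a network for a target task and frozen, the remaining layers are retrained, and the target accuracy is recorded for each n. The function names `generality_profile` and `locate_transition`, and the `abrupt_share` threshold of 0.5, are hypothetical choices rather than part of the proposal.

```python
from typing import List, Sequence, Tuple


def generality_profile(transfer_acc: Sequence[float], baseline_acc: float) -> List[float]:
    """Relative target accuracy for n = 0..L transferred layers.

    Hypothetical protocol: transfer_acc[n - 1] is the target-task accuracy when
    the first n layers are copied from the source network and frozen;
    baseline_acc is the accuracy of a network trained on the target task alone.
    """
    if baseline_acc <= 0:
        raise ValueError("baseline_acc must be positive")
    # Prepend 1.0 (n = 0, nothing transferred) so layer 1 can also register a drop.
    return [1.0] + [acc / baseline_acc for acc in transfer_acc]


def locate_transition(profile: Sequence[float], abrupt_share: float = 0.5) -> Tuple[int, bool]:
    """Return (layer whose transfer causes the largest drop, whether it looks abrupt).

    abrupt_share is a hypothetical threshold: the transition is labeled abrupt
    when a single layer accounts for at least this fraction of the total decline.
    """
    if len(profile) < 2:
        raise ValueError("profile must cover at least one transferred layer")
    # drops[i] is the change in relative accuracy caused by adding layer i + 1.
    drops = [profile[i] - profile[i + 1] for i in range(len(profile) - 1)]
    total_decline = sum(d for d in drops if d > 0)
    worst = max(range(len(drops)), key=lambda i: drops[i])
    is_abrupt = total_decline > 0 and drops[worst] / total_decline >= abrupt_share
    # Convert the 0-based drop index to a 1-based layer number.
    return worst + 1, is_abrupt
```

Under these assumptions, the transition is reported as the layer whose transfer causes the largest single drop in relative accuracy, and it is labeled abrupt when that one drop accounts for at least `abrupt_share` of the total decline. A gradual transition spreads the decline across many layers, so no single step dominates.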
