ENEL890AO: Regression Experiments for Electrical Engineering Course

Added on 2023/06/11

Homework Assignment

AI Summary

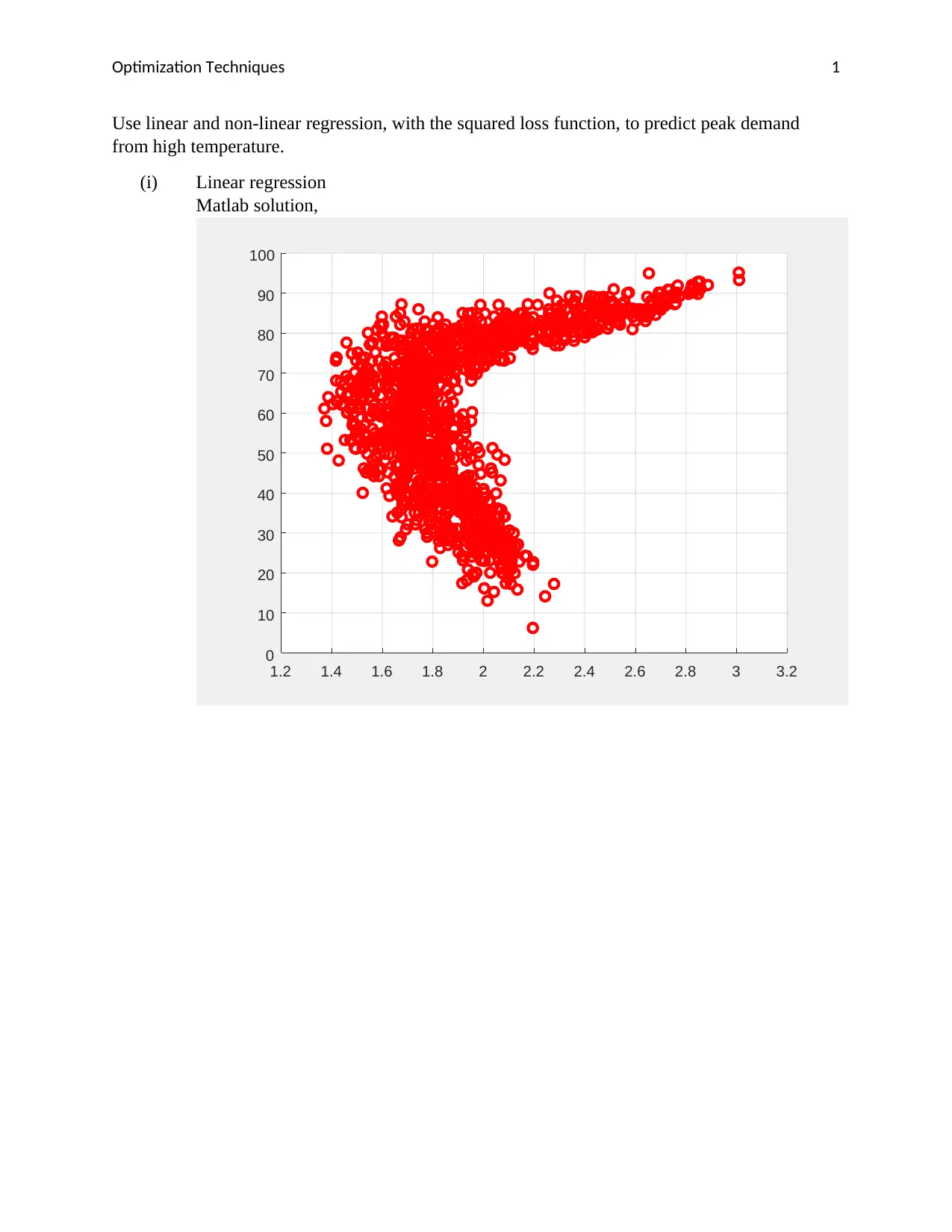

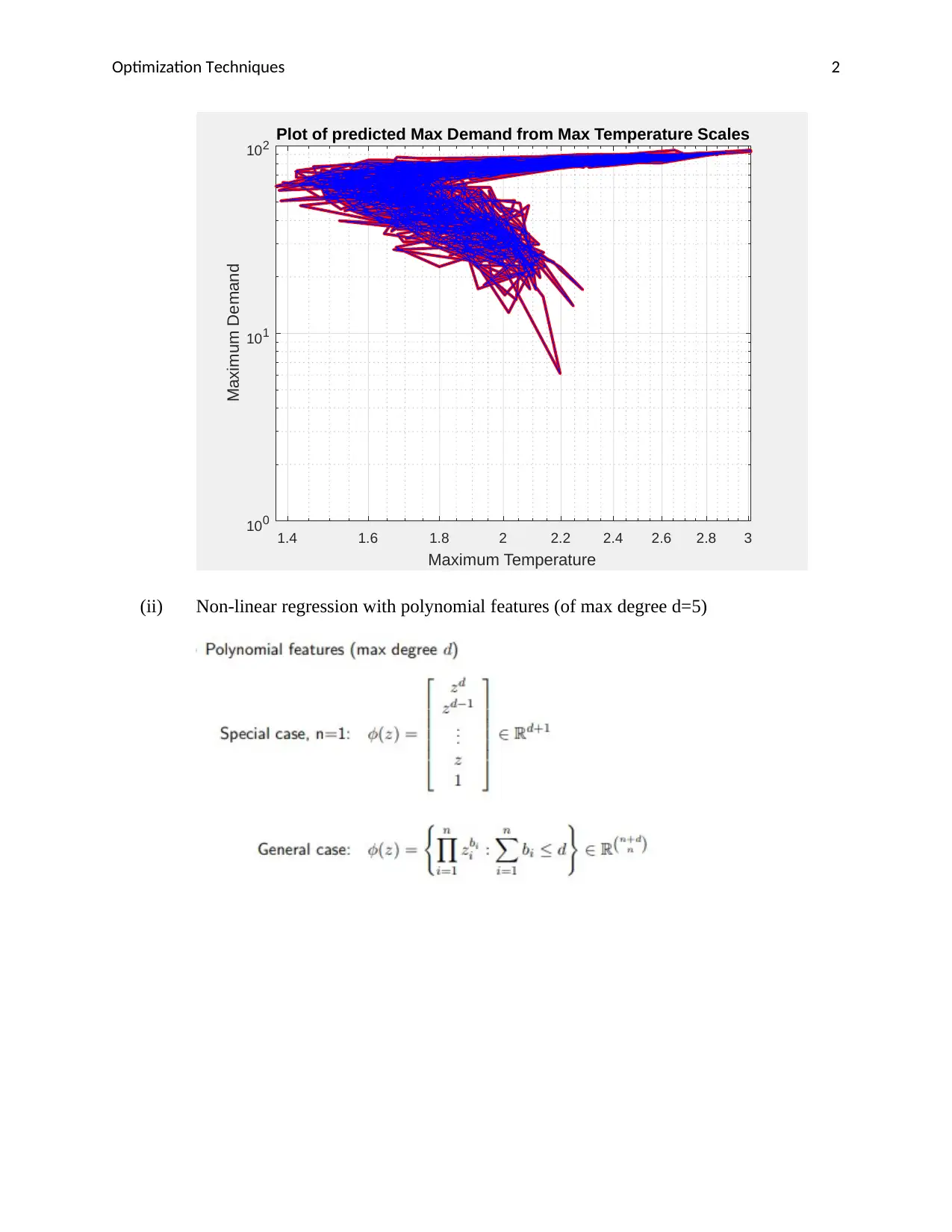

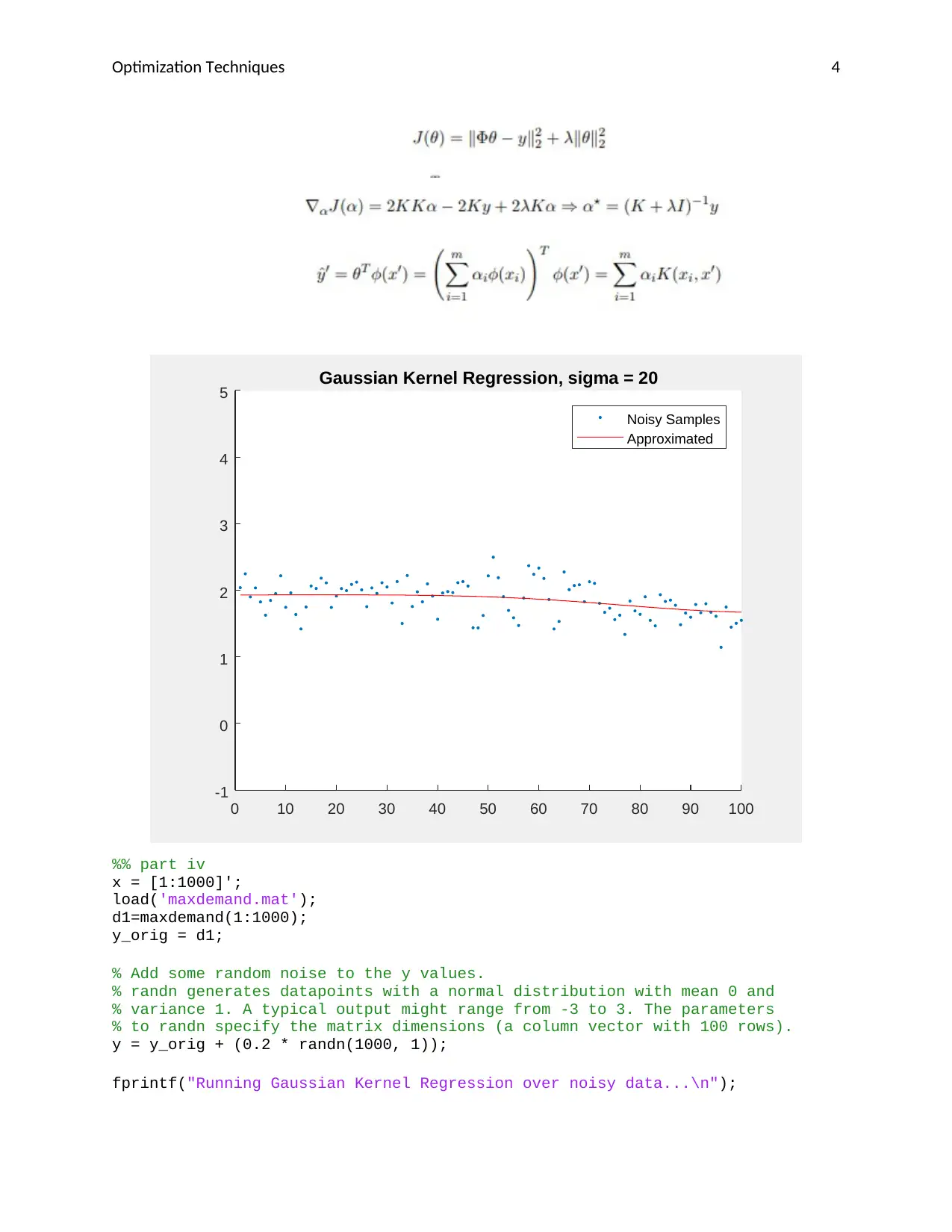

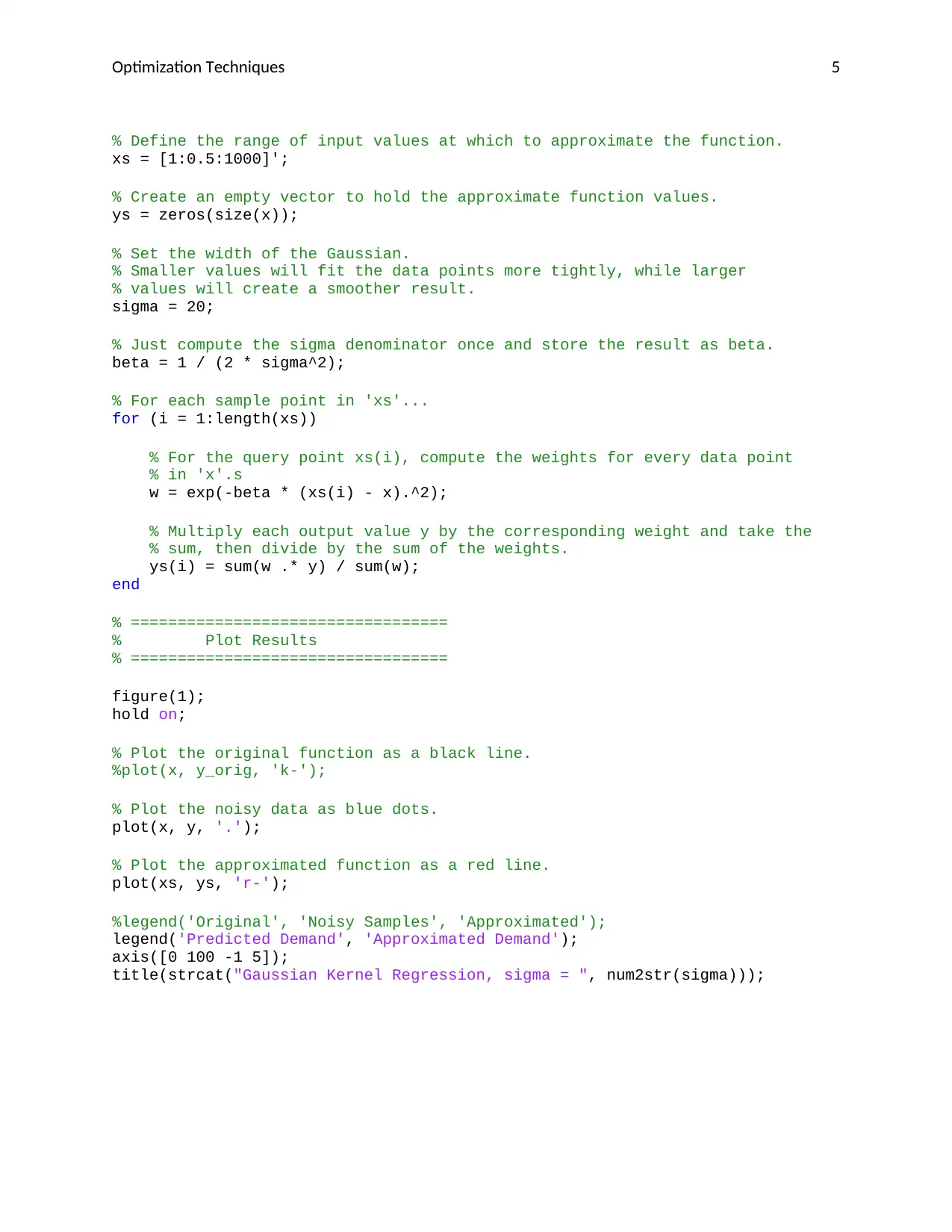

This assignment solution for ENEL890AO (Computational Methods in Electrical Engineering) uses linear and non-linear regression to predict peak demand from high temperature. It implements four models: linear regression, non-linear regression with degree-5 polynomial features, non-linear regression with radial basis function (RBF) features, and kernel linear regression with a Gaussian kernel. Optimization uses the squared loss. The code and figures are included and show the fit each method produces over the input data.

The solution also gives a detailed implementation of Gaussian kernel regression on noisy data. It generates noisy data points, approximates the underlying function with a Gaussian kernel, and plots the results to compare the predicted demand with the approximated demand.
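The feature-based models in the summary (linear, degree-5 polynomial, and RBF features, all fitted with the squared loss) can be sketched with a single least-squares routine applied to different design matrices. This is a minimal sketch, not the assignment's code: the temperature and demand values are synthetic stand-ins, and the helper names (`polynomial_features`, `rbf_features`, `fit_squared_loss`), the 10 RBF centers, and the RBF width of 0.5 are hypothetical choices.

```python
import numpy as np


def polynomial_features(x, degree):
    """Map 1-D inputs x to the columns [1, x, x**2, ..., x**degree]."""
    return np.vander(x, N=degree + 1, increasing=True)


def rbf_features(x, centers, width):
    """Map 1-D inputs to a bias column plus one Gaussian bump per center."""
    phi = np.exp(-((x[:, None] - centers[None, :]) ** 2) / (2.0 * width ** 2))
    return np.hstack([np.ones((x.size, 1)), phi])


def fit_squared_loss(Phi, y):
    """Return weights w minimizing the squared loss ||Phi @ w - y||**2."""
    w, *_ = np.linalg.lstsq(Phi, y, rcond=None)
    return w


# Hypothetical stand-in data: the assignment's temperature/demand dataset is not reproduced here.
rng = np.random.default_rng(0)
temp = rng.uniform(60.0, 100.0, size=200)  # high temperature (synthetic)
demand = 1.5 + 0.002 * (temp - 60.0) ** 2 + rng.normal(0.0, 0.05, size=temp.size)  # peak demand (synthetic)

# Standardize the input so that the x**5 column stays well conditioned.
x = (temp - temp.mean()) / temp.std()

centers = np.linspace(x.min(), x.max(), 10)  # hypothetical RBF centers
designs = {
    "linear": polynomial_features(x, 1),
    "poly5": polynomial_features(x, 5),
    "rbf": rbf_features(x, centers, width=0.5),  # hypothetical RBF width
}

results = {}
for name, Phi in designs.items():
    w = fit_squared_loss(Phi, demand)
    results[name] = float(np.mean((Phi @ w - demand) ** 2))  # training MSE
    print(f"{name:>6}: training MSE = {results[name]:.4f}")
```

Linear regression is just the degree-1 case of the polynomial map, so one solver covers all three models. Because the degree-5 design contains the linear columns, its training error can never exceed the linear model's; comparing the models fairly would need held-out data.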

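The summary does not state which form of Gaussian kernel regression the solution uses. The sketch below assumes kernel ridge regression in dual form, where the coefficients solve (K + λI)α = y and predictions are f(x) = Σᵢ αᵢ k(x, xᵢ). The noisy sine data, the kernel width `sigma`, and the regularization `lam` are hypothetical. A Nadaraya-Watson weighted average would be another reasonable reading of "Gaussian kernel regression".

```python
import numpy as np


def gaussian_kernel(a, b, sigma):
    """Gram matrix K[i, j] = exp(-(a_i - b_j)**2 / (2 * sigma**2)) for 1-D inputs."""
    d2 = (a[:, None] - b[None, :]) ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))


def fit_kernel_regression(x, y, sigma, lam):
    """Solve (K + lam * I) alpha = y for the dual coefficients alpha."""
    K = gaussian_kernel(x, x, sigma)
    return np.linalg.solve(K + lam * np.eye(x.size), y)


def predict(x_train, alpha, x_new, sigma):
    """Evaluate f(x) = sum_i alpha_i * k(x, x_i) at each new input."""
    return gaussian_kernel(x_new, x_train, sigma) @ alpha


def true_f(x):
    """Hypothetical noise-free function used to generate the data."""
    return np.sin(2.0 * np.pi * x)


# Generate noisy samples of the hypothetical function.
rng = np.random.default_rng(1)
x_train = np.sort(rng.uniform(0.0, 1.0, size=50))
y_train = true_f(x_train) + rng.normal(0.0, 0.2, size=x_train.size)

sigma, lam = 0.1, 0.1  # hypothetical kernel width and regularization strength
alpha = fit_kernel_regression(x_train, y_train, sigma, lam)

# Approximate the function on a dense grid and compare with the noise-free curve.
x_grid = np.linspace(0.0, 1.0, 200)
y_hat = predict(x_train, alpha, x_grid, sigma)
rmse_vs_truth = float(np.sqrt(np.mean((y_hat - true_f(x_grid)) ** 2)))
print(f"RMSE against the noise-free function: {rmse_vs_truth:.3f}")
```

A small `lam` lets the fit follow the data closely, and a larger one smooths out more of the noise. `sigma` sets how far each sample's influence reaches. Both would normally be tuned on validation data.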