Event-Based Imaging for Space Situational Awareness: PhD Research

VerifiedAdded on 2022/09/09

Project

AI Summary

This PhD proposal outlines a research project focused on Event-Based Imaging for Space Situational Awareness (SSA). The research addresses the increasing challenges posed by satellite collisions and space debris through the use of neuromorphic cameras, specifically event-based cameras, which offer advantages like low latency and high dynamic range. The proposal investigates the potential of these cameras, along with Adaptive Optics (AO) systems and Deep Learning techniques, for satellite and space junk detection and tracking. The methodology includes collecting datasets with event-based and CCD cameras, feature extraction using FEAST and HOTS techniques, and FPGA implementation. The project aims to develop an algorithm that can be implemented to find time differences using Global Positional System (GPS) receiver signals with multiple telescopes and event-based cameras. The research will utilize the Astrosite observatory for data collection and validation. The expected outcome is an enhanced solution for space management and SSA, providing improved information about satellites and space junk, ultimately contributing to space traffic management. The project includes a Gantt chart outlining the phases, activities, and resource requirements for the research.

Doctor of Philosophy Proposal

Title: Event-Based Imaging for Space Situational Awareness

RESEARCH BACKGROUND

The number of satellites in orbit has risen sharply, and because of their high velocities, collisions can occur: collision with another satellite, collision with space junk, unexpected accidents, and hostile action, all of which can leave a debris cloud. The consequence is likely to be the destruction of satellites and spacecraft [1]. In response, the concept of Space Situational Awareness (SSA) has been developed to address these challenges. SSA focuses on enabling space traffic management (STM). Many of the satellites launched over the last 50 years have suffered incapacitating anomalies or have become space debris. To avoid collisions, SSA aims to predict the physical location of natural and artificial objects in orbit.

Mahowald et al. [2] designed a spike-based neuromorphic camera with an Address Event Representation (AER) vision system. It is a biologically inspired system that works differently from a traditional CCD: as in the sensing systems of organisms, asynchronous events act on the sensor, and the information is processed hierarchically and in parallel in a massive network of neurons. For SSA, this research adopts neuromorphic sensors and chips together with a Deep Learning approach to address the issue.

Event-based cameras are bio-inspired sensors that respond to brightness changes asynchronously and independently for each pixel. This gives them several advantages over conventional cameras, for instance low latency, low power consumption, high speed and high dynamic range (HDR). Since their emergence, various applications in computer vision and robotics have been proposed, such as visual tracking, detection and recognition, Simultaneous Localisation and Mapping (SLAM), visual reconstruction, and stereo matching.
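As a minimal sketch of the per-pixel behaviour described above, the following Python models a single pixel that emits an event each time its log brightness moves by more than a contrast threshold since the last event. The function name `pixel_events`, the threshold value and the sample trace are illustrative assumptions and are not taken from any particular sensor.

```python
import math


def pixel_events(samples, threshold=0.2):
    """Return (timestamp, polarity) events for one pixel.

    samples: list of (timestamp, brightness) pairs with brightness > 0.
    threshold: assumed contrast threshold on log brightness.
    """
    events = []
    _, first_brightness = samples[0]
    reference = math.log(first_brightness)  # log brightness at the last event
    for timestamp, brightness in samples[1:]:
        change = math.log(brightness) - reference
        # One event per threshold crossing, so a large jump yields several.
        while abs(change) >= threshold:
            polarity = 1 if change > 0 else -1
            events.append((timestamp, polarity))
            reference += polarity * threshold
            change = math.log(brightness) - reference
    return events


# A pixel that is steady, brightens as a streak crosses it, then dims again.
trace = [(0, 10.0), (1, 10.0), (2, 20.0), (3, 20.0), (4, 12.0)]
print(pixel_events(trace))
```

A pixel with constant brightness produces no output at all, which is where the low data rate and low power of such sensors come from in this simplified model.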

Research Questions

The following questions will guide the research:

Can event-based cameras with silicon retinas detect and track satellites?

How can an Adaptive Optics (AO) system improve the detection and tracking tasks?

Can Deep Learning techniques and computer vision algorithms be used to identify the shape of space junk?

Can the range be calculated through cooperation between multiple telescopes and event-based cameras?

Will foveated image sensors, characterised by variable spatial resolution across the surface of the sensor, achieve data reduction without a critical impact on the final execution of the application?
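To make the range question above concrete, here is a minimal sketch of standard two-station triangulation. It is not the proposal's method. It assumes that each station's position is known in a shared local Cartesian frame (kilometres here), that each station measures a line-of-sight direction to the object, and that both measurements refer to the same instant. The function names and the example geometry are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def unit(v):
    norm = math.sqrt(dot(v, v))
    return tuple(a / norm for a in v)


def triangulate(station1, direction1, station2, direction2):
    """Estimate an object's position from two simultaneous lines of sight.

    Returns the midpoint of the shortest segment joining the two lines,
    together with the range from station1 to that point.
    """
    d1, d2 = unit(direction1), unit(direction2)
    w = tuple(p - q for p, q in zip(station1, station2))
    b = dot(d1, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = 1.0 - b * b  # approaches zero when the lines of sight are parallel
    if denom < 1e-12:
        raise ValueError("lines of sight are parallel; range is undefined")
    s = (b * e - d) / denom  # distance along line 1 to its closest point
    t = (e - b * d) / denom  # distance along line 2 to its closest point
    closest1 = tuple(p + s * a for p, a in zip(station1, d1))
    closest2 = tuple(p + t * a for p, a in zip(station2, d2))
    position = tuple((p + q) / 2 for p, q in zip(closest1, closest2))
    return position, math.dist(station1, position)


# Two stations 100 km apart both observing an object at (50, 0, 500) km.
position, range_km = triangulate((0, 0, 0), (50, 0, 500), (100, 0, 0), (-50, 0, 500))
print(position, range_km)
```

The longer the baseline between the stations relative to the object's distance, the less sensitive the estimate is to small errors in the measured directions.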

KNOWLEDGE GAPS

Despite impressive technological development in various fields, past research has not provided a reliable and robust algorithm or application for SSA, specifically for detecting and tracking satellites and space junk. Tracking is of pivotal importance in surveillance applications, and due to the nature of the objects, it is difficult to extract datasets from such scenes [3].

Tracking is limited for objects moving at hypervelocity, especially when a standard camera is used. Such cameras lack a Data Fusion (DF) process for integrating data from multiple event-based cameras and other devices. In addition, there are many objects in space around the Earth, and this clutter makes it difficult to detect and track a target from the ground. Sending equipment into space is exorbitantly expensive, and it faces challenges of adaptation and possible collisions with other space objects. Limited research has therefore been conducted on the use of bio-inspired sensors and Data Fusion to capture space objects with a ground-truth model.

METHODOLOGY

To develop an algorithm for detecting and tracking satellites, several datasets will be collected using an event-based camera and a CCD camera, potentially with an Adaptive Optics (AO) system to improve the detection and tracking tasks.

The Event-based Feature Extraction using Adaptive Selection Thresholds (FEAST) technique enables the algorithm to extract features in an unsupervised manner [4], and a Hierarchy Of event-based Time-Surfaces (HOTS) provides spatio-temporal features [5]. After an accurate algorithm for detecting and tracking satellites and space junk has been developed, a Field Programmable Gate Array (FPGA) will be used to implement it on a reconfigurable architecture based on Logic Blocks (LBs). An FPGA can process signals faster and in parallel, and can execute various types of neural networks. The algorithm will use Global Positioning System (GPS) receiver signals with multiple telescopes and event-based cameras to find the time differences.
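The time surface that HOTS builds its features from can be sketched as follows. This is a simplified single-polarity illustration of an exponentially decayed time surface, not the full hierarchy of [5]. The function name `time_surface`, the window radius and the decay constant `tau` are assumptions chosen for the example.

```python
import math


def time_surface(events, center, radius=1, tau=50.0):
    """Exponentially decayed time surface at the time of the last event.

    events: list of (x, y, t) tuples sorted by time t (one polarity assumed).
    center: (x, y) pixel on which the surface is centred.
    """
    last_time = {}
    for x, y, t in events:
        last_time[(x, y)] = t  # most recent event time for each pixel
    t_now = events[-1][2]
    cx, cy = center
    surface = []
    for dy in range(-radius, radius + 1):
        row = []
        for dx in range(-radius, radius + 1):
            t_last = last_time.get((cx + dx, cy + dy))
            # Pixels that never fired give 0; recently active pixels approach 1.
            row.append(0.0 if t_last is None else math.exp(-(t_now - t_last) / tau))
        surface.append(row)
    return surface


# A point source drifting left to right along the row y = 1.
streak = [(0, 1, 0.0), (1, 1, 50.0), (2, 1, 100.0)]
for row in time_surface(streak, center=(1, 1)):
    print([round(value, 3) for value in row])
```

In the example the middle row increases from left to right, so the direction and speed of the moving source are encoded in a small patch that a feature extractor can then cluster or match.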

RESOURCE REQUIREMENTS

To accomplish the desired outcomes of this project, the required resources are divided into three main parts. Firstly, a literature review of the unsolved problems in space situational awareness will determine the type of data that needs to be collected. Secondly, an efficient program will be created with robust software to deliver a fast solution. Finally, hardware will support the principal functions, for instance vision cameras, telescopes, FPGA hardware, and graphics and vision processing units.

A mobile telescope observatory built specifically for neuromorphic sensors (Astrosite) is located at the MARCS Institute. This robotic electro-optic telescope and its cameras will be used to provide ground truth and to build an accurate mounted model.

EXPECTATION OF RESEARCH RESULTS

It is anticipated that the data collected through Astrosite at different locations will be used to train and test our algorithm to detect and track satellites and space junk, and that the results will be improved by comparing them with previous research in this field. These results will provide a better solution for space management and space situational awareness, giving a better picture of, and more information about, satellites and space junk through the ground-truth device (Astrosite).

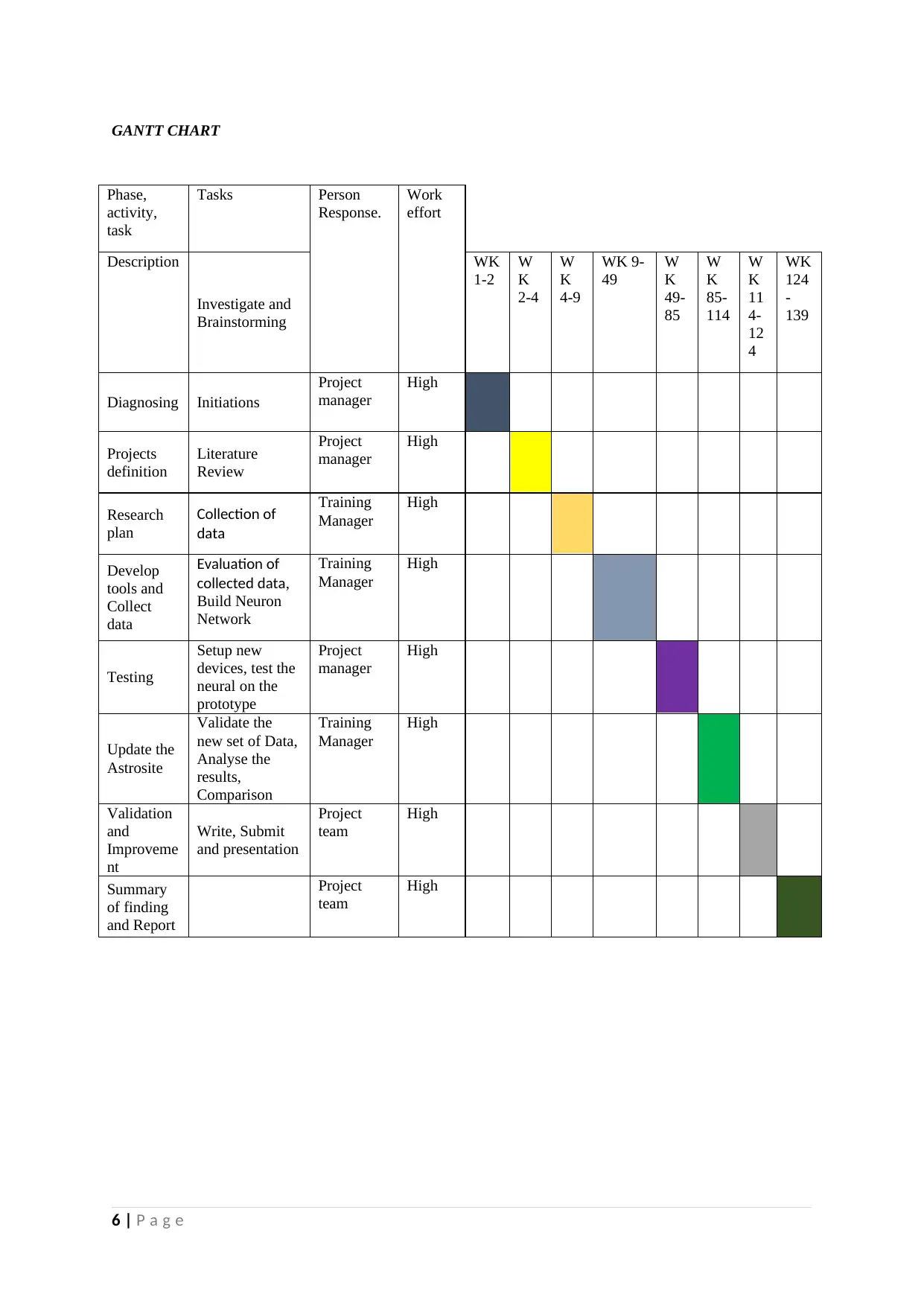

GANTT CHART

Week columns: WK 1-2, WK 2-4, WK 4-9, WK 9-49, WK 49-85, WK 85-114, WK 114-124, WK 124-139

| Phase, activity, task | Person responsible | Work effort | Description |
|---|---|---|---|
| Investigate and brainstorming | | | |
| Diagnosing initiations | Project manager | High | Project definition |
| Literature review | Project manager | High | Research plan |
| Collection of data | Training manager | High | Develop tools and collect data |
| Evaluation of collected data, build neural network | Training manager | High | Testing |
| Set up new devices, test the neural network on the prototype | Project manager | High | Update the Astrosite |
| Validate the new set of data, analyse the results, comparison | Training manager | High | Validation and improvement |
| Write, submit and present | Project team | High | Summary of findings and report |
| | Project team | High | |

REFERENCES

[1] Kennewell, J. A., & Vo, B. N. (2013, July). An overview of space situational awareness. In Proceedings of the 16th International Conference on Information Fusion (pp. 1029-1036). IEEE.

[2] Delbruck, T., Linares-Barranco, B., Culurciello, E., & Posch, C. (2010, May). Activity-driven, event-based vision sensors. In Proceedings of 2010 IEEE International Symposium on Circuits and Systems, Paris, France (pp. 2426-2429). DOI: 10.1109/ISCAS.2010.5537149.

[3] Martin, R., & Arandjelović, O. (2010). Multiple-object tracking in cluttered and crowded public spaces. In Bebis, G. et al. (Eds.), Advances in Visual Computing. ISVC 2010. Lecture Notes in Computer Science, vol 6455. Springer, Berlin, Heidelberg.

[4] Afshar, S., Ralph, N., Xu, Y., Tapson, J., Schaik, A. V., & Cohen, G. (2020). Event-based feature extraction using adaptive selection thresholds. Sensors, 20(6), 1600.

[5] Lagorce, X., Orchard, G., Galluppi, F., Shi, B. E., & Benosman, R. B. (2016). HOTS: a hierarchy of event-based time-surfaces for pattern recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(7), 1346-1359.
