Criminology Report: Examining Big Data Issues in Gov Surveillance

Added on 2023/06/15


Running head: CRIMINOLOGY

Criminology

(Big data issue)

Name of the student:

Name of the university:

Author Note


Executive summary

Big data refers to the large quantities of data that inundate business activities every day. This study analyzes governmental surveillance with Big Data in mind. It presents evidence, addresses the primary challenges and, lastly, makes recommendations, justifying how each issue should be addressed.


Table of Contents

1. Problem statement

2. Evidence of the above problems

3. Key issues for government

4. Recommendations

5. References


1. Problem statement:

Area of surveillance practice to be addressed:

Recent advances in computational statistics and data collection, combined with rising processing power and plunging storage costs, allow technologies to efficiently analyze huge sets of heterogeneous data gathered from almost everywhere. These big data technologies raise serious ethical, privacy and security issues that remain largely unaddressed and need to be examined.

Key issues underpinning the above example:

This area of surveillance raises several issues: finding a signal in noise, data silos, data inaccuracy and the fast pace of technological change (Kim, Trimi and Chung 2014).

2. Evidence of the above problems:

Big data refers to the huge volume of structured and unstructured data that inundates businesses on a daily basis. Beyond business, it also intensifies specific surveillance trends associated with networks and information technology.

More data is no substitute for high-quality data. For instance, an article in Nature reported that US election pollsters have struggled to obtain representative samples of the population (Kitchin 2014), because they are legally permitted to call only landline phones while Americans increasingly depend on cell phones. Social media offers a wealth of political opinion, but it has never been reliably representative of voters, and a significant share of political tweets and social media posts is generated by computers.
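To make the point concrete, the short simulation below compares a poll that can reach only part of the population with the population as a whole. The group shares and support rates are invented for illustration, and `Group`, `frame_support` and `simulate_poll` are hypothetical names; the sketch only shows that, however many respondents are drawn, a sampling frame that misses a group converges to the wrong answer.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Group:
    share: float      # fraction of the whole population
    support: float    # fraction of this group holding the polled opinion
    reachable: bool   # whether the sampling frame (e.g. landline numbers) covers it


def true_support(groups):
    """Population-wide support: the quantity the poll is trying to estimate."""
    return sum(g.share * g.support for g in groups)


def frame_support(groups):
    """Support among reachable groups only: what the poll converges to as n grows."""
    covered = [g for g in groups if g.reachable]
    total = sum(g.share for g in covered)
    return sum(g.share * g.support for g in covered) / total


def simulate_poll(groups, n, seed=0):
    """Draw n respondents from the reachable groups only; return observed support."""
    rng = random.Random(seed)
    covered = [g for g in groups if g.reachable]
    weights = [g.share for g in covered]
    yes = 0
    for _ in range(n):
        group = rng.choices(covered, weights=weights)[0]
        yes += rng.random() < group.support
    return yes / n


# Hypothetical population: 40% reachable by landline, 60% cell-only.
population = [Group(0.4, 0.6, True), Group(0.6, 0.4, False)]
```

With these made-up numbers the population-wide support is 0.48, but the landline-only poll converges to 0.6 as `n` grows: a larger sample shrinks the random error, not the coverage bias.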


A recent Capgemini report stated that the digital customer experience is all about knowing and understanding customers. That means harnessing every source: assessing every contact point and linking in external sources such as commercially available and social media data. It is about gathering, examining and interpreting data from the digital supply chain and from a myriad of connected devices (Jin et al. 2015).

Finding signal in noise:

Extracting insight from a vast amount of data is hard. Maksim Tsvetovat, a data scientist and author of the book “Social Network Analysis for Startups”, argues that to use Big Data there must be an audible signal in the noise being analyzed (Hilbert 2016). Often there is none: after intelligent analysis of the data, people have to come back and admit that they measured the wrong variables and that there is nothing to detect. In raw form, big data looks like a hairball, and it has to be approached the way a scientist would approach it: if a hypothesis fails, come up with more hypotheses, one of which may turn out to be correct.

Data silos:

Data silos are the kryptonite of Big Data. They store the smart data an organization captures in disparate, separate units that have nothing to do with one another, so no insight can be drawn from the data as a whole: it is simply not integrated at the back end. Data silos are why staff must crunch numbers just to produce a monthly sales report, why C-level decisions are made at a snail's pace, and why marketing and sales teams do not get along (Chen and Zhang 2014). They also lead customers to take their business elsewhere, because those customers never feel their needs are being met. To eliminate data silos, the data must be integrated.
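Integrating silos usually starts with a shared key. A minimal sketch, assuming two hypothetical departmental stores keyed by the same customer ID: the records are merged into one view, and fields on which the silos disagree are reported rather than silently overwritten.

```python
from collections import defaultdict

# Hypothetical silos: each department keeps its own view of the same customers.
sales = {"C001": {"name": "A. Khan", "phone": "555-0101"},
         "C002": {"name": "B. Lee", "phone": "555-0102"}}
marketing = {"C001": {"email": "akhan@example.com", "phone": "555-0199"},
             "C003": {"email": "cdiaz@example.com"}}


def integrate(silos):
    """Merge per-silo records on a shared customer ID.

    Returns the integrated records plus a list of (id, field, values)
    conflicts: fields on which the silos disagree and need reconciling."""
    merged = defaultdict(dict)
    seen = defaultdict(lambda: defaultdict(set))
    for records in silos.values():
        for cid, fields in records.items():
            for field, value in fields.items():
                seen[cid][field].add(value)
                merged[cid].setdefault(field, value)  # first silo wins until reconciled
    conflicts = [(cid, field, sorted(values))
                 for cid, fields in seen.items()
                 for field, values in fields.items() if len(values) > 1]
    return dict(merged), conflicts
```

In this toy data the two silos hold different phone numbers for `C001`, exactly the kind of inaccuracy that stays hidden while the data remain separate.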

Data inaccuracy:

Data silos are not only ineffective at an operational level; they are also fertile ground for a major data problem: inaccuracy. According to a recent report from Experian Data Quality, most businesses believe their customer-contact information is accurate (Archenaa and Anita 2015). Yet a database full of inaccurate customer data may be worth no more than no data at all. The most effective way to combat data inaccuracy is to eliminate data silos by integrating the data.
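Before integrating, it helps to measure how inaccurate the data actually are rather than assuming the contact information is correct. A minimal sketch with deliberately simple validation rules; the regular expressions, field names and function names are hypothetical.

```python
import re

# Hypothetical validation rules for customer-contact fields; real rules would
# come from the organisation's own data-quality standards.
EMAIL = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
PHONE = re.compile(r"^\d{3}-\d{4}$")


def contact_errors(record):
    """Return the names of the fields in one record that fail validation."""
    errors = []
    if not EMAIL.match(record.get("email", "")):
        errors.append("email")
    if not PHONE.match(record.get("phone", "")):
        errors.append("phone")
    return errors


def inaccuracy_rate(records):
    """Share of records with at least one invalid or missing contact field."""
    bad = sum(1 for r in records if contact_errors(r))
    return bad / len(records) if records else 0.0
```

Format checks like these catch only malformed or missing values; a well-formed but outdated phone number still has to be reconciled against other sources.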

Fast progress of technology:

Large corporations with established data stores are especially prone to data silos, because they prefer to keep databases on-premises and are slow to make decisions about new technologies. A Capgemini report illustrates the consequences: substantial disruption from new competitors entering from other sectors was cited by over 35% of respondents in some industries, compared with an overall average of below 25% (Kitchin 2014). In essence, conventional players have been slower to adopt technological advances and, as a result, face severe competition from smaller companies.

3. Key issues for government:

Big data technology is a relatively new concept. Although it offers many benefits, using big data also raises issues in the public sector. One of them is cost: many agencies pay a high premium to both internal resources and external parties to manage their information (Clarke and Margetts 2014). Data management is also often redundant because it was not set up properly. For instance, several agencies might each translate the same foreign documents and social media feeds. By adopting intelligent solutions built on big data, such as compatible, shared translation tools, a government can spend more efficiently.
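The translation example can be sketched as a shared service that removes duplicated effort across agencies. This is a hypothetical design, assuming a content hash is an adequate key for "the same document" and that `translate` stands in for whatever translation backend is actually used.

```python
import hashlib
from collections import Counter


class SharedTranslationCache:
    """Hypothetical shared service: agencies submit documents for translation,
    and identical documents are translated only once across all agencies."""

    def __init__(self, translate):
        self._translate = translate        # the (expensive) translation backend
        self._cache = {}                   # content hash -> translated text
        self.backend_calls = 0
        self.requests_by_agency = Counter()

    def request(self, agency, text):
        self.requests_by_agency[agency] += 1
        key = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if key not in self._cache:
            self.backend_calls += 1
            self._cache[key] = self._translate(text)
        return self._cache[key]
```

An agency requesting an already-translated document receives the cached result, so the expensive backend runs once per distinct document rather than once per agency.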

Other issues with big data arise from the inherently regulated nature of the public sector. Regulations often fail to take into account the expanded capabilities that IT now offers. Commercial enterprises are currently working to set up IT governance to better manage their computing assets in lean-resource environments, but government already has all kinds of laws and regulations in place, and these rules are often stringent, which makes it difficult to push IT initiatives forward (Reed and Dongarra 2015). Even with enthusiasm and know-how, the approval process can bottleneck the pace at which new technology is deployed. As a result, government agencies often run 5 to 10 years behind enterprise IT adoption.

For example, the fictional “PrimeCrime” unit was shut down because of the severe consequences of flawed analysis. In the real world, however, the genie of big data is out of the bottle and will not be going away soon. Even so, society need not accept the status quo. Misuse of data can amplify and perpetuate inequality (Al Nuaimi et al. 2015), yet big data also has great potential as a tool for positive social impact. It can improve educational outcomes and connect people to resources such as loans, financing and mental health services. It can also help end food deserts, reduce traffic and improve environmental conditions in polluted neighborhoods.


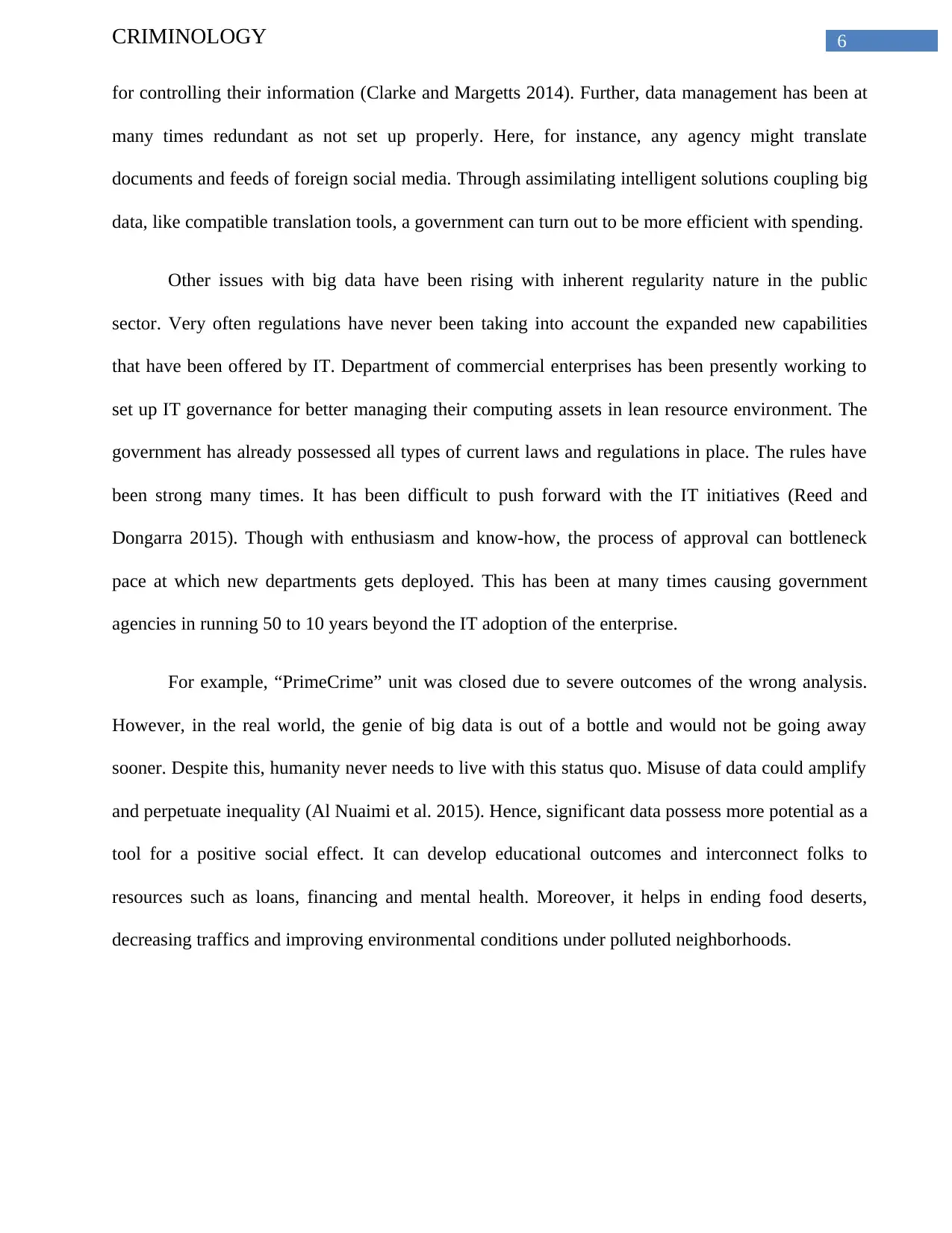

Big data has various privacy implications, which are discussed below.

| Implication | Discussion |
|---|---|
| Protecting transaction logs and data | Big data storage poses new challenges because auto-tiering processes do not keep track of where data is stored. |
| Validating and filtering endpoint inputs | Endpoint devices are the primary sources of big data: processing and storage tasks depend on the input data that endpoints supply. |
| Protecting and securing data in real time | Because of the enormous quantity of data generated, most companies are unable to carry out daily checks (Janssen and van den Hoven 2015). |
| Protecting access control methods, encryption and communication | A secured data storage device is a sensible step toward protecting data. Because data storage devices are vulnerable, access control must be backed by encryption. |
| Impacts on human rights | The problem is that we are simply unaware of all the positive and negative effects of Big Data, which makes informed decisions difficult. It is not known how computation, design thinking and science are influencing conventional interventionist, protectionist, economic and legal frameworks (Wamba et al. 2015). Nor is it clear whether the same technologies that are changing many aspects of social and commercial life have the potential to address justice, empowerment and human suffering. |


Development and humanitarian communities are increasingly adopting data-driven approaches, interventions and innovations. At the same time, multiple issues are emerging for policymakers, human security practitioners and human rights advocates. Information and data have always been vital in all these areas, but networked digital technologies, and their capacity to collect, store and analyze data, are developing very rapidly (Hashem et al. 2016).

In addition, Big Data incentivizes more data collection and longer retention. In economic terms, it accentuates information asymmetries between government and citizens and makes people easier to manipulate. It also widens power differentials in society by amplifying existing advantages and disadvantages.

The discussion above shows that big data, an essential contemporary surveillance issue for government, has also helped government save money, become more efficient and identify fraud. It helps public bodies serve citizens better and enables government to perform existing activities more cost-effectively.

4. Recommendations:

The following recommendations are helpful for understanding the likely effects of Big Data analytics on criminological research and policy.

Maintain Security in Distributed Computing Framework:

There are two methods of ensuring the trustworthiness of worker computers. The first is trust establishment, in which workers are stringently authenticated and are assigned access properties only by the masters.


The second is Mandatory Access Control (MAC), which constrains each worker to a limited set of tasks.
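The two methods can be sketched together. This is a minimal illustration under stated assumptions, not a production design: the `Master` class, the pre-shared credentials and the task names are hypothetical, and HMAC-signed tokens stand in for whatever authentication mechanism a real distributed framework would use (Hadoop, for example, relies on Kerberos).

```python
import hashlib
import hmac
import secrets


class Master:
    """Hypothetical master node: authenticates workers (trust establishment),
    then enforces a mandatory access control policy on the tasks they run."""

    def __init__(self, policy):
        self._key = secrets.token_bytes(32)   # master's secret for issuing tokens
        self._policy = policy                 # worker_id -> set of permitted task types
        self._registered = {}                 # worker_id -> pre-shared credential

    def register(self, worker_id, credential):
        self._registered[worker_id] = credential

    def _token_for(self, worker_id):
        return hmac.new(self._key, worker_id.encode(), hashlib.sha256).hexdigest()

    def authenticate(self, worker_id, credential):
        """Issue a signed token only to workers presenting the right credential."""
        expected = self._registered.get(worker_id)
        if expected is None or not hmac.compare_digest(expected, credential):
            return None
        return self._token_for(worker_id)

    def authorize(self, worker_id, token, task_type):
        """MAC: the central policy, not the worker, decides which tasks are allowed."""
        if token is None or not hmac.compare_digest(self._token_for(worker_id), token):
            return False
        return task_type in self._policy.get(worker_id, set())
```

Keeping the policy on the master means a compromised worker cannot grant itself extra tasks; it can only present the token it was issued.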

Security Practice implementation for Non-Relational Data Stores:

Data integrity must be enforced through the application or middleware layer, and encryption must be used whenever data is in transit or at rest.
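A minimal sketch of enforcing integrity in a middleware layer, assuming a schemaless key-value store (represented here by a plain `dict`) and a secret key held by the middleware; `IntegrityCheckedStore` is a hypothetical name. Only integrity is shown: encryption in transit and at rest would come from TLS and an encrypting storage layer, which are outside this sketch.

```python
import hashlib
import hmac
import json


class IntegrityCheckedStore:
    """Hypothetical middleware over a non-relational key-value store.
    Each value is stored with an HMAC tag and verified on every read,
    so tampering in the underlying store is detected at this layer."""

    def __init__(self, key, backend=None):
        self._key = key
        self.backend = backend if backend is not None else {}

    def _tag(self, doc_id, payload):
        # Binding the document ID into the tag stops values being swapped between keys.
        message = doc_id.encode() + b"\x00" + payload
        return hmac.new(self._key, message, hashlib.sha256).hexdigest()

    def put(self, doc_id, document):
        payload = json.dumps(document, sort_keys=True).encode()
        self.backend[doc_id] = (payload, self._tag(doc_id, payload))

    def get(self, doc_id):
        payload, tag = self.backend[doc_id]
        if not hmac.compare_digest(tag, self._tag(doc_id, payload)):
            raise ValueError(f"integrity check failed for {doc_id!r}")
        return json.loads(payload)
```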

Preserve the Privacy in Data Mining and Analytics:

Privacy should be preserved for legal reasons as well as for financial and system-performance reasons. Because legal requirements vary from nation to nation, it is critical to comply with the policies of every country in which the activities take place.
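Preserving privacy in analytics can take several forms. The sketch below uses k-anonymity, one well-known technique chosen here for illustration since the text does not name one: quasi-identifiers such as age and ZIP code are coarsened until each combination is shared by at least `k` records. The field names, generalization rules and value of `k` are assumptions; which settings satisfy the law would depend on the jurisdiction.

```python
from collections import Counter


def generalize(record):
    """Hypothetical generalization: coarsen quasi-identifiers before release."""
    decade = record["age"] // 10 * 10
    return {
        "age": f"{decade}-{decade + 9}",
        "zip": record["zip"][:3] + "**",
        "offence": record["offence"],   # attribute of analytic interest, kept as-is
    }


def is_k_anonymous(records, quasi_identifiers, k):
    """True if every combination of quasi-identifier values occurs at least k times."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return all(count >= k for count in groups.values())


raw = [
    {"age": 23, "zip": "30301", "offence": "theft"},
    {"age": 27, "zip": "30305", "offence": "fraud"},
    {"age": 41, "zip": "30412", "offence": "theft"},
    {"age": 45, "zip": "30418", "offence": "assault"},
]
released = [generalize(r) for r in raw]
```

k-anonymity alone does not protect against every inference attack; techniques such as differential privacy address some of its weaknesses.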

Encrypted Data-Centric Security:

Identity-based and attribute-based encryption use cryptography to enforce access control over individual objects. Identity-based encryption can encrypt a plaintext so that only an entity with a particular identity can decrypt it; attribute-based encryption applies the same kind of control based on attributes rather than identities. A homomorphic encryption scheme can keep data encrypted even while computations are performed on it. Group signatures are another useful privacy tool: they allow individual entities to access data while being publicly identifiable only as members of a group.
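Homomorphic encryption can be illustrated with the Paillier cryptosystem, swapped in here as a well-known additively homomorphic scheme: it supports addition on ciphertexts, not arbitrary computation, so it is partially rather than fully homomorphic. The tiny primes make this a toy that is completely insecure, and identity-based encryption, attribute-based encryption and group signatures are not shown. It requires Python 3.9+ for `math.lcm`.

```python
import math
import random


def keygen(p=1009, q=1013):
    """Toy Paillier key generation with tiny primes (illustration only;
    real keys use primes of 1024 bits or more)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1
    x = pow(g, lam, n * n)
    mu = pow((x - 1) // n, -1, n)      # modular inverse of L(g^lam mod n^2)
    return (n, g), (n, lam, mu)


def encrypt(public, m, rng):
    """c = g^m * r^n mod n^2 for a random r coprime to n."""
    n, g = public
    n2 = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = rng.randrange(1, n)
    return pow(g, m, n2) * pow(r, n, n2) % n2


def decrypt(private, c):
    """m = L(c^lam mod n^2) * mu mod n, where L(x) = (x - 1) // n."""
    n, lam, mu = private
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n


def add_encrypted(public, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    n, _ = public
    return c1 * c2 % (n * n)
```

Encrypting 20 and 22 and multiplying the ciphertexts yields a ciphertext that decrypts to 42, so a party holding only ciphertexts could total values it cannot read.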

Granular Access Control:

Granular access control is complex to track and implement in big data environments, where the scale is massive. It is therefore recommended to reduce the complexity of granular access control by handling it at the application level.
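Granular access control at the application level can be as simple as labelling each field with the clearances needed to read it. The labels, field names, `User` type and `redact` function below are hypothetical; the sketch denies access to any field the policy does not mention.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    name: str
    clearances: set = field(default_factory=set)


# Hypothetical field-level policy: labels a user must hold to see each field.
FIELD_LABELS = {
    "case_id": set(),
    "offence": {"analyst"},
    "suspect_name": {"analyst", "pii"},
    "informant": {"analyst", "pii", "restricted"},
}


def redact(record, user):
    """Return only the fields whose required labels are all held by the user.
    Fields missing from the policy are withheld (deny by default)."""
    visible = {}
    for name, value in record.items():
        required = FIELD_LABELS.get(name)
        if required is not None and required <= user.clearances:
            visible[name] = value
    return visible
```

Keeping the policy in one table, rather than scattering checks through the code, is one way to contain the complexity the text warns about.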


Secure Storage and Transaction Logging:

Technologies for dealing with some of these challenges have become more robust in response to the demands of big data. Encryption is vital to maintaining the integrity and confidentiality of data, and digital signatures using asymmetric cryptography, regular audits and hash chaining have also helped to secure data.
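Hash chaining can be sketched in a few lines: each log entry's hash covers the previous entry's hash, so altering or reordering earlier entries breaks every later link. This is an illustrative sketch with an assumed entry format. On its own the chain cannot reveal entries cut from the end of the log, which is one reason to also sign the latest hash with a digital signature or store it elsewhere.

```python
import hashlib
import json

GENESIS = "0" * 64   # placeholder "previous hash" for the first entry


def append_entry(log, event):
    """Append an event whose hash covers both the event and the previous hash."""
    prev = log[-1]["hash"] if log else GENESIS
    body = json.dumps(event, sort_keys=True)
    digest = hashlib.sha256((prev + body).encode("utf-8")).hexdigest()
    log.append({"event": event, "prev": prev, "hash": digest})


def verify_chain(log):
    """Recompute every link; an edited or reordered entry, or one removed
    from the middle, makes verification fail."""
    prev = GENESIS
    for entry in log:
        body = json.dumps(entry["event"], sort_keys=True)
        expected = hashlib.sha256((prev + body).encode("utf-8")).hexdigest()
        if entry["prev"] != prev or entry["hash"] != expected:
            return False
        prev = entry["hash"]
    return True
```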

Granular Audits:

Granular auditing can begin by enabling logging options for every component to ensure that the information collected is complete. This covers applications at every layer, including the operating system.
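Within an application, enabling logging for every component can be done with Python's standard `logging` module by giving each component a child logger under a common parent. The component names are hypothetical, and the in-memory handler stands in for real audit storage; operating-system auditing would use the OS's own facilities rather than this code.

```python
import logging


class ListHandler(logging.Handler):
    """Collects formatted records in memory; a real deployment would ship them
    to tamper-resistant central storage instead."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record):
        self.records.append(self.format(record))


def configure_audit(components):
    """Enable DEBUG-level audit logging for every named component. All child
    loggers propagate to one 'audit' parent, so no component is left out."""
    handler = ListHandler()
    handler.setFormatter(logging.Formatter("%(name)s|%(levelname)s|%(message)s"))
    parent = logging.getLogger("audit")
    parent.setLevel(logging.DEBUG)
    parent.addHandler(handler)
    loggers = {c: logging.getLogger(f"audit.{c}") for c in components}
    return loggers, handler
```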

Data Provenance and Verification:

It is also recommended that data independence be maintained when updating provenance graphs.
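The recommendation does not spell out a mechanism. One possible reading, assumed here, is that provenance records should be kept independently of the data they describe, in an append-only structure linking each dataset version to its inputs and to a hash of its content. `ProvenanceGraph` and the dataset names are hypothetical.

```python
import hashlib


class ProvenanceGraph:
    """Hypothetical append-only provenance store, kept separate from the data.
    Each dataset version records the operation that produced it, the versions
    it was derived from, and a hash of its content."""

    def __init__(self):
        self.nodes = {}

    def add(self, node_id, content, operation, parents=()):
        # Forbidding overwrites and unknown parents keeps the graph acyclic.
        if node_id in self.nodes:
            raise ValueError(f"{node_id!r} already recorded; provenance is append-only")
        missing = [p for p in parents if p not in self.nodes]
        if missing:
            raise KeyError(f"unknown parent versions: {missing}")
        self.nodes[node_id] = {
            "hash": hashlib.sha256(content).hexdigest(),
            "operation": operation,
            "parents": tuple(parents),
        }

    def verify(self, node_id, content):
        """Check that data in hand matches what provenance recorded for it."""
        return self.nodes[node_id]["hash"] == hashlib.sha256(content).hexdigest()

    def lineage(self, node_id):
        """Every ancestor version the node depends on."""
        seen = set()
        stack = list(self.nodes[node_id]["parents"])
        while stack:
            parent = stack.pop()
            if parent not in seen:
                seen.add(parent)
                stack.extend(self.nodes[parent]["parents"])
        return seen
```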

Endpoint Input Validation and Filtering:

The solution takes two approaches: preventing tampering, and detecting and filtering compromised data. The second is needed because it is virtually impossible to build a complex, extensive system that is entirely resistant to tampering.
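Both approaches can be combined at the point of ingestion. A minimal sketch, assuming each enrolled endpoint shares a secret key with the collector and that readings have a known plausible range (both hypothetical): the HMAC check addresses tampering in transit, and the range check filters implausible values that may come from a compromised device.

```python
import hashlib
import hmac
import json

# Hypothetical per-device keys, provisioned when each endpoint is enrolled.
DEVICE_KEYS = {"sensor-17": b"k" * 32}
# Hypothetical plausible range for the value being collected.
VALID_RANGE = (-40.0, 60.0)


def _body(device_id, reading):
    return json.dumps({"device": device_id, "reading": reading}, sort_keys=True).encode()


def sign(device_id, reading):
    """The tag an honest, enrolled endpoint would attach to its message."""
    return hmac.new(DEVICE_KEYS[device_id], _body(device_id, reading), hashlib.sha256).hexdigest()


def accept(message):
    """Return True only for messages that pass both checks."""
    device_id = message.get("device")
    reading = message.get("reading")
    key = DEVICE_KEYS.get(device_id)
    if key is None:
        return False                      # unknown endpoint
    expected = hmac.new(key, _body(device_id, reading), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, str(message.get("tag", ""))):
        return False                      # tampered in transit, or forged
    low, high = VALID_RANGE
    return isinstance(reading, (int, float)) and low <= reading <= high
```

A compromised device that still holds a valid key can send plausible but false readings, which is why detection and filtering serve as a second line of defence rather than a guarantee.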

Real-Time Security Monitoring:

Real-time security monitoring can be used to recognize threats, including distinguishing actual threats from false positives. A big data framework should include analysis and monitoring tools. Because these tools may be available within the framework itself, they can be placed in the front-end system, where their main task is to supply the analytics needed to analyze feedback and identify threats.
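A simple real-time rule can illustrate the trade-off between catching threats and avoiding false positives. The sketch assumes events for each source arrive in timestamp order; the threshold, window and `FailedLoginMonitor` name are hypothetical. A single failed login does not raise an alert, but a burst from one source within the window does.

```python
from collections import deque


class FailedLoginMonitor:
    """Hypothetical sliding-window rule: alert when one source produces more
    than `threshold` failed logins within `window` seconds. Requiring a burst,
    rather than alerting on every event, trades some detection speed for
    fewer false positives."""

    def __init__(self, threshold=5, window=60.0):
        self.threshold = threshold
        self.window = window
        self._events = {}   # source -> deque of recent timestamps

    def observe(self, source, timestamp):
        """Record one failed login; return True if the source should be flagged."""
        q = self._events.setdefault(source, deque())
        q.append(timestamp)
        while q and q[0] <= timestamp - self.window:
            q.popleft()     # drop events that have aged out of the window
        return len(q) > self.threshold
```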


5. References:

Al Nuaimi, E., Al Neyadi, H., Mohamed, N. and Al-Jaroodi, J., 2015. Applications of big data to smart cities. Journal of Internet Services and Applications, 6(1), p.25.

Archenaa, J. and Anita, E.M., 2015. A survey of big data analytics in healthcare and government. Procedia Computer Science, 50, pp.408-413.

Bertot, J.C., Gorham, U., Jaeger, P.T., Sarin, L.C. and Choi, H., 2014. Big data, open government and e-government: Issues, policies and recommendations. Information Polity, 19(1,2), pp.5-16.

Chen, C.P. and Zhang, C.Y., 2014. Data-intensive applications, challenges, techniques and technologies: A survey on Big Data. Information Sciences, 275, pp.314-347.

Chen, M., Mao, S. and Liu, Y., 2014. Big data: A survey. Mobile Networks and Applications, 19(2), pp.171-209.

Clarke, A. and Margetts, H., 2014. Governments and citizens getting to know each other? Open, closed, and big data in public management reform. Policy & Internet, 6(4), pp.393-417.

Einav, L. and Levin, J., 2014. Economics in the age of big data. Science, 346(6210), p.1243089.

Hashem, I.A.T., Chang, V., Anuar, N.B., Adewole, K., Yaqoob, I., Gani, A., Ahmed, E. and Chiroma, H., 2016. The role of big data in smart city. International Journal of Information Management, 36(5), pp.748-758.

Hilbert, M., 2016. Big data for development: A review of promises and challenges. Development Policy Review, 34(1), pp.135-174.

Janssen, M. and van den Hoven, J., 2015. Big and Open Linked Data (BOLD) in government: A challenge to transparency and privacy?
