Hadoop File System: Forensic Investigation and Architecture Report

Added on 2022/11/13


This report provides a comprehensive overview of the Hadoop File System (HDFS), its internal architecture, and its application in forensic investigations. It begins with an executive summary and introduction to Hadoop, emphasizing its role in big data storage and processing. The report details the core components of the Hadoop architecture, including the namenode, datanode, and blocks, explaining their functions and interactions within the HDFS. Furthermore, it delves into the structures of the HDFS, such as CheckpointNode, BackupNode, and file system snapshots, elucidating their roles in data management and system recovery. The report then focuses on HDFS forensic investigation, outlining different categories of forensic data within Hadoop, including information support, evidence of record, and application/user evidence, which can be useful in digital forensic investigations. The report concludes by summarizing the key findings, emphasizing the importance of HDFS in storing large amounts of data and its relevance to forensic analysis.

Running head: HADOOP FILE SYSTEM

HADOOP FILE SYSTEM

Name of the Student

Name of the University

Author Note


Executive Summary

This report covers the main aspects of the Hadoop File System. Hadoop is software that can store huge amounts of data and process it to solve large computational problems. The report describes the internal architecture of Hadoop, which consists of the namenode, the datanodes, and blocks. It then discusses the internal structures of HDFS and how they work, including the BackupNode, the CheckpointNode, and file system snapshots. Finally, the report turns to forensic investigation with Hadoop: because Hadoop can store huge amounts of data, that data can be useful in forensic investigations, and the report closes with the categories of forensic data found in Hadoop.


Table of Contents

Introduction

Discussions

  Hadoop internals and architecture

  Hadoop Distributed File System Structure

  HDFS Forensic Investigation

Conclusion

References


Introduction:

Hadoop is an open-source software library that allows the distributed processing of large data sets across clusters of computers. It is a platform for big data and is widely used by many organizations (Bende and Shedge, 2016). Hadoop provides a software framework for distributed storage and for processing big data using the MapReduce programming model. This report deals with forensic investigation using Hadoop. It discusses the internals and architecture of Hadoop, the structure of the Hadoop Distributed File System (HDFS), and HDFS forensic investigation.

Discussions:

Hadoop internals and architecture:

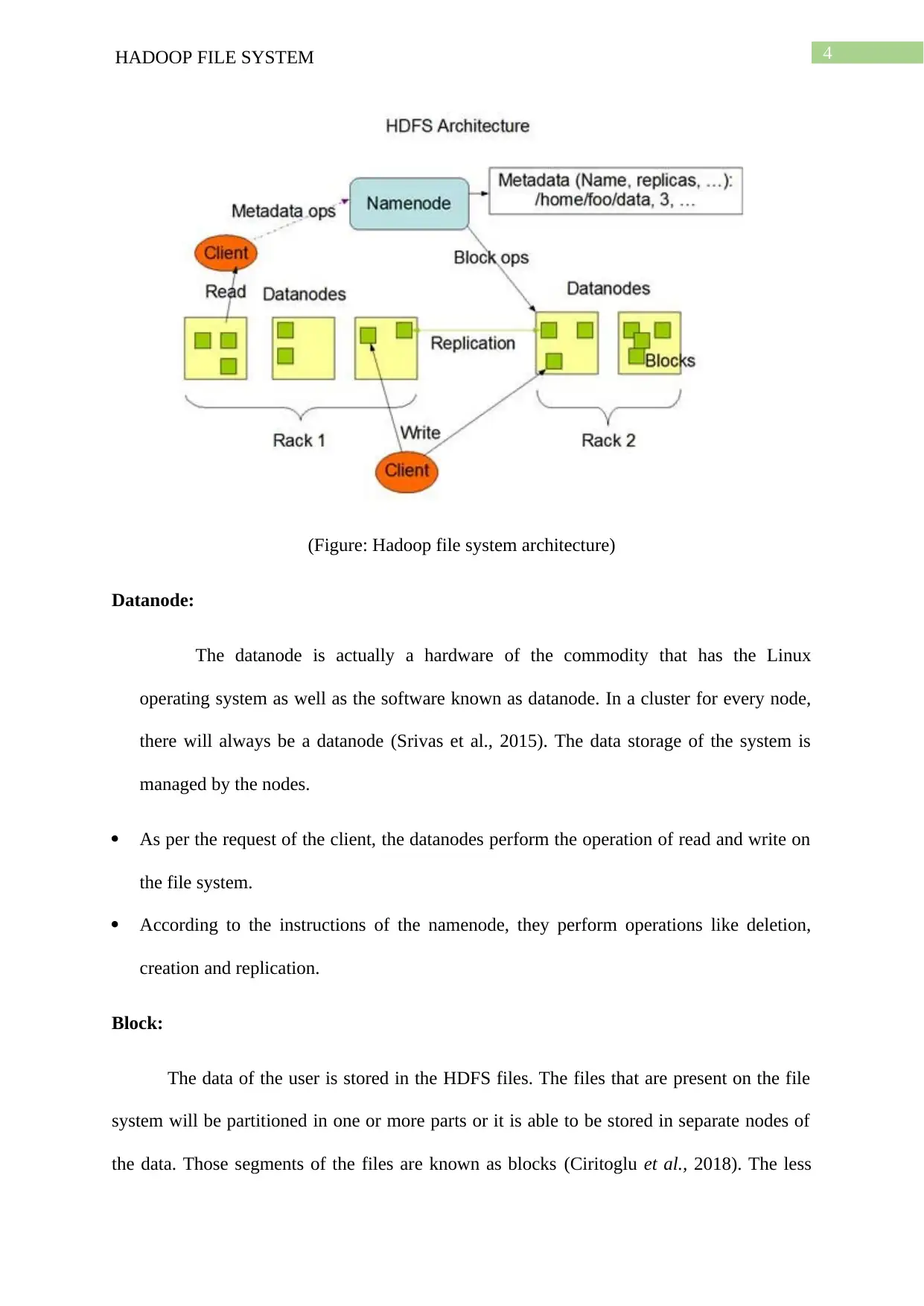

The Hadoop File System follows a master-slave architecture and consists of the following elements:

Namenode:

The namenode runs on commodity hardware with a Linux operating system and the namenode software (Gao et al., 2017). The namenode itself is software that can be executed on commodity hardware. The machine that runs the namenode software acts as the master server. It performs the following tasks:

It manages the file system namespace.

It controls client access to files.

It executes file system operations such as opening, closing, and renaming files and directories.
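The namenode tasks listed above can be pictured with a small in-memory model. This is only a sketch under stated assumptions: `ToyNamespace` and its methods are hypothetical names invented for illustration and are not part of Hadoop, and access control is left out.

```python
class ToyNamespace:
    """Illustrative stand-in for the namespace a namenode manages (not Hadoop code)."""

    def __init__(self):
        self.entries = {"/": "dir"}  # path -> "dir" or "file"
        self.open_files = set()      # paths currently open

    def mkdir(self, path):
        self.entries[path] = "dir"

    def create(self, path):
        # A newly created file starts out open.
        self.entries[path] = "file"
        self.open_files.add(path)

    def close(self, path):
        self.open_files.discard(path)

    def rename(self, old, new):
        # Move the entry itself and, for a directory, everything beneath it.
        prefix = old.rstrip("/") + "/"
        for path in sorted(self.entries):
            if path == old or path.startswith(prefix):
                moved = new + path[len(old):]
                self.entries[moved] = self.entries.pop(path)
                if path in self.open_files:
                    self.open_files.remove(path)
                    self.open_files.add(moved)


ns = ToyNamespace()
ns.mkdir("/logs")
ns.create("/logs/a.txt")
ns.close("/logs/a.txt")
ns.rename("/logs", "/archive")
print(sorted(ns.entries))
```

The point of the sketch is that these operations touch only metadata (paths and their state); no file contents are involved.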


(Figure: Hadoop file system architecture)

Datanode:

A datanode is commodity hardware running a Linux operating system and the datanode software. There is typically one datanode for each node in the cluster (Srivas et al., 2015). The datanodes manage the data storage of their systems.

Datanodes perform read and write operations on the file system at the request of clients.

They perform block creation, deletion, and replication according to the instructions of the namenode.

Block:

User data is stored in HDFS files. Each file is divided into one or more segments, which can be stored on separate datanodes. These file segments are called blocks (Ciritoglu et al., 2018). A block is the minimum amount of data that HDFS can read or write. The default block size was 64 MB in Hadoop 1.x and is 128 MB in Hadoop 2.x and later. The size can be changed in the HDFS configuration (the dfs.blocksize property).
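As a worked example of the partitioning described above, the sketch below computes how many blocks a file would occupy for a given block size. The function name `split_into_blocks` is hypothetical, and the 64 MB figure is simply the value mentioned in the text; any configured block size can be passed instead.

```python
MB = 1024 * 1024


def split_into_blocks(file_size, block_size):
    """Return the sizes, in bytes, of the blocks needed to hold file_size bytes."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)  # the final block only holds the leftover bytes
    return sizes


blocks = split_into_blocks(200 * MB, 64 * MB)
print(len(blocks), [size // MB for size in blocks])  # 4 [64, 64, 64, 8]
```

A 200 MB file with 64 MB blocks therefore needs four blocks, the last of which carries only 8 MB.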

Hadoop Distributed File System Structure:

The Hadoop Distributed File System includes the following structures:

CheckpointNode:

A CheckpointNode is another node in the HDFS cluster that runs in a namenode role. It downloads the current checkpoint and the journal files from the namenode, merges them locally, and returns the new checkpoint to the namenode (Ramakrishnan et al., 2017). At that point the namenode holds the new checkpoint and an empty journal. Creating periodic checkpoints is one way to protect the file system metadata.
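The merge step can be sketched as replaying journal records over a copy of the old checkpoint. This is a simplified illustration, not the real on-disk format: the record layout `(operation, path, argument)` and the function `apply_journal` are assumptions made for the example.

```python
def apply_journal(checkpoint, journal):
    """Return a new checkpoint: the old image with every journal record replayed."""
    image = dict(checkpoint)  # work on a copy; the downloaded checkpoint is left untouched
    for op, path, arg in journal:
        if op == "create":
            image[path] = arg             # arg: metadata of the new file
        elif op == "delete":
            image.pop(path, None)
        elif op == "rename":
            image[arg] = image.pop(path)  # arg: the new path
        else:
            raise ValueError(f"unknown journal operation: {op}")
    return image


old_checkpoint = {"/a": {"blocks": 1}}
journal = [
    ("create", "/b", {"blocks": 2}),
    ("rename", "/a", "/c"),
    ("delete", "/b", None),
]
new_checkpoint = apply_journal(old_checkpoint, journal)
journal = []  # once the new checkpoint is accepted, the journal can start empty
print(new_checkpoint)  # {'/c': {'blocks': 1}}
```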

BackupNode:

The BackupNode can create periodic checkpoints. In addition, it maintains an up-to-date, in-memory image of the file system namespace that is always synchronized with the state of the namenode. The namenode informs the BackupNode of all transactions as a stream of changes. The memory requirements of the namenode and the BackupNode are the same (Fahmy, Elghandour and Nagi, 2016), because both hold the namespace in memory. If the namenode fails, the BackupNode's in-memory image and the checkpoint on disk serve as a backup of the namespace state. The BackupNode holds all file system information except the block locations.
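The relationship between the two nodes can be sketched as a primary that forwards each namespace change to a listener. The classes `ToyNameNode` and `ToyBackupNode` are hypothetical and exist only for this illustration; real HDFS streams journal transactions over the network rather than calling a method directly.

```python
class ToyBackupNode:
    """Keeps an in-memory copy of the namespace, but no block locations."""

    def __init__(self):
        self.namespace = {}

    def on_edit(self, path, block_ids):
        self.namespace[path] = list(block_ids)


class ToyNameNode:
    """Holds the namespace and block locations; streams namespace edits to backups."""

    def __init__(self):
        self.namespace = {}        # path -> list of block ids
        self.block_locations = {}  # block id -> datanodes holding a replica
        self.backups = []

    def attach(self, backup):
        self.backups.append(backup)

    def record_file(self, path, block_ids, locations):
        self.namespace[path] = list(block_ids)
        self.block_locations.update(locations)
        for backup in self.backups:
            backup.on_edit(path, block_ids)  # only the namespace change is forwarded


primary = ToyNameNode()
backup = ToyBackupNode()
primary.attach(backup)
primary.record_file("/data/part-0", ["blk_1", "blk_2"],
                    {"blk_1": ["dn1", "dn2"], "blk_2": ["dn2", "dn3"]})
print(backup.namespace == primary.namespace)  # True
```

After every edit the backup's namespace matches the primary's, while the block-location map exists only on the primary.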

File system snapshot:


A snapshot is a picture of the state of the whole cluster at a point in time. It is saved to prevent data loss during a system upgrade. The original data files are not copied into the snapshot, because doing so would double the storage required across the whole cluster. When the administrator requests a snapshot, the namenode takes the existing checkpoint file and merges the journal logs into it, then stores the result in persistent storage (Fahmy, Elghandour and Nagi, 2016). Each datanode copies its directory information and creates links to its data blocks, storing both locally. This information is used for recovery if the cluster fails.
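The datanode side of a snapshot can be illustrated with ordinary file-system links. The sketch below assumes that the "links" in the description are hard links, and it uses a hypothetical helper, `snapshot_storage_dir`, on a temporary directory. It demonstrates the idea only and is not Hadoop code.

```python
import os
import tempfile


def snapshot_storage_dir(current_dir, snapshot_dir):
    """Mirror current_dir into snapshot_dir using hard links instead of copies."""
    for root, _dirs, files in os.walk(current_dir):
        relative = os.path.relpath(root, current_dir)
        target_root = os.path.normpath(os.path.join(snapshot_dir, relative))
        os.makedirs(target_root, exist_ok=True)   # copy the directory structure
        for name in files:
            # A hard link adds a second name for the same data; nothing is duplicated.
            os.link(os.path.join(root, name), os.path.join(target_root, name))


with tempfile.TemporaryDirectory() as base:
    current = os.path.join(base, "current")
    os.makedirs(os.path.join(current, "subdir"))
    block_file = os.path.join(current, "subdir", "blk_1")
    with open(block_file, "wb") as handle:
        handle.write(b"block contents")

    snapshot = os.path.join(base, "snapshot")
    snapshot_storage_dir(current, snapshot)
    linked = os.path.join(snapshot, "subdir", "blk_1")
    print(os.path.samefile(block_file, linked))  # True
```

Because both names refer to the same underlying data, the snapshot costs almost no extra space, which matches the storage argument made above.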

HDFS Forensic Investigation:

In forensics, evidence is the foundation, and in digital investigations that evidence is data. Hadoop can store very large volumes of data, and this data provides the information used as evidence. Whether a given piece of data is useful depends on the case and the scenario under investigation. There are three categories of forensic data in Hadoop.

Supporting information: Indirect information that helps identify evidence or describes the configuration of the cluster.

Record evidence: Direct information. Any data analysed in HDFS falls into this category.

User and application evidence: Log files, configuration files, analysis scripts, metadata, and any logic performed on the data (Kakoulli and Herodotou, 2017). It shows how the data was analysed or generated.
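An investigator's inventory could tag each collected artifact with one of the three categories above. The sketch below is purely illustrative: the enum, the artifact kinds, and the mapping are assumptions chosen to mirror the descriptions in the text, not an established taxonomy or tool.

```python
from enum import Enum


class EvidenceCategory(Enum):
    SUPPORTING_INFORMATION = "supporting information"
    RECORD_EVIDENCE = "record evidence"
    USER_APPLICATION_EVIDENCE = "user and application evidence"


# Hypothetical artifact kinds, mapped according to the category descriptions.
CATEGORY_BY_KIND = {
    "cluster_layout": EvidenceCategory.SUPPORTING_INFORMATION,
    "analysed_dataset": EvidenceCategory.RECORD_EVIDENCE,
    "log_file": EvidenceCategory.USER_APPLICATION_EVIDENCE,
    "configuration_file": EvidenceCategory.USER_APPLICATION_EVIDENCE,
    "analysis_script": EvidenceCategory.USER_APPLICATION_EVIDENCE,
    "metadata": EvidenceCategory.USER_APPLICATION_EVIDENCE,
}


def categorize(artifacts):
    """Group (name, kind) pairs by category; unknown kinds go under None for review."""
    grouped = {}
    for name, kind in artifacts:
        grouped.setdefault(CATEGORY_BY_KIND.get(kind), []).append(name)
    return grouped


inventory = [
    ("sales_2016.csv", "analysed_dataset"),
    ("namenode.log", "log_file"),
    ("job.py", "analysis_script"),
    ("unlabelled.bin", "unknown"),
]
for category, names in categorize(inventory).items():
    print(category.value if category else "needs review", names)
```

Keeping unknown kinds in a separate group, rather than guessing, reflects the point that the usefulness of data depends on the case.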


Conclusion:

This report has examined the Hadoop File System and its architecture. Hadoop is a collection of software utilities that uses a network of computers to solve problems involving enormous amounts of data and computation. The report discussed the architecture of Hadoop and its internal structures, and then the categories of forensic data that an investigation of the Hadoop File System can draw on. HDFS can be very useful in forensic investigation because it can store huge amounts of data.


References:

Bende, S. and Shedge, R., 2016. Dealing with small files problem in Hadoop distributed file system. Procedia Computer Science, 79, pp.1001-1012.

Gao, S., Li, L., Li, W., Janowicz, K. and Zhang, Y., 2017. Constructing gazetteers from volunteered big geo-data based on Hadoop. Computers, Environment and Urban Systems, 61, pp.172-186.

Srivas, M.C., Ravindra, P., Saradhi, U.V., Pande, A.A., Sanapala, C.G.K.B., Renu, L.V., Vellanki, V., Kavacheri, S. and Hadke, A., MapR Technologies, Inc., 2015. Map-reduce ready distributed file system. U.S. Patent 9,207,930.

Ciritoglu, H.E., Saber, T., Buda, T.S., Murphy, J. and Thorpe, C., 2018, July. Towards a better replica management for Hadoop distributed file system. In 2018 IEEE International Congress on Big Data (BigData Congress) (pp. 104-111). IEEE.

Ciritoglu, H.E., Batista de Almeida, L., Cunha de Almeida, E., Buda, T.S., Murphy, J. and Thorpe, C., 2018, April. Investigation of replication factor for performance enhancement in the Hadoop distributed file system. In Companion of the 2018 ACM/SPEC International Conference on Performance Engineering (pp. 135-140). ACM.

Ramakrishnan, R., Sridharan, B., Douceur, J.R., Kasturi, P., Krishnamachari-Sampath, B., Krishnamoorthy, K., Li, P., Manu, M., Michaylov, S., Ramos, R. and Sharman, N., 2017, May. Azure data lake store: a hyperscale distributed file service for big data analytics. In Proceedings of the 2017 ACM International Conference on Management of Data (pp. 51-63). ACM.


Kakoulli, E. and Herodotou, H., 2017, May. OctopusFS: A distributed file system with tiered storage management. In Proceedings of the 2017 ACM International Conference on Management of Data (pp. 65-78). ACM.

Fahmy, M.M., Elghandour, I. and Nagi, M., 2016, December. CoS-HDFS: co-locating geo-distributed spatial data in Hadoop distributed file system. In Proceedings of the 3rd IEEE/ACM International Conference on Big Data Computing, Applications and Technologies (pp. 123-132). ACM.
