Big Data Processing: A Comparative Analysis of Apache Hadoop and Spark

VerifiedAdded on 2023/01/18

|63

|15956

|48

Report

AI Summary

This report provides a comprehensive comparative analysis of Apache Hadoop and Apache Spark, two prominent tools in the realm of big data processing. It begins with an overview of the challenges and complexities associated with big data, including its volume, velocity, variety, and veracity. The report then delves into the core concepts of big data analytics, distinguishing between batch and streaming processing methods. A significant portion of the paper is dedicated to dissecting Apache Hadoop, exploring its evolution, ecosystem, components (HDFS, MapReduce, YARN, etc.), and practical applications. Following this, the report pivots to Apache Spark, detailing its ecosystem, key components (Spark Core, SQL, Streaming, MLlib, etc.), and real-world use cases. The analysis culminates in a direct comparison of Hadoop and Spark, highlighting their key differences, market positions, and suitability for various applications. The report concludes by advocating for the use of Apache Spark due to its superior processing speeds, near real-time analytics capabilities, and user-friendly APIs.

Running head: APACHE HADOOP VERSUS APACHE SPARK 1

Apache Hadoop Versus Apache Spark

Name

Institutional Affiliation

Course

Date

Apache Hadoop Versus Apache Spark

Name

Institutional Affiliation

Course

Date

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

APACHE HADOOP VERSUS APACHE SPARK 2

Abstract

Big data have acquired huge attention in the past few years. Evaluating big data is a

basic requirement in the modern era, and such requirements are terrifying when assessing

massive data sets. It is very challenging to evaluate the huge amount of data to acquire

different patterns and relevance of data on timely manner. This paper will investigate the Big

Data Analysis concept and discuss two Big Data analytical tools: Apache Spark and Apache

Hadoop.

This paper proposes that Apache Spark should be used. In terms of performance,

apache spark has higher processing speeds and near real time analytics. On the other hand,

Apache Hadoop was designed for batch processing, thus, it does not support real time

processing and is much slower in terms of processing as compared to Apache spark.

Secondly, apache spark is popular for its ease of use because it come with APIs that are user-

friendly build for Spark SQL, Python, Java, and Scala. Apache Spark is also built with an

interactive mode to allow the users and application developers to have immediate response

for actions and queries taken. On the other hand, Apache Hadoop does not have any

interactive elements but only supports add-ons like Pig and Hive. Apache Spark is also

compatible with Apache Hadoop and share all the sources of data that Hadoop uses.

However, because of better performance, Apache is still the preferred option.

Abstract

Big data have acquired huge attention in the past few years. Evaluating big data is a

basic requirement in the modern era, and such requirements are terrifying when assessing

massive data sets. It is very challenging to evaluate the huge amount of data to acquire

different patterns and relevance of data on timely manner. This paper will investigate the Big

Data Analysis concept and discuss two Big Data analytical tools: Apache Spark and Apache

Hadoop.

This paper proposes that Apache Spark should be used. In terms of performance,

apache spark has higher processing speeds and near real time analytics. On the other hand,

Apache Hadoop was designed for batch processing, thus, it does not support real time

processing and is much slower in terms of processing as compared to Apache spark.

Secondly, apache spark is popular for its ease of use because it come with APIs that are user-

friendly build for Spark SQL, Python, Java, and Scala. Apache Spark is also built with an

interactive mode to allow the users and application developers to have immediate response

for actions and queries taken. On the other hand, Apache Hadoop does not have any

interactive elements but only supports add-ons like Pig and Hive. Apache Spark is also

compatible with Apache Hadoop and share all the sources of data that Hadoop uses.

However, because of better performance, Apache is still the preferred option.

APACHE HADOOP VERSUS APACHE SPARK 3

Table of Contents

1 Introduction........................................................................................................................5

2 Big Data.............................................................................................................................6

3 Big Data Analytics.............................................................................................................8

4 Big Data Analytic Tools.....................................................................................................9

5 Apache Hadoop..................................................................................................................9

5.1 Evolvement of Apache Hadoop...................................................................................9

5.2 Hadoop Ecosystem/ Architecture..............................................................................10

5.3 Components of Apache Hadoop................................................................................10

5.3.1 HDFS (Hadoop distributed file system).............................................................10

5.3.2 Hadoop MapReduce...........................................................................................12

5.3.3 Hadoop Common...............................................................................................13

5.3.4 Hadoop Yarn......................................................................................................13

5.3.5 Other Hadoop Components................................................................................15

5.4 Hadoop Download.....................................................................................................30

5.5 Types of Hadoop installation.....................................................................................31

5.6 Major Commands of Hadoop....................................................................................31

5.7 Hadoop Streaming.....................................................................................................32

5.8 Reasons to Choose Apache Hadoop..........................................................................32

5.9 Practical Applications of Apache Hadoop................................................................34

5.10 Apache Hadoop Scope...........................................................................................36

6 Apache Spark...................................................................................................................37

6.1 Apache Spark Ecosystem..........................................................................................37

6.2 Components of Apache Spark...................................................................................39

6.2.1 Apache Spark core.............................................................................................39

6.2.2 Apache Spark SQL.............................................................................................40

6.2.3 Apache Spark Streaming....................................................................................40

6.2.4 Apache Spark MLlib..........................................................................................42

6.2.5 Apache Spark GraphX.......................................................................................42

6.2.6 Apache Spark R..................................................................................................42

6.2.7 Scalability Function...........................................................................................43

6.3 Running Spark Applications on a Cluster.................................................................43

6.4 Applications of Apache Spark...................................................................................45

6.5 Practical Applications of Apache Spark....................................................................46

6.6 Reasons to Choose Spark..........................................................................................47

7 Comparison between Apache Hadoop and Apache Spark...............................................49

Table of Contents

1 Introduction........................................................................................................................5

2 Big Data.............................................................................................................................6

3 Big Data Analytics.............................................................................................................8

4 Big Data Analytic Tools.....................................................................................................9

5 Apache Hadoop..................................................................................................................9

5.1 Evolvement of Apache Hadoop...................................................................................9

5.2 Hadoop Ecosystem/ Architecture..............................................................................10

5.3 Components of Apache Hadoop................................................................................10

5.3.1 HDFS (Hadoop distributed file system).............................................................10

5.3.2 Hadoop MapReduce...........................................................................................12

5.3.3 Hadoop Common...............................................................................................13

5.3.4 Hadoop Yarn......................................................................................................13

5.3.5 Other Hadoop Components................................................................................15

5.4 Hadoop Download.....................................................................................................30

5.5 Types of Hadoop installation.....................................................................................31

5.6 Major Commands of Hadoop....................................................................................31

5.7 Hadoop Streaming.....................................................................................................32

5.8 Reasons to Choose Apache Hadoop..........................................................................32

5.9 Practical Applications of Apache Hadoop................................................................34

5.10 Apache Hadoop Scope...........................................................................................36

6 Apache Spark...................................................................................................................37

6.1 Apache Spark Ecosystem..........................................................................................37

6.2 Components of Apache Spark...................................................................................39

6.2.1 Apache Spark core.............................................................................................39

6.2.2 Apache Spark SQL.............................................................................................40

6.2.3 Apache Spark Streaming....................................................................................40

6.2.4 Apache Spark MLlib..........................................................................................42

6.2.5 Apache Spark GraphX.......................................................................................42

6.2.6 Apache Spark R..................................................................................................42

6.2.7 Scalability Function...........................................................................................43

6.3 Running Spark Applications on a Cluster.................................................................43

6.4 Applications of Apache Spark...................................................................................45

6.5 Practical Applications of Apache Spark....................................................................46

6.6 Reasons to Choose Spark..........................................................................................47

7 Comparison between Apache Hadoop and Apache Spark...............................................49

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

APACHE HADOOP VERSUS APACHE SPARK 4

7.1 The Market Situation.................................................................................................49

7.2 The main Difference Between Apache Hadoop and Apache Spark..........................50

7.3 Examples of Practical Applications...........................................................................51

8 Conclusion........................................................................................................................52

9 References........................................................................................................................54

7.1 The Market Situation.................................................................................................49

7.2 The main Difference Between Apache Hadoop and Apache Spark..........................50

7.3 Examples of Practical Applications...........................................................................51

8 Conclusion........................................................................................................................52

9 References........................................................................................................................54

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

APACHE HADOOP VERSUS APACHE SPARK 5

1 Introduction

In the current age of computer, people are increasingly depending on technological

devices and almost all aspects of human life, including social, personal and professional are

wholly covered with technology. Almost all of those aspects deal with some kind of data

(Hartung, 2018). As a result of the huge increased in data complexity caused by rapid

increase in variety and speed, new challenges have emerged in the sector of data

management, thus the evolvement of the term Big Data. Analysing, storing, assessing and

securing big data are among the popular terms in the current technological world (Hussain &

Roy, 2016). Big data analysis is a method of collecting data from various resources then

arranging that information in a significant way and then evaluating those big data sets to

uncover important figures and facts from that data collection. This data analysis assists in

identifying hidden figures and facts of data, as well as ranking or categorizing the

information based on the importance it offers (Hoskins, 2014). In summary, big data analysis

is the process of acquiring knowledge from massive variety of data. Organizations such as

twitter processes about 10 thousand tweets per second before broadcasting them to people.

They evaluate all data at a very fast rate to make sure each tweet is according to the set policy

and inhibited words are removed from the tweets. The evaluation process must be carried out

in real time to ensure that there no delays in broadcasting tweets live to the public

(Kirkpatrick, 2013). For instance, enterprises such as Forex Trading evaluate social

information to forecast public trends of the future. To evaluate such large data, it is necessary

to use analytical tools. This paper concentrates on Apache Hadoop and Apache Spark. The

sections of this paper include: literature review that explores the general view of big data and

big data analytics. The paper will also discuss the two leading big data analytical tools;

Apache Scope and Apache Hadoop.

1 Introduction

In the current age of computer, people are increasingly depending on technological

devices and almost all aspects of human life, including social, personal and professional are

wholly covered with technology. Almost all of those aspects deal with some kind of data

(Hartung, 2018). As a result of the huge increased in data complexity caused by rapid

increase in variety and speed, new challenges have emerged in the sector of data

management, thus the evolvement of the term Big Data. Analysing, storing, assessing and

securing big data are among the popular terms in the current technological world (Hussain &

Roy, 2016). Big data analysis is a method of collecting data from various resources then

arranging that information in a significant way and then evaluating those big data sets to

uncover important figures and facts from that data collection. This data analysis assists in

identifying hidden figures and facts of data, as well as ranking or categorizing the

information based on the importance it offers (Hoskins, 2014). In summary, big data analysis

is the process of acquiring knowledge from massive variety of data. Organizations such as

twitter processes about 10 thousand tweets per second before broadcasting them to people.

They evaluate all data at a very fast rate to make sure each tweet is according to the set policy

and inhibited words are removed from the tweets. The evaluation process must be carried out

in real time to ensure that there no delays in broadcasting tweets live to the public

(Kirkpatrick, 2013). For instance, enterprises such as Forex Trading evaluate social

information to forecast public trends of the future. To evaluate such large data, it is necessary

to use analytical tools. This paper concentrates on Apache Hadoop and Apache Spark. The

sections of this paper include: literature review that explores the general view of big data and

big data analytics. The paper will also discuss the two leading big data analytical tools;

Apache Scope and Apache Hadoop.

APACHE HADOOP VERSUS APACHE SPARK 6

2 Big Data

The availability and exponential growth of massive amount of information with

different variety is referred to as Big Data (Hoskins, 2014). Big Data is a term that is

popularly used in the current automated world and is perceived to be as important to the

society and business as the internet. It is extensively proved and believed that more data

result to more precise assessments, which in turns lead to more timely, legitimate and

confident decision making (Bettencourt, 2014). Better decisions and judgement result in

reduced risk, higher operational efficiencies, and reductions of cost. Researchers of Big Data

picture big data as follows:

Volume-wise: this is a significant factor that has led to the emergence of big data.

volume is increasing to different factors. Governments and companies have been

documenting transactional data for years. Social media is consistently sending automation,

machine-to-machine data, unstructured data, sensors data, among others (Saeed, 2018).

Previously, storage of data was still a problem, however, the emergence of affordable and

advanced storage devices has assisted in addressing the issue of data storage (Bughin, 2016).

Nevertheless, volume still causes other problems such as identifying the significance within

huge data volumes and gathering important information by analysing the data.

Velocity-wise: the rate at which data volume is increasing is becoming critical and it is

challenging to address the issue with efficiency and in time. The need to manage large pieces

of information in real time is brought by the rise of RFID (radio-frequency identification)

tags, robotics, sensors and automation, internet streaming, among other technology facilities

(Catlett & Ghani, 2015). As such, increase in data velocity is among the biggest challenge

being experienced by every big company today.

2 Big Data

The availability and exponential growth of massive amount of information with

different variety is referred to as Big Data (Hoskins, 2014). Big Data is a term that is

popularly used in the current automated world and is perceived to be as important to the

society and business as the internet. It is extensively proved and believed that more data

result to more precise assessments, which in turns lead to more timely, legitimate and

confident decision making (Bettencourt, 2014). Better decisions and judgement result in

reduced risk, higher operational efficiencies, and reductions of cost. Researchers of Big Data

picture big data as follows:

Volume-wise: this is a significant factor that has led to the emergence of big data.

volume is increasing to different factors. Governments and companies have been

documenting transactional data for years. Social media is consistently sending automation,

machine-to-machine data, unstructured data, sensors data, among others (Saeed, 2018).

Previously, storage of data was still a problem, however, the emergence of affordable and

advanced storage devices has assisted in addressing the issue of data storage (Bughin, 2016).

Nevertheless, volume still causes other problems such as identifying the significance within

huge data volumes and gathering important information by analysing the data.

Velocity-wise: the rate at which data volume is increasing is becoming critical and it is

challenging to address the issue with efficiency and in time. The need to manage large pieces

of information in real time is brought by the rise of RFID (radio-frequency identification)

tags, robotics, sensors and automation, internet streaming, among other technology facilities

(Catlett & Ghani, 2015). As such, increase in data velocity is among the biggest challenge

being experienced by every big company today.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

APACHE HADOOP VERSUS APACHE SPARK 7

Variety-wise: although the increase of huge data volume is a huge challenge, data

variety is a bigger problem. Information is increasing in different varieties including,

different formats, unstructured, various file systems, images, financial data, scientific data,

structured, relational and non-relational, videos, multimedia, aviation data, etc (Dhar, 2014).

The issue is finding ways to correlate the various types of data in time to obtain value from

this data. currently, many companies are trying hard to acquire better solutions to the issue.

Variability-wise: the inconsistent trend of the flow of big data is a big challenge.

Social media reaction to events across the globe drives large volumes of information which

requires timely assessments before trend changes (Diesner, 2015). Events across the world

have an impact on financial markets, and this operating cost increase more while handling

unstructured data.

Complexity-wise: huge volumes of data, inconsistent trends, and increasing variety of

data makes big data very challenging. In spite of all the above facts, big data must be sorted

out to correlate, connect and develop useful relational linkages and hierarchies in time before

the information becomes difficult to control (Dumbill, 2013). This illustrates the complexity

involved in today’s big data. in short, any repository of big data with the following features

can be referred to as big data.

Central planning and management

Extensible: primary capabilities can be altered and augmented

Manages huge amounts of data (Zeide, 2017)

Less costly

Offer capabilities for processing data

Accessibility: highly available open source or commercial good with

excellent usability (Hare, 2014)

Distributed repetitive data storage

Variety-wise: although the increase of huge data volume is a huge challenge, data

variety is a bigger problem. Information is increasing in different varieties including,

different formats, unstructured, various file systems, images, financial data, scientific data,

structured, relational and non-relational, videos, multimedia, aviation data, etc (Dhar, 2014).

The issue is finding ways to correlate the various types of data in time to obtain value from

this data. currently, many companies are trying hard to acquire better solutions to the issue.

Variability-wise: the inconsistent trend of the flow of big data is a big challenge.

Social media reaction to events across the globe drives large volumes of information which

requires timely assessments before trend changes (Diesner, 2015). Events across the world

have an impact on financial markets, and this operating cost increase more while handling

unstructured data.

Complexity-wise: huge volumes of data, inconsistent trends, and increasing variety of

data makes big data very challenging. In spite of all the above facts, big data must be sorted

out to correlate, connect and develop useful relational linkages and hierarchies in time before

the information becomes difficult to control (Dumbill, 2013). This illustrates the complexity

involved in today’s big data. in short, any repository of big data with the following features

can be referred to as big data.

Central planning and management

Extensible: primary capabilities can be altered and augmented

Manages huge amounts of data (Zeide, 2017)

Less costly

Offer capabilities for processing data

Accessibility: highly available open source or commercial good with

excellent usability (Hare, 2014)

Distributed repetitive data storage

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

APACHE HADOOP VERSUS APACHE SPARK 8

Very fast data insertion

Hardware sceptic

Parallel processing of tasks

3 Big Data Analytics

Big data analytics is the practice of employing assessment algorithms operating on

great supporting channels to reveal potentials hidden in big data, such as unknown patterns or

hidden correlations (Tromp, Pechenizkiy & Gaber, 2017). Based on the time required to

process big data, big data analytics can be grouped into two different paradigms.

Batch processing: here, information is first kept and then assessed. The

leading model for batch processing is MapReduce. The basic concept of MapReduce

is that information is first split into small portions (Cercone, F'IEEE, 2015). These

portions are later processed in a distributed and parallel way to create intermediate

outcomes. The end result is acquired by combining all the intermediate outcomes (Al

Jabri, Al-Badi & Ali, 2017). The MapReduce organizes computation resources near

the location of data, which prevents the occurrence of communication cost of

transmitting data. The model is easy to use and is extensively used in web mining,

bioinformatics and machine reading.

Streaming processing: the first thing is to assume that data value relies

on the freshness of data. therefore, the streaming processing model evaluates

information in a timely manner to obtain it outcome. In this model, information is

acquired in a stream. In its constant acquisition, because the stream carries large

volume and is fast, only a small section of the stream is kept in insufficient memory

(Batarseh, Yang & Deng, 2017). The few that passes over the stream are used to attain

Very fast data insertion

Hardware sceptic

Parallel processing of tasks

3 Big Data Analytics

Big data analytics is the practice of employing assessment algorithms operating on

great supporting channels to reveal potentials hidden in big data, such as unknown patterns or

hidden correlations (Tromp, Pechenizkiy & Gaber, 2017). Based on the time required to

process big data, big data analytics can be grouped into two different paradigms.

Batch processing: here, information is first kept and then assessed. The

leading model for batch processing is MapReduce. The basic concept of MapReduce

is that information is first split into small portions (Cercone, F'IEEE, 2015). These

portions are later processed in a distributed and parallel way to create intermediate

outcomes. The end result is acquired by combining all the intermediate outcomes (Al

Jabri, Al-Badi & Ali, 2017). The MapReduce organizes computation resources near

the location of data, which prevents the occurrence of communication cost of

transmitting data. The model is easy to use and is extensively used in web mining,

bioinformatics and machine reading.

Streaming processing: the first thing is to assume that data value relies

on the freshness of data. therefore, the streaming processing model evaluates

information in a timely manner to obtain it outcome. In this model, information is

acquired in a stream. In its constant acquisition, because the stream carries large

volume and is fast, only a small section of the stream is kept in insufficient memory

(Batarseh, Yang & Deng, 2017). The few that passes over the stream are used to attain

APACHE HADOOP VERSUS APACHE SPARK 9

approximation results. Streaming processing technology and theory have been

analysed for years. The streaming processing model is employed for online

applications, usually at the millisecond or second level (Bornakke & Due, 2018).

4 Big Data Analytic Tools

There are many big data tools for data evaluation today. However, only two tools will

be discussed in this paper; Apache Spark and Apache Hadoop.

5 Apache Hadoop

Apache Hadoop is an open-source data framework or platform built in Java, devoted

to analyse and store huge amounts of unstructured data (E. Laxmi Lydia & Srinivasa Rao,

2018). Digital mediums are transmitting large amounts of data, as such, new technologies of

big data are emerging at a fast rate. Nevertheless, Apache Hadoop was among the first tool to

be innovated. It enables several simultaneous tasks to execute from one to many servers

without delay (Kim & Lee, 2014). It comprises of a distributed file that permits transmission

of files and data between different nodes in split seconds. Besides, Apache Hadoop has the

ability to process effectively even in cases of node failure (MATACUTA & POPA, 2018).

5.1 Evolvement of Apache Hadoop

Scientists Mike Cafarella and Doug Cutting generated the Hadoop 1.0 platform and

introduced it in 2006 to promote delivery for Nutch search engine. It was inspired by

MapReduce of Google which divides an application into small sections to execute on

different nodes (Mach-Król & Modrzejewska, 2017). The Apache Software foundation

allowed the public to access the tool in 2012. The name of the tool came from Doug Cutting’s

kid yellow soft toy elephant. In the process of its modification, a second improved version

approximation results. Streaming processing technology and theory have been

analysed for years. The streaming processing model is employed for online

applications, usually at the millisecond or second level (Bornakke & Due, 2018).

4 Big Data Analytic Tools

There are many big data tools for data evaluation today. However, only two tools will

be discussed in this paper; Apache Spark and Apache Hadoop.

5 Apache Hadoop

Apache Hadoop is an open-source data framework or platform built in Java, devoted

to analyse and store huge amounts of unstructured data (E. Laxmi Lydia & Srinivasa Rao,

2018). Digital mediums are transmitting large amounts of data, as such, new technologies of

big data are emerging at a fast rate. Nevertheless, Apache Hadoop was among the first tool to

be innovated. It enables several simultaneous tasks to execute from one to many servers

without delay (Kim & Lee, 2014). It comprises of a distributed file that permits transmission

of files and data between different nodes in split seconds. Besides, Apache Hadoop has the

ability to process effectively even in cases of node failure (MATACUTA & POPA, 2018).

5.1 Evolvement of Apache Hadoop

Scientists Mike Cafarella and Doug Cutting generated the Hadoop 1.0 platform and

introduced it in 2006 to promote delivery for Nutch search engine. It was inspired by

MapReduce of Google which divides an application into small sections to execute on

different nodes (Mach-Król & Modrzejewska, 2017). The Apache Software foundation

allowed the public to access the tool in 2012. The name of the tool came from Doug Cutting’s

kid yellow soft toy elephant. In the process of its modification, a second improved version

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

APACHE HADOOP VERSUS APACHE SPARK 10

Hadoop 2.30 was launched on 20th February 2014. It consisted of major adjustments in the

architecture.

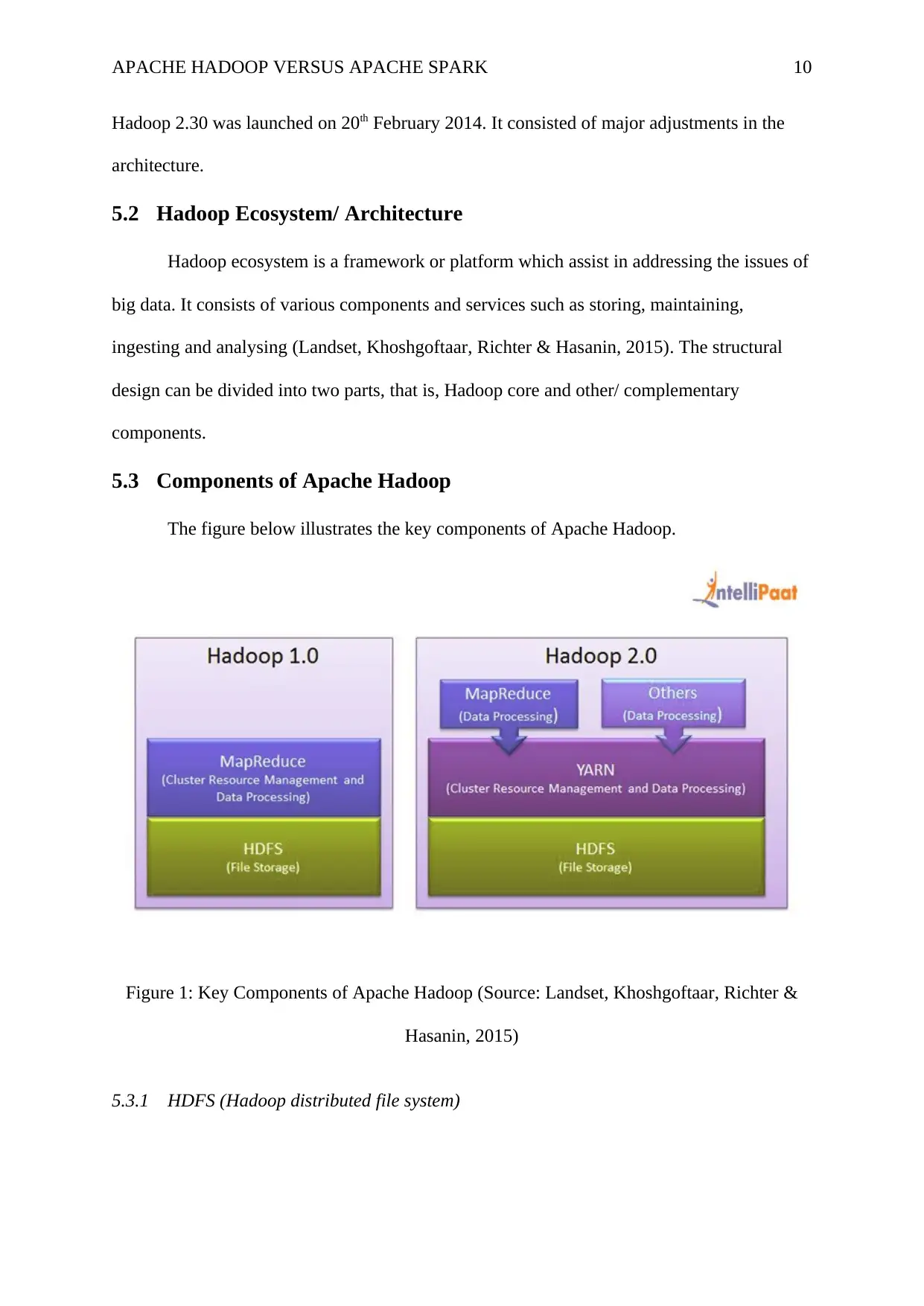

5.2 Hadoop Ecosystem/ Architecture

Hadoop ecosystem is a framework or platform which assist in addressing the issues of

big data. It consists of various components and services such as storing, maintaining,

ingesting and analysing (Landset, Khoshgoftaar, Richter & Hasanin, 2015). The structural

design can be divided into two parts, that is, Hadoop core and other/ complementary

components.

5.3 Components of Apache Hadoop

The figure below illustrates the key components of Apache Hadoop.

Figure 1: Key Components of Apache Hadoop (Source: Landset, Khoshgoftaar, Richter &

Hasanin, 2015)

5.3.1 HDFS (Hadoop distributed file system)

Hadoop 2.30 was launched on 20th February 2014. It consisted of major adjustments in the

architecture.

5.2 Hadoop Ecosystem/ Architecture

Hadoop ecosystem is a framework or platform which assist in addressing the issues of

big data. It consists of various components and services such as storing, maintaining,

ingesting and analysing (Landset, Khoshgoftaar, Richter & Hasanin, 2015). The structural

design can be divided into two parts, that is, Hadoop core and other/ complementary

components.

5.3 Components of Apache Hadoop

The figure below illustrates the key components of Apache Hadoop.

Figure 1: Key Components of Apache Hadoop (Source: Landset, Khoshgoftaar, Richter &

Hasanin, 2015)

5.3.1 HDFS (Hadoop distributed file system)

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

APACHE HADOOP VERSUS APACHE SPARK 11

HDFS stores information in small memory block and transmits them through a bunch

(Naidu, 2018). Every data is duplicated several times to make sure data is available. HDFS is

the most essential element of Hadoop Ecosystem. It is the basic Hadoop storage system. It is

developed in Java and offers fault tolerant, cost efficient, scalable and dependable data

storage for big data (Mavridis & Karatza, 2017). HDFS executes on product hardware and

comprises of default configuration for multiple installations. Many instances configuration is

required for large sets of data. Hadoop uses shell-like commands to communicate directly

with HDFS.

Components of HDFS

There are two main Hadoop HDFS components; DataNode abd NameNode.

NameNode: it is also referred to as Master node. It does not store

dataset or actual data. instead, it stores Metadata, that is, their location, which

Datanode the information is kept, number of blocks, on which Rack, among other

details. It comprises of directories and files. The work of HDFS NameNode is to

manage namespace of the filesystem, control user’s access to documents and run

execution of file system such closing, naming, opening directories and files (Aung &

Thein, 2014).

DataNode: it is also referred to as Slave. Its work is to store actual data

in HDFS. Datanode carries out the read and write functions as requested by the users.

DataNode replica blocj comprises of two documents on the file system. The first

document is for data and the second document is for documenting sections of

metadata. Data checksums are included in the HDFS Metadata. In the initial set-up,

each Datanode joins with its matching Namenode and begins the interaction.

Validation of Datanode software version and namespace ID happens during the first

interaction. If a mismatch is discovered, Datanode will automatically go down. HDFS

HDFS stores information in small memory block and transmits them through a bunch

(Naidu, 2018). Every data is duplicated several times to make sure data is available. HDFS is

the most essential element of Hadoop Ecosystem. It is the basic Hadoop storage system. It is

developed in Java and offers fault tolerant, cost efficient, scalable and dependable data

storage for big data (Mavridis & Karatza, 2017). HDFS executes on product hardware and

comprises of default configuration for multiple installations. Many instances configuration is

required for large sets of data. Hadoop uses shell-like commands to communicate directly

with HDFS.

Components of HDFS

There are two main Hadoop HDFS components; DataNode abd NameNode.

NameNode: it is also referred to as Master node. It does not store

dataset or actual data. instead, it stores Metadata, that is, their location, which

Datanode the information is kept, number of blocks, on which Rack, among other

details. It comprises of directories and files. The work of HDFS NameNode is to

manage namespace of the filesystem, control user’s access to documents and run

execution of file system such closing, naming, opening directories and files (Aung &

Thein, 2014).

DataNode: it is also referred to as Slave. Its work is to store actual data

in HDFS. Datanode carries out the read and write functions as requested by the users.

DataNode replica blocj comprises of two documents on the file system. The first

document is for data and the second document is for documenting sections of

metadata. Data checksums are included in the HDFS Metadata. In the initial set-up,

each Datanode joins with its matching Namenode and begins the interaction.

Validation of Datanode software version and namespace ID happens during the first

interaction. If a mismatch is discovered, Datanode will automatically go down. HDFS

APACHE HADOOP VERSUS APACHE SPARK 12

DataNode is responsible for performing operations such as block replica deletion,

creation and duplication according to the NameNode instruction (Hussain & T, 2016).

Another task carried out by DataNode is to monitor data storage of the system.

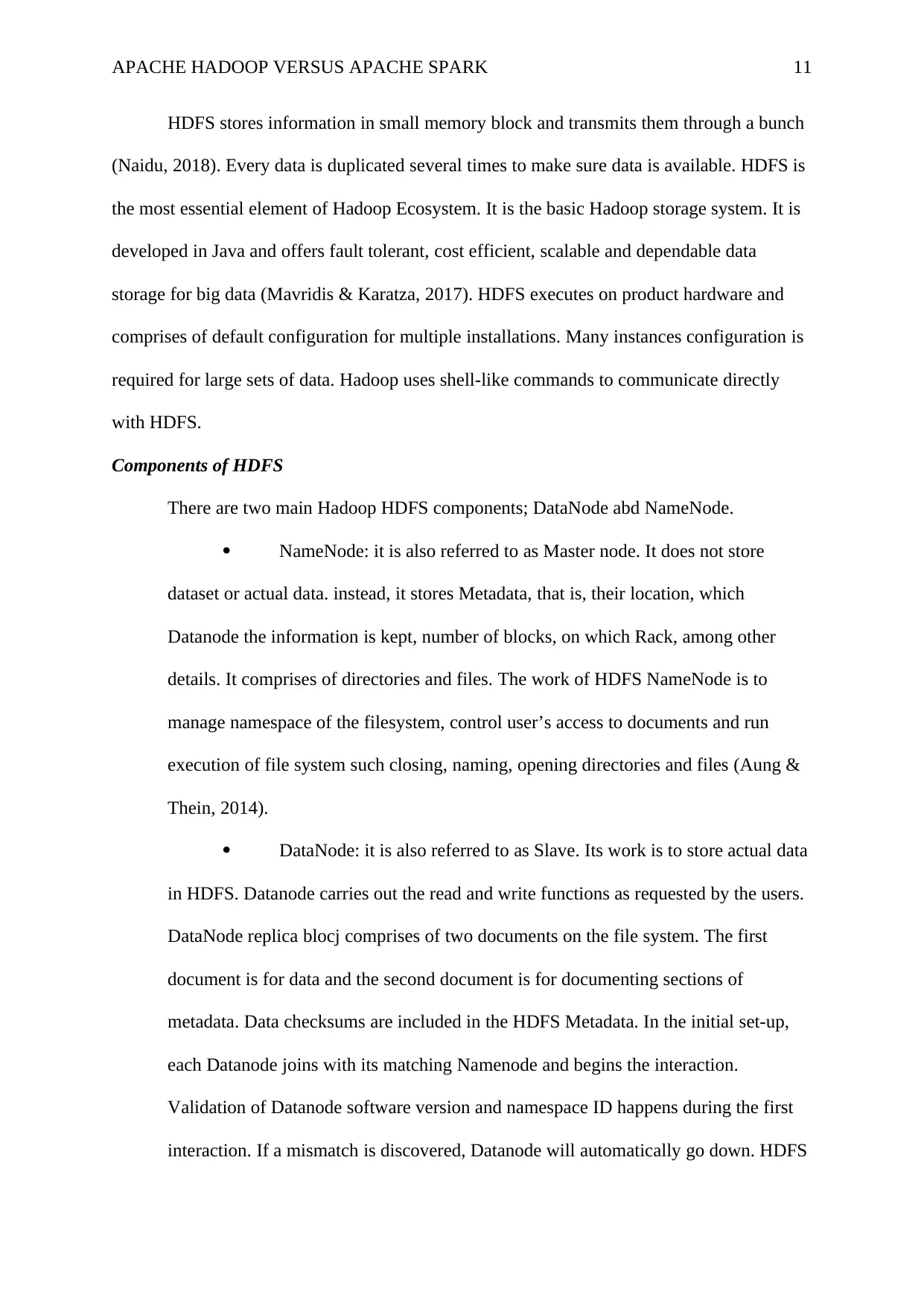

5.3.2 Hadoop MapReduce

It runs tasks in an equivalent manner by transmitting it as small blocks. Hadoop

MapReduce offers data processing. It is a software structure for writing programs easily that

process the huge amount of unstructured and structured data kept in the HDFS (Singh &

Reddy, 2014). MapReduce applications are similar in nature, therefore are very helpful for

carrying out analysis of huge amount of data using several machines in the set. As such, it

enhances the reliability and speed of cluster.

Figure 2: Hadoop MapReduce (Source: Greeshma & Pradeepini, 2016)

Hadoop MapReduce works by dividing the processing into two stages: Reduce phase

and map phase (Greeshma & Pradeepini, 2016). Each phase incorporates the concept of the

key-value pair as output and input. Besides, programmer particularize the two functions;

reduce function and map function.

DataNode is responsible for performing operations such as block replica deletion,

creation and duplication according to the NameNode instruction (Hussain & T, 2016).

Another task carried out by DataNode is to monitor data storage of the system.

5.3.2 Hadoop MapReduce

It runs tasks in an equivalent manner by transmitting it as small blocks. Hadoop

MapReduce offers data processing. It is a software structure for writing programs easily that

process the huge amount of unstructured and structured data kept in the HDFS (Singh &

Reddy, 2014). MapReduce applications are similar in nature, therefore are very helpful for

carrying out analysis of huge amount of data using several machines in the set. As such, it

enhances the reliability and speed of cluster.

Figure 2: Hadoop MapReduce (Source: Greeshma & Pradeepini, 2016)

Hadoop MapReduce works by dividing the processing into two stages: Reduce phase

and map phase (Greeshma & Pradeepini, 2016). Each phase incorporates the concept of the

key-value pair as output and input. Besides, programmer particularize the two functions;

reduce function and map function.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

1 out of 63

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2026 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.