Big Data with HIVE: Data Warehousing, Querying, and Partitioning

Added on 2023/06/01


Report

AI Summary

This report provides an overview of using Apache Hive in big data environments for data warehousing, querying, and managing large datasets. It highlights Hive's role in providing a SQL-like interface (HiveQL) whose queries run as MapReduce tasks on large datasets within a Hadoop cluster. The report discusses Hive's advantages, such as unified resource management, simplified deployment, and shared security, which make it suitable for petabyte-scale data growth. It covers data partitioning, which improves query performance, and shows how to join datasets using SQL-like join semantics. It also discusses the static and dynamic partitioning modes and the commands for managing partitions.

Big Data


Table of Contents

HIVE in Big data

Summary

References


HIVE in Big data

The big data industry has mastered the art of gathering and logging terabytes of data, but the challenge is to base forecasts and decisions on that real data, which is why Apache Hive is so important. Hive projects a structure onto the data and queries it with a SQL-like syntax, performing map and reduce tasks on large datasets. As data warehouse software, Hive enables reading, writing, and managing large datasets in distributed storage. Queries written in the Hive query language (HiveQL), which is very similar to SQL, are converted into a series of jobs that execute on a Hadoop cluster through MapReduce or Apache Spark. Users can run batch processing workloads with Hive while also analyzing the same data for interactive SQL or machine-learning workloads using tools such as Apache Impala or Apache Spark, all within a single platform. As part of CDH (Cloudera's Hadoop distribution), Hive also benefits from (Akerkar, 2014):

o Unified resource management provided by YARN

o Simplified deployment and administration provided by Cloudera Manager

o Shared security and governance to meet compliance requirements, provided by Apache Sentry and Cloudera Navigator

Because Hive is a petabyte-scale data warehouse system built on the Hadoop platform, it is a good choice for environments experiencing rapid growth in data volume. The underlying MapReduce interface to HDFS is hard to program directly, but Hive provides a SQL interface, which makes it possible to use existing programming skills for data preparation. Hive on MapReduce or Spark is best suited to batch data preparation or ETL:

o You must run scheduled batch jobs with very large ETL sorts and joins to prepare data for Hadoop. Most data served to BI users in Impala is prepared by ETL developers using Hive (Mohanty, Jagadeesh and Srivatsa, 2013).

o You run data transfer or conversion jobs that take many hours. With Hive, if a problem occurs partway through such a job, it recovers and continues.

o You receive or provide data in diverse formats, where the Hive SerDes and variety of UDFs make it convenient to ingest and convert the data. Typically, the final stage of the ETL process with Hive is conversion to a high-performance, widely supported format such as Parquet.
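The earlier statement that HiveQL queries are converted into jobs that run through MapReduce can be made concrete with a small conceptual sketch. The Python below mimics how an aggregation such as SELECT country, COUNT(*) ... GROUP BY country could be expressed as a map phase, a shuffle, and a reduce phase. The table contents and all function names are assumptions made for illustration; this is not Hive's actual query planner, which produces far more elaborate job plans.

```python
from collections import defaultdict

# Hypothetical rows of a "page_views" table: (user_id, country).
ROWS = [
    (1, "US"),
    (2, "IN"),
    (3, "US"),
    (4, "DE"),
    (5, "IN"),
    (6, "US"),
]


def map_phase(rows):
    """Emit one (key, 1) pair per row, keyed by the GROUP BY column."""
    for _user_id, country in rows:
        yield country, 1


def shuffle_phase(pairs):
    """Group the emitted values by key, as happens between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the values for each key, which gives COUNT(*) per group."""
    return {key: sum(values) for key, values in grouped.items()}


def count_by_country(rows):
    """Chain the three phases for the whole (in-memory) table."""
    return reduce_phase(shuffle_phase(map_phase(rows)))


if __name__ == "__main__":
    print(count_by_country(ROWS))
```

On a real cluster the map and reduce phases run in parallel across many machines; the sketch only shows the data flow that a single HiveQL statement spares the user from writing by hand.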

Hive is considered a tool of choice for running queries on large datasets, especially queries that require full table scans. Hive also has advanced partitioning features. Partitioning data files in Hive is very useful for pruning data during a query in order to reduce query times. There are many cases where users need to filter the data on specific column values (Ohlhorst, 2013).

o Using Hive's partitioning feature, which subdivides the data, users can identify the columns that should be used to organize the data.

o With partitioning, the analysis can be done on only the relevant subset of the data, which greatly improves the performance of Hive queries.

In partitioned tables, a subdirectory is created under the table's data directory for each unique value of a partition column. The partitioning features are covered further in the Summary. The accompanying diagram illustrates data storage in a single Hadoop Distributed File System (HDFS) directory.
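As a rough illustration of the subdirectory layout described above, the following Python sketch models a partitioned table in memory. It assumes a hypothetical sales table partitioned by a country column and stored under /user/hive/warehouse/sales; the table, its rows, and the function names are invented for the example. Only the column=value naming of partition subdirectories reflects Hive's convention.

```python
from collections import defaultdict
from pathlib import PurePosixPath

# Hypothetical table location and rows; neither comes from the report.
TABLE_DIR = PurePosixPath("/user/hive/warehouse/sales")
ROWS = [
    {"order_id": 1, "amount": 20.0, "country": "US"},
    {"order_id": 2, "amount": 12.0, "country": "DE"},
    {"order_id": 3, "amount": 5.5, "country": "US"},
]


def partition_dir(table_dir, column, value):
    """Return the subdirectory for one partition value, e.g. .../country=US."""
    return table_dir / f"{column}={value}"


def write_partitioned(rows, table_dir, column):
    """Group rows by the directory of their partition value.

    The partition column is dropped from the stored rows because its
    value is already encoded in the directory name.
    """
    layout = defaultdict(list)
    for row in rows:
        stored = {k: v for k, v in row.items() if k != column}
        layout[partition_dir(table_dir, column, row[column])].append(stored)
    return dict(layout)


def scan(layout, table_dir, column, value):
    """Read only the directory that matches the filter (partition pruning)."""
    return layout.get(partition_dir(table_dir, column, value), [])


if __name__ == "__main__":
    layout = write_partitioned(ROWS, TABLE_DIR, "country")
    for directory in sorted(layout, key=str):
        print(directory, layout[directory])
    print(scan(layout, TABLE_DIR, "country", "US"))
```

A filter on country touches a single subdirectory instead of every file in the table, which is the pruning effect the text attributes to partitioning.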


The provided data set is shown below.

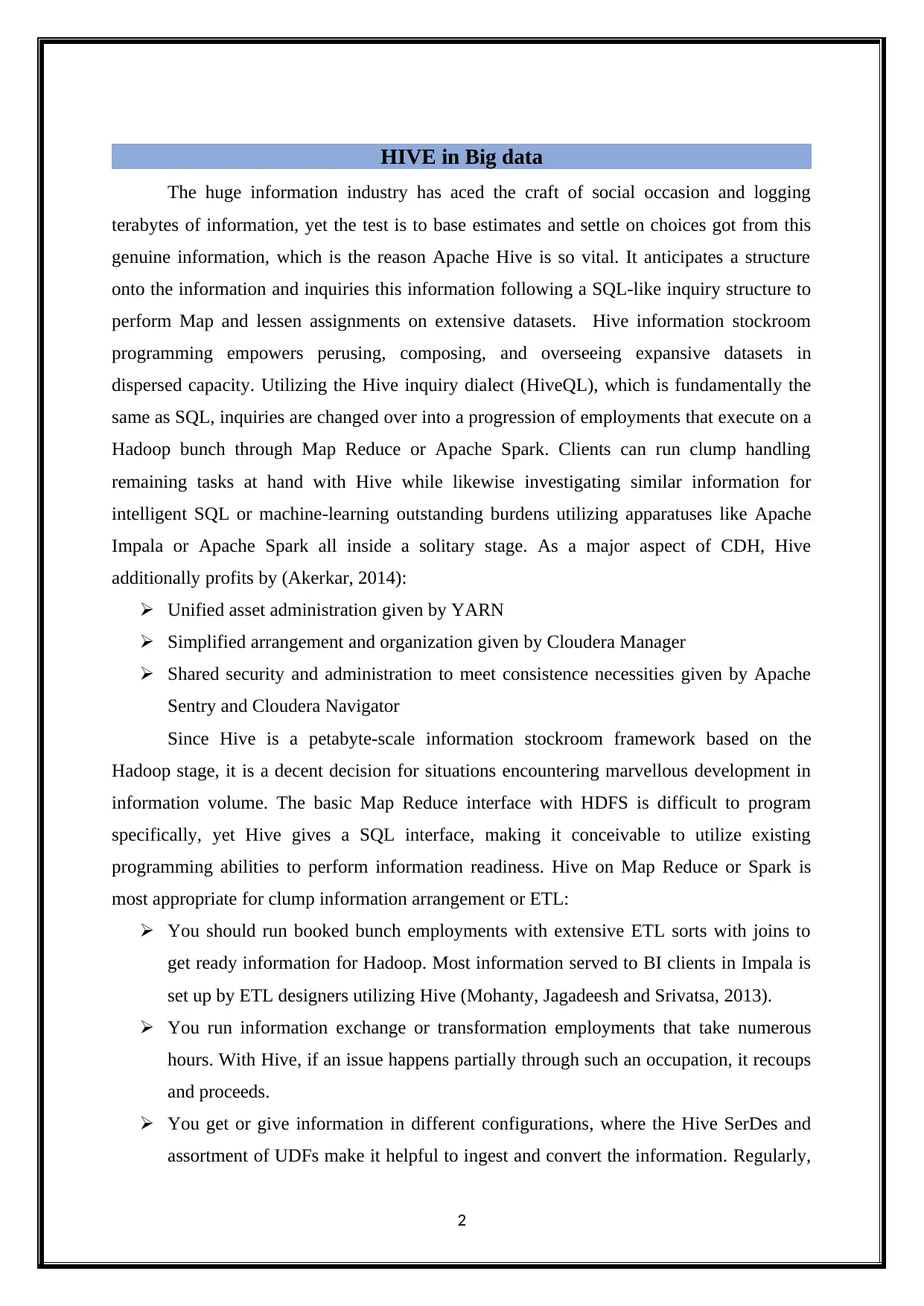

Let us start by joining the two datasets defined previously. For this purpose, Hive provides SQL-like join semantics. An inner join is the most common join operation used in applications and can be regarded as the default join type. The inner join combines column values of two tables, say A and B, based on the join predicate. An inner join query compares each row of A with each row of B to find all pairs of rows that satisfy the join predicate. When the join predicate is satisfied, the column values from A and B for that pair are combined to form a new result record. An inner join can therefore be thought of as taking the Cartesian product of the two tables and then returning only those records that satisfy the join predicate. The output of the Hive DML statement is shown below (Stoddard, 2015).
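Separately from the report's own output, the description of an inner join as a filtered Cartesian product can be sketched in a few lines of Python. The tables A and B, their columns, and the function names below are hypothetical and stand in for the report's datasets, which are not reproduced here. The nested-pair approach mirrors the definition only; it is not how Hive executes joins on a cluster.

```python
from itertools import product

# Hypothetical tables; in HiveQL the equivalent query would look like
#   SELECT * FROM a JOIN b ON a.cust_id = b.cust_id
A = [
    {"cust_id": 1, "name": "Asha"},
    {"cust_id": 2, "name": "Ben"},
    {"cust_id": 3, "name": "Chen"},
]
B = [
    {"order_id": 10, "cust_id": 1, "amount": 25.0},
    {"order_id": 11, "cust_id": 1, "amount": 40.0},
    {"order_id": 12, "cust_id": 3, "amount": 15.0},
    {"order_id": 13, "cust_id": 4, "amount": 99.0},
]


def same_customer(a, b):
    """Join predicate: the two rows refer to the same customer."""
    return a["cust_id"] == b["cust_id"]


def inner_join(left, right, predicate):
    """Return one merged record per (left, right) pair that satisfies predicate."""
    result = []
    for a, b in product(left, right):  # Cartesian product of the two tables
        if predicate(a, b):            # keep only pairs matching the predicate
            result.append({**a, **b})  # combine the column values of both rows
    return result


if __name__ == "__main__":
    for record in inner_join(A, B, same_customer):
        print(record)
```

Customer 2 has no orders and order 13 has no matching customer, so neither appears in the result, which is the defining behaviour of an inner join.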


Summary

o Partitions are horizontal slices of data that allow larger datasets to be separated into more manageable chunks.

o In static partitioning mode, you insert or load the data files individually into a partitioned table.

o Dynamic partitioning is suitable when a large amount of data is already stored in a table.

o Use the SHOW command to view partitions.


o To add or delete partitions, use the ALTER command (Zikopoulos et al., 2013).

o Use partitioning when reading the entire dataset takes too long, queries almost always filter on the partition columns, and the partition columns have a reasonable number of distinct values.

o HiveQL is the query language for Hive, used to process and analyze structured data registered in the Metastore.

o HiveQL can be extended with user-defined functions, MapReduce scripts, user-defined types, and data formats.
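The partition commands summarized above can be illustrated with a short Python sketch that only builds the corresponding HiveQL statements as strings. The table names sales and staging_sales, the country partition column, and the helper function names are assumptions for the example. The statements follow standard HiveQL forms (INSERT ... PARTITION, SHOW PARTITIONS, ALTER TABLE ... ADD/DROP PARTITION), but they should be checked against the Hive version in use before being run.

```python
def partition_spec(column, value):
    """Format a partition spec such as country='US' (simple values only)."""
    if "'" in str(value):
        raise ValueError("this sketch does not handle quotes in partition values")
    return f"{column}='{value}'"


def static_insert(table, source, column, value, data_columns):
    """Static mode: the partition value is named explicitly in the statement."""
    cols = ", ".join(data_columns)
    spec = partition_spec(column, value)
    return (
        f"INSERT INTO TABLE {table} PARTITION ({spec}) "
        f"SELECT {cols} FROM {source} WHERE {spec}"
    )


def dynamic_insert(table, source, column, data_columns):
    """Dynamic mode: Hive derives each row's partition from the last selected column."""
    cols = ", ".join(list(data_columns) + [column])
    return [
        "SET hive.exec.dynamic.partition=true",
        "SET hive.exec.dynamic.partition.mode=nonstrict",
        f"INSERT INTO TABLE {table} PARTITION ({column}) SELECT {cols} FROM {source}",
    ]


def show_partitions(table):
    """List the partitions of a table."""
    return f"SHOW PARTITIONS {table}"


def add_partition(table, column, value):
    """Add a partition if it does not exist yet."""
    return f"ALTER TABLE {table} ADD IF NOT EXISTS PARTITION ({partition_spec(column, value)})"


def drop_partition(table, column, value):
    """Drop a partition if it exists."""
    return f"ALTER TABLE {table} DROP IF EXISTS PARTITION ({partition_spec(column, value)})"


if __name__ == "__main__":
    print(static_insert("sales", "staging_sales", "country", "US", ["order_id", "amount"]))
    for statement in dynamic_insert("sales", "staging_sales", "country", ["order_id", "amount"]):
        print(statement)
    print(show_partitions("sales"))
    print(add_partition("sales", "country", "FR"))
    print(drop_partition("sales", "country", "FR"))
```

The static form needs one statement per partition value, whereas the dynamic form loads every partition in a single pass, which is why it suits tables that already hold a lot of data.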


References

Akerkar, R. (2014). Big Data Computing. Boca Raton: Chapman and Hall/CRC.

Mohanty, S., Jagadeesh, M. and Srivatsa, H. (2013). Big data imperatives. New York: Apress.

Ohlhorst, F. (2013). Big data analytics. Hoboken, N.J.: John Wiley & Sons Inc.

Stoddard, C. (2015). Hive. Madison, Wisconsin: The University of Wisconsin Press.

Zikopoulos, P., DeRoos, D., Parasuraman, K., Deutsch, T., Corrigan, D. and Giles, J. (2013). Harness the power of Big Data. New York: McGraw-Hill.
