IMAT5209: Human Factor in System Design - Heuristic Evaluation Report

Added on 2023/04/21

Running head: HUMAN FACTOR IN SYSTEM DESIGN

Human Factor in System Design: Heuristic Evaluation of Mobile Health System for Self-management Support of Diabetes

Name of Student-

Name of University-

Author’s Note-

Table of Contents

Part One: Interactive System and its Users
Part Two: Use Cases
Part Three: The Usability Requirement
Part Four: The Evaluation Methodology
Part Five: Evaluation
Part Six: Findings of Evaluation
Conclusion
References

Part One: Interactive System and its Users

Mobile health platforms offer significant opportunities for improving diabetic self-care, but only if they are adequately usable. Expert evaluations such as heuristic evaluation can provide distinct usability information about systems. The purpose of this study was to complete a usability evaluation of a mobile health system for diabetes patients using a modified heuristic evaluation technique involving (1) dual-domain experts (combining healthcare and usability expertise), (2) validated scenarios and user tasks related to patients’ self-care, and (3) in-depth severity factor ratings. Experts identified 129 usability problems with 274 heuristic violations. The category Consistency and Standards dominated at 24.1% (n = 66) of violations, followed by Match between System and Real World at 22.3% (n = 61). The average severity rating across system views was 2.8 (of 4), with 9.3% (n = 12) of problems rated as catastrophic and 53.5% (n = 69) as major. The large number of violations with severe ratings indicated clear priorities for redesign. The modified heuristic approach allowed evaluators to identify unique and important issues, including ones related to self-management and patient safety.
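The two sets of percentages above use different denominators: the heuristic categories are shares of the 274 violations, while the severity categories are shares of the 129 problems. As a quick arithmetic check (not part of the original analysis, and using only the counts reported here), a few lines of Python reproduce the published figures and show that each problem violated roughly two heuristics on average:

```python
# Counts reported in this section.
TOTAL_VIOLATIONS = 274  # heuristic violations (a problem can violate several heuristics)
TOTAL_PROBLEMS = 129    # distinct usability problems on the master list

violations_by_heuristic = {
    "Consistency and standards": 66,
    "Match between system and the real world": 61,
}
problems_by_severity = {
    "Catastrophic": 12,
    "Major": 69,
}


def percent(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Heuristic shares are taken over violations; severity shares over problems.
heuristic_shares = {h: percent(n, TOTAL_VIOLATIONS) for h, n in violations_by_heuristic.items()}
severity_shares = {s: percent(n, TOTAL_PROBLEMS) for s, n in problems_by_severity.items()}

print(heuristic_shares)  # {'Consistency and standards': 24.1, 'Match between system and the real world': 22.3}
print(severity_shares)   # {'Catastrophic': 9.3, 'Major': 53.5}
print(round(TOTAL_VIOLATIONS / TOTAL_PROBLEMS, 2))  # 2.12 violations per problem on average
```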

Figures from the World Health Organization show that 347 million people worldwide are affected by diabetes; this chronic disease is predicted to be the seventh leading cause of death in the world by 2030 (Quiñones and Rusu, 2017). Data from the Department of Health and Human Services and the Centers for Disease Control and Prevention show that in the United States alone, 29.1 million people are living with the disease, and this number continues to grow rapidly. Of the people diagnosed with diabetes, 90% have type 2 diabetes. Factors such as poorly regulated glycemic levels strongly influence patients’ conditions and are therefore vital to monitor in order to control the disease. Type 2 diabetes is also largely lifestyle-related and can, to a certain extent, be self-managed alongside more
conventional treatment procedures. Self-management is becoming increasingly important in diabetes care; researchers have found that self-management support should be integrated into patients’ everyday lives to improve patient outcomes. Researchers have also highlighted information and communication technology (ICT), including applications for day-to-day self-care and disease management, as an adjunct to diabetes management. In support of that goal, the mobile health (mHealth) system in this study was developed as an individualized mobile and web support system for type 2 diabetes patients’ self-management.

Part Two: Use Cases

To facilitate their adoption, mobile healthcare applications and systems for chronic disease management must be usable. Despite the increasing availability of self-management tools, many patient-operated ICT applications are still deficient in terms of usability. Evaluating such systems in a self-management context requires an understanding of users and their needs when performing tasks in the system. This focus can help ensure that mobile applications are safe for clinical and patient use and may help prevent user errors. Authors therefore argue that user interfaces must be designed so that they do not contribute to errors, since errors also negatively affect users’ experiences with the system. Usability evaluations can therefore help determine how well an application or system meets clinical needs and patients’ expectations, and help safeguard both the quality of care and patient outcomes. These evaluations can be expert-based, such as heuristic evaluation (HE) or cognitive walkthrough, or empirical and user-based, such as think-aloud methods involving user tests with actual system users.

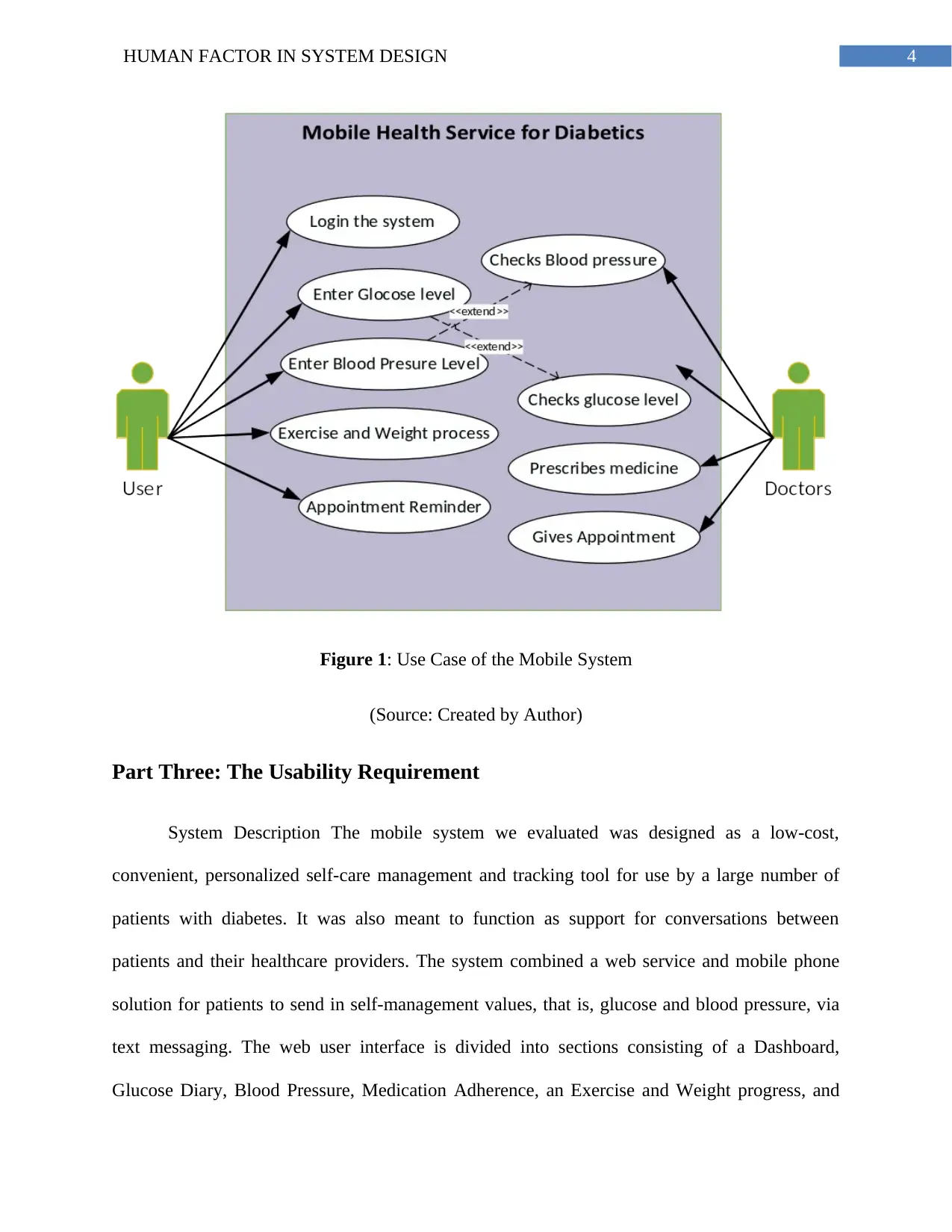

Figure 1: Use Case of the Mobile System

(Source: Created by Author)

Part Three: The Usability Requirement

System Description

The mobile system we evaluated was designed as a low-cost, convenient, personalized self-care management and tracking tool for use by a large number of patients with diabetes. It was also intended to support conversations between patients and their healthcare providers. The system combined a web service with a mobile phone solution through which patients send in self-management values (glucose and blood pressure) via text messaging. The web user interface is divided into sections: Dashboard, Glucose Diary, Blood Pressure, Medication Adherence, Exercise and Weight progress, and
Appointment reminder view. Each section graphically represents the relevant measurements and goals, with progress indicators in red, yellow, and green. For example, the sections include a meter to visualize glucose readings, a blood pressure bar with systolic and diastolic values, a medication adherence section indicating how much of the prescribed medication was taken, and an exercise and weight progress section showing exercise and weight measures. Using their cell phones, patients can retrieve, enter, and edit their values and goals. The scenarios and tasks for the evaluation were developed from these kinds of patient interactions.

Scenarios and tasks outlined the specific steps evaluators followed to interact with the diabetes self-management system during the HE. Tasks were based on real case scenarios to simulate how patients would use the system for self-management in a clinic or at home. To make these as realistic as possible, a panel also evaluated both scenarios and tasks. The panel included a physician specializing in diabetes, a registered nurse (RN) specializing in diabetes, a public health professional with expertise in chronic patient intervention systems, and a patient with diabetes. The panel verified and validated the tasks for content validity and accuracy (content validity index of 0.91 on a scale of 0 to 1). The eight scenarios and tasks were disease-specific and had varying levels of difficulty. For example, tasks consisted of viewing and locating glucose values on graphs, identifying and correcting collected glucose values, setting weight and exercise goals as well as medicine and appointment reminders, and viewing summary statements about medical measurements. Table 1 shows an example of a scenario and its tasks.

Scenario:

During your follow-up appointment with your provider, you agreed that a stronger commitment regarding weight loss and exercise would improve your diabetes condition. You now would like to activate the system's support service for exercise tracking and weight
tracking and put in your tracking goals regarding your exercise and weight.

Please complete the following tasks.

1. Select and activate the service that you would like to use to set tracking goals for exercise and weight.
2. Set your exercise goal to 3 times per week.
3. Set your weight goal to 180 pounds.
4. When you consider yourself done with the task, finish and return to “Participant Home.”

Table 1: Example Scenario and Tasks Used

(Source: Georgsson, Staggers and Weir 2015)
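The report does not say how the content validity index of 0.91 reported above was calculated. One widely used approach, assumed here rather than confirmed by the source, is the average scale-level index (S-CVI/Ave): each item's I-CVI is the share of panel members who rate it 3 or 4 on a 4-point relevance scale, and the scale value is the mean of the item values. A minimal Python sketch with made-up ratings (the function names and data are hypothetical):

```python
from statistics import mean

# Assumption: panel members rate each task's relevance on a 1-4 scale,
# and ratings of 3 or 4 count as "relevant" (a common CVI convention).
RELEVANT_RATINGS = {3, 4}


def item_cvi(ratings: list[int]) -> float:
    """I-CVI: share of panel members who rated the item as relevant."""
    return sum(r in RELEVANT_RATINGS for r in ratings) / len(ratings)


def scale_cvi_ave(items: dict[str, list[int]]) -> float:
    """S-CVI/Ave: the mean of the item-level CVIs over all items."""
    return mean(item_cvi(ratings) for ratings in items.values())


# Hypothetical ratings from a four-member panel: illustrative only,
# NOT the ratings collected in the study.
example = {
    "task_a": [4, 4, 3, 4],  # I-CVI = 1.00
    "task_b": [4, 2, 2, 4],  # I-CVI = 0.50
    "task_c": [3, 4, 4, 4],  # I-CVI = 1.00
}

print(round(scale_cvi_ave(example), 2))  # 0.83
```

Under this assumed method, a four-member panel can only produce item-level values in steps of 0.25, so a scale-level value such as 0.91 would reflect averaging across several items.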

Part Four: The Evaluation Methodology

Heuristic Evaluation

Heuristic evaluation is one of the most common usability inspection methods carried out by usability experts. Users do not take part in this kind of method; instead, experts apply their knowledge of usability principles, processes, and standards to evaluate systems (Väätäjä et al. 2016) [19]. Heuristic evaluation was first defined by Nielsen and Molich [16]. In this technique, usability experts evaluate an application to find usability problems, assign each problem to a specific heuristic category, and ascribe a severity rating. Nielsen [20] originally defined 10 heuristic categories and recommended assigning severity scores to a master list of usability violations. Authors have since modified and extended Nielsen’s technique in different ways to achieve better results in various contexts. For example, Zhang et al. [21] derived 14 heuristics by combining Nielsen’s 10 heuristics with Shneiderman’s eight “golden rules” to evaluate infusion pumps. Allen and colleagues [9] employed a simplified version of the HE inspection method by having evaluators select only those heuristics they
deemed appropriate for their assessment and assigned severity ratings to usability problems on the fly, instead of first creating a master list of all usability problems. Chattratichart and Brodie [22] extended the method into a technique they called HE-Plus, a directed approach that uses usability problem profiles to help evaluators focus on specific types of problem areas and so produce more consistent and reliable results. Heuristic evaluation, or expert usability evaluation, can be useful because it provides a unique perspective and distinct information [19] and because it is a discount usability technique, meaning it is relatively quick, cost-effective, and resource-efficient [16, 23]. However, as other authors have shown, Nielsen’s original method can be improved upon. Critics of the technique, for example, note that many problems found with HE are minor interface design problems or of a more general nature. User tests, in comparison, involve actual users and identify critical problems of a qualitative nature, but they are also more costly and time-consuming. In sum, current expert techniques need improvement to find the more severe usability problems that are critical for users. In this study, we addressed this gap by using a modified HE technique that retains the method’s beneficial aspects while focusing on patient users, their disease-management needs, and their information and interaction requirements. Our modifications involved (1) employing dual-domain experts (combining healthcare and usability expertise) as evaluators, (2) using realistic, validated user tasks with appropriate scenarios related to patients’ diabetes self-care, and (3) making severity ratings specific and in-depth by predicting each problem’s influence on patients across three severity rating factors: impact, persistence, and frequency. Our intent was to explore whether the technique would be able to detect both crucial
and context-related problems in patient self-management in addition to the more common, minor usability issues.

Expert Evaluators

The HE was performed by three expert evaluators, who identified heuristic violations listed in Nielsen’s taxonomy [20]. According to Nielsen, three to five single-domain usability expert evaluators find, on average, between 74% and 87% of usability problems [27]. Dual-domain experts find an even higher share, 81% to 90%, so only two to three dual-domain evaluators are deemed necessary [27]. These types of experts are seen as especially suitable for evaluating complex systems, such as those in healthcare, because they combine usability expertise with extensive knowledge of the specific application domain [28, 29]. Each expert in this study was therefore carefully selected for dual-domain competency, consisting of (1) extensive usability experience in health informatics, (2) being a healthcare professional (registered nurse [RN]), and experience with (3) the patient group and their task requirements and (4) diabetes self-management. As this was a heuristic evaluation, it involved only expert evaluators and no patients; therefore, institutional review board approval was not required for this study.
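A common way to reason about evaluator counts like those quoted from Nielsen is the problem-discovery model of Nielsen and Landauer, in which the expected share of problems found by i evaluators is 1 - (1 - λ)^i, where λ is the probability that a single evaluator finds a given problem. The report itself does not state this model, so the sketch below is only an illustration: it assumes independent evaluators with a common detection rate, the function names are hypothetical, and the λ values are illustrative rather than fitted to the 74% to 87% or 81% to 90% ranges above.

```python
def proportion_found(evaluators: int, detection_rate: float) -> float:
    """Expected share of all problems found by `evaluators` independent evaluators,
    assuming each finds any given problem with probability `detection_rate`."""
    if evaluators < 0 or not 0.0 <= detection_rate <= 1.0:
        raise ValueError("evaluators must be >= 0 and detection_rate in [0, 1]")
    return 1.0 - (1.0 - detection_rate) ** evaluators


def evaluators_needed(target: float, detection_rate: float) -> int:
    """Smallest number of evaluators whose expected coverage reaches `target`."""
    if not 0.0 < target < 1.0 or not 0.0 < detection_rate <= 1.0:
        raise ValueError("target must be in (0, 1) and detection_rate in (0, 1]")
    n = 0
    while proportion_found(n, detection_rate) < target:
        n += 1
    return n


# Illustrative detection rates only; they are not fitted to the figures cited above.
for rate in (0.3, 0.4):
    coverage = [round(proportion_found(i, rate), 2) for i in range(1, 6)]
    print(rate, coverage, evaluators_needed(0.8, rate))
```

Under this model, a higher per-evaluator detection rate, which is the argument made above for dual-domain experts, means fewer evaluators are needed for the same expected coverage.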

Part Five: Evaluation

Evaluators received identical instructional materials to learn the system, ensuring consistency across evaluators. The materials consisted of a digital video on the system modules and how to navigate the portal, a study design manual detailing each scenario and the tasks to be performed, an application user manual, and an evaluation guide sheet. The study design manual also included guidance on how to conduct the evaluation, the scenarios, and a usability task manual outlining how to navigate the tasks. Providing specific scenarios and tasks to
simulate the diabetes patient care process ensured that all experts had the same level of knowledge about the functionality and user tasks (Rossi, Nagano and Neto 2016). The procedure itself had two parts. The evaluators first familiarized themselves with the system and its usage using the materials and training described above. Then they performed the modified HE as visualized in Figure 1. Each dual-domain expert evaluator performed the eight scenarios and tasks independently. When an evaluator detected a usability problem, they assigned it to one or more heuristics from the categories in Table 2. A master list was then compiled, duplicate problems were removed, and the list was verified across the evaluators for accuracy. Next, each evaluator individually assigned severity scores to each problem using the severity rating factors of frequency, impact, and persistence. These scores were averaged by factor and combined into one severity rating for each usability problem, as described above. Descriptive statistics were used to summarize heuristic violations and associated severity scores.
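The master-list and severity steps above can be sketched in Python. This is a minimal illustration under several assumptions not specified in this section: each factor is scored on Nielsen's 0-4 severity scale, the three factor averages are combined with an unweighted mean, and combined scores are labelled by rounding to the nearest scale point. The class and function names are hypothetical, and exact-text matching only stands in for the evaluators' own judgement when removing duplicates.

```python
from collections import Counter
from dataclasses import dataclass, field
from statistics import mean

FACTORS = ("frequency", "impact", "persistence")
# Assumed labels for Nielsen's 0-4 severity scale.
LABELS = {0: "Not a problem", 1: "Cosmetic", 2: "Minor", 3: "Major", 4: "Catastrophic"}


@dataclass
class Problem:
    """One entry on the master list (hypothetical structure)."""
    description: str
    heuristics: set[str]  # one problem may violate several heuristics
    # evaluator id -> {factor: score on an assumed 0-4 scale}
    ratings: dict[str, dict[str, float]] = field(default_factory=dict)

    def factor_averages(self) -> dict[str, float]:
        """Average each severity factor across evaluators (needs >= 1 rating)."""
        return {f: mean(r[f] for r in self.ratings.values()) for f in FACTORS}

    def severity(self) -> float:
        """Combine the factor averages into one score (assumed: unweighted mean)."""
        return mean(self.factor_averages().values())

    def label(self) -> str:
        """Map the combined score to the nearest scale point, rounding halves up."""
        return LABELS[int(self.severity() + 0.5)]


def build_master_list(findings: list[tuple[str, set[str]]]) -> list[Problem]:
    """Merge all evaluators' findings, treating identical descriptions as duplicates.

    In the study, duplicates were removed and verified by the evaluators;
    exact text matching is only a stand-in for that judgement.
    """
    merged: dict[str, Problem] = {}
    for description, heuristics in findings:
        key = description.strip().lower()
        if key in merged:
            merged[key].heuristics |= heuristics  # keep every heuristic cited
        else:
            merged[key] = Problem(description.strip(), set(heuristics))
    return list(merged.values())


def violations_per_heuristic(problems: list[Problem]) -> Counter:
    """Count heuristic violations across the master list."""
    return Counter(h for p in problems for h in p.heuristics)


# Hypothetical findings from two evaluators (placeholders, not study data).
findings = [
    ("Problem A", {"Match between system and the real world"}),
    ("problem a ", {"Consistency and standards"}),  # duplicate of Problem A
    ("Problem B", {"User control and freedom", "Error prevention"}),
]
master = build_master_list(findings)
master[0].ratings = {
    "E1": {"frequency": 3, "impact": 3, "persistence": 2},
    "E2": {"frequency": 4, "impact": 3, "persistence": 3},
}
print(len(master), sum(violations_per_heuristic(master).values()))  # 2 4
print(master[0].severity(), master[0].label())  # 3.0 Major
```

Keeping the heuristics as a set on each problem is what allows the violation count to exceed the problem count, as in the study's 274 violations across 129 problems.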

Visibility of system status:
The system should always keep users informed about what is going on, through appropriate feedback within reasonable time.

Match between system and the real world:
The system should speak the users' language, with words, phrases, and concepts familiar to the user, rather than system-oriented terms. Follow real-world conventions, making information appear in a natural and logical order.

User control and freedom:
Users often choose system functions by mistake and will need a clearly marked “emergency exit” to leave the unwanted state without having to go through an extended dialogue. Support undo and redo.

Consistency and standards:
Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions.

Error prevention:

Even better than good error messages is a careful design which prevents a problem from

occurring in the first place. Either eliminate error-prone conditions or check for them and

present users with a confirmation option before they commit to the action.

Recognition rather than recall:

Minimize the user's memory load by making objects, actions, and options visible. The

user should not have to remember information from one part of the dialogue to another.

Instructions for use of the system should be visible or easily retrievable whenever

appropriate.

Flexibility and efficiency of use:

Accelerators—unseen by the novice user—may often speed up the interaction for the

expert user such that the system can cater to both inexperienced and experienced users.

Allow users to tailor frequent actions.

Aesthetic and minimalist design:

Dialogues should not contain information which is irrelevant or rarely needed. Every

extra unit of information in a dialogue competes with the relevant units of information

and diminishes their relative visibility.

Help users recognize, diagnose, and recover from errors:

Error messages should be expressed in plain language (no codes), precisely indicate the

problem, and constructively suggest a solution.

Help and documentation:

Even though it is better if the system can be used without documentation, it may be

necessary to provide help and documentation. Any such information should be easy to


search, focused on the user's task, list concrete steps to be carried out, and not be too

large.

Table 2: Usability Heuristics as per Nielsen

(Source: Georgsson, Staggers and Weir 2015)
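As an illustration of the Error prevention heuristic in Table 2 (check for error-prone conditions and offer a confirmation option before the user commits), the following hypothetical sketch validates a manually entered glucose reading before it is saved to a diary. The function names, the mg/dL bounds, and the messages are assumptions for illustration only; they are not features of the evaluated system and not clinical thresholds. The plain-language messages also reflect the heuristic on helping users recognize and recover from errors.

```python
from dataclasses import dataclass

# Hypothetical plausibility bounds (mg/dL) for a manually typed reading.
# Illustrative only: not clinical thresholds and not taken from the evaluated app.
PLAUSIBLE_MIN_MG_DL = 20
PLAUSIBLE_MAX_MG_DL = 600


@dataclass
class EntryCheck:
    ok: bool                  # value can be saved without further questions
    needs_confirmation: bool  # value is possible but unusual, so ask first
    message: str              # plain-language feedback shown to the user


def check_glucose_entry(value_mg_dl: float) -> EntryCheck:
    """Reject clearly invalid input and flag error-prone input."""
    if value_mg_dl <= 0:
        return EntryCheck(False, False, "Enter a glucose reading above 0 mg/dL.")
    if not PLAUSIBLE_MIN_MG_DL <= value_mg_dl <= PLAUSIBLE_MAX_MG_DL:
        return EntryCheck(
            False,
            True,
            f"{value_mg_dl:g} mg/dL is unusual. Check the number and confirm to save it.",
        )
    return EntryCheck(True, False, "Reading saved.")


def save_reading(diary: list, value_mg_dl: float, confirmed: bool = False) -> str:
    """Commit the reading only if it passed the check or the user confirmed it."""
    check = check_glucose_entry(value_mg_dl)
    if check.ok or (check.needs_confirmation and confirmed):
        diary.append(value_mg_dl)
        return "Reading saved."
    return check.message


diary = []
print(save_reading(diary, 110))                  # saved directly
print(save_reading(diary, 900))                  # asks for confirmation, not saved
print(save_reading(diary, 900, confirmed=True))  # saved after confirmation
print(diary)
```

Separating the check from the save step lets the interface show the confirmation prompt without having altered the diary, which is the "confirm before commit" behaviour the heuristic describes.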

Part Six: Findings of Evaluation

The HE identified a total of 129 usability problems and 274 heuristic violations. The usability problems by place of occurrence (view), number of heuristic violations, and mean severity ratings are summarized in Figure 2. The number of usability problems per view ranged from a low of 12 to a high of 34. The Dashboard view generated the most usability problems (34), followed by the Glucose Diary view (21), the Blood pressure view (20), and the Medication adherence view (15). Heuristic violations per view ranged from 25 to 69. The Dashboard view had the most heuristic violations (69), followed by the Glucose Diary view (49), the Blood pressure view (44), the Medication adherence view (31), and the Appointment reminder view (29). Mean severity ratings ranged from 2.7 to 3.0 on a scale of 0 to 4; the Glucose Diary view and the Medication adherence view had the highest ratings, at 2.9 and 3.0 respectively.

Heuristic Violations across System Views

Of the 10 heuristic categories depicted in Figure 3, Consistency and Standards and Match Between the System and the Real World dominated, at 24.1% (n = 66) and 22.3% (n = 61) respectively, followed by Aesthetic and Minimalist Design at 16.8% (n = 46) and Recognition Rather Than Recall at 11.7% (n = 32). The categories Help Users Recognize, Diagnose, and Recover from Errors (1.5%, n = 4) and Help and Documentation (1.1%, n = 3) had the fewest violations across all views.
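Each category's share is its violation count divided by the 274 total violations. A minimal sketch that recomputes the shares from the reported counts, rounded to one decimal place, offers a quick consistency check; the dictionary keys are simply the category names from Table 2.

```python
TOTAL_VIOLATIONS = 274

# Violation counts (n) per heuristic category, as reported in the findings
counts = {
    "Consistency and standards": 66,
    "Match between system and the real world": 61,
    "Aesthetic and minimalist design": 46,
    "Recognition rather than recall": 32,
    "Help users recognize, diagnose, and recover from errors": 4,
    "Help and documentation": 3,
}

# Percentage share of all violations, rounded to one decimal place
shares = {name: round(100 * n / TOTAL_VIOLATIONS, 1) for name, n in counts.items()}
for name, pct in shares.items():
    print(f"{name}: {pct}% (n = {counts[name]})")

# Violations left over for the remaining four of the 10 categories
remaining = TOTAL_VIOLATIONS - sum(counts.values())
print("Remaining four categories:", remaining)
```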
