Human Factors and Usability Evaluation Report for IMAT5209 Module

Added on 2022/10/02

AI Summary

This report presents a usability evaluation of an mHealth diabetic self-management application, focusing on human factors in system design. The study employed a user-centered cognitive walkthrough and think-aloud protocol to assess the application's usability, clinical efficacy, and user acceptance. The evaluation involved tasks based on diabetes self-management guidelines, reviewed by domain experts and diabetes patients. The study, conducted at the University of Utah, recruited 12 patients who participated in the evaluation, identifying usability problems related to the main view, navigation, and personalization. Findings revealed heuristic violations and usability problems, with the UC-CW method identifying 26 problems and the think-aloud method identifying 20. The report provides detailed analysis of the evaluation process, including time estimates, heuristic violations, and severity ratings, offering insights into improving the application's design and user experience. The report includes an appendix with the tasks used in the evaluation.

Running head: HUMAN FACTORS IN SYSTEM DESIGN

Human Factors in System Design

Name of Student-

Name of University-

Author’s Note-

Table of Contents

Part One: Interactive System and its Users
Part Two: Use Cases
Part Three: Usability Requirements
Part Four: Evaluation Methodology
Part Five: Evaluation
Part Six: Findings of Evaluation
Bibliography
Appendix

Part One: Interactive System and its Users

The interactive system chosen for this evaluation study is an mHealth diabetes self-management application, which is assessed by means of a walkthrough. The evaluation is intended to improve the usability and the clinical efficacy of the mobile application. Type 2 diabetes affects more than 382 million people worldwide, and this number is expected to increase by approximately 35% in the coming years. Self-managing chronic diseases with mobile applications has become common. Mobile health (mHealth) applications are mainly delivered on smartphones or tablets and give patients the potential to manage their own diabetes.

Mobile applications are a new technology, and few studies have tested them as clinical interventions. Researchers have stated that people who use a diabetes application on their smartphone to track blood glucose and diet improve their adherence to diabetes management and their self-monitoring. The evidence for the clinical effectiveness of diabetes apps is nevertheless inconclusive. Usability testing methods are costly and time consuming. This report contains a usability study of a user-centered cognitive walkthrough (UC-CW), which is intended to address the deficiencies of the original cognitive walkthrough technique, and validates it against the think-aloud (TA) protocol. The approach assesses effectiveness, efficiency and user acceptance with diabetes patients using a self-management application.

Part Two: Use Cases

The think-aloud (TA) protocol used in this evaluation draws on the fields of human factors and computer engineering. In this evaluation, users perform representative tasks while verbalizing their thoughts during the interaction. The aim is to gain
an understanding of the users' task behavior and of their thought processes during system interaction, which helps to identify usability problems in the system. The users involved in the evaluation are actual system users. The walkthrough carried out in this evaluation is a group session that includes 6 to 10 developers, 2 to 3 engineers and 6 to 10 system users. The group works through a number of tasks together.

Part Three: Usability Requirements

In the group session, the participants take the role of the intended users when working through the tasks. The users express their views before the other groups begin identifying problems in the system. The technique also includes a conventional expert walkthrough session, with video recordings added to the cognitive walkthrough to show the users' interactions with the system. The usability study further includes think-aloud sessions with users to complement the conventional expert walkthrough.

The tasks included in the evaluation are very important, because they are used to find the issues and limitations of the system. The tasks should address the relevant areas of the system and be sufficient in number and specification. In this analysis the task analysis is limited, and all tasks are broken into small actions. There are no proper guidelines for task development and task selection, or for prioritizing the parts of the system or the tasks included in the evaluation.

The study undertaken for this evaluation was conducted at the University of Utah in Salt Lake City, USA. After approval was received from the Institutional Review Board, 12
patients were recruited. The setting was the Diabetes and Endocrinology Center of University of Utah Health. The cognitive walkthrough was conducted in a conference room using a projector, and each individual think-aloud session was held in a quiet place in the clinic. All participants filled out an informed consent form at the start of the evaluation session.

The 12 patients involved in the evaluation were recruited at the Diabetes and Endocrinology Center using purposive sampling, to ensure that users with different characteristics were represented in the method evaluations. The inclusion criteria were: a) a diagnosis of Type 1 or Type 2 diabetes, b) no cognitive impairment, c) familiarity with and knowledge of using computer systems, the internet and mobile phones, and d) the ability to speak and understand English. The patients were required to have no prior connection with the mHealth application being evaluated. The patients were randomized into two test groups of 6 participants each for performing the user-centered cognitive walkthrough. After completing the test, the participants were given $20 gift cards for taking part in the evaluation.

Part Four: Evaluation Methodology

Suitable scenarios and tasks were developed and validated for this evaluation. The tasks were based on an extensive review of diabetes self-management guidelines. This ensured that all important self-management aspects critical to the evaluation were included in the tasks. The tasks were then reviewed by three domain experts. The tasks
were also reviewed by diabetes patients to ensure accuracy and were assessed using the content validity index. The validation iterations were repeated until an acceptable score was reached, and the resulting 14 tasks are listed in Appendix 1.
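As a rough illustration of how a content validity index can be computed, the sketch below uses the commonly cited item-level definition: the share of reviewers who rate a task 3 or 4 on a 4-point relevance scale. The task names and ratings are hypothetical and are not taken from the study, and the report does not state which variant of the index or which acceptance score was used.

```python
from statistics import mean


def item_cvi(ratings):
    """Share of reviewers rating the item 3 or 4 on a 4-point relevance scale."""
    relevant = sum(1 for r in ratings if r >= 3)
    return relevant / len(ratings)


# Hypothetical relevance ratings (1-4) from three reviewers per task.
ratings_by_task = {
    "task_1": [4, 4, 3],
    "task_2": [4, 2, 3],
    "task_3": [2, 1, 3],
}

item_scores = {task: item_cvi(r) for task, r in ratings_by_task.items()}
scale_cvi_average = mean(item_scores.values())

for task, score in item_scores.items():
    print(f"{task}: item-level CVI = {score:.2f}")
print(f"Average across tasks = {scale_cvi_average:.2f}")
```

In an iterative validation of this kind, tasks with a low item-level score would be revised and rated again until the score is acceptable.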

Informing the participants about the evaluation took five minutes, and the mHealth application was then demonstrated to them on a large screen for about 15 minutes. Every patient in the study was given a booklet containing the 14 tasks. Each scenario and task included space for recording individual observations and for answering the two simplified cognitive walkthrough questions. This was done to assist the group discussion. In the group walkthrough, the participants read out the scenarios in turn, and the group guided the facilitator in performing the tasks, which were shown on the projector screen. After each task, the patients expressed their individual thoughts about the usability problems they had experienced with the mHealth application.

The mHealth application is a research platform that supports self-management for patients with Type 1 and Type 2 diabetes. Researchers considered the application well suited to the study, which may in turn lead to system improvements. The application includes several diabetes functions that allow patients to monitor blood glucose, medication, food and physical activity. Blood glucose measurements are transferred from a glucometer through Bluetooth or entered manually. Food and physical activity data are entered manually. The glucose measurements are shown on graphs.

Part Five: Evaluation

Usability problem characteristics: The usability problems identified were not minor. In the Main View, it was not possible to set or identify ranges based on the patient's own needs for judging their latest glucose level. Individual tailoring to the disease was considered the most important feature. The second problem identified in the study also concerned the Main View. It was a navigational concern, and the most important one: the participant had to swipe across the graphical image to navigate to the periodical pattern and check the patient's glucose measurements for the most recent period. This was a different action from those used in the previous steps of the evaluation.

Another personalization problem was found in the Plotted Graph View. Its graphs include cut-offs for low, middle and high glucose values that are applied to all patients alike. In the List View, the patients found it unclear how to navigate and how to tell where they were when they came to the diary list of registered food, medication, insulin values and glucose. The date sequence of the listed entries was not apparent, which confused the patients.

Time estimates for evaluation: The elapsed time for the entire UC-CW evaluation process was 1830 minutes. Most of this time was spent on reviewing the guidelines, constructing the evaluation tasks, and coding the data and determining the usability problems for each task. Less time was spent on validating the tasks. About 480 minutes were spent on problem determination and 130 minutes on task validation. Assigning heuristics and severity ratings took about 120 minutes. The user evaluation took about 140 minutes to complete. The total evaluation time is divided by the 26 problems
that were identified, which gives 70.4 minutes per usability problem. The evaluation session took about 140 minutes, comprising 5 minutes for explaining the method, 15 minutes for the application tutorial given to the participants, and 120 minutes for the evaluation itself.
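The time figures quoted for UC-CW can be checked with a few lines of arithmetic. The sketch below only reuses numbers from the text (1830 minutes in total, 26 problems, and session parts of 5, 15 and 120 minutes). The remainder it prints is derived here and is not a figure stated in the report; it presumably covers the guideline review and task construction that the report describes as taking much of the time.

```python
# Figures quoted in the report for the UC-CW method (minutes).
TOTAL_MINUTES = 1830
PROBLEMS_FOUND = 26

itemized = {
    "problem determination": 480,
    "task validation": 130,
    "heuristics and severity ratings": 120,
    "user evaluation session": 140,
}
session_parts = {"informing": 5, "tutorial": 15, "evaluation": 120}

minutes_per_problem = TOTAL_MINUTES / PROBLEMS_FOUND
session_total = sum(session_parts.values())
# Remainder not itemized above; derived here, not a figure from the report.
remainder = TOTAL_MINUTES - sum(itemized.values())

print(f"Minutes per problem: {minutes_per_problem:.1f}")
print(f"Session total: {session_total} minutes")
print(f"Time not itemized above: {remainder} minutes")
```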

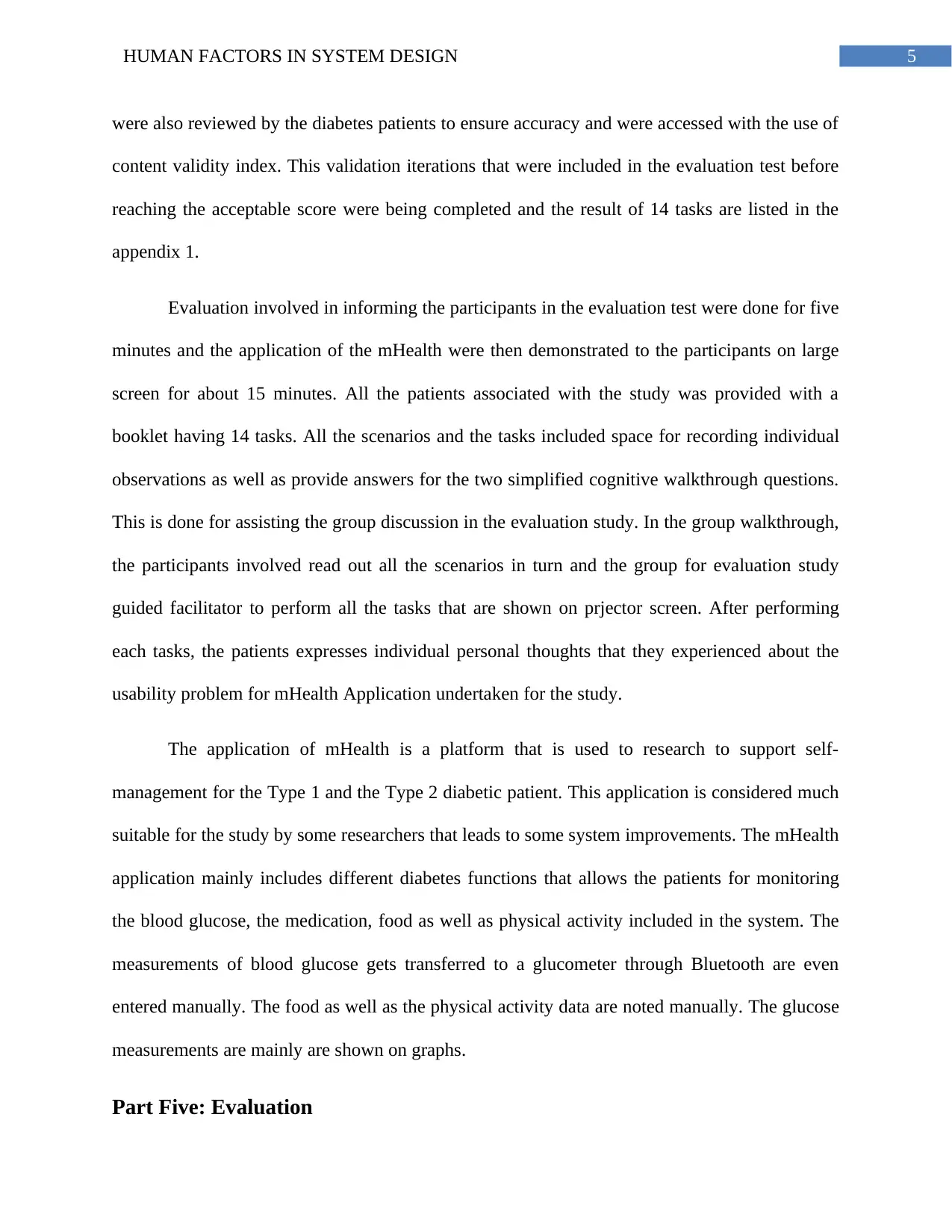

With both UC-CW and think aloud, the Main View had the highest number of usability problems, followed by the Carbohydrate Entry view. This is shown in the figure below. For both methods, the List View, the Glucose Entry view and the Time Setting view had very low numbers of usability problems and heuristic violations. The Main View and the Time Setting view had the same severity rating, as did the Plotted Graph view and the List View.

Figure 1: Usability problems and number of heuristics violated

(Source: Georgsson et al. 2019)

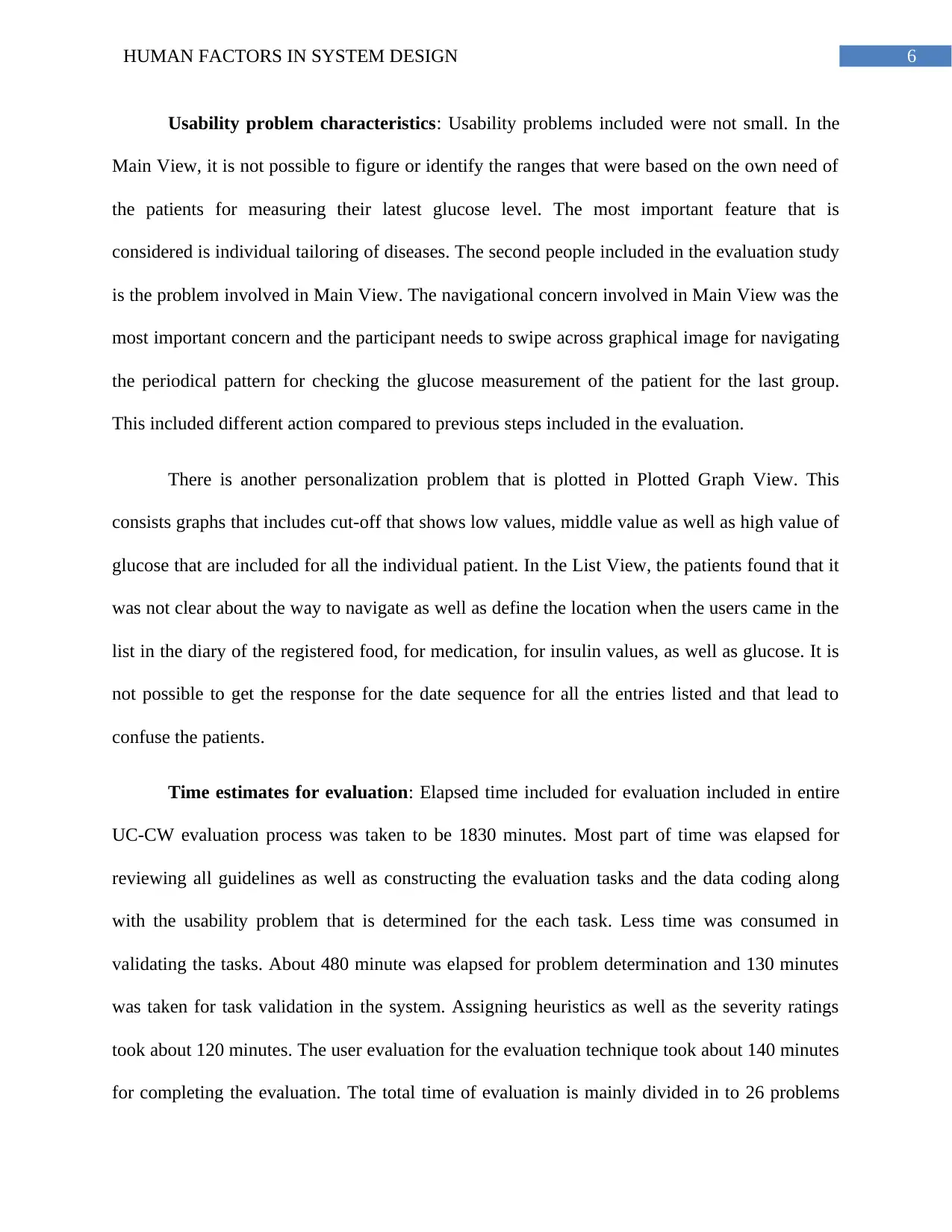

A total of 74 heuristic violations were found across the views with UC-CW, compared with 51 heuristic violations with TA. As Figure 2 below shows, the majority of heuristic violations for both methods fell into the category Match between system and the real world. Consistency and standards accounted for 22.9 % and 29.4 % for UC-CW and TA respectively, and Recognition rather than recall for 17.5 % and 19.6 %. User control and freedom had very few violations with both methods across the views, at 1.4 % and 3.9 %. The exceptions are the categories Help and Recover, for which no violations were recorded.

Figure 2: Heuristic violations across methods

(Source: Georgsson et al. 2019)
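The percentage shares can be translated back into approximate violation counts. The sketch below assumes that the first percentage in each pair refers to UC-CW (74 violations in total) and the second to TA (51 in total). The counts it prints are implied by rounding and are not figures stated in the report, and the heuristic names follow the usual wording of Nielsen's heuristics.

```python
# Totals and percentage shares as quoted in the report.
TOTAL_VIOLATIONS = {"UC-CW": 74, "TA": 51}
SHARES_PERCENT = {
    "Consistency and standards": {"UC-CW": 22.9, "TA": 29.4},
    "Recognition rather than recall": {"UC-CW": 17.5, "TA": 19.6},
    "User control and freedom": {"UC-CW": 1.4, "TA": 3.9},
}


def implied_count(share_percent, total):
    """Nearest whole number of violations consistent with a percentage share."""
    return round(share_percent / 100 * total)


implied = {
    heuristic: {m: implied_count(p, TOTAL_VIOLATIONS[m]) for m, p in shares.items()}
    for heuristic, shares in SHARES_PERCENT.items()
}

for heuristic, counts in implied.items():
    print(f"{heuristic}: UC-CW about {counts['UC-CW']}, TA about {counts['TA']}")
```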

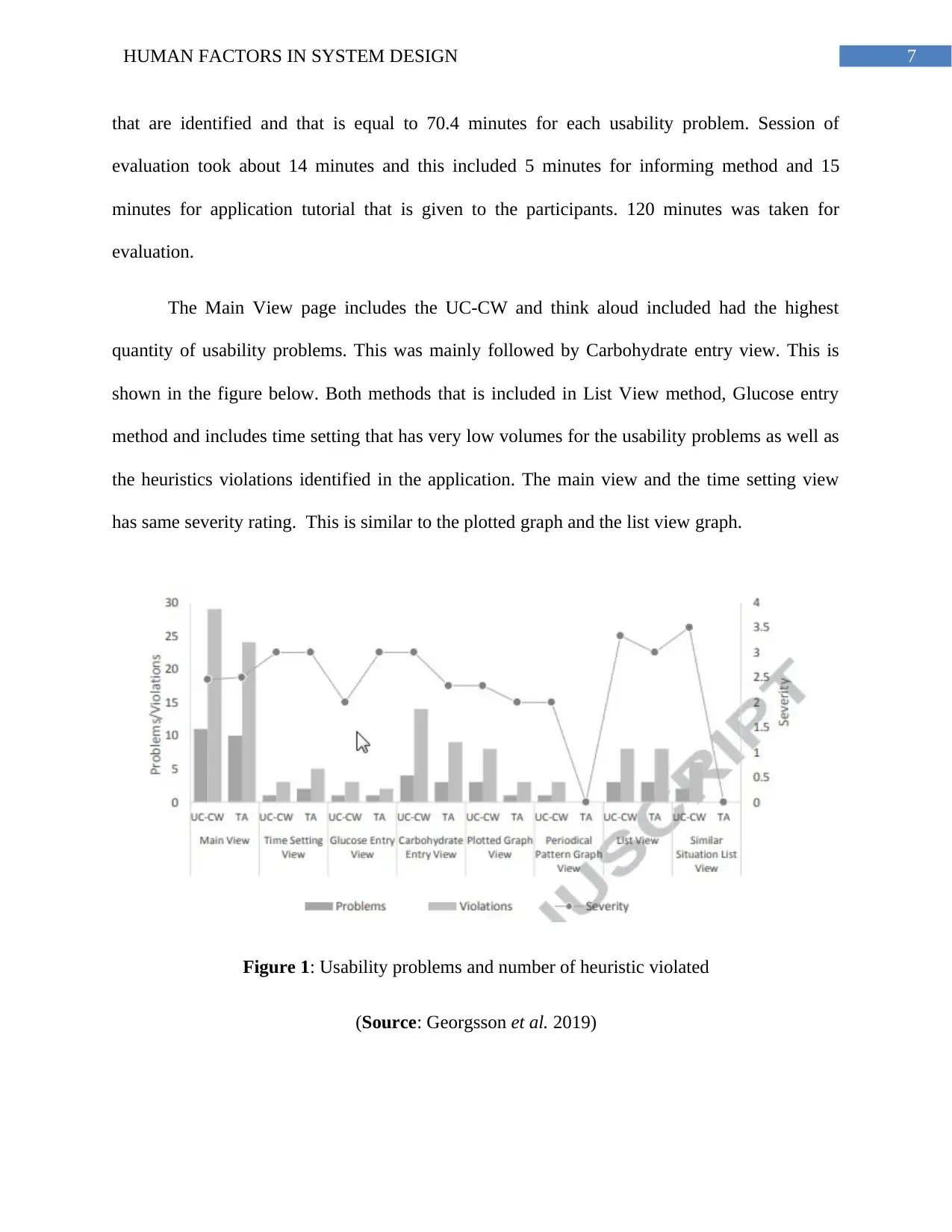

UC-CW resulted in a total of 26 usability problems, while think aloud resulted in 20. UC-CW identified 5 catastrophic and 10 major severity ratings, whereas TA identified 2 catastrophic and 8 major ratings. TA
produced 10 minor ratings and UC-CW nine, and two cosmetic ratings were given in UC-CW.

Figure 3: Usability problems, and classifications on heuristics

(Source: Georgsson et al. 2019)
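As a consistency check, the severity counts can be summed and compared with the reported problem totals. In the sketch below, the "major" label for the UC-CW count of 10 is inferred from context, and the TA cosmetic count of zero is an assumption, since the text mentions cosmetic ratings only for UC-CW.

```python
# Severity counts as read from the report text (see assumptions in the lead-in).
severity_counts = {
    "UC-CW": {"catastrophic": 5, "major": 10, "minor": 9, "cosmetic": 2},
    "TA": {"catastrophic": 2, "major": 8, "minor": 10, "cosmetic": 0},
}
reported_totals = {"UC-CW": 26, "TA": 20}

summed = {method: sum(counts.values()) for method, counts in severity_counts.items()}

for method, total in summed.items():
    status = "matches" if total == reported_totals[method] else "differs from"
    print(f"{method}: {total} rated problems, {status} the reported total")
```

Under these assumptions both sums agree with the totals of 26 and 20 problems given in the text.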

The two methods produced similar results. Both identified the most critical problems in the Main View. Fewer serious usability problems were located in three other views: the Glucose Entry view, the periodical pattern graph and the Plotted Graph view. These views each included one minor rating, and the Carbohydrate view also included minor ratings, as did the Plotted Graph view. With TA, the List View had one minor rating.

UC-CW identified many ratings that were severe, including in the List View. The methods also differed in their severity ratings for the Glucose Entry view, the Carbohydrate view and the periodical
pattern view. This suggests that UC-CW is more sensitive in its severity ratings across the views. Both methods gave the same severity ratings for the List View, the Time Setting view and the Main View, and both had the same average severity rating for the chosen application.

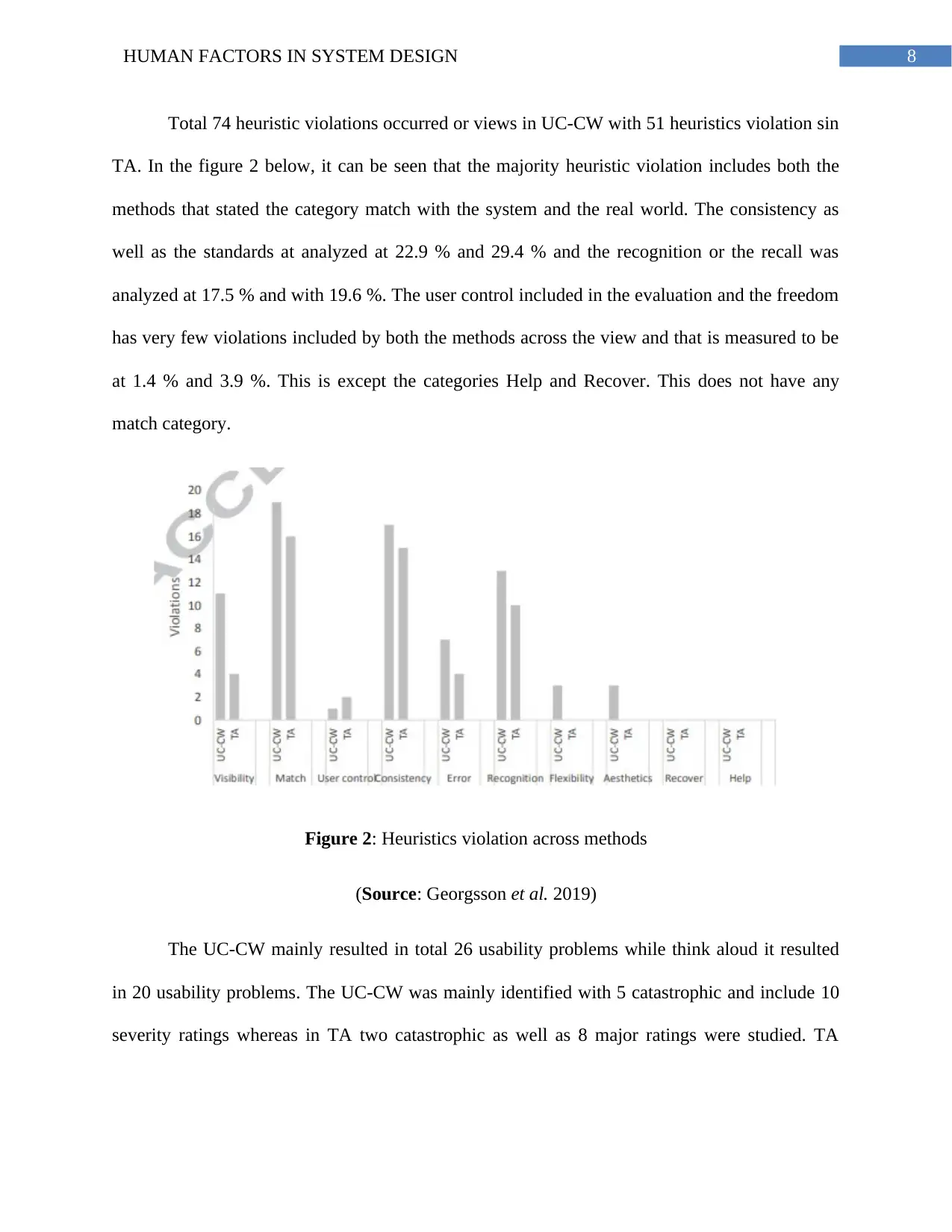

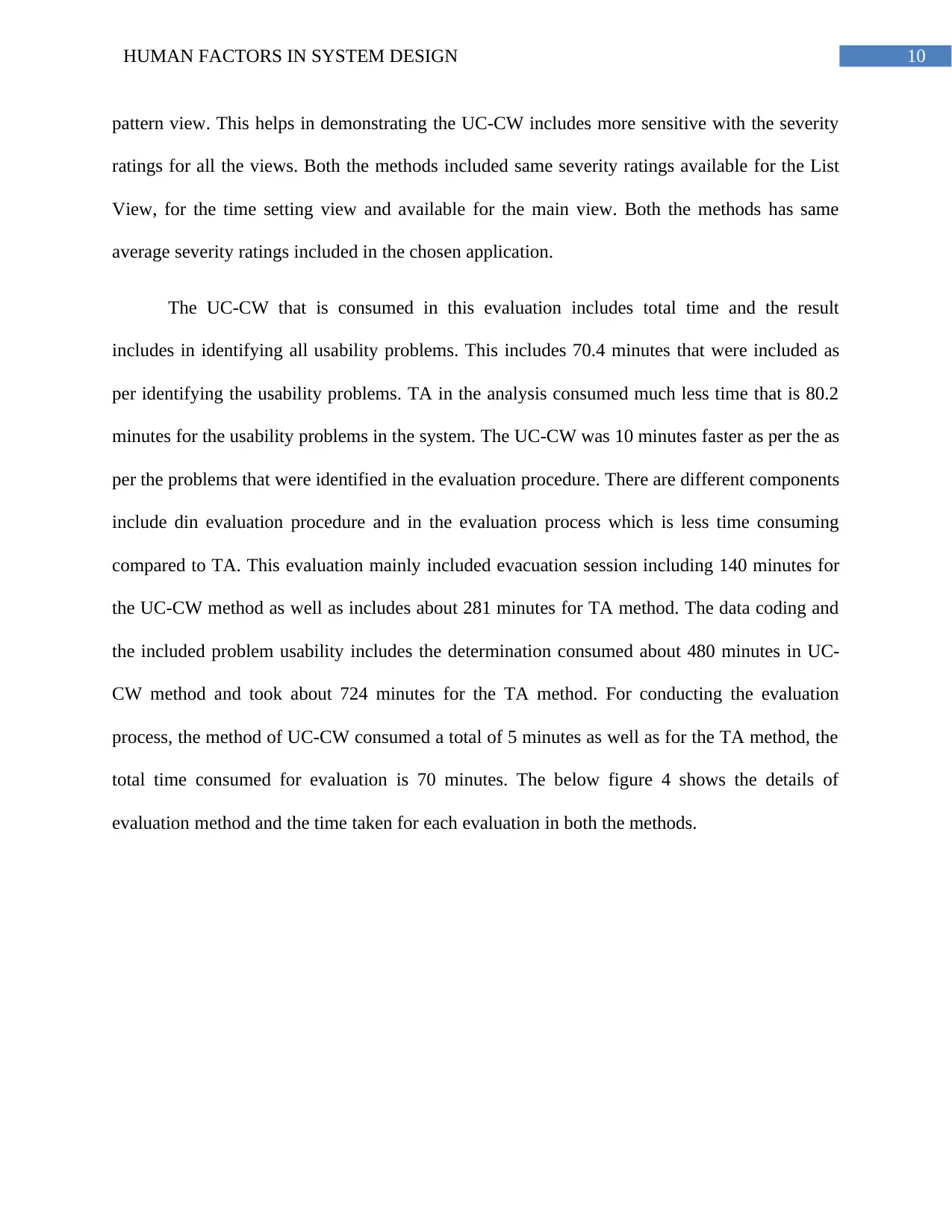

The total time consumed by UC-CW covers every step needed to identify the usability problems and amounts to 70.4 minutes per usability problem identified. TA consumed more time, at 80.2 minutes per usability problem, so UC-CW was about 10 minutes faster per problem identified. Several components of the UC-CW evaluation procedure were less time consuming than their TA counterparts. The evaluation session took 140 minutes for UC-CW and about 281 minutes for TA. Data coding and usability problem determination consumed about 480 minutes for UC-CW and about 724 minutes for TA. For conducting the evaluation process, UC-CW consumed a total of 5 minutes, whereas TA consumed a total of 70 minutes. Figure 4 below shows the steps of the evaluation and the time taken for each step in both methods.

Figure 4: Time in minutes included in evaluation procedure

(Source: Georgsson et al. 2019)
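The comparison between the two methods can be restated as simple differences. The sketch below uses only figures quoted in the text; the dictionary keys are descriptive labels chosen here for illustration.

```python
# Minutes per step as quoted in the report for each method.
minutes = {
    "evaluation session": {"UC-CW": 140, "TA": 281},
    "data coding and problem determination": {"UC-CW": 480, "TA": 724},
}
minutes_per_problem = {"UC-CW": 70.4, "TA": 80.2}

saved_by_step = {
    step: by_method["TA"] - by_method["UC-CW"] for step, by_method in minutes.items()
}
gap_per_problem = round(minutes_per_problem["TA"] - minutes_per_problem["UC-CW"], 1)

for step, saved in saved_by_step.items():
    print(f"{step}: UC-CW took {saved} fewer minutes than TA")
print(f"Per problem found: UC-CW was {gap_per_problem} minutes faster")
```

The per-problem gap of 9.8 minutes is what the text rounds to about 10 minutes.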

Part Six: Findings of Evaluation

The enhanced, user-centered cognitive walkthrough was designed to include users in all phases. This helps to streamline task development and the evaluation process, and it emphasizes the validity aspects of the tasks so that usability problems critical to the users are detected. An initial validation was performed against the original think-aloud method with a small group of patient users, measuring the effectiveness, efficiency and user acceptance of the application. Both of the methods include two domain
