Information Systems and Big Data Analysis: History, Challenges

Added on 2023/06/16


History of big data

The term Big Data has been used since the early 1990s, but it is not known exactly who first used it, although most people credit John R. Mashey with popularising it. The use of big data, and the need to make sense of all available data, goes back much further. The first major data project was created in 1937 and was commissioned by Franklin D. Roosevelt's administration in the USA: after the Social Security Act became law in 1935, the government had to keep track of contributions from 26 million Americans and more than 3 million employers. The first data-processing machine appeared in 1943 and was developed by the British to decipher Nazi codes during World War II.

In 2005, Roger Mougalas of O'Reilly Media is credited with coining the term Big Data in its modern sense, just a year after O'Reilly Media had popularised the term Web 2.0. It refers to a data set so large that it is almost impossible to manage and process using traditional business intelligence tools.

The history of big data can be divided into three main phases: Phase 1.0, 2.0 and 3.0. In Phase 1.0, data analysis, big data and data analytics originated in the long-standing domain of database management. In Phase 2.0, the rise of the internet and the web offered unique opportunities for data collection and analysis. In Phase 3.0, web-based unstructured content remains the main focus of data analysis for many organisations, and this phase covers the current possibilities for retrieving valuable information from it.


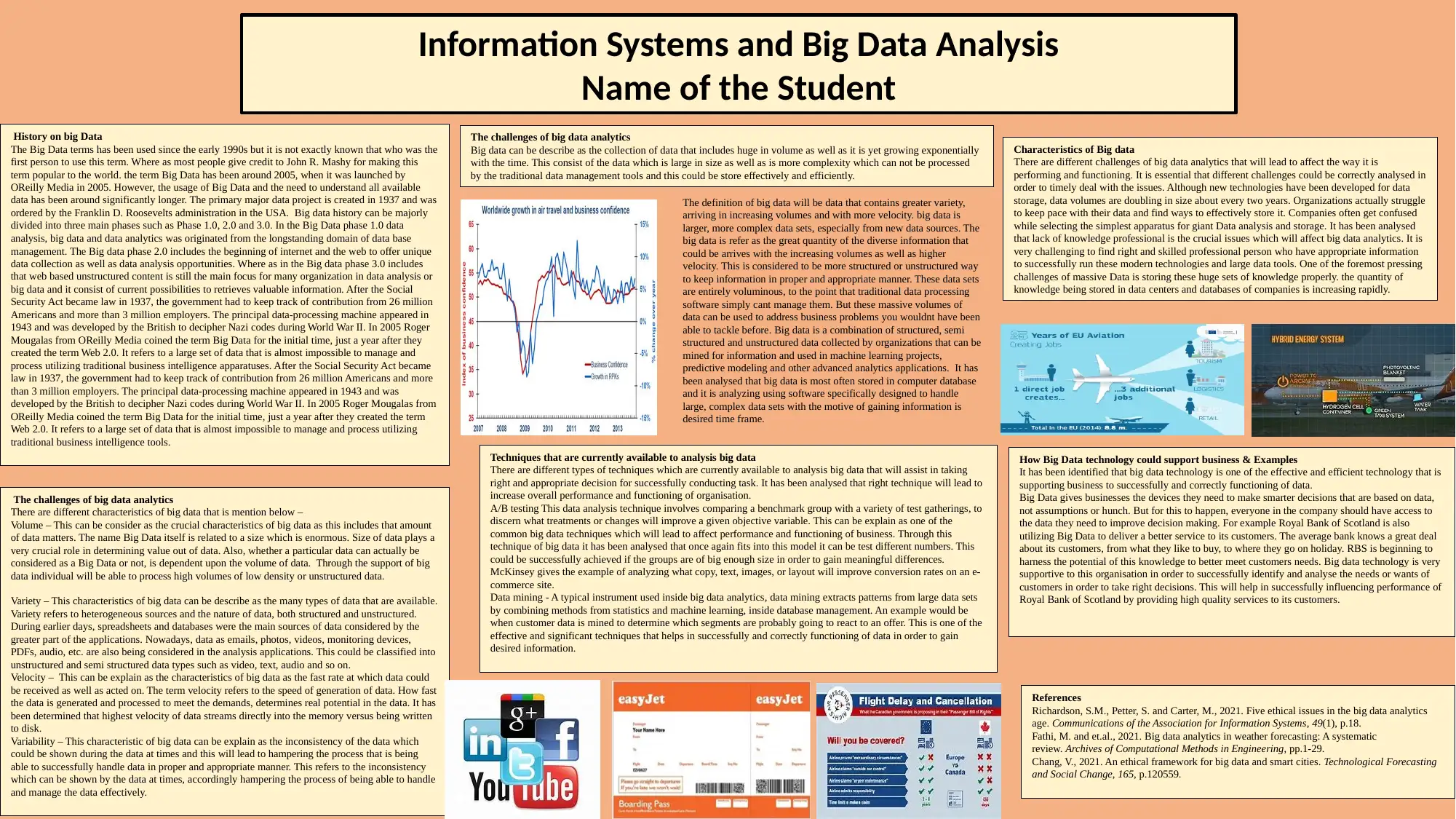

Big data can be described as a collection of data that is huge in volume and is still growing exponentially over time. It consists of data so large and complex that traditional data management tools cannot store or process it effectively and efficiently.

The challenges of big data analytics

Several challenges affect how big data analytics performs, and it is essential to analyse them correctly so that issues can be dealt with in time. One of the most pressing challenges is storing huge data sets properly. Although new technologies have been developed for data storage, data volumes are doubling in size about every two years, and the quantity of data held in companies' data centres and databases is increasing rapidly. Organisations struggle to keep pace with their data and to find ways to store it effectively. Companies are also often unsure which tool is best suited to big data analysis and storage. Finally, a lack of skilled professionals is a crucial issue: it is very difficult to find people with the knowledge needed to run these modern technologies and big data tools successfully.
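A volume that doubles roughly every two years grows exponentially, V(t) = V0 · 2^(t/2) with t in years. The short Python sketch below projects storage needs under that assumption and solves for when a fixed capacity would be reached. The 100 TB starting volume, the 1,600 TB capacity and the two-year doubling period are illustrative parameters only, not figures from any real organisation.

```python
import math


def projected_volume(initial_tb: float, years: float, doubling_period: float = 2.0) -> float:
    """Volume after `years`, assuming it doubles every `doubling_period` years."""
    if doubling_period <= 0:
        raise ValueError("doubling_period must be positive")
    return initial_tb * 2 ** (years / doubling_period)


def years_until_full(initial_tb: float, capacity_tb: float, doubling_period: float = 2.0) -> float:
    """Years until the projected volume reaches a fixed storage capacity."""
    if initial_tb <= 0 or capacity_tb <= 0 or doubling_period <= 0:
        raise ValueError("volumes and doubling_period must be positive")
    if capacity_tb <= initial_tb:
        return 0.0  # already at or over capacity
    # Solve initial * 2**(t / d) = capacity for t.
    return doubling_period * math.log2(capacity_tb / initial_tb)


if __name__ == "__main__":
    # Hypothetical: 100 TB stored today.
    for year in (0, 2, 4, 6, 10):
        print(year, projected_volume(100, year))
    print(years_until_full(100, 1600))  # 8.0
```

Under this assumption a sixteen-fold increase in capacity buys only eight years, which is why storage is listed first among the challenges.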

Characteristics of Big data

The main characteristics of big data are described below.

Volume – This is the most crucial characteristic of big data, because the amount of data matters. The name Big Data itself refers to enormous size. The size of the data plays a crucial role in determining the value that can be extracted from it, and whether a particular data set can be considered big data at all depends on its volume. Big data technologies allow organisations to process high volumes of low-density or unstructured data.

Variety – This characteristic describes the many types of data available, that is, the heterogeneous sources and nature of data, both structured and unstructured. In earlier days, spreadsheets and databases were the main sources of data considered by most applications. Nowadays, emails, photos, videos, data from monitoring devices, PDFs, audio and so on are also considered in analysis applications. Such data is classified as unstructured or semi-structured, for example video, text and audio.

Velocity – This characteristic refers to the rate at which data is received and acted on, that is, the speed at which data is generated. How fast data is generated and processed to meet demand determines its real potential. The highest-velocity data typically streams directly into memory rather than being written to disk.

Variability – This refers to the inconsistency that data can show at times, which hampers the process of handling and managing the data effectively.
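Variety, velocity and variability can be illustrated with a small Python sketch over a hypothetical stream of records. The `classify` heuristic (tuples as structured rows, dicts as semi-structured JSON-like documents, everything else as unstructured) is a simplifying assumption, not a standard taxonomy. `SlidingWindowRate` keeps a rolling count of recent events in memory rather than on disk, in the spirit of the Velocity item. `schema_variants` counts how many different field layouts appear among the records, which is one simple symptom of Variability. All three names are hypothetical helpers written for this illustration.

```python
from collections import Counter, deque
from typing import Any, Deque, Iterable


def classify(record: Any) -> str:
    """Variety: a deliberately crude heuristic, not a standard taxonomy."""
    if isinstance(record, tuple):   # fixed columns, like a database row
        return "structured"
    if isinstance(record, dict):    # self-describing keys, like a JSON document
        return "semi-structured"
    return "unstructured"           # free text, image or audio bytes, etc.


class SlidingWindowRate:
    """Velocity: count events seen in the last `window` seconds, held in memory."""

    def __init__(self, window: float) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self.window = window
        self.times: Deque[float] = deque()

    def add(self, t: float) -> int:
        """Record an event at time t (non-decreasing) and return the count in the window."""
        self.times.append(t)
        # Evict timestamps that have fallen out of the window.
        while self.times and self.times[0] <= t - self.window:
            self.times.popleft()
        return len(self.times)


def schema_variants(records: Iterable[Any]) -> int:
    """Variability: number of distinct field layouts among dict records."""
    return len({frozenset(r) for r in records if isinstance(r, dict)})


if __name__ == "__main__":
    # Hypothetical stream of (arrival time in seconds, payload) pairs.
    stream = [
        (0.0, (101, "2023-06-01", 49.99)),
        (0.4, {"user": 7, "action": "click"}),
        (0.9, "Great service, fast delivery!"),
        (1.2, {"user": 9, "event": "click", "ts": 1.2}),  # same idea, new field names
        (2.5, b"\x89PNG\r\n"),
    ]
    rate = SlidingWindowRate(window=1.0)
    variety: Counter = Counter()
    for t, payload in stream:
        variety[classify(payload)] += 1
        print(f"t={t}: {rate.add(t)} event(s) in the last second")
    print(dict(variety))
    print("distinct layouts:", schema_variants(p for _, p in stream))
```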


How Big Data technology can support business: examples

Big data technology is one of the most effective and efficient technologies for helping businesses make good use of their data.

Big Data gives businesses the tools they need to make smarter decisions based on data rather than on assumptions or hunches. For this to happen, however, everyone in the company should have access to the data they need to improve decision making. For example, the Royal Bank of Scotland (RBS) uses Big Data to deliver a better service to its customers. The average bank knows a great deal about its customers, from what they like to buy to where they go on holiday, and RBS is beginning to harness the potential of this knowledge to better meet its customers' needs. Big data technology helps the bank identify and analyse the needs and wants of its customers so that it can make the right decisions, which in turn improves its performance by enabling it to provide high-quality services.

Techniques currently available to analyse big data

Various techniques are currently available to analyse big data, and they help organisations make the right decisions when carrying out their tasks. Choosing the right technique can improve the overall performance and functioning of an organisation.

A/B testing – This data analysis technique involves comparing a control group with a variety of test groups to discern which treatments or changes will improve a given objective variable. It is one of the most common big data techniques and can directly affect business performance. Big data fits this model well because it allows very large numbers of variants to be tested; however, this only works if the groups are large enough to reveal meaningful differences. McKinsey gives the example of analysing which copy, text, images or layout will improve conversion rates on an e-commerce site.
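To decide whether a difference between the control group and a test group is meaningful rather than noise, a common choice is a two-proportion z-test on conversion rates. The Python sketch below assumes a single variant compared against the control and uses made-up visitor counts; with many test groups, a multiple-comparison correction (for example Bonferroni) would also be needed. The normal approximation it relies on only holds when the groups are large enough, which matches the point above about group size.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for H0: groups A and B share one conversion rate."""
    if min(n_a, n_b) <= 0:
        raise ValueError("group sizes must be positive")
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that there is no real difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero (pooled rate is 0 or 1)")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical: control converts 200 of 10,000 visitors, variant 260 of 10,000.
    z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the variant's change in conversion rate is unlikely to be chance alone; it does not say the change is large enough to matter commercially.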

Data mining – A common tool in big data analytics, data mining extracts patterns from large data sets by combining methods from statistics and machine learning within database management. An example would be mining customer data to determine which segments are most likely to respond to an offer. It is one of the most effective techniques for extracting the desired information from data.
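One way to find customer segments is clustering. The Python sketch below uses plain k-means (Lloyd's algorithm), which is only one of many data-mining methods and not necessarily what any particular organisation uses. It clusters customers on two hypothetical features and then ranks the resulting segments by their historical response rate to a past offer. Centroids are initialised from the first k points so that the output is deterministic. In practice, features would usually be standardised first and a library implementation such as scikit-learn's `KMeans` would be used instead.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], k: int, iters: int = 20) -> List[int]:
    """Cluster points with Lloyd's algorithm; returns one cluster label per point."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    centroids = [tuple(points[i]) for i in range(k)]  # deterministic initialisation
    labels: List[int] = []
    for _ in range(iters):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster in place
        if new_centroids == centroids:  # converged
            break
        centroids = new_centroids
    return labels


def response_rate_by_segment(labels: Sequence[int], responded: Sequence[bool]) -> Dict[int, float]:
    """Share of customers in each segment who responded to a past offer."""
    totals: Dict[int, int] = {}
    hits: Dict[int, int] = {}
    for lab, r in zip(labels, responded):
        totals[lab] = totals.get(lab, 0) + 1
        hits[lab] = hits.get(lab, 0) + int(r)
    return {lab: hits[lab] / totals[lab] for lab in totals}


if __name__ == "__main__":
    # Hypothetical customers: (annual spend in thousands, store visits per month).
    customers = [(1.0, 1.0), (8.0, 9.0), (1.5, 2.0), (9.0, 8.0), (2.0, 1.0), (8.5, 8.5)]
    responded = [False, True, False, True, True, True]
    labels = kmeans(customers, k=2)
    rates = response_rate_by_segment(labels, responded)
    for segment, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
        print(f"segment {segment}: {rate:.0%} responded")
```

The segment with the highest historical response rate is the natural first target for a similar offer, although a real campaign would validate this on held-out data.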

Big data can be defined as data that contains greater variety, arrives in increasing volumes and moves with greater velocity. Put simply, big data consists of larger, more complex data sets, especially from new data sources. These data sets are so voluminous that traditional data processing software simply cannot manage them. However, these massive volumes of data can be used to address business problems that could not have been tackled before. Big data is a combination of structured, semi-structured and unstructured data collected by organisations that can be mined for information and used in machine learning projects, predictive modelling and other advanced analytics applications. Big data is most often stored in computer databases and analysed using software specifically designed to handle large, complex data sets, with the aim of obtaining information within the desired time frame.
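Software built for large data sets, such as Hadoop MapReduce or Apache Spark, typically splits the data into chunks, processes each chunk independently and then merges the partial results. The Python sketch below shows that map-and-reduce pattern on a word count. It runs sequentially in a single process and is not the API of either framework. The helper names `chunks`, `map_chunk` and `word_count` are hypothetical. Because each `map_chunk` call is independent, however, the calls could in principle be handed to a process pool or to separate cluster nodes.

```python
from collections import Counter
from functools import reduce
from itertools import islice
from typing import Iterable, Iterator, List


def chunks(lines: Iterable[str], size: int) -> Iterator[List[str]]:
    """Yield successive batches so the full data set never sits in memory at once."""
    if size <= 0:
        raise ValueError("size must be positive")
    it = iter(lines)
    while batch := list(islice(it, size)):
        yield batch


def map_chunk(batch: List[str]) -> Counter:
    """Map step: count words in one batch, independently of every other batch."""
    return Counter(word.lower() for line in batch for word in line.split())


def merge_counts(a: Counter, b: Counter) -> Counter:
    """Reduce step: fold one partial count into the running total."""
    a.update(b)
    return a


def word_count(lines: Iterable[str], chunk_size: int = 1000) -> Counter:
    """Chunked word count: map each batch, then reduce the partial counts."""
    return reduce(merge_counts, map(map_chunk, chunks(lines, chunk_size)), Counter())


if __name__ == "__main__":
    # Hypothetical input; in practice `lines` could be a file object read lazily.
    sample = ["big data is big", "data at scale", "Big DATA"]
    print(word_count(sample, chunk_size=2).most_common(2))
```

Passing an open file object as `lines` keeps memory use bounded by `chunk_size`, which is the property that lets the same pattern scale beyond a single machine's memory.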
