IMD11112 Design and Dialogue Report: Campus Garden Application



Running head: DESIGN AND DIALOGUE 1

Design and Dialogue

[Name of Student]

[Institution Affiliation]


Table of Contents

1 Introduction
2 Design Approaches
2.1 Conceptual Design
2.2 Design Language
2.2.1 Color
2.3 Icons
2.3.1 Typography
3 Understanding
3.1 Online Research
3.1.1 Marker-based system
3.1.2 Markerless system
3.2 The AR Experience
3.3 How IoT Works
3.4 How The Application Works
3.4.1 Requirements
3.4.2 Sensors
4 Environment
4.1 Story Boarding
5 Evaluation and Testing


5.1 Participant Based Evaluation
5.2 List of Requirements and Problems
5.3 Usability Testing of The App
6 Future Enhancements
7 Conclusion
8 References


List of Tables
Table 1 Key requirements
Table 2 Sensors

List of Figures
Figure 1 Overall Design
Figure 2 Conceptual Design
Figure 3 Design Language
Figure 4 Color choice
Figure 5 Icons to Be Used
Figure 6 Design Typography
Figure 7 AR Experience
Figure 8 IoT In Action
Figure 9 App environment
Figure 10 AR and 3-D of the tree area


1 INTRODUCTION

The newly proposed project for the Napier Lion’s Gate includes a more user-friendly and more interactive mobile phone application, designed to help visitors to the campus get to know the campus garden. To make this possible, the smartphone application has a new set of requirements that include advanced technologies such as augmented reality (AR), virtual reality (VR) and the emerging field of the Internet of Things (IoT). Key features of the app include an interactive campus map, a feature that lets visitors schedule their visits to the campus, and a 360-degree view of the campus buildings, infrastructure and garden [1]. The application can also display detailed information about nearby plants and their species. This is made possible by camera functionality built into the app together with proximity sensors that identify the plants correctly [2].
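
The report does not say what kind of proximity sensor is used. The following Python sketch assumes, purely for illustration, that each plant is tagged with a Bluetooth Low Energy beacon and that the phone reports a signal strength (RSSI) for each beacon it hears. It picks the strongest known beacon and uses the standard log-distance path-loss model to reject beacons that appear too far away. The beacon IDs, catalogue entries, calibration values and function names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Plant:
    common_name: str
    species: str


# Hypothetical catalogue: beacon ID -> plant the beacon is mounted beside.
CATALOGUE: dict[str, Plant] = {
    "beacon-01": Plant("Oak (sample entry)", "Quercus robur"),
    "beacon-02": Plant("Birch (sample entry)", "Betula pendula"),
}


def estimate_distance_m(rssi_dbm: float, tx_power_dbm: float = -59.0,
                        path_loss_exponent: float = 2.0) -> float:
    """Log-distance path-loss model: d = 10 ** ((tx_power - rssi) / (10 * n)).

    tx_power_dbm is the RSSI expected at 1 m; both defaults are assumed values
    that would need calibrating outdoors in the garden.
    """
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10 * path_loss_exponent))


def nearest_plant(readings: dict[str, float],
                  max_distance_m: float = 5.0) -> Optional[Plant]:
    """Return the catalogued plant whose beacon is strongest, if it is close enough."""
    known = {bid: rssi for bid, rssi in readings.items() if bid in CATALOGUE}
    if not known:
        return None
    beacon_id = max(known, key=known.get)  # strongest RSSI ~ nearest beacon
    if estimate_distance_m(known[beacon_id]) > max_distance_m:
        return None  # nearest beacon is too far away to be trusted
    return CATALOGUE[beacon_id]


# Example scan (RSSI in dBm); beacons missing from the catalogue are ignored.
print(nearest_plant({"beacon-01": -70.0, "beacon-02": -60.0, "other": -40.0}))
```

RSSI is noisy outdoors, so a real app would probably average several scans and let the camera-based recognition confirm or override this guess.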

The underlying architecture of the app is built on the Vuforia SDK, which is commonly used to build augmented reality applications for mobile devices. Access to the app is protected by an interactive login screen through which users such as students and campus staff sign in securely. Once authorized, they are presented with a simplified version of the campus Moodle. In this Moodle view, users can see the courses offered by the campus and the status of modules, and students can choose among different options for taking modules, such as on a trimester basis. Since AR and, above all, IoT form the bulk of the application, students and staff are each presented with a different view customized to their needs [3].
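
The report states that students and staff log in securely but does not describe the mechanism. Below is a minimal sketch of one common approach, salted PBKDF2 password hashing with a constant-time comparison, using only Python's standard library. The in-memory `USERS` store, the iteration count and the role strings are assumptions; a campus deployment would more likely delegate authentication to the university's existing identity service.

```python
import hashlib
import hmac
import secrets
from typing import Optional

ITERATIONS = 100_000  # assumed work factor; set it from current security guidance


def hash_password(password: str, salt: Optional[bytes] = None) -> tuple[bytes, bytes]:
    """Derive a salted PBKDF2-HMAC-SHA256 digest; returns (salt, digest)."""
    if salt is None:
        salt = secrets.token_bytes(16)  # fresh random salt per user
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


# Hypothetical credential store: username -> (salt, digest, role).
USERS: dict[str, tuple[bytes, bytes, str]] = {}


def register(username: str, password: str, role: str) -> None:
    salt, digest = hash_password(password)
    USERS[username] = (salt, digest, role)  # the plain password is never stored


def login(username: str, password: str) -> Optional[str]:
    """Return the user's role (e.g. "student" or "staff") on success, else None."""
    record = USERS.get(username)
    if record is None:
        return None
    salt, expected, role = record
    _, actual = hash_password(password, salt)
    # compare_digest runs in constant time, so timing does not reveal partial matches.
    return role if hmac.compare_digest(actual, expected) else None
```

The role returned by `login` is what would then select the student or staff view described above.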


Augmented reality has been a growing field in the technology industry. It superimposes a computer-generated image on the user's view of a real-world object, producing a composite view of the object [4]. This is made possible by processing data generated by the system, such as audio-visual, graphical and GIS data. Considerable research has been done in this field, and the overall effect is a blend of the real object and computer-generated images that gives the user a richer experience of real-life objects [5]. As a result, virtual and real objects coexist in the same space within the application.
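
Superimposing a computer-generated image on a real object ultimately means projecting a 3-D anchor point onto the 2-D camera image. The sketch below shows the standard pinhole-camera projection in plain Python. It is not the Vuforia API: an SDK such as Vuforia tracks the camera pose and supplies the camera intrinsics itself, and the pose, focal lengths and principal point used here are illustrative values.

```python
from typing import Optional

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]


def world_to_camera(p: Vec3, rotation: Mat3, translation: Vec3) -> Vec3:
    """Apply the camera pose: p_cam = R * p_world + t."""
    x, y, z = (
        sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return (x, y, z)


def project_to_screen(p_cam: Vec3, fx: float, fy: float,
                      cx: float, cy: float) -> Optional[tuple[float, float]]:
    """Pinhole projection of a camera-space point to pixel coordinates (u, v)."""
    x, y, z = p_cam
    if z <= 0:
        return None  # point is behind the camera, so nothing is drawn
    return (fx * x / z + cx, fy * y / z + cy)


# Example: a label 0.5 m to the right of a tree whose origin is 2 m in front of the camera.
IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
anchor = world_to_camera((0.5, 0.0, 0.0), IDENTITY, (0.0, 0.0, 2.0))
print(project_to_screen(anchor, fx=1000.0, fy=1000.0, cx=640.0, cy=360.0))  # prints (890.0, 360.0)
```

Each frame, the tracker updates the pose, the anchor is re-projected, and the overlay is drawn at the resulting pixel position, which is what keeps the virtual label "attached" to the real tree.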

The IoT, on the other hand, consists of devices and other data-gathering objects that communicate seamlessly with each other and share data. This has extended IoT devices to numerous use cases [6].
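
Data sharing between IoT devices is often organized as publish/subscribe messaging, for example over MQTT. The in-process sketch below only illustrates the pattern and is not an MQTT client; the `Broker` class and the garden topic names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[str, Any], None]


class Broker:
    """Minimal in-process stand-in for a message broker (e.g. an MQTT broker)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register handler to receive every message published on topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> int:
        """Deliver payload to all subscribers of topic; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(topic, payload)
        return len(handlers)


# Example: the app subscribes to a (hypothetical) soil-moisture sensor in a garden bed.
broker = Broker()
readings: list[float] = []
broker.subscribe("garden/bed-1/soil-moisture", lambda topic, value: readings.append(value))
broker.publish("garden/bed-1/soil-moisture", 0.42)
```

Decoupling publishers from subscribers in this way means a new sensor can be added to the garden without changing the app, as long as it publishes on an agreed topic.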

The combination of the two technologies gives the app the ability to identify the various trees and tree species in the campus garden, and a brief summary and the potential benefits of each plant are included in the augmented view. The information is presented to the user in 3-dimensional space to increase interactivity [7].
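
One simple way to attach a summary and list of benefits to a recognized tree is a lookup table keyed by the name of the image target that the AR layer reports. The sketch assumes such a name is available; the target name, the sample entry and `info_card` are hypothetical, and real content would come from the garden's plant records.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlantInfo:
    species: str
    summary: str
    benefits: tuple[str, ...] = ()


# Hypothetical content keyed by the name of the recognized image target.
CONTENT: dict[str, PlantInfo] = {
    "tree-target-01": PlantInfo(
        species="Quercus robur (sample entry)",
        summary="Sample summary text for the AR card.",
        benefits=("Sample benefit",),
    ),
}


def info_card(target_name: str) -> list[str]:
    """Build the text lines displayed beside a recognized tree in the AR view."""
    info = CONTENT.get(target_name)
    if info is None:
        return ["Plant not yet catalogued"]  # graceful fallback for unknown targets
    lines = [info.species, info.summary]
    lines.extend(f"Benefit: {b}" for b in info.benefits)
    return lines
```

Keeping the content separate from the recognition step lets garden staff update plant descriptions without retraining or re-uploading image targets.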

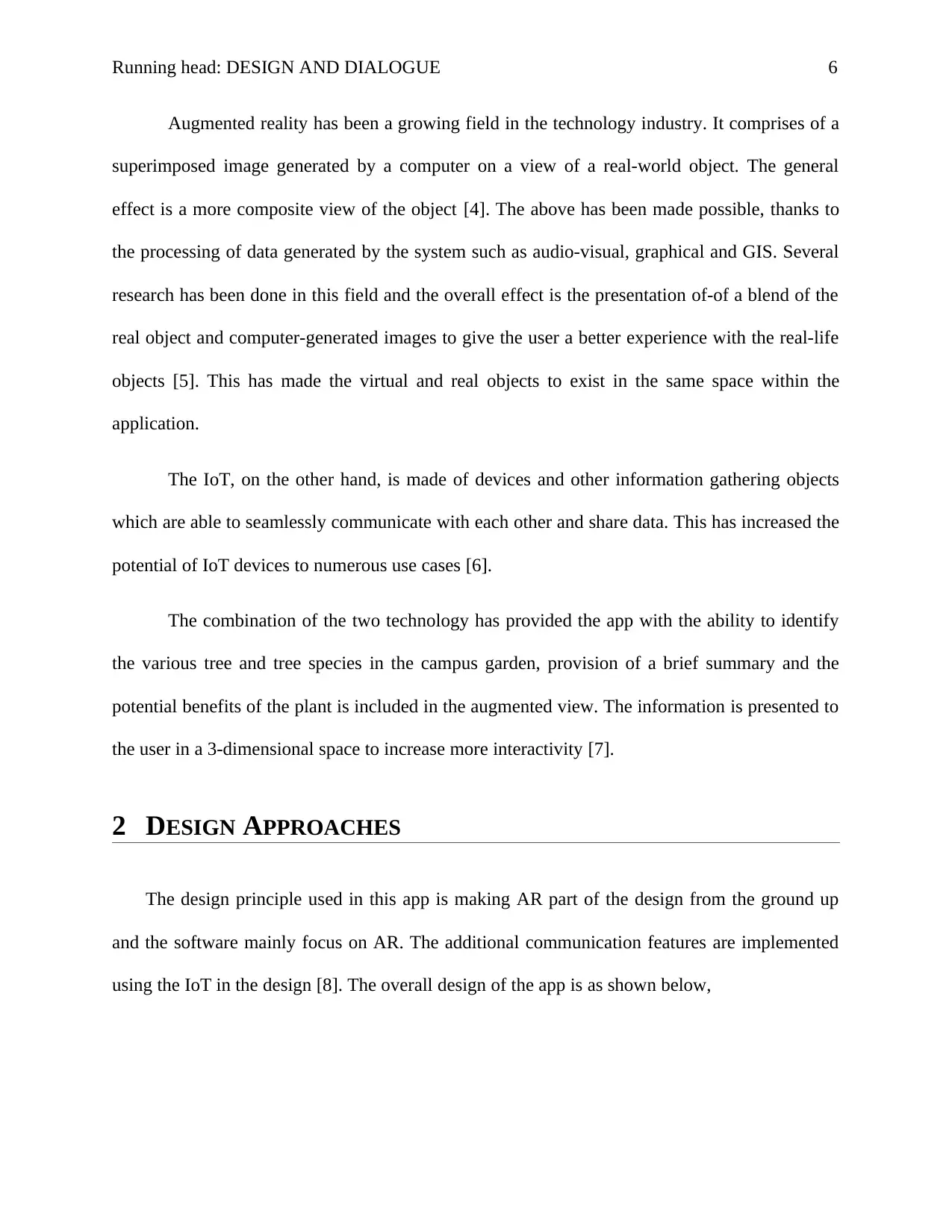

2 DESIGN APPROACHES

The design principle used in this app is to make AR part of the design from the ground up, so the software focuses mainly on AR. Additional communication features are implemented using IoT [8]. The overall design of the app is shown below.


Figure 1 Overall Design

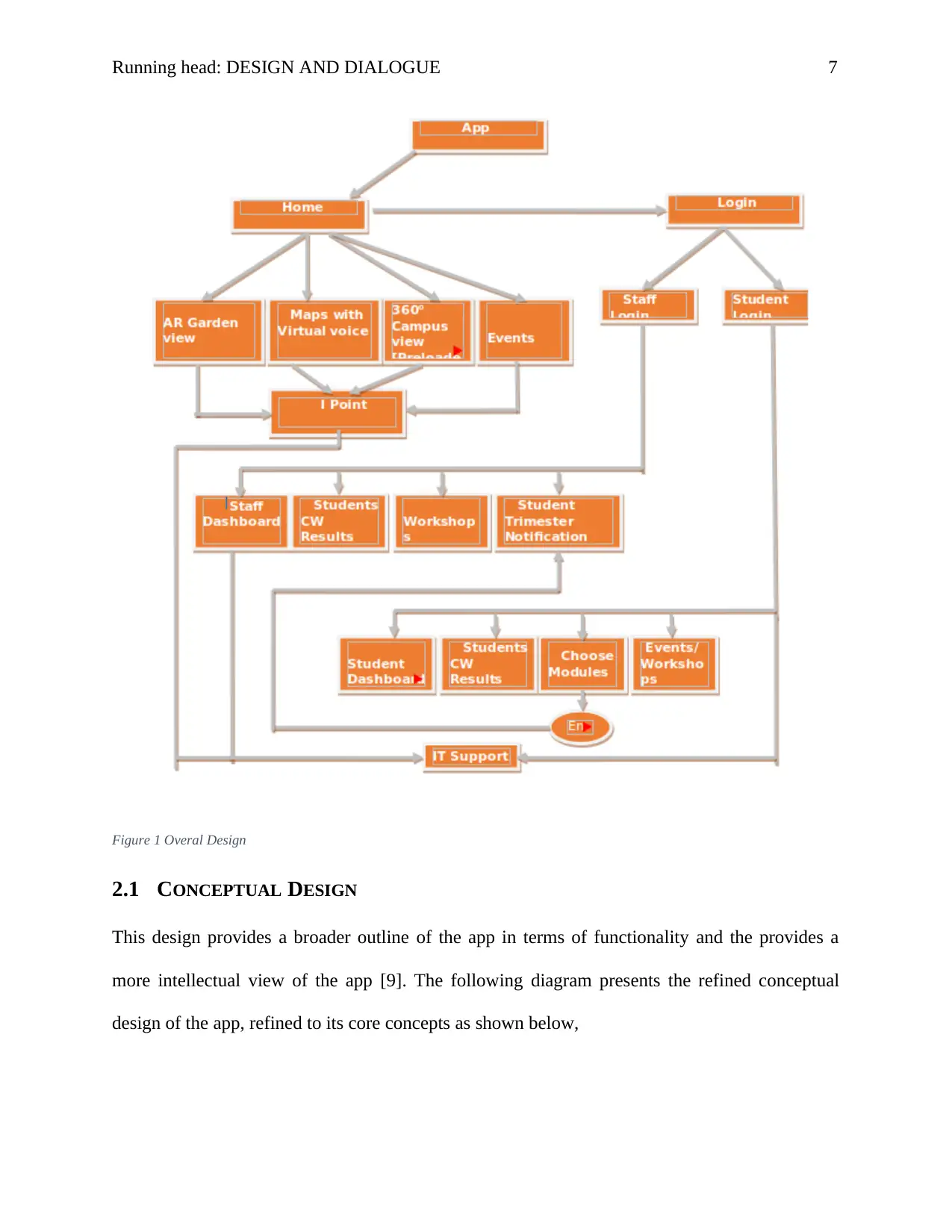

2.1 CONCEPTUAL DESIGN

The conceptual design provides a broad outline of the app's functionality and a more abstract view of the app [9]. The following diagram presents the conceptual design of the app, refined to its core concepts.


Figure 2 Conceptual Design

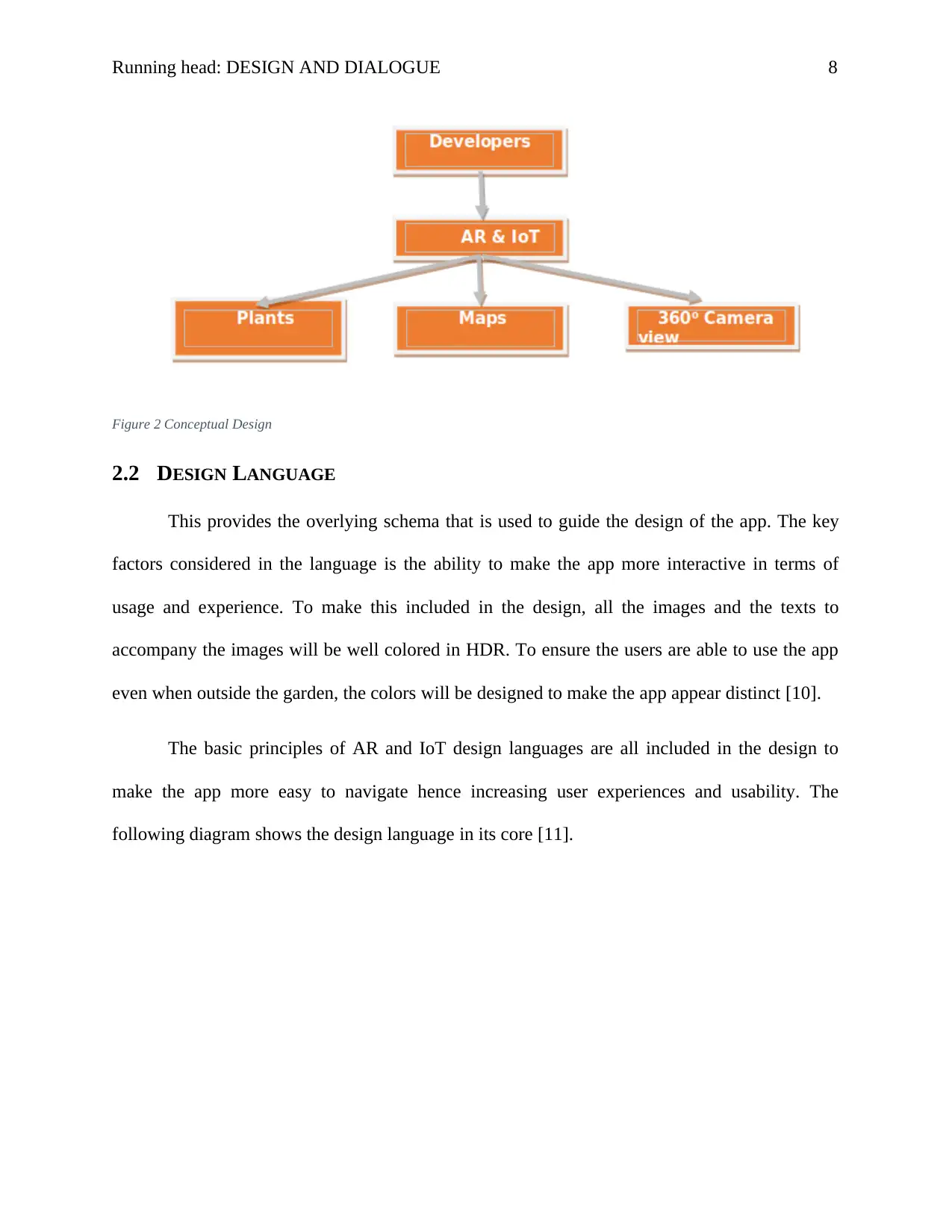

2.2 DESIGN LANGUAGE

The design language provides the overarching schema that guides the design of the app. The key factor considered in the language is making the app more interactive in use and experience. To achieve this, all images and their accompanying text will be rendered with rich, high-dynamic-range (HDR) color. To ensure that users can use the app even when outside the garden, the colors will be designed to make the app appear distinct [10].

The basic principles of AR and IoT design languages are included in the design to make the app easier to navigate, improving user experience and usability. The following diagram shows the core of the design language [11].


Figure 3 Design Language

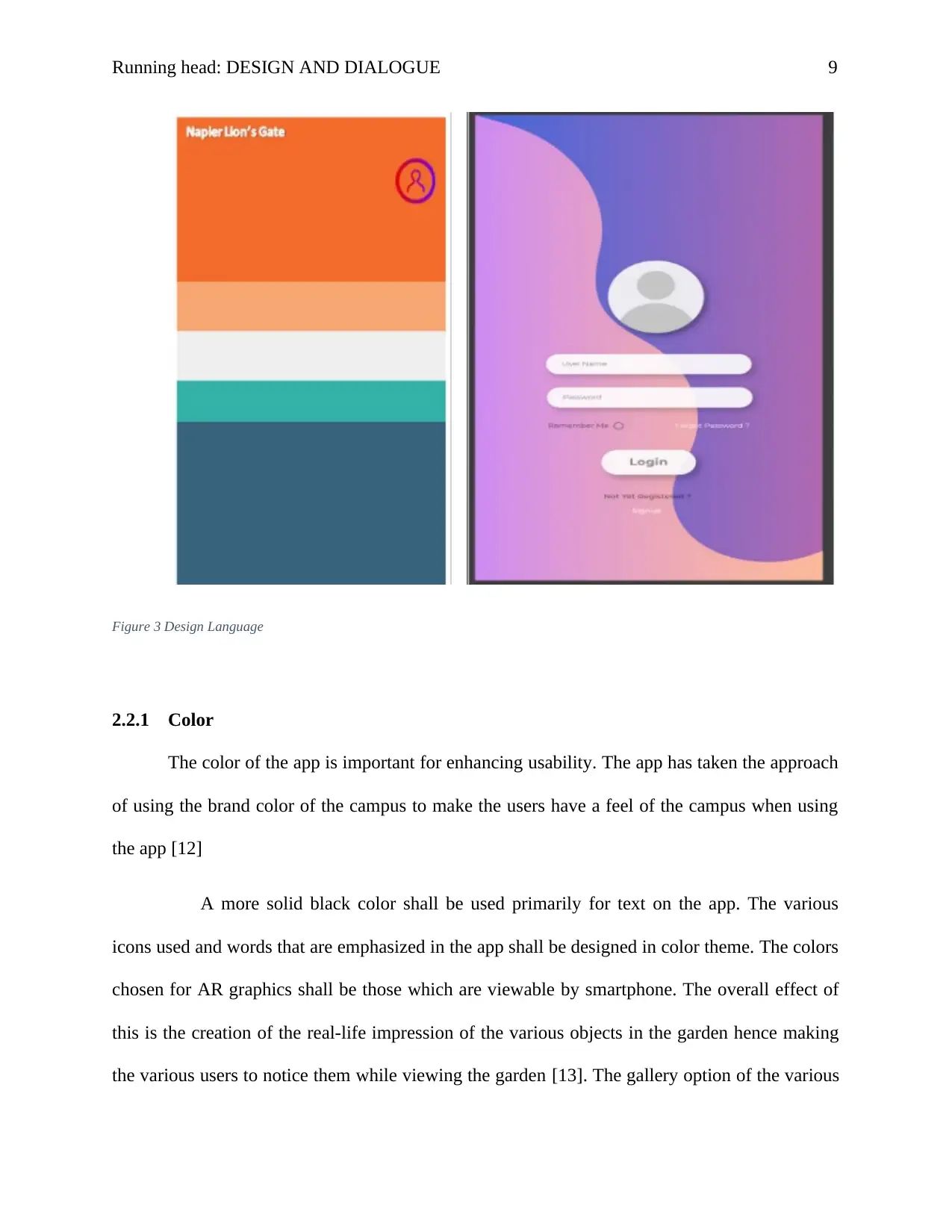

2.2.1 Color

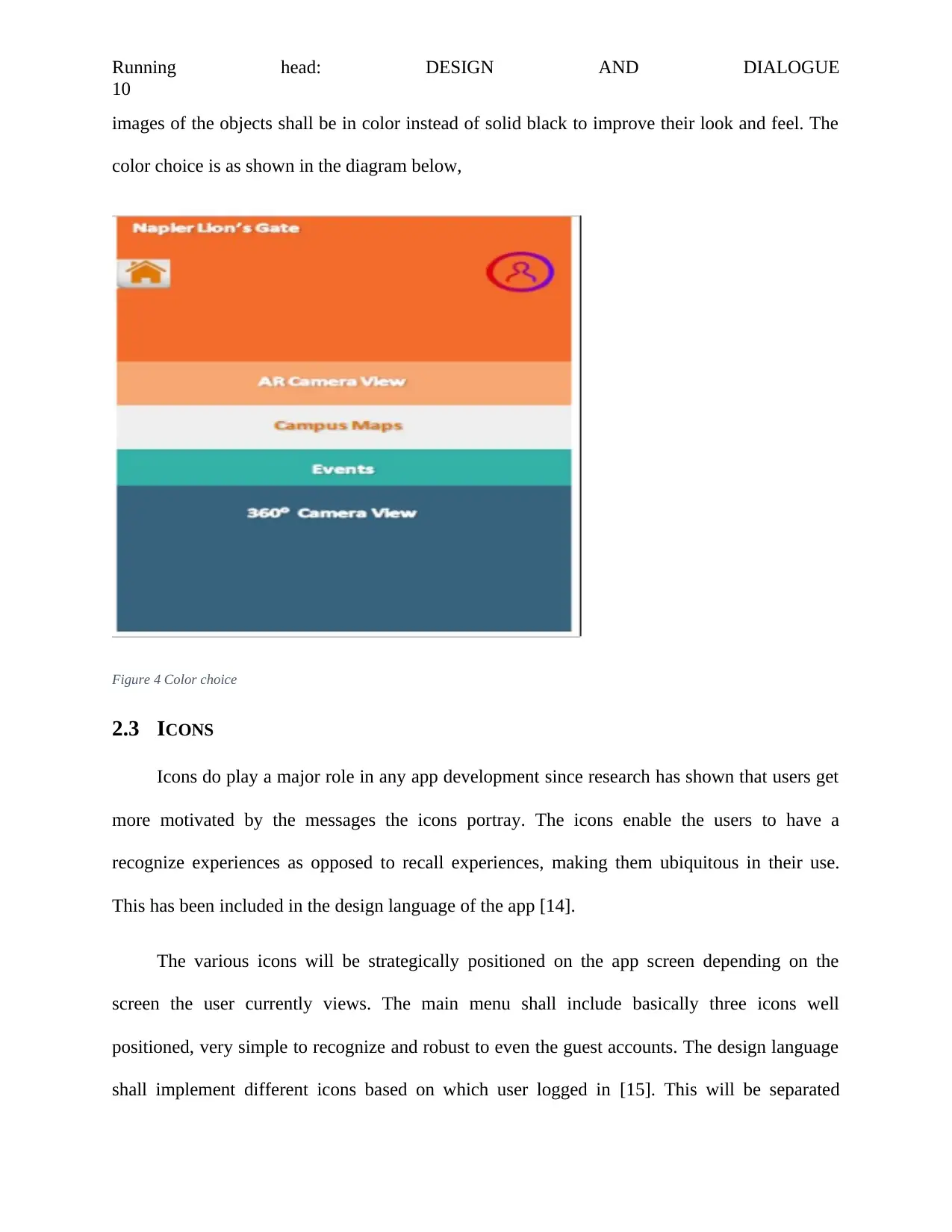

Color is important for usability. The app uses the campus brand color so that users get a feel of the campus when using it [12].

Solid black will be used primarily for text in the app. Icons and emphasized words will use the theme color. Colors for AR graphics will be chosen so that they remain clearly visible on a smartphone screen. The overall effect is a true-to-life impression of the objects in the garden, which helps users notice them while viewing the garden [13]. The gallery view of the various


images of the objects will be in color rather than solid black to improve their look and feel. The color choice is shown in the diagram below.

Figure 4 Color choice
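
The requirement that text and AR graphics stay readable on a smartphone, including outdoors, can be checked objectively with the WCAG 2.x contrast ratio, which compares the relative luminance of two colors. The sketch below implements that formula. The campus brand color is not given in this section, so the checks use neutral example colors, and `passes_aa` is a hypothetical helper name.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert an sRGB channel (0-255) to linear light, per the WCAG definition."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance L = 0.2126 R + 0.7152 G + 0.0722 B of a '#RRGGBB' color."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """(L_lighter + 0.05) / (L_darker + 0.05); ranges from 1:1 to 21:1."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """WCAG AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

For example, mid-grey `#777777` on white comes out at about 4.48:1, just under the AA threshold for normal text, which supports the choice of solid black for body text; the brand color would be checked the same way against each background it appears on.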

2.3 ICONS

Icons play a major role in app development, since research has shown that users are more motivated by the messages icons convey. Icons let users rely on recognition rather than recall, which is why they are used so widely. This principle has been included in the app's design language [14].

Icons will be positioned strategically on each screen, depending on which screen the user is currently viewing. The main menu will contain three well-positioned icons that are simple to recognize and usable even from guest accounts. The design language will present different icons depending on which user has logged in [15]. There will be separate


screens for students and for general staff [16]. The following figure shows some sample icons to be used in the design language.

Figure 5 Icons to Be Used

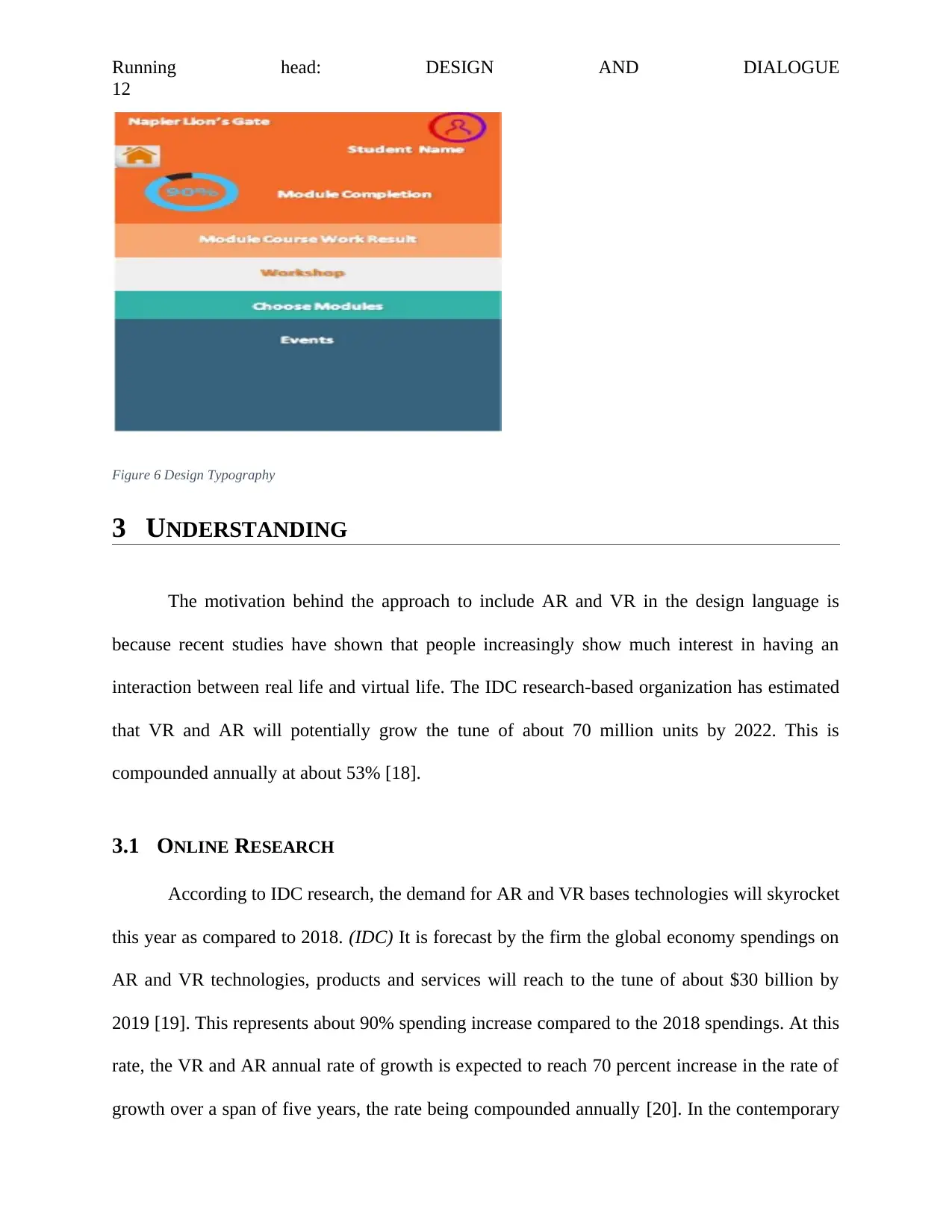
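
The role-dependent main menu described above amounts to a small lookup from the signed-in role to three icons, with the guest menu as the fallback. The role names and icon identifiers below are hypothetical placeholders, not the icons shown in Figure 5.

```python
from enum import Enum


class Role(Enum):
    GUEST = "guest"
    STUDENT = "student"
    STAFF = "staff"


# Hypothetical icon identifiers; three per role, matching the three-icon main menu.
MAIN_MENU: dict[Role, tuple[str, str, str]] = {
    Role.GUEST: ("campus-map", "schedule-visit", "ar-camera"),
    Role.STUDENT: ("campus-map", "modules", "ar-camera"),
    Role.STAFF: ("campus-map", "staff-tools", "ar-camera"),
}


def menu_for(role_name: str) -> tuple[str, str, str]:
    """Return the three main-menu icons for the signed-in role."""
    try:
        role = Role(role_name)
    except ValueError:
        role = Role.GUEST  # unknown or missing roles fall back to the guest menu
    return MAIN_MENU[role]
```

Falling back to the guest menu keeps the app usable, and never exposes staff or student functions, when the role is missing or unrecognized.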

2.3.1 Typography

Typography is key to styling the app and making it more appealing to users in look and feel. It is therefore important to include it in the design language to encourage interaction with the app. The typefaces used in the app will support the augmented reality look by making objects appear true to life [17]. Sample typefaces are shown below.


Figure 6 Design Typography

3 UNDERSTANDING

The motivation for including AR and VR in the design language is that recent studies show people are increasingly interested in interaction between real life and virtual life. The research firm IDC has estimated that AR and VR could grow to about 70 million units by 2022, at a compound annual growth rate of about 53% [18].
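
The growth figure above is a compound annual growth rate (CAGR). For reference, the standard definition is given below, with V_0 the value in the base year and V_n the value n years later. The four-year horizon in the worked example is an assumption, since this section does not state the base year of the forecast.

```latex
\mathrm{CAGR} = \left(\frac{V_n}{V_0}\right)^{1/n} - 1
\quad\Longleftrightarrow\quad
V_n = V_0\,(1 + \mathrm{CAGR})^{n}

(1 + 0.53)^{4} = 1.53^{4} \approx 5.48
```

In other words, at 53% a year the final-year figure would be roughly 5.5 times the base-year figure over a four-year window.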

3.1 ONLINE RESEARCH

According to IDC research, demand for AR and VR technologies will rise sharply this year compared with 2018. The firm forecasts that worldwide spending on AR and VR technologies, products and services will reach about $30 billion in 2019 [19], roughly a 90% increase over 2018 spending. At this rate, the annual growth rate for AR and VR is expected to reach about 70%, compounded annually over a five-year span [20]. In the contemporary
