ITECH 2201: Cloud Computing - Big Data Workbook Assignment, Week 6

VerifiedAdded on 2021/05/31

|24

|7358

|489

Homework Assignment

AI Summary

This document presents a comprehensive solution to a Week 6 Big Data assignment within the ITECH 2201 Cloud Computing course. The assignment delves into key aspects of data science, including its definition, applications, and the impact of big data. It explores the characteristics of big data, such as velocity, variety, volume, veracity, volatility, value, and validity, referencing academic research. The solution also examines big data platforms, including data acquisition, organization, and analysis techniques, supported by video resources. Furthermore, it analyzes Google's data products like PageRank and Spell Checker, highlighting how large-scale data is used effectively. The assignment also discusses the limitations of traditional relational databases (RDBMS) in handling big data and introduces NoSQL databases. The solution incorporates cited articles and videos to support its findings, providing a detailed understanding of big data concepts and their practical implications in cloud computing.

ITECH 2201 Cloud Computing

School of Science, Information Technology & Engineering

Workbook for Week 6 (Big Data)

Please note: All the efforts were taken to ensure the given web links are accessible.

However, if they are broken – please use any appropriate video/article and refer them in

your answer

CRICOS Provider No. 00103D Insert file name here Page 1 of 24

School of Science, Information Technology & Engineering

Workbook for Week 6 (Big Data)

Please note: All the efforts were taken to ensure the given web links are accessible.

However, if they are broken – please use any appropriate video/article and refer them in

your answer

CRICOS Provider No. 00103D Insert file name here Page 1 of 24

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Part A

Exercise 1: Data Science

Read the article at http://datascience.berkeley.edu/about/what-is-data-science/ and answer the following:

What is Data Science?

Data science basically a topic that refers to the significance and organization of data avalanche creation in

recent years. It allows the identification of patterns and patterns of data, and allows people with advanced

scholarships to improve the conditions in which humanity creates social plus business value. The

appearance of the "bid data" also enables us to comprehend these phenomena more deeply, ranging from

biological systems and economic behavior 1 to human social entities.

According to IBM estimation, what is the percent of the data in the world today that has been created

in the past two years?

It is measured or estimated that ninety percent of the world's data in last two years has been completed by

IBM.

What is the value of petabytestorage?

Million gigabytes also written as (10 to 15th power) is peta-byte.

For each course, both foundation and advanced, you find at

http://datascience.berkeley.edu/academics/curriculum/briefly state (in 2 to 3 lines) what they offer?

Based on the given course description as well as from the video. The purpose of this question is to

understand the different streams available in Data Science.

Foundation course:

Foundation course or basic curriculum is n essential skills and knowledge that students provide in the

data science. It includes storing, searching, designing, and analysing of research work in data science

provide students with data visualization and practical application knowledge (Khan, Fahim Uddin &

Gupta, 2014).

Advanced course:

Advanced course plays an important role in deep understanding and value and application of the data

science. Analytical method comprises complex skills that address big data-related issues through

CRICOS Provider No. 00103D Insert file name here Page 2 of 24

Exercise 1: Data Science

Read the article at http://datascience.berkeley.edu/about/what-is-data-science/ and answer the following:

What is Data Science?

Data science basically a topic that refers to the significance and organization of data avalanche creation in

recent years. It allows the identification of patterns and patterns of data, and allows people with advanced

scholarships to improve the conditions in which humanity creates social plus business value. The

appearance of the "bid data" also enables us to comprehend these phenomena more deeply, ranging from

biological systems and economic behavior 1 to human social entities.

According to IBM estimation, what is the percent of the data in the world today that has been created

in the past two years?

It is measured or estimated that ninety percent of the world's data in last two years has been completed by

IBM.

What is the value of petabytestorage?

Million gigabytes also written as (10 to 15th power) is peta-byte.

For each course, both foundation and advanced, you find at

http://datascience.berkeley.edu/academics/curriculum/briefly state (in 2 to 3 lines) what they offer?

Based on the given course description as well as from the video. The purpose of this question is to

understand the different streams available in Data Science.

Foundation course:

Foundation course or basic curriculum is n essential skills and knowledge that students provide in the

data science. It includes storing, searching, designing, and analysing of research work in data science

provide students with data visualization and practical application knowledge (Khan, Fahim Uddin &

Gupta, 2014).

Advanced course:

Advanced course plays an important role in deep understanding and value and application of the data

science. Analytical method comprises complex skills that address big data-related issues through

CRICOS Provider No. 00103D Insert file name here Page 2 of 24

experimental design and data visualization to help students explore and make them aware of the exact

usage of data science.

Exercise 2: Characteristics of Big Data

Read the following research paper from IEEE Xplore Digital Library

Ali-ud-din Khan, M.; Uddin, M.F.; Gupta, N., "Seven V's of Big Data understanding Big Data to

extract value," American Society for Engineering Education (ASEE Zone 1), 2014 Zone 1 Conference

of the , pp.1,5, 3-5 April 2014 and answer the following questions: Summarise the motivation of the

author (in one paragraph)

As the author has described, it comes from the fact that BD is emphasized because it now become important

part of life and also hides solutions to any industry problem. The main reason for this paper is that they

think big data is the main area of technology. In addition, it is written for "BD Ocean". As we all know,

billions of statistics are generated every day, making big data as a style.

What are the 7 v’s mentioned in the paper? Briefly describe each V in one paragraph.

1) Velocity: Velocity is discussed from two perspectives. Basic thing is incoming of data that enterprise

needs to prepare the technology plus database engine processes. The other is to move big data to a large

storage area that needs a quick response when the data arrives.

2) Variety: It includes diverse shapes, such as video, text, which is a main difference between big data as

well as traditional data. The challenging part is due to complexity that can lead to erroneous data

integration.

3) Volume: Volume means size of information or data created from some sources including audio, text,

video, research reports, spatial images, social networks, weather forecasts, crime reports to mention.

4) Veracity: Compared with traditional data, it focuses on the reliability of data because it can be

standardized. These big data come directly from users. The reliability of these users is low. Therefore,

cleaning up data is an important step for big data.

5) Volatility: When considering big data, volatility means data retention strategy. This is easily executed

in a relational database furthermore can expand the type, speed, and amount of data in the big data

world.

6) Value: Value is a significant V value because it is an ideal result of big data analysis and is also the

result of previous analysis.

7) Validity: Validity means accuracy of the data and correct usage and data is real and does not want to be

effective in dissimilar situations (Corea, 2016).

Explore the author’s future work by using the reference [4] in the research paper. Summarise

your understanding how Big Data can improve the healthcare sector in 300 words.

As it has been stated that the cost or ownership and management data will be exceeded. The governance

mechanism depends to a large extent on the value of the data. For structures and strategies, it is required to

CRICOS Provider No. 00103D Insert file name here Page 3 of 24

usage of data science.

Exercise 2: Characteristics of Big Data

Read the following research paper from IEEE Xplore Digital Library

Ali-ud-din Khan, M.; Uddin, M.F.; Gupta, N., "Seven V's of Big Data understanding Big Data to

extract value," American Society for Engineering Education (ASEE Zone 1), 2014 Zone 1 Conference

of the , pp.1,5, 3-5 April 2014 and answer the following questions: Summarise the motivation of the

author (in one paragraph)

As the author has described, it comes from the fact that BD is emphasized because it now become important

part of life and also hides solutions to any industry problem. The main reason for this paper is that they

think big data is the main area of technology. In addition, it is written for "BD Ocean". As we all know,

billions of statistics are generated every day, making big data as a style.

What are the 7 v’s mentioned in the paper? Briefly describe each V in one paragraph.

1) Velocity: Velocity is discussed from two perspectives. Basic thing is incoming of data that enterprise

needs to prepare the technology plus database engine processes. The other is to move big data to a large

storage area that needs a quick response when the data arrives.

2) Variety: It includes diverse shapes, such as video, text, which is a main difference between big data as

well as traditional data. The challenging part is due to complexity that can lead to erroneous data

integration.

3) Volume: Volume means size of information or data created from some sources including audio, text,

video, research reports, spatial images, social networks, weather forecasts, crime reports to mention.

4) Veracity: Compared with traditional data, it focuses on the reliability of data because it can be

standardized. These big data come directly from users. The reliability of these users is low. Therefore,

cleaning up data is an important step for big data.

5) Volatility: When considering big data, volatility means data retention strategy. This is easily executed

in a relational database furthermore can expand the type, speed, and amount of data in the big data

world.

6) Value: Value is a significant V value because it is an ideal result of big data analysis and is also the

result of previous analysis.

7) Validity: Validity means accuracy of the data and correct usage and data is real and does not want to be

effective in dissimilar situations (Corea, 2016).

Explore the author’s future work by using the reference [4] in the research paper. Summarise

your understanding how Big Data can improve the healthcare sector in 300 words.

As it has been stated that the cost or ownership and management data will be exceeded. The governance

mechanism depends to a large extent on the value of the data. For structures and strategies, it is required to

CRICOS Provider No. 00103D Insert file name here Page 3 of 24

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

write and execute truth limit of project information extraction simultaneously. Data can be between layers,

in short, there is less risk of data at higher levels. Therefore, it is recognized that there are higher storage

costs and higher levels of protection to ensure these levels are related to costs. Advent of digitalized

technology has provided many benefits for healthcare suppliers. One of the key advances is the utilizes of

big information in medical business. Utilizing big data may help medical industry participants provides

more effective operations moreover insight into patient as well as their well being. Healthcare business

faces a variety of challenges, from a new disorder outbreak to maintain optimal operational efficiencies.

The Big data analytic may also help solve these health care challenges. Utilizing a large amount of

information in healthcare industry, such as clinical, financial, development and research, operational data,

and management, The Big Data may gain meaningful insight and improve operational effectiveness of the

business. “Healthcare companies can lower medical costs and provide better services Finding ways to treat

diseases: Some drugs seem to works for several peoples, however not other, furthermore there are the

various things to observe in single genome. It is impossible to learn all of these learning’s in detail,

however big data may help reveal unknown correlation, hidden pattern, and insight also by examine huge

amounts of information. In future, it can be used to create special drugs for the patient's human genome to

obtain the best therapeutic effect. Combining all patients' electronic health records, dietary information,

social factors, etc. with DNA sequencing can recommend customized treatment and personalized medicine.

Aurora Health Care has begun a proof of concept for this, and they have been able to reduce the

readmission rate by 10% and save $6 million annually (Abouelmehdi, Beni-Hessane & Khaloufi, 2018).

Exercise 3: Big Data Platform

In order to build a big data platform - one has to acquire, organize and analyse the big data. Go through the

following links and answer the questions that follow the links: Check the videos and change the wordings

− http://www.infochimps.com/infochimps-cloud/how-it-works/

− http://www.youtube.com/watch?v=TfuhuA_uaho

− http://www.youtube.com/watch?v=IC6jVRO2Hq4

− http://www.youtube.com/watch?v=2yf_jrBhz5w

Please note: You are encouraged to watch all the videos in the series from Oracle.

How to acquire big data for enterprises and how it can be used?

From the video mentioned as well as Oracle's article the main change to infrastructure are the procurement

phase. These 2 major use cases must be consider. First, for the social media update, forum comment and

blogs, companies can simply remove analysis of overnight or weekly trends. Want to update, study, also

store information for online profile moreover continue to monitor sensor. In case, the NoSQL database may

be use to store a big data but it is extensible and flexible. Even the Hadoop distributed system files may be

use for batch information. In this method, the system aims to capture all information by not parsing data and

categorizing it in fixed mode. As a result, data can be easily accessed through simple keys and customer-

based applications.

CRICOS Provider No. 00103D Insert file name here Page 4 of 24

in short, there is less risk of data at higher levels. Therefore, it is recognized that there are higher storage

costs and higher levels of protection to ensure these levels are related to costs. Advent of digitalized

technology has provided many benefits for healthcare suppliers. One of the key advances is the utilizes of

big information in medical business. Utilizing big data may help medical industry participants provides

more effective operations moreover insight into patient as well as their well being. Healthcare business

faces a variety of challenges, from a new disorder outbreak to maintain optimal operational efficiencies.

The Big data analytic may also help solve these health care challenges. Utilizing a large amount of

information in healthcare industry, such as clinical, financial, development and research, operational data,

and management, The Big Data may gain meaningful insight and improve operational effectiveness of the

business. “Healthcare companies can lower medical costs and provide better services Finding ways to treat

diseases: Some drugs seem to works for several peoples, however not other, furthermore there are the

various things to observe in single genome. It is impossible to learn all of these learning’s in detail,

however big data may help reveal unknown correlation, hidden pattern, and insight also by examine huge

amounts of information. In future, it can be used to create special drugs for the patient's human genome to

obtain the best therapeutic effect. Combining all patients' electronic health records, dietary information,

social factors, etc. with DNA sequencing can recommend customized treatment and personalized medicine.

Aurora Health Care has begun a proof of concept for this, and they have been able to reduce the

readmission rate by 10% and save $6 million annually (Abouelmehdi, Beni-Hessane & Khaloufi, 2018).

Exercise 3: Big Data Platform

In order to build a big data platform - one has to acquire, organize and analyse the big data. Go through the

following links and answer the questions that follow the links: Check the videos and change the wordings

− http://www.infochimps.com/infochimps-cloud/how-it-works/

− http://www.youtube.com/watch?v=TfuhuA_uaho

− http://www.youtube.com/watch?v=IC6jVRO2Hq4

− http://www.youtube.com/watch?v=2yf_jrBhz5w

Please note: You are encouraged to watch all the videos in the series from Oracle.

How to acquire big data for enterprises and how it can be used?

From the video mentioned as well as Oracle's article the main change to infrastructure are the procurement

phase. These 2 major use cases must be consider. First, for the social media update, forum comment and

blogs, companies can simply remove analysis of overnight or weekly trends. Want to update, study, also

store information for online profile moreover continue to monitor sensor. In case, the NoSQL database may

be use to store a big data but it is extensible and flexible. Even the Hadoop distributed system files may be

use for batch information. In this method, the system aims to capture all information by not parsing data and

categorizing it in fixed mode. As a result, data can be easily accessed through simple keys and customer-

based applications.

CRICOS Provider No. 00103D Insert file name here Page 4 of 24

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

How to organize and handle the big data?

Stored data in the HDFS want to be a pre-processed, well organized, and converted so that it may be loaded

into information warehouse using traditional enterprise data and data store in NoSQL. It moreover knows

that BD is always in different formats. Procedure called sessions are for specific information. This

procedure translates behaviour patterns and other related information into useful data so after that it may be

aggregated as well as loaded into the relational database systems.

What are the analyses that can be done using big data?

Big data analysis is complete in distributed surroundings because big data analysed in some deeper

analysis, i.e. due to the required infrastructure, data mining and statistical analysis of various systems for

storing various data. Zooming can be done on large amounts of data. Analytical models can make better

decisions automatically. Finally, the response time driven in changing behaviour can be delivered faster

(Jee & Kim, 2013).

CRICOS Provider No. 00103D Insert file name here Page 5 of 24

Stored data in the HDFS want to be a pre-processed, well organized, and converted so that it may be loaded

into information warehouse using traditional enterprise data and data store in NoSQL. It moreover knows

that BD is always in different formats. Procedure called sessions are for specific information. This

procedure translates behaviour patterns and other related information into useful data so after that it may be

aggregated as well as loaded into the relational database systems.

What are the analyses that can be done using big data?

Big data analysis is complete in distributed surroundings because big data analysed in some deeper

analysis, i.e. due to the required infrastructure, data mining and statistical analysis of various systems for

storing various data. Zooming can be done on large amounts of data. Analytical models can make better

decisions automatically. Finally, the response time driven in changing behaviour can be delivered faster

(Jee & Kim, 2013).

CRICOS Provider No. 00103D Insert file name here Page 5 of 24

Part B (4 Marks)

Part B answers should be based on well cited article/videos – name the references used in your answer.For

more information read the guidelines as given in Assignment 1.

Exercise 4: Big Data Products (1 mark)

Google is a master at creating data products. Below are few examples from Google. Describe the below

products and explain how the large scale data is used effectively in these products.

a. Google’s PageRank

In 2005, the Google began to link Google's webmasters and blogs as "votes," a new attribute called a

link to unfollow, which is a countermeasure against spam. The hyperlink page correspond to a single

page vote, and a voting page is obtained through the significance of all linked pages. If there is no

linked page, the page may have greater number of relations or no hierarchy.

b. Google’s Spell Checker

This spell checker are used to spell words. It is a standalone application. It is called electronic dictionaries,

search engine, word processor furthermore email customers. This spellchecker are used to separate words

when comparing during stem analysis.

c. Google’s Flu Trends

This trend of Google Flu are the web services operated by the Google that provide estimate of influenza

activities in 25 and more than that countries. It estimates available historical information and present

research information for download (Kościelniak & Puto, 2015).

d. Google’s Trends

Google Trends are Google search-based web-based tool. When search terms are entered in different

languages for search in different regions of the world, they are usually displayed as search terms.

Like Google – Facebook and LinkedIn also uses large scale data effectively. How?

This is well-known facts, that generates a huge amount of information in website, because they are the

social platform, moreover all of these information’s should be recognized against the user's behaviour

pattern to get the recommendation. Such as, Face book are use for various activities that provide suggestion

that the users need to purchase or attends that is likely to be post on the page with explore criteria.

Exercise 5: Big Data Tools

Briefly explain why a traditional relational database (RDBS) is not effectively used to store big data?

CRICOS Provider No. 00103D Insert file name here Page 6 of 24

Part B answers should be based on well cited article/videos – name the references used in your answer.For

more information read the guidelines as given in Assignment 1.

Exercise 4: Big Data Products (1 mark)

Google is a master at creating data products. Below are few examples from Google. Describe the below

products and explain how the large scale data is used effectively in these products.

a. Google’s PageRank

In 2005, the Google began to link Google's webmasters and blogs as "votes," a new attribute called a

link to unfollow, which is a countermeasure against spam. The hyperlink page correspond to a single

page vote, and a voting page is obtained through the significance of all linked pages. If there is no

linked page, the page may have greater number of relations or no hierarchy.

b. Google’s Spell Checker

This spell checker are used to spell words. It is a standalone application. It is called electronic dictionaries,

search engine, word processor furthermore email customers. This spellchecker are used to separate words

when comparing during stem analysis.

c. Google’s Flu Trends

This trend of Google Flu are the web services operated by the Google that provide estimate of influenza

activities in 25 and more than that countries. It estimates available historical information and present

research information for download (Kościelniak & Puto, 2015).

d. Google’s Trends

Google Trends are Google search-based web-based tool. When search terms are entered in different

languages for search in different regions of the world, they are usually displayed as search terms.

Like Google – Facebook and LinkedIn also uses large scale data effectively. How?

This is well-known facts, that generates a huge amount of information in website, because they are the

social platform, moreover all of these information’s should be recognized against the user's behaviour

pattern to get the recommendation. Such as, Face book are use for various activities that provide suggestion

that the users need to purchase or attends that is likely to be post on the page with explore criteria.

Exercise 5: Big Data Tools

Briefly explain why a traditional relational database (RDBS) is not effectively used to store big data?

CRICOS Provider No. 00103D Insert file name here Page 6 of 24

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

According to a XYZ there is the 3 major reason why RDBS are not efficiently use to store a big data.

Initially, size of data drastically increased within the PB level, and the ability to process such a large amount

of RDBS data was a tedious task. Most of the RDBS data is unstructured or semi-structured. This

information frequently comes from a social media, texts, videos, emails. The Unstructured information is

beyond the scope of the RDBS because relational database cannot identified in unstructured information.

The RDBS are design for structured data or financial data for blog sensors. The high speed of big data is

another reason for information retention instead off rapid development (Hoskins, 2014).

What is NoSQL Database?

The NoSQL database are defined as "a basically distributed system and a non-relational database that also

enables quick ad hoc organizations to analyse extremely high volumes and different data type. It also

known as a cloud database, a big data database because it has a huge number of Data generation, storage,

etc. Another name are non-relational database.

Name and briefly describe at least 5 NoSQL Databases

Cassandra: Face book originally developed Cassandra, as well as then developed Apache open sources

projects, which are ideal for social networking CC databases. This is a non-relational database that is used

in conjunction with Google's Big Table.

Lucene: This is one of the ASF 4 Jakarta Task Forces in Jakarta. It is an open source tool for

subprojects or full-text search engine toolkits. It is not a full-text search engine, but a full-text search

engine architecture.

Oracle's NoSQL database: The big data machine are NoSQL database, integrated Hadoop, , an R system

language, a Hadoop loader, and an Oracle database and Hadoop adapter. It released Oracle OpenWorld as a

big data appliances on 4th October.

HBase: Called the Hadoop database, it provides high-performances, column-oriented, highly reliable

furthermore scalable storage systems that are distributed through HBase technologies. The stored structure

is cluster type of the computer server. The Google BigTable start source implementations are done on

HBase because it is the same as the BigTable's files storage systems.

BigTable are non-relational database: they contains a multidimensional classification map for a storage, it

is sparse, persistent as well as distributed. Because PB-level information processing are done on several

machines, it is very reliable (Bughin, 2016).

What is MapReduce and how it works?

MapReduce are used for a parallel computing of several data sets because it is a model of programming. It

helps programmers run on distributed systems just like parallel programming. Map functions are performed

for a set of key-value pair to map the new set of pairs specify on the concurrent reduction functions to make

sure that each shared key are mapped to a similar set of keys for current software.

Briefly describe some notable MapReduce products (at least 5)

Couchdb: This is an Apache open source database software that focuses on how to use and build a scalable

architecture.

CRICOS Provider No. 00103D Insert file name here Page 7 of 24

Initially, size of data drastically increased within the PB level, and the ability to process such a large amount

of RDBS data was a tedious task. Most of the RDBS data is unstructured or semi-structured. This

information frequently comes from a social media, texts, videos, emails. The Unstructured information is

beyond the scope of the RDBS because relational database cannot identified in unstructured information.

The RDBS are design for structured data or financial data for blog sensors. The high speed of big data is

another reason for information retention instead off rapid development (Hoskins, 2014).

What is NoSQL Database?

The NoSQL database are defined as "a basically distributed system and a non-relational database that also

enables quick ad hoc organizations to analyse extremely high volumes and different data type. It also

known as a cloud database, a big data database because it has a huge number of Data generation, storage,

etc. Another name are non-relational database.

Name and briefly describe at least 5 NoSQL Databases

Cassandra: Face book originally developed Cassandra, as well as then developed Apache open sources

projects, which are ideal for social networking CC databases. This is a non-relational database that is used

in conjunction with Google's Big Table.

Lucene: This is one of the ASF 4 Jakarta Task Forces in Jakarta. It is an open source tool for

subprojects or full-text search engine toolkits. It is not a full-text search engine, but a full-text search

engine architecture.

Oracle's NoSQL database: The big data machine are NoSQL database, integrated Hadoop, , an R system

language, a Hadoop loader, and an Oracle database and Hadoop adapter. It released Oracle OpenWorld as a

big data appliances on 4th October.

HBase: Called the Hadoop database, it provides high-performances, column-oriented, highly reliable

furthermore scalable storage systems that are distributed through HBase technologies. The stored structure

is cluster type of the computer server. The Google BigTable start source implementations are done on

HBase because it is the same as the BigTable's files storage systems.

BigTable are non-relational database: they contains a multidimensional classification map for a storage, it

is sparse, persistent as well as distributed. Because PB-level information processing are done on several

machines, it is very reliable (Bughin, 2016).

What is MapReduce and how it works?

MapReduce are used for a parallel computing of several data sets because it is a model of programming. It

helps programmers run on distributed systems just like parallel programming. Map functions are performed

for a set of key-value pair to map the new set of pairs specify on the concurrent reduction functions to make

sure that each shared key are mapped to a similar set of keys for current software.

Briefly describe some notable MapReduce products (at least 5)

Couchdb: This is an Apache open source database software that focuses on how to use and build a scalable

architecture.

CRICOS Provider No. 00103D Insert file name here Page 7 of 24

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Apache Hadoop: It is big data open source software for MapReduce programming framework, it is scalable

cloud computing.

Disco Project: This is a lightweight distributed computing system and an open source framework.

Riak: This is a scalable, easy-to-use, easy-to-use NoSQL database that is also distributed.

Infinispan: Software developed by Red Hat for key NoSQL and distributed cache data storage (Vis, 2013).

Amazon’s S3 service lets to store large chunks of data on an online service. List some 5 features for

Amazon’s S3 service.

Amazon's S3 service has the following features, as described below:

Version Control: It allows each object in the bucket to save, retrieve, and retrieve each version. It is used to

improve the dependability of storage as well as recover deleted or overwritten objects.

Life cycle: Objects using the life cycle will be automatically deleted and marked as a glacier storage at a

specific time.

This tag marks the cost allocation like AWS billing aspect to easily track AWS costs and organize bucket tags.

Request pricing refers to the behaviour of using a store and accessing objects in a folder to get a list of files for

all actions that AWS charges. Price is an important factor to consider when dealing with a large number of

documents.

RRS is reduced, and redundant storage can be enabled and disabled in the storage to decrease the cost of

reproducible data in a non-critical manner.

Getting the concise, valuable information from a sea of data can be challenging. We need statistical

analysis tool to deal with Big Data. Name and describe some (at least 3) statistical analysis tools.

Some statistical analysis tools are:

[R]: R is a programming language for navigating the command line interface. It also uses circuits to

perform R functions in a complex computer science environment, making it accurate and able to learn

faster. R can run on various operating systems.

EXCEL spreadsheet: It is one of the Microsoft Office products and it is a powerful software. These tables

and charts are easy to operate and manage. It is also used for data analysis and statistical analysis, which is

due to the deficiencies caused by the slow operation.

SPSS Statistics: SPSS Statistics does not require extensive programming knowledge. In addition to the

syntax editor, there is a point-and-click graphical interface. It is an IBM statistical tool for analysis. It has

some control over the statistical output.

Exercise 6: Big Data Application (1 mark)

Name 3 industries that should use Big Data – justify your claim in 250 words for each industry using

proper references.

Financial industry: From the perspective of existing customers, use investment characteristics, asset

management, banking services, product financial strategies, etc. to formulate customer demographic

segmentation and data analysis of insurance demographics to provide one-stop financial customer solutions.

Get the most value. It is used to manage duplicate transactions in the workflow. Blockchains are used to

improve big data security, consistent compliance archiving and Blockchain analysis.

CRICOS Provider No. 00103D Insert file name here Page 8 of 24

cloud computing.

Disco Project: This is a lightweight distributed computing system and an open source framework.

Riak: This is a scalable, easy-to-use, easy-to-use NoSQL database that is also distributed.

Infinispan: Software developed by Red Hat for key NoSQL and distributed cache data storage (Vis, 2013).

Amazon’s S3 service lets to store large chunks of data on an online service. List some 5 features for

Amazon’s S3 service.

Amazon's S3 service has the following features, as described below:

Version Control: It allows each object in the bucket to save, retrieve, and retrieve each version. It is used to

improve the dependability of storage as well as recover deleted or overwritten objects.

Life cycle: Objects using the life cycle will be automatically deleted and marked as a glacier storage at a

specific time.

This tag marks the cost allocation like AWS billing aspect to easily track AWS costs and organize bucket tags.

Request pricing refers to the behaviour of using a store and accessing objects in a folder to get a list of files for

all actions that AWS charges. Price is an important factor to consider when dealing with a large number of

documents.

RRS is reduced, and redundant storage can be enabled and disabled in the storage to decrease the cost of

reproducible data in a non-critical manner.

Getting the concise, valuable information from a sea of data can be challenging. We need statistical

analysis tool to deal with Big Data. Name and describe some (at least 3) statistical analysis tools.

Some statistical analysis tools are:

[R]: R is a programming language for navigating the command line interface. It also uses circuits to

perform R functions in a complex computer science environment, making it accurate and able to learn

faster. R can run on various operating systems.

EXCEL spreadsheet: It is one of the Microsoft Office products and it is a powerful software. These tables

and charts are easy to operate and manage. It is also used for data analysis and statistical analysis, which is

due to the deficiencies caused by the slow operation.

SPSS Statistics: SPSS Statistics does not require extensive programming knowledge. In addition to the

syntax editor, there is a point-and-click graphical interface. It is an IBM statistical tool for analysis. It has

some control over the statistical output.

Exercise 6: Big Data Application (1 mark)

Name 3 industries that should use Big Data – justify your claim in 250 words for each industry using

proper references.

Financial industry: From the perspective of existing customers, use investment characteristics, asset

management, banking services, product financial strategies, etc. to formulate customer demographic

segmentation and data analysis of insurance demographics to provide one-stop financial customer solutions.

Get the most value. It is used to manage duplicate transactions in the workflow. Blockchains are used to

improve big data security, consistent compliance archiving and Blockchain analysis.

CRICOS Provider No. 00103D Insert file name here Page 8 of 24

Insurance: This is one of the industries that need services and can reduce the time to process complex

claims within 10 minutes. It also needs to eliminate millions of dollars in leaks and fraud. It is also a

customer-centric profitable company. Another important use is to set office premiums because they set the

profit price of premiums by covering risk to suit the customer's budget. This industry is also based on the

principle of risk.

Retail industry: This is a data-driven cognitive technology used to increase the customer experience. It is

also used to analyze social media data to improve product design and marketing to provide quality services.

Big data analytics in the retail process can predict demand products, identify interested customers and

research best forecasting trends, and optimize pricing to deal with the competitive advantage of the products

to be sold.

From your lecture and also based on the below given video link:

https://www.youtube.com/watch?v=_sXkTSiAe-A

Write a paragraph about memory virtualization.

Memory virtualization separates volatile random access memory (RAM) resources from

individual systems in the data center and then aggregates these resources into any computer-

available virtualized memory pool in the cluster. Operating system or application running on the

operating system. The distributed memory pool can then be used as a cache for CPU or GPU

applications, messaging layers, or large shared memory resources. Memory virtualization allows

networked servers and distributed servers to share memory pools to overcome physical memory

limitations, a common bottleneck for software performance. By integrating this functionality

into the network, applications can use a large amount of memory to improve overall

performance, system utilization, improve memory usage efficiency, and enable new use cases.

The software on the memory pool node (server) allows nodes to connect to memory pools to

contribute memory and store and retrieve data.

Watch the below mentioned YouTube link:

https://www.youtube.com/watch?v=wTcxRObq738

Based on the video answer the following questions:

What is RAID 0?

RAID 0, are also known as the disk striping, are the technique for decomposing files and

distributing data across the all disk drive in the RAID group. One disadvantage of a RAID 0 are

that it has no parity. If the drive fails, there will be no redundancy as well as all data will be

misplaced.

Describe Striping, Mirroring and Parity.

CRICOS Provider No. 00103D Insert file name here Page 9 of 24

claims within 10 minutes. It also needs to eliminate millions of dollars in leaks and fraud. It is also a

customer-centric profitable company. Another important use is to set office premiums because they set the

profit price of premiums by covering risk to suit the customer's budget. This industry is also based on the

principle of risk.

Retail industry: This is a data-driven cognitive technology used to increase the customer experience. It is

also used to analyze social media data to improve product design and marketing to provide quality services.

Big data analytics in the retail process can predict demand products, identify interested customers and

research best forecasting trends, and optimize pricing to deal with the competitive advantage of the products

to be sold.

From your lecture and also based on the below given video link:

https://www.youtube.com/watch?v=_sXkTSiAe-A

Write a paragraph about memory virtualization.

Memory virtualization separates volatile random access memory (RAM) resources from

individual systems in the data center and then aggregates these resources into any computer-

available virtualized memory pool in the cluster. Operating system or application running on the

operating system. The distributed memory pool can then be used as a cache for CPU or GPU

applications, messaging layers, or large shared memory resources. Memory virtualization allows

networked servers and distributed servers to share memory pools to overcome physical memory

limitations, a common bottleneck for software performance. By integrating this functionality

into the network, applications can use a large amount of memory to improve overall

performance, system utilization, improve memory usage efficiency, and enable new use cases.

The software on the memory pool node (server) allows nodes to connect to memory pools to

contribute memory and store and retrieve data.

Watch the below mentioned YouTube link:

https://www.youtube.com/watch?v=wTcxRObq738

Based on the video answer the following questions:

What is RAID 0?

RAID 0, are also known as the disk striping, are the technique for decomposing files and

distributing data across the all disk drive in the RAID group. One disadvantage of a RAID 0 are

that it has no parity. If the drive fails, there will be no redundancy as well as all data will be

misplaced.

Describe Striping, Mirroring and Parity.

CRICOS Provider No. 00103D Insert file name here Page 9 of 24

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

Striping are the very confuse RAID level for beginners and requirements to be well understood

and explained. RAIDS are collection of various disks also define the number of consecutively

addressable disk block in these disks. These disk blocks are called As a set of strips and these

strips, multiple disks are called strips.

Mirroring is easy to understand and one of the main reliable information protection methods. In

this method, you only need to make a copy of the disk image you want to protect, and in this

way get two copies of the data.

Parity: Mirroring involves high costs, so to protect data, the new technology uses a strip called

parity. This is a reliable and low-cost data protection solution. In this method, extra HDDs or

disks are added to the stripe width to save the parity bits.

Exercise 2: Storage Design (2 marks)

Summarize storage repository design based on the following video link:

https://www.youtube.com/watch?v=eVQH7C3nulY

The repositories are essentially logical disk space provided by file systems on the top of the

physical storage space hardware. If repositories are created on file servers, such as NFS share,

the file system exists already; if repositories are created on LUN, OCFS2 system file are first

created. Before you begin the configuration, you must reach an NFS-based repository and a

LUN-based repository.

Below YouTube link describes the Intelligent Storage System

https://www.youtube.com/watch?v=raTIRsMi7zk

Based on the watched video answer the following questions:

What is ISS?

SSS is the element-rich RAID arrays that will provides a highly optimize I / O processing’s

capabilities. It also provides plenty of caching and different I/O methods to improve

performance. The ISS operating environment also provides brilliant cache organization, array

asset administration, and connecting heterogeneous host. It supports virtual provisioning, flash

drives as well as automatic storage tiring.

What are the 4 main components of the ISS?

The Video have been always mentioned 4 main mechanism of front end, cache, ISS, physical

disks and back end.

CRICOS Provider No. 00103D Insert file name here Page 10 of 24

and explained. RAIDS are collection of various disks also define the number of consecutively

addressable disk block in these disks. These disk blocks are called As a set of strips and these

strips, multiple disks are called strips.

Mirroring is easy to understand and one of the main reliable information protection methods. In

this method, you only need to make a copy of the disk image you want to protect, and in this

way get two copies of the data.

Parity: Mirroring involves high costs, so to protect data, the new technology uses a strip called

parity. This is a reliable and low-cost data protection solution. In this method, extra HDDs or

disks are added to the stripe width to save the parity bits.

Exercise 2: Storage Design (2 marks)

Summarize storage repository design based on the following video link:

https://www.youtube.com/watch?v=eVQH7C3nulY

The repositories are essentially logical disk space provided by file systems on the top of the

physical storage space hardware. If repositories are created on file servers, such as NFS share,

the file system exists already; if repositories are created on LUN, OCFS2 system file are first

created. Before you begin the configuration, you must reach an NFS-based repository and a

LUN-based repository.

Below YouTube link describes the Intelligent Storage System

https://www.youtube.com/watch?v=raTIRsMi7zk

Based on the watched video answer the following questions:

What is ISS?

SSS is the element-rich RAID arrays that will provides a highly optimize I / O processing’s

capabilities. It also provides plenty of caching and different I/O methods to improve

performance. The ISS operating environment also provides brilliant cache organization, array

asset administration, and connecting heterogeneous host. It supports virtual provisioning, flash

drives as well as automatic storage tiring.

What are the 4 main components of the ISS?

The Video have been always mentioned 4 main mechanism of front end, cache, ISS, physical

disks and back end.

CRICOS Provider No. 00103D Insert file name here Page 10 of 24

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Storage Area Network (SAN) and Network Attached Storage (NAS) are widely used concepts in

data storage arena. The following YouTube video links gives detailed description of these

concepts:

− http://www.youtube.com/watch?v=csdJFazj3h0

− http://www.youtube.com/watch?v=vdf6CvGQZrk

− https://www.youtube.com/watch?v=KxdfGcynfJ0

− https://www.youtube.com/watch?v=4RsLUTJ_Qtk

Based on the watched videos answer the following questions:

Describe NAS and SAN briefly using diagrams?

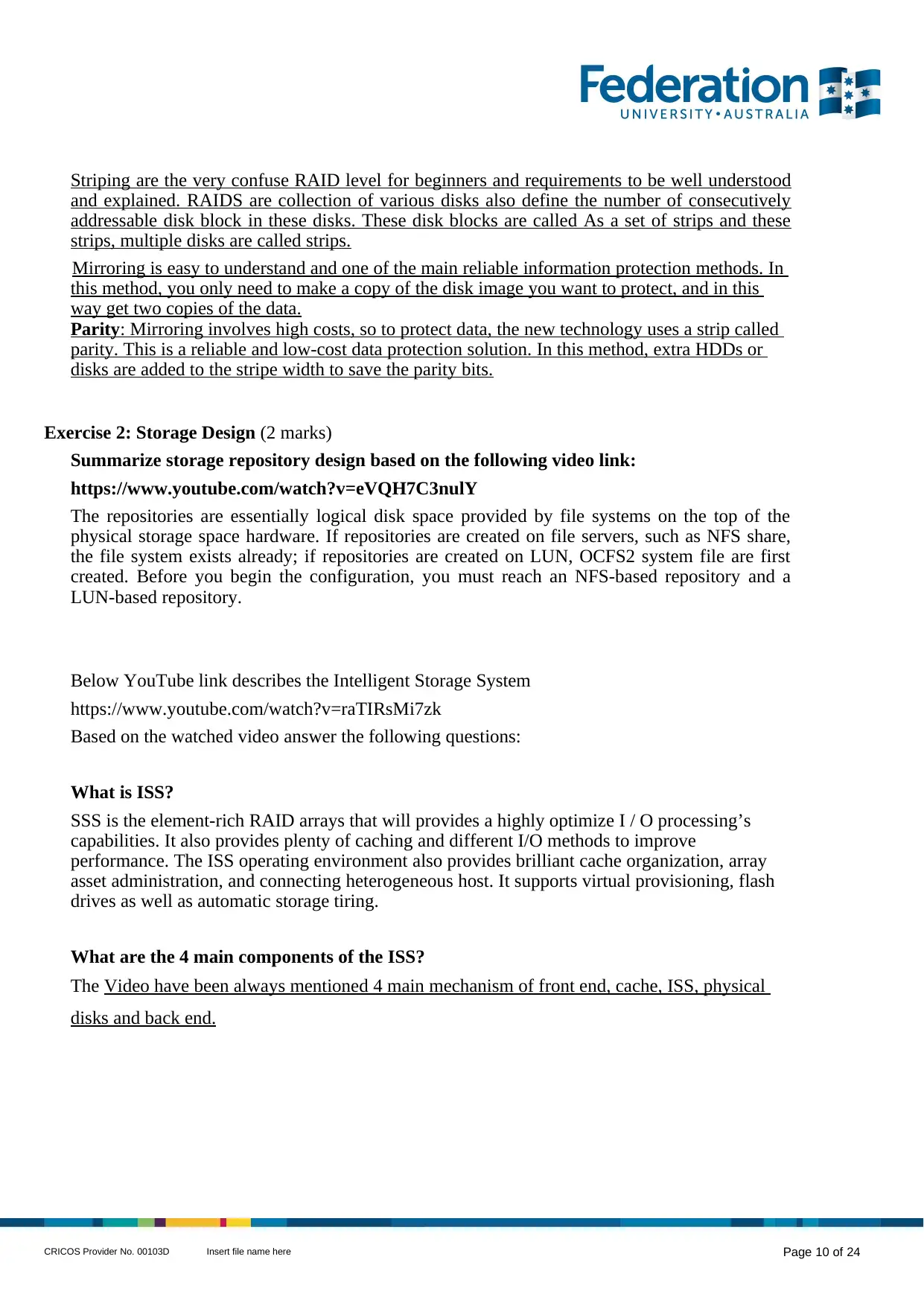

Store Area Network (SAN) is a high performance network, the primary purpose of which is to

communicate with the computer system with storage devices.

CRICOS Provider No. 00103D Insert file name here Page 11 of 24

data storage arena. The following YouTube video links gives detailed description of these

concepts:

− http://www.youtube.com/watch?v=csdJFazj3h0

− http://www.youtube.com/watch?v=vdf6CvGQZrk

− https://www.youtube.com/watch?v=KxdfGcynfJ0

− https://www.youtube.com/watch?v=4RsLUTJ_Qtk

Based on the watched videos answer the following questions:

Describe NAS and SAN briefly using diagrams?

Store Area Network (SAN) is a high performance network, the primary purpose of which is to

communicate with the computer system with storage devices.

CRICOS Provider No. 00103D Insert file name here Page 11 of 24

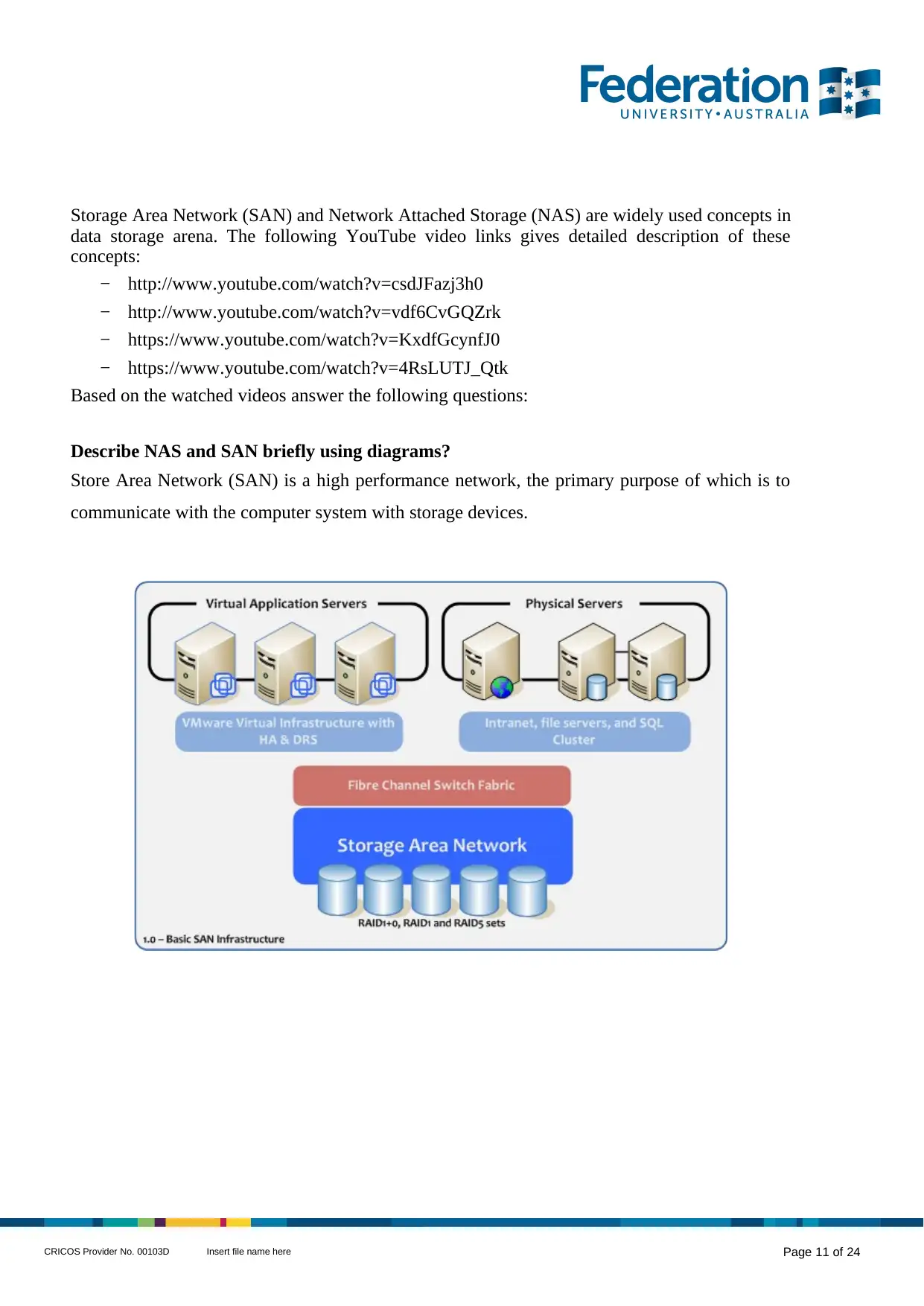

Network Attached Storage (NAS) is a specialized file storage device that provides file-based

shared storage for local area network (LAN) nodes over standard Ethernet connections. (Rouse,

2015)

The SAN organizes storage resources on a separate, high-performance network. The key difference

between NAS and SAN is that network attached storage handles single file input/output (I/O)

requests, while the storage area network manages sequential data block I/O requests.

What are the advantages of SAN over NAS?

The major advantages of the NAS:

Support inclusive access to data

Improve the efficiency

Improve the flexibility

Centralize storage

Simplify administration

Scalability

The major advantages of the SAN:

Good disk uses

SAN for tragedy recovery for various applications

For improve the availability of the application

CRICOS Provider No. 00103D Insert file name here Page 12 of 24

shared storage for local area network (LAN) nodes over standard Ethernet connections. (Rouse,

2015)

The SAN organizes storage resources on a separate, high-performance network. The key difference

between NAS and SAN is that network attached storage handles single file input/output (I/O)

requests, while the storage area network manages sequential data block I/O requests.

What are the advantages of SAN over NAS?

The major advantages of the NAS:

Support inclusive access to data

Improve the efficiency

Improve the flexibility

Centralize storage

Simplify administration

Scalability

The major advantages of the SAN:

Good disk uses

SAN for tragedy recovery for various applications

For improve the availability of the application

CRICOS Provider No. 00103D Insert file name here Page 12 of 24

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

1 out of 24

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2026 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.