Predictive Analytics (BUS5PA) Assignment 1: Model Evaluation

Added on 2020/02/24

Project

AI Summary

This assignment, a project for BUS5PA Predictive Analytics at La Trobe Business School, Melbourne, Australia, focuses on building and evaluating predictive models. The project utilizes the SAS Miner platform to analyze the ORGANICS dataset. It involves setting up the project, exploratory data analysis, and building decision tree and regression models. The analysis includes data partitioning, imputation, and model selection using stepwise regression. The student compares the performance of decision tree models (Tree 1 and Tree 2) and a regression model, evaluating them based on metrics such as average square error, misclassification rate, ROC index, and Kolmogorov-Smirnov statistic. The discussion covers the principles of decision tree induction and logistic regression, highlighting their strengths and weaknesses in different scenarios. The project aims to determine the best-performing model for predicting customer loyalty based on organic product purchases, providing insights into data mining techniques and model evaluation.

Assignment 1 – Building and Evaluating Predictive Models

(BUS5PA Predictive Analytics – Semester 2, 2017)

By

<Student Name>

(18815197)

La Trobe Business School

Melbourne, Australia

Table of Contents

1. Setting up the project and exploratory analysis

2. Decision tree based modeling and analysis

3. Regression based modeling and analysis

4. Open ended discussion

5. Extending current knowledge with additional reading

References

Appendix

List of Figures

Figure 1 Creation of project: BUS5PA_Assignment1_18815197

Figure 2 Creation of Library

Figure 3 Roles of variables

Figure 4 Distribution of Organics purchase indicator

Figure 5 Organics data source

Figure 6 Organics data source in Organics diagram workspace

Figure 7 Addition of Data partition

Figure 8 Data set Allocations

Figure 9 Decision Tree Addition

Figure 10 Interactive method has not been selected

Figure 11 Use average square error as Assessment measure

Figure 12 Subtree Assessment Plot

Figure 13 Decision Tree Model

Figure 14 Decision Tree after adding Tree 2

Figure 15 Three-way Split

Figure 16 Assessment Measure

Figure 17 Average square error for the model with Tree 2

Figure 18 StatExplore tool with ORGANICS data source

Figure 19 Default input method of class and interval variables

Figure 20 Imputation indicators for all imputed inputs

Figure 21 Addition of Regression node

Figure 22 Model Selection

Figure 23 Regression Result

Figure 24 Summary of Stepwise Selection

Figure 25 Odds ratio Estimates

Figure 26 Average squared error (ASE)

Figure 27 Model Comparison Process

Figure 28 Model Comparison Result

Figure 29 ROC Chart

Figure 30 Cumulative Lift

Figure 31 Fit Statistics

List of Tables

Table 1 Model performance comparison

1. Setting up the project and exploratory analysis

a. A project named BUS5PA_Assignment1_18815197 was created (Figure 1).

1) A SAS library named Assign was created, and a data source was created from the SAS dataset ORGANICS (Figure 2 and Figure 5).

2) Variable roles were set as specified in the business case. Figure 3 shows all roles defined for the ORGANICS data source.

3) TargetBuy is defined as the target variable. 24.77% of individuals purchased organic products and the remaining 75.23% did not (Figure 4).

4) As shown in Figure 3, Demcluster has been set to Rejected.

5) A data source named ORGANICS was defined (Figure 5).

6) The ORGANICS data source was added to the Organics diagram workspace (Figure 6).

b. TargetAmt cannot be used as an input to a model that predicts TargetBuy. TargetBuy indicates whether an individual has purchased organic products, whereas TargetAmt records the number of organic products bought. TargetAmt is recorded only for those who purchased organic products, i.e. when TargetBuy is Yes, so its value already reveals the outcome being predicted. Hence, TargetAmt can never be a predictor of TargetBuy. In this business case, management's objective for the initial buyer incentive plan is to develop a loyalty model based on whether customers have purchased any of the organic products, so TargetBuy is the suitable target variable.

2. Decision tree based modeling and analysis

a. A Data Partition node was added to the Organics diagram workspace from the Sample tab and connected to the ORGANICS data source node. 50% of the data was assigned to training and the remaining 50% to validation (Figure 7 and Figure 8).

b. A Decision Tree node was added to the Organics diagram workspace and connected to the Data Partition node (Figure 9).

c. The decision tree was built autonomously rather than interactively, and average square error was chosen as the subtree assessment measure (Figure 10 and Figure 11).

1) Based on average square error, the optimal tree has 29 leaves. The subtree assessment plot is shown in Figure 12.

2) Age is used for the first split, which partitions the training data into two subsets. In the first subset (age less than 44.5), TargetBuy = 1 has a higher than average concentration. In the second subset (age greater than or equal to 44.5), TargetBuy = 0 has a higher than average concentration. The autonomously built decision tree, assessed by average square error, is shown in Figure 13.

d. A second Decision Tree node was added to the diagram and connected to the Data Partition node (Figure 14).

1) The maximum number of branches was set to 3 to allow three-way splits (Figure 15).

2) The decision tree model was again created using average square error as the assessment measure (Figure 16).

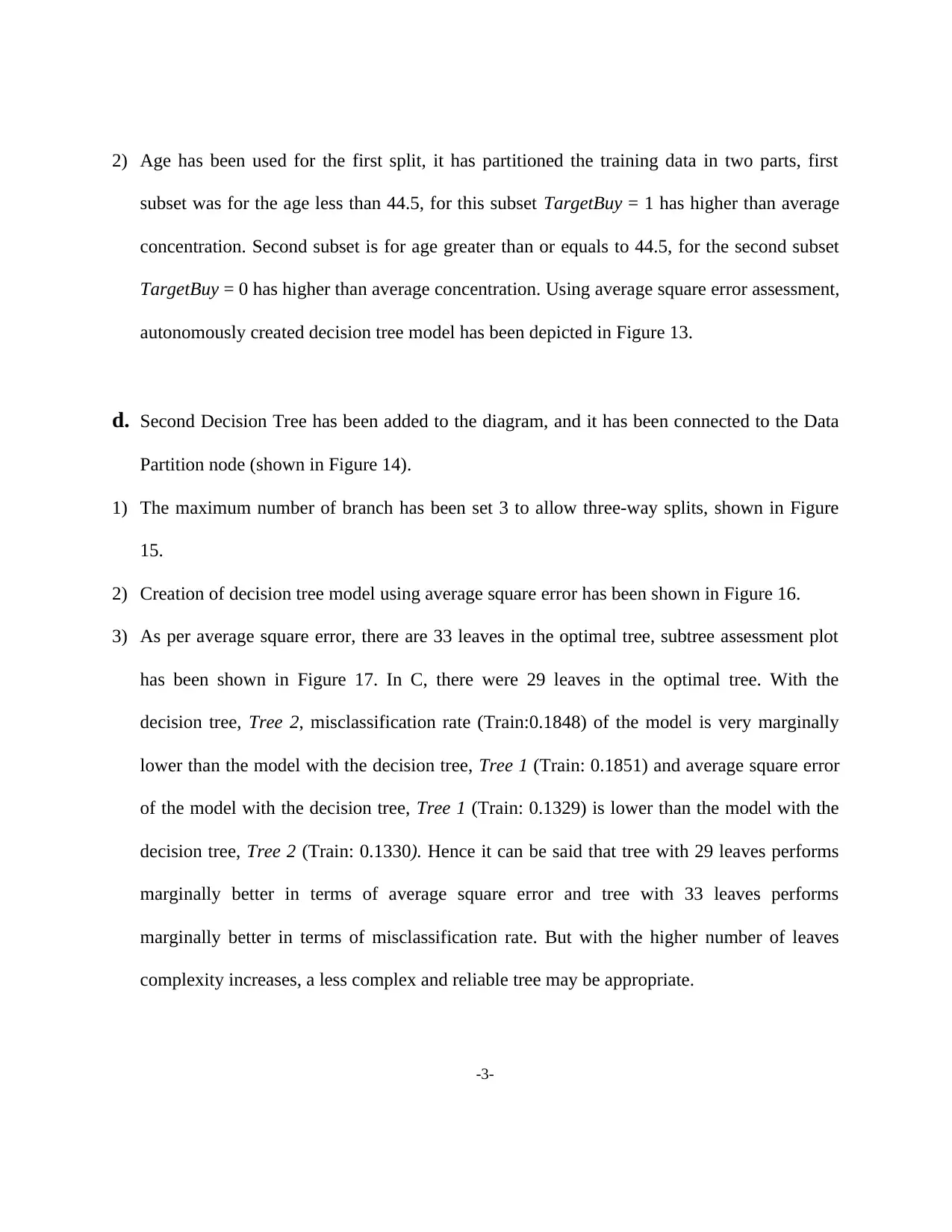

3) Based on average square error, the optimal tree has 33 leaves. The subtree assessment plot is shown in Figure 17. In part c, the optimal tree had 29 leaves. The misclassification rate of Tree 2 (Train: 0.1848) is very marginally lower than that of Tree 1 (Train: 0.1851), while the average square error of Tree 1 (Train: 0.1329) is marginally lower than that of Tree 2 (Train: 0.1330). Hence the tree with 29 leaves performs marginally better on average square error and the tree with 33 leaves performs marginally better on misclassification rate. Because complexity increases with the number of leaves, the less complex tree may be the more appropriate and reliable choice.

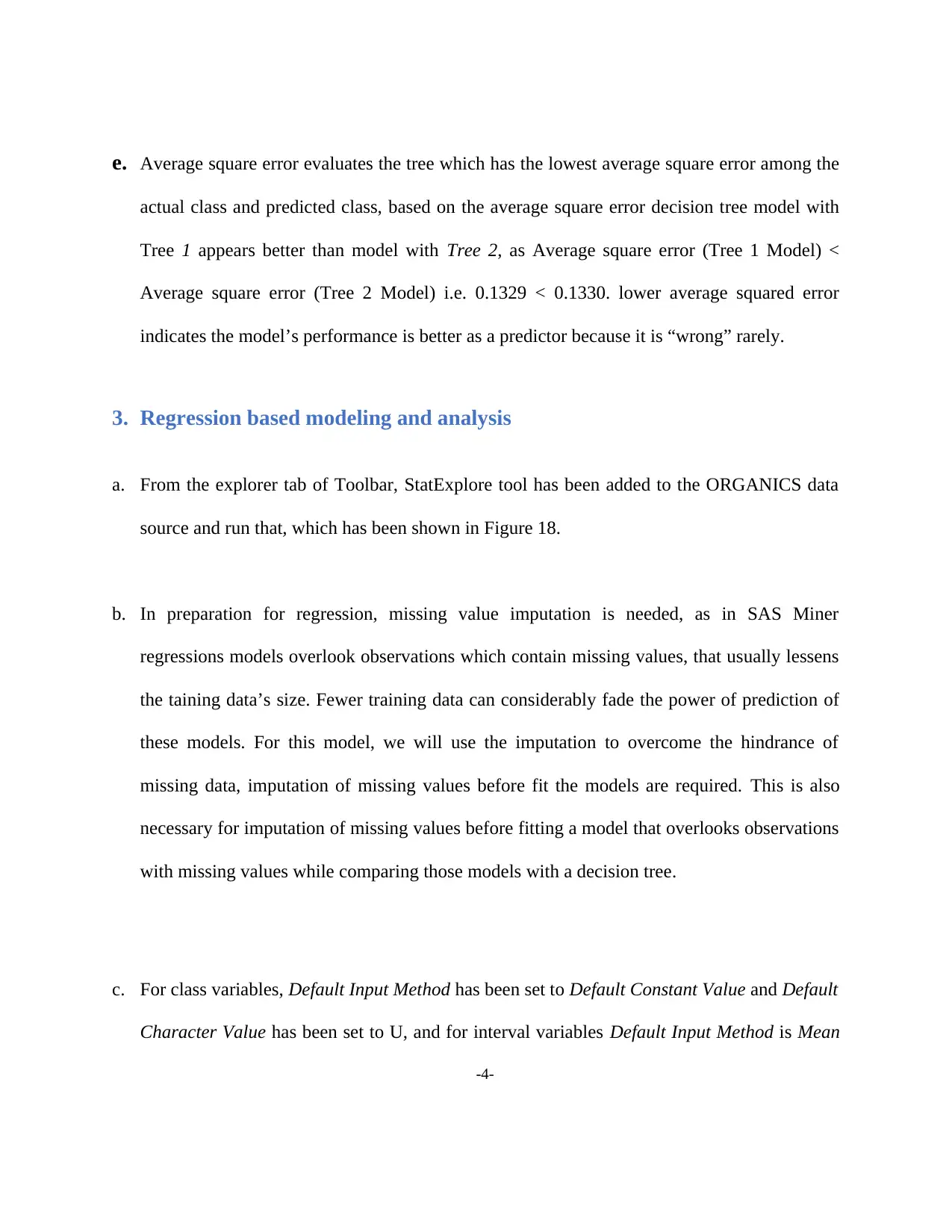

e. The average square error criterion selects the tree with the lowest average squared difference between the actual and predicted values. On this measure, the decision tree model with Tree 1 appears better than the model with Tree 2, since Average square error (Tree 1 Model) < Average square error (Tree 2 Model), i.e. 0.1329 < 0.1330. A lower average squared error indicates better performance as a predictor because the model's predictions are, on average, closer to the actual outcomes.
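The observation in parts d and e that the two trees rank differently on average square error and on misclassification rate can be illustrated with a small sketch. The function names, the 0.5 cutoff and the data below are assumptions made for illustration only. They are not taken from the ORGANICS data or from SAS output.

```python
def average_squared_error(actual, predicted_prob):
    """Mean squared difference between 0/1 outcomes and predicted probabilities."""
    return sum((y - p) ** 2 for y, p in zip(actual, predicted_prob)) / len(actual)


def misclassification_rate(actual, predicted_prob, cutoff=0.5):
    """Share of cases whose predicted class (probability >= cutoff) is wrong."""
    wrong = sum(
        1 for y, p in zip(actual, predicted_prob) if (p >= cutoff) != (y == 1)
    )
    return wrong / len(actual)


# Hypothetical outcomes and predicted probabilities (illustrative only).
actual = [1, 0, 1, 0]
model_a = [0.9, 0.1, 0.45, 0.1]     # confident, but one case falls just below the cutoff
model_b = [0.55, 0.45, 0.55, 0.45]  # always on the correct side of the cutoff, but hesitant

for name, probs in [("A", model_a), ("B", model_b)]:
    ase = average_squared_error(actual, probs)
    misc = misclassification_rate(actual, probs)
    print(name, round(ase, 4), round(misc, 4))
```

In this made-up example, model A has the lower average squared error while model B has the lower misclassification rate, which is the same kind of disagreement seen between Tree 1 and Tree 2.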

3. Regression based modeling and analysis

a. From the Explore tab of the toolbar, a StatExplore node was added, connected to the ORGANICS data source and run (Figure 18).

b. Missing value imputation is needed in preparation for regression because regression models in SAS Enterprise Miner ignore observations that contain missing values, which usually reduces the size of the training data. Less training data can considerably weaken the predictive power of these models, so missing values are imputed before the model is fitted. Imputation is also necessary when such a model is compared with a decision tree, which can use observations with missing values. Without it, the models would be fitted and assessed on different sets of observations.

c. For class variables, Default Input Method has been set to Default Constant Value and Default

Character Value has been set to U, and for interval variables Default Input Method is Mean

-4-

actual class and predicted class, based on the average square error decision tree model with

Tree 1 appears better than model with Tree 2, as Average square error (Tree 1 Model) <

Average square error (Tree 2 Model) i.e. 0.1329 < 0.1330. lower average squared error

indicates the model’s performance is better as a predictor because it is “wrong” rarely.

3. Regression based modeling and analysis

a. From the explorer tab of Toolbar, StatExplore tool has been added to the ORGANICS data

source and run that, which has been shown in Figure 18.

b. In preparation for regression, missing value imputation is needed, as in SAS Miner

regressions models overlook observations which contain missing values, that usually lessens

the taining data’s size. Fewer training data can considerably fade the power of prediction of

these models. For this model, we will use the imputation to overcome the hindrance of

missing data, imputation of missing values before fit the models are required. This is also

necessary for imputation of missing values before fitting a model that overlooks observations

with missing values while comparing those models with a decision tree.

c. For class variables, Default Input Method has been set to Default Constant Value and Default

Character Value has been set to U, and for interval variables Default Input Method is Mean

-4-

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

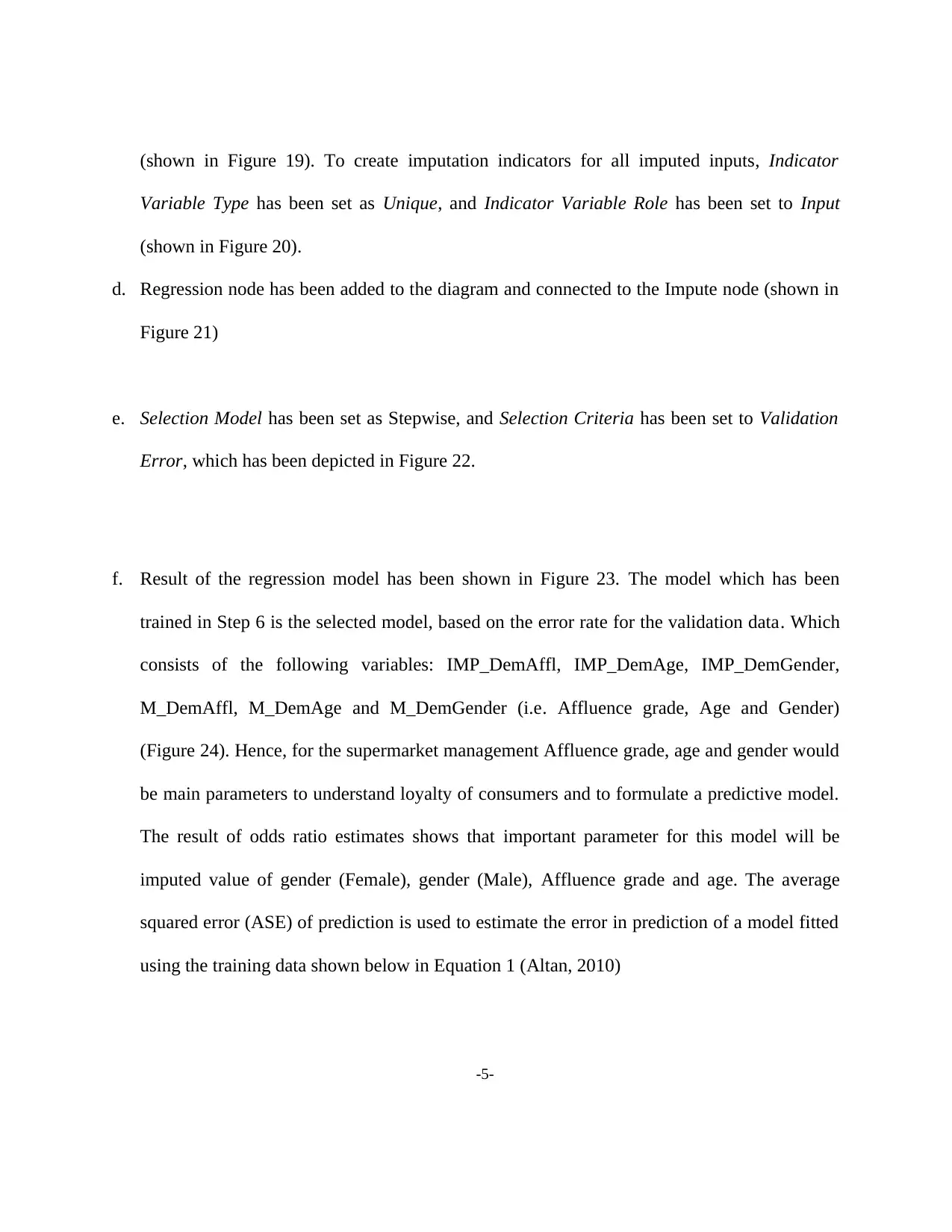

(shown in Figure 19). To create imputation indicators for all imputed inputs, Indicator

Variable Type has been set as Unique, and Indicator Variable Role has been set to Input

(shown in Figure 20).

d. A Regression node was added to the diagram and connected to the Impute node (Figure 21).

e. Selection Model was set to Stepwise and Selection Criterion was set to Validation Error (Figure 22).

f. The result of the regression model is shown in Figure 23. The model trained in Step 6 is the selected model, based on the error rate for the validation data. It consists of the following variables: IMP_DemAffl, IMP_DemAge, IMP_DemGender, M_DemAffl, M_DemAge and M_DemGender, i.e. the imputed values and missing-value indicators for affluence grade, age and gender (Figure 24). Hence, for the supermarket's management, affluence grade, age and gender would be the main parameters for understanding customer loyalty and formulating a predictive model. The odds ratio estimates show that the important parameters for this model are the imputed values of gender (Female), gender (Male), affluence grade and age. The average squared error (ASE) of prediction, given in Equation 1, is used to estimate the prediction error of a model fitted using the training data (Altan, 2010).

$$\mathrm{ASE} = \frac{1}{n}\sum_{i=1}^{n}\left(x_i - \hat{x}_i\right)^2 \qquad \text{(Equation 1)}$$

Here $x_i$ is the $i$-th observation in the validation data set, $\hat{x}_i$ is its predicted value from the fitted model, and $n$ is the validation sample size. The ASE output from SAS is shown in Figure 26. The ASE for this model is 0.138587 (training data) and 0.137156 (validation data). In a modeling context, a good predictive model produces ASE values that are low and close to each other across the data roles. An overfit model usually produces a lower ASE on the training data but higher values on the validation and test data. An underfit model shows higher values for all the roles of the data.
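The imputation settings in step c, which produce the IMP_ and M_ variables selected in step f, can be sketched outside SAS as follows. This is a minimal illustration under stated assumptions, not the Impute node's implementation: the function name, the use of None for missing values and the sample records are hypothetical.

```python
from statistics import mean


def impute_with_indicators(rows, interval_vars, class_vars, class_fill="U"):
    """Return copies of rows with IMP_<var> and M_<var> columns added.

    Missing values are represented as None (an assumption of this sketch).
    Interval variables are filled with the mean of the observed values,
    class variables with the constant class_fill.
    """
    means = {
        var: mean(r[var] for r in rows if r[var] is not None)
        for var in interval_vars
    }
    result = []
    for row in rows:
        new_row = dict(row)
        for var in list(interval_vars) + list(class_vars):
            missing = row[var] is None
            fill = means[var] if var in means else class_fill
            new_row["IMP_" + var] = fill if missing else row[var]
            new_row["M_" + var] = 1 if missing else 0  # missing-value indicator
        result.append(new_row)
    return result


# Hypothetical records (illustrative only, not ORGANICS data).
rows = [
    {"DemAge": 40, "DemGender": "F"},
    {"DemAge": None, "DemGender": "M"},
    {"DemAge": 60, "DemGender": None},
]
imputed = impute_with_indicators(rows, ["DemAge"], ["DemGender"])
for r in imputed:
    print(r)
```

The indicator columns let a regression distinguish imputed values from observed ones. In a partitioned workflow the means would normally be computed from the training data only and then applied to the validation data. The sketch skips that step for brevity.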

4. Open ended discussion

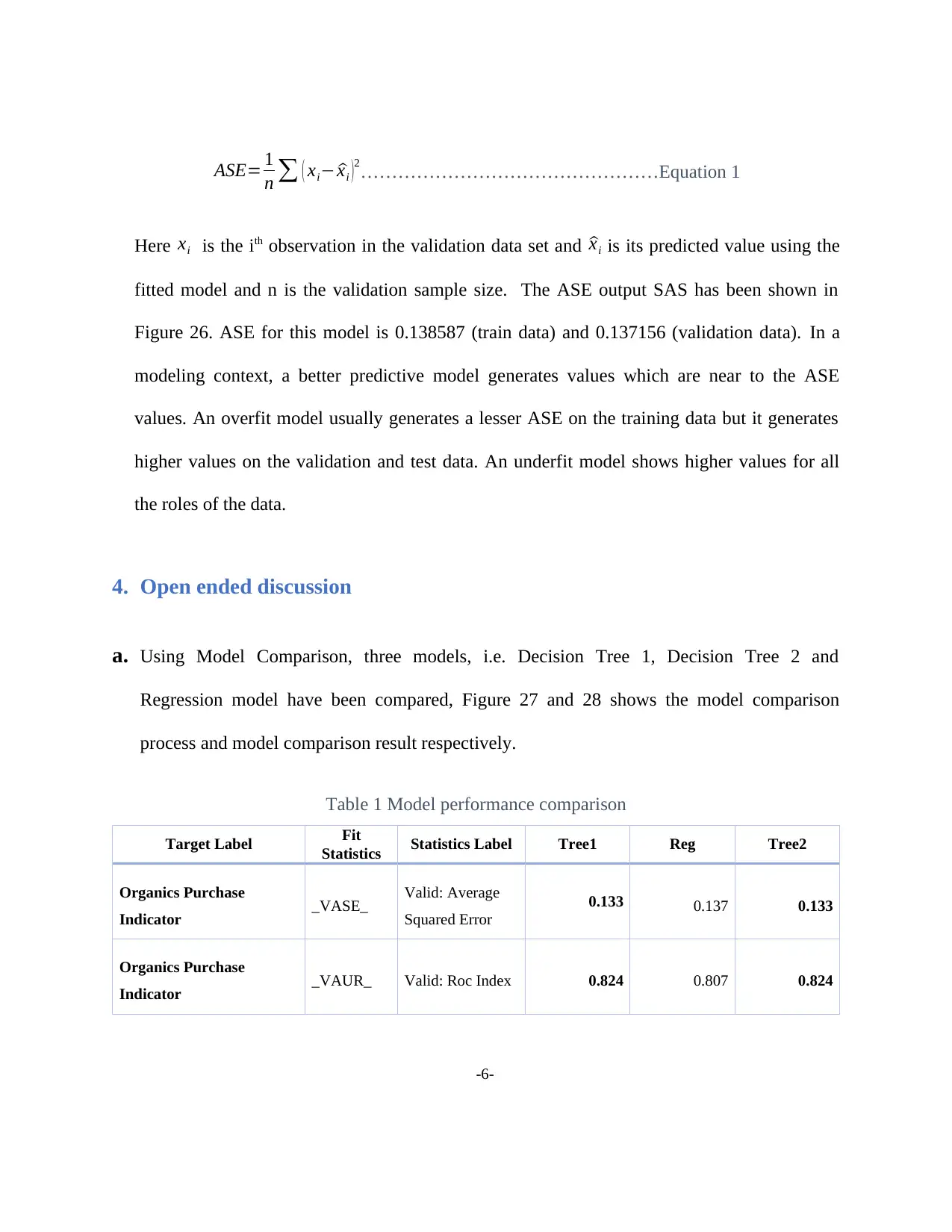

a. Using the Model Comparison node, three models, i.e. Decision Tree 1, Decision Tree 2 and the regression model, were compared. Figure 27 and Figure 28 show the model comparison process and the model comparison result respectively.

Table 1 Model performance comparison

Target Label Fit

Statistics Statistics Label Tree1 Reg Tree2

Organics Purchase

Indicator _VASE_ Valid: Average

Squared Error

0.133 0.137 0.133

Organics Purchase

Indicator _VAUR_ Valid: Roc Index 0.824 0.807 0.824

-6-

n ∑ ( xi− ^xi )2

…………………………………………Equation 1

Here xi is the ith observation in the validation data set and ^xi is its predicted value using the

fitted model and n is the validation sample size. The ASE output SAS has been shown in

Figure 26. ASE for this model is 0.138587 (train data) and 0.137156 (validation data). In a

modeling context, a better predictive model generates values which are near to the ASE

values. An overfit model usually generates a lesser ASE on the training data but it generates

higher values on the validation and test data. An underfit model shows higher values for all

the roles of the data.

4. Open ended discussion

a. Using Model Comparison, three models, i.e. Decision Tree 1, Decision Tree 2 and

Regression model have been compared, Figure 27 and 28 shows the model comparison

process and model comparison result respectively.

Table 1 Model performance comparison

Target Label Fit

Statistics Statistics Label Tree1 Reg Tree2

Organics Purchase

Indicator _VASE_ Valid: Average

Squared Error

0.133 0.137 0.133

Organics Purchase

Indicator _VAUR_ Valid: Roc Index 0.824 0.807 0.824

-6-

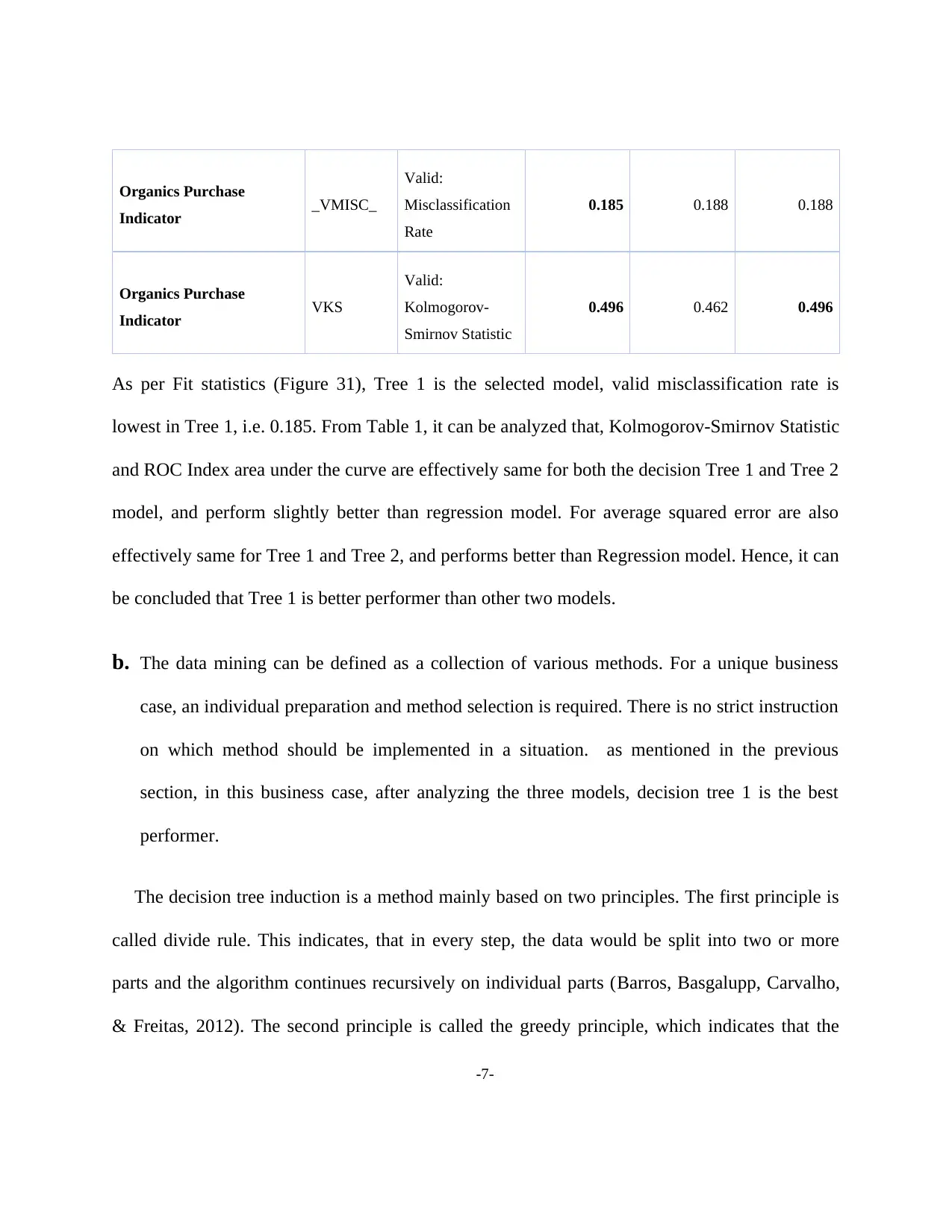

Organics Purchase

Indicator _VMISC_

Valid:

Misclassification

Rate

0.185 0.188 0.188

Organics Purchase

Indicator VKS

Valid:

Kolmogorov-

Smirnov Statistic

0.496 0.462 0.496

According to the fit statistics (Figure 31), Tree 1 is the selected model because its validation misclassification rate is the lowest, i.e. 0.185. Table 1 shows that the Kolmogorov-Smirnov statistic and the ROC index (area under the curve) are effectively the same for Tree 1 and Tree 2, and that both trees perform slightly better than the regression model on these measures. The average squared error is also effectively the same for Tree 1 and Tree 2, and lower than for the regression model. Hence, it can be concluded that Tree 1 performs better than the other two models.
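For readers unfamiliar with the two ranking measures in Table 1, the sketch below shows one common way to compute a ROC index (area under the ROC curve) and a Kolmogorov-Smirnov statistic from predicted scores. The function names and the data are hypothetical, and the definitions used here (pairwise comparison for the ROC index, largest gap between true and false positive rates for KS) are assumed to correspond to the SAS fit statistics rather than taken from SAS documentation.

```python
def roc_index(actual, scores):
    """Area under the ROC curve via pairwise comparison (ties count one half)."""
    pos = [s for y, s in zip(actual, scores) if y == 1]
    neg = [s for y, s in zip(actual, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg
    )
    return wins / (len(pos) * len(neg))


def ks_statistic(actual, scores):
    """Largest gap between true and false positive rates over all cutoffs."""
    n_pos = sum(1 for y in actual if y == 1)
    n_neg = len(actual) - n_pos
    best = 0.0
    for cutoff in sorted(set(scores)):
        tpr = sum(1 for y, s in zip(actual, scores) if y == 1 and s >= cutoff) / n_pos
        fpr = sum(1 for y, s in zip(actual, scores) if y == 0 and s >= cutoff) / n_neg
        best = max(best, tpr - fpr)
    return best


# Hypothetical validation outcomes and scores (illustrative only).
actual = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.3, 0.8, 0.4, 0.2, 0.2, 0.1]
print(round(roc_index(actual, scores), 3), round(ks_statistic(actual, scores), 3))
```

Both measures depend only on how well the scores rank purchasers above non-purchasers, not on any single classification cutoff, which may explain why they agree for Tree 1 and Tree 2 even though the misclassification rates differ.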

b. Data mining can be defined as a collection of various methods. Each business case requires its own preparation and method selection, and there is no strict rule about which method should be used in a given situation. As mentioned in the previous section, in this business case decision tree 1 is the best performer of the three models analyzed.

Decision tree induction is based mainly on two principles. The first is the divide-and-conquer rule: at every step the data is split into two or more parts, and the algorithm continues recursively on the individual parts (Barros, Basgalupp, Carvalho, & Freitas, 2012). The second principle is called the greedy principle, which indicates that the
