EECS 152B Winter 2019 Assignment 3 - LMS and RLS Noise Canceller

Added on 2023/04/21

Homework Assignment

AI Summary

This assignment solution details the implementation of an adaptive noise canceller using the Least Mean Squares (LMS) and Recursive Least Squares (RLS) algorithms in MATLAB, as part of the EECS 152B Winter 2019 course. The experiment involves recovering a voice signal buried in noise by generating a filtered version of the reference noise and convolving it with an impulse response vector. The document covers the theoretical background, including the mathematical derivations of both algorithms, the experimental procedure, and a discussion of the results obtained by varying parameters such as µ and L. It further analyzes the stability and convergence properties of the LMS and RLS filters under different conditions, concluding with the MATLAB code used for the implementation and simulation of the adaptive noise cancellation system.

EECS 152B

Winter 2019 Assignment 3

Student Name

Student ID Number

Date of submission

INTRODUCTION

This lab experiment implements an adaptive noise canceller using the LMS and RLS algorithms. A filtered version of the reference noise is generated by convolving the noise with the impulse response vector h,

$$h = [\,0.78 \quad -0.55 \quad 0.24 \quad -0.16 \quad 0.08\,]$$

The method of least squares is used to derive the recursive algorithm that automatically adjusts the filter coefficients. Because the method works directly on the observed data, no assumptions on the statistics of the input signals need to be invoked. The RLS algorithm converges considerably faster than the LMS algorithm because it uses the information contained in the input data from the start of the experiment. When the filter convolution is performed, the output, the error and the resulting sum of squared errors are obtained as,

$$y(i) = \sum_{k=1}^{M} h(k,n)\,u(i-k+1), \qquad i = 1, 2, \ldots, n$$

$$e(i) = d(i) - y(i)$$

$$J(n) = \sum_{i=1}^{n} d^2(i) - 2\sum_{k=1}^{M} h(k,n) \sum_{i=1}^{n} d(i)\,u(i-k+1) + \sum_{k=1}^{M}\sum_{m=1}^{M} h(k,n)\,h(m,n) \sum_{i=1}^{n} u(i-k+1)\,u(i-m+1), \qquad M \le n$$

The deterministic correlation between the tap inputs of the filter, and the deterministic cross-correlation between the desired response and the tap inputs, are summed over the data length n of the noise input as

$$\phi(n;k,m) = \sum_{i=1}^{n} u(i-k)\,u(i-m), \qquad k, m = 0, 1, \ldots, M-1$$

$$\theta(n;k) = \sum_{i=1}^{n} d(i)\,u(i-k), \qquad k = 0, 1, \ldots, M-1$$

To obtain the energy of the desired response,

$$E_d(n) = \sum_{i=1}^{n} d^2(i)$$
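The expansion of J(n) in terms of the correlation sums can be checked numerically. The following is a minimal MATLAB sketch, not part of the original assignment code: the synthetic signals `u` and `d`, the record length and the trial vector `h_trial` are all assumptions made for illustration, and samples before the start of the record are taken as zero.

```matlab
% Minimal sketch: compare the direct sum of squared errors with the
% expanded form built from phi, theta and E_d (synthetic data only).
h_true = [0.78 -0.55 0.24 -0.16 0.08];        % impulse response from the report
M = numel(h_true);
n = 200;                                      % data length, M <= n
u = randn(n, 1);                              % stand-in for the reference noise
d = filter(h_true, 1, u) + 0.1*randn(n, 1);   % synthetic desired response
h_trial = 0.5*h_true(:);                      % any coefficient vector works here

% Data matrix: row i holds u(i), u(i-1), ..., u(i-M+1), with u(j) = 0 for j < 1
U = zeros(n, M);
for k = 1:M
    U(k:n, k) = u(1:n-k+1);
end

y = U*h_trial;                % y(i) = sum over k of h(k) u(i-k+1)
e = d - y;                    % e(i) = d(i) - y(i)
J_direct = sum(e.^2);         % sum of squared errors

Phi   = U'*U;                 % Phi(k+1, m+1) = phi(n; k, m)
theta = U'*d;                 % theta(k+1)    = theta(n; k)
Ed    = sum(d.^2);            % energy of the desired response
J_expanded = Ed - 2*h_trial'*theta + h_trial'*Phi*h_trial;

fprintf('J direct = %.6f, J expanded = %.6f\n', J_direct, J_expanded);
```

The two printed values should agree to within rounding error for any choice of `h_trial`, since the expansion is an algebraic identity.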

The residual sum of squares is given as,

$$J(n) = E_d(n) - 2\sum_{k=1}^{M} h(k,n)\,\theta(n;k-1) + \sum_{k=1}^{M}\sum_{m=1}^{M} h(k,n)\,h(m,n)\,\phi(n;k-1,m-1)$$

Differentiating with respect to each filter coefficient gives

$$\frac{\partial J(n)}{\partial h(k,n)} = -2\,\theta(n;k-1) + 2\sum_{m=1}^{M} h(m,n)\,\phi(n;k-1,m-1), \qquad k = 1, 2, \ldots, M$$

The least-squares coefficient vector is obtained by setting these derivatives to zero, which yields the deterministic normal equations,

$$\sum_{m=1}^{M} \hat{h}(m,n)\,\phi(n;k-1,m-1) = \theta(n;k-1), \qquad k = 1, 2, \ldots, M$$

where

$$\hat{\mathbf{h}}(n) = [\,\hat{h}(1,n),\ \hat{h}(2,n),\ \ldots,\ \hat{h}(M,n)\,]^T$$

$$\boldsymbol{\Phi}(n) = \begin{bmatrix} \phi(n;0,0) & \phi(n;0,1) & \cdots & \phi(n;0,M-1) \\ \phi(n;1,0) & \phi(n;1,1) & \cdots & \phi(n;1,M-1) \\ \vdots & \vdots & & \vdots \\ \phi(n;M-1,0) & \phi(n;M-1,1) & \cdots & \phi(n;M-1,M-1) \end{bmatrix}$$

$$\boldsymbol{\theta}(n) = [\,\theta(n;0),\ \theta(n;1),\ \ldots,\ \theta(n;M-1)\,]^T$$

The recursive least-squares algorithm is based on the deterministic correlation matrix, whose elements are defined as,

$$\phi(n;k,m) = \sum_{i=1}^{n} u(i-m)\,u(i-k) + c\,\delta_{mk}$$

$$\delta_{mk} = \begin{cases} 1, & m = k \\ 0, & m \ne k \end{cases}$$

Separating the i = n term from the sum gives

$$\phi(n;k,m) = u(n-m)\,u(n-k) + \left[\, \sum_{i=1}^{n-1} u(i-m)\,u(i-k) + c\,\delta_{mk} \right]$$
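To make the normal equations concrete, the sketch below accumulates the correlation matrix and the cross-correlation vector one sample at a time, then solves for the coefficient vector. It is a hedged illustration rather than the assignment's method: the synthetic noise `u`, the noise-free desired response `d`, the record length and the value of the constant `c` are all assumptions.

```matlab
% Minimal sketch: build Phi(n) and theta(n) sample by sample, then solve
% the deterministic normal equations Phi(n)*h_hat = theta(n).
h_true = [0.78 -0.55 0.24 -0.16 0.08].';   % impulse response from the report
M = numel(h_true);
n = 500;                                   % data length (illustrative)
c = 1e-6;                                  % diagonal constant c (assumed value)
u = randn(n, 1);                           % stand-in for the reference noise
d = filter(h_true, 1, u);                  % noise-free desired response

Phi   = c*eye(M);                          % before any data: phi = c*delta_mk
theta = zeros(M, 1);
uvec  = zeros(M, 1);                       % [u(i); u(i-1); ...; u(i-M+1)]
for i = 1:n
    uvec  = [u(i); uvec(1:M-1)];           % shift in the newest sample
    Phi   = Phi + uvec*uvec.';             % add the u(i-m)u(i-k) term
    theta = theta + d(i)*uvec;             % add the d(i)u(i-k) term
end

h_hat = Phi \ theta;                       % least-squares coefficient vector
disp([h_true h_hat]);                      % columns: true and estimated taps
```

With a noise-free `d`, the two displayed columns should match closely. The loop adds one outer product per sample, which is the bracketed-term structure of the last equation; solving with the backslash operator at the end is the batch counterpart of what RLS does recursively.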

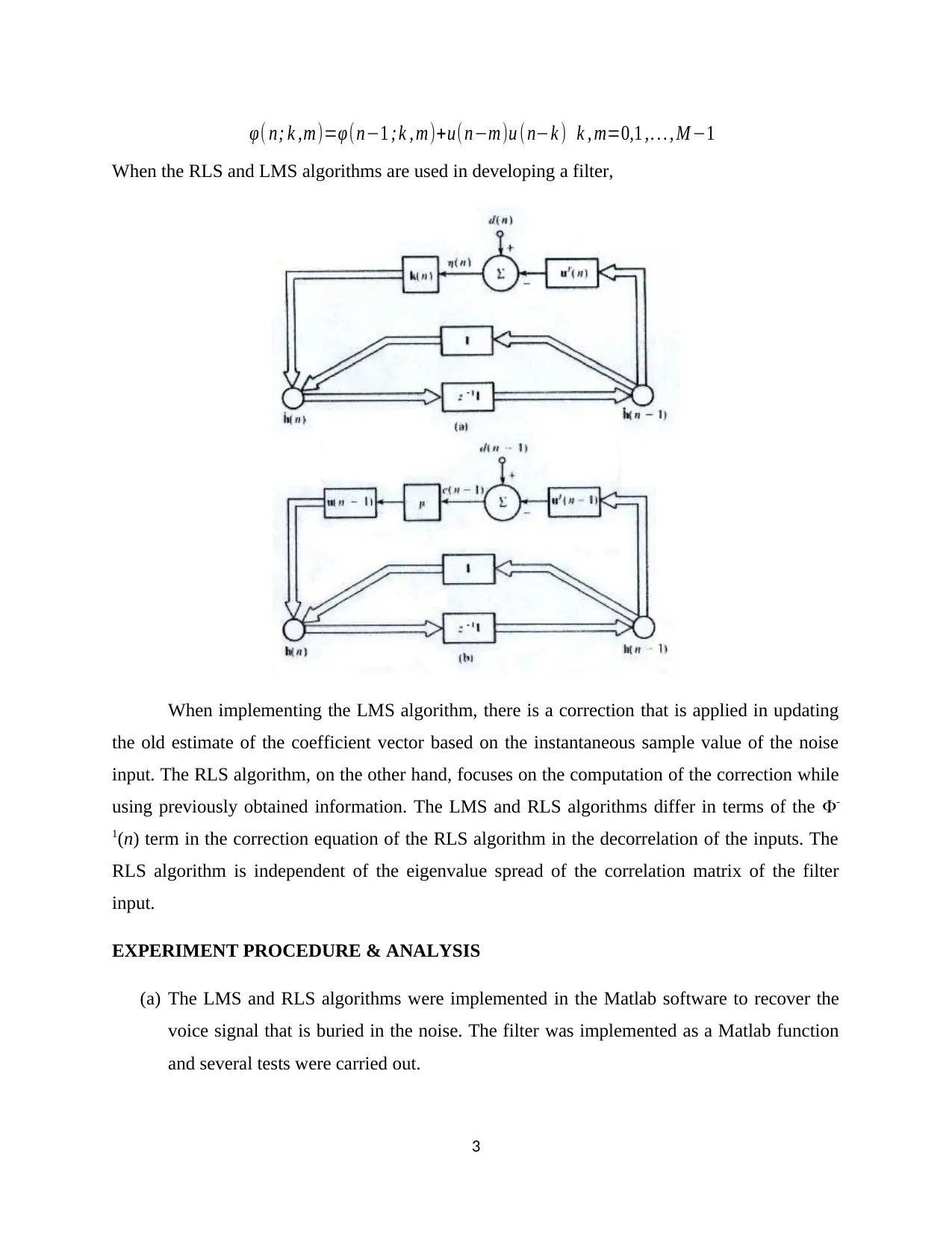

When the RLS and LMS algorithms are used in developing a filter, the elements of the correlation matrix satisfy the recursion

$$\phi(n;k,m) = \phi(n-1;k,m) + u(n-m)\,u(n-k), \qquad k, m = 0, 1, \ldots, M-1$$

In the LMS algorithm, the correction applied to the old estimate of the coefficient vector is based only on the instantaneous sample values of the noise input and the error signal. In the RLS algorithm, on the other hand, the correction is computed using all the previously obtained information. The two algorithms differ in the $\boldsymbol{\Phi}^{-1}(n)$ term that appears in the correction equation of the RLS algorithm, which decorrelates the inputs. As a result, the rate of convergence of the RLS algorithm is largely independent of the eigenvalue spread of the correlation matrix of the filter input.

EXPERIMENT PROCEDURE & ANALYSIS

(a) The LMS and RLS algorithms were implemented in MATLAB to recover the voice signal that is buried in the noise. The filter was implemented as a MATLAB function and several tests were carried out.

(b) The results of the tests were plotted, and the error signals produced by both algorithms were listened to.

(c) The value of μ was increased to show that the LMS algorithm converges faster with a larger step size. Several values were tested to determine how far μ can be increased before the filter becomes unstable.
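The effect described in step (c) can be reproduced on synthetic data. The sketch below is only an illustration under stated assumptions: it uses unit-variance white noise rather than the assignment's recordings, so the two step sizes in `mus` are hypothetical and are not comparable to the values of μ quoted in this report.

```matlab
% Minimal sketch: run LMS with a small and a deliberately excessive step size.
h_true = [0.78 -0.55 0.24 -0.16 0.08];   % impulse response from the report
M = numel(h_true);
N = 2000;                                % number of samples (illustrative)
u = randn(N, 1);                         % synthetic reference noise
d = filter(h_true, 1, u);                % filtered noise only, no voice added

mus = [0.01 1.0];                        % hypothetical step sizes
final_err = zeros(size(mus));
for j = 1:numel(mus)
    w = zeros(1, M);                     % LMS weights
    V = zeros(M, 1);                     % tap-input vector
    for n = 1:N
        V = [u(n); V(1:M-1)];            % shift in the newest sample
        e = d(n) - w*V;                  % instantaneous error
        w = w + mus(j)*e*V.';            % LMS weight update
    end
    final_err(j) = norm(w - h_true);     % distance from the true taps
end
disp(final_err);
```

With the small step size the weights settle close to h, while with the large one the weight error grows without bound and typically ends as Inf or NaN. The threshold between the two regimes depends on the input power, which is why it has to be found experimentally for the scaled noise used in the assignment.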

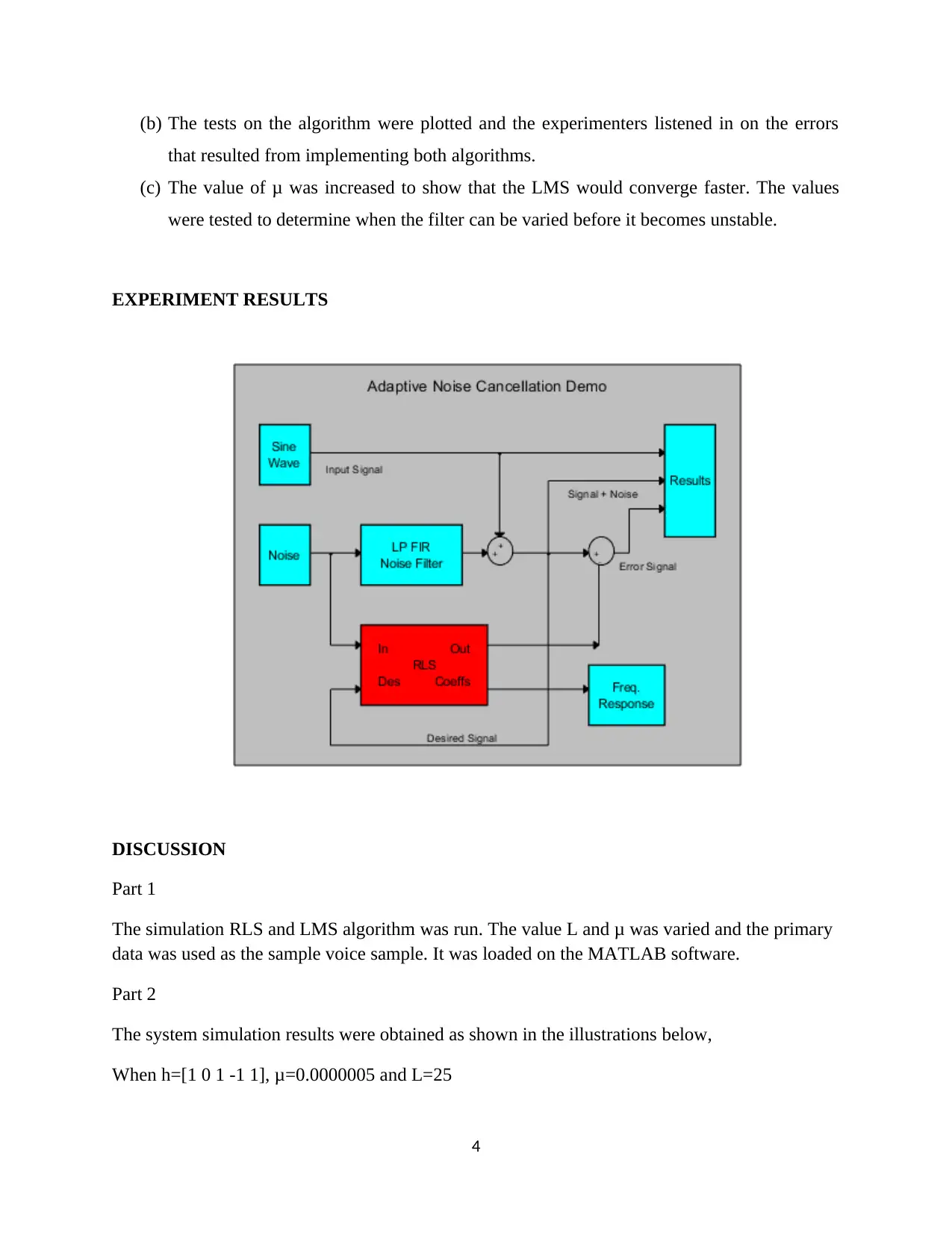

EXPERIMENT RESULTS

DISCUSSION

Part 1

The RLS and LMS simulations were run. The values of L and μ were varied, and the primary data, loaded into MATLAB, was used as the voice sample.

Part 2

The system simulation results were obtained as shown in the illustrations below,

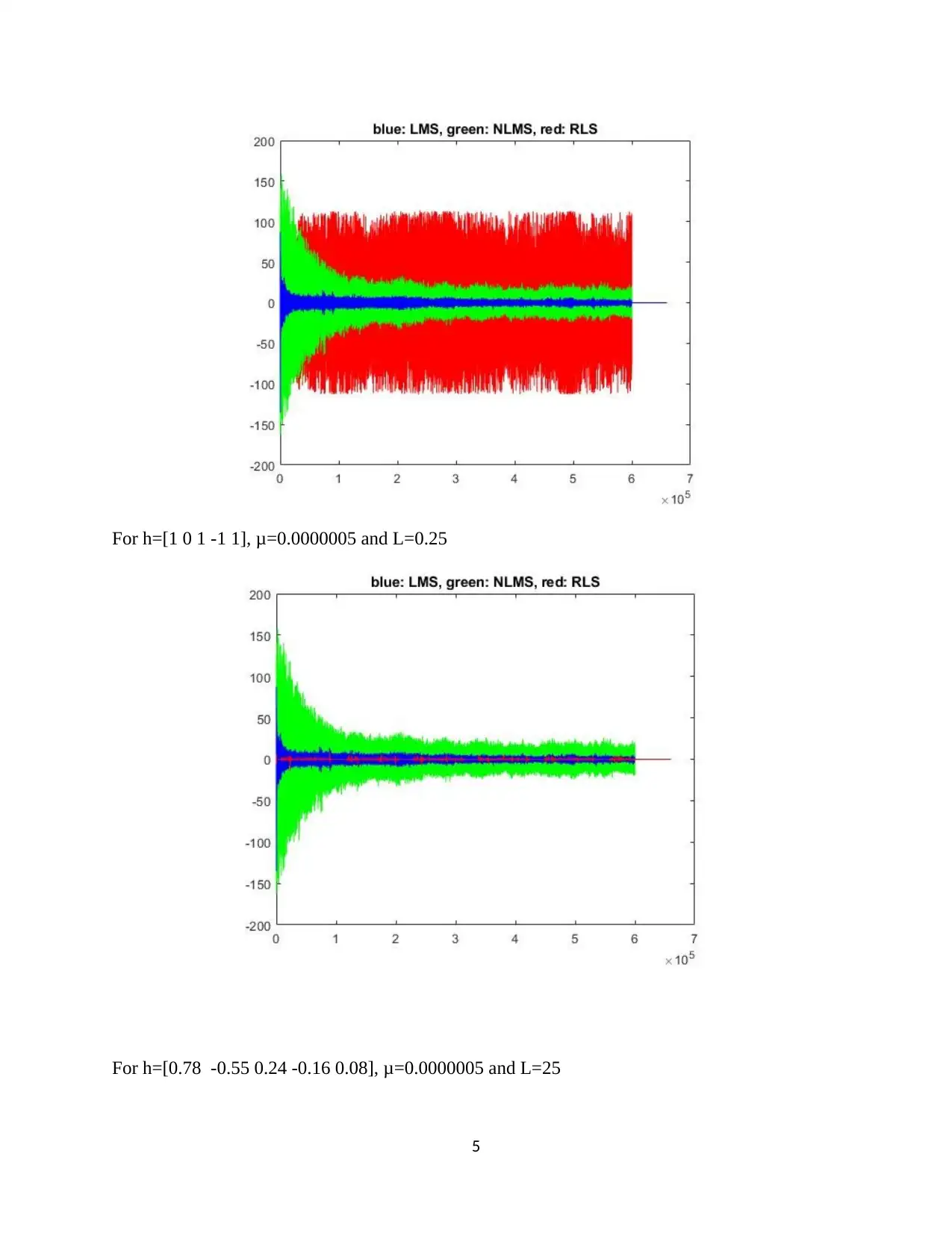

When h=[1 0 1 -1 1], μ=0.0000005 and L=25

For h=[1 0 1 -1 1], μ=0.0000005 and L=0.25

For h=[0.78 -0.55 0.24 -0.16 0.08], μ=0.0000005 and L=25

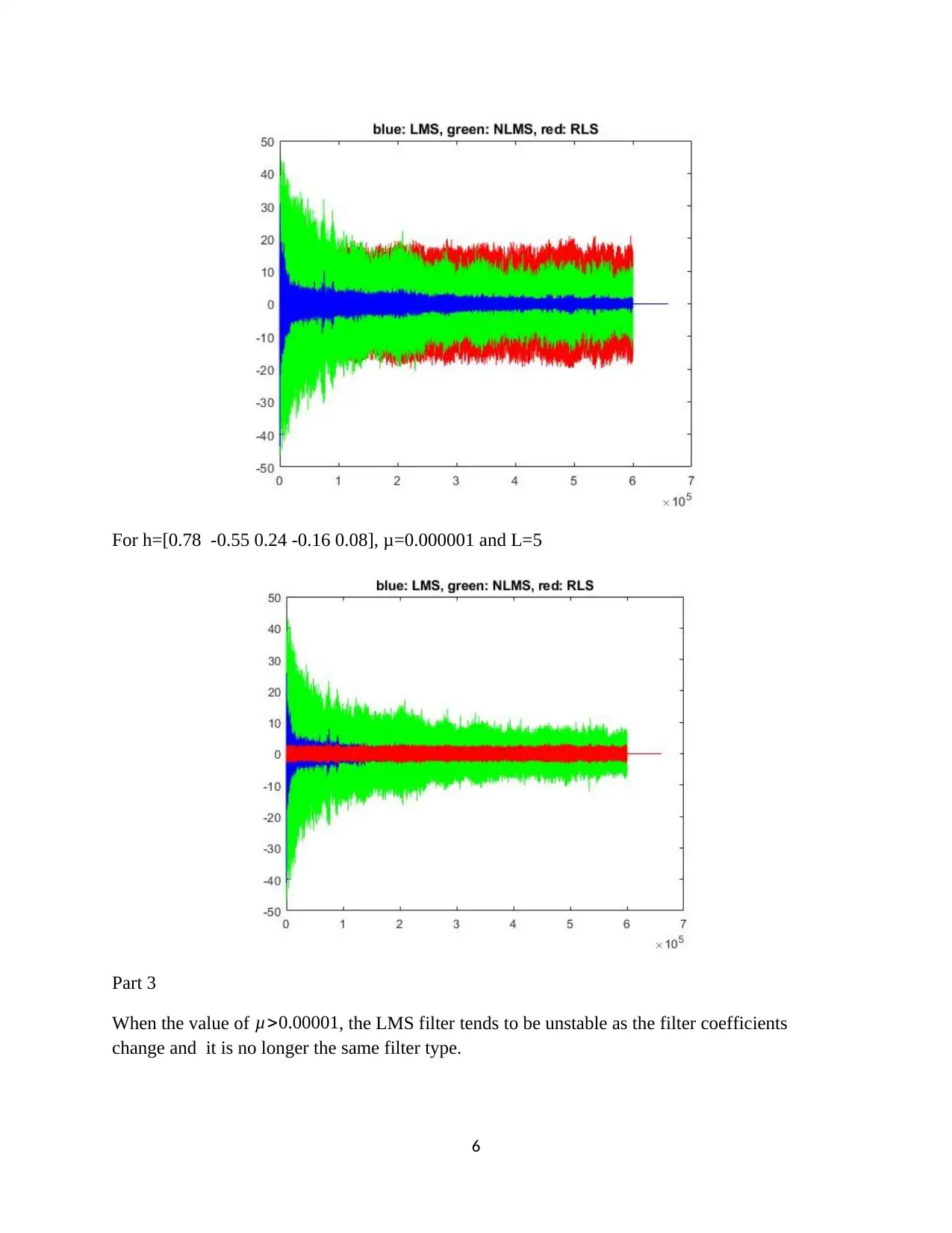

For h=[0.78 -0.55 0.24 -0.16 0.08], μ=0.000001 and L=5

Part 3

When μ > 0.00001, the LMS filter tends to become unstable: the filter coefficients keep changing instead of settling, so the result is no longer the same filter.

Part 4

The value of L controls the sensitivity of the RLS filter. When L is set to 0.001, the hrls weights vanish.
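The role of L can be read off the RLS updates in the MATLAB implementation at the end of this report. Written out from that code, with vector notation added here for readability and e(n) denoting the error computed before the weights are updated, the recursions are:

$$e(n) = d(n) - \mathbf{u}^T(n)\,\hat{\mathbf{h}}(n-1)$$

$$\mathbf{g}(n) = \frac{\mathbf{P}(n-1)\,\mathbf{u}(n)}{L + \mathbf{u}^T(n)\,\mathbf{P}(n-1)\,\mathbf{u}(n)}$$

$$\mathbf{P}(n) = \frac{1}{L}\left[\,\mathbf{P}(n-1) - \mathbf{g}(n)\,\mathbf{u}^T(n)\,\mathbf{P}(n-1)\right]$$

$$\hat{\mathbf{h}}(n) = \hat{\mathbf{h}}(n-1) + \mathbf{g}(n)\,e(n)$$

In the usual textbook form of RLS, the position of L is occupied by a forgetting factor between 0 and 1, and P(n) plays the role of the inverse correlation matrix. Reading L that way is an assumption, since the report does not define it. Under that reading, every update divides P(n) by L, so a very small L inflates P(n) rapidly from one sample to the next, which is one plausible source of the sensitivity noted above.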

Part 5

The LMS algorithm needs a large convergence parameter μ to speed up the convergence of the filter coefficients to their optimal values, whereas a small value gives better accuracy. The choice of μ is therefore a trade-off between convergence speed and accuracy.

Part 6

When the signal is weak, the results differ widely from the first results obtained, and the RLS algorithm is clearly more effective than LMS or NLMS.

Part 7

The results obtained again differed from the first results, which reinforces the observation that the RLS algorithm is more effective than LMS or NLMS.

Part 8

The choice of voice sample does not affect the convergence. Otherwise the algorithm would have to be adapted for each voice sample, which is generally not practical in real-life applications.

Part 9

Different audio files are used as the desired and reference signals. The type of noise signal affects the parameters needed for the convergence of the LMS and RLS adaptive filters. With 6 tap coefficients in the tapped-delay-line filter, the LMS algorithm requires up to 20 iterations, whereas the RLS algorithm converges by the 3rd iteration. The rate of convergence of the RLS algorithm is faster than that of the LMS algorithm by an order of magnitude.

The LMS algorithm always exhibits a non-zero misadjustment, although it can be made small by using a small step-size parameter μ. The superior performance of the RLS algorithm compared to the LMS algorithm is obtained at the expense of a large increase in computational complexity. The complexity of an adaptive algorithm for real-time operation is determined by the number of multiplications per iteration and the precision required to perform the arithmetic operations.
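The difference in convergence speed and in per-iteration cost can be seen side by side in a short sketch. This is an illustration under assumptions, not the assignment's experiment: the input is synthetic unit-variance white noise, the record is deliberately short, and the values of `mu` and `lambda` are hypothetical choices for this data.

```matlab
% Minimal sketch: LMS and RLS on the same short synthetic record.
h_true = [0.78 -0.55 0.24 -0.16 0.08].';  % impulse response from the report
M = numel(h_true);
N = 50;                                   % deliberately short record
u = randn(N, 1);                          % synthetic reference noise
d = filter(h_true, 1, u);                 % noise-free desired response

mu = 0.05;                                % LMS step size (illustrative)
lambda = 1;                               % RLS forgetting factor (illustrative)
w_lms = zeros(M, 1);
w_rls = zeros(M, 1);
P = 100*eye(M);                           % same initial P as the report's code
V = zeros(M, 1);                          % tap-input vector
for n = 1:N
    V = [u(n); V(1:M-1)];
    % LMS: one inner product and one scaled vector addition, about 2M multiplies
    e_lms = d(n) - w_lms.'*V;
    w_lms = w_lms + mu*e_lms*V;
    % RLS: matrix-vector products with the M-by-M matrix P, order M^2 multiplies
    e_rls = d(n) - w_rls.'*V;
    g = P*V/(lambda + V.'*P*V);           % gain vector
    P = (P - g*V.'*P)/lambda;             % update of the inverse correlation matrix
    w_rls = w_rls + e_rls*g;
end
err_lms = norm(w_lms - h_true);
err_rls = norm(w_rls - h_true);
fprintf('LMS weight error: %.3g, RLS weight error: %.3g\n', err_lms, err_rls);
```

After only 50 samples the RLS weight error is typically far smaller than the LMS one, at the price of the extra matrix arithmetic in every iteration.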

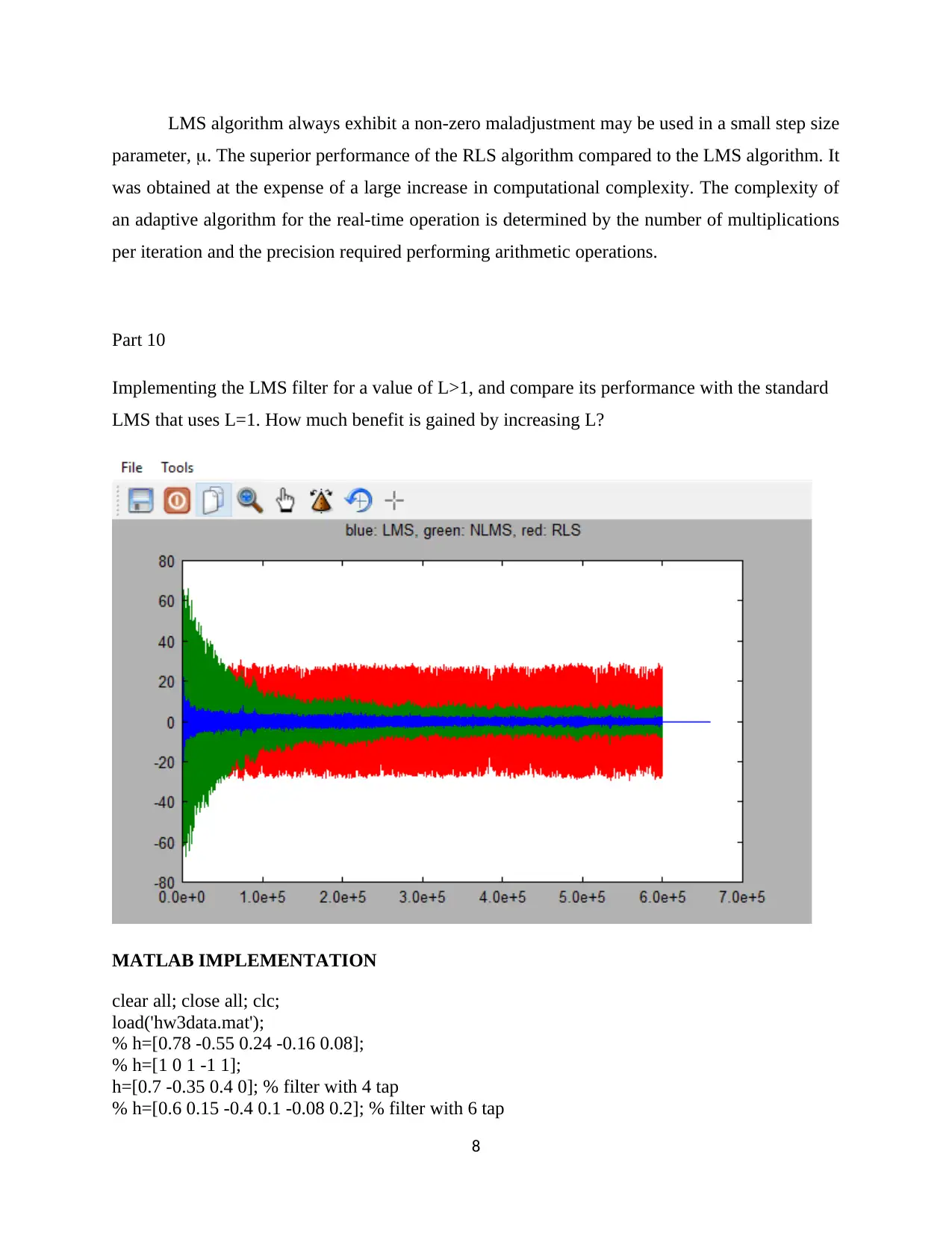

Part 10

Implement the LMS filter for a value of L > 1 and compare its performance with the standard LMS that uses L = 1. How much benefit is gained by increasing L?

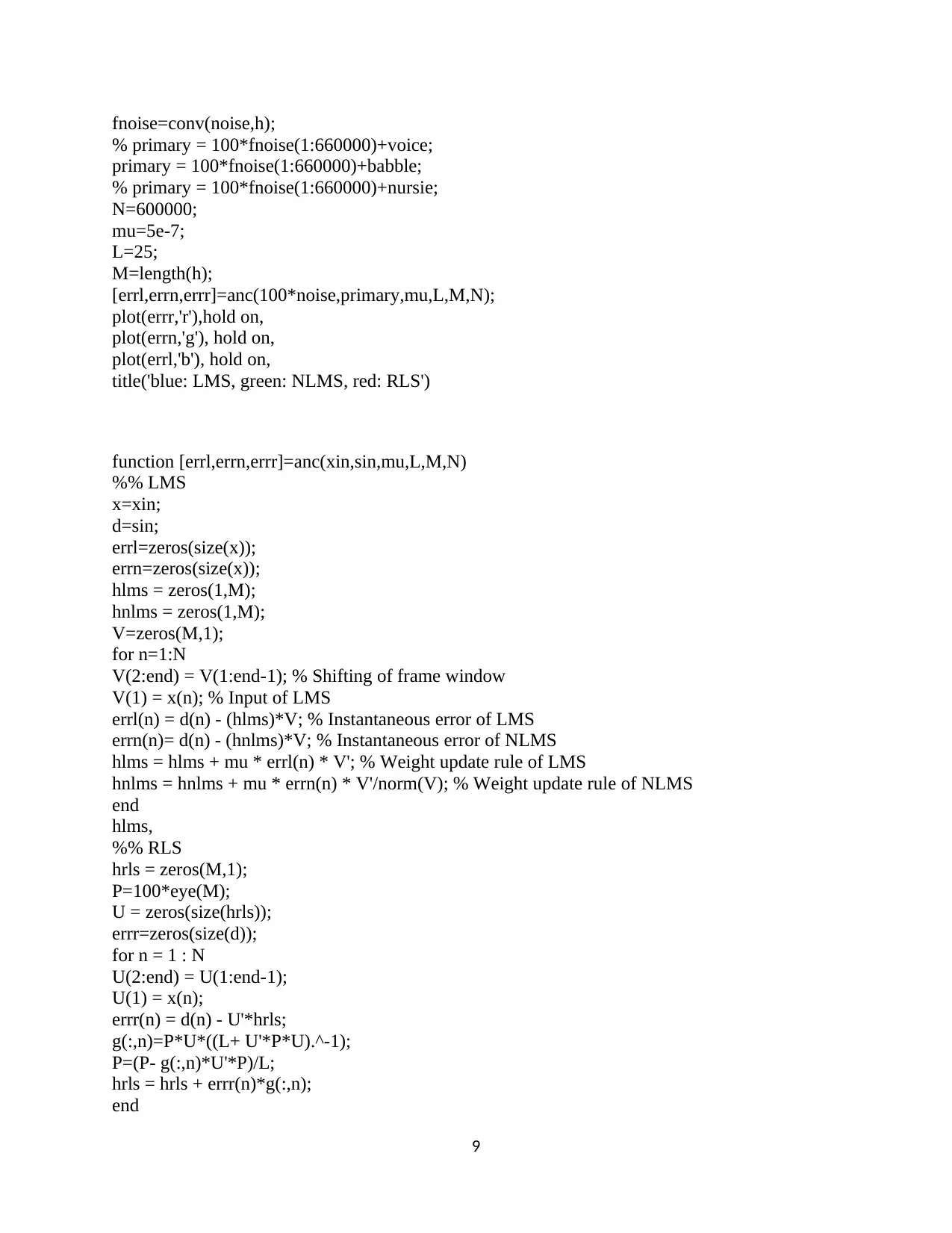

MATLAB IMPLEMENTATION

clear all; close all; clc;

% The data file is expected to provide noise, voice, babble and nursie
load('hw3data.mat');

% Impulse response used to generate the filtered noise (uncomment one)
% h=[0.78 -0.55 0.24 -0.16 0.08];
% h=[1 0 1 -1 1];
h=[0.7 -0.35 0.4 0]; % filter with 4 tap
% h=[0.6 0.15 -0.4 0.1 -0.08 0.2]; % filter with 6 tap

fnoise=conv(noise,h); % filtered version of the reference noise

% Primary input: scaled filtered noise plus the signal to recover (uncomment one)
% primary = 100*fnoise(1:660000)+voice;
primary = 100*fnoise(1:660000)+babble;
% primary = 100*fnoise(1:660000)+nursie;

N=600000; % number of samples to process
mu=5e-7; % step size of LMS and NLMS
L=25; % RLS parameter L
M=length(h); % number of filter taps

[errl,errn,errr]=anc(100*noise,primary,mu,L,M,N);

plot(errr,'r'), hold on
plot(errn,'g')
plot(errl,'b')
title('blue: LMS, green: NLMS, red: RLS')

% Local functions in a script need MATLAB R2016b or later;
% on older versions, save anc in its own file anc.m.
function [errl,errn,errr]=anc(xin,din,mu,L,M,N)
% ANC Adaptive noise canceller using LMS, NLMS and RLS.
% xin: reference noise, din: primary input, mu: LMS/NLMS step size,
% L: RLS parameter, M: number of taps, N: number of samples to process.
% Returns the error signals of LMS (errl), NLMS (errn) and RLS (errr).

%% LMS and NLMS
x=xin;
d=din;
errl=zeros(size(x));
errn=zeros(size(x));
hlms = zeros(1,M);
hnlms = zeros(1,M);
V=zeros(M,1);
for n=1:N
    V(2:end) = V(1:end-1); % Shifting of frame window
    V(1) = x(n); % Newest input sample
    errl(n) = d(n) - hlms*V; % Instantaneous error of LMS
    errn(n) = d(n) - hnlms*V; % Instantaneous error of NLMS
    hlms = hlms + mu * errl(n) * V'; % Weight update rule of LMS
    % NLMS normalises the step by the input energy V'*V;
    % eps guards against division by zero while V is still all zeros
    hnlms = hnlms + mu * errn(n) * V'/(eps + V'*V); % Weight update rule of NLMS
end
hlms, % display the final LMS weights

%% RLS
hrls = zeros(M,1);
P=100*eye(M); % initial inverse correlation matrix
U = zeros(size(hrls));
errr=zeros(size(d));
for n = 1 : N
    U(2:end) = U(1:end-1);
    U(1) = x(n);
    errr(n) = d(n) - U'*hrls; % Error before the weight update
    g = P*U/(L + U'*P*U); % Gain vector, overwritten at every sample
    P = (P - g*U'*P)/L; % Update of the inverse correlation matrix
    hrls = hrls + errr(n)*g; % Weight update rule of RLS
end
hrls, % display the final RLS weights
end
