MA636/MA836 - Markov Chain Assignment: Probability and States

VerifiedAdded on 2022/09/11

|12

|1406

|11

Homework Assignment

AI Summary

This document presents a comprehensive solution to a Markov chain assignment, addressing key concepts such as homogeneous Markov chains, transition matrices, and stationary distributions. The assignment explores the calculation of probabilities, including conditional probabilities, and analyzes the properties of Markov chains, including the Markov property and the concept of a general matrix. It also covers the determination of stationary distributions, application of the total probability theorem, and the identification of reachable states. Furthermore, the solution delves into topics like final representations, stationary distributions, and the Kolmogorov forward and backward equations. The assignment also includes examples of Markov chain applications in system maintenance and decomposition techniques, with a focus on transition probability matrices and their implications. Overall, the document provides detailed explanations and calculations, offering a thorough understanding of Markov chain analysis.

MATHS

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Table of Contents

Question 1............................................................................................................................................2

a).......................................................................................................................................................2

b).......................................................................................................................................................3

Question 2............................................................................................................................................3

Question 3............................................................................................................................................4

a).......................................................................................................................................................4

b).......................................................................................................................................................5

c)........................................................................................................................................................5

Question 4............................................................................................................................................5

a).......................................................................................................................................................5

b).......................................................................................................................................................6

Question 5............................................................................................................................................6

i)........................................................................................................................................................6

ii).......................................................................................................................................................6

Question 6............................................................................................................................................7

a).......................................................................................................................................................7

b).......................................................................................................................................................7

i)....................................................................................................................................................7

ii)...................................................................................................................................................8

c)........................................................................................................................................................9

i)....................................................................................................................................................9

ii)...................................................................................................................................................9

iii)..................................................................................................................................................9

iv)................................................................................................................................................10

Reference............................................................................................................................................11

Question 1............................................................................................................................................2

a).......................................................................................................................................................2

b).......................................................................................................................................................3

Question 2............................................................................................................................................3

Question 3............................................................................................................................................4

a).......................................................................................................................................................4

b).......................................................................................................................................................5

c)........................................................................................................................................................5

Question 4............................................................................................................................................5

a).......................................................................................................................................................5

b).......................................................................................................................................................6

Question 5............................................................................................................................................6

i)........................................................................................................................................................6

ii).......................................................................................................................................................6

Question 6............................................................................................................................................7

a).......................................................................................................................................................7

b).......................................................................................................................................................7

i)....................................................................................................................................................7

ii)...................................................................................................................................................8

c)........................................................................................................................................................9

i)....................................................................................................................................................9

ii)...................................................................................................................................................9

iii)..................................................................................................................................................9

iv)................................................................................................................................................10

Reference............................................................................................................................................11

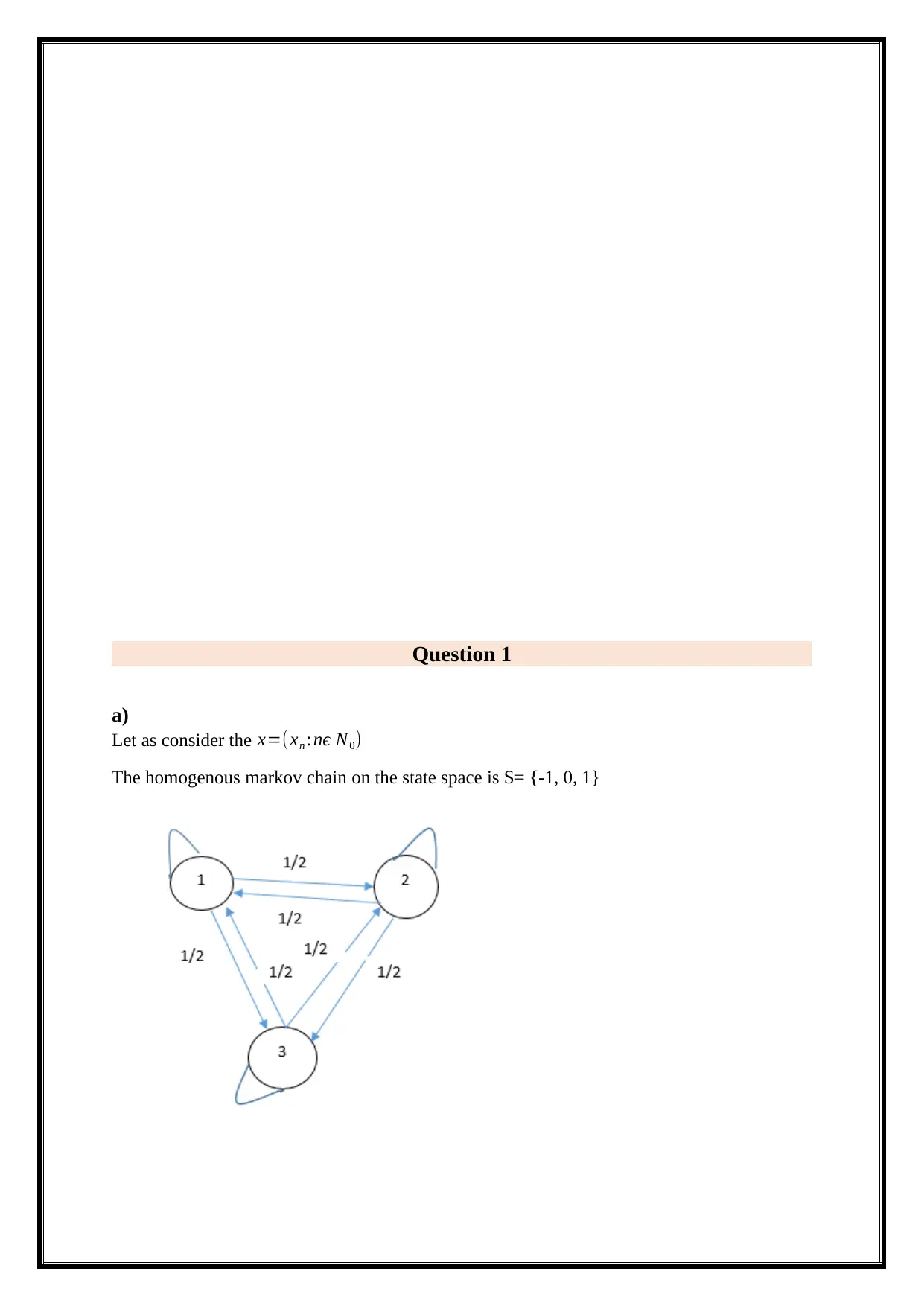

Question 1

a)

Let as consider the x=(xn :nϵ N0)

The homogenous markov chain on the state space is S= {-1, 0, 1}

a)

Let as consider the x=(xn :nϵ N0)

The homogenous markov chain on the state space is S= {-1, 0, 1}

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

( 0 1 /2 1/2

1/2 0 1/2

1/2 1 /2 0 )

=

( 0 0.5 0.5

0.5 0 0.5

0.5 0.5 0 ) (−1 0 1 )

( 0∗−1 0.5∗0 0.5∗1

0.5∗−1 0∗0 0.5∗1

0.5∗1 0.5∗0 0∗1 )=

( 0 0 0.5

−0.5 0 0.5

0.5 0 0 )

A zero element in the transition matrix indicate that the transition is not possible.

To calculating the probability transition matrix is,

E ( X 1

X 0 ) =b and E ( X 2

X 0 ) =b

E ( X 1

X 0 ) =0.5, E ( X 2

X 0 ) =0.5

b)

P( X 0=−1 ¿=¿ P( X 0=0 ¿= P( X 0=1¿

=

( 0 0.5 0.5

0.5 0 0.5

0.5 0.5 0 ) (−1 0 1 )

=

( 0 0 0.5

−0.5 0 0.5

0.5 0 0 )

E(x1)=0 and E(x2)=0.25

Question 2

S = {0, 1, 2}

=

(1/3 1/6 1/2

1/ 4 1/3 5/12

1/2 1 /4 1/ 4 )=

( 0.33 0.166 0.5

0.25 0.33 0.416

0.5 0.25 0.25 )

The stationary distribution is ( π1, π2 , π3)

1/2 0 1/2

1/2 1 /2 0 )

=

( 0 0.5 0.5

0.5 0 0.5

0.5 0.5 0 ) (−1 0 1 )

( 0∗−1 0.5∗0 0.5∗1

0.5∗−1 0∗0 0.5∗1

0.5∗1 0.5∗0 0∗1 )=

( 0 0 0.5

−0.5 0 0.5

0.5 0 0 )

A zero element in the transition matrix indicate that the transition is not possible.

To calculating the probability transition matrix is,

E ( X 1

X 0 ) =b and E ( X 2

X 0 ) =b

E ( X 1

X 0 ) =0.5, E ( X 2

X 0 ) =0.5

b)

P( X 0=−1 ¿=¿ P( X 0=0 ¿= P( X 0=1¿

=

( 0 0.5 0.5

0.5 0 0.5

0.5 0.5 0 ) (−1 0 1 )

=

( 0 0 0.5

−0.5 0 0.5

0.5 0 0 )

E(x1)=0 and E(x2)=0.25

Question 2

S = {0, 1, 2}

=

(1/3 1/6 1/2

1/ 4 1/3 5/12

1/2 1 /4 1/ 4 )=

( 0.33 0.166 0.5

0.25 0.33 0.416

0.5 0.25 0.25 )

The stationary distribution is ( π1, π2 , π3)

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

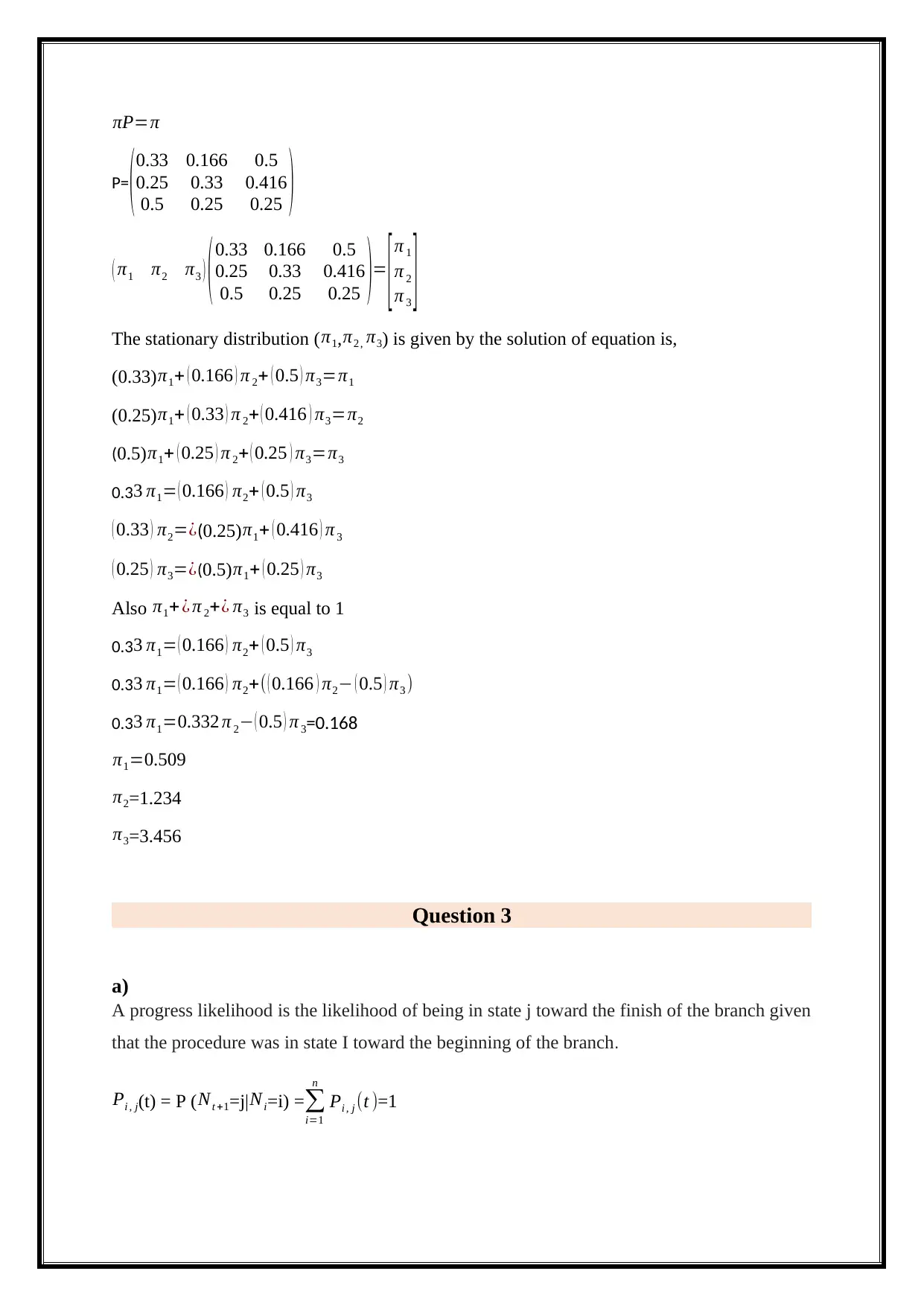

πP=π

P= (0.33 0.166 0.5

0.25 0.33 0.416

0.5 0.25 0.25 )

( π1 π2 π3 ) (0.33 0.166 0.5

0.25 0.33 0.416

0.5 0.25 0.25 )=

[π 1

π 2

π 3 ]

The stationary distribution ( π1, π2 , π3) is given by the solution of equation is,

(0.33) π1+ ( 0.166 ) π 2+ ( 0.5 ) π3=π1

(0.25)π1+ ( 0.33 ) π 2+ ( 0.416 ) π3=π2

(0.5) π1+ ( 0.25 ) π 2+ ( 0.25 ) π3=π3

0.33 π1= ( 0.166 ) π2+ ( 0.5 ) π3

( 0.33 ) π2=¿(0.25)π1+ ( 0.416 ) π 3

( 0.25 ) π3=¿(0.5)π1+ ( 0.25 ) π3

Also π1+ ¿ π 2+¿ π3 is equal to 1

0.33 π1= ( 0.166 ) π2+ ( 0.5 ) π3

0.33 π1= ( 0.166 ) π2+( ( 0.166 ) π2− ( 0.5 ) π3 )

0.33 π1=0.332 π 2− ( 0.5 ) π 3=0.168

π1=0.509

π2=1.234

π3=3.456

Question 3

a)

A progress likelihood is the likelihood of being in state j toward the finish of the branch given

that the procedure was in state I toward the beginning of the branch.

Pi , j(t) = P (Nt +1=j|Ni=i) =∑

i=1

n

Pi , j (t )=1

P= (0.33 0.166 0.5

0.25 0.33 0.416

0.5 0.25 0.25 )

( π1 π2 π3 ) (0.33 0.166 0.5

0.25 0.33 0.416

0.5 0.25 0.25 )=

[π 1

π 2

π 3 ]

The stationary distribution ( π1, π2 , π3) is given by the solution of equation is,

(0.33) π1+ ( 0.166 ) π 2+ ( 0.5 ) π3=π1

(0.25)π1+ ( 0.33 ) π 2+ ( 0.416 ) π3=π2

(0.5) π1+ ( 0.25 ) π 2+ ( 0.25 ) π3=π3

0.33 π1= ( 0.166 ) π2+ ( 0.5 ) π3

( 0.33 ) π2=¿(0.25)π1+ ( 0.416 ) π 3

( 0.25 ) π3=¿(0.5)π1+ ( 0.25 ) π3

Also π1+ ¿ π 2+¿ π3 is equal to 1

0.33 π1= ( 0.166 ) π2+ ( 0.5 ) π3

0.33 π1= ( 0.166 ) π2+( ( 0.166 ) π2− ( 0.5 ) π3 )

0.33 π1=0.332 π 2− ( 0.5 ) π 3=0.168

π1=0.509

π2=1.234

π3=3.456

Question 3

a)

A progress likelihood is the likelihood of being in state j toward the finish of the branch given

that the procedure was in state I toward the beginning of the branch.

Pi , j(t) = P (Nt +1=j|Ni=i) =∑

i=1

n

Pi , j (t )=1

Pi , j(t)={1 if i= j

0 i ≠ j

Ci , j(1) = Pi , j(t), ∀ i, ∀ j

The applying on the total probability theorem,

P= [ p01 p02 p03

p11 p12 p13

p21 p22 p23 ]

Sum of element of each row of matrix,

P (Nt +1=0) and then

E ( Nt +1) =0

E ( Nt +1)- E(N i)=0

E ( Nt +1) = E ( Ni ) =0

E (Nt +1)/ E ( Ni ) =0

b)

A procedure has a markov property if the likelihood dissemination of future states depends

just upon the present state not on the grouping of occasion that continue it.

Discrete time,

( Ni| Ni−0 , Ni−1 , Ni−2 ,)=( Ni∨Ni −2 ,)

A time symmetric version,

E (Nt +1)/E ( Ni )=E (Ni∨Nt +1 , Nt−1)

A more general version

Let A be a set of indices>N, t is a set of indices <t. Then

Ni ⊥ Nt +1 ⊥ Nt −1

There are all equivalent.

c)

A general grid is an assortment of numbers orchestrated into a fixed number of lines and

segments. Generally the numbers are genuine numbers. As a rule, grids can contain complex

0 i ≠ j

Ci , j(1) = Pi , j(t), ∀ i, ∀ j

The applying on the total probability theorem,

P= [ p01 p02 p03

p11 p12 p13

p21 p22 p23 ]

Sum of element of each row of matrix,

P (Nt +1=0) and then

E ( Nt +1) =0

E ( Nt +1)- E(N i)=0

E ( Nt +1) = E ( Ni ) =0

E (Nt +1)/ E ( Ni ) =0

b)

A procedure has a markov property if the likelihood dissemination of future states depends

just upon the present state not on the grouping of occasion that continue it.

Discrete time,

( Ni| Ni−0 , Ni−1 , Ni−2 ,)=( Ni∨Ni −2 ,)

A time symmetric version,

E (Nt +1)/E ( Ni )=E (Ni∨Nt +1 , Nt−1)

A more general version

Let A be a set of indices>N, t is a set of indices <t. Then

Ni ⊥ Nt +1 ⊥ Nt −1

There are all equivalent.

c)

A general grid is an assortment of numbers orchestrated into a fixed number of lines and

segments. Generally the numbers are genuine numbers. As a rule, grids can contain complex

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

numbers yet we won't see those here. Here is a case of a network with three lines and three

segments.

The general matrix they can denoted as,

[ N0 N0−1 N0 −2

N1−0 N1−1 N1−2

N2−0 N2−1 N2−2 ]

Question 4

a)

Markov chain transition probability matrix,

P= ( 0 0.5 0.5

0.33 0.25 0.416

0.66 0.25 0.416 )( 0.1

0.4

0.5 )

( 0 0.2 0.25

0.33 0.1 0.208

0.66 0.1 0.208 )

P(X 0=L)=0

P( X2=M)=0.1

P(X3=H)=0.208

b)

Markov chain with state space S, think about a couple of states (I, j). We state that j is

reachable from I, indicated by I → j, if there exists a whole number n ≥ 0 with the end goal

that P ij > 0. This implies beginning in state I, there is a positive likelihood (however not

really equivalent to 1) that the chain will be in state j at time n (that is, n steps later); p

(xn=L/x0=L) =0.25.

Question 5

Let as consider random walk barrier y

S=N0

p00=1-q

p01=q

Pij={ q j=i+1

1−q j=i−1

segments.

The general matrix they can denoted as,

[ N0 N0−1 N0 −2

N1−0 N1−1 N1−2

N2−0 N2−1 N2−2 ]

Question 4

a)

Markov chain transition probability matrix,

P= ( 0 0.5 0.5

0.33 0.25 0.416

0.66 0.25 0.416 )( 0.1

0.4

0.5 )

( 0 0.2 0.25

0.33 0.1 0.208

0.66 0.1 0.208 )

P(X 0=L)=0

P( X2=M)=0.1

P(X3=H)=0.208

b)

Markov chain with state space S, think about a couple of states (I, j). We state that j is

reachable from I, indicated by I → j, if there exists a whole number n ≥ 0 with the end goal

that P ij > 0. This implies beginning in state I, there is a positive likelihood (however not

really equivalent to 1) that the chain will be in state j at time n (that is, n steps later); p

(xn=L/x0=L) =0.25.

Question 5

Let as consider random walk barrier y

S=N0

p00=1-q

p01=q

Pij={ q j=i+1

1−q j=i−1

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

i)

A final portrayal of a gathering is a gathering portrayal that has no nontrivial invariant

subspaces. The unchangeable portrayal has various momentous properties, as formalized in

the gathering symmetrically hypothesis. Let the gathering request of a gathering be Q, and the

component of the 1-q portrayal (the request for every constituent grid) be Q (a positive whole

number). Leave any activity alone indicated 1-q, and let the q line and 1-q section of the

network comparing to a grid q in the 1-q unchangeable portrayal be q,1-q. The accompanying

properties can be gotten from the gathering symmetrically hypothesis.

.[ 1 q

1−q 1 ]=1

ii)

A stationary conveyance of a Markov chain is a likelihood circulation that remaining

parts unaltered in the Markov chain as time advances. Ordinarily, it is spoken to as a column

vector π whose sections are probabilities adding to 1, and given progress grid P, it fulfills. A

Markov chain is a stochastic model portraying a grouping of potential occasions in which the

likelihood of every occasion depends just on the state achieved in the past occasion q<1/2 is

the conceivable.

Question 6

a)

A Markov tie is said to be unchangeable in the event that it is conceivable to get to

any state from any state. The accompanying clarifies this definition all the more officially. A

state j is said to be open from a state I (composed I → j) if a framework began in state I has a

non-zero likelihood of progressing into state j sooner or later. All states in a correspondence

class are for the most part together positive repetitive, invalid intermittent or transient.

Specifically, for a final Markov chain, all states together should be sure intermittent, invalid

repetitive or transient. A Markov chain is aperiodic if each state is aperiodic. An

unchangeable Markov chain just needs one aperiodic state to infer all states are aperiodic.

Each condition of a bipartite chart has an even period.

b)

i)

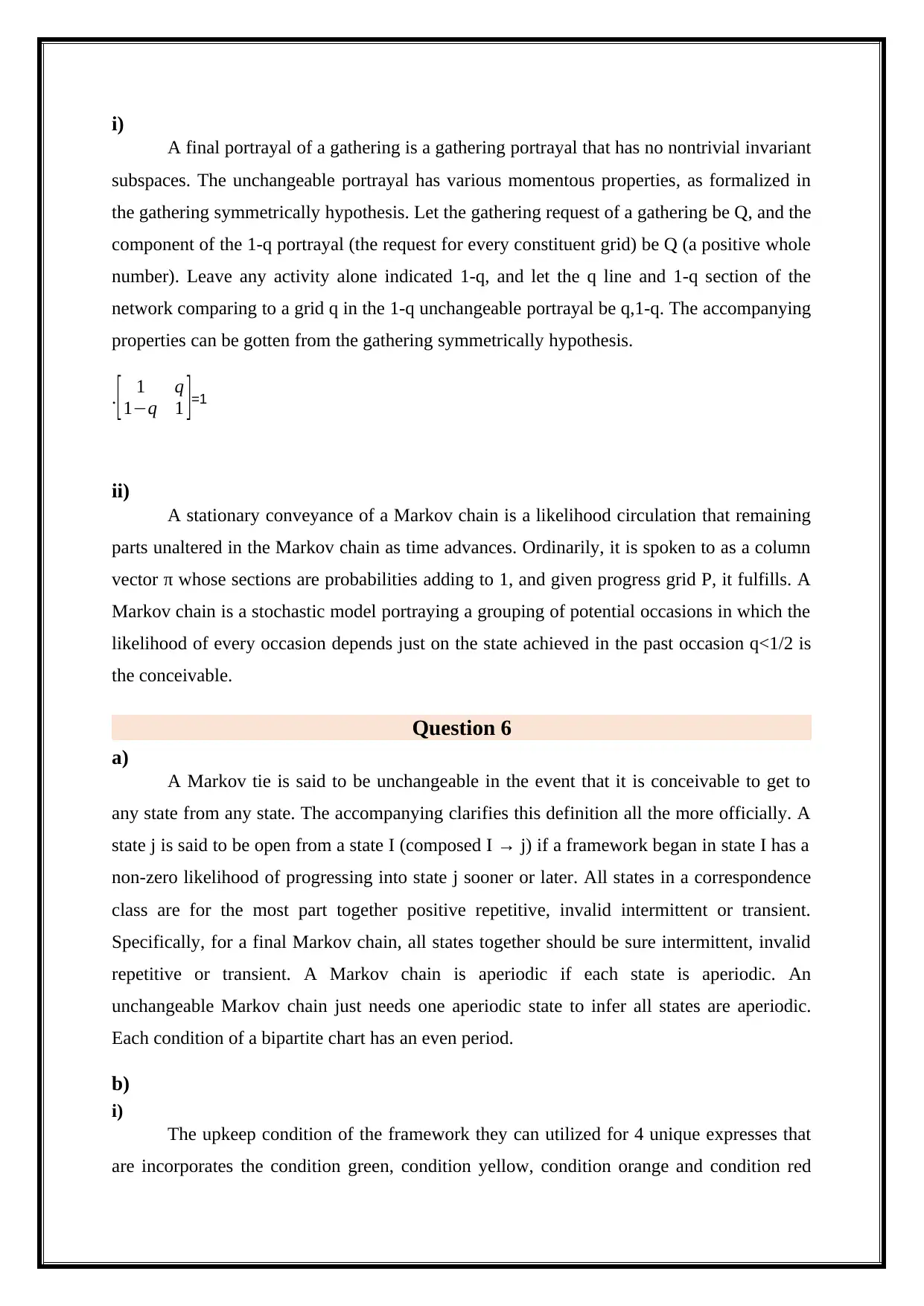

The upkeep condition of the framework they can utilized for 4 unique expresses that

are incorporates the condition green, condition yellow, condition orange and condition red

A final portrayal of a gathering is a gathering portrayal that has no nontrivial invariant

subspaces. The unchangeable portrayal has various momentous properties, as formalized in

the gathering symmetrically hypothesis. Let the gathering request of a gathering be Q, and the

component of the 1-q portrayal (the request for every constituent grid) be Q (a positive whole

number). Leave any activity alone indicated 1-q, and let the q line and 1-q section of the

network comparing to a grid q in the 1-q unchangeable portrayal be q,1-q. The accompanying

properties can be gotten from the gathering symmetrically hypothesis.

.[ 1 q

1−q 1 ]=1

ii)

A stationary conveyance of a Markov chain is a likelihood circulation that remaining

parts unaltered in the Markov chain as time advances. Ordinarily, it is spoken to as a column

vector π whose sections are probabilities adding to 1, and given progress grid P, it fulfills. A

Markov chain is a stochastic model portraying a grouping of potential occasions in which the

likelihood of every occasion depends just on the state achieved in the past occasion q<1/2 is

the conceivable.

Question 6

a)

A Markov tie is said to be unchangeable in the event that it is conceivable to get to

any state from any state. The accompanying clarifies this definition all the more officially. A

state j is said to be open from a state I (composed I → j) if a framework began in state I has a

non-zero likelihood of progressing into state j sooner or later. All states in a correspondence

class are for the most part together positive repetitive, invalid intermittent or transient.

Specifically, for a final Markov chain, all states together should be sure intermittent, invalid

repetitive or transient. A Markov chain is aperiodic if each state is aperiodic. An

unchangeable Markov chain just needs one aperiodic state to infer all states are aperiodic.

Each condition of a bipartite chart has an even period.

b)

i)

The upkeep condition of the framework they can utilized for 4 unique expresses that

are incorporates the condition green, condition yellow, condition orange and condition red

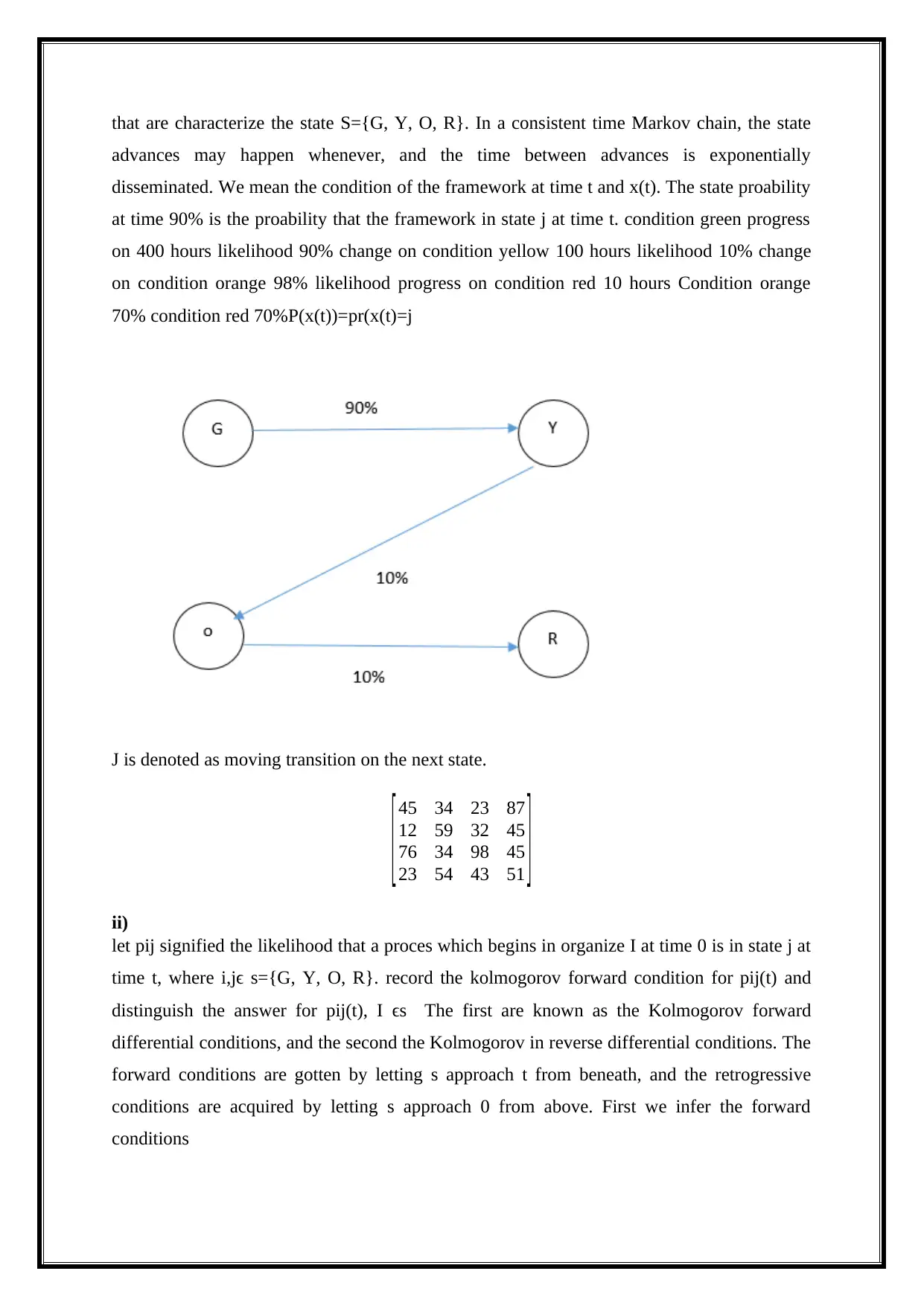

that are characterize the state S={G, Y, O, R}. In a consistent time Markov chain, the state

advances may happen whenever, and the time between advances is exponentially

disseminated. We mean the condition of the framework at time t and x(t). The state proability

at time 90% is the proability that the framework in state j at time t. condition green progress

on 400 hours likelihood 90% change on condition yellow 100 hours likelihood 10% change

on condition orange 98% likelihood progress on condition red 10 hours Condition orange

70% condition red 70%P(x(t))=pr(x(t)=j

J is denoted as moving transition on the next state.

[45 34

12 59

23 87

32 45

76 34

23 54

98 45

43 51 ]

ii)

let pij signified the likelihood that a proces which begins in organize I at time 0 is in state j at

time t, where i,jϵ s={G, Y, O, R}. record the kolmogorov forward condition for pij(t) and

distinguish the answer for pij(t), I ϵs The first are known as the Kolmogorov forward

differential conditions, and the second the Kolmogorov in reverse differential conditions. The

forward conditions are gotten by letting s approach t from beneath, and the retrogressive

conditions are acquired by letting s approach 0 from above. First we infer the forward

conditions

advances may happen whenever, and the time between advances is exponentially

disseminated. We mean the condition of the framework at time t and x(t). The state proability

at time 90% is the proability that the framework in state j at time t. condition green progress

on 400 hours likelihood 90% change on condition yellow 100 hours likelihood 10% change

on condition orange 98% likelihood progress on condition red 10 hours Condition orange

70% condition red 70%P(x(t))=pr(x(t)=j

J is denoted as moving transition on the next state.

[45 34

12 59

23 87

32 45

76 34

23 54

98 45

43 51 ]

ii)

let pij signified the likelihood that a proces which begins in organize I at time 0 is in state j at

time t, where i,jϵ s={G, Y, O, R}. record the kolmogorov forward condition for pij(t) and

distinguish the answer for pij(t), I ϵs The first are known as the Kolmogorov forward

differential conditions, and the second the Kolmogorov in reverse differential conditions. The

forward conditions are gotten by letting s approach t from beneath, and the retrogressive

conditions are acquired by letting s approach 0 from above. First we infer the forward

conditions

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

For that t-s small a positive pi , j(t-s) can be expressed as (t-s) yi , j+o(t-s) for k=j.

The rate at which advances happen into state j at time t and the subsequent term is the

rate at which changes happen out of state j. In this way the distinction of these terms is the net

rate at which advances happen into j, which is the rate at which Pij (t) is expanding at time t.

c)

i)

The transition probability matrix is,

[100 50

50 0

50 0

50 0

0 200

0 0

100 100

0 100 ]

We can take the probabilities are,

P( X 4=3/ X3=2)= P23=50

P(X 4=3/X3=3)= P33=100

ii)

The rate at which advances happen into state j at time t and the subsequent term is the

rate at which changes happen out of state j. In this way the distinction of these terms is the net

rate at which advances happen into j, which is the rate at which Pij (t) is expanding at time t.

c)

i)

The transition probability matrix is,

[100 50

50 0

50 0

50 0

0 200

0 0

100 100

0 100 ]

We can take the probabilities are,

P( X 4=3/ X3=2)= P23=50

P(X 4=3/X3=3)= P33=100

ii)

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

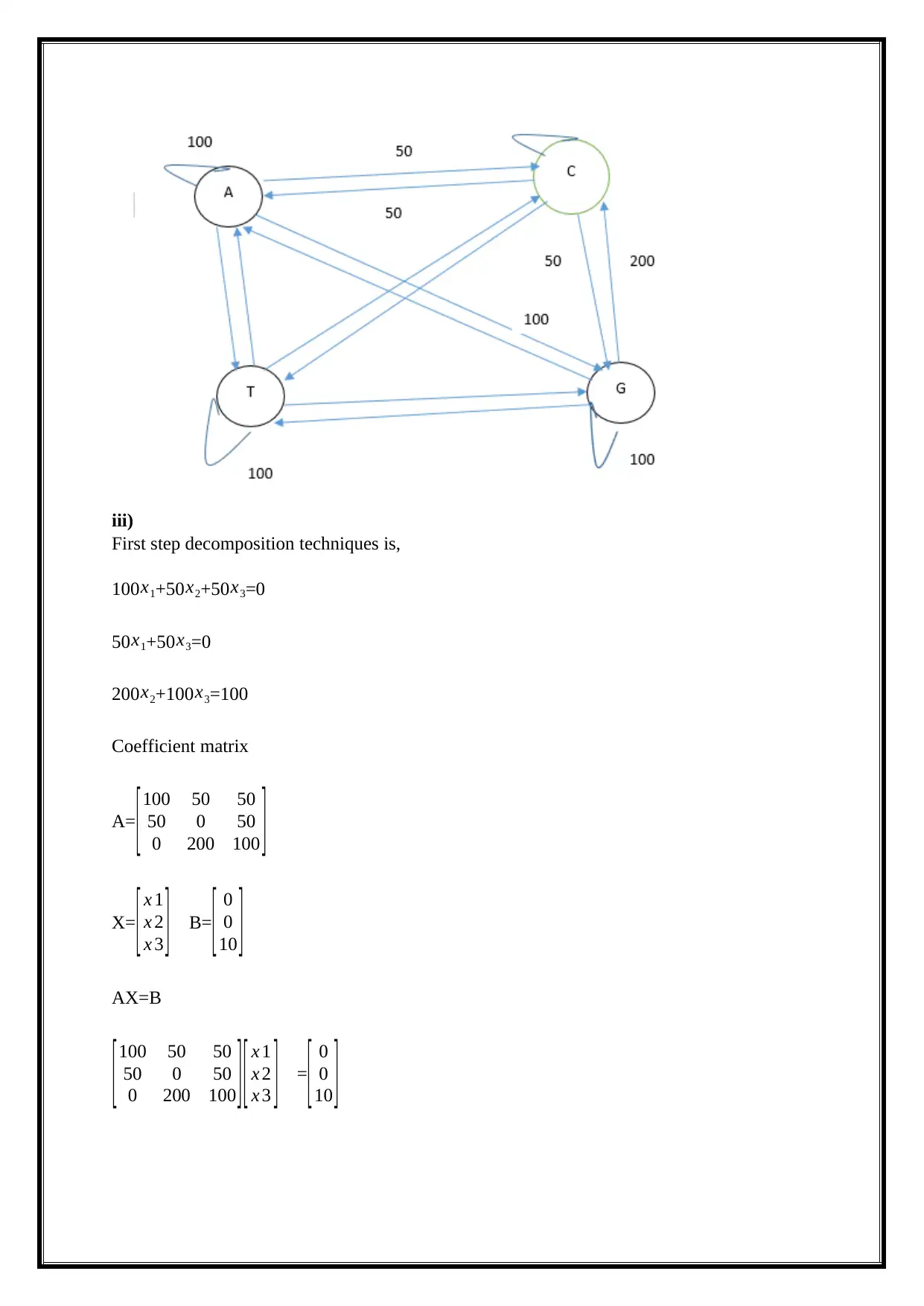

iii)

First step decomposition techniques is,

100x1+50x2+50x3=0

50 x1+50 x3=0

200x2+100 x3=100

Coefficient matrix

A= [100 50 50

50 0 50

0 200 100 ]

X= [ x 1

x 2

x 3 ] B=

[ 0

0

10 ]

AX=B

[ 100 50 50

50 0 50

0 200 100 ][ x 1

x 2

x 3 ] =

[ 0

0

10 ]

First step decomposition techniques is,

100x1+50x2+50x3=0

50 x1+50 x3=0

200x2+100 x3=100

Coefficient matrix

A= [100 50 50

50 0 50

0 200 100 ]

X= [ x 1

x 2

x 3 ] B=

[ 0

0

10 ]

AX=B

[ 100 50 50

50 0 50

0 200 100 ][ x 1

x 2

x 3 ] =

[ 0

0

10 ]

[ 0 50 50

50 0 50

0 200 0 ]

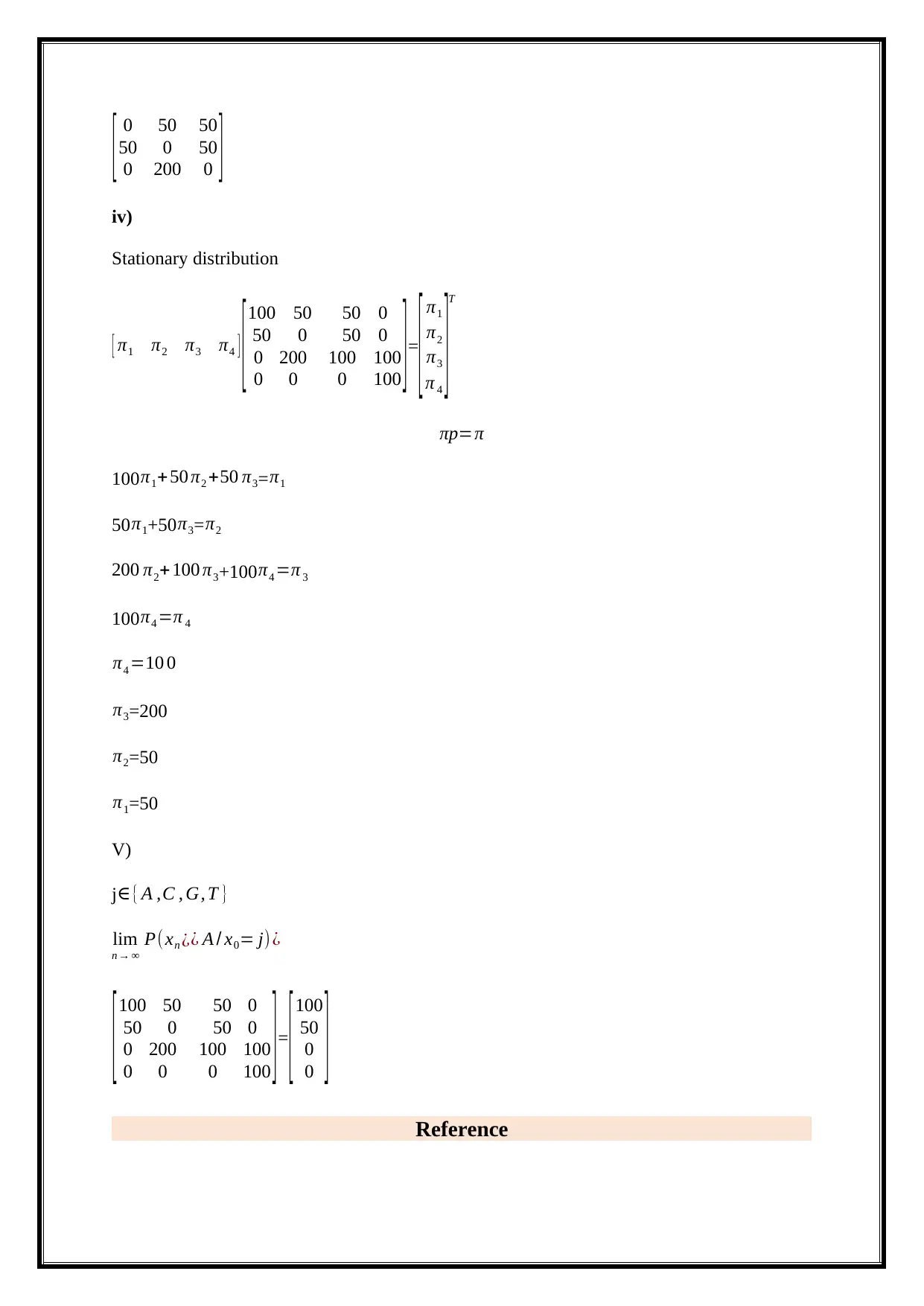

iv)

Stationary distribution

[ π1 π2 π3 π4 ] [ 100 50

50 0

50 0

50 0

0 200

0 0

100 100

0 100 ]=

[ π1

π2

π3

π 4

]T

πp=π

100 π1+50 π2 +50 π3= π1

50 π1+50π3= π2

200 π2+100 π3+100 π4 =π 3

100π4 =π 4

π4 =10 0

π3=200

π2=50

π1=50

V)

j∈{ A ,C , G, T }

lim

n → ∞

P(xn ¿¿ A / x0= j) ¿

[100 50

50 0

50 0

50 0

0 200

0 0

100 100

0 100 ]=

[100

50

0

0 ] Reference

50 0 50

0 200 0 ]

iv)

Stationary distribution

[ π1 π2 π3 π4 ] [ 100 50

50 0

50 0

50 0

0 200

0 0

100 100

0 100 ]=

[ π1

π2

π3

π 4

]T

πp=π

100 π1+50 π2 +50 π3= π1

50 π1+50π3= π2

200 π2+100 π3+100 π4 =π 3

100π4 =π 4

π4 =10 0

π3=200

π2=50

π1=50

V)

j∈{ A ,C , G, T }

lim

n → ∞

P(xn ¿¿ A / x0= j) ¿

[100 50

50 0

50 0

50 0

0 200

0 0

100 100

0 100 ]=

[100

50

0

0 ] Reference

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

1 out of 12

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2026 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.