MTEST Test Design and Analysis Report: Software Testing

Added on 2023/03/31


Report

AI Summary

This report provides a comprehensive analysis of MTEST test design and software testing methodologies. It begins by examining real-world examples of software failures, such as the London air traffic control incident, highlighting the importance of thorough testing. The report then details the identification of test cases based on the software's requirements, utilizing strategies like negative testing, error guessing, boundary value analysis, and equivalence class partitioning. Specific test cases are outlined in a table, covering various scenarios. The report further elaborates on boundary value analysis, identifying boundary values for the application and detailing associated test cases. Additionally, it discusses error guessing test cases, emphasizing the role of the tester's experience. The report concludes by referencing relevant literature on software testing and analysis.

Running head: MTEST TEST DESIGN AND ANALYSIS

MTEST Test Design and Analysis

Name of the Student

Name of the University

Author Note


TEST CASE IDENTIFICATION FOR MTEST

Question 1: Real world example of software failure

Software failures can stem from a range of factors. In most cases they result from repeated changes made without any plan for follow-up testing (Grottke et al., 2015). Security also plays a vital role and is always under scrutiny: when software is built without closing its loopholes, attackers waste no time in exploiting the vulnerabilities, causing damage to both the end user and the service provider (Kanewala, Bieman & Ben-Hur, 2016). Glitches and incomplete builds of software programs also produce erroneous output.

Flights in the aviation industry are generally cancelled because of bad weather, not software. In December 2014, however, an air traffic control (ATC) centre was compelled to close the airspace over London when the software that manages departures and arrivals began to malfunction. Although the software was repaired and brought back up quickly, the consequences were major and affected flights widely. Heathrow reported the cancellation of more than 50 flights, and many flights had to turn back to their originating locations.

The incident was attributed to a technical problem at the Swanwick air traffic control centre in England, a centre known for suffering one issue after another. NATS, the responsible governing body, had to apologise for the incident. Although the official NATS report blamed a power outage, other reports mentioned a bug in the software responsible for sequencing landings and take-offs, one that could have been prevented had better software testing been conducted before implementation.


Question 2: Identifying the test cases according to the scenarios created based on requirements of the software

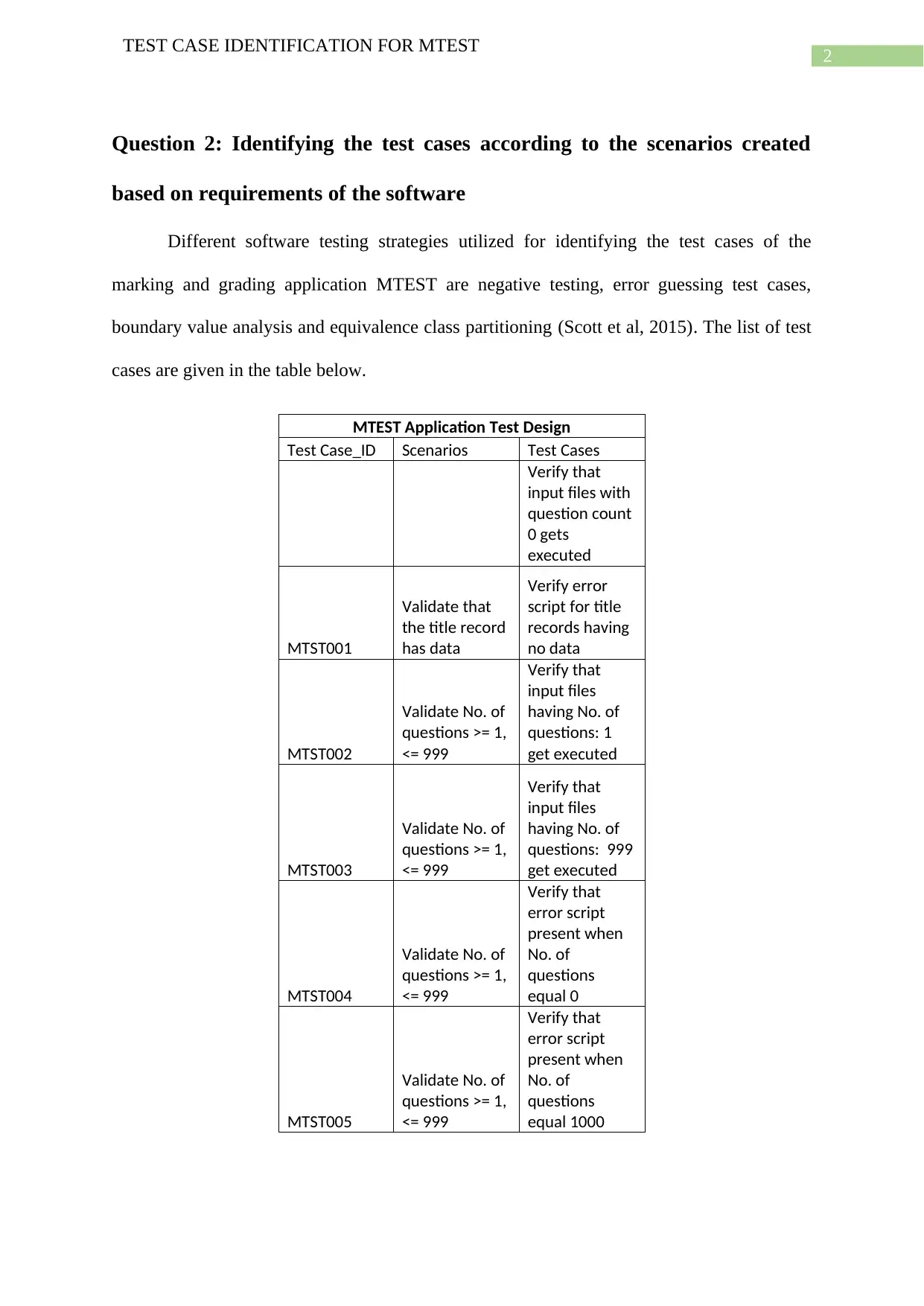

The software testing strategies used to identify test cases for the marking and grading application MTEST are negative testing, error guessing, boundary value analysis and equivalence class partitioning (Scott et al., 2015). The test cases are listed in the table below.

MTEST Application Test Design

| Test Case_ID | Scenarios | Test Cases |
| --- | --- | --- |
| | | Verify that input files with question count 0 get executed |
| MTST001 | Validate that the title record has data | Verify error script for title records having no data |
| MTST002 | Validate No. of questions >= 1, <= 999 | Verify that input files having No. of questions: 1 get executed |
| MTST003 | Validate No. of questions >= 1, <= 999 | Verify that input files having No. of questions: 999 get executed |
| MTST004 | Validate No. of questions >= 1, <= 999 | Verify that an error script is present when No. of questions equals 0 |
| MTST005 | Validate No. of questions >= 1, <= 999 | Verify that an error script is present when No. of questions equals 1000 |
| MTST006 | Validate record set count in columns 10-59 | Verify that one record is present for questions 1-50 in columns 10-59 |
| MTST007 | Validate record set count in columns 10-59 | Verify that two records are present for questions 51-100 in columns 10-59 |
| MTST008 | Validate record set count in columns 10-59 | Verify that three records are present for questions 101-150 in columns 10-59 |
| MTST009 | Validate record set count in columns 10-59 | Verify that the maximum supported number of records is present for 999 questions in columns 10-59 |
| MTST010 | Validate student name in columns 1 to 9 of student records | Verify that student names are present in columns 1 to 9 of student records |
| MTST011 | Validate column 80 data for student and question records | Verify that an error script is present for input files having wrong column 80 data for student and question records |
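Rows MTST006 to MTST009 suggest that each answer record carries up to 50 questions in columns 10-59, so the expected number of records grows with the question count. The following is a minimal sketch under that reading of the table; `records_needed` and `QUESTIONS_PER_RECORD` are hypothetical names introduced for illustration and are not part of MTEST itself.

```python
import math

# Assumption: columns 10-59 inclusive hold one answer each, i.e. 50 per record.
QUESTIONS_PER_RECORD = 59 - 10 + 1


def records_needed(question_count: int) -> int:
    """Return how many answer records a given question count should produce."""
    # The valid range 1-999 comes from the "No. of questions" scenario in the table.
    if not 1 <= question_count <= 999:
        raise ValueError(f"question count out of range: {question_count}")
    return math.ceil(question_count / QUESTIONS_PER_RECORD)


# Print the expected record count on either side of each 50-question step.
for count in (1, 50, 51, 100, 101, 150, 999):
    print(count, records_needed(count))
```

A tester could compare these expected counts with the number of answer records actually present in an input file when executing MTST006 to MTST009.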


Question 3: Test Cases on BVA (Boundary Value Analysis)

Boundary Value Analysis (BVA) is a software testing strategy that tests an application with values at each end of the equivalence class partitions defined by its requirement specifications (Niiranen et al., 2017). In this way the strategy examines the reliability of the software at the edges of its valid input ranges.

The boundary values identified for the application MTEST are 1000, 999, 1 and 0 (Smelyanskiy, Sawaya, & Aspuru-Guzik, 2016). Since the number of questions must lie between 1 and 999, the values 1 and 999 sit on the valid boundaries, while 0 and 1000 fall just outside them. The test cases identified with this testing strategy are:

Verify that an error script is present when No. of questions equals 1000

Verify that input files having No. of questions: 999 get executed

Verify that input files having No. of questions: 1 get executed

Verify that an error script is present when No. of questions equals 0
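The four boundary cases above can be derived mechanically from the valid range. The sketch below is illustrative only: `boundary_values` and `is_valid_question_count` are hypothetical helpers, and the validator merely stands in for whatever check MTEST actually performs on the number of questions.

```python
def boundary_values(low: int, high: int) -> dict:
    """Map each boundary value of the inclusive range [low, high] to the
    outcome expected from a correct validator (True means accepted)."""
    return {low - 1: False, low: True, high: True, high + 1: False}


def is_valid_question_count(count: int) -> bool:
    """Stand-in validator for the rule: No. of questions >= 1 and <= 999."""
    return 1 <= count <= 999


# Check every boundary value against the stand-in validator.
for value, expected in boundary_values(1, 999).items():
    outcome = is_valid_question_count(value)
    status = "pass" if outcome == expected else "FAIL"
    print(f"questions={value}: accepted={outcome}, expected={expected} [{status}]")
```

Keeping the expected outcome next to each value makes it clear that 0 and 1000 are negative tests, while 1 and 999 are positive ones.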

Question 4: Error guessing test cases for MTEST marking and grading application

Error guessing is a testing strategy in which the tester relies on their own skills and experience to anticipate where errors in a software program are likely to occur (Qu et al., 2015). The experience and level of understanding of the test analyst therefore play a vital role. The error guessing test cases obtained for the MTEST grading application are:

Verify that the maximum supported number of records is present for 999 questions in columns 10-59

Verify that an error script is present for input files having wrong column 80 data for student and question records
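To make the column 80 case concrete, the sketch below lists a few malformed records a tester might guess at and checks each against an expected column 80 value. It is only an illustration: the helper names are hypothetical, and the code "3" for student records is a placeholder assumption, since this report does not state which values column 80 should hold.

```python
def column_80(record: str) -> str:
    """Return the character in column 80 (1-based), or '' if the record is shorter."""
    return record[79] if len(record) >= 80 else ""


def has_expected_code(record: str, expected: str) -> bool:
    """True when column 80 of the record holds the expected code."""
    return column_80(record) == expected


# Assumption: "3" marks a student record; substitute the code from the real specification.
STUDENT_CODE = "3"

# Inputs chosen by guessing where a fixed-width parser is likely to break.
guessed_records = {
    "valid student record": "JONES".ljust(79) + STUDENT_CODE,
    "wrong code in column 80": "JONES".ljust(79) + "9",
    "blank column 80": "JONES".ljust(80),
    "truncated record": "JONES",
    "empty record": "",
}

for label, record in guessed_records.items():
    print(f"{label}: accepted={has_expected_code(record, STUDENT_CODE)}")
```

Only the first record should be accepted; each of the others represents a case where the application would be expected to report an error.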


References

Grottke, M., Kim, D. S., Mansharamani, R., Nambiar, M., Natella, R., & Trivedi, K. S. (2015). Recovery from software failures caused by mandelbugs. IEEE Transactions on Reliability, 65(1), 70-87.

Kanewala, U., Bieman, J. M., & Ben-Hur, A. (2016). Predicting metamorphic relations for testing scientific software: a machine learning approach using graph kernels. Software Testing, Verification and Reliability, 26(3), 245-269.

Niiranen, J., Kiendl, J., Niemi, A. H., & Reali, A. (2017). Isogeometric analysis for sixth-order boundary value problems of gradient-elastic Kirchhoff plates. Computer Methods in Applied Mechanics and Engineering, 316, 328-348.

Qu, M., Cui, N., Zou, B., & Wu, X. (2015, January). An Embedded Software Testing Requirements Modeling Tool Describing Static and Dynamic Characteristics. In 2015 International Symposium on Computers & Informatics. Atlantis Press.

Scott, C., Wundsam, A., Raghavan, B., Panda, A., Or, A., Lai, J., ... & Acharya, H. B. (2015). Troubleshooting blackbox SDN control software with minimal causal sequences. ACM SIGCOMM Computer Communication Review, 44(4), 395-406.

Smelyanskiy, M., Sawaya, N. P., & Aspuru-Guzik, A. (2016). qHiPSTER: the quantum high performance software testing environment. arXiv preprint arXiv:1601.07195.
