Multiple Linear Regression Analysis of Employee Engagement

Added on 2019/09/18

Homework Assignment

AI Summary

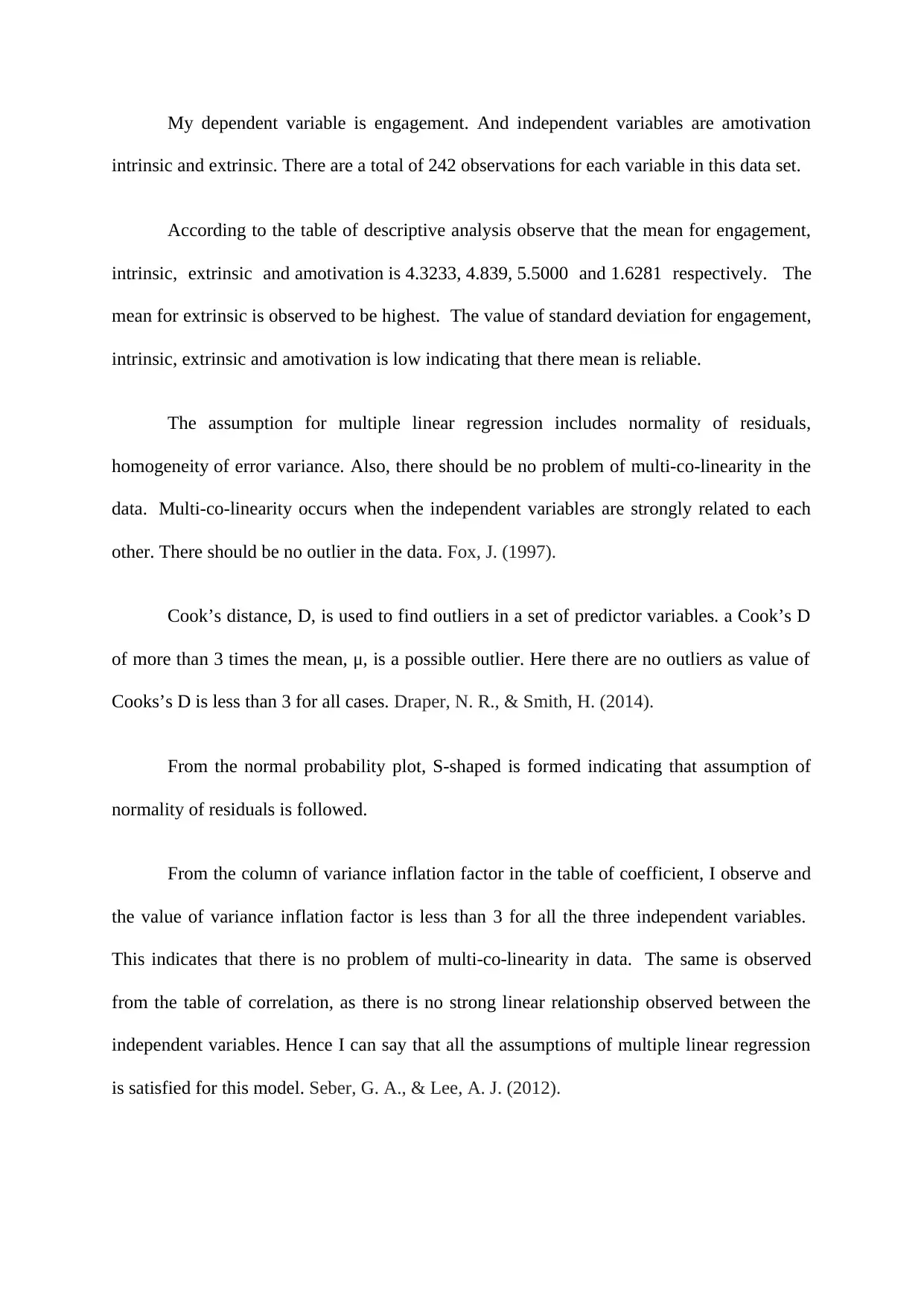

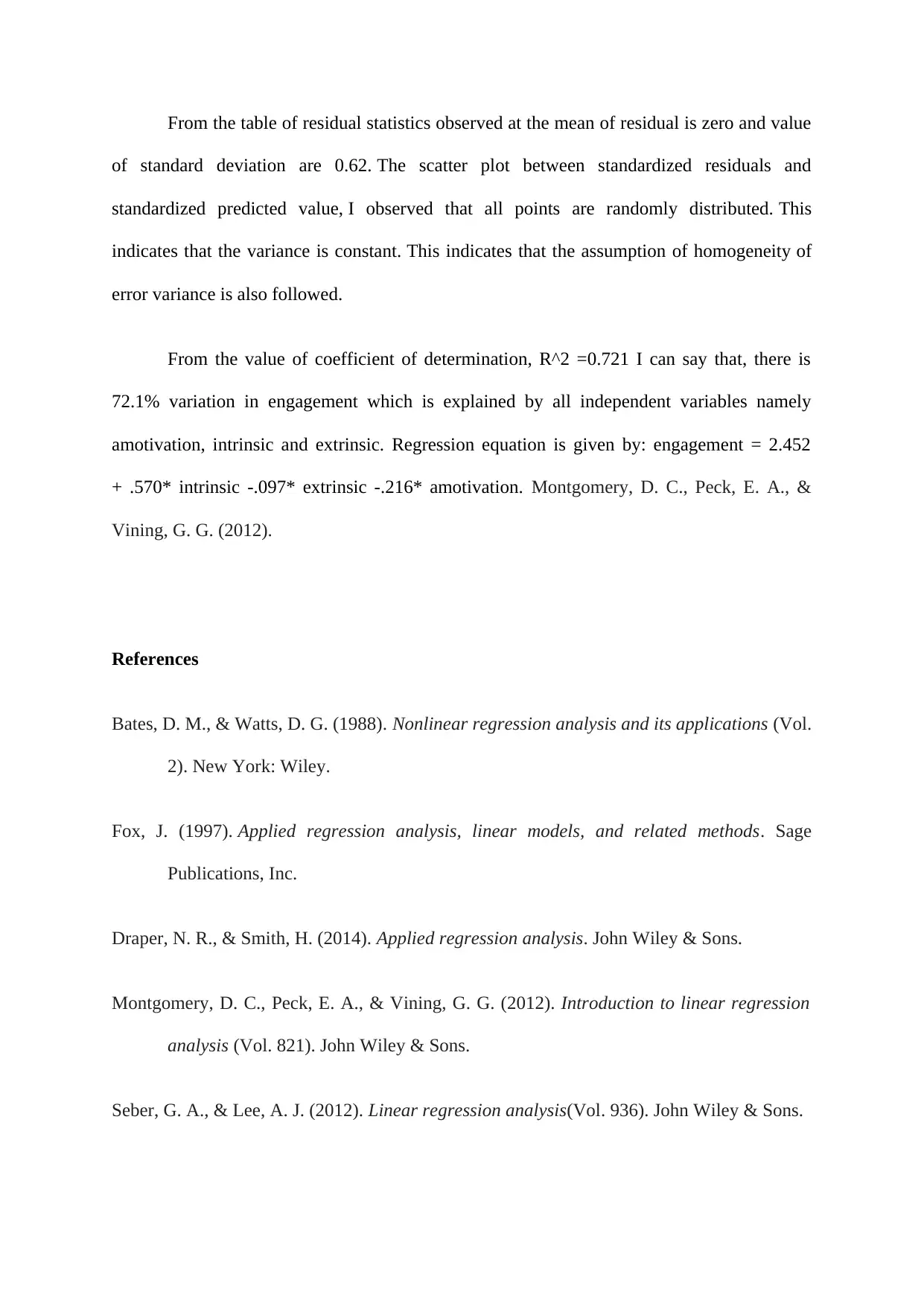

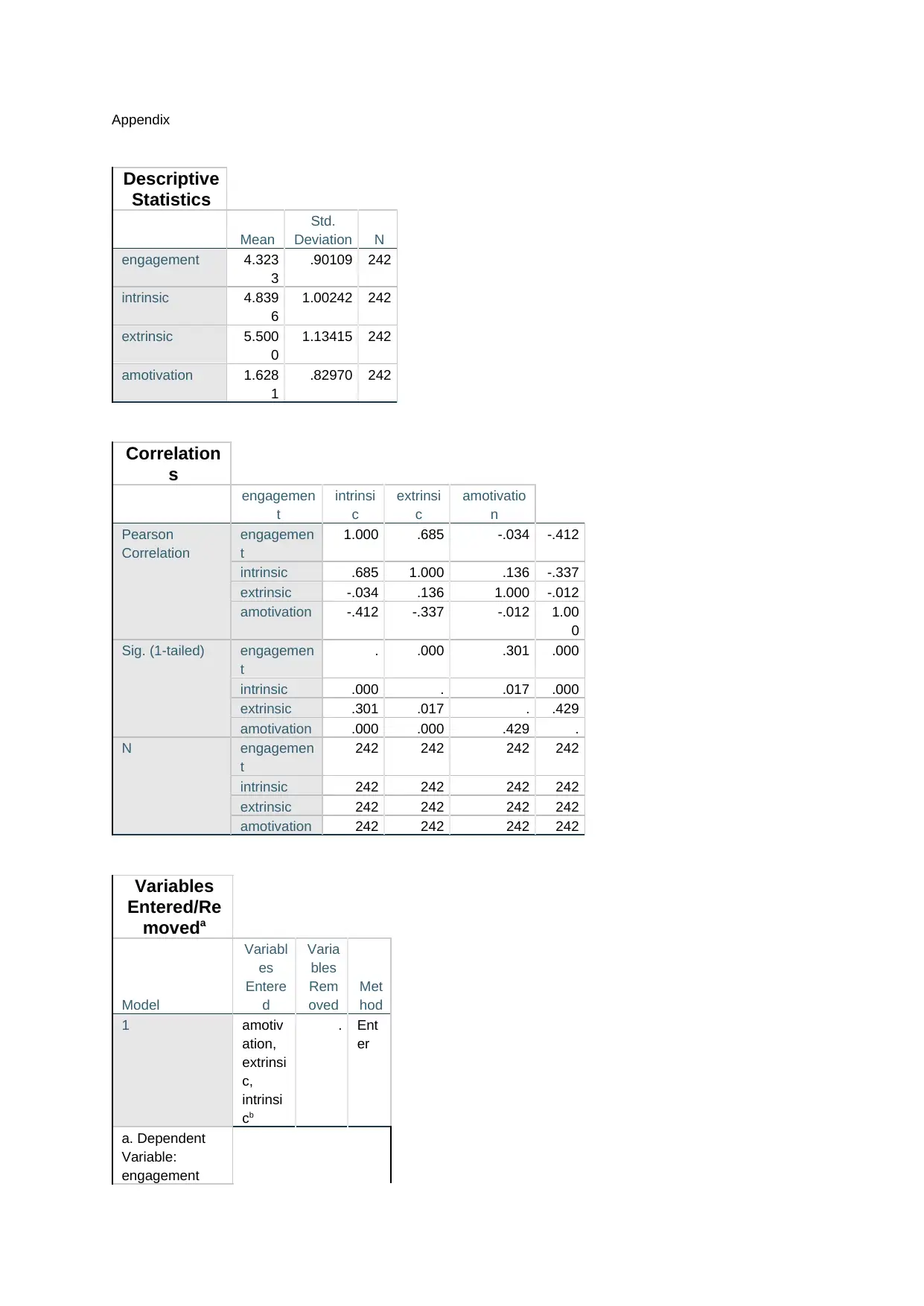

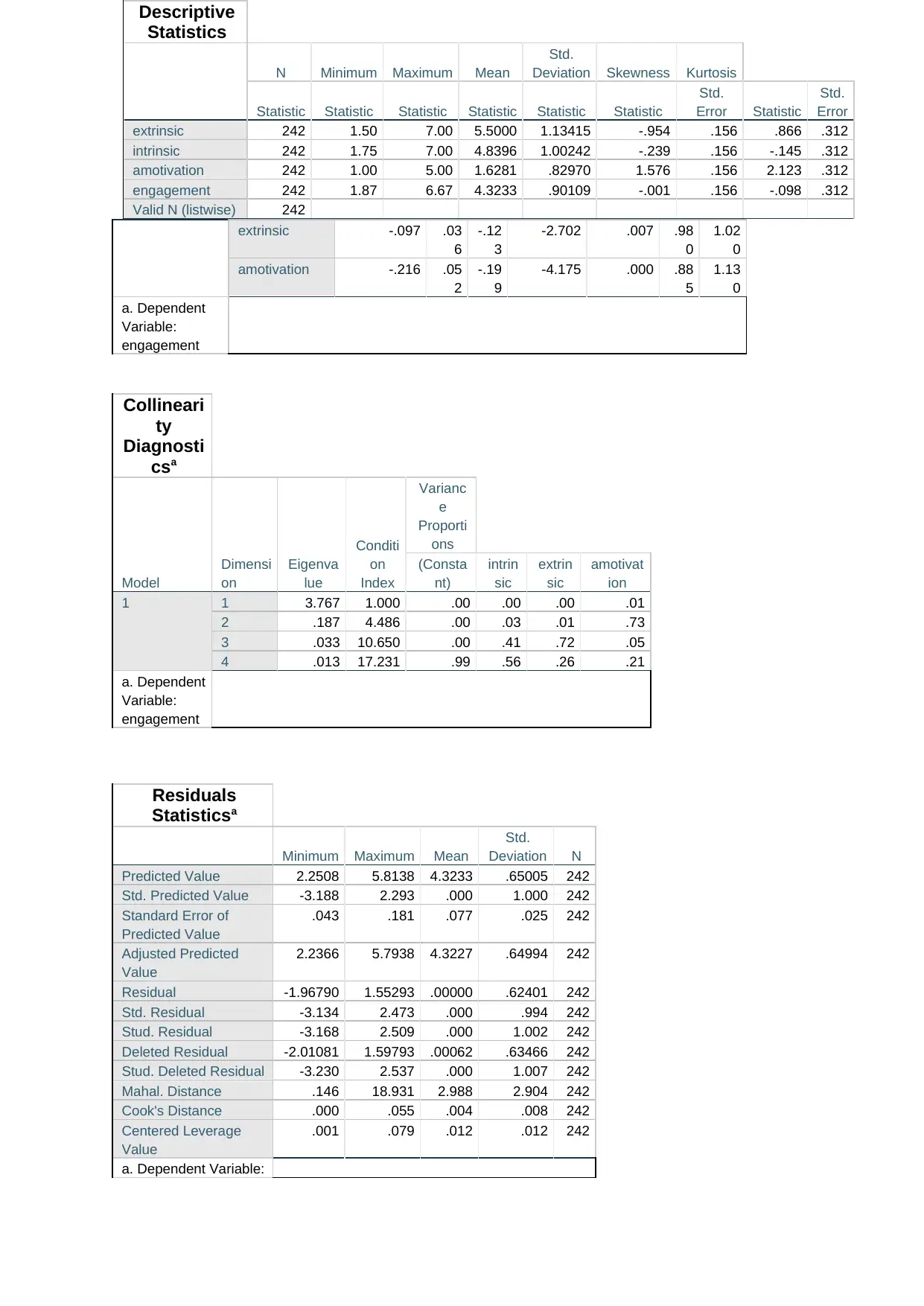

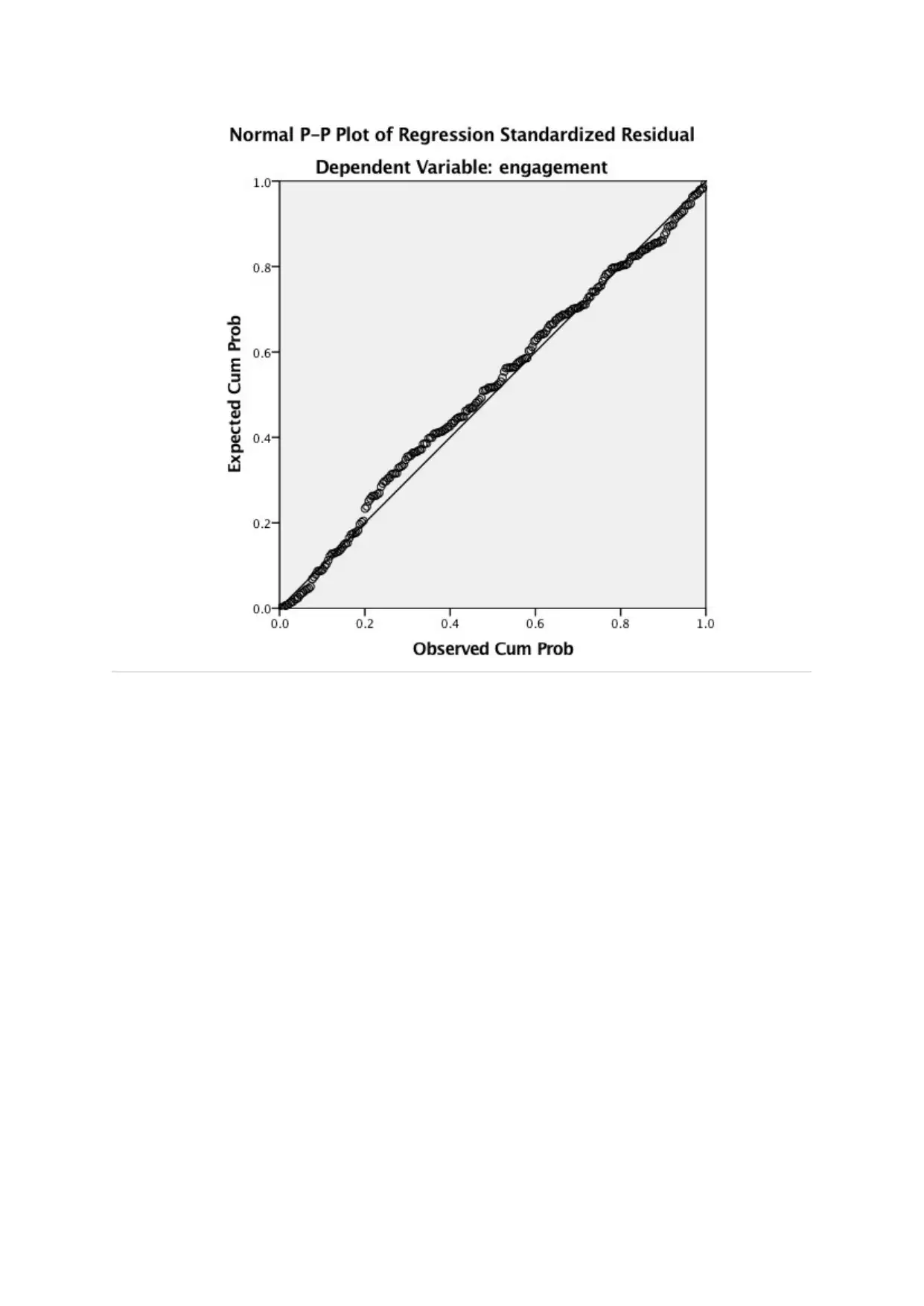

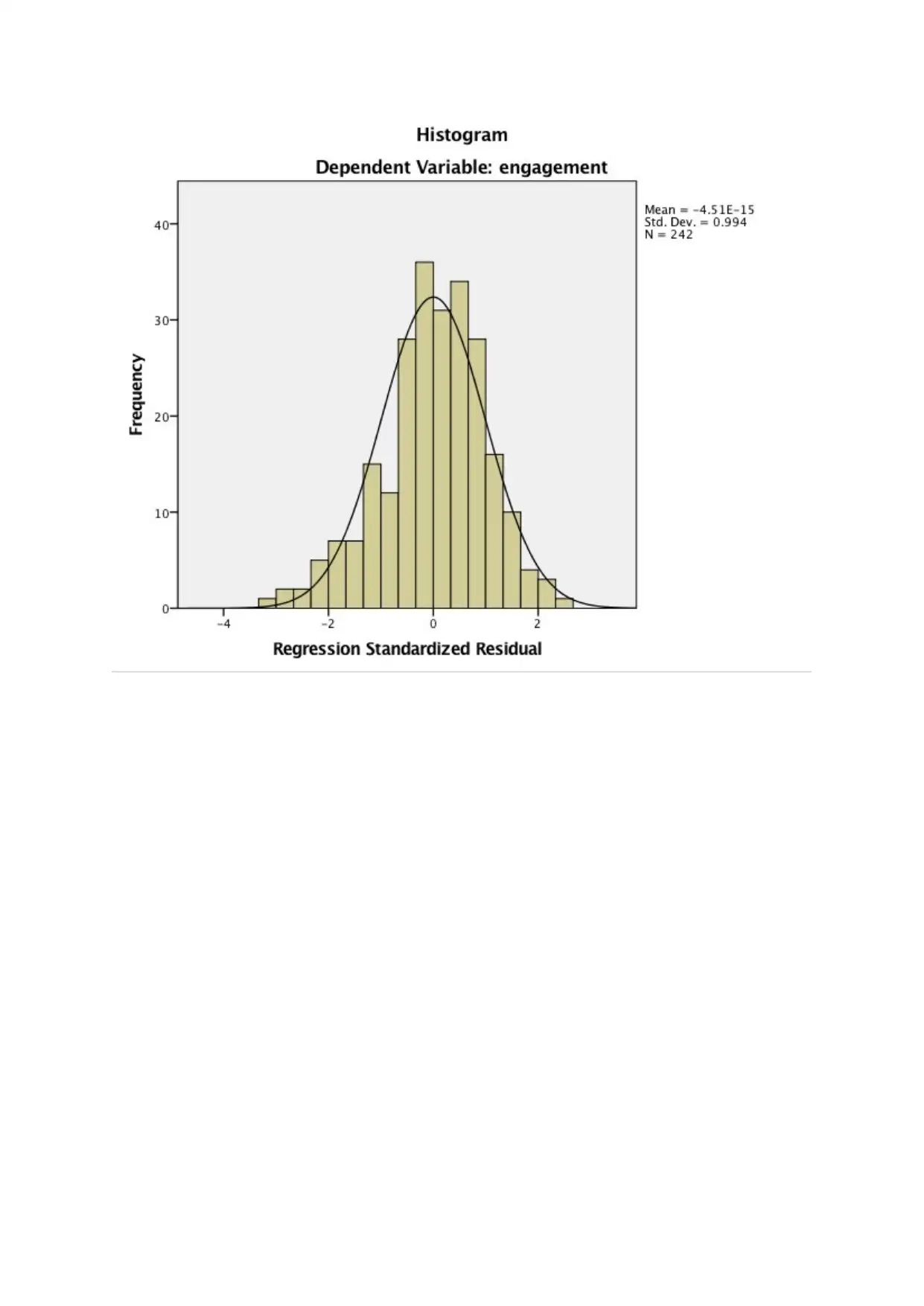

This assignment presents a multiple linear regression analysis of the factors that influence employee engagement. The dataset contains 242 observations, with engagement as the dependent variable and intrinsic motivation, extrinsic motivation, and amotivation as the independent variables. Descriptive statistics report the mean and standard deviation of each variable. The study then checks the assumptions of multiple linear regression (normality of residuals, homogeneity of error variance, and absence of multicollinearity) and screens for influential observations, using Cook's distance, normal probability plots, variance inflation factors (VIFs), and correlation analysis. According to these diagnostics, all assumptions are met, and the model explains 72.1% of the variation in engagement. The fitted regression equation is reported: intrinsic motivation and amotivation contribute significantly, and extrinsic motivation has a significant negative effect. The findings are supported by statistical tables and references.
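To make the diagnostics above concrete, here is a minimal sketch in pure standard-library Python that fits an ordinary least squares model with three predictors and computes R², VIFs, and Cook's distances. It runs on synthetic data generated inside the script: the helper `make_synthetic_data` and its coefficients are hypothetical illustration values, not the assignment's dataset, so the printed numbers say nothing about the study's results.

```python
import random
from statistics import mean


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def xtx_inverse(X):
    """Return (X'X)^-1 by solving X'X @ col_j = e_j for each unit vector e_j."""
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    cols = [solve(xtx, [1.0 if k == j else 0.0 for k in range(p)]) for j in range(p)]
    return [[cols[j][i] for j in range(p)] for i in range(p)]


def ols(X, y):
    """Return (beta, fitted, r2) for a design matrix X that includes an intercept column."""
    inv = xtx_inverse(X)
    p = len(X[0])
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    beta = [sum(inv[i][j] * xty[j] for j in range(p)) for i in range(p)]
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    ybar = mean(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - ybar) ** 2 for yi in y)
    return beta, fitted, 1 - ss_res / ss_tot


def vif(predictors):
    """VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing predictor j on the others."""
    k = len(predictors[0])
    out = []
    for j in range(k):
        X = [[1.0] + [row[i] for i in range(k) if i != j] for row in predictors]
        _, _, r2 = ols(X, [row[j] for row in predictors])
        out.append(1 / (1 - r2))
    return out


def cooks_distance(X, y):
    """D_i = e_i^2 / (p * s^2) * h_ii / (1 - h_ii)^2, with h_ii the leverage of row i."""
    n, p = len(X), len(X[0])
    inv = xtx_inverse(X)
    _, fitted, _ = ols(X, y)
    resid = [yi - fi for yi, fi in zip(y, fitted)]
    s2 = sum(e * e for e in resid) / (n - p)
    h = [sum(row[i] * inv[i][j] * row[j] for i in range(p) for j in range(p)) for row in X]
    return [e * e / (p * s2) * hi / (1 - hi) ** 2 for e, hi in zip(resid, h)]


def make_synthetic_data(n=242, seed=0):
    """Hypothetical data: three predictors on a 1-7 scale plus a noisy response.
    The coefficients (2.0, 0.5, 0.3, -0.4) are arbitrary, not estimates from the study."""
    rng = random.Random(seed)
    predictors, y = [], []
    for _ in range(n):
        x1, x2, x3 = (rng.uniform(1, 7) for _ in range(3))
        predictors.append([x1, x2, x3])
        y.append(2.0 + 0.5 * x1 + 0.3 * x2 - 0.4 * x3 + rng.gauss(0, 0.5))
    return predictors, y


predictors, engagement = make_synthetic_data()
X = [[1.0] + row for row in predictors]  # prepend the intercept column
beta, _, r2 = ols(X, engagement)
vifs = vif(predictors)
cooks = cooks_distance(X, engagement)

print("coefficients (b0, b1, b2, b3):", [round(b, 3) for b in beta])
print("R^2:", round(r2, 3))
print("VIF:", [round(v, 2) for v in vifs])
print("max Cook's D:", round(max(cooks), 4), "| 4/n cutoff:", round(4 / len(cooks), 4))
```

Because the synthetic predictors are drawn independently, the VIFs come out close to 1. Cut-offs such as VIF > 10 or Cook's D > 4/n are common rules of thumb rather than fixed standards, and a statistics package would normally be used in practice instead of this hand-rolled solver.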
