Data Analysis of Postgraduate Applicants Using Serverless Cloud Tech

Added on 2023/01/16

AI Summary

This project focuses on developing a data analytics solution for universities to predict the behavior of postgraduate applicants. It addresses the challenges universities face in managing large applicant datasets, especially after the introduction of PG loans. The project aims to build a data analytics dashboard that integrates data from sources like UCAS, HESA, and universities, using a non-relational architecture and serverless cloud technology (AWS). Key objectives include developing a visual environment for predictive analysis, utilizing algorithms to understand applicant demographics, and recommending recruitment strategies. The project explores the advantages of serverless technology and cloud computing for data storage, processing, and real-time analytics. It also covers research questions related to data analytics tools, AWS, and the relationship between data analysis and applicant prediction. The project includes a literature review covering cloud computing, data analysis, and machine learning techniques like supervised learning. The goal is to create a dashboard that enables universities to make data-driven decisions to improve postgraduate recruitment outcomes.

Data Analysis of Potential Postgraduate Applicants Using AWS Serverless Cloud Technology

ABSTRACT

This report summarises the unprecedented growth of data, whether centralised or incoming, and the difficulty of handling very large data sets with relational database management systems. The project is concerned with big data technology such as Amazon Web Services (AWS), which collects and stores large amounts of data on cloud servers in order to increase an enterprise's storage and processing capacity and to derive value through big data analytics. The continuous flow of large volumes of data is rapidly changing what enterprises demand in the global marketplace. This brings new challenges, but they can be managed with suitable analytical tools and platforms. The project therefore selects an appropriate architecture for storing, processing and visualising data.

Contents

ABSTRACT

CHAPTER-1 INTRODUCTION

CHAPTER-2 LITERATURE REVIEW AND THEORETICAL BACKGROUND

CHAPTER-3 METHODOLOGY, TECHNOLOGY AND OUTCOME

CHAPTER-4 DESIGN, IMPLEMENTATION AND TESTING

CHAPTER-5 CONCLUSION AND RECOMMENDATION

REFERENCES

CHAPTER-1 INTRODUCTION

Data analysis is the process of examining data for the purpose of analytics, and it is often composed of several processes that perform different functions (Trudgian and Mirzaei, 2012). Data analytics concerns the whole business, because it helps to manage and control the behaviour of data generated by different activities.

This report is concerned with the functions performed by data analytics technology such as Amazon Web Services (AWS), and with the proper modelling of data. The project will help to identify the rate of potential applicants by analysing data with serverless cloud technology.

Aim

The aim is to provide a data analytics dashboard that universities can use to predict and analyse the behaviour of postgraduate applicants, by gathering datasets from different organisations such as UCAS, HESA and universities and integrating them in a non-relational architecture.

Background

Big data is a modern technology in the field of data analytics. It involves large amounts of data that must be collected, stored, maintained and then processed by software. The project first identifies the challenges that arise when collecting data and sharing files between devices. Big data technology makes it possible to modify, structure and maintain data while addressing privacy and security. It handles both structured and unstructured data, arranging the latter into a structured format.

Serverless technology is used here to identify potential applicants, and it supports scalability and flexibility in data management. AWS is considered an appropriate serverless platform that is applicable across different organisations. Furthermore, serverless processes can be represented through use cases. AWS components can be combined to achieve accurate results (Backes et al., 2019). This technology is generally used with the Python programming language. Using serverless technology reduces risks and threats

within data management, helping to maintain proper security and privacy. Furthermore, many organisations have adopted the General Data Protection Regulation (GDPR), which strengthens the essential provisions regarding personal information.
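As a minimal sketch of what a serverless function might look like in Python, the handler below counts applications per course in an incoming batch of records. The `lambda_handler(event, context)` signature follows the usual AWS Lambda convention for Python handlers; the event layout and the field names `records` and `course` are hypothetical assumptions for illustration, not the project's actual schema.

```python
from collections import Counter


def lambda_handler(event, context):
    """Count applications per course in a batch of applicant records.

    The event layout ({"records": [{"course": ...}, ...]}) is a
    hypothetical example, not the project's actual schema.
    """
    records = event.get("records", [])
    # Skip records that carry no course field rather than failing.
    counts = Counter(r["course"] for r in records if "course" in r)
    return {"statusCode": 200, "applications_per_course": dict(counts)}
```

Because the function keeps no state between calls, the platform can run as many copies as the incoming volume requires, which is the scalability property described above.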

Problem statement

The problem arose when the government introduced a new postgraduate (PG) loan in 2016. Its primary aim was to encourage growth in postgraduate enrolment numbers, and evidence of this is sought in domestic recruitment figures. On the other hand, universities face difficulties because they struggle to track postgraduate applicants properly (BaiJhaney and Wells, 2019), and it is very difficult to manage the large amount of recruitment data. That is why universities are planning to use modern serverless technology, which supports the analysis of large amounts of data.

Course leader

The research project focuses on improving the requirements of the business so that the problem can be identified at different levels. The main issue is that many overseas applications are received but do not materialise into enrolments. Applications arrive throughout the year, so it would be better to consider offers on an annual basis. Resolving this would allow overseas and multiple applications to be tracked together and would help to direct attention at the time of advertisement.

Objective

To build a data analytics visual environment that predicts postgraduate applicants from previous data and can be used for management decision-making.

To use algorithmic analysis to understand the demographics of applicants and recommend where to focus recruitment and advertisement for postgraduate applicants.

To review the best AWS technologies, tools and infrastructure for data analytics.

To develop a postgraduate recruiting dashboard that collects, transforms, stores and processes streaming data using the latest Amazon Web Services technologies.

To build a non-relational architecture for a real-time predictive analytics system that identifies applicants before they become students, using previous data statistics.

To integrate data between the various systems and store it in the cloud for processing and streaming.

To develop a dashboard for universities that predicts students' behaviour in real time, allowing management teams to make effective decisions based on those insights.

To design and develop the data analytics dashboard in a cloud service environment from a provider such as Amazon Web Services or Microsoft Azure.

Research questions

What are the different types of data analytics tools or platforms in use?

What is the concept of AWS as a modern technology?

What are the advantages and disadvantages of serverless technology in an organisation?

What is the relationship between data analytics and predicting potential applicants?

CHAPTER-2 LITERATURE REVIEW AND THEORETICAL BACKGROUND

Theme 1: Cloud computing

According to Church, Schmidt and Ajayi (2020), cloud computing is the on-demand availability of services, from applications to storage and processing power, and is used especially for data storage without direct management by the user. The term describes data centres made available to many users over the internet. Cloud computing services cover a wide range of options, from basic storage, networking and processing through to natural language processing. A fundamental concept behind cloud computing is that the location of the service, and details such as the hardware and operating system on which it runs, matter little to the user, which makes cloud services easier to run. Universities and other educational institutions are also adopting cloud computing services, which provide a better way to manage and store large amounts of data. Cloud computing is effective in terms of security and privacy, so a large number of institutions create their own cloud environments.

Over the past decade, interest in adopting cloud computing within organisations has grown. It promises to reshape the way organisations acquire and manage the resources they need. In line with the notion of shared services, cloud computing is considered an innovative model for IT infrastructure that lets an enterprise focus on its core activities, which supports productivity and profitability in the global marketplace. Adopting cloud computing can improve simplicity, scalability and flexibility, and these benefits in turn increase demand for cloud computing. It has recently been reported that 76% of large corporations and 60% of SMEs are adopting cloud services.

According to Moghaddam (2020), there are many definitions of cloud computing that are relevant to AWS technology. Cloud computing provides an efficient IT sourcing model for improving the performance of enterprise applications. It has broadened into an internet-based service offering that comprises large-scale data storage, hosting and network infrastructure. The definition also covers the cloud service consumer, to whom better services may be offered. Which model is used usually depends on the needs of the enterprise. With Software as a Service (SaaS), the consumer is given the capability to use enterprise applications that run on cloud infrastructure and perform multiple tasks.

Cloud services are deployed under different models. In a private cloud, the infrastructure is provisioned for exclusive use by a single enterprise, which may comprise multiple internal clients, and it may be owned, managed and operated by that enterprise. In a community cloud, on the other hand, the infrastructure is provisioned for exclusive use by a specific community. In this way, different enterprises can adopt cloud infrastructure and provide services in different ways. Large corporations have slack resources, both technical and financial, so it is becoming affordable for them to deploy private SaaS, IaaS and PaaS.

Theme 2: Analysis

This theme of the literature review is concerned with analysis. Authors describe how individuals understand the concept of cloud computing, which is sometimes regarded as a means of targeting a large number of organisations. The literature also analyses individual opinions and viewpoints on data mining. Business decision-making is considered one of the strongest strategic concerns, and analysis supports the development of decisions (Buure, 2020). When SMEs focus on adopting innovation in their business, they can identify individuals' opinions through data mining. This offers an implicit way to understand demand, trends and insights, and to uncover new opportunities from various perspectives.

To achieve the goals and objectives related to data mining, it is first important to predict behaviour using appropriate algorithms. Relating a problem to suitable algorithms is known as computational thinking. Computational thinking, which applies abstraction and algorithmic thinking, is used in this project to resolve data application problems.

In supervised learning, the user defines a set of inputs together with the expected outputs, and an effective learning algorithm must be chosen. The computer learns from these examples how to reproduce the outputs from the inputs. Supervised learning is used here for the analysis process. It first determines the features and labels in a large dataset (Ochara, 2020). In this case, sentiment scores are determined for data given in text format: each text is assigned a score value indicating a positive or negative result, and the machine learning process generates both negative and positive results, which meets the requirements of supervised learning. Features must usually be categorised by data type. Each character or word has a unique value that a machine cannot interpret directly, so in machine learning the features need to be converted into vectors, which the machine can then compute with easily.
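The conversion of text features into vectors can be sketched with a simple bag-of-words count. This is one common approach, chosen here as an assumption, since the report does not say which vectorisation was used. The function names and the sample sentences are made up for illustration.

```python
def build_vocabulary(documents):
    """Map each distinct lower-cased word to a column index."""
    words = sorted({w for doc in documents for w in doc.lower().split()})
    return {word: i for i, word in enumerate(words)}


def vectorise(document, vocabulary):
    """Return the word counts of one document as a fixed-length list."""
    vector = [0] * len(vocabulary)
    for word in document.lower().split():
        if word in vocabulary:  # words unseen in training are ignored
            vector[vocabulary[word]] += 1
    return vector


docs = ["good course good campus", "poor campus"]  # made-up sample text
vocab = build_vocabulary(docs)
vectors = [vectorise(d, vocab) for d in docs]
```

Every document becomes a list of the same length, so a learning algorithm can treat each position as one numeric feature.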

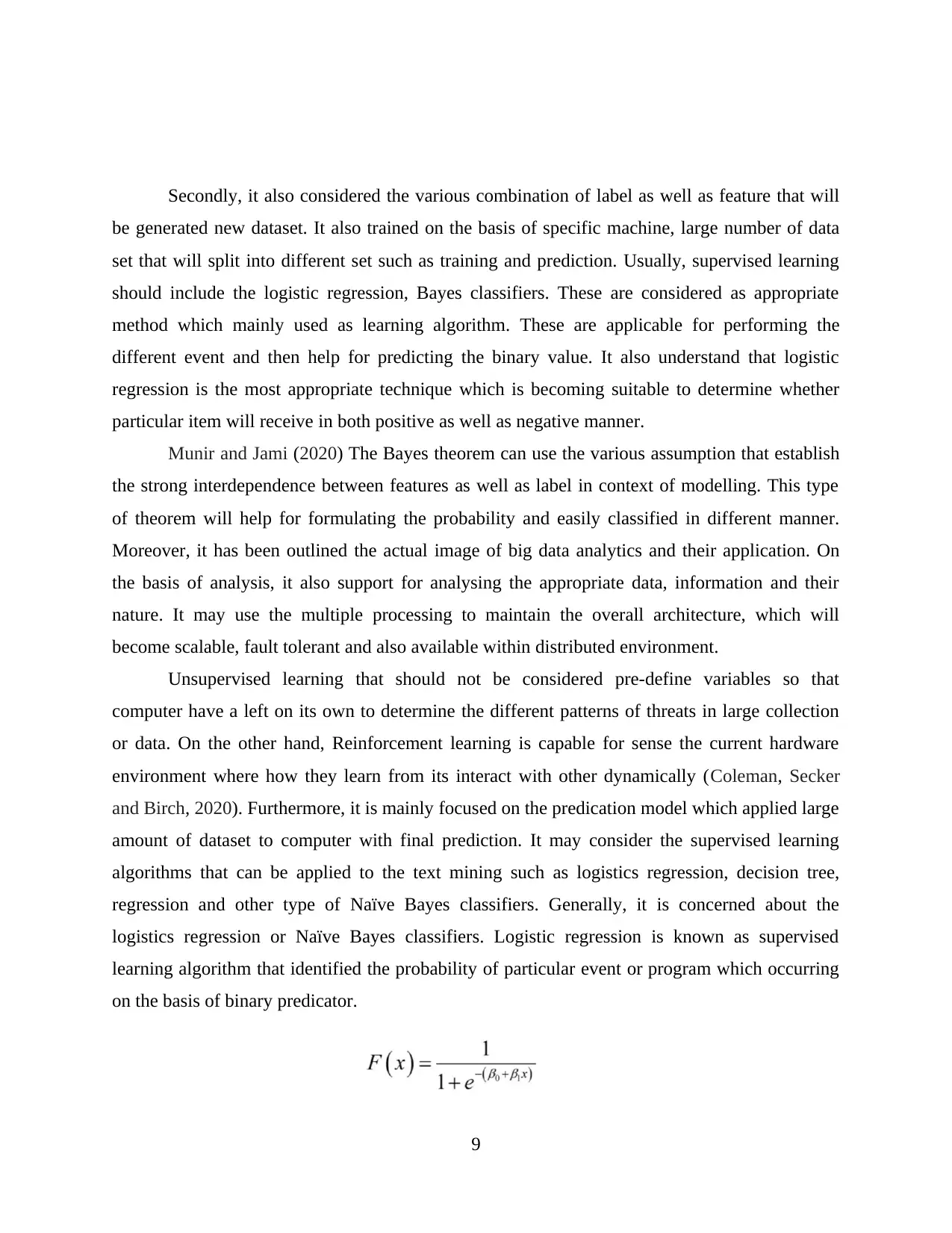

Secondly, the combinations of labels and features form a new dataset. To train the machine, this large dataset is split into separate sets for training and prediction. Supervised learning commonly includes logistic regression and Bayes classifiers, which are appropriate learning algorithms for predicting a binary value for an event. Logistic regression is a particularly suitable technique for determining whether a particular item will be received positively or negatively.
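The split into training and prediction sets, followed by a binary logistic regression, can be sketched in plain Python as below. This is an illustrative sketch with one feature and batch gradient descent, not the project's implementation; the data, the meaning attached to the label, and all function names are assumptions.

```python
import math
import random


def sigmoid(z):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle the rows and split them into training and test lists."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * (1 - test_fraction))
    return rows[:cut], rows[cut:]


def fit_logistic(rows, learning_rate=0.1, epochs=2000):
    """Fit weight w and bias b for one feature by batch gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in rows:
            error = sigmoid(w * x + b) - y  # predicted probability minus label
            grad_w += error * x
            grad_b += error
        w -= learning_rate * grad_w / len(rows)
        b -= learning_rate * grad_b / len(rows)
    return w, b


def predict(x, w, b):
    """Return 1 when the estimated probability is at least 0.5, else 0."""
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


# Made-up data: x is a centred score, label 1 is a hypothetical positive outcome.
rows = [(-3, 0), (-2, 0), (-1, 0), (1, 1), (2, 1), (3, 1)]
train_rows, test_rows = train_test_split(rows)
w, b = fit_logistic(train_rows)
```

The held-out `test_rows` play the role of the prediction set: the model never sees them during fitting, so its answers on them give an honest check of how well it generalises.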

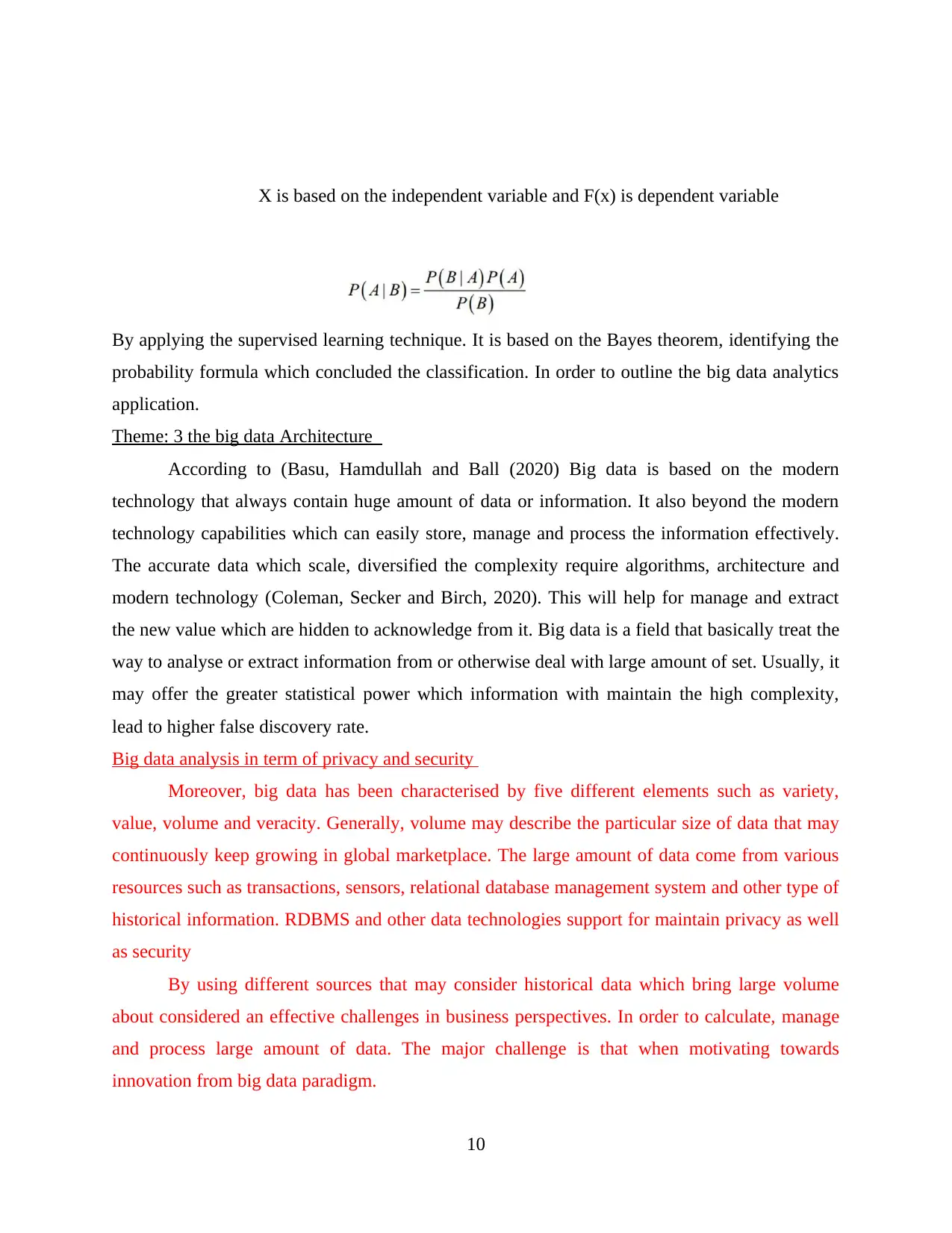

According to Munir and Jami (2020), classifiers based on Bayes' theorem assume strong independence between the features given the label. The theorem is used to formulate the probability of each class so that items can be classified. The authors also outline the overall picture of big data analytics and its applications. Such analysis supports examining the appropriate data and its nature. It may use parallel processing to maintain an overall architecture that is scalable, fault tolerant and available within a distributed environment.
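A Naïve Bayes text classifier built on this idea can be sketched as follows. The sketch assumes a multinomial model with add-one (Laplace) smoothing, which is a common choice but is not stated in the report; the training sentences and labels are invented for illustration.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(samples):
    """Collect class counts and per-class word counts from (text, label) pairs."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in samples:
        class_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocabulary.add(word)
    return class_counts, word_counts, vocabulary


def classify(text, model):
    """Return the label with the highest posterior log-probability."""
    class_counts, word_counts, vocabulary = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        score = math.log(count / total)  # log prior of the class
        denominator = sum(word_counts[label].values()) + len(vocabulary)
        for word in text.lower().split():
            # Add-one smoothing avoids a zero probability for unseen words.
            score += math.log((word_counts[label][word] + 1) / denominator)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Made-up training sentences with hypothetical sentiment labels.
samples = [
    ("great friendly helpful", "positive"),
    ("great course", "positive"),
    ("slow unhelpful reply", "negative"),
    ("poor slow process", "negative"),
]
model = train_naive_bayes(samples)
```

The per-word probabilities are simply multiplied (added in log space), which is exactly where the independence assumption enters.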

Unsupervised learning does not use predefined variables, so the computer is left on its own to determine the patterns in a large collection of data. Reinforcement learning, on the other hand, is able to sense its current environment and learns dynamically from its interaction with it (Coleman, Secker and Birch, 2020). This project focuses mainly on a prediction model in which a large dataset is supplied to the computer to produce a final prediction. Supervised learning algorithms that can be applied to text mining include logistic regression, decision trees, regression and Naïve Bayes classifiers. This project is concerned with logistic regression and Naïve Bayes classifiers. Logistic regression is a supervised learning algorithm that estimates the probability of a particular event occurring, where the outcome is binary.



Here, x is the independent variable and F(x) is the dependent variable.
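For reference, the symbols x and F(x) fit the usual single-predictor logistic model. A textbook form is sketched below; the coefficients \beta_0 and \beta_1 are assumed names that do not appear in the text.

$$F(x) = \frac{1}{1 + e^{-(\beta_0 + \beta_1 x)}}$$

F(x) always lies between 0 and 1, so it can be read as the probability of the positive outcome for a given value of x.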

The Naïve Bayes classifier is likewise a supervised learning technique. It is based on Bayes' theorem, which supplies the probability formula used to decide the classification. These techniques underpin the big data analytics application outlined here.
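The classification step based on Bayes' theorem can be sketched as follows. This is a minimal illustration rather than the project's implementation: the features (fee status, funding), the labels and the six training rows are hypothetical, and Laplace smoothing is an added assumption so that unseen values do not produce zero probabilities.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    """Count label frequencies and per-label feature-value frequencies."""
    label_counts = Counter(labels)
    # feature_counts[label][feature_index][value] -> number of occurrences
    feature_counts = defaultdict(lambda: defaultdict(Counter))
    values_seen = defaultdict(set)
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            feature_counts[label][i][value] += 1
            values_seen[i].add(value)
    return label_counts, feature_counts, values_seen


def predict_proba(model, row):
    """Return P(label | row) for every label using Bayes' theorem."""
    label_counts, feature_counts, values_seen = model
    total = sum(label_counts.values())
    log_scores = {}
    for label, count in label_counts.items():
        score = math.log(count / total)  # prior P(label)
        for i, value in enumerate(row):
            # Likelihood P(value | label) with Laplace (add-one) smoothing
            numerator = feature_counts[label][i][value] + 1
            denominator = count + len(values_seen[i])
            score += math.log(numerator / denominator)
        log_scores[label] = score
    # Normalise so that the posteriors sum to 1
    highest = max(log_scores.values())
    weights = {lab: math.exp(s - highest) for lab, s in log_scores.items()}
    norm = sum(weights.values())
    return {lab: w / norm for lab, w in weights.items()}


# Hypothetical applicants: (fee status, funding) -> outcome
rows = [
    ("home", "funded"), ("home", "funded"), ("home", "self"),
    ("overseas", "self"), ("overseas", "self"), ("overseas", "funded"),
]
labels = ["enrolled", "enrolled", "enrolled", "declined", "declined", "declined"]

model = train_naive_bayes(rows, labels)
probs = predict_proba(model, ("home", "funded"))
print(probs)
```

The "naïve" part is the assumption that features are independent given the label, which is why the per-feature likelihoods are simply multiplied (added in log space).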

Theme: 3 the big data Architecture

According to Basu, Hamdullah and Ball (2020), big data refers to data sets so large that they go beyond the capability of conventional technology to store, manage and process them effectively. Because of its scale, diversity and complexity, such data requires new algorithms, architectures and technologies (Coleman, Secker and Birch, 2020) to manage it and to extract the value and hidden knowledge it contains. Big data is therefore a field concerned with ways to analyse, extract information from, or otherwise deal with very large data sets. Larger data sets generally offer greater statistical power, whereas data of higher complexity may lead to a higher false discovery rate.

Big data analysis in terms of privacy and security

Moreover, big data is characterised by five elements: volume, variety, velocity, veracity and value. Volume describes the size of the data, which keeps growing continuously in the global marketplace. The data comes from various sources such as transactions, sensors, relational database management systems (RDBMS) and other kinds of historical records. RDBMS and other data technologies help to maintain privacy as well as security.

Drawing historical data from so many different sources produces very large volumes, which is a real challenge from a business perspective because all of that data has to be calculated, managed and processed. This is the major challenge when moving towards innovation within the big data paradigm.


Apart from that, it is necessary to describe the structure of big data and organise it into a structured format so that the information becomes easier to access. Relational database management systems such as SQL Server, Oracle Database and DB2 are used to collect and maintain information while reducing redundancy and ensuring the consistency of data. In most cases, however, large amounts of data exist in unstructured form. It is therefore essential to handle them with a NoSQL database system, which helps to manipulate and process different types of data. Another challenge arises when a single data model is expected to handle a large amount of information effectively.
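The contrast between a fixed relational schema and schema-less documents can be illustrated with a small sketch. It uses Python's built-in sqlite3 module as a stand-in for an RDBMS and plain JSON strings as a stand-in for a NoSQL document store; the table, field names and records are hypothetical.

```python
import json
import sqlite3

# Relational side: every row must fit the declared schema.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE applicant ("
    "id INTEGER PRIMARY KEY, name TEXT NOT NULL, course TEXT NOT NULL)"
)
conn.execute(
    "INSERT INTO applicant (id, name, course) VALUES (?, ?, ?)",
    (1, "A. Example", "MSc Data Science"),
)

try:
    # This row has no course, so the NOT NULL constraint rejects it.
    conn.execute("INSERT INTO applicant (id, name) VALUES (?, ?)", (2, "B. Example"))
    schema_enforced = False
except sqlite3.IntegrityError:
    schema_enforced = True

# Document side: each record carries whatever fields it happens to have.
documents = [
    {"id": 1, "name": "A. Example", "course": "MSc Data Science"},
    {"id": 2, "name": "B. Example", "enquiry_channel": "web chat",
     "notes": ["asked about PG loan"]},
]
stored = [json.dumps(doc) for doc in documents]

# Readers must cope with missing fields themselves.
courses = [json.loads(item).get("course") for item in stored]
print(schema_enforced, courses)
```

The relational table guarantees consistency at write time, while the document form accepts irregular records and pushes the handling of missing fields to the reader.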

Microsoft Azure

Microsoft Azure provides robust services for analysing and evaluating large amounts of information, and it is considered one of the more effective ways to collect and store large amounts of data. Typically, data is held in Azure Data Lake Storage and then processed with Spark on Azure Databricks. Moreover, Azure Stream Analytics is Microsoft's service for real-time data processing (Coleman, Secker and Birch, 2020). It uses a stream-based analytics query language similar to T-SQL, which shortens the learning time for users who already know SQL.
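To give a feel for what a stream-based query computes, the sketch below counts events in fixed, non-overlapping time windows. It is plain Python and does not use any Azure API; the window length, the event tuples and the function name are assumptions made for illustration.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per fixed, non-overlapping time window.

    events is an iterable of (timestamp_in_seconds, payload) pairs.
    The result maps each window's start time to its event count.
    """
    counts = Counter()
    for timestamp, _payload in events:
        window_start = (timestamp // window_seconds) * window_seconds
        counts[window_start] += 1
    return dict(sorted(counts.items()))


# Hypothetical clickstream from an application portal
events = [(0, "view"), (12, "apply"), (59, "view"), (60, "view"), (125, "apply")]
result = tumbling_window_counts(events, 60)
print(result)  # {0: 3, 60: 1, 120: 1}
```

A real streaming service applies the same idea continuously as events arrive, rather than over a finished list.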

A big data solution must often process large data sets with long-running batch jobs that filter, aggregate and otherwise prepare the data for analysis. Transforming large amounts of data with Microsoft Azure helps to convert them into actionable insights and allows large data sets to be combined at scale.
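The filter, aggregate and prepare steps of a batch job can be sketched in a few lines. The record fields ("status", "course") and the sample batch are hypothetical, and a production job would run on a distributed engine rather than in a single Python process.

```python
from collections import defaultdict


def batch_prepare(records):
    """Filter out incomplete applications, then count the rest per course."""
    totals = defaultdict(int)
    for record in records:
        # Filter step: keep only completed applications
        if record.get("status") != "complete":
            continue
        # Aggregate step: count applications per course
        totals[record["course"]] += 1
    # Prepare step: return a plain, sorted mapping ready for analysis
    return dict(sorted(totals.items()))


batch = [
    {"course": "MSc Data Science", "status": "complete"},
    {"course": "MSc Data Science", "status": "draft"},
    {"course": "MBA", "status": "complete"},
    {"course": "MSc Data Science", "status": "complete"},
]
summary = batch_prepare(batch)
print(summary)  # {'MBA': 1, 'MSc Data Science': 2}
```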

Azure analytics services enable an organization to use the full breadth of its data assets, helping it to build transformative and secure solutions at business scale (Coleman, Secker and Birch, 2020). These services are fully managed and include Azure Data Lake Storage and other Azure analytics offerings. They are useful for deploying solutions and for transforming data into visualization.

Amazon Web Services

Amazon Web Services (AWS) is an evolving cloud computing platform provided by Amazon. It combines infrastructure as a service (IaaS) and platform as a service (PaaS) offerings. These give an organization a good quality of service for, among other things, storing large amounts of data in database management systems.


AWS offers a variety of tools and solutions for organizations and developers that can be used in data centers. The services fall into different categories, and each can be configured in different ways according to the user's requirements. Users should be able to see the specific configuration options and the individual server maps for an AWS service.

Amazon Web Services are delivered from a large number of data centers spread across availability zones. An availability zone may contain several physical data centers, and an enterprise selects particular zones for particular reasons, such as compliance or proximity to its users (Choudhary et al., 2020). Amazon Simple Storage Service (S3) provides scalable object storage for data backup. Within enterprises, IT professionals store data and files as S3 objects; a single upload request can carry up to 5 gigabytes. An organization can also save money with S3 through its Infrequent Access storage tier.
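Storing a file as an S3 object in the Infrequent Access tier might look like the sketch below. The function name, bucket and key are hypothetical. The client is passed in as a parameter so the function can be exercised without AWS credentials; in practice it would be a client created with the third-party boto3 package, whose `put_object` call accepts the `StorageClass` argument used here.

```python
def store_infrequent_access(s3_client, bucket, key, body):
    """Upload bytes as one S3 object in the infrequent-access storage class.

    s3_client is expected to behave like boto3.client("s3").
    """
    return s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=body,
        StorageClass="STANDARD_IA",  # lower storage price, per-retrieval charge
    )


# Typical wiring (requires boto3 and configured AWS credentials):
#   import boto3
#   store_infrequent_access(
#       boto3.client("s3"), "example-bucket", "backups/applicants.csv", b"...")
```

The Infrequent Access class suits backups and archives that are rarely read, since retrieval is charged separately.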

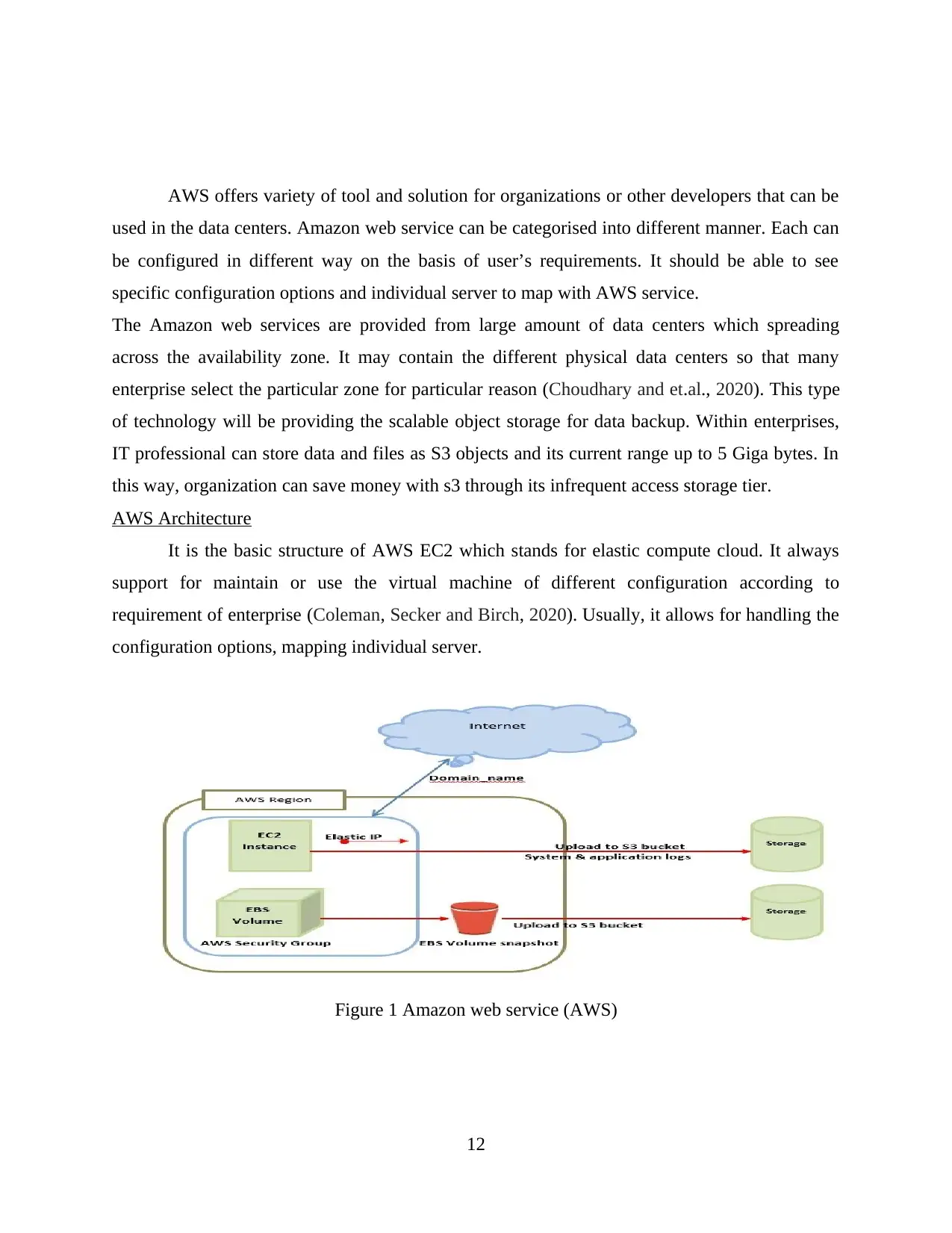

AWS Architecture

This is the basic structure of AWS built around Amazon EC2, which stands for Elastic Compute Cloud. EC2 lets an enterprise use virtual machines of different configurations according to its requirements (Coleman, Secker and Birch, 2020). It also allows the configuration options and the mapping of individual servers to be managed.

Figure 1 Amazon web service (AWS)
