BUS5PA Predictive Analytics Assignment 1: Model Evaluation

VerifiedAdded on 2020/03/01

|25

|2918

|37

Homework Assignment

AI Summary

This assignment, completed for the BUS5PA Predictive Analytics course at La Trobe Business School, focuses on building and evaluating predictive models for consumer loyalty analysis. The student utilizes SAS Miner to explore the ORGANICS dataset, employing decision tree and regression-based modeling techniques. The project involves setting up the project, exploratory data analysis, and data partitioning to create training and validation datasets. Decision tree models are constructed, analyzed, and compared based on metrics like average squared error and misclassification rate. Regression models are also developed, using stepwise selection and imputation of missing values. The assignment includes a detailed comparison of the decision tree and regression models, highlighting the strengths and weaknesses of each approach. Ultimately, the student concludes that a decision tree model is the most effective for this business case, offering ease of use, robustness, and interpretability. The report also includes an open-ended discussion about common pitfalls in predictive modeling, such as choosing the right target variable and avoiding overfitting, and extends the current knowledge with additional readings.

Building and Evaluating Predictive Models

Assignment 1

(BUS5PA Predictive Analytics – Semester 2, 2017)

By

<Student Name>

(19140689)

La Trobe Business School

Australia

Assignment 1

(BUS5PA Predictive Analytics – Semester 2, 2017)

By

<Student Name>

(19140689)

La Trobe Business School

Australia

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Table of Contents

1. Setting up the project and exploratory analysis 4

2. Decision tree based modeling and analysis 5

3. Regression based modeling and analysis 6

4. Open ended discussion 8

5. Extending current knowledge with additional reading 10

References 12

Annexure I-XII

1

1. Setting up the project and exploratory analysis 4

2. Decision tree based modeling and analysis 5

3. Regression based modeling and analysis 6

4. Open ended discussion 8

5. Extending current knowledge with additional reading 10

References 12

Annexure I-XII

1

List of Figures

Fig. 1 Project: BUS5PA_Assignment1_ 19140689.........................................................................I

Fig. 2 New Library...........................................................................................................................I

Fig. 3 New data source: Organics....................................................................................................I

Fig. 4 Column Metadata..................................................................................................................II

Fig. 5 Organics purchase indicator (%age).....................................................................................II

Fig. 6 Organics diagram workspace: Organics data source...........................................................III

Fig. 7 Data partition.......................................................................................................................III

Fig. 8 Data set Allocations.............................................................................................................III

Fig. 9 Addition of Decision Tree...................................................................................................IV

Fig. 10 Autonomously created decision tree model......................................................................IV

Fig. 11 Assessment measure..........................................................................................................IV

Fig. 12 Subtree Assessment Plot...................................................................................................IV

Fig. 13 Decision Tree (Tree 1)........................................................................................................V

Fig. 14 Decision Tree after adding Tree 2......................................................................................V

Fig. 15 Three-way Split.................................................................................................................VI

Fig. 16 Assessment Measure : Decision Tree 2............................................................................VI

2

Fig. 1 Project: BUS5PA_Assignment1_ 19140689.........................................................................I

Fig. 2 New Library...........................................................................................................................I

Fig. 3 New data source: Organics....................................................................................................I

Fig. 4 Column Metadata..................................................................................................................II

Fig. 5 Organics purchase indicator (%age).....................................................................................II

Fig. 6 Organics diagram workspace: Organics data source...........................................................III

Fig. 7 Data partition.......................................................................................................................III

Fig. 8 Data set Allocations.............................................................................................................III

Fig. 9 Addition of Decision Tree...................................................................................................IV

Fig. 10 Autonomously created decision tree model......................................................................IV

Fig. 11 Assessment measure..........................................................................................................IV

Fig. 12 Subtree Assessment Plot...................................................................................................IV

Fig. 13 Decision Tree (Tree 1)........................................................................................................V

Fig. 14 Decision Tree after adding Tree 2......................................................................................V

Fig. 15 Three-way Split.................................................................................................................VI

Fig. 16 Assessment Measure : Decision Tree 2............................................................................VI

2

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

Fig. 17 Average square error : Tree 2............................................................................................VI

Fig. 18 StatExplore tool...............................................................................................................VII

Fig. 19 Default input method updation........................................................................................VII

Fig. 20 Indicator variables............................................................................................................VII

Fig. 21 Addition of Regression model........................................................................................VIII

Fig. 22 Regression Model Selection...........................................................................................VIII

Fig. 23 Result of Regression model..............................................................................................IX

Fig. 24 Summary of Stepwise Selection........................................................................................IX

Fig. 25 Odd ratio Estimates............................................................................................................X

Fig. 26 Average squared error (ASE).............................................................................................X

Fig. 27 Model Comparison............................................................................................................XI

Fig. 28 Model Comparison Result.................................................................................................XI

Fig. 29 ROC Chart.......................................................................................................................XII

Fig. 30 Cumulative Lift................................................................................................................XII

Fig. 31 Fit Statistics....................................................................................................................XIII

List of Tables

Table 1 Model performance comparison 8

3

Fig. 18 StatExplore tool...............................................................................................................VII

Fig. 19 Default input method updation........................................................................................VII

Fig. 20 Indicator variables............................................................................................................VII

Fig. 21 Addition of Regression model........................................................................................VIII

Fig. 22 Regression Model Selection...........................................................................................VIII

Fig. 23 Result of Regression model..............................................................................................IX

Fig. 24 Summary of Stepwise Selection........................................................................................IX

Fig. 25 Odd ratio Estimates............................................................................................................X

Fig. 26 Average squared error (ASE).............................................................................................X

Fig. 27 Model Comparison............................................................................................................XI

Fig. 28 Model Comparison Result.................................................................................................XI

Fig. 29 ROC Chart.......................................................................................................................XII

Fig. 30 Cumulative Lift................................................................................................................XII

Fig. 31 Fit Statistics....................................................................................................................XIII

List of Tables

Table 1 Model performance comparison 8

3

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

1. Setting up the project and exploratory analysis

a] A new project BUS5PA_Assignment1_19140689 has been created (Fig. 1).

a.1] New SAS Library, has been created named As40689, and using SAS dataset ORGANICS

data source has been created (Fig. 2 and Fig. 3).

a.2] All the roles have been defined for the analysis variables for ORGANICS data source (Fig.

4).

a.3] “TargetBuy” has been defined as target variable. As mentioned in Fig. 5 (Percentage

distribution of “TargetBuy”), 24.77% individuals have purchased organic products and rest

i.e.75.23% have not purchased organic products.

a.4] Demcluster has been rejected (Fig. 4).

a.5] ORGANICS Data source has been created (Fig. 3).

a.6] ORGANICS data source has been added to Organics diagram workspace (Fig. 6).

b] Whether Individuals have purchased the organic item or not, is indicated by TargetBuy, and

TargetAmt indicates the number of organic amounts bought. TargetAmt will only be noted

when Targetbuy is Yes i.e. for those who have purchased any organic products. Hence, in

this model, to predict TargetBuy, TargetAmt cannot be used as the input. The objective of

supermarket’s is to develop a loyalty model by understanding whether customers have

purchased any of the organic products. So, TargetBuy is the suitable as target variable.

4

a] A new project BUS5PA_Assignment1_19140689 has been created (Fig. 1).

a.1] New SAS Library, has been created named As40689, and using SAS dataset ORGANICS

data source has been created (Fig. 2 and Fig. 3).

a.2] All the roles have been defined for the analysis variables for ORGANICS data source (Fig.

4).

a.3] “TargetBuy” has been defined as target variable. As mentioned in Fig. 5 (Percentage

distribution of “TargetBuy”), 24.77% individuals have purchased organic products and rest

i.e.75.23% have not purchased organic products.

a.4] Demcluster has been rejected (Fig. 4).

a.5] ORGANICS Data source has been created (Fig. 3).

a.6] ORGANICS data source has been added to Organics diagram workspace (Fig. 6).

b] Whether Individuals have purchased the organic item or not, is indicated by TargetBuy, and

TargetAmt indicates the number of organic amounts bought. TargetAmt will only be noted

when Targetbuy is Yes i.e. for those who have purchased any organic products. Hence, in

this model, to predict TargetBuy, TargetAmt cannot be used as the input. The objective of

supermarket’s is to develop a loyalty model by understanding whether customers have

purchased any of the organic products. So, TargetBuy is the suitable as target variable.

4

2. Decision tree based modeling and analysis

a] A node name Data partition has been inserted to the diagram and it has been linked to the

data source node (ORGANICS). 50% of the data for training and rest 50% for validation,

have been assigned in data partition (Fig. 7 and Fig. 8.)

b] In Fig. 9, it has been shown that the Decision Tree node has been inserted to the workspace

and it has been linked to the Data partition node (Fig. 9).

c] Decision Tree model has been created autonomously, and sub tree model assessment criteria

has been chosen by using average square error (Fig. 10 and 11).

c.1] There are 29 leaves in the optimal tree (Fig. 12).

c.2] For the first split, variable age has been used. It has divided the training data in two

subsets, first one is for the age less than 44.5. In this case, TargetBuy = 1 has higher than

average concentration. Second one is for age greater than or equals to 44.5, In this case,

TargetBuy = 0 has higher than average concentration. Using average square error, Decision

Tree model has been created autonomously (Fig. 13).

d] First Decision Tree has been renamed as Tree 1 and Second Decision Tree node which has

been renamed as Tree, has been added to the diagram workspace, and it has been connected

with the Data Partition (Fig. 14).

d.1] In the splitting rule of the new Decision Tree, maximum number of branches have been set

to 3 to permit three-way splits (Fig. 15).

5

a] A node name Data partition has been inserted to the diagram and it has been linked to the

data source node (ORGANICS). 50% of the data for training and rest 50% for validation,

have been assigned in data partition (Fig. 7 and Fig. 8.)

b] In Fig. 9, it has been shown that the Decision Tree node has been inserted to the workspace

and it has been linked to the Data partition node (Fig. 9).

c] Decision Tree model has been created autonomously, and sub tree model assessment criteria

has been chosen by using average square error (Fig. 10 and 11).

c.1] There are 29 leaves in the optimal tree (Fig. 12).

c.2] For the first split, variable age has been used. It has divided the training data in two

subsets, first one is for the age less than 44.5. In this case, TargetBuy = 1 has higher than

average concentration. Second one is for age greater than or equals to 44.5, In this case,

TargetBuy = 0 has higher than average concentration. Using average square error, Decision

Tree model has been created autonomously (Fig. 13).

d] First Decision Tree has been renamed as Tree 1 and Second Decision Tree node which has

been renamed as Tree, has been added to the diagram workspace, and it has been connected

with the Data Partition (Fig. 14).

d.1] In the splitting rule of the new Decision Tree, maximum number of branches have been set

to 3 to permit three-way splits (Fig. 15).

5

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

d.2] Decision tree model has been created using average square error (Fig. 16.)

d.3] After running Tree 2, There are 33 leaves in the optimal tree. Subtree assessment plot has

been depicted in Fig. 17. In the last question C, there were 29 leaves in the optimal tree for

Tree 1. With the decision tree, Tree 2, misclassification rate (Train:0.1848) of the model is

marginally lower than the model with the decision tree, Tree 1 (Train: 0.1851) and average

square error of the model with the decision tree, Tree 1 (Train: 0.1329) is lower than the

model with the decision tree, Tree 2 (Train: 0.1330). It shows that in terms of average square

error, tree with 29 leaves performs marginally better and in terms of misclassification rate,

tree with 33 leaves performs marginally better. However complexly rises with the higher

number of leaves, and tree with lower number of leaves is less complex and more reliable.

e] Using average square error, the decision tree model which has the smallest average square

error among the actual class and predicted class performs better. In this business case, Tree 1

performs better than model with Tree 2, as Average square error (Tree 1) < Average square

error (Tree 2) i.e. 0.1329 < 0.1330. lower average squared error indicates the model performs

better as a predictor because it is “wrong” less often.

3. Regression based modeling and analysis

a] From the explore, StatExplore tool has been inserted in the diagram and linked with the

ORGANICS data source, and it has been run (Fig. 18).

b] It is desirable to impute missing values prior to fitting a model that overlooks data with

missing values while comparing those models with a decision tree. Missing value imputation

6

d.3] After running Tree 2, There are 33 leaves in the optimal tree. Subtree assessment plot has

been depicted in Fig. 17. In the last question C, there were 29 leaves in the optimal tree for

Tree 1. With the decision tree, Tree 2, misclassification rate (Train:0.1848) of the model is

marginally lower than the model with the decision tree, Tree 1 (Train: 0.1851) and average

square error of the model with the decision tree, Tree 1 (Train: 0.1329) is lower than the

model with the decision tree, Tree 2 (Train: 0.1330). It shows that in terms of average square

error, tree with 29 leaves performs marginally better and in terms of misclassification rate,

tree with 33 leaves performs marginally better. However complexly rises with the higher

number of leaves, and tree with lower number of leaves is less complex and more reliable.

e] Using average square error, the decision tree model which has the smallest average square

error among the actual class and predicted class performs better. In this business case, Tree 1

performs better than model with Tree 2, as Average square error (Tree 1) < Average square

error (Tree 2) i.e. 0.1329 < 0.1330. lower average squared error indicates the model performs

better as a predictor because it is “wrong” less often.

3. Regression based modeling and analysis

a] From the explore, StatExplore tool has been inserted in the diagram and linked with the

ORGANICS data source, and it has been run (Fig. 18).

b] It is desirable to impute missing values prior to fitting a model that overlooks data with

missing values while comparing those models with a decision tree. Missing value imputation

6

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

is required for regression, as in SAS Miner regressions models ignore observations that

contain missing values, it usually reduces the size of the training data. Less training data can

strikingly fade the predictive power of these models. In this case, we should use the

imputation to overcome the obstruction of missing data, impute missing values before fit the

models are required.

c] For class variables, Default Input Method has been set to Default Constant Value and Default

Character Value has been set to U, and for interval variables Default Input Method has been

set as Mean (Fig. 19). Indicator Variable Type has been set as Unique, and Indicator Variable

Role has been set to Input, to create imputation indicators for all imputed inputs (Fig. 20).

d] Regression node has been inserted to the diagram and connected to the Impute node (Fig.

21).

e] Stepwise Selection Model, and Validation Error Selection Criteria has been chosen (Fig. 22).

f] Fig. 23 shows the result of the regression model. Trained model of step 6 has been selected,d

model, based on the error rate for the validation data, This model consists of the following

variables: IMP_DemAffl, IMP_DemAge, IMP_DemGender, M_DemAffl, M_DemAge and

M_DemGender (Fig. 24). Hence, for the supermarket management Affluence grade, age and

gender would be main variables to realize loyalty and to formulate a predictive model. The

result of odds ratio estimates shows that important parameter for this model will be imputed

value of gender (Female), gender (Male), Affluence grade and age.

The average squared error (ASE) of prediction is used to estimate the error in prediction of a

model fitted using the training data shown below in Equation 1

7

contain missing values, it usually reduces the size of the training data. Less training data can

strikingly fade the predictive power of these models. In this case, we should use the

imputation to overcome the obstruction of missing data, impute missing values before fit the

models are required.

c] For class variables, Default Input Method has been set to Default Constant Value and Default

Character Value has been set to U, and for interval variables Default Input Method has been

set as Mean (Fig. 19). Indicator Variable Type has been set as Unique, and Indicator Variable

Role has been set to Input, to create imputation indicators for all imputed inputs (Fig. 20).

d] Regression node has been inserted to the diagram and connected to the Impute node (Fig.

21).

e] Stepwise Selection Model, and Validation Error Selection Criteria has been chosen (Fig. 22).

f] Fig. 23 shows the result of the regression model. Trained model of step 6 has been selected,d

model, based on the error rate for the validation data, This model consists of the following

variables: IMP_DemAffl, IMP_DemAge, IMP_DemGender, M_DemAffl, M_DemAge and

M_DemGender (Fig. 24). Hence, for the supermarket management Affluence grade, age and

gender would be main variables to realize loyalty and to formulate a predictive model. The

result of odds ratio estimates shows that important parameter for this model will be imputed

value of gender (Female), gender (Male), Affluence grade and age.

The average squared error (ASE) of prediction is used to estimate the error in prediction of a

model fitted using the training data shown below in Equation 1

7

ASE= 1

n ∑ ( zi − ^zi )

2…………………………………………Equation 1

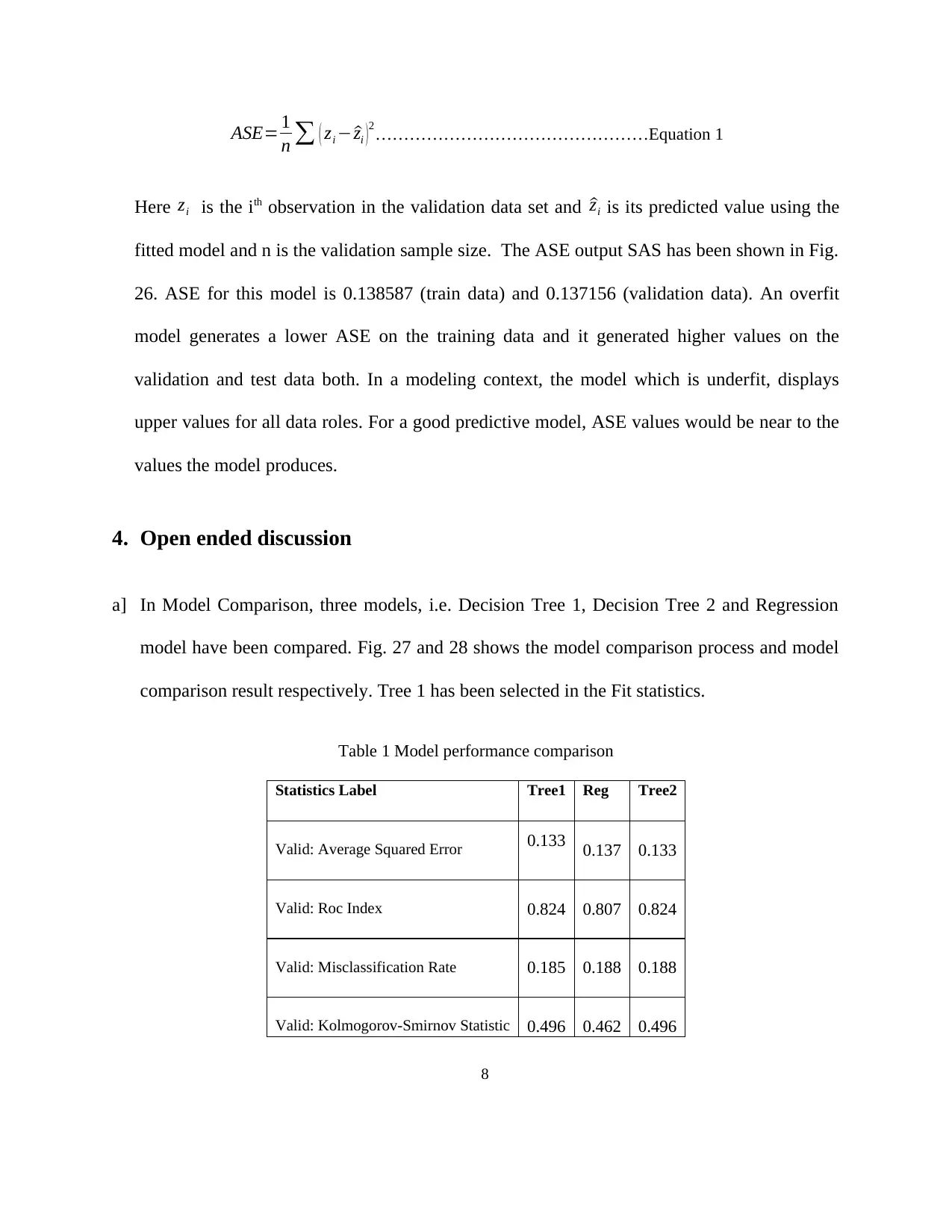

Here zi is the ith observation in the validation data set and ^zi is its predicted value using the

fitted model and n is the validation sample size. The ASE output SAS has been shown in Fig.

26. ASE for this model is 0.138587 (train data) and 0.137156 (validation data). An overfit

model generates a lower ASE on the training data and it generated higher values on the

validation and test data both. In a modeling context, the model which is underfit, displays

upper values for all data roles. For a good predictive model, ASE values would be near to the

values the model produces.

4. Open ended discussion

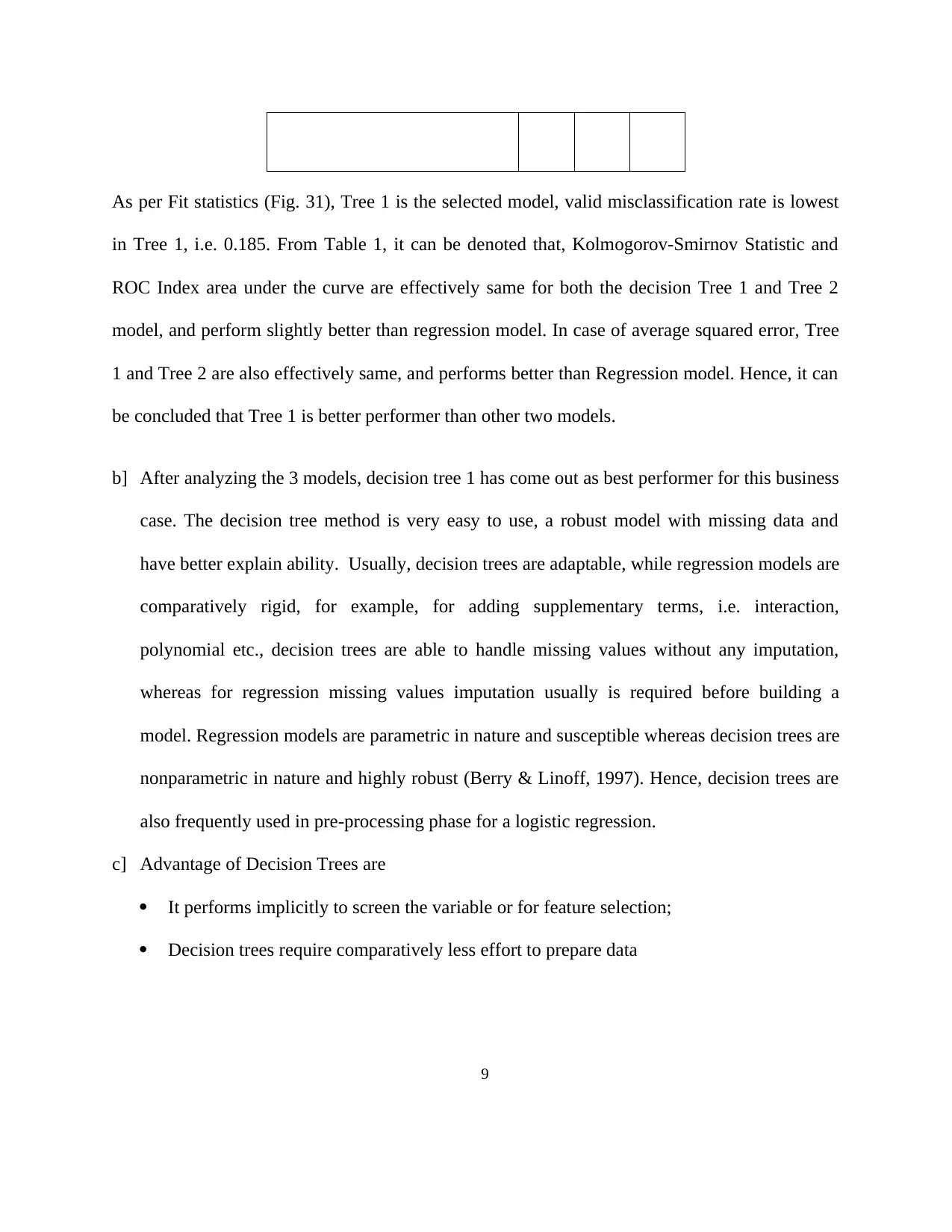

a] In Model Comparison, three models, i.e. Decision Tree 1, Decision Tree 2 and Regression

model have been compared. Fig. 27 and 28 shows the model comparison process and model

comparison result respectively. Tree 1 has been selected in the Fit statistics.

Table 1 Model performance comparison

Statistics Label Tree1 Reg Tree2

Valid: Average Squared Error 0.133 0.137 0.133

Valid: Roc Index 0.824 0.807 0.824

Valid: Misclassification Rate 0.185 0.188 0.188

Valid: Kolmogorov-Smirnov Statistic 0.496 0.462 0.496

8

n ∑ ( zi − ^zi )

2…………………………………………Equation 1

Here zi is the ith observation in the validation data set and ^zi is its predicted value using the

fitted model and n is the validation sample size. The ASE output SAS has been shown in Fig.

26. ASE for this model is 0.138587 (train data) and 0.137156 (validation data). An overfit

model generates a lower ASE on the training data and it generated higher values on the

validation and test data both. In a modeling context, the model which is underfit, displays

upper values for all data roles. For a good predictive model, ASE values would be near to the

values the model produces.

4. Open ended discussion

a] In Model Comparison, three models, i.e. Decision Tree 1, Decision Tree 2 and Regression

model have been compared. Fig. 27 and 28 shows the model comparison process and model

comparison result respectively. Tree 1 has been selected in the Fit statistics.

Table 1 Model performance comparison

Statistics Label Tree1 Reg Tree2

Valid: Average Squared Error 0.133 0.137 0.133

Valid: Roc Index 0.824 0.807 0.824

Valid: Misclassification Rate 0.185 0.188 0.188

Valid: Kolmogorov-Smirnov Statistic 0.496 0.462 0.496

8

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

As per Fit statistics (Fig. 31), Tree 1 is the selected model, valid misclassification rate is lowest

in Tree 1, i.e. 0.185. From Table 1, it can be denoted that, Kolmogorov-Smirnov Statistic and

ROC Index area under the curve are effectively same for both the decision Tree 1 and Tree 2

model, and perform slightly better than regression model. In case of average squared error, Tree

1 and Tree 2 are also effectively same, and performs better than Regression model. Hence, it can

be concluded that Tree 1 is better performer than other two models.

b] After analyzing the 3 models, decision tree 1 has come out as best performer for this business

case. The decision tree method is very easy to use, a robust model with missing data and

have better explain ability. Usually, decision trees are adaptable, while regression models are

comparatively rigid, for example, for adding supplementary terms, i.e. interaction,

polynomial etc., decision trees are able to handle missing values without any imputation,

whereas for regression missing values imputation usually is required before building a

model. Regression models are parametric in nature and susceptible whereas decision trees are

nonparametric in nature and highly robust (Berry & Linoff, 1997). Hence, decision trees are

also frequently used in pre-processing phase for a logistic regression.

c] Advantage of Decision Trees are

It performs implicitly to screen the variable or for feature selection;

Decision trees require comparatively less effort to prepare data

9

in Tree 1, i.e. 0.185. From Table 1, it can be denoted that, Kolmogorov-Smirnov Statistic and

ROC Index area under the curve are effectively same for both the decision Tree 1 and Tree 2

model, and perform slightly better than regression model. In case of average squared error, Tree

1 and Tree 2 are also effectively same, and performs better than Regression model. Hence, it can

be concluded that Tree 1 is better performer than other two models.

b] After analyzing the 3 models, decision tree 1 has come out as best performer for this business

case. The decision tree method is very easy to use, a robust model with missing data and

have better explain ability. Usually, decision trees are adaptable, while regression models are

comparatively rigid, for example, for adding supplementary terms, i.e. interaction,

polynomial etc., decision trees are able to handle missing values without any imputation,

whereas for regression missing values imputation usually is required before building a

model. Regression models are parametric in nature and susceptible whereas decision trees are

nonparametric in nature and highly robust (Berry & Linoff, 1997). Hence, decision trees are

also frequently used in pre-processing phase for a logistic regression.

c] Advantage of Decision Trees are

It performs implicitly to screen the variable or for feature selection;

Decision trees require comparatively less effort to prepare data

9

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Decision Tree’s performance is not affected by the nonlinear relationships between

parameters

Decision Trees are easy to understand and describe.

Regression-based forecasting technique’s main advantages is that it uses analysis and research

and for prediction of future occurrence. Regression models also permit to study the comparative

impacts of these independent, or predictor, variables on the dependent, or target, variable (Hayes

& Preacher, 2014). It has ability to measure the relative influence of one or more predictor

variables to the criterion value. And it has the ability to identify outliers, or anomalies. High

dimension increases the risk of overfitting due to correlations between redundant variables,

increases computation time, increases the cost and practicality of data collection for the model,

and makes interpretation of the model difficult. For the organic data, misclassification rate is

lowest in decision tree, and The ROC chart shows that the both decision tree and regression

model have good predictive accuracy. In this case, Decision Trees to consumer loyalty analysis

will be better for predictive modeling to understand the consumer segments.

5. Extending current knowledge with additional reading

Just getting things wrong

Problems should be recognized, without any clear objective, model will not be successful. In this

business case, at first, there were two options for target variables, TargetBuy, and TargetAmt.

TargetAmt was just a product of TargetBuy, and TargetAmt was not a binary variable. Hence

10

parameters

Decision Trees are easy to understand and describe.

Regression-based forecasting technique’s main advantages is that it uses analysis and research

and for prediction of future occurrence. Regression models also permit to study the comparative

impacts of these independent, or predictor, variables on the dependent, or target, variable (Hayes

& Preacher, 2014). It has ability to measure the relative influence of one or more predictor

variables to the criterion value. And it has the ability to identify outliers, or anomalies. High

dimension increases the risk of overfitting due to correlations between redundant variables,

increases computation time, increases the cost and practicality of data collection for the model,

and makes interpretation of the model difficult. For the organic data, misclassification rate is

lowest in decision tree, and The ROC chart shows that the both decision tree and regression

model have good predictive accuracy. In this case, Decision Trees to consumer loyalty analysis

will be better for predictive modeling to understand the consumer segments.

5. Extending current knowledge with additional reading

Just getting things wrong

Problems should be recognized, without any clear objective, model will not be successful. In this

business case, at first, there were two options for target variables, TargetBuy, and TargetAmt.

TargetAmt was just a product of TargetBuy, and TargetAmt was not a binary variable. Hence

10

selection of target variable is one of the most important thing, the model could go wrong if

TargetAmt be selected as Target Variable.

Overfitting

For the training data, with more leaves of the decision tree, model turns out to be more complex,

due to higher number of iterations of training for a neural network, it appears to be fit the

training data. But, in actual scenario, it brings noise as well as signal. In this business case, the

decision tree, Tree 2, misclassification rate (Train:0.1848) of the model is very marginally lower

than the model with the decision tree, Tree 1 (Train: 0.1851) and average square error of the

model with the decision tree, Tree 1 (Train: 0.1329) is lower than the model with the decision

tree, Tree 2 (Train: 0.1330). Hence it can be realized that tree 1 performs marginally better in

terms of average square error and tree 2 performs marginally better in terms of misclassification

rate. But as with the higher number of leaves complexity increases, a less complex and reliable

tree i.e. Tree 1 will be more suitable for the model.

Sample bias

Sample bias is caused by picking non-random data for statistical modelling For this business

case, this sample covers across 5 geographical regions and 13 television regions, and it covers all

the data of consumers (both who have purchased or not purchased the organic product both).

Hence, there will be different set of consumers and sample bias will not be present in the data.

11

TargetAmt be selected as Target Variable.

Overfitting

For the training data, with more leaves of the decision tree, model turns out to be more complex,

due to higher number of iterations of training for a neural network, it appears to be fit the

training data. But, in actual scenario, it brings noise as well as signal. In this business case, the

decision tree, Tree 2, misclassification rate (Train:0.1848) of the model is very marginally lower

than the model with the decision tree, Tree 1 (Train: 0.1851) and average square error of the

model with the decision tree, Tree 1 (Train: 0.1329) is lower than the model with the decision

tree, Tree 2 (Train: 0.1330). Hence it can be realized that tree 1 performs marginally better in

terms of average square error and tree 2 performs marginally better in terms of misclassification

rate. But as with the higher number of leaves complexity increases, a less complex and reliable

tree i.e. Tree 1 will be more suitable for the model.

Sample bias

Sample bias is caused by picking non-random data for statistical modelling For this business

case, this sample covers across 5 geographical regions and 13 television regions, and it covers all

the data of consumers (both who have purchased or not purchased the organic product both).

Hence, there will be different set of consumers and sample bias will not be present in the data.

11

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

1 out of 25

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2026 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.