ICT707 Data Science: Exploring PySpark for Data Science Applications

Added on 2022/10/17


Report

AI Summary

This report delves into the practical application of PySpark in data science, focusing on key concepts such as machine learning pipelines, collaborative filtering, logistic regression, and K-means clustering. The introduction highlights the significance of data science and the role of PySpark as a powerful tool for handling large-scale data processing. The report explores the Apache Spark system, RDDs, DataFrames, and MLlib, providing a foundation for understanding the core components. Machine learning pipelines, including Transformers, Estimators, and DataFrames, are discussed in detail, along with their practical applications. The report then examines collaborative filtering for building recommendation engine systems, using the ALS method, and presents the model building process and prediction results. Logistic regression, a supervised learning model, is explained, and its implementation with the RDD-based LogisticRegressionWithLBFGS is demonstrated. Finally, K-means clustering, an unsupervised learning model, is explored, including its application, model evaluation, and accuracy assessment. The conclusion summarizes the key insights gained from using PySpark in real-life data science problems. References to relevant sources are also provided.

DATA SCIENCE PRACTICES

USING PYSPARK

Report’s Author:


DATA SCIENCE PRACTICES USING PYSPARK

Table of Contents

1.0 Introduction

2.0 Key System Concepts

2.1 Machine learning pipelines

2.2 Collaborative filtering

2.3 Logistic regression

2.4 K-Means Clustering

3.0 Conclusion

4.0 References


1.0 Introduction

Data science now plays an important role in many application areas. These applications build on concepts and methods from fields such as statistics, programming, and mathematics, and one of the tools we explain here is PySpark. PySpark lets us use Python to work with Spark, a distributed data processing engine.

Apache Spark is an open-source cluster computing framework that is very fast and can handle large-scale data preprocessing. PySpark is the Python API for Apache Spark; Spark itself is an Apache Software Foundation project to which Databricks Inc. is a major contributor.

In this project, we explore the key concepts and functionality of PySpark and their main uses in machine learning and data science applications, covering exploratory data analysis (EDA) and model building with several algorithms.

The datasets, both from Kaggle, are listed below:

1. Diabetes data (link: https://www.kaggle.com/uciml/pima-indians-diabetes-database#diabetes.csv)

2. Movie Lens Data (link: https://www.kaggle.com/ashishpatel26/movie-recommendation-of-movie-lens-data-set/data)


2.0 Key System Concepts

We discuss the key system concepts used in our project as follows:

1. Understanding of Apache Spark System

Apache Spark is an open-source distributed cluster processing engine. It is fast, easy to use, and able to solve a wide variety of problems involving structured, semi-structured, and unstructured data. It has a large open-source community for support. It supports several programming languages, including Java, Scala, Python, R, and SQL. It offers the flexibility and extensibility of MapReduce at significantly higher processing speeds. Spark provides efficient data handling through Spark SQL, DataFrames, and Datasets, MLlib for machine learning algorithms, GraphX for graph processing, and Spark Streaming.

2. RDD

Resilient Distributed Datasets (RDDs) are immutable, distributed collections of JVM objects that allow us to perform fast calculations; they are the heart of Apache Spark.

Features of RDDs

1. In-memory computation is fast.

2. Fault tolerance is improved because lost data can be rebuilt by the system.

3. They support transformations on the data and actions that return results from it.

3. Spark DataFrames

A DataFrame is also an immutable form of data storage, like an RDD. It organizes data in rows and named columns, like a table. The concept is similar to the DataFrame in pandas.

4. MLlib

MLlib is Spark's machine learning library [PySpark]. It covers machine learning algorithms for clustering, classification, regression, and collaborative filtering, as well as featurization, pipelines, etc. Its goal is to make practical machine learning scalable and easy.


2.1 Machine Learning Pipelines

ML Pipelines provide a set of high-level APIs, built on top of DataFrames, that help users create and tune practical machine learning pipelines. The main ML Pipeline concepts we used in our project are DataFrame, Transformer, Estimator, Pipeline, and Parameter.

• DataFrame

Machine learning works with different data types, such as vectors, structured data, unstructured data, images, and text. The PySpark DataFrame API supports these different data types, including many structured types. For example, to import a CSV (comma-separated values) file into a Spark session, we can simply call the read.csv method of the SparkSession object, passing the path of the file and an option indicating whether it has a header row.

Uses in project:

1. Imported the CSV file directly into a DataFrame after creating a session in PySpark.

2. Used for exploratory data analysis.

3. Used for SQL query processing.

4. Can also be used along with RDDs.

• Transformers

A Transformer converts one DataFrame into another, generally by appending one or more newly created columns. MLlib offers many feature transformers, for example Binarizer and Bucketizer; related feature estimators such as ChiSqSelector and CountVectorizer are first fitted to the data and then produce a transformer.

For example, a feature transformer may take a DataFrame, read a column containing text data, map it to a new column of feature vectors, and output a new DataFrame with the mapped column appended.

Uses in project:

1. Used StandardScaler for scaling all the variables.

2. Used VectorAssembler to assemble all features into a single vector column.
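The idea of a Transformer appending a new column can be sketched in plain Python. This is only a hypothetical stand-in that mimics the thresholding behaviour of MLlib's Binarizer on a list of dictionaries; the class name SimpleBinarizer, the column names, and the sample values are assumptions for illustration and are not part of the PySpark API.

```python
class SimpleBinarizer:
    """Hypothetical stand-in for a Transformer: appends a thresholded column."""

    def __init__(self, threshold, input_col, output_col):
        self.threshold = threshold
        self.input_col = input_col
        self.output_col = output_col

    def transform(self, rows):
        # Build new rows and leave the input untouched, mirroring the
        # immutability of a DataFrame.
        return [
            {**row, self.output_col: 1.0 if row[self.input_col] > self.threshold else 0.0}
            for row in rows
        ]


# Made-up sample rows; the column name is illustrative only.
rows = [{"glucose": 85.0}, {"glucose": 148.0}, {"glucose": 120.0}]
binarizer = SimpleBinarizer(threshold=120.0, input_col="glucose", output_col="high_glucose")
transformed = binarizer.transform(rows)
```

Values strictly greater than the threshold map to 1.0 and all others to 0.0, and the original rows keep their shape because the new column is added to copies.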


• Estimators

An Estimator is a learning algorithm that is trained on data to produce a model. Estimators implement a method called fit(), which accepts a DataFrame and returns a fitted model; the model is itself a Transformer that can then generate predictions or classifications. For example, the clustering algorithm KMeans is an Estimator, and calling fit() on it trains a KMeansModel.

Uses in project:

1. Used this concept while building the models for K-means and for Logistic Regression.
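The relationship between an Estimator and the model it produces can be sketched in plain Python. The classes below are hypothetical stand-ins, not PySpark classes: fit() learns a mean and a sample standard deviation, and the returned model exposes transform(). Centring and scaling together is a simplification chosen for the illustration.

```python
import math


class SimpleStandardScaler:
    """Hypothetical Estimator: fit() learns statistics and returns a model."""

    def fit(self, values):
        mean = sum(values) / len(values)
        # Sample standard deviation (n - 1 in the denominator).
        variance = sum((v - mean) ** 2 for v in values) / (len(values) - 1)
        return SimpleStandardScalerModel(mean, math.sqrt(variance))


class SimpleStandardScalerModel:
    """The fitted model acts as a Transformer through transform()."""

    def __init__(self, mean, std):
        self.mean = mean
        self.std = std

    def transform(self, values):
        return [(v - self.mean) / self.std for v in values]


model = SimpleStandardScaler().fit([2.0, 4.0, 6.0])
scaled = model.transform([2.0, 4.0, 6.0])
```

The estimator holds no learned state of its own; everything learned from the data lives in the model object, which is why the same fitted model can be reused on new data.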


2.2 Collaborative Filtering

Collaborative filtering is one method for building a recommendation engine. Specifically, collaborative filtering makes recommendations to a user based on that user's preferences for a group of items and their past experience with product categories.

To build a recommendation engine, there are two methods available:

1. Content-based filtering and

2. Collaborative filtering

We used the ALS (Alternating Least Squares) method from Apache Spark MLlib for the collaborative filtering recommendation engine.

There are several types of collaborative filtering, such as user-user collaborative filtering and item-item collaborative filtering. These neighborhood-based variants use a k-nearest-neighbor approach to recommend items to the user based on ratings, whereas ALS learns latent factors for users and items from the rating matrix. While building the recommendation model, I used the PySpark classes ALS, MatrixFactorizationModel, and Rating. ALS trains the recommendation model, which is then used to make predictions. Rating represents a rating given to a product by a user ID.
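To make the alternating idea behind ALS concrete, here is a minimal pure-Python sketch with a single latent factor per user and per item. It is not the MLlib implementation; the function names, the regularization value, and the tiny rating list are assumptions made for illustration. With one factor, each least-squares update has a simple closed form.

```python
def objective(ratings, x, y, lam):
    """Regularized squared error of the rank-1 model r_ui ~ x[u] * y[i]."""
    error = sum((r - x[u] * y[i]) ** 2 for u, i, r in ratings)
    penalty = lam * (sum(v * v for v in x) + sum(v * v for v in y))
    return error + penalty


def als_rank1(ratings, n_users, n_items, lam=0.1, iterations=10):
    """Alternate exact least-squares updates of user and item factors."""
    x = [1.0] * n_users  # one latent factor per user
    y = [1.0] * n_items  # one latent factor per item
    for _ in range(iterations):
        # Fix the item factors and solve for each user factor in closed form.
        for u in range(n_users):
            rated = [(i, r) for uu, i, r in ratings if uu == u]
            x[u] = sum(r * y[i] for i, r in rated) / (sum(y[i] ** 2 for i, _ in rated) + lam)
        # Fix the user factors and solve for each item factor in closed form.
        for i in range(n_items):
            rated = [(u, r) for u, ii, r in ratings if ii == i]
            y[i] = sum(r * x[u] for u, r in rated) / (sum(x[u] ** 2 for u, _ in rated) + lam)
    return x, y


# (user, item, rating) triples; made-up values for illustration only.
ratings = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0), (1, 2, 1.0), (2, 1, 2.0), (2, 2, 5.0)]
initial = objective(ratings, [1.0] * 3, [1.0] * 3, lam=0.1)
x, y = als_rank1(ratings, n_users=3, n_items=3)
final = objective(ratings, x, y, lam=0.1)
predicted = x[0] * y[2]  # predicted rating for a user-item pair that was never rated
```

Because each half-step minimizes the objective exactly for one set of factors while the other is held fixed, the objective cannot increase from one step to the next. A real model would use several latent factors, which turns each update into a small linear system.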

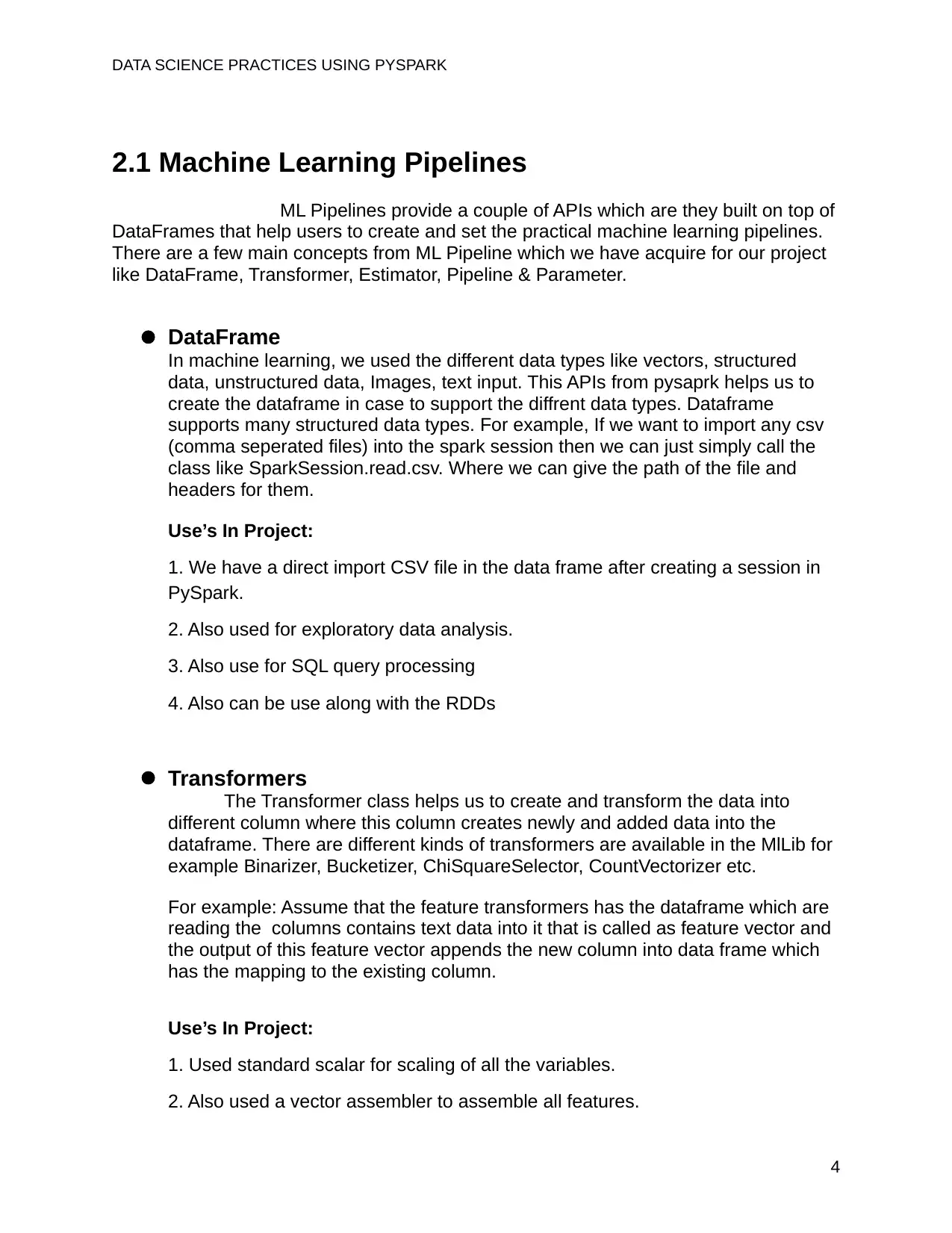

Fig.1

Fig.1 shows how the model is built on top of the data.


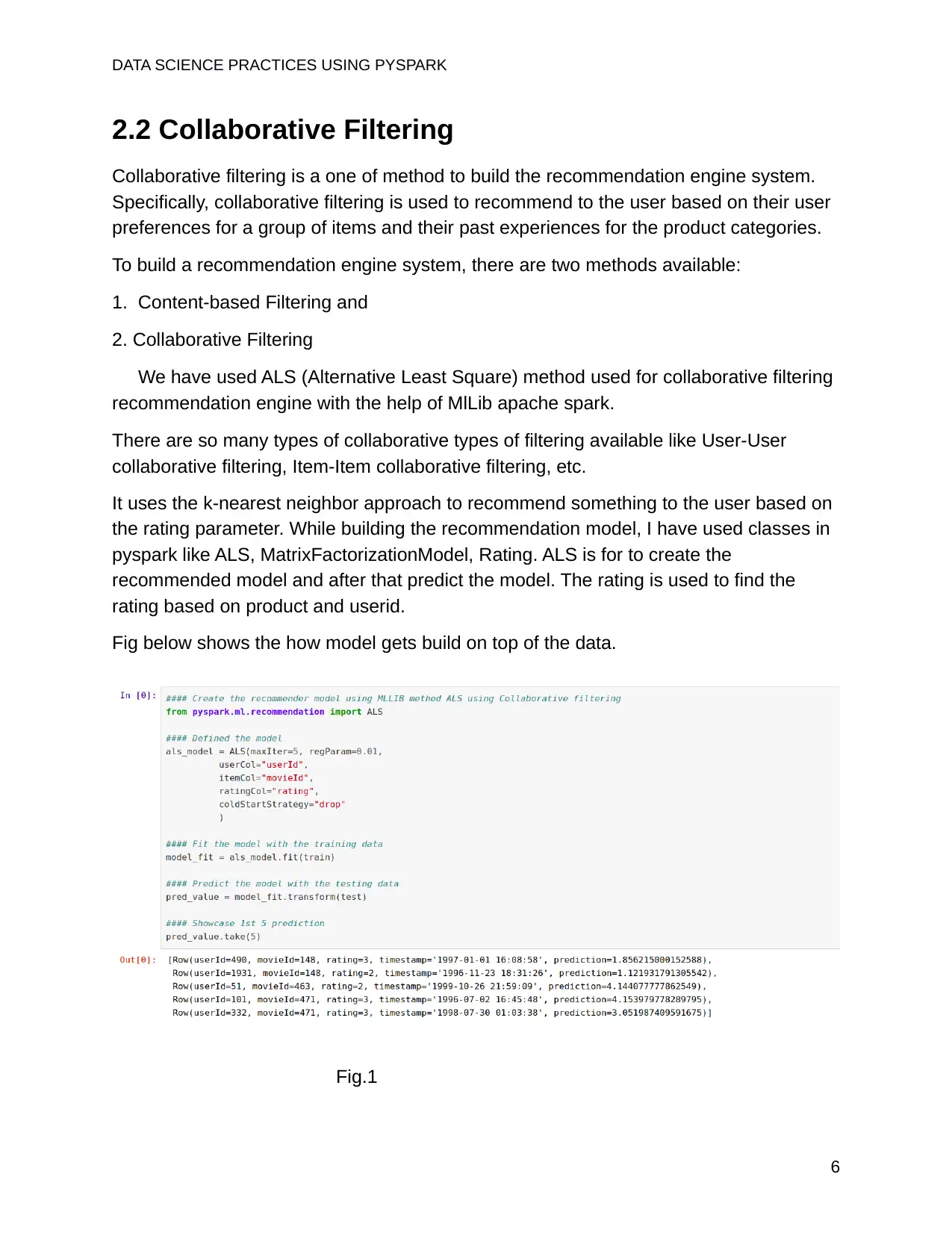

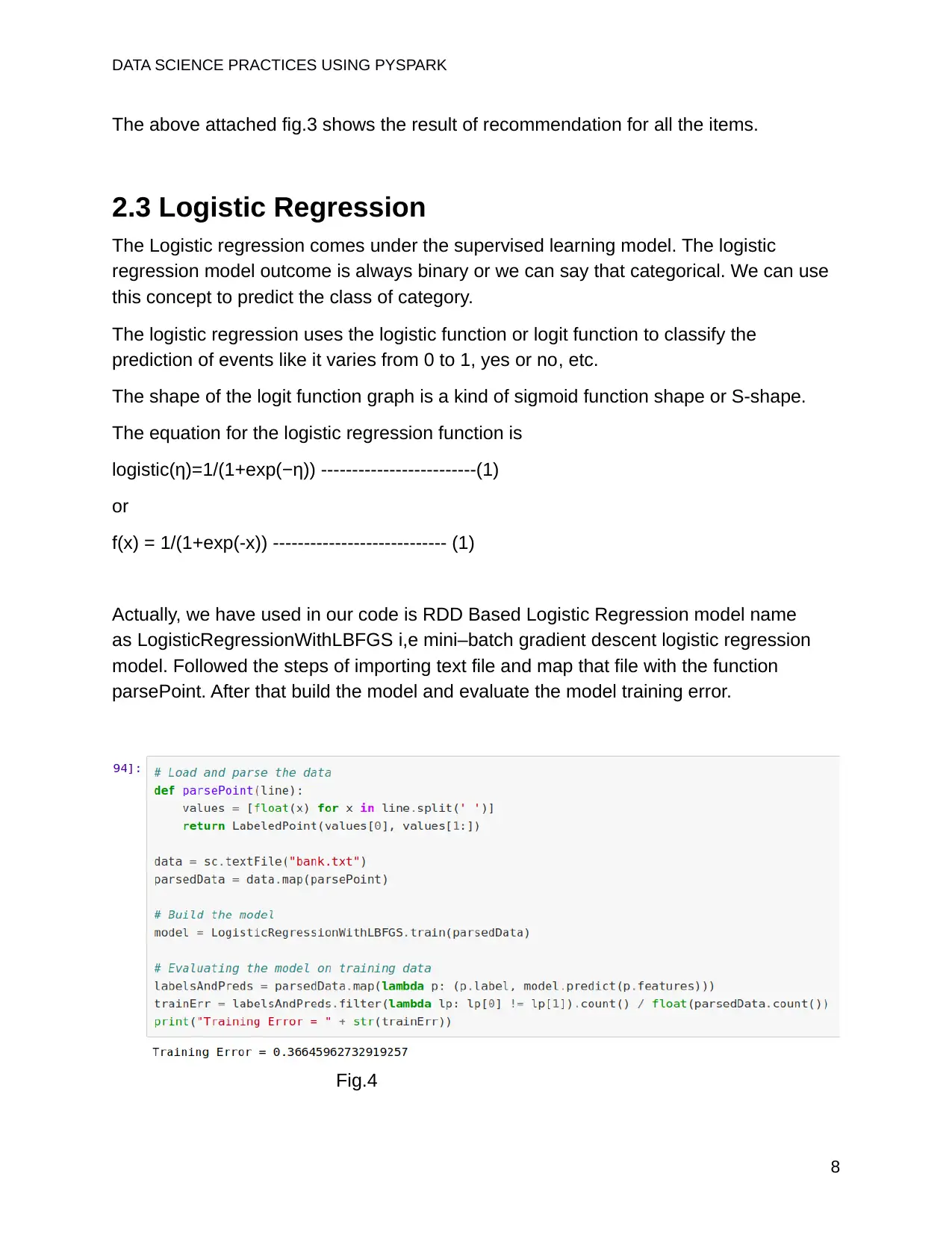

Fig.2

Fig.2 shows the result for prediction of the recommendation model.

Fig.3


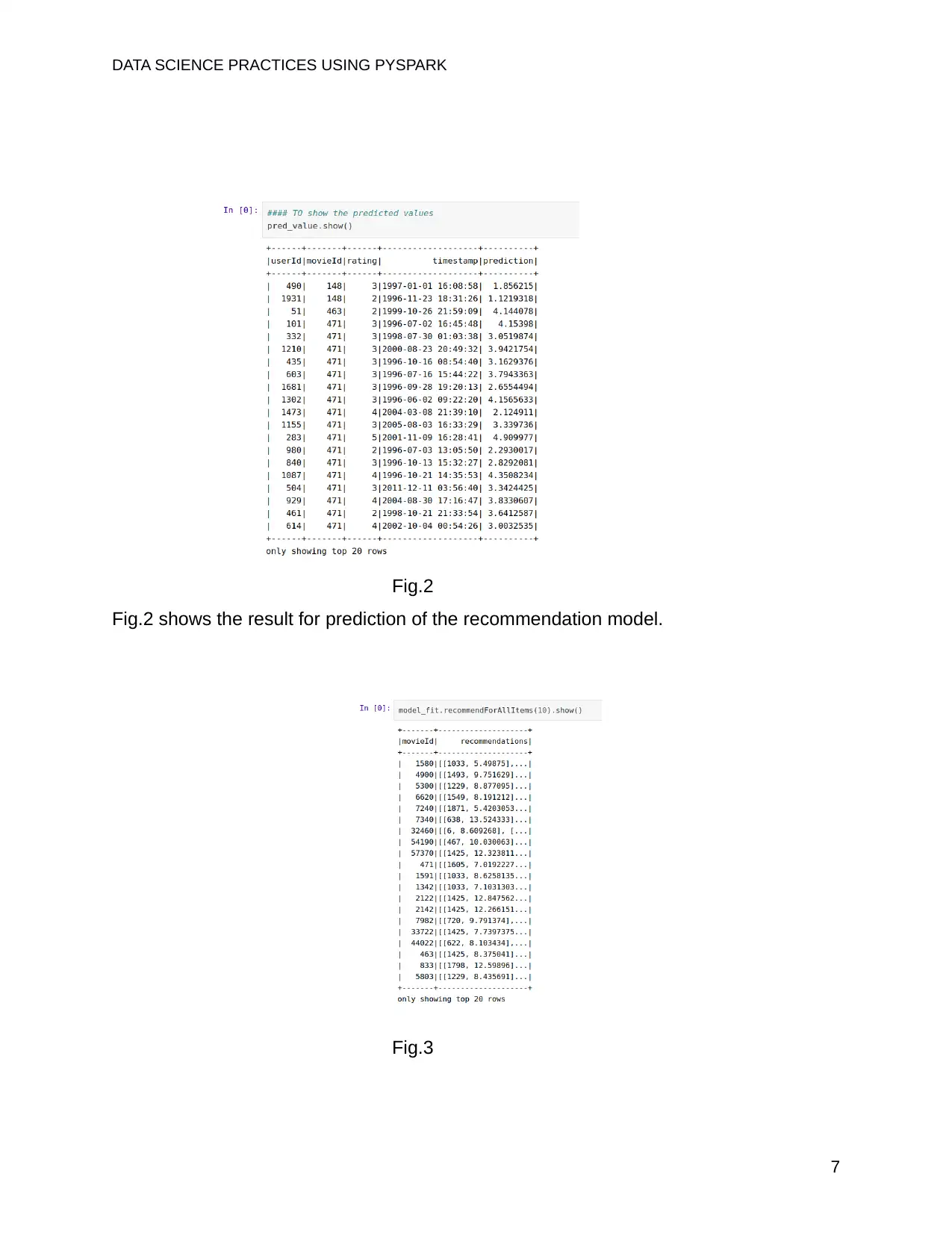

Fig.3 above shows the recommendation results for all the items.

2.3 Logistic Regression

Logistic regression is a supervised learning model. Its outcome is categorical, in the simplest case binary, so we can use it to predict the class of an observation.

Logistic regression uses the logistic function, the inverse of the logit function, to map a linear combination of the features to a probability between 0 and 1, which is then used to classify events such as 0 or 1, yes or no, etc.

The graph of the logistic function is a sigmoid, or S-shaped, curve.

The equation for the logistic function is

logistic(η) = 1/(1+exp(−η)) ------------------------- (1)

or, writing x for η,

f(x) = 1/(1+exp(−x))
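Equation (1) translates directly into code. The sketch below uses only the Python standard library; the helper names and the 0.5 decision threshold are assumptions chosen for illustration.

```python
import math


def logistic(eta):
    """Logistic (sigmoid) function from equation (1)."""
    return 1.0 / (1.0 + math.exp(-eta))


def logit(p):
    """Inverse of the logistic function: the log-odds of probability p."""
    return math.log(p / (1.0 - p))


def classify(eta, threshold=0.5):
    """Turn the probability into a binary class label."""
    return 1 if logistic(eta) >= threshold else 0
```

For example, logistic(0) gives a probability of 0.5, positive inputs give probabilities above 0.5, and negative inputs give probabilities below it, which is what produces the S-shaped curve.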

In our code we used the RDD-based logistic regression model LogisticRegressionWithLBFGS, i.e. logistic regression trained with the limited-memory BFGS (L-BFGS) optimizer. We imported the text file and mapped each line with the function parsePoint. After that, we built the model and evaluated its training error.
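The steps above (parse each line, obtain a model, measure the training error) can be sketched without Spark. The line format (label first, then space-separated features), the helper names, the sample lines, and the hand-picked weights are all assumptions; in the report the model is trained by LogisticRegressionWithLBFGS rather than set by hand.

```python
import math


def parse_point(line):
    """Parse 'label f1 f2 ...' into (label, features). The format is assumed."""
    values = [float(v) for v in line.split()]
    return values[0], values[1:]


def predict(weights, features):
    """Binary prediction from a linear score passed through the sigmoid."""
    score = sum(w * f for w, f in zip(weights, features))
    return 1.0 if 1.0 / (1.0 + math.exp(-score)) >= 0.5 else 0.0


def training_error(weights, points):
    """Fraction of training points whose predicted label is wrong."""
    wrong = sum(1 for label, features in points if predict(weights, features) != label)
    return wrong / len(points)


# Made-up lines for illustration only.
lines = ["1 2.0 1.0", "0 -1.0 0.5", "1 0.5 0.5", "0 1.0 0.5"]
points = [parse_point(line) for line in lines]
error = training_error([1.0, 1.0], points)  # weights are hand-picked, not trained
```

The training error is simply the share of misclassified training points, so one wrong prediction out of four gives an error of 0.25.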

Fig.4


Fig.4 shows the model building step and the accuracy of the model.

2.4 K-Means Clustering

Clustering is an unsupervised learning technique because it works on unlabeled data. We have to figure out the clusters from the patterns in the data. One of the most important clustering algorithms is K-means, which is simple and straightforward. The aim of this algorithm is to partition the data points into groups, with the number of groups given by the parameter k. The data points are separated based on the feature similarities present in the dataset.

The result of this model is:

1. The centroids of the k clusters &

2. Labels for the training dataset.

Each cluster centroid is a vector of feature values. The algorithm iterates over the dataset, assigning each point to its nearest centroid and recomputing the centroids, until the clusters stabilize.

The K value is generally chosen with the elbow method. Using the Euclidean distance, we compute the distance from each point to its centroid. Plotting the total within-cluster distance against each candidate k, we expect an elbow-shaped graph; the k at the bend, where the curve stops decreasing sharply and begins to flatten, is selected as the ideal value for our model.
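The elbow method can be illustrated with a small pure-Python version of K-means. This is a sketch under stated assumptions, not the pyspark.ml implementation: the function names, the naive initialization from the first k points, and the toy data (three tight groups of four points) are all chosen for illustration.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans_wcss(points, k, iterations=20):
    """Run Lloyd's algorithm and return the within-cluster sum of squares."""
    centroids = [points[i] for i in range(k)]  # naive init: first k points
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            clusters[nearest].append(p)
        # Recompute each centroid as the mean of its members; keep the old
        # centroid if a cluster ended up empty.
        centroids = [
            tuple(sum(coord) / len(members) for coord in zip(*members)) if members else centroids[c]
            for c, members in enumerate(clusters)
        ]
    return sum(min(squared_distance(p, c) for c in centroids) for p in points)


# Toy data: three well-separated groups, interleaved so that the first
# three points come from different groups.
points = [
    (0.0, 0.0), (10.0, 10.0), (0.0, 10.0),
    (0.0, 1.0), (10.0, 11.0), (0.0, 11.0),
    (1.0, 0.0), (11.0, 10.0), (1.0, 10.0),
    (1.0, 1.0), (11.0, 11.0), (1.0, 11.0),
]
wcss = {k: kmeans_wcss(points, k) for k in (1, 2, 3, 4)}
```

On this toy data the within-cluster sum of squares falls steeply up to k = 3 and only slightly from k = 3 to k = 4, so the bend of the curve sits at k = 3, matching the three groups in the data.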

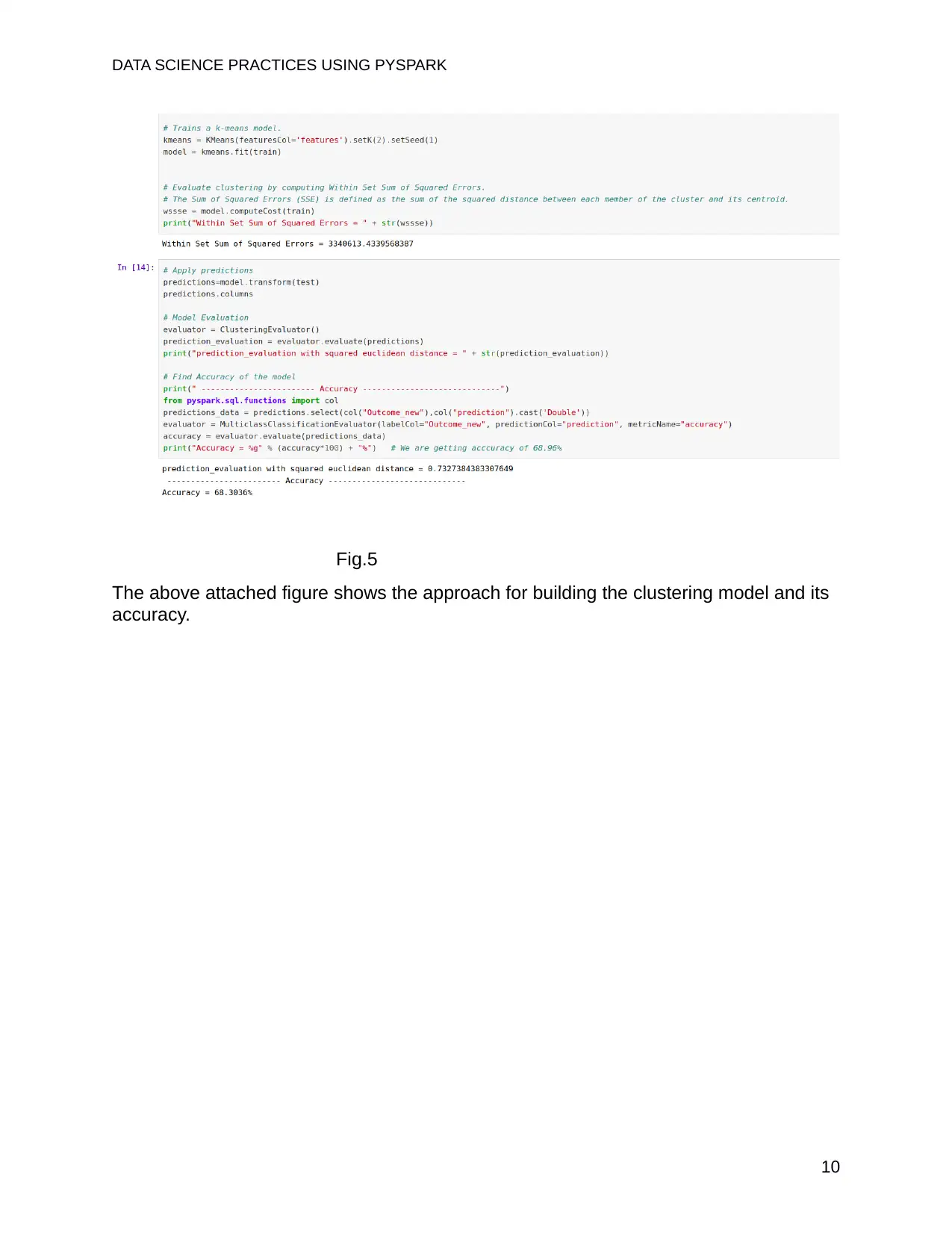

The pyspark.ml.clustering package provides the KMeans class. We used it to build the model, set the K value, and fit the model to the training data. For model evaluation we used the ClusteringEvaluator class.

To create predictions on the test dataset we used the model's transform() method. We used MulticlassClassificationEvaluator to evaluate the model accuracy and obtained 66.96% accuracy, which is quite low.


Fig.5

Fig.5 shows the approach for building the clustering model and its accuracy.


3.0 Conclusion

Here we learned the process of building models and making them more efficient using PySpark. We also learned how Spark is driven from Python and how to create clusters of data. We covered the key components of PySpark, namely DataFrame, MLlib, and RDD. This gives us focused insight into how to use PySpark for real-life problems.
