BIT795: Assignment 1 - Database Design, Data Quality, and Transactions

VerifiedAdded on 2023/05/29

|9

|4166

|59

Homework Assignment

AI Summary

This assignment solution addresses key concepts in database design and management, covering topics relevant to BIT795 Relational Database Systems. It begins by exploring the impact of new technologies on database administrators and the importance of continuous learning. The solution then delves into the three-schema architecture, differentiating between user views, conceptual schemas, and internal schemas, with examples from a software development company. Data quality is examined, emphasizing its growing significance and the relationship between metadata and data quality. The solution also provides practical methods for improving data entry processes. The assignment further analyzes data redundancy, identifying its dangers and related anomalies, and proposes normalization strategies to mitigate these issues. The final sections cover database design for a kitchen equipment factory, including customer questions, Database Life Cycle phases, and an explanation of concurrent transactions and their associated problems. The assignment demonstrates a strong understanding of database design principles and practical application.

Question 1: Database environment

Discuss how new developments in technology influence the job of the database administrator.

The DBA is responsible for designing and maintaining an enterprise's database.

New technologies are always evolving. With the rise of the new technologies, the Database Administrator

has to study these technologies and adopt beneficial changes. A Database administrator has to always

study what is new, what new vulnerabilities are coming in the database and be ready for how to make

your database secure against these vulnerabilities.

With every new technology, the DBA has to get himself trained and re-skilled

Question 2: The three-schema architecture

Consider the case of a large software development company that has several departments, such as Research

and Development, Testing, Sales, Marketing, Finance, Human Resources, and so on. The company’s database

has been designed using the three-schema architecture approach.

a) Using this example, discuss the difference between user views, a conceptual schema and an internal schema.

We can say that in the above example, all these departments are interacting with the user views. These

users do not have any knowledge about how the data is stored? How many tables are there? Which are the

primary keys etc? They only know that this is the view where they are storing and retrieving data.

The research and development department may have knowledge of conceptual. They also will not have

any knowledge about the internal schema. The conceptual schema describes the database structure of the

database hiding information about physical storage structures.

Only the database administrator and his team will have the knowledge about the internal schema. As it

describes how the data is actually stored on the physical device. (Atkinson, 1981)

b) Explain which schema is relevant for the job of the:

manager of the Sales department

User Views

company’s database administrator

Internal Schema

company’s data administrator

Conceptual Schema

Question 3: Data quality

a) Discuss the reasons why data quality becomes even more important in today' environment.

Data quality becomes even more important in today' environment because: -

Discuss how new developments in technology influence the job of the database administrator.

The DBA is responsible for designing and maintaining an enterprise's database.

New technologies are always evolving. With the rise of the new technologies, the Database Administrator

has to study these technologies and adopt beneficial changes. A Database administrator has to always

study what is new, what new vulnerabilities are coming in the database and be ready for how to make

your database secure against these vulnerabilities.

With every new technology, the DBA has to get himself trained and re-skilled

Question 2: The three-schema architecture

Consider the case of a large software development company that has several departments, such as Research

and Development, Testing, Sales, Marketing, Finance, Human Resources, and so on. The company’s database

has been designed using the three-schema architecture approach.

a) Using this example, discuss the difference between user views, a conceptual schema and an internal schema.

We can say that in the above example, all these departments are interacting with the user views. These

users do not have any knowledge about how the data is stored? How many tables are there? Which are the

primary keys etc? They only know that this is the view where they are storing and retrieving data.

The research and development department may have knowledge of conceptual. They also will not have

any knowledge about the internal schema. The conceptual schema describes the database structure of the

database hiding information about physical storage structures.

Only the database administrator and his team will have the knowledge about the internal schema. As it

describes how the data is actually stored on the physical device. (Atkinson, 1981)

b) Explain which schema is relevant for the job of the:

manager of the Sales department

User Views

company’s database administrator

Internal Schema

company’s data administrator

Conceptual Schema

Question 3: Data quality

a) Discuss the reasons why data quality becomes even more important in today' environment.

Data quality becomes even more important in today' environment because: -

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Without high-quality data, we cannot understand data or stay in contact with customers. By

using high data quality we can easily find out the key information related to the potential and

current customer.

Accurate and timely information to manage accounts and services.

By using high quality of data we ensure the best use of recourse.

High-quality data management services effectiveness.

Having quality data gives better insight and thus the ability to execute anything with more

precision.

b) Discuss the relationship between metadata and data quality in a database system.

Metadata describes the quality of statistics.

Metadata themselves is a data quality component

This improves the accessibility and availability of statistical data.

c) One of the important factors that influence data quality in organizations is data entry. List and discuss

at least three ways that can improve data entry processes.

1) Eliminate data redundancy: - firstly check what data is an important and only store that data in

the database and discard unnecessary data. We can use the data entry technique to enter a lesser

number of files so that it redesigns our form and ensures that our database no longer contains

unnecessary files.

2) Data profiling or analysis: - data profiling or analysis ensures everything that user enters,

follows the data quality rules like completeness, uniqueness and correctness of the data. We know

that a complete form may contain wrong information if the user does not enter the correct

information in the field. Data profiling or analysis offers various other other ways to check the

information.

3) Choose advance software: - always check the advance software option because it helps you with

automating data entry. You can also find a system that is modified to meet your demand.

Question 4: Data redundancy Part One

a) Explain what data redundancy is in the database environment and what the main danger of redundant

data is.

Data Redundancy: - data redundancy is the repetition of unnecessary data in a single table or an

entity in the database. All the repetition of the same data in more tables, this cause of the unnecessary

waste of storage space. For example, in a business, there are many clients and they come to buy things

various times? If in all these times, customer data is stored in a single table, there are lots of redundant

data that may because of system failure. So the best way is to make multiple tables and store all the

data in the database after normalization. According to this example, we must make a customer table

and provide a unique key to the id of customer and store that key as the foreign key in other related

tables.

The main problem of redundant data is that it may lead to the size of the database increasing

dramatically, that can even cause system failure and you may be lost all the data. (Date & White,

1993)

b) Using the file structure given in Table 1, provide examples and discuss at least three data redundancy

problems and identify related data anomalies.

Data redundancy problems: -

1) Here consultant C202 is listed more than one time. So when we will try to read or write the

related records it would not give accurate information and will provide us inconsistent data.

using high data quality we can easily find out the key information related to the potential and

current customer.

Accurate and timely information to manage accounts and services.

By using high quality of data we ensure the best use of recourse.

High-quality data management services effectiveness.

Having quality data gives better insight and thus the ability to execute anything with more

precision.

b) Discuss the relationship between metadata and data quality in a database system.

Metadata describes the quality of statistics.

Metadata themselves is a data quality component

This improves the accessibility and availability of statistical data.

c) One of the important factors that influence data quality in organizations is data entry. List and discuss

at least three ways that can improve data entry processes.

1) Eliminate data redundancy: - firstly check what data is an important and only store that data in

the database and discard unnecessary data. We can use the data entry technique to enter a lesser

number of files so that it redesigns our form and ensures that our database no longer contains

unnecessary files.

2) Data profiling or analysis: - data profiling or analysis ensures everything that user enters,

follows the data quality rules like completeness, uniqueness and correctness of the data. We know

that a complete form may contain wrong information if the user does not enter the correct

information in the field. Data profiling or analysis offers various other other ways to check the

information.

3) Choose advance software: - always check the advance software option because it helps you with

automating data entry. You can also find a system that is modified to meet your demand.

Question 4: Data redundancy Part One

a) Explain what data redundancy is in the database environment and what the main danger of redundant

data is.

Data Redundancy: - data redundancy is the repetition of unnecessary data in a single table or an

entity in the database. All the repetition of the same data in more tables, this cause of the unnecessary

waste of storage space. For example, in a business, there are many clients and they come to buy things

various times? If in all these times, customer data is stored in a single table, there are lots of redundant

data that may because of system failure. So the best way is to make multiple tables and store all the

data in the database after normalization. According to this example, we must make a customer table

and provide a unique key to the id of customer and store that key as the foreign key in other related

tables.

The main problem of redundant data is that it may lead to the size of the database increasing

dramatically, that can even cause system failure and you may be lost all the data. (Date & White,

1993)

b) Using the file structure given in Table 1, provide examples and discuss at least three data redundancy

problems and identify related data anomalies.

Data redundancy problems: -

1) Here consultant C202 is listed more than one time. So when we will try to read or write the

related records it would not give accurate information and will provide us inconsistent data.

In this file structure, there is more than one record for each consultant. So it is very difficult to

maintain data integrity. There might be cases on updating that some data is changed and other remains

the same. (Date & White, 1990)

2) If patient number PN311 is deleted then the information of related consultant and room number is

also deleted.

Data anomalies: -

1) Insertion anomaly: - If we want to add a new consultant C101. If we try to add new consultant

then firstly we will need a patient that fixed the appointment with consultant C101. So insertion

anomaly occurs here.

2) Deletion anomaly: - if we delete Patient Number PN311 then we also lose Consultant C228 and

room number R02 information. So Deletion anomaly occurs here.

3) Updation or modification anomaly: - in this table structure if Consultant ‘Tom Bloom’ changes

his name, then this information will be changed in multiple rows. So it will cause update anomaly.

Question 5: Data redundancy Part Two

Explain the cause of data redundancy in the file structure shown in Table 1 (above). Suggest what needs to be

done to this file structure to avoid data redundancy and related data anomalies.

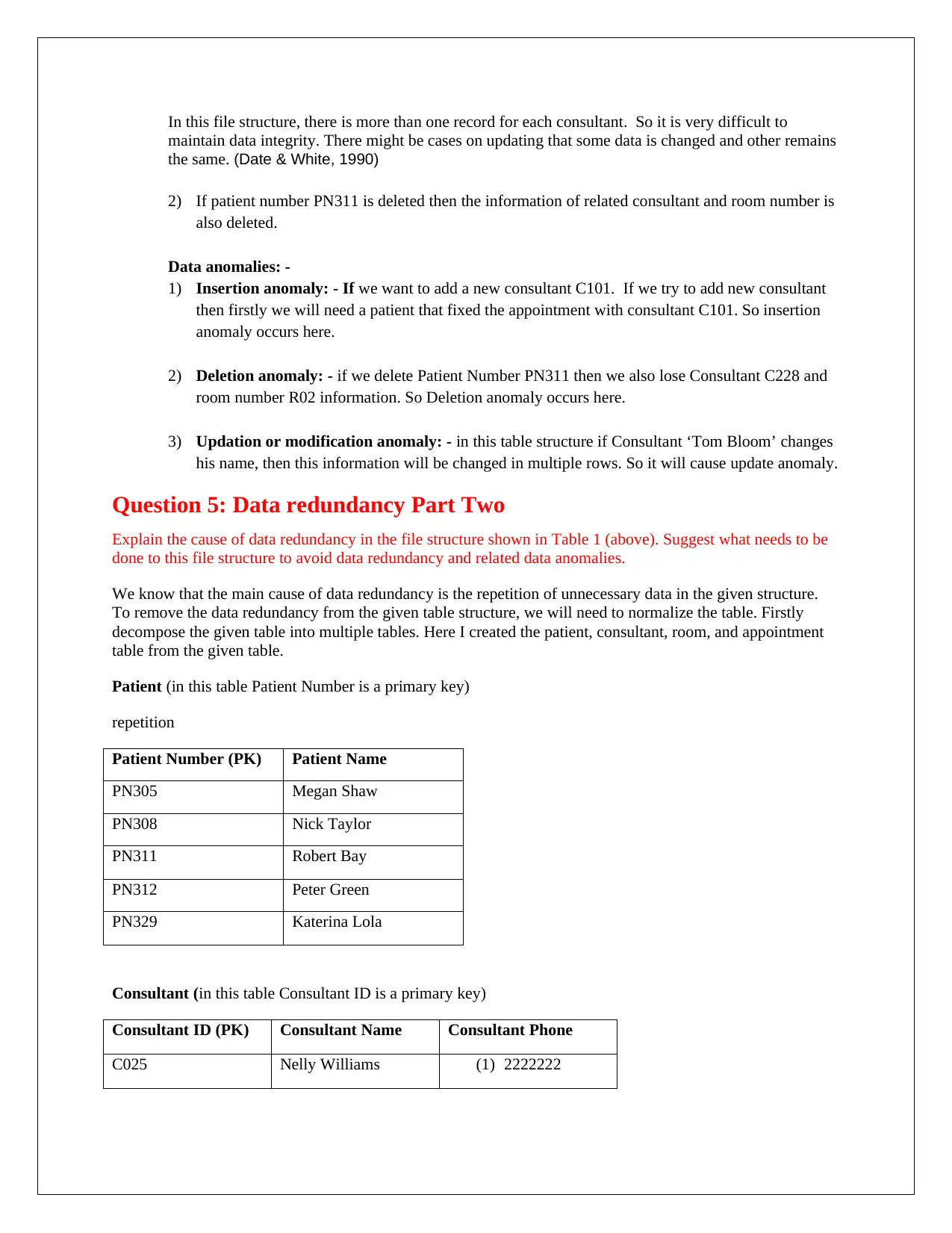

We know that the main cause of data redundancy is the repetition of unnecessary data in the given structure.

To remove the data redundancy from the given table structure, we will need to normalize the table. Firstly

decompose the given table into multiple tables. Here I created the patient, consultant, room, and appointment

table from the given table.

Patient (in this table Patient Number is a primary key)

repetition

Patient Number (PK) Patient Name

PN305 Megan Shaw

PN308 Nick Taylor

PN311 Robert Bay

PN312 Peter Green

PN329 Katerina Lola

Consultant (in this table Consultant ID is a primary key)

Consultant ID (PK) Consultant Name Consultant Phone

C025 Nelly Williams (1) 2222222

maintain data integrity. There might be cases on updating that some data is changed and other remains

the same. (Date & White, 1990)

2) If patient number PN311 is deleted then the information of related consultant and room number is

also deleted.

Data anomalies: -

1) Insertion anomaly: - If we want to add a new consultant C101. If we try to add new consultant

then firstly we will need a patient that fixed the appointment with consultant C101. So insertion

anomaly occurs here.

2) Deletion anomaly: - if we delete Patient Number PN311 then we also lose Consultant C228 and

room number R02 information. So Deletion anomaly occurs here.

3) Updation or modification anomaly: - in this table structure if Consultant ‘Tom Bloom’ changes

his name, then this information will be changed in multiple rows. So it will cause update anomaly.

Question 5: Data redundancy Part Two

Explain the cause of data redundancy in the file structure shown in Table 1 (above). Suggest what needs to be

done to this file structure to avoid data redundancy and related data anomalies.

We know that the main cause of data redundancy is the repetition of unnecessary data in the given structure.

To remove the data redundancy from the given table structure, we will need to normalize the table. Firstly

decompose the given table into multiple tables. Here I created the patient, consultant, room, and appointment

table from the given table.

Patient (in this table Patient Number is a primary key)

repetition

Patient Number (PK) Patient Name

PN305 Megan Shaw

PN308 Nick Taylor

PN311 Robert Bay

PN312 Peter Green

PN329 Katerina Lola

Consultant (in this table Consultant ID is a primary key)

Consultant ID (PK) Consultant Name Consultant Phone

C025 Nelly Williams (1) 2222222

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

C202 Tom Bloom (2) 1111111

C228 Marry Gardiner (3) 3333333

Room (Room is Primary key)

room (PK)

R02

R03

R08

Appointment (in this table Appointment number is a Primary key and Patient number, Consultant ID and

Room are the foreign keys)

Appointment Number

(PK)

Patient

Number (FK)

Consultant ID

(FK)

Room (FK) Appointment Date

and time

A101 PN305 C025 R08 5/10/2016 09.00

A102 PN308 C202 R03 8/10/2016 14.03

A103 PN311 C228 R02 5/10/2016 09.00

A104 PN311 C228 R02 6/10/2016 13.30

A105 PN312 C202 R03 7/10/2016 14.30

A106 PN329 C202 R08 6/10/2016 16.00

A107 PN329 C025 R08 5/10/2016 10.30

Remove anomalies: -

1) Insertion anomaly: - now we can easily insert the Consultant C101. No need to add the related

patient if not required.

2) Deletion anomaly: - now we can easily delete patient PN311. After normalization, we do not lose

relation consultant and room data and also no need to delete that patient multiple times.

3) Updation or modification anomaly: - now we can easily change ‘Tom Bloom’ name. no need to

update multiple records.

Question 6: Designing a database

You have been asked to develop a database system for a large factory that produces kitchen equipment, mostly

pots and pans of many types and sizes. The factory sends its products to many customers across the country.

You started the database design process by preparing the list of questions that you want to ask your customer.

You also prepared a draft of the Database Life Cycle (DBLC).

a) Provide examples of at least five of your questions for your customer and discuss how the answers to those

questions would influence the database design.

Q1. What are the main types of pans and pots that you want to buy?

C228 Marry Gardiner (3) 3333333

Room (Room is Primary key)

room (PK)

R02

R03

R08

Appointment (in this table Appointment number is a Primary key and Patient number, Consultant ID and

Room are the foreign keys)

Appointment Number

(PK)

Patient

Number (FK)

Consultant ID

(FK)

Room (FK) Appointment Date

and time

A101 PN305 C025 R08 5/10/2016 09.00

A102 PN308 C202 R03 8/10/2016 14.03

A103 PN311 C228 R02 5/10/2016 09.00

A104 PN311 C228 R02 6/10/2016 13.30

A105 PN312 C202 R03 7/10/2016 14.30

A106 PN329 C202 R08 6/10/2016 16.00

A107 PN329 C025 R08 5/10/2016 10.30

Remove anomalies: -

1) Insertion anomaly: - now we can easily insert the Consultant C101. No need to add the related

patient if not required.

2) Deletion anomaly: - now we can easily delete patient PN311. After normalization, we do not lose

relation consultant and room data and also no need to delete that patient multiple times.

3) Updation or modification anomaly: - now we can easily change ‘Tom Bloom’ name. no need to

update multiple records.

Question 6: Designing a database

You have been asked to develop a database system for a large factory that produces kitchen equipment, mostly

pots and pans of many types and sizes. The factory sends its products to many customers across the country.

You started the database design process by preparing the list of questions that you want to ask your customer.

You also prepared a draft of the Database Life Cycle (DBLC).

a) Provide examples of at least five of your questions for your customer and discuss how the answers to those

questions would influence the database design.

Q1. What are the main types of pans and pots that you want to buy?

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

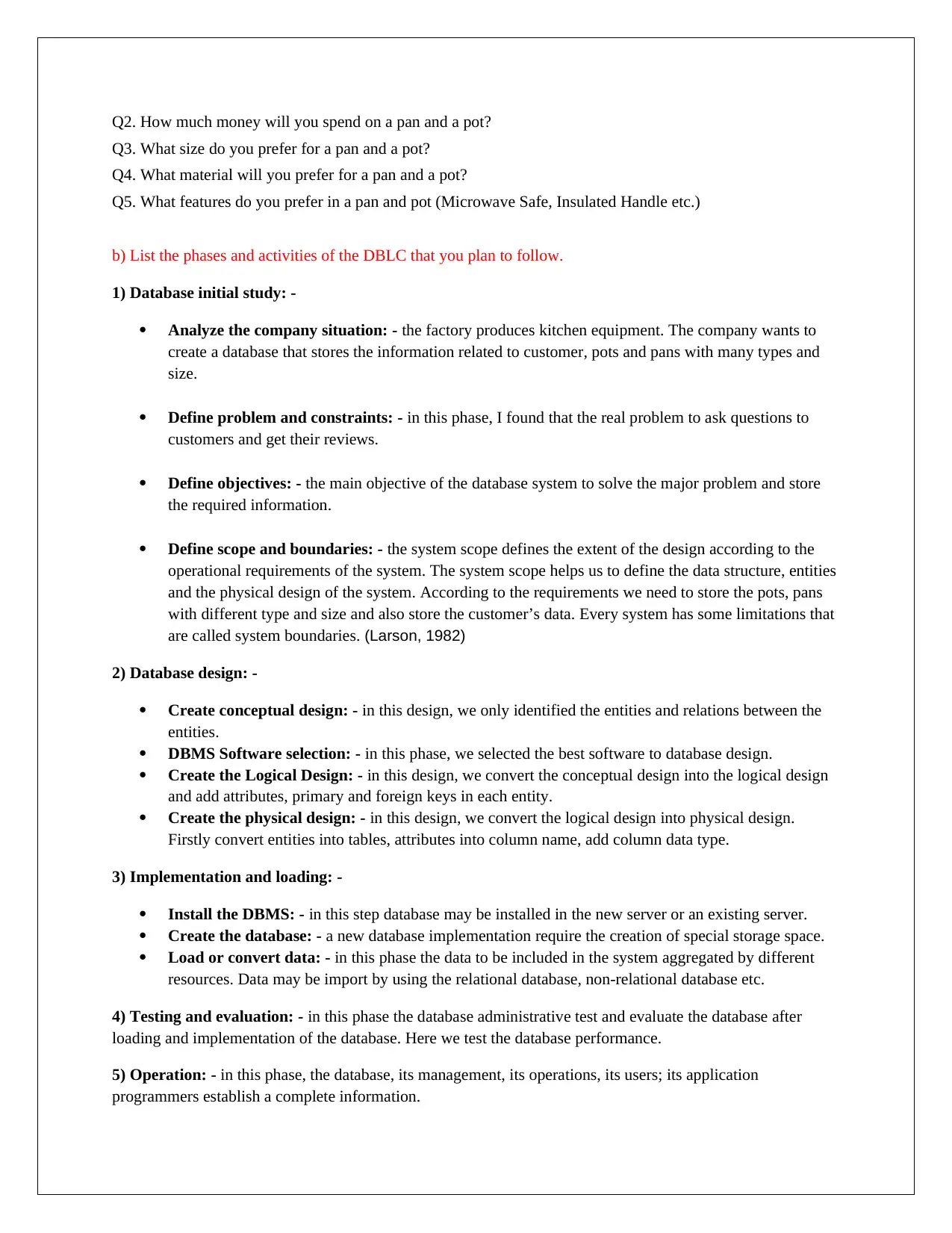

Q2. How much money will you spend on a pan and a pot?

Q3. What size do you prefer for a pan and a pot?

Q4. What material will you prefer for a pan and a pot?

Q5. What features do you prefer in a pan and pot (Microwave Safe, Insulated Handle etc.)

b) List the phases and activities of the DBLC that you plan to follow.

1) Database initial study: -

Analyze the company situation: - the factory produces kitchen equipment. The company wants to

create a database that stores the information related to customer, pots and pans with many types and

size.

Define problem and constraints: - in this phase, I found that the real problem to ask questions to

customers and get their reviews.

Define objectives: - the main objective of the database system to solve the major problem and store

the required information.

Define scope and boundaries: - the system scope defines the extent of the design according to the

operational requirements of the system. The system scope helps us to define the data structure, entities

and the physical design of the system. According to the requirements we need to store the pots, pans

with different type and size and also store the customer’s data. Every system has some limitations that

are called system boundaries. (Larson, 1982)

2) Database design: -

Create conceptual design: - in this design, we only identified the entities and relations between the

entities.

DBMS Software selection: - in this phase, we selected the best software to database design.

Create the Logical Design: - in this design, we convert the conceptual design into the logical design

and add attributes, primary and foreign keys in each entity.

Create the physical design: - in this design, we convert the logical design into physical design.

Firstly convert entities into tables, attributes into column name, add column data type.

3) Implementation and loading: -

Install the DBMS: - in this step database may be installed in the new server or an existing server.

Create the database: - a new database implementation require the creation of special storage space.

Load or convert data: - in this phase the data to be included in the system aggregated by different

resources. Data may be import by using the relational database, non-relational database etc.

4) Testing and evaluation: - in this phase the database administrative test and evaluate the database after

loading and implementation of the database. Here we test the database performance.

5) Operation: - in this phase, the database, its management, its operations, its users; its application

programmers establish a complete information.

Q3. What size do you prefer for a pan and a pot?

Q4. What material will you prefer for a pan and a pot?

Q5. What features do you prefer in a pan and pot (Microwave Safe, Insulated Handle etc.)

b) List the phases and activities of the DBLC that you plan to follow.

1) Database initial study: -

Analyze the company situation: - the factory produces kitchen equipment. The company wants to

create a database that stores the information related to customer, pots and pans with many types and

size.

Define problem and constraints: - in this phase, I found that the real problem to ask questions to

customers and get their reviews.

Define objectives: - the main objective of the database system to solve the major problem and store

the required information.

Define scope and boundaries: - the system scope defines the extent of the design according to the

operational requirements of the system. The system scope helps us to define the data structure, entities

and the physical design of the system. According to the requirements we need to store the pots, pans

with different type and size and also store the customer’s data. Every system has some limitations that

are called system boundaries. (Larson, 1982)

2) Database design: -

Create conceptual design: - in this design, we only identified the entities and relations between the

entities.

DBMS Software selection: - in this phase, we selected the best software to database design.

Create the Logical Design: - in this design, we convert the conceptual design into the logical design

and add attributes, primary and foreign keys in each entity.

Create the physical design: - in this design, we convert the logical design into physical design.

Firstly convert entities into tables, attributes into column name, add column data type.

3) Implementation and loading: -

Install the DBMS: - in this step database may be installed in the new server or an existing server.

Create the database: - a new database implementation require the creation of special storage space.

Load or convert data: - in this phase the data to be included in the system aggregated by different

resources. Data may be import by using the relational database, non-relational database etc.

4) Testing and evaluation: - in this phase the database administrative test and evaluate the database after

loading and implementation of the database. Here we test the database performance.

5) Operation: - in this phase, the database, its management, its operations, its users; its application

programmers establish a complete information.

6) Maintain and Evaluation: - after performing all the phase the database administrator has the responsibility

to perform routine maintenance activities. For example backup, recovery etc.

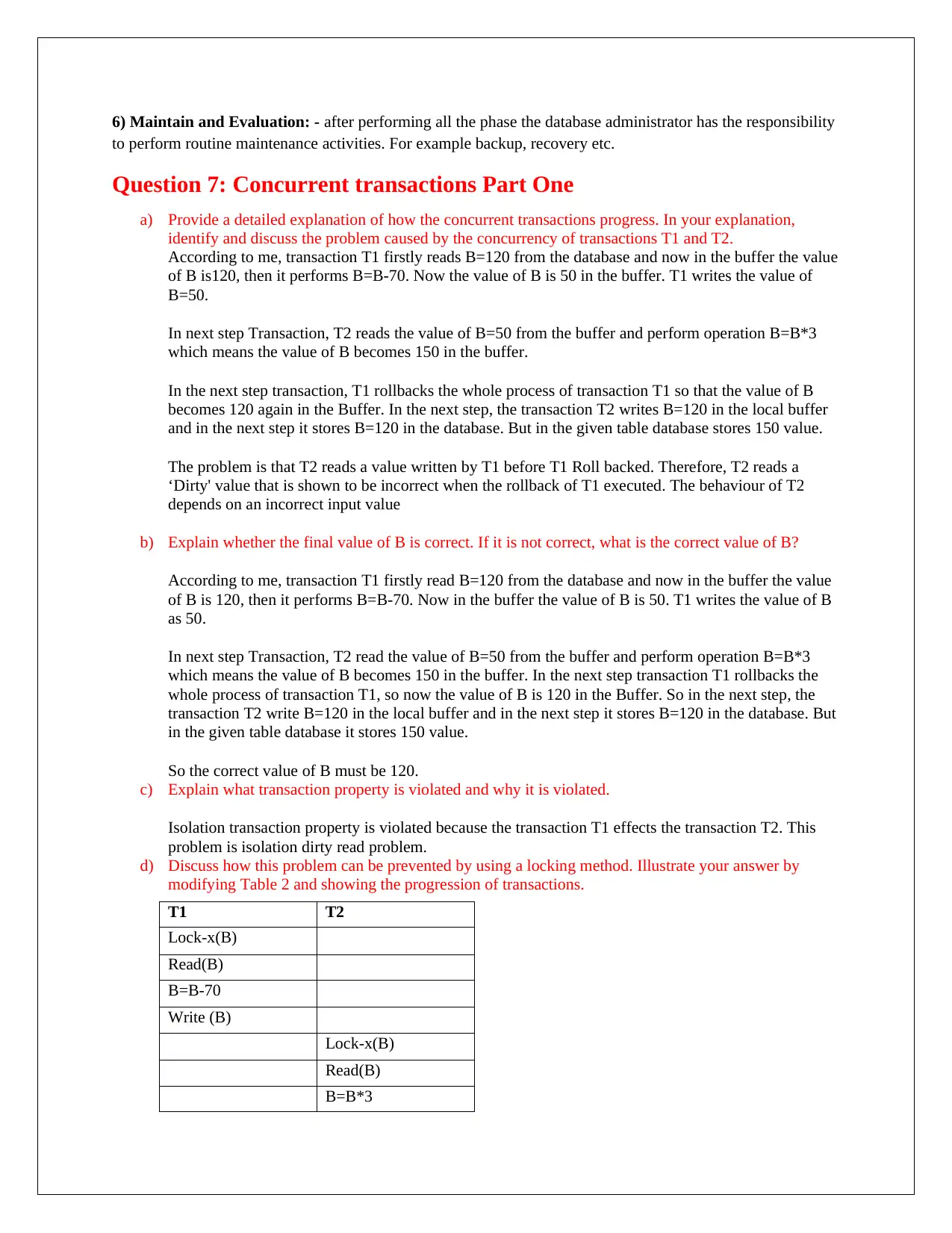

Question 7: Concurrent transactions Part One

a) Provide a detailed explanation of how the concurrent transactions progress. In your explanation,

identify and discuss the problem caused by the concurrency of transactions T1 and T2.

According to me, transaction T1 firstly reads B=120 from the database and now in the buffer the value

of B is120, then it performs B=B-70. Now the value of B is 50 in the buffer. T1 writes the value of

B=50.

In next step Transaction, T2 reads the value of B=50 from the buffer and perform operation B=B*3

which means the value of B becomes 150 in the buffer.

In the next step transaction, T1 rollbacks the whole process of transaction T1 so that the value of B

becomes 120 again in the Buffer. In the next step, the transaction T2 writes B=120 in the local buffer

and in the next step it stores B=120 in the database. But in the given table database stores 150 value.

The problem is that T2 reads a value written by T1 before T1 Roll backed. Therefore, T2 reads a

‘Dirty' value that is shown to be incorrect when the rollback of T1 executed. The behaviour of T2

depends on an incorrect input value

b) Explain whether the final value of B is correct. If it is not correct, what is the correct value of B?

According to me, transaction T1 firstly read B=120 from the database and now in the buffer the value

of B is 120, then it performs B=B-70. Now in the buffer the value of B is 50. T1 writes the value of B

as 50.

In next step Transaction, T2 read the value of B=50 from the buffer and perform operation B=B*3

which means the value of B becomes 150 in the buffer. In the next step transaction T1 rollbacks the

whole process of transaction T1, so now the value of B is 120 in the Buffer. So in the next step, the

transaction T2 write B=120 in the local buffer and in the next step it stores B=120 in the database. But

in the given table database it stores 150 value.

So the correct value of B must be 120.

c) Explain what transaction property is violated and why it is violated.

Isolation transaction property is violated because the transaction T1 effects the transaction T2. This

problem is isolation dirty read problem.

d) Discuss how this problem can be prevented by using a locking method. Illustrate your answer by

modifying Table 2 and showing the progression of transactions.

T1 T2

Lock-x(B)

Read(B)

B=B-70

Write (B)

Lock-x(B)

Read(B)

B=B*3

to perform routine maintenance activities. For example backup, recovery etc.

Question 7: Concurrent transactions Part One

a) Provide a detailed explanation of how the concurrent transactions progress. In your explanation,

identify and discuss the problem caused by the concurrency of transactions T1 and T2.

According to me, transaction T1 firstly reads B=120 from the database and now in the buffer the value

of B is120, then it performs B=B-70. Now the value of B is 50 in the buffer. T1 writes the value of

B=50.

In next step Transaction, T2 reads the value of B=50 from the buffer and perform operation B=B*3

which means the value of B becomes 150 in the buffer.

In the next step transaction, T1 rollbacks the whole process of transaction T1 so that the value of B

becomes 120 again in the Buffer. In the next step, the transaction T2 writes B=120 in the local buffer

and in the next step it stores B=120 in the database. But in the given table database stores 150 value.

The problem is that T2 reads a value written by T1 before T1 Roll backed. Therefore, T2 reads a

‘Dirty' value that is shown to be incorrect when the rollback of T1 executed. The behaviour of T2

depends on an incorrect input value

b) Explain whether the final value of B is correct. If it is not correct, what is the correct value of B?

According to me, transaction T1 firstly read B=120 from the database and now in the buffer the value

of B is 120, then it performs B=B-70. Now in the buffer the value of B is 50. T1 writes the value of B

as 50.

In next step Transaction, T2 read the value of B=50 from the buffer and perform operation B=B*3

which means the value of B becomes 150 in the buffer. In the next step transaction T1 rollbacks the

whole process of transaction T1, so now the value of B is 120 in the Buffer. So in the next step, the

transaction T2 write B=120 in the local buffer and in the next step it stores B=120 in the database. But

in the given table database it stores 150 value.

So the correct value of B must be 120.

c) Explain what transaction property is violated and why it is violated.

Isolation transaction property is violated because the transaction T1 effects the transaction T2. This

problem is isolation dirty read problem.

d) Discuss how this problem can be prevented by using a locking method. Illustrate your answer by

modifying Table 2 and showing the progression of transactions.

T1 T2

Lock-x(B)

Read(B)

B=B-70

Write (B)

Lock-x(B)

Read(B)

B=B*3

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

Rollback

Unlock(B)

Write(B)

Commit

Unlock(B)

e) Explain whether or not timestamping can be used to prevent the identified problem. In your answer,

discuss the difference between locking methods and timestamping methods.

The timestamping is used when the concurrent transition occurs. These transactions are equivalent to a

particular serial order of arrival, rather than any serial ordering.

We can't use the timestamping because this is non-recoverable scheduling.

1) Locking method manages the order between the conflict pair transaction along the execution time.

But the timestamping method waits to create the transaction.

2) There are the various version of locking strict, conservation and rigorous but timestamping

method is free form deadlock but starvation may occur in it.

3) The timestamp cannot lead to the deadlock and lock method do this job.

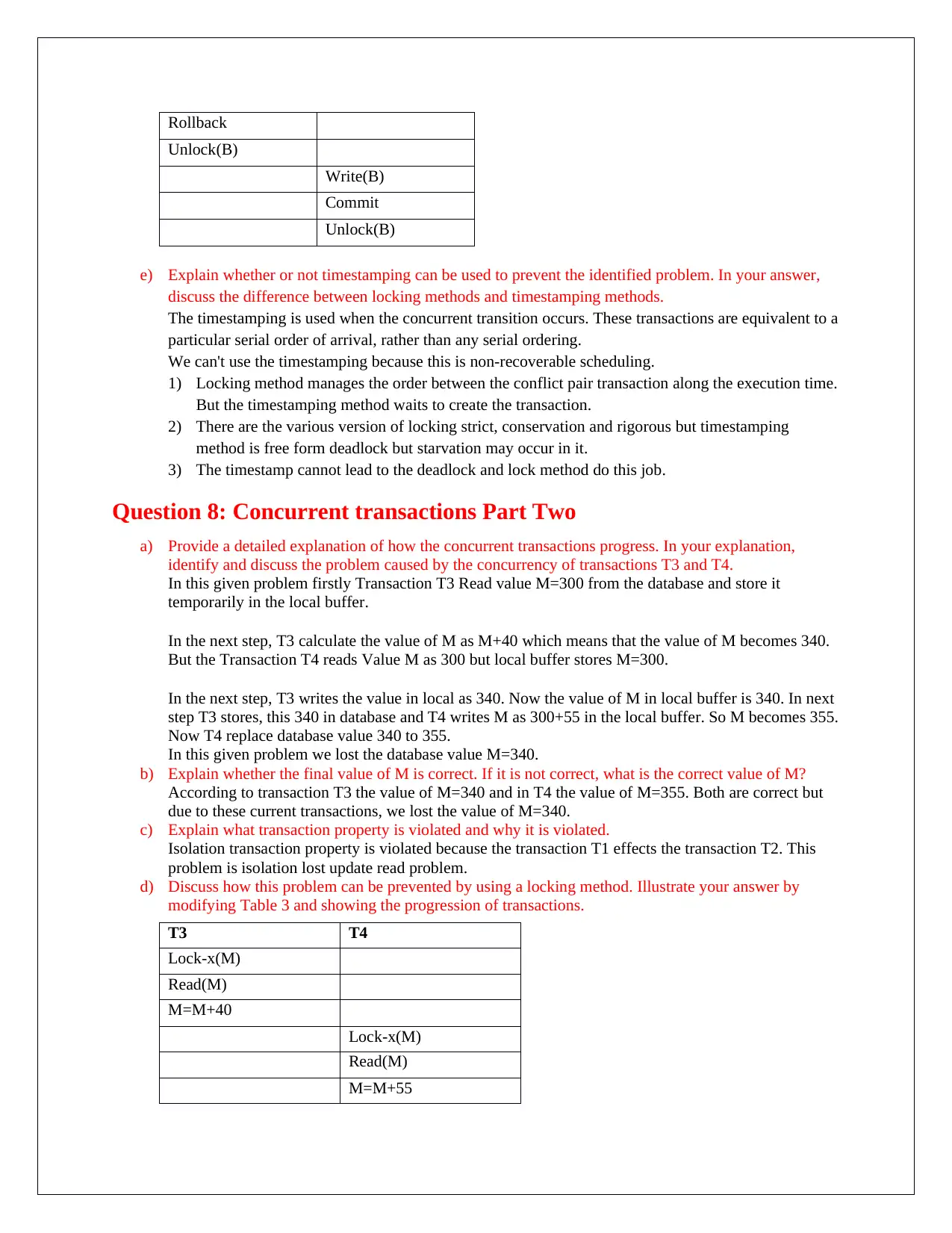

Question 8: Concurrent transactions Part Two

a) Provide a detailed explanation of how the concurrent transactions progress. In your explanation,

identify and discuss the problem caused by the concurrency of transactions T3 and T4.

In this given problem firstly Transaction T3 Read value M=300 from the database and store it

temporarily in the local buffer.

In the next step, T3 calculate the value of M as M+40 which means that the value of M becomes 340.

But the Transaction T4 reads Value M as 300 but local buffer stores M=300.

In the next step, T3 writes the value in local as 340. Now the value of M in local buffer is 340. In next

step T3 stores, this 340 in database and T4 writes M as 300+55 in the local buffer. So M becomes 355.

Now T4 replace database value 340 to 355.

In this given problem we lost the database value M=340.

b) Explain whether the final value of M is correct. If it is not correct, what is the correct value of M?

According to transaction T3 the value of M=340 and in T4 the value of M=355. Both are correct but

due to these current transactions, we lost the value of M=340.

c) Explain what transaction property is violated and why it is violated.

Isolation transaction property is violated because the transaction T1 effects the transaction T2. This

problem is isolation lost update read problem.

d) Discuss how this problem can be prevented by using a locking method. Illustrate your answer by

modifying Table 3 and showing the progression of transactions.

T3 T4

Lock-x(M)

Read(M)

M=M+40

Lock-x(M)

Read(M)

M=M+55

Unlock(B)

Write(B)

Commit

Unlock(B)

e) Explain whether or not timestamping can be used to prevent the identified problem. In your answer,

discuss the difference between locking methods and timestamping methods.

The timestamping is used when the concurrent transition occurs. These transactions are equivalent to a

particular serial order of arrival, rather than any serial ordering.

We can't use the timestamping because this is non-recoverable scheduling.

1) Locking method manages the order between the conflict pair transaction along the execution time.

But the timestamping method waits to create the transaction.

2) There are the various version of locking strict, conservation and rigorous but timestamping

method is free form deadlock but starvation may occur in it.

3) The timestamp cannot lead to the deadlock and lock method do this job.

Question 8: Concurrent transactions Part Two

a) Provide a detailed explanation of how the concurrent transactions progress. In your explanation,

identify and discuss the problem caused by the concurrency of transactions T3 and T4.

In this given problem firstly Transaction T3 Read value M=300 from the database and store it

temporarily in the local buffer.

In the next step, T3 calculate the value of M as M+40 which means that the value of M becomes 340.

But the Transaction T4 reads Value M as 300 but local buffer stores M=300.

In the next step, T3 writes the value in local as 340. Now the value of M in local buffer is 340. In next

step T3 stores, this 340 in database and T4 writes M as 300+55 in the local buffer. So M becomes 355.

Now T4 replace database value 340 to 355.

In this given problem we lost the database value M=340.

b) Explain whether the final value of M is correct. If it is not correct, what is the correct value of M?

According to transaction T3 the value of M=340 and in T4 the value of M=355. Both are correct but

due to these current transactions, we lost the value of M=340.

c) Explain what transaction property is violated and why it is violated.

Isolation transaction property is violated because the transaction T1 effects the transaction T2. This

problem is isolation lost update read problem.

d) Discuss how this problem can be prevented by using a locking method. Illustrate your answer by

modifying Table 3 and showing the progression of transactions.

T3 T4

Lock-x(M)

Read(M)

M=M+40

Lock-x(M)

Read(M)

M=M+55

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

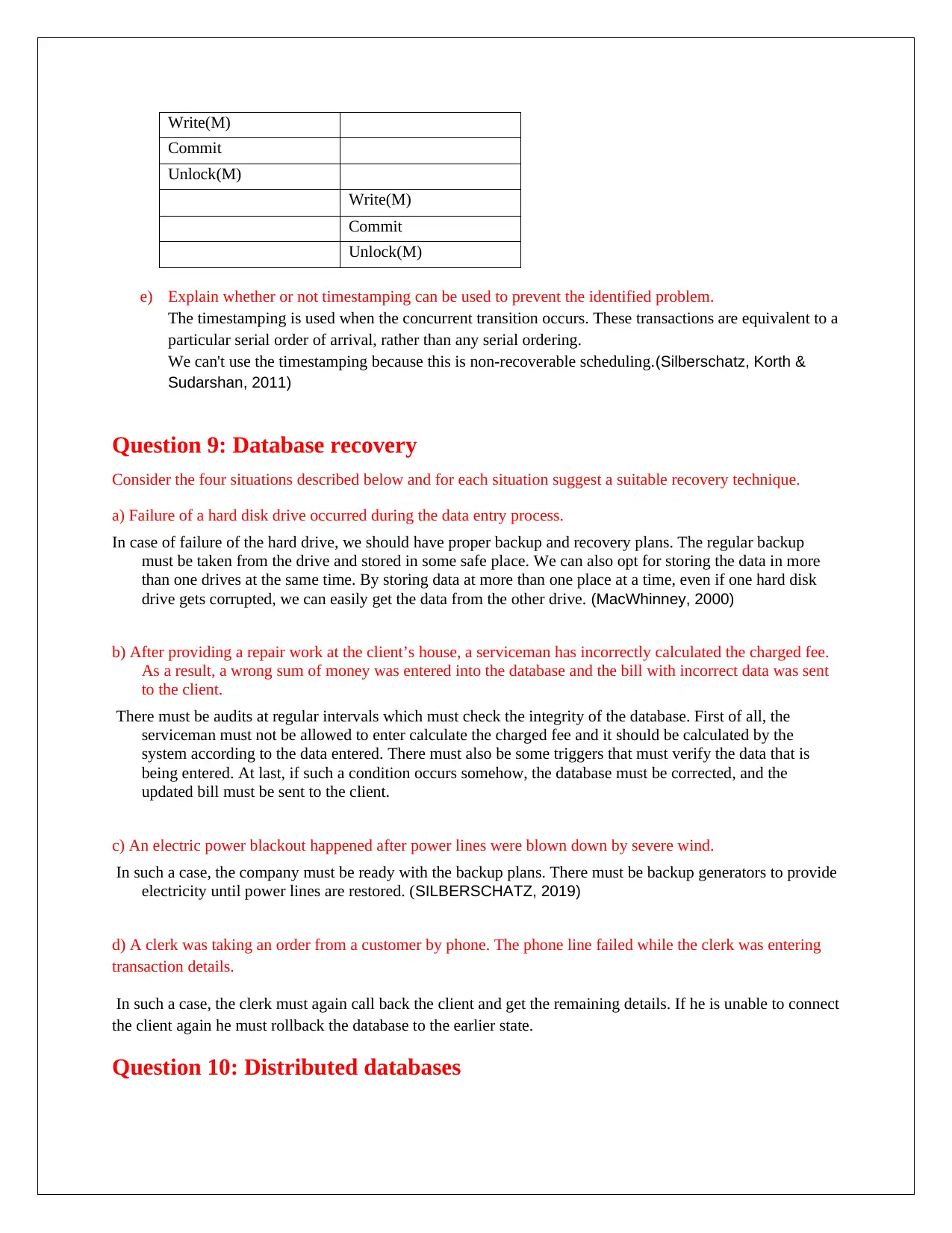

Write(M)

Commit

Unlock(M)

Write(M)

Commit

Unlock(M)

e) Explain whether or not timestamping can be used to prevent the identified problem.

The timestamping is used when the concurrent transition occurs. These transactions are equivalent to a

particular serial order of arrival, rather than any serial ordering.

We can't use the timestamping because this is non-recoverable scheduling.(Silberschatz, Korth &

Sudarshan, 2011)

Question 9: Database recovery

Consider the four situations described below and for each situation suggest a suitable recovery technique.

a) Failure of a hard disk drive occurred during the data entry process.

In case of failure of the hard drive, we should have proper backup and recovery plans. The regular backup

must be taken from the drive and stored in some safe place. We can also opt for storing the data in more

than one drives at the same time. By storing data at more than one place at a time, even if one hard disk

drive gets corrupted, we can easily get the data from the other drive. (MacWhinney, 2000)

b) After providing a repair work at the client’s house, a serviceman has incorrectly calculated the charged fee.

As a result, a wrong sum of money was entered into the database and the bill with incorrect data was sent

to the client.

There must be audits at regular intervals which must check the integrity of the database. First of all, the

serviceman must not be allowed to enter calculate the charged fee and it should be calculated by the

system according to the data entered. There must also be some triggers that must verify the data that is

being entered. At last, if such a condition occurs somehow, the database must be corrected, and the

updated bill must be sent to the client.

c) An electric power blackout happened after power lines were blown down by severe wind.

In such a case, the company must be ready with the backup plans. There must be backup generators to provide

electricity until power lines are restored. (SILBERSCHATZ, 2019)

d) A clerk was taking an order from a customer by phone. The phone line failed while the clerk was entering

transaction details.

In such a case, the clerk must again call back the client and get the remaining details. If he is unable to connect

the client again he must rollback the database to the earlier state.

Question 10: Distributed databases

Commit

Unlock(M)

Write(M)

Commit

Unlock(M)

e) Explain whether or not timestamping can be used to prevent the identified problem.

The timestamping is used when the concurrent transition occurs. These transactions are equivalent to a

particular serial order of arrival, rather than any serial ordering.

We can't use the timestamping because this is non-recoverable scheduling.(Silberschatz, Korth &

Sudarshan, 2011)

Question 9: Database recovery

Consider the four situations described below and for each situation suggest a suitable recovery technique.

a) Failure of a hard disk drive occurred during the data entry process.

In case of failure of the hard drive, we should have proper backup and recovery plans. The regular backup

must be taken from the drive and stored in some safe place. We can also opt for storing the data in more

than one drives at the same time. By storing data at more than one place at a time, even if one hard disk

drive gets corrupted, we can easily get the data from the other drive. (MacWhinney, 2000)

b) After providing a repair work at the client’s house, a serviceman has incorrectly calculated the charged fee.

As a result, a wrong sum of money was entered into the database and the bill with incorrect data was sent

to the client.

There must be audits at regular intervals which must check the integrity of the database. First of all, the

serviceman must not be allowed to enter calculate the charged fee and it should be calculated by the

system according to the data entered. There must also be some triggers that must verify the data that is

being entered. At last, if such a condition occurs somehow, the database must be corrected, and the

updated bill must be sent to the client.

c) An electric power blackout happened after power lines were blown down by severe wind.

In such a case, the company must be ready with the backup plans. There must be backup generators to provide

electricity until power lines are restored. (SILBERSCHATZ, 2019)

d) A clerk was taking an order from a customer by phone. The phone line failed while the clerk was entering

transaction details.

In such a case, the clerk must again call back the client and get the remaining details. If he is unable to connect

the client again he must rollback the database to the earlier state.

Question 10: Distributed databases

Consider a case of a large retail chain of grocery stores. The chain has many outlets across the country and a

large number of employees.

Discuss, giving reasons, what kind of database you would recommend for the retail chain. Your

recommendation may include distributed versus centralized; relational versus object-oriented, and transactional

versus analytical types of databases. In your discussion, explain all the advantages of the solution you

recommended. State any assumptions that you have made.

We would recommend a distributed database due to various reasons. As in the distributed database, the data is

stored in multiple physical locations, so in case of any disaster it will not affect the data and we can easily

retrieve it from other locations. We can have homogeneously distributed databases to store the data. It will also

make the operations faster.

I will prefer relational data because of high speed and high normalization. The data will be stored in rows and

columns and we can use the same data in relation to other data by using normalization. We will not have to

make copies of any data as in case of object-oriented database approach. Also, there are certain limits on the

size of each object that object-oriented database can have whereas relational database does not pose any such

restrictions.

I will also prefer a transactional database. In every database, there are cases when a transaction stops in

between, that may be because of power failures, hardware failures or any other reasons. If we use a

transactional database then the transactions will be rollbacked if they are not complete and we will ensure to

have a correct database at all times. Otherwise, it may lead to data corruption.(Ramarkrishnan, 1997)

References: -

Atkinson, M. (1981). Database. Maidenhead, Berkshire, England: Pergamon Infotech.

Date, C., & White, C. (1993). A guide to DB2. Reading: Addison-Wesley.

Date, C., & White, C. (1990). A guide to DB2. Reading, Mass.: Addison-Wesley.

Larson, J. (1982). Database management system anatomy. Lexington, Mass.: Lexington Books.

MacWhinney, B. (2000). The database. Mahwah, NJ [u.a.]: Lawrence Erlbaum.

Ramarkrishnan, R. (1997). Database management system. London: McGraw-Hill Pub. Co. (ISE Editions).

Silberschatz, A., Korth, H., & Sudarshan, S. (2011). Database system concepts. New York: McGraw.

SILBERSCHATZ. (2019). DATABASE SYSTEM CONCEPTS. [S.l.]: MCGRAW-HILL EDUCATION.

large number of employees.

Discuss, giving reasons, what kind of database you would recommend for the retail chain. Your

recommendation may include distributed versus centralized; relational versus object-oriented, and transactional

versus analytical types of databases. In your discussion, explain all the advantages of the solution you

recommended. State any assumptions that you have made.

We would recommend a distributed database due to various reasons. As in the distributed database, the data is

stored in multiple physical locations, so in case of any disaster it will not affect the data and we can easily

retrieve it from other locations. We can have homogeneously distributed databases to store the data. It will also

make the operations faster.

I will prefer relational data because of high speed and high normalization. The data will be stored in rows and

columns and we can use the same data in relation to other data by using normalization. We will not have to

make copies of any data as in case of object-oriented database approach. Also, there are certain limits on the

size of each object that object-oriented database can have whereas relational database does not pose any such

restrictions.

I will also prefer a transactional database. In every database, there are cases when a transaction stops in

between, that may be because of power failures, hardware failures or any other reasons. If we use a

transactional database then the transactions will be rollbacked if they are not complete and we will ensure to

have a correct database at all times. Otherwise, it may lead to data corruption.(Ramarkrishnan, 1997)

References: -

Atkinson, M. (1981). Database. Maidenhead, Berkshire, England: Pergamon Infotech.

Date, C., & White, C. (1993). A guide to DB2. Reading: Addison-Wesley.

Date, C., & White, C. (1990). A guide to DB2. Reading, Mass.: Addison-Wesley.

Larson, J. (1982). Database management system anatomy. Lexington, Mass.: Lexington Books.

MacWhinney, B. (2000). The database. Mahwah, NJ [u.a.]: Lawrence Erlbaum.

Ramarkrishnan, R. (1997). Database management system. London: McGraw-Hill Pub. Co. (ISE Editions).

Silberschatz, A., Korth, H., & Sudarshan, S. (2011). Database system concepts. New York: McGraw.

SILBERSCHATZ. (2019). DATABASE SYSTEM CONCEPTS. [S.l.]: MCGRAW-HILL EDUCATION.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

1 out of 9

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2026 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.