BUS5CA Assignment 1: Social Media Analysis Using SAS Text Miner

Added on 2022/12/27

Practical Assignment

AI Summary

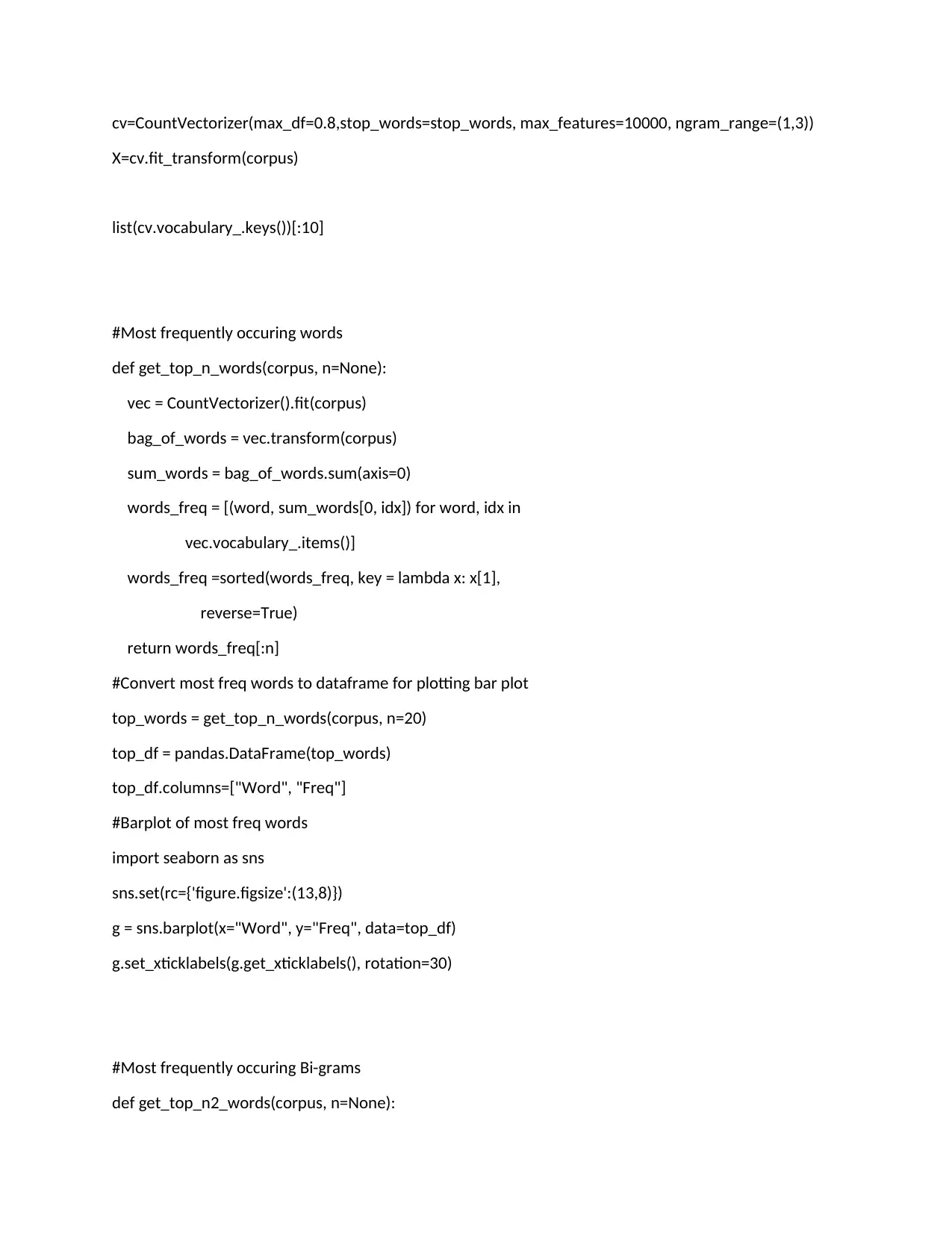

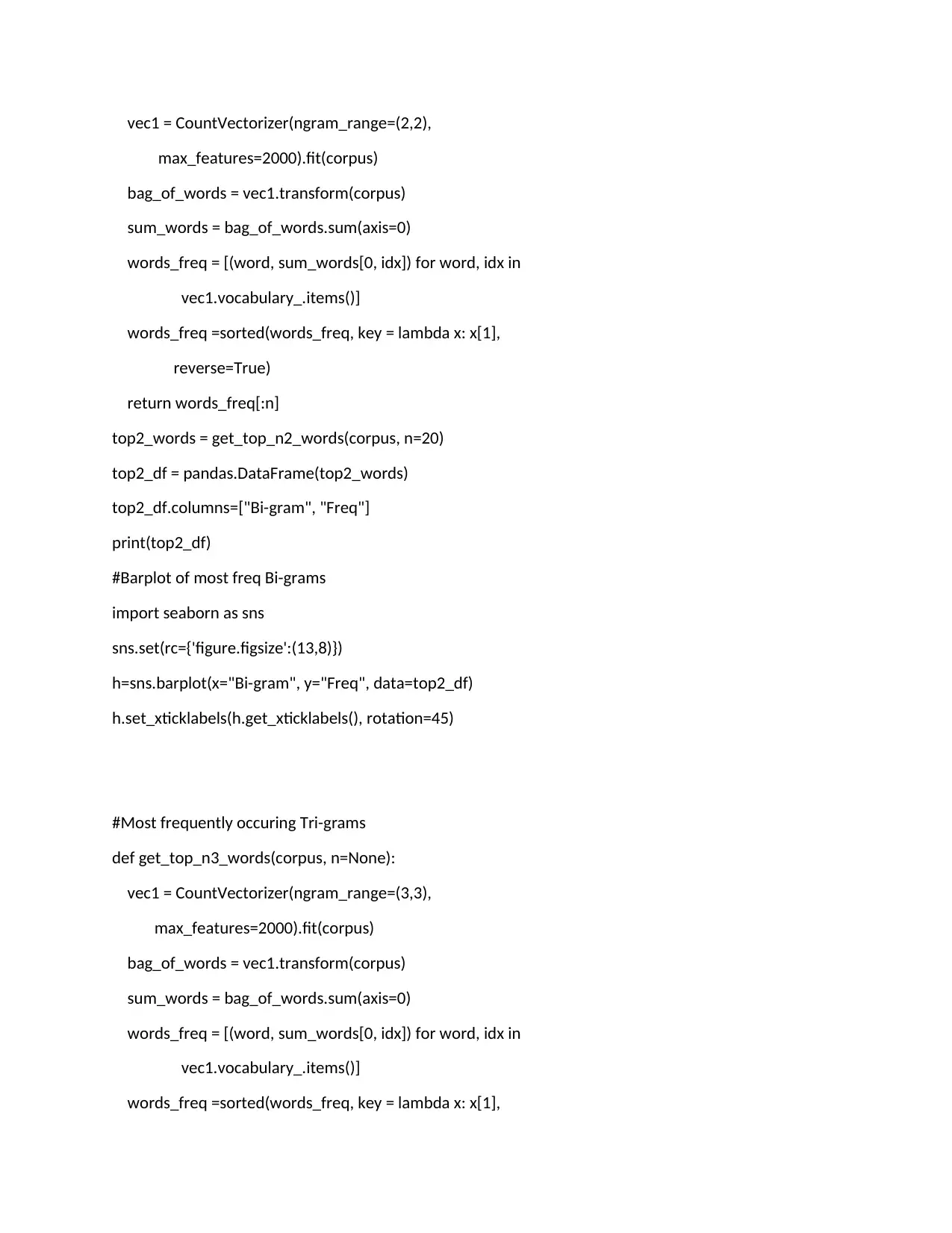

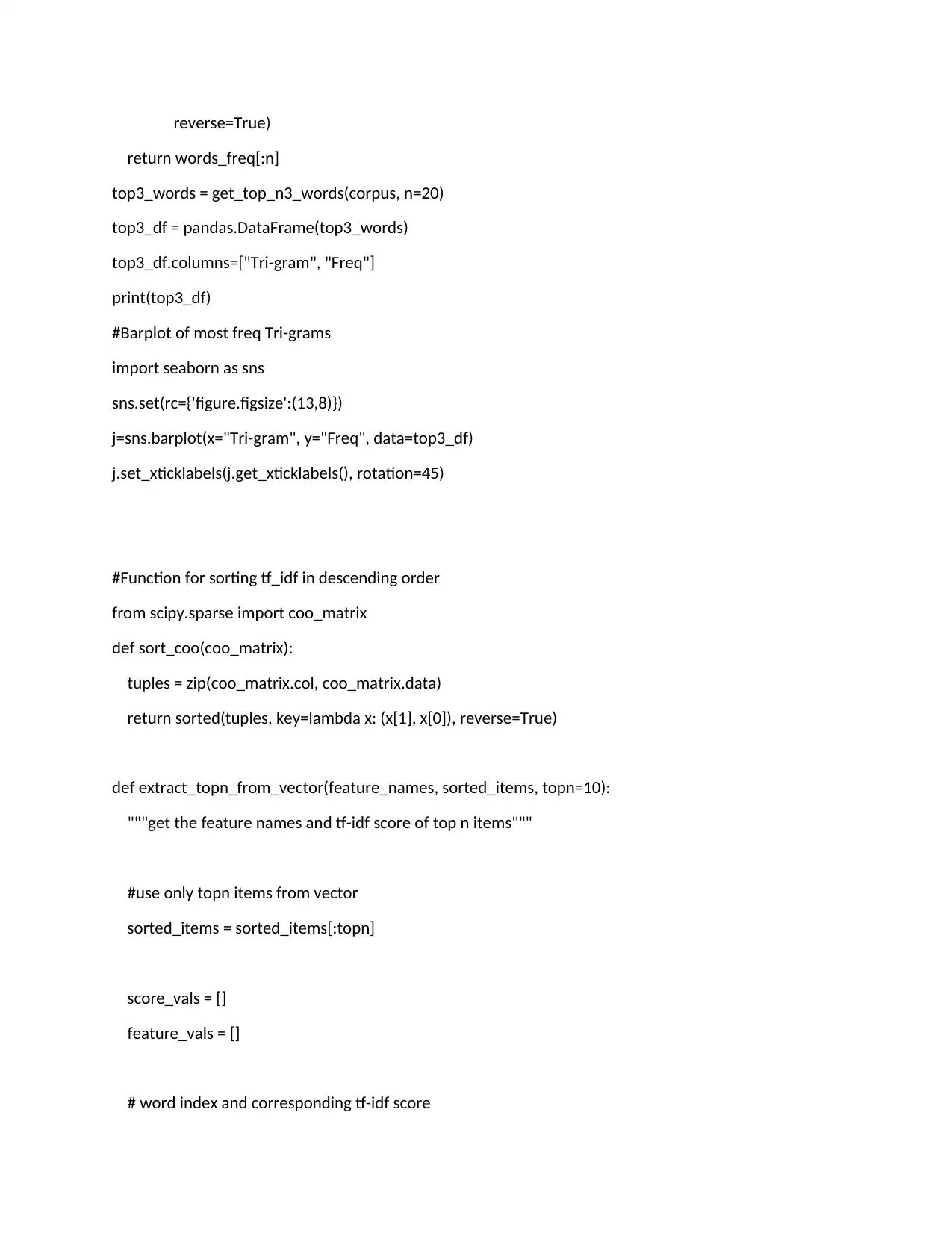

This assignment, completed for the BUS5CA Customer Analytics and Social Media course, uses SAS Text Miner to analyze social media data and understand customer preferences. The first task extracts keywords from article titles in each data channel and identifies the 10 most frequent topics per channel, with the findings explained using the SAS Result window. The second task investigates the relationship between topics and the number of shares, identifying the topics commonly associated with high share counts and those associated with low share counts. This analysis draws on the entire dataset, with the high-share and low-share groups defined as the top and bottom 10% of the dataset. The 'Title' column is assigned the 'Text' role for topic modeling, and the results are supported by explanations.

The solution also includes Python code, using libraries such as pandas, nltk, and sklearn, that computes descriptive statistics and word counts and produces word clouds and other visualizations. The code identifies common and uncommon words and applies text preprocessing steps: removing punctuation, converting text to lowercase, removing tags, stemming, and lemmatization. It then analyzes the frequency of single words, bi-grams, and tri-grams to surface the most prominent themes in the dataset. The overall aim is to uncover the impact of online advertising and customer communication, and to show marketing teams how to maximize customer engagement.
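The title preprocessing, n-gram counting, and high/low share split described in the summary can be sketched roughly as follows. This is a minimal illustration, not the assignment's actual code: it uses only the Python standard library in place of pandas, nltk, and sklearn, omits stemming and lemmatization, and assumes each record is a dict with hypothetical `title` and `shares` keys. The stop-word list and the sample rows are invented for the example.

```python
import re
import string
from collections import Counter

# Hypothetical, deliberately tiny stop-word list; a real analysis would use a fuller one.
STOP_WORDS = {"a", "an", "the", "to", "of", "in", "for", "and", "is", "on", "with"}

# Translation table that turns every punctuation character into a space.
_PUNCT_TABLE = str.maketrans(string.punctuation, " " * len(string.punctuation))


def preprocess(title):
    """Lowercase a title, strip HTML-like tags and punctuation, drop stop words."""
    text = re.sub(r"<[^>]+>", " ", title.lower())
    text = text.translate(_PUNCT_TABLE)
    return [tok for tok in text.split() if tok not in STOP_WORDS]


def ngrams(tokens, n):
    """Return the contiguous n-grams of a token list as space-joined strings."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def top_ngrams(titles, n=1, k=10):
    """Count n-grams over all titles and return the k most frequent."""
    counts = Counter()
    for title in titles:
        counts.update(ngrams(preprocess(title), n))
    return counts.most_common(k)


def split_by_shares(rows, fraction=0.10):
    """Return (high, low): the top and bottom `fraction` of rows by share count."""
    ranked = sorted(rows, key=lambda r: r["shares"], reverse=True)
    cut = max(1, int(len(ranked) * fraction))
    return ranked[:cut], ranked[-cut:]


if __name__ == "__main__":
    # Invented sample rows, for illustration only.
    rows = [
        {"title": "Apple launches new phone", "shares": 5400},
        {"title": "Apple launches tablet for schools", "shares": 320},
        {"title": "Ten tips for better sleep", "shares": 12000},
        {"title": "Markets close lower on Friday", "shares": 95},
    ]
    high, low = split_by_shares(rows, fraction=0.25)
    print("Top words (high shares):", top_ngrams([r["title"] for r in high], n=1))
    print("Top bi-grams (all titles):", top_ngrams([r["title"] for r in rows], n=2, k=3))
```

Comparing the output of `top_ngrams` for the high-share and low-share groups gives a rough view of which terms appear in both groups and which are specific to one. Word and n-gram frequency is a simpler proxy than the topic modeling performed in SAS Text Miner.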
