Deakin University SIT719 Assessment 2: Security and Privacy Report

VerifiedAdded on 2023/06/04

|14

|3749

|188

Report

AI Summary

This report examines the security and privacy issues faced by Dumnonia Corporation, an Australian insurance company, in handling sensitive customer data. It focuses on the implementation of k-anonymity as a method to protect data privacy, addressing cyber-security threats like ransomware and unauthorized access. The report outlines organizational drivers for deploying k-anonymity, analyzes technology solutions, and provides an implementation guide. It explores the advantages and limitations of k-anonymity, including re-identification risks and data loss, and discusses various techniques like generalization and suppression. The report also evaluates the costs associated with k-anonymity and its application in data sharing between organizations and governments, providing a comprehensive analysis of data security and privacy in the context of big data analytics. The report highlights the importance of balancing data utility and privacy, offering insights into mitigating security and privacy risks within the organization.

Running head: SECURITY AND PRIVACY ISSUES IN ANALYTICS

Security and Privacy Issues in Analytics

(Dumnonia Corporation)

Name of the student:

Name of the university:

Author Note

Security and Privacy Issues in Analytics

(Dumnonia Corporation)

Name of the student:

Name of the university:

Author Note

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

1SECURITY AND PRIVACY ISSUES IN ANALYTICS

Executive summary

Dumnonia Corporation is a very popular insurance company situated in Australia. The company

sells different insurances. It includes medical, death and travel insurances. It has held various

medical data for customers. This is highly confidential, and the company has required to share-

person to person records so that they can find out who gets subjected to data that are not found out. It

has also released records adhering to k-anonymity. It shows that the document issued comprises if k-

1 additional records for releasing values. These values are no different over sectors that are being

found to appearing external data ever. It is also found out that methods of k-anonymity have been

dealing with problems of privacy that are acquired by Dumnonia. K-anonymity method has ensured

preservations of data and processes that are to be considered by Dumnonia. Further, the report is

useful to find out operational and related issues and expenses for implementing k-anonymity.

Moreover, the report also highlights whether the costs are once-off or ongoing. At last, the report

also examines the areas of applications of anonymity process that is helpful to share data sets taking

place between different organisational entities and governments with the current organisation at

Australia.

Executive summary

Dumnonia Corporation is a very popular insurance company situated in Australia. The company

sells different insurances. It includes medical, death and travel insurances. It has held various

medical data for customers. This is highly confidential, and the company has required to share-

person to person records so that they can find out who gets subjected to data that are not found out. It

has also released records adhering to k-anonymity. It shows that the document issued comprises if k-

1 additional records for releasing values. These values are no different over sectors that are being

found to appearing external data ever. It is also found out that methods of k-anonymity have been

dealing with problems of privacy that are acquired by Dumnonia. K-anonymity method has ensured

preservations of data and processes that are to be considered by Dumnonia. Further, the report is

useful to find out operational and related issues and expenses for implementing k-anonymity.

Moreover, the report also highlights whether the costs are once-off or ongoing. At last, the report

also examines the areas of applications of anonymity process that is helpful to share data sets taking

place between different organisational entities and governments with the current organisation at

Australia.

2SECURITY AND PRIVACY ISSUES IN ANALYTICS

Table of Contents

1. Introduction:......................................................................................................................................3

2. Discussion on organizational drivers for Dumnonia:........................................................................3

3. Understanding organisational drivers at Dumnonia related to the deployment of k-anonymity:......4

4. Analysis of Technology Solution:.....................................................................................................6

5. Technologies utilised to deploy k-anonymity as a model:................................................................7

6. K-anonymity Implementation Guide:................................................................................................8

7. Conclusion:......................................................................................................................................10

8. References:......................................................................................................................................11

Table of Contents

1. Introduction:......................................................................................................................................3

2. Discussion on organizational drivers for Dumnonia:........................................................................3

3. Understanding organisational drivers at Dumnonia related to the deployment of k-anonymity:......4

4. Analysis of Technology Solution:.....................................................................................................6

5. Technologies utilised to deploy k-anonymity as a model:................................................................7

6. K-anonymity Implementation Guide:................................................................................................8

7. Conclusion:......................................................................................................................................10

8. References:......................................................................................................................................11

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

3SECURITY AND PRIVACY ISSUES IN ANALYTICS

1. Introduction:

Different issues regarding privacy and security are found to be originating from controlling,

storing and examining data that are gathered from probable and available sources. In this study, a

demonstration is made on organisational drivers that related to implementing k-anonymity of the

organisation named Dumnonia. Here technologies are outlined, and different methods to achieve k-

anonymity for protecting privacies are also investigated.

2. Discussion on organizational drivers for Dumnonia:

Different cyber-security threats are been faced through big-data systems of the company.

Specifically, Ransomware attacks has left deployment of big data that is subjected towards different

Ransom demands. Further, there are issues regarding unauthorized users who have been gaining

access for big data that is gathered through organization and then selling those precious information.

Vulnerability of the system gives rise to fraud information generation (Prasser et al. 2014).

Moreover, attackers are deliberately undermining quality of big data analysis. It is done by

fabricating information and placing those to various systems of big data systems. Further corrupting

of information in medical department of the Australian company created reports. This was

comprised of different mistaken information that has been trending. Moreover, a distinct lack of

perimeters of cybersecurity is also found for big data. These have ensured that all the points towards

entry and exit for big data systems are been secured. Failure to perimeter-based security is comprised

of networks of big data. This has also been admitted that challenges in cybersecurity and different

other individuals have been aware of those concerns. Besides, there are problems regarding how to

deploy encryptions within Dumnonia’s systems of big data (Dubovitskaya et al. 2015). Apart from

this, encodings from big systems have also been involved in calculating and processing a high

1. Introduction:

Different issues regarding privacy and security are found to be originating from controlling,

storing and examining data that are gathered from probable and available sources. In this study, a

demonstration is made on organisational drivers that related to implementing k-anonymity of the

organisation named Dumnonia. Here technologies are outlined, and different methods to achieve k-

anonymity for protecting privacies are also investigated.

2. Discussion on organizational drivers for Dumnonia:

Different cyber-security threats are been faced through big-data systems of the company.

Specifically, Ransomware attacks has left deployment of big data that is subjected towards different

Ransom demands. Further, there are issues regarding unauthorized users who have been gaining

access for big data that is gathered through organization and then selling those precious information.

Vulnerability of the system gives rise to fraud information generation (Prasser et al. 2014).

Moreover, attackers are deliberately undermining quality of big data analysis. It is done by

fabricating information and placing those to various systems of big data systems. Further corrupting

of information in medical department of the Australian company created reports. This was

comprised of different mistaken information that has been trending. Moreover, a distinct lack of

perimeters of cybersecurity is also found for big data. These have ensured that all the points towards

entry and exit for big data systems are been secured. Failure to perimeter-based security is comprised

of networks of big data. This has also been admitted that challenges in cybersecurity and different

other individuals have been aware of those concerns. Besides, there are problems regarding how to

deploy encryptions within Dumnonia’s systems of big data (Dubovitskaya et al. 2015). Apart from

this, encodings from big systems have also been involved in calculating and processing a high

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

4SECURITY AND PRIVACY ISSUES IN ANALYTICS

quantity of data. Further, this has been making the system slower. This is because the information

has needed to go encryption and decryption.

3. Understanding organisational drivers at Dumnonia related to the deployment

of k-anonymity:

At Dumnonia, data holders are found to hold a collection of different structures of fields and

data that are person specific. Here, the data holders have been required to share various versions of

data with researches. There has been a rise in a query of how holders are released with a version of

private information comprising of scientific guarantees. Here people are subjected to information

that can never be re-determined. This occurs as the information remains practically helpful. Data

holders are required to share various versions of data under researches. There is a rise in queries of

how holders are released as versions of private data comprise of scientific guarantees (Meden et al.

2018). Here people are being subjected to information that has released of a version of private data

composed of scientific safeguards. Here, people are submitted to information that is impossible to

get re-determined. Here information has been staying practically helpful. This is formal protection

also referred as k-anonymity. It also comprises a set of various accompanying policies that are

needed to be deployed. Here, the release delivers protection of k-anonymity when the data for all the

people remain within the version and is never distinguished from at least k-1 individuals. The data

has also been appearing to be released in that scenario (Yeh et al. 2016).

Moreover, there is a rise in demand to re-identify those attacks that have been seeing releases

adhering k-anonymity. Here, those policies have been respected. The protection model of k-

anonymity has also been important. The reason is that it has formed a basis where systems if real

worlds are seen to be Datafly, μ-Argus and k-similar. Moreover, problems of the above k-anonymity

quantity of data. Further, this has been making the system slower. This is because the information

has needed to go encryption and decryption.

3. Understanding organisational drivers at Dumnonia related to the deployment

of k-anonymity:

At Dumnonia, data holders are found to hold a collection of different structures of fields and

data that are person specific. Here, the data holders have been required to share various versions of

data with researches. There has been a rise in a query of how holders are released with a version of

private information comprising of scientific guarantees. Here people are subjected to information

that can never be re-determined. This occurs as the information remains practically helpful. Data

holders are required to share various versions of data under researches. There is a rise in queries of

how holders are released as versions of private data comprise of scientific guarantees (Meden et al.

2018). Here people are being subjected to information that has released of a version of private data

composed of scientific safeguards. Here, people are submitted to information that is impossible to

get re-determined. Here information has been staying practically helpful. This is formal protection

also referred as k-anonymity. It also comprises a set of various accompanying policies that are

needed to be deployed. Here, the release delivers protection of k-anonymity when the data for all the

people remain within the version and is never distinguished from at least k-1 individuals. The data

has also been appearing to be released in that scenario (Yeh et al. 2016).

Moreover, there is a rise in demand to re-identify those attacks that have been seeing releases

adhering k-anonymity. Here, those policies have been respected. The protection model of k-

anonymity has also been important. The reason is that it has formed a basis where systems if real

worlds are seen to be Datafly, μ-Argus and k-similar. Moreover, problems of the above k-anonymity

5SECURITY AND PRIVACY ISSUES IN ANALYTICS

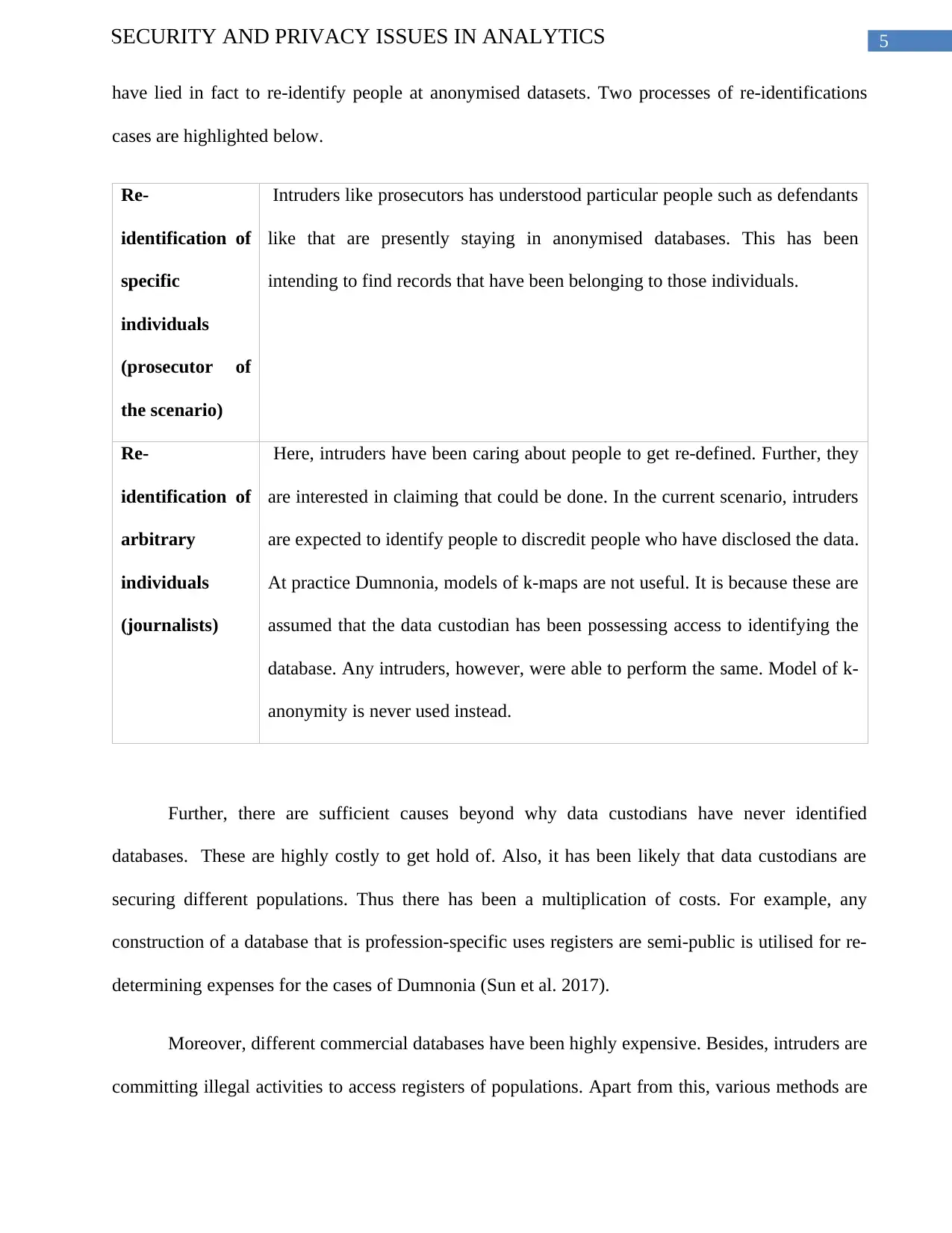

have lied in fact to re-identify people at anonymised datasets. Two processes of re-identifications

cases are highlighted below.

Re-

identification of

specific

individuals

(prosecutor of

the scenario)

Intruders like prosecutors has understood particular people such as defendants

like that are presently staying in anonymised databases. This has been

intending to find records that have been belonging to those individuals.

Re-

identification of

arbitrary

individuals

(journalists)

Here, intruders have been caring about people to get re-defined. Further, they

are interested in claiming that could be done. In the current scenario, intruders

are expected to identify people to discredit people who have disclosed the data.

At practice Dumnonia, models of k-maps are not useful. It is because these are

assumed that the data custodian has been possessing access to identifying the

database. Any intruders, however, were able to perform the same. Model of k-

anonymity is never used instead.

Further, there are sufficient causes beyond why data custodians have never identified

databases. These are highly costly to get hold of. Also, it has been likely that data custodians are

securing different populations. Thus there has been a multiplication of costs. For example, any

construction of a database that is profession-specific uses registers are semi-public is utilised for re-

determining expenses for the cases of Dumnonia (Sun et al. 2017).

Moreover, different commercial databases have been highly expensive. Besides, intruders are

committing illegal activities to access registers of populations. Apart from this, various methods are

have lied in fact to re-identify people at anonymised datasets. Two processes of re-identifications

cases are highlighted below.

Re-

identification of

specific

individuals

(prosecutor of

the scenario)

Intruders like prosecutors has understood particular people such as defendants

like that are presently staying in anonymised databases. This has been

intending to find records that have been belonging to those individuals.

Re-

identification of

arbitrary

individuals

(journalists)

Here, intruders have been caring about people to get re-defined. Further, they

are interested in claiming that could be done. In the current scenario, intruders

are expected to identify people to discredit people who have disclosed the data.

At practice Dumnonia, models of k-maps are not useful. It is because these are

assumed that the data custodian has been possessing access to identifying the

database. Any intruders, however, were able to perform the same. Model of k-

anonymity is never used instead.

Further, there are sufficient causes beyond why data custodians have never identified

databases. These are highly costly to get hold of. Also, it has been likely that data custodians are

securing different populations. Thus there has been a multiplication of costs. For example, any

construction of a database that is profession-specific uses registers are semi-public is utilised for re-

determining expenses for the cases of Dumnonia (Sun et al. 2017).

Moreover, different commercial databases have been highly expensive. Besides, intruders are

committing illegal activities to access registers of populations. Apart from this, various methods are

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

6SECURITY AND PRIVACY ISSUES IN ANALYTICS

also designed under statistical disclosure to estimate the size of equivalence classes as active samples

(Wong and Kim 2015). Whenever the estimates are proper, k-mapping are used approximately. It

ensures that the actual risks are close to the risk of threshold and consequently there are fewer scopes

of loss of information.

4. Analysis of Technology Solution:

Pure samples, at Dumnonia, are drawn randomly for each dataset. These are coming from

various sampling fractions. It has been occurring at a scale of 0.1 to 0.9 with an increment of 0.1.

Determination of variables gets removed, and all the samples are k-anonymized. A present global

algorithm of optimization is deployed to get the samples k-anonymized. Thus the algorithm is used

for cost-functions to guide a process of various k-anonymization (Liu, Xie and Wang 2017). Here,

the goal is to reduce the entire cost. It is a commonly used function to gain the baseline anonymity

that has been the discernibility metric. For all anonymised sets of data, the actual risks are measures,

and data loss is being measured as per the parameter of discernibility.

Further, standard rules for all fractions to perform sampling is performed over 1000 samples

done in Dumnonia. Moreover, the discernibility metric gets affected because of the sample size, and

it is complicated to compare different fractions of sampling. Further, these are normalised by the

value of the baseline. Because of the extent of suppressing has been an important indicator here, for

data quality, the various percentages are comprising of suppressed records that are performed over

every sampling fractions on multiple approaches (Kim and Li 2016). Further, clear two-

redetermination cases have shown that k-anonymity is developed to protect against prosecutor and

journalists. Baseline k-anonymity model represents practices working well for protecting against

various scenarios of re-identifying prosecutors (Zhang, Tong and Zhong 2016). Apart from this,

practical outcomes have demonstrated that the model of baseline k-anonymity is more conservative

also designed under statistical disclosure to estimate the size of equivalence classes as active samples

(Wong and Kim 2015). Whenever the estimates are proper, k-mapping are used approximately. It

ensures that the actual risks are close to the risk of threshold and consequently there are fewer scopes

of loss of information.

4. Analysis of Technology Solution:

Pure samples, at Dumnonia, are drawn randomly for each dataset. These are coming from

various sampling fractions. It has been occurring at a scale of 0.1 to 0.9 with an increment of 0.1.

Determination of variables gets removed, and all the samples are k-anonymized. A present global

algorithm of optimization is deployed to get the samples k-anonymized. Thus the algorithm is used

for cost-functions to guide a process of various k-anonymization (Liu, Xie and Wang 2017). Here,

the goal is to reduce the entire cost. It is a commonly used function to gain the baseline anonymity

that has been the discernibility metric. For all anonymised sets of data, the actual risks are measures,

and data loss is being measured as per the parameter of discernibility.

Further, standard rules for all fractions to perform sampling is performed over 1000 samples

done in Dumnonia. Moreover, the discernibility metric gets affected because of the sample size, and

it is complicated to compare different fractions of sampling. Further, these are normalised by the

value of the baseline. Because of the extent of suppressing has been an important indicator here, for

data quality, the various percentages are comprising of suppressed records that are performed over

every sampling fractions on multiple approaches (Kim and Li 2016). Further, clear two-

redetermination cases have shown that k-anonymity is developed to protect against prosecutor and

journalists. Baseline k-anonymity model represents practices working well for protecting against

various scenarios of re-identifying prosecutors (Zhang, Tong and Zhong 2016). Apart from this,

practical outcomes have demonstrated that the model of baseline k-anonymity is more conservative

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

7SECURITY AND PRIVACY ISSUES IN ANALYTICS

as per re-identification of risks occurring under the case of re-identification. Various issues of

conservatism have been facing a high loss of data. It exacerbates fewer factors of sampling. The

reason of those outcomes deals with proper disclosure of control criterion about k-maps and the

scenario of a journalist.

However, it can never be regarded ask-anonymity. Next, three processes that are measured to

extend k-anonymity regarding proper kinds if k-maps are present. Further, it has assured the actual

risks to close threshold risks. This process of hypothesis testing has been from a Poisson distribution

of truncated-at-zero. It is also ensured that the real threat has been nearer to chances of a threshold. It

was comprised of lesser sampling processes and has been an effective approximation of k-map. Then

there is a considerable development of the approach of baseline-anonymity (Wu et al. 2014). The

reason is that it has been delivered with controlling of efficient risks consistent with intentions of

data custodians. Further, the path to test hypothesis has resulted in a minor loss of data as it is

compared with the attitude of baseline k-anonymity at the Australian organisation. This has been a

vital benefit that it has shown a huge percentage of records suppressed because of usage of methods

of baseline.

5. Technologies utilised to deploy k-anonymity as a model:

It must be reminded that anonymity is a formal model of protection. This has aimed all

frames of records that are still unclear. Here arrangements of data have been getting k-anonymized

for documents that have provided provisions of square measures and characteristics for event-k.

Various elective rules have been found to be matching those traits. Here the components are seen to

be accompanying different kinds of generalization (Wang et al. 2016). For example, the terms

“male” and “female” are generalised to the new word “any”. At varying levels of generalisation,

various procedures can also be connected. AG or attributes are performed at segment levels with

as per re-identification of risks occurring under the case of re-identification. Various issues of

conservatism have been facing a high loss of data. It exacerbates fewer factors of sampling. The

reason of those outcomes deals with proper disclosure of control criterion about k-maps and the

scenario of a journalist.

However, it can never be regarded ask-anonymity. Next, three processes that are measured to

extend k-anonymity regarding proper kinds if k-maps are present. Further, it has assured the actual

risks to close threshold risks. This process of hypothesis testing has been from a Poisson distribution

of truncated-at-zero. It is also ensured that the real threat has been nearer to chances of a threshold. It

was comprised of lesser sampling processes and has been an effective approximation of k-map. Then

there is a considerable development of the approach of baseline-anonymity (Wu et al. 2014). The

reason is that it has been delivered with controlling of efficient risks consistent with intentions of

data custodians. Further, the path to test hypothesis has resulted in a minor loss of data as it is

compared with the attitude of baseline k-anonymity at the Australian organisation. This has been a

vital benefit that it has shown a huge percentage of records suppressed because of usage of methods

of baseline.

5. Technologies utilised to deploy k-anonymity as a model:

It must be reminded that anonymity is a formal model of protection. This has aimed all

frames of records that are still unclear. Here arrangements of data have been getting k-anonymized

for documents that have provided provisions of square measures and characteristics for event-k.

Various elective rules have been found to be matching those traits. Here the components are seen to

be accompanying different kinds of generalization (Wang et al. 2016). For example, the terms

“male” and “female” are generalised to the new word “any”. At varying levels of generalisation,

various procedures can also be connected. AG or attributes are performed at segment levels with

8SECURITY AND PRIVACY ISSUES IN ANALYTICS

qualities in areas that are generalised to the speculations of steps. There are cells where this

generalisation are made on solitary cells. This has been lasting long on different summed up tables

containing particular sections and values of various levels of generalisations.

Suppressions have consisted of averting delicate data that are done through evacuating that.

This suppression is also inter-connected at different levels of any single cell. It is also done on

overall tuple and entire segment. This is done allowing diminishing of measures to speculate the

forces to undertake anonymity. At the TS or tuple, suppression is performed at the column level. It

has evacuated at the entire level (Tsai et al. 2016). Next, there are attributes or AS. Here suppression

is done at the level of a segment. This operation has shrouded on estimations of that sector. Next,

there is a CS or cell. Further, suppression is performed at one level of a cell with long-lasting data

that is k anonymised. This has wiped out specific cells to a particular tuple of quality.

6. K-anonymity Implementation Guide:

K-anonymity is the approach used through which Dumnonia can distinguish within any

group of least k individuals. Previously most of the suggested strategies to implementing k-

anonymity has been focusing on developing the efficiency of algorithms and putting less stress in

assuring utility of anonymized data from the perspective of researchers.

The process of checking individual transformation for anonymity is the main bottleneck. This

has been for different algorithms to get anonymised. Here, the main design goals are ARX system to

speed up processes for go into memory layout and data representations. Further, it has deployed

different optimisations enabled through the decisions of designs. Data representations, on the other

hand, is the framework that holds data in the primary memory. Data has been getting optimised and

compressed that is most efficient for consuming minds. It has feasible regarding different datasets

qualities in areas that are generalised to the speculations of steps. There are cells where this

generalisation are made on solitary cells. This has been lasting long on different summed up tables

containing particular sections and values of various levels of generalisations.

Suppressions have consisted of averting delicate data that are done through evacuating that.

This suppression is also inter-connected at different levels of any single cell. It is also done on

overall tuple and entire segment. This is done allowing diminishing of measures to speculate the

forces to undertake anonymity. At the TS or tuple, suppression is performed at the column level. It

has evacuated at the entire level (Tsai et al. 2016). Next, there are attributes or AS. Here suppression

is done at the level of a segment. This operation has shrouded on estimations of that sector. Next,

there is a CS or cell. Further, suppression is performed at one level of a cell with long-lasting data

that is k anonymised. This has wiped out specific cells to a particular tuple of quality.

6. K-anonymity Implementation Guide:

K-anonymity is the approach used through which Dumnonia can distinguish within any

group of least k individuals. Previously most of the suggested strategies to implementing k-

anonymity has been focusing on developing the efficiency of algorithms and putting less stress in

assuring utility of anonymized data from the perspective of researchers.

The process of checking individual transformation for anonymity is the main bottleneck. This

has been for different algorithms to get anonymised. Here, the main design goals are ARX system to

speed up processes for go into memory layout and data representations. Further, it has deployed

different optimisations enabled through the decisions of designs. Data representations, on the other

hand, is the framework that holds data in the primary memory. Data has been getting optimised and

compressed that is most efficient for consuming minds. It has feasible regarding different datasets

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

9SECURITY AND PRIVACY ISSUES IN ANALYTICS

having different data entries on commodities of hardware. Moreover, it has depended on the

available main memory and datasets characteristics (Soria-Comas et al. 2014). Furthermore, the

system has been deploying compressions on all data items and displaying hierarchies of

generalisations. The initial implementations about checking anonymity have been transformed to

input datasets. This is done by iterating rows in buffers and implementing assignments of all the

cells. Further, all the rows have been getting passed for grouping operators of equivalence classes.

Apart from this, it is deployed as a hash table. Here all the rows have been holding related counters

are incremented as the same key. Rows having the same cell values are also included.

Ultimately, this system has been iterating all entries within hash tables and checking whether

that can fulfil the provided set of criteria of privacies. This system has been permitting parameters of

suppression to define upper bounds of different suppressed rows. It is done by considering various

anonymised datasets. This comprises data losses as privacy criteria that have not been enforced for

multiple equivalent classes (Otgonbayar et al. 2018). Different non-anonymous teams are found to

remove datasets as the total amount of suppressed tuples are found to be lesser than that threshold.

Further, systems have also supplying different extensions to seek optimal solutions for criteria of

privacies that has been monotonic as the suppressions are deployed. Rolling of optimisation is

applied to an algorithm that has been moving from transformations. Moreover, these classes of

equivalences are generated through merging courses for monotonicity of generalisation hierarchies

(Niu et al. 2014). These analysing datasets and systems were assimilating all kinds of optimisations.

It has been deriving benefits for performing rolling-ups and projections. In this particular case, this

has required to transform column for different representative rows. It has resulted in various cells

that are needed to get modified. These challenges have been there for different combinations that are

valid.

having different data entries on commodities of hardware. Moreover, it has depended on the

available main memory and datasets characteristics (Soria-Comas et al. 2014). Furthermore, the

system has been deploying compressions on all data items and displaying hierarchies of

generalisations. The initial implementations about checking anonymity have been transformed to

input datasets. This is done by iterating rows in buffers and implementing assignments of all the

cells. Further, all the rows have been getting passed for grouping operators of equivalence classes.

Apart from this, it is deployed as a hash table. Here all the rows have been holding related counters

are incremented as the same key. Rows having the same cell values are also included.

Ultimately, this system has been iterating all entries within hash tables and checking whether

that can fulfil the provided set of criteria of privacies. This system has been permitting parameters of

suppression to define upper bounds of different suppressed rows. It is done by considering various

anonymised datasets. This comprises data losses as privacy criteria that have not been enforced for

multiple equivalent classes (Otgonbayar et al. 2018). Different non-anonymous teams are found to

remove datasets as the total amount of suppressed tuples are found to be lesser than that threshold.

Further, systems have also supplying different extensions to seek optimal solutions for criteria of

privacies that has been monotonic as the suppressions are deployed. Rolling of optimisation is

applied to an algorithm that has been moving from transformations. Moreover, these classes of

equivalences are generated through merging courses for monotonicity of generalisation hierarchies

(Niu et al. 2014). These analysing datasets and systems were assimilating all kinds of optimisations.

It has been deriving benefits for performing rolling-ups and projections. In this particular case, this

has required to transform column for different representative rows. It has resulted in various cells

that are needed to get modified. These challenges have been there for different combinations that are

valid.

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

10SECURITY AND PRIVACY ISSUES IN ANALYTICS

7. Conclusion:

As medical data has continued to transition electronic formats, scopes have been there for

Dumnonia Corporation. These opportunities can be utilised to find out patterns and rise knowledge

that is helpful to improve cares of patients. With the advents in technology taking place in previous

decades, Dumnonia has amassed a massive quantity of health-related and electronic data and

electronic. This data is a valuable resource for decision makers, analysts and researchers. Thus, it

can be concluded that while deploying k-anonymity. Moreover, there are different limitations where

various valid combinations have there to optimise concerns. It should be assured that the data has

been within constant states. This it can be said that the transitions have been restricted to the area of

projections. It can also be noted that they have been successively at the current state permitting to

perform the rolling-up which has been transformed already. Thus the system has been needed to

deploy finite state of machines to comply with those challenges.

7. Conclusion:

As medical data has continued to transition electronic formats, scopes have been there for

Dumnonia Corporation. These opportunities can be utilised to find out patterns and rise knowledge

that is helpful to improve cares of patients. With the advents in technology taking place in previous

decades, Dumnonia has amassed a massive quantity of health-related and electronic data and

electronic. This data is a valuable resource for decision makers, analysts and researchers. Thus, it

can be concluded that while deploying k-anonymity. Moreover, there are different limitations where

various valid combinations have there to optimise concerns. It should be assured that the data has

been within constant states. This it can be said that the transitions have been restricted to the area of

projections. It can also be noted that they have been successively at the current state permitting to

perform the rolling-up which has been transformed already. Thus the system has been needed to

deploy finite state of machines to comply with those challenges.

11SECURITY AND PRIVACY ISSUES IN ANALYTICS

8. References:

Cooper, N. and Elstun, A., 2018. User-Controlled Generalization Boundaries for p-Sensitive k-

Anonymity.

Dubovitskaya, A., Urovi, V., Vasirani, M., Aberer, K. and Schumacher, M.I., 2015, May. A cloud-

based ehealth architecture for privacy preserving data integration. In IFIP International Information

Security Conference (pp. 585-598). Springer, Cham.

Heitmann, B., Hermsen, F. and Decker, S., 2017. k-RDF-Neighbourhood Anonymity: Combining

Structural and Attribute-based Anonymisation for Linked Data. In PrivOn@ ISWC.

Kang, A., Kim, K.I. and Lee, K.M., 2018. Anonymity Management for Privacy-Sensitive Data

Publication.

Kim, J.S. and Li, K.J., 2016. Location K-anonymity in indoor spaces. Geoinformatica, 20(3),

pp.415-451.

Liu, X., Xie, Q. and Wang, L., 2017. Personalized extended (α, k)‐anonymity model for privacy‐

preserving data publishing. Concurrency and Computation: Practice and Experience, 29(6),

p.e3886.

Meden, B., Emeršič, Ž., Štruc, V. and Peer, P., 2018. k-Same-Net: k-Anonymity with Generative

Deep Neural Networks for Face Deidentification. Entropy, 20(1), p.60.

Niu, B., Li, Q., Zhu, X., Cao, G. and Li, H., 2014, April. Achieving k-anonymity in privacy-aware

location-based services. In INFOCOM, 2014 Proceedings IEEE (pp. 754-762). IEEE.

8. References:

Cooper, N. and Elstun, A., 2018. User-Controlled Generalization Boundaries for p-Sensitive k-

Anonymity.

Dubovitskaya, A., Urovi, V., Vasirani, M., Aberer, K. and Schumacher, M.I., 2015, May. A cloud-

based ehealth architecture for privacy preserving data integration. In IFIP International Information

Security Conference (pp. 585-598). Springer, Cham.

Heitmann, B., Hermsen, F. and Decker, S., 2017. k-RDF-Neighbourhood Anonymity: Combining

Structural and Attribute-based Anonymisation for Linked Data. In PrivOn@ ISWC.

Kang, A., Kim, K.I. and Lee, K.M., 2018. Anonymity Management for Privacy-Sensitive Data

Publication.

Kim, J.S. and Li, K.J., 2016. Location K-anonymity in indoor spaces. Geoinformatica, 20(3),

pp.415-451.

Liu, X., Xie, Q. and Wang, L., 2017. Personalized extended (α, k)‐anonymity model for privacy‐

preserving data publishing. Concurrency and Computation: Practice and Experience, 29(6),

p.e3886.

Meden, B., Emeršič, Ž., Štruc, V. and Peer, P., 2018. k-Same-Net: k-Anonymity with Generative

Deep Neural Networks for Face Deidentification. Entropy, 20(1), p.60.

Niu, B., Li, Q., Zhu, X., Cao, G. and Li, H., 2014, April. Achieving k-anonymity in privacy-aware

location-based services. In INFOCOM, 2014 Proceedings IEEE (pp. 754-762). IEEE.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

1 out of 14

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2026 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.