Detailed Analysis of Software Testing and Documentation Process


Running head: SOFTWARE TESTING

Software Test Documentation

Name of the Student

Name of the University

Author’s Note


Table of Contents

1. INTRODUCTION

1.1. System Overview

1.2. Test Approach

2. TEST PLAN

2.1. Features to be tested

2.2. Features not to be tested

2.3. Testing tools and environment

2.4. Traceability Matrix

3. TEST CASES

3.1. Case 1

3.1.1. Purpose

3.1.2. Inputs

3.1.3. Expected outputs & Pass/Fail Criteria

3.1.4. Test Procedure

4. Gantt Chart

5. Budget

Bibliography

APPENDIX A. TEST LOGS

A.1. Log for test 1

A.1.1. Test Results

A.1.2. Incident Report


1. INTRODUCTION

1.1. System Overview

eStage is an application for managing an online backstage. It is designed around the roles of the stakeholders involved in backstage management: to use its features, users log in by selecting their role and passing an authentication check. After a successful login, users are automatically redirected to a welcome page that offers links appropriate to their role, along with options such as Log off, Home, Discipline, Sections and Competitors, through which they can reach the details they need. Competitors register in the system by entering their details, after which each role is given its own set of functions. Stage managers can move competitors and change or withdraw a competitor's place in the sequence. Results and judgements are recorded by logging in and entering scores and results; the system stores this information and distributes the results to the competitors automatically.

1.2. Test Approach

Several testing approaches were evaluated in order to select the most suitable one for the online backstage management system. The project's limitations were identified by analysing its objectives and scope. The test documentation was prepared by applying the following types of testing to the developed system.


Unit Testing – Unit testing verifies the code and logic used to build each program unit, and the result is compared against the structure expected in the framework. To reduce bugs and errors in the final product, it is to be performed in the final stages of software development.
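To make the unit-level idea concrete, the sketch below tests one small unit in isolation using Python's standard `unittest` module. The function `average_score`, its 0-100 score range and the sample scores are hypothetical, loosely modelled on the score recording described in the system overview; they are not taken from eStage itself.

```python
import unittest


def average_score(scores):
    """Hypothetical unit: mean of the judges' scores for one competitor.

    Raises ValueError for an empty list or for a score outside the
    assumed 0-100 range.
    """
    if not scores:
        raise ValueError("at least one score is required")
    for score in scores:
        if not 0 <= score <= 100:
            raise ValueError(f"score out of range: {score}")
    return sum(scores) / len(scores)


class AverageScoreTest(unittest.TestCase):
    def test_mean_of_valid_scores(self):
        self.assertAlmostEqual(average_score([80, 90, 85]), 85.0)

    def test_empty_score_list_is_rejected(self):
        with self.assertRaises(ValueError):
            average_score([])

    def test_out_of_range_score_is_rejected(self):
        with self.assertRaises(ValueError):
            average_score([80, 105])


if __name__ == "__main__":
    # exit=False reports the results without terminating the interpreter.
    unittest.main(argv=["average_score_test"], exit=False)
```

Each test exercises one behaviour of one unit, so a failure points directly at the faulty logic. Saved under a `test_*.py` name, the same tests are also picked up by `python -m unittest`.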

Integration Testing – Integration testing checks the functionality of the developed software once it has been built as separate modules, by testing each module together with the others to confirm that they are compatible. The system should also be evaluated on various platforms so that it runs correctly on different software and hardware configurations.
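An integration test, by contrast, exercises modules together through their shared interface. The sketch below assumes two hypothetical modules, a competitor registry (registration) and a running order managed by the stage manager, and checks that they cooperate: only registered competitors can be scheduled, and moves and withdrawals keep the sequence consistent. Class names, method names and competitor data are illustrative only.

```python
import unittest


class CompetitorRegistry:
    """Hypothetical registration module: stores competitors by id."""

    def __init__(self):
        self._competitors = {}

    def register(self, competitor_id, name):
        if competitor_id in self._competitors:
            raise ValueError(f"duplicate competitor id: {competitor_id}")
        self._competitors[competitor_id] = name

    def exists(self, competitor_id):
        return competitor_id in self._competitors


class RunningOrder:
    """Hypothetical stage-manager module: the sequence of competitors."""

    def __init__(self, registry):
        self._registry = registry
        self._order = []

    def add(self, competitor_id):
        # Integration point: only registered competitors may be scheduled.
        if not self._registry.exists(competitor_id):
            raise KeyError(f"unregistered competitor: {competitor_id}")
        self._order.append(competitor_id)

    def move(self, competitor_id, new_position):
        self._order.remove(competitor_id)
        self._order.insert(new_position, competitor_id)

    def withdraw(self, competitor_id):
        self._order.remove(competitor_id)

    def as_list(self):
        return list(self._order)


class RegistrationToRunningOrderTest(unittest.TestCase):
    def setUp(self):
        self.registry = CompetitorRegistry()
        for cid, name in [("C1", "Asha"), ("C2", "Ben"), ("C3", "Chen")]:
            self.registry.register(cid, name)
        self.order = RunningOrder(self.registry)
        for cid in ("C1", "C2", "C3"):
            self.order.add(cid)

    def test_move_and_withdraw_keep_sequence_consistent(self):
        self.order.move("C3", 0)     # [C3, C1, C2]
        self.order.withdraw("C2")    # [C3, C1]
        self.assertEqual(self.order.as_list(), ["C3", "C1"])

    def test_unregistered_competitor_cannot_be_scheduled(self):
        with self.assertRaises(KeyError):
            self.order.add("C9")


if __name__ == "__main__":
    unittest.main(argv=["integration_test"], exit=False)
```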

Business requirement testing – Test cases should be designed and their results documented in order to troubleshoot the system and improve its efficiency. The online backstage management software has to be tested to verify that the solution meets its requirements and to reduce errors in the final product, which is being developed to manage the functions and information the organisation needs. Every deliverable of the software should align with the business requirements, so each criterion the system must meet has to be evaluated. Tests should also be conducted to manage defects in the final software.

User acceptance testing – Friendly users should be encouraged to take part in testing, since they can help assess the usability of the system. The final interface should be designed so that these users have no difficulty finding its functions. The end-to-end flow of information should be analysed to spot errors, and steps should be taken to mitigate them. Because the key participants in user acceptance testing are the actual users of the system, test cases have to be developed with them in mind.


2. TEST PLAN

2.1. Features to be tested

All certificates used for developing samples, and the functionality behind each data output, have to be tested. Every artefact that needs to be tested should be included in the test plan. A high-level documentation strategy should be used, together with a traceability matrix that maps the inputs and outputs of the system. The modules for each stakeholder involved in the system are to be developed and tested individually and then tested together as integrated system modules, so that errors are identified during the development stage.

2.2. Features not to be tested

The implemented security features are not tested, because they have been excluded from the management configuration and the view controls of this system.

2.3. Testing tools and environment

A test tool has to be selected to detect faults, and it should provide the following features:

● Full or partial monitoring of program code, including:

o An instruction set simulator that permits instruction-level monitoring and provides a trace facility.


o Program animation that allows step-by-step execution and conditional breakpoints at source level as well as in machine code.

o Code coverage reports.

● Symbolic debugging or formatted dumps, allowing the program to be inspected at chosen points to identify errors.

● Benchmarks for comparing run-time performance.

● Performance analysis that highlights hotspots and the use of available resources.
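The benchmarking item above can be illustrated with Python's standard `timeit` module; the document does not prescribe a tool, so `timeit` is used here only as an example. The sketch compares two hypothetical ways of checking whether a competitor ID exists and keeps the best of several runs, which reduces the noise that other processes add to timings.

```python
import timeit

# Hypothetical data: 10,000 competitor ids.
IDS = [f"C{i}" for i in range(10_000)]
ID_SET = set(IDS)


def find_in_list(cid):
    return cid in IDS      # linear scan over the list


def find_in_set(cid):
    return cid in ID_SET   # hash-based lookup


def benchmark(func, arg, number=1_000, repeat=5):
    """Best (minimum) time in seconds over `repeat` runs of `number` calls."""
    timings = timeit.repeat(lambda: func(arg), number=number, repeat=repeat)
    return min(timings)


if __name__ == "__main__":
    target = "C9999"  # last element: worst case for the linear scan
    for func in (find_in_list, find_in_set):
        print(f"{func.__name__}: {benchmark(func, target):.6f} s per 1,000 calls")
```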

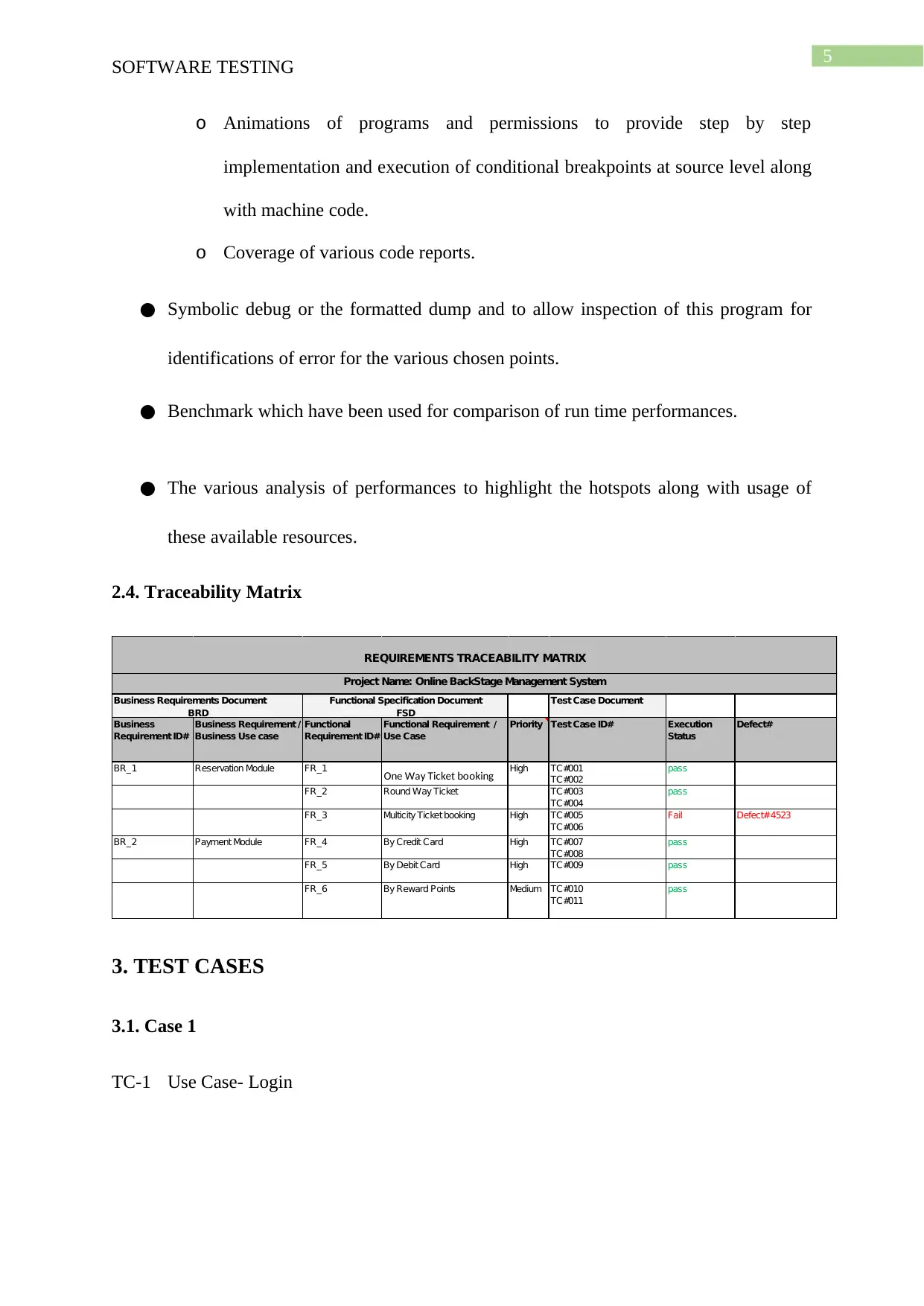

2.4. Traceability Matrix

Project Name: Online BackStage Management System

REQUIREMENTS TRACEABILITY MATRIX

| Business Requirement ID# (BRD) | Business Requirement / Business Use Case (BRD) | Functional Requirement ID# (FSD) | Functional Requirement / Use Case (FSD) | Priority | Test Case ID# (Test Case Document) | Execution Status | Defect# |
|---|---|---|---|---|---|---|---|
| BR_1 | Reservation Module | FR_1 | One Way Ticket booking | High | TC#001, TC#002 | Pass | |
| | | FR_2 | Round Way Ticket | | TC#003, TC#004 | Pass | |
| | | FR_3 | Multicity Ticket booking | High | TC#005, TC#006 | Fail | Defect#4523 |
| BR_2 | Payment Module | FR_4 | By Credit Card | High | TC#007, TC#008 | Pass | |
| | | FR_5 | By Debit Card | High | TC#009 | Pass | |
| | | FR_6 | By Reward Points | Medium | TC#010, TC#011 | Pass | |

BRD: Business Requirements Document. FSD: Functional Specification Document.
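A traceability matrix is essentially a table of links from business requirements to functional requirements to test cases, so it can be checked mechanically. The sketch below transcribes the rows of the matrix and derives a status per business requirement, the open defects and a pass/fail count. The roll-up rule (a business requirement fails if any linked functional requirement fails) is an assumption, not something the matrix itself states.

```python
from collections import Counter

# Rows transcribed from the traceability matrix:
# (BR id, FR id, functional requirement, priority, test case ids, status, defect)
MATRIX = [
    ("BR_1", "FR_1", "One Way Ticket booking",   "High",   ["TC#001", "TC#002"], "Pass", None),
    ("BR_1", "FR_2", "Round Way Ticket",         None,     ["TC#003", "TC#004"], "Pass", None),
    ("BR_1", "FR_3", "Multicity Ticket booking", "High",   ["TC#005", "TC#006"], "Fail", "Defect#4523"),
    ("BR_2", "FR_4", "By Credit Card",           "High",   ["TC#007", "TC#008"], "Pass", None),
    ("BR_2", "FR_5", "By Debit Card",            "High",   ["TC#009"],           "Pass", None),
    ("BR_2", "FR_6", "By Reward Points",         "Medium", ["TC#010", "TC#011"], "Pass", None),
]


def business_requirement_status(matrix):
    """Assumed rule: a business requirement passes only if every
    functional requirement traced to it passes."""
    status = {}
    for br, _fr, _desc, _priority, _tests, result, _defect in matrix:
        if result == "Fail" or status.get(br) == "Fail":
            status[br] = "Fail"
        else:
            status[br] = "Pass"
    return status


def open_defects(matrix):
    """Map each failing functional requirement to its logged defect."""
    return {row[1]: row[6] for row in matrix if row[6]}


def execution_summary(matrix):
    """Count functional requirements by execution status."""
    return Counter(row[5] for row in matrix)


if __name__ == "__main__":
    print(business_requirement_status(MATRIX))
    print(open_defects(MATRIX))
    print(dict(execution_summary(MATRIX)))
```

Run as a script, it reports BR_1 as failing because FR_3 failed with Defect#4523, while BR_2 passes.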

3. TEST CASES

3.1. Case 1

TC-1 Use Case: Login


3.1.1. Purpose

This test checks that users do not encounter any errors when logging in to the system.

3.1.2. Inputs

The user is selected from a drop-down list, and a password is entered to log in to the backstage management system.

3.1.3. Expected outputs & Pass/Fail Criteria

Every user can log in and is redirected to the system's welcome page, and from there is forwarded to the separate links that match his or her role and responsibilities, so that each user can use the system as that role requires. The test fails if a valid user cannot log in or is not shown the links for their role.
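TC-1 can be written as an automated check. The sketch below assumes a hypothetical `login(role, password)` function that returns the page the user lands on and the links shown there; the role names, link lists and password are placeholders, since the document does not specify eStage's actual pages. Passwords are stored as salted PBKDF2 hashes and compared with `hmac.compare_digest`, a common practice rather than a documented eStage design.

```python
import hashlib
import hmac
import os
import unittest

# Hypothetical role -> links mapping; eStage's real pages are not specified.
ROLE_LINKS = {
    "competitor": ["home", "competitors", "logoff"],
    "stage_manager": ["home", "discipline", "sections", "competitors", "logoff"],
    "judge": ["home", "sections", "logoff"],
}


def _hash(password, salt):
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


# Hypothetical user store: role selected in the drop-down -> (salt, hash).
_SALT = os.urandom(16)
USERS = {"stage_manager": (_SALT, _hash("correct-horse", _SALT))}


def login(role, password):
    """Return (page, links); a failed login stays on the login page."""
    record = USERS.get(role)
    if record is None:
        return "login", []
    salt, expected = record
    if not hmac.compare_digest(_hash(password, salt), expected):
        return "login", []
    return "welcome", list(ROLE_LINKS[role])


class TC1LoginTest(unittest.TestCase):
    def test_valid_login_redirects_to_welcome_with_role_links(self):
        page, links = login("stage_manager", "correct-horse")
        self.assertEqual(page, "welcome")
        self.assertEqual(links, ROLE_LINKS["stage_manager"])

    def test_wrong_password_is_rejected(self):
        self.assertEqual(login("stage_manager", "wrong"), ("login", []))

    def test_unknown_role_is_rejected(self):
        self.assertEqual(login("visitor", "correct-horse"), ("login", []))


if __name__ == "__main__":
    unittest.main(argv=["tc1_login_test"], exit=False)
```

The first test encodes the pass criterion (redirect to the welcome page with role-specific links); the other two encode failure cases that must never reach the welcome page.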

3.1.4. Test Procedure

To design the test plan, the system is divided into components and a testing procedure is defined for each. The tests are further divided into functional and non-functional testing. A specific methodology is to be developed and followed when carrying out the test plan. To test the security constraints, the performance and scalability of the system also have to be analysed. All failures and defects are to be identified so that the performance, usability, security and maintainability of the system can be assessed, because even a single defect or error could cause failures in the internal structure of the system.


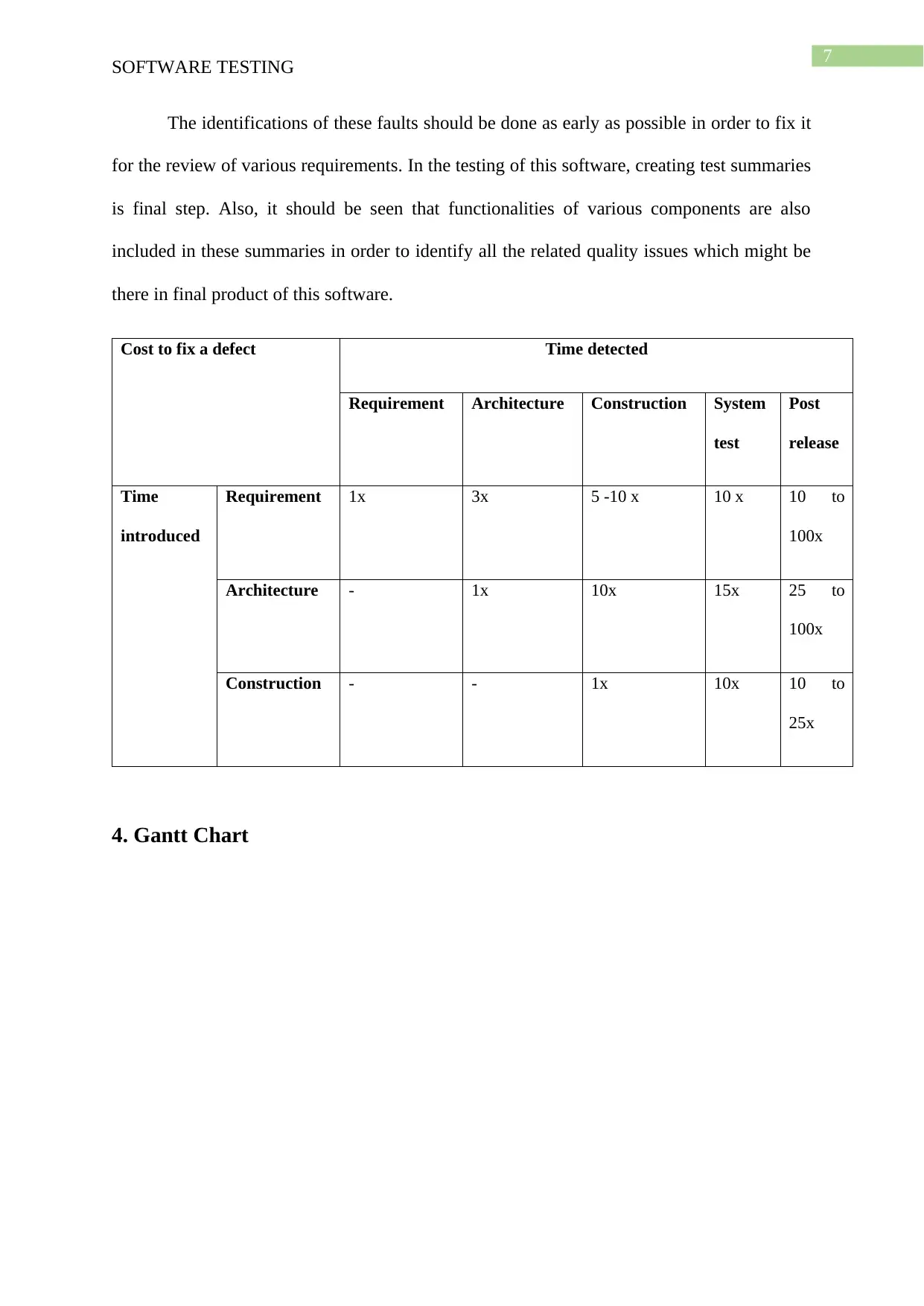

Faults should be identified as early as possible so that they can be fixed while the requirements are still under review, when a fix costs the least. Creating test summaries is the final step in testing this software. The summaries should also cover the functionality of each component so that any quality issues remaining in the final product are identified.

Cost to fix a defect (rows: time introduced; columns: time detected)

| Time introduced | Requirement | Architecture | Construction | System test | Post-release |
|---|---|---|---|---|---|
| Requirement | 1x | 3x | 5-10x | 10x | 10-100x |
| Architecture | - | 1x | 10x | 15x | 25-100x |
| Construction | - | - | 1x | 10x | 10-25x |

4. Gantt Chart


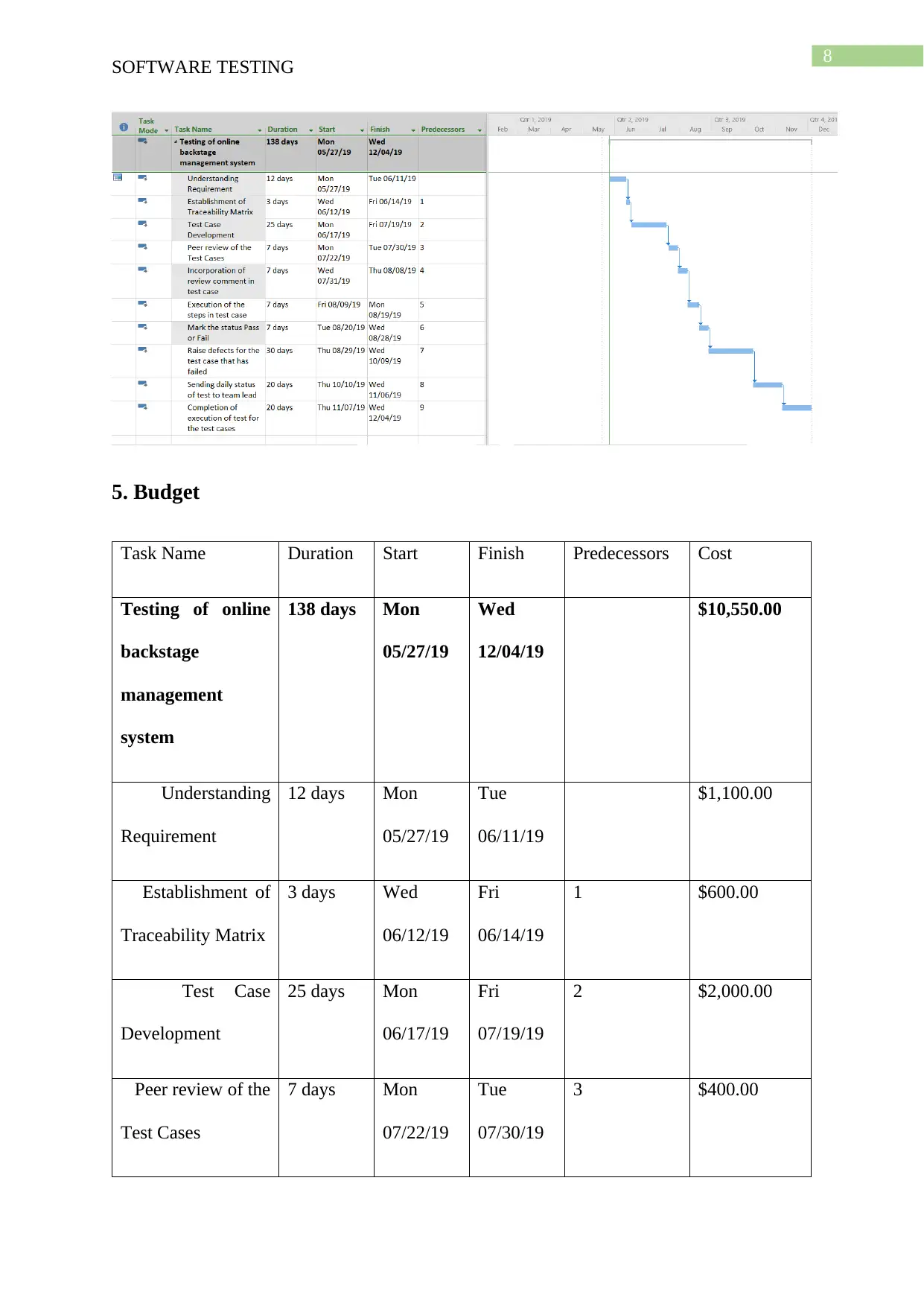

5. Budget

| Task Name | Duration | Start | Finish | Predecessors | Cost |
|---|---|---|---|---|---|
| Testing of online backstage management system | 138 days | Mon 05/27/19 | Wed 12/04/19 | | $10,550.00 |
| Understanding Requirement | 12 days | Mon 05/27/19 | Tue 06/11/19 | | $1,100.00 |
| Establishment of Traceability Matrix | 3 days | Wed 06/12/19 | Fri 06/14/19 | 1 | $600.00 |
| Test Case Development | 25 days | Mon 06/17/19 | Fri 07/19/19 | 2 | $2,000.00 |
| Peer review of the Test Cases | 7 days | Mon 07/22/19 | Tue 07/30/19 | 3 | $400.00 |


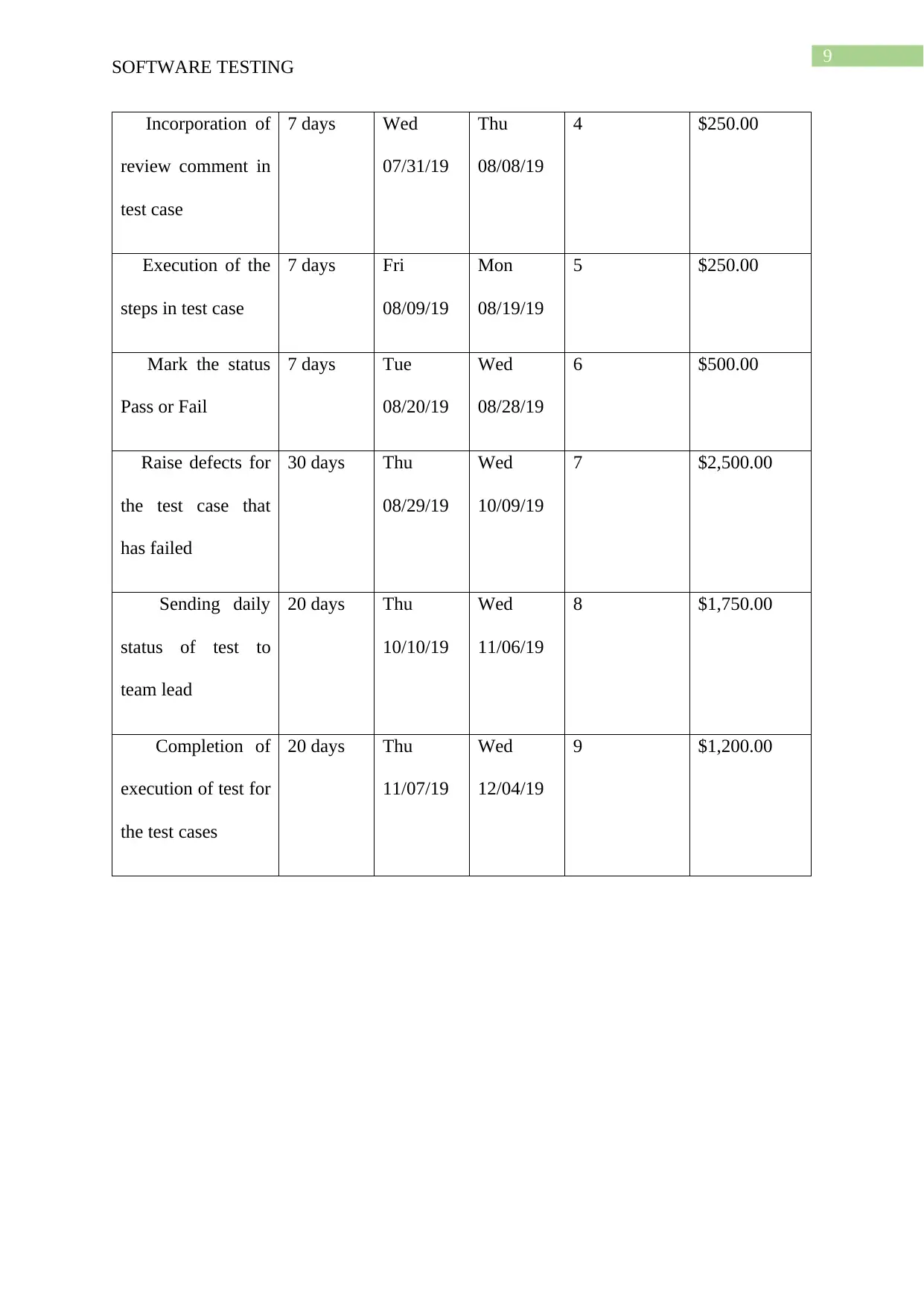


| Task Name | Duration | Start | Finish | Predecessors | Cost |
|---|---|---|---|---|---|
| Incorporation of review comment in test case | 7 days | Wed 07/31/19 | Thu 08/08/19 | 4 | $250.00 |
| Execution of the steps in test case | 7 days | Fri 08/09/19 | Mon 08/19/19 | 5 | $250.00 |
| Mark the status Pass or Fail | 7 days | Tue 08/20/19 | Wed 08/28/19 | 6 | $500.00 |
| Raise defects for the test case that has failed | 30 days | Thu 08/29/19 | Wed 10/09/19 | 7 | $2,500.00 |
| Sending daily status of test to team lead | 20 days | Thu 10/10/19 | Wed 11/06/19 | 8 | $1,750.00 |
| Completion of execution of test for the test cases | 20 days | Thu 11/07/19 | Wed 12/04/19 | 9 | $1,200.00 |
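The durations in the budget appear to be counted in working days: each finish date is reached by counting Monday to Friday only, with no public holidays. The sketch below checks that reading and the totals; the task names are shortened from the table, and the no-holiday calendar is an assumption.

```python
from datetime import date, timedelta

# Rows transcribed from the budget table:
# (task, working days, start, finish, cost in USD)
TASKS = [
    ("Understanding requirement", 12, date(2019, 5, 27), date(2019, 6, 11), 1100),
    ("Traceability matrix", 3, date(2019, 6, 12), date(2019, 6, 14), 600),
    ("Test case development", 25, date(2019, 6, 17), date(2019, 7, 19), 2000),
    ("Peer review of test cases", 7, date(2019, 7, 22), date(2019, 7, 30), 400),
    ("Incorporate review comments", 7, date(2019, 7, 31), date(2019, 8, 8), 250),
    ("Execute test case steps", 7, date(2019, 8, 9), date(2019, 8, 19), 250),
    ("Mark pass or fail", 7, date(2019, 8, 20), date(2019, 8, 28), 500),
    ("Raise defects", 30, date(2019, 8, 29), date(2019, 10, 9), 2500),
    ("Daily status to team lead", 20, date(2019, 10, 10), date(2019, 11, 6), 1750),
    ("Complete test execution", 20, date(2019, 11, 7), date(2019, 12, 4), 1200),
]


def finish_date(start, working_days):
    """Last day of a task starting on `start` (Mon-Fri calendar, no holidays)."""
    day, remaining = start, working_days - 1
    while remaining:
        day += timedelta(days=1)
        if day.weekday() < 5:  # 0 = Monday ... 4 = Friday
            remaining -= 1
    return day


if __name__ == "__main__":
    for name, days, start, finish, _cost in TASKS:
        computed = finish_date(start, days)
        note = "ok" if computed == finish else f"mismatch (computed {computed})"
        print(f"{name}: {note}")
    print("Total duration:", sum(t[1] for t in TASKS), "working days")
    print("Total cost: $", sum(t[4] for t in TASKS))
```

With the rows as given, every computed finish date matches the table, the durations add up to the 138-day summary task, and the costs add up to $10,550.00.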


Bibliography

Barr, E. T., Harman, M., McMinn, P., Shahbaz, M., & Yoo, S. (2015). The oracle problem in software testing: A survey. IEEE Transactions on Software Engineering, 41(5), 507-525.

Black, R. (2014). Advanced Software Testing, Vol. 2: Guide to the ISTQB Advanced Certification as an Advanced Test Manager. Rocky Nook.

Briand, L., Nejati, S., Sabetzadeh, M., & Bianculli, D. (2016, May). Testing the untestable: Model testing of complex software-intensive systems. In Proceedings of the 38th International Conference on Software Engineering Companion (pp. 789-792). ACM.

Deak, A., Stålhane, T., & Sindre, G. (2016). Challenges and strategies for motivating software testing personnel. Information and Software Technology, 73, 1-15.

Godefroid, P. (2015). Between testing and verification: Software model checking via systematic testing. In Haifa Verification Conference.

Harman, M., Jia, Y., & Zhang, Y. (2015, April). Achievements, open problems and challenges for search based software testing. In 2015 IEEE 8th International Conference on Software Testing, Verification and Validation (ICST) (pp. 1-12). IEEE.

Kempka, J., McMinn, P., & Sudholt, D. (2015). Design and analysis of different alternating variable searches for search-based software testing. Theoretical Computer Science, 605, 1-20.

Long, T. (2015, July). Collaborative testing across shared software components (doctoral symposium). In Proceedings of the 2015 International Symposium on Software Testing and Analysis (pp. 436-439). ACM.


Luo, Q., Poshyvanyk, D., Nair, A., & Grechanik, M. (2016, May). FOREPOST: A tool for detecting performance problems with feedback-driven learning software testing. In Proceedings of the 38th International Conference on Software Engineering Companion (pp. 593-596). ACM.

Mäntylä, M. V., Adams, B., Khomh, F., Engström, E., & Petersen, K. (2015). On rapid releases and software testing: A case study and a semi-systematic literature review. Empirical Software Engineering, 20(5), 1384-1425.

Marculescu, B., Feldt, R., Torkar, R., & Poulding, S. (2015). An initial industrial evaluation of interactive search-based testing for embedded software. Applied Soft Computing, 29, 26-39.

Orso, A., & Rothermel, G. (2014, May). Software testing: A research travelogue (2000–2014). In Proceedings of the Future of Software Engineering (pp. 117-132). ACM.
