Comprehensive Software Testing Report: Backstage Management System

Added on 2020/04/01


Running head: Software Testing 1

Software Testing

Name

Affiliate Institution


Table of Contents

1. INTRODUCTION
1.1. System Overview
1.2. Testing Approach
2. TEST PLAN
2.1. Program Modules
2.2. Features to be Tested
2.3. Features Not to be Tested
2.4. Environmental Requirements
3. TEST CASE
3.1. Pass / Fail Criteria
3.2. Testing Procedure
3.3. Test Deliverables
3.4. Testing Tasks
3.5. Responsibilities
3.6. Test Schedule
4. Conclusion
References


1. INTRODUCTION

This report defines the process and expectations for testing the online backstage management system.

1.1. System Overview

The objective of this document is to provide a manual on the testing procedures and their expected output. It outlines the essential functionality of the online backstage management system and may be used to train users on the system's general functionality. It covers the testing of individual modules to ensure that each meets the user requirements and produces the expected output. The document outlines the test plan, that is, the features to be tested and not to be tested, the technical environmental requirements, the test cases, the test deliverables, and the test procedure, among others. The goal of this test procedure is to make sure that the system meets the set specification and the project deliverables with a high level of confidence and accuracy.

1.2. Testing Approach

Testing is the activity of assessing software and application components to uncover the variance between present and required objectives and to analyze the characteristics of the components of the application under development. Assessing the online backstage management system involves both manual and automated tests. A set of custom scripts performs automated tests on items of the system that do not require user interaction, while testers or developers manually test items that do require user interaction. Because testing is critical to the reliability of an application, it is a continuous activity carried out throughout the development stages of a system, and a test plan should be generated at each test level (Hass, 2014).


Component Testing - A component is the smallest unit of any system, so component testing is a method of testing the smallest units of a system or application. An application can be viewed as the integration of several small individual units, and before the whole system is tested, every component (the lowest-level unit of the system) must be tested carefully. In the online backstage management system, every unit is tested independently: each unit receives an input, performs some processing, and produces a result, which is then checked against the expected behavior (Myers, Sandler & Badgett, 2012).
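As an illustration of the input, processing, and expected-output check described above, the following Python sketch tests one unit in isolation. The function `rank_results` and its tie-breaking rule are hypothetical stand-ins, not part of the documented system; the tests use plain `assert` statements so they can be run directly or collected by pytest.

```python
def rank_results(results):
    """Hypothetical unit under test: order (competitor, score) pairs by
    descending score, breaking ties alphabetically by competitor name."""
    return sorted(results, key=lambda pair: (-pair[1], pair[0]))


def test_rank_results_orders_by_score_then_name():
    # Known input -> unit processing -> output compared with the expected result.
    given = [("Cole", 82), ("Adams", 90), ("Baker", 82)]
    expected = [("Adams", 90), ("Baker", 82), ("Cole", 82)]
    assert rank_results(given) == expected


def test_rank_results_handles_empty_input():
    assert rank_results([]) == []


if __name__ == "__main__":
    test_rank_results_orders_by_score_then_name()
    test_rank_results_handles_empty_input()
    print("component tests passed")
```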

Integration Testing - System integration testing is carried out to validate communication between the units of the online backstage management system. It involves validating the low- and high-level software requirements stated in the Software Requirements Specification (SRS). It also validates the system's conformity with other systems and examines the interfaces between units. Units are first tested individually and then combined to form the system.
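A minimal sketch of an integration check, assuming two hypothetical units: a `Registry` that records which section each competitor is in, and a `ResultsBook` that only accepts results for registered competitors. Each class could be component-tested on its own; the tests below exercise the interface between them.

```python
class Registry:
    """Hypothetical registration unit: maps competitor IDs to sections."""

    def __init__(self):
        self._sections = {}

    def register(self, competitor_id, section):
        self._sections[competitor_id] = section

    def is_registered(self, competitor_id):
        return competitor_id in self._sections


class ResultsBook:
    """Hypothetical results unit that depends on the Registry interface."""

    def __init__(self, registry):
        self._registry = registry
        self._scores = {}

    def enter(self, competitor_id, score):
        # The interface under test: results may only be entered for
        # competitors that the registration unit knows about.
        if not self._registry.is_registered(competitor_id):
            raise KeyError(f"unknown competitor {competitor_id!r}")
        self._scores[competitor_id] = score

    def score_of(self, competitor_id):
        return self._scores[competitor_id]


def test_registry_and_results_work_together():
    registry = Registry()
    book = ResultsBook(registry)
    registry.register("C-001", "Section A")
    book.enter("C-001", 88)
    assert book.score_of("C-001") == 88


def test_results_rejects_unregistered_competitor():
    book = ResultsBook(Registry())
    try:
        book.enter("C-999", 70)
    except KeyError:
        pass
    else:
        raise AssertionError("expected KeyError for an unregistered competitor")


if __name__ == "__main__":
    test_registry_and_results_work_together()
    test_results_rejects_unregistered_competitor()
    print("integration tests passed")
```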

Security Testing - Attempts will be made to access the online backstage management system without proper authentication, to make sure that only authorized access is granted. Tests will also attempt cross-site scripting attacks and buffer overflows to make sure the system is not exposed to malicious threats.
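The two web-facing checks named above can be sketched as automated tests. In this Python sketch, `render_competitor_name` and `view_results` are hypothetical helpers; the escaping relies on the standard library's `html.escape`. Buffer-overflow testing depends on the implementation language and server stack and is not shown.

```python
import html


def render_competitor_name(name):
    """Hypothetical view helper: escape user-supplied text before placing it
    in an HTML page, so injected markup is displayed rather than executed."""
    return f"<td>{html.escape(name)}</td>"


def view_results(session):
    """Hypothetical protected page: returns (status_code, body)."""
    if not session.get("authenticated"):
        return 401, "Sign in required"
    return 200, "Results table"


def test_script_injection_is_escaped():
    payload = '<script>alert("x")</script>'
    rendered = render_competitor_name(payload)
    assert "<script>" not in rendered
    assert "&lt;script&gt;" in rendered


def test_unauthenticated_access_is_refused():
    status, _ = view_results({})
    assert status == 401
    status, _ = view_results({"authenticated": True})
    assert status == 200


if __name__ == "__main__":
    test_script_injection_is_escaped()
    test_unauthenticated_access_is_refused()
    print("security tests passed")
```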

Performance Testing - This is carried out manually. The test team will interact with the system through its user interface and judge whether the response time is within acceptable limits. Response time is the amount of time the system takes to respond to a user's request. Performance testing is considered unsuccessful if a response takes long enough to be timed in minutes or hours with an ordinary clock (Homes, 2013).
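Although the plan performs this check manually, the same pass/fail rule can be expressed as a small timing script. The 2-second limit, `search_competitors`, and the other names below are illustrative assumptions; the plan itself only requires an acceptable response time.

```python
import time

# Assumed limit: the plan only says "acceptable"; 2 s is illustrative.
MAX_RESPONSE_SECONDS = 2.0


def measure_response(action, *args):
    """Return (result, elapsed_seconds) for one call to `action`."""
    start = time.perf_counter()
    result = action(*args)
    return result, time.perf_counter() - start


def search_competitors(names, query):
    """Hypothetical stand-in for a request a tester would make via the UI."""
    return [n for n in names if query.lower() in n.lower()]


def check_response_time(action, *args, limit=MAX_RESPONSE_SECONDS):
    """Return (passed, elapsed) where passed means elapsed <= limit."""
    _, elapsed = measure_response(action, *args)
    return elapsed <= limit, elapsed


if __name__ == "__main__":
    names = [f"Competitor {i}" for i in range(10_000)]
    ok, elapsed = check_response_time(search_competitors, names, "99")
    print(f"search took {elapsed:.4f}s -> {'PASS' if ok else 'FAIL'}")
```

A wall-clock measurement like this reflects only the machine it runs on, which is consistent with the plan's note that the test environment differs from the target system.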


Regression Testing - These tests are carried out whenever changes have been made to the system. The approach is to run all the tests required to make sure that no regression occurs, without spending additional resources on unrelated tests (Kaner, Bach & Pettichord, 2012).
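One way to run only the tests that a change could affect is to keep a map from each test to the modules it exercises and select by intersection. The test names and the module map below are hypothetical.

```python
# Hypothetical mapping from each test to the modules it exercises.
TEST_COVERAGE = {
    "test_registration": {"registration", "database"},
    "test_sign_in": {"authentication", "database"},
    "test_print": {"reporting"},
    "test_move_competitor": {"sections", "database"},
    "test_enter_results": {"results", "database"},
}


def select_regression_tests(changed_modules, coverage=TEST_COVERAGE):
    """Return, in sorted order, every test that touches a changed module."""
    changed = set(changed_modules)
    return sorted(name for name, modules in coverage.items() if modules & changed)


if __name__ == "__main__":
    print(select_regression_tests({"sections"}))
    print(select_regression_tests({"database"}))
```

A change to a widely shared module such as the database selects most of the suite, which is the intended trade-off: broader coverage where a regression is more likely, and no effort spent on unrelated tests otherwise.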

Acceptance Testing - This involves providing simulation setups of the online backstage management system so that users can verify it and offer feedback. The simulations will be made available in stages, as the system's capabilities reach a level of usability suitable for simulation. Feedback gathered from the simulations will identify modifications to be made just before the final release. All major modifications to the system will be recorded in revised versions of the SAD and SRS as appropriate (Alsmadi, 2012).

2. TEST PLAN

2.1. Program Modules

User Interface - Sets of test scenarios will be executed manually for all user interfaces to ensure that the product operates correctly. The scenarios will cover all the requirements in the SRS (Desai, 2016).

Batch Job - New batch jobs shall be executed on a similar set of data, and their results compared directly for accuracy.
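A sketch of the direct comparison, assuming the new batch job is checked against the output of an existing job run on the same data. Both batch functions here are hypothetical; the helper reports which keys differ so that a mismatch can be traced.

```python
from collections import Counter


def legacy_batch(entries):
    """Hypothetical existing batch: count competitors per section."""
    totals = {}
    for _, section in entries:
        totals[section] = totals.get(section, 0) + 1
    return totals


def new_batch(entries):
    """Hypothetical rewritten batch that should produce identical output."""
    return dict(Counter(section for _, section in entries))


def compare_batches(old, new, data):
    """Run both jobs on the same data; return the sorted keys that differ."""
    old_out, new_out = old(data), new(data)
    keys = old_out.keys() | new_out.keys()
    return sorted(k for k in keys if old_out.get(k) != new_out.get(k))


if __name__ == "__main__":
    data = [("C-001", "Section A"), ("C-002", "Section A"), ("C-003", "Section B")]
    print(compare_batches(legacy_batch, new_batch, data) or "outputs match")
```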

Database - Selected SQL commands shall be executed directly, using tools such as SQL*Plus, to validate the correct functioning of stored procedures and triggers.
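Oracle 9i and SQL*Plus cannot be reproduced in a self-contained snippet, so this sketch uses Python's built-in `sqlite3` module as a stand-in to show the pattern of validating a trigger: create the schema, run the triggering statement, then query for the side effect. The tables and the `audit_score_change` trigger are hypothetical, and SQLite has no stored procedures, so only the trigger half of the check is illustrated.

```python
import sqlite3

# Hypothetical schema: every score change should be recorded in an audit table.
SCHEMA = """
CREATE TABLE results (competitor_id TEXT PRIMARY KEY, score INTEGER);
CREATE TABLE results_audit (competitor_id TEXT, old_score INTEGER, new_score INTEGER);
CREATE TRIGGER audit_score_change AFTER UPDATE OF score ON results
BEGIN
    INSERT INTO results_audit VALUES (OLD.competitor_id, OLD.score, NEW.score);
END;
"""


def check_audit_trigger():
    """Fire the trigger once and return the rows it wrote."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(SCHEMA)
        conn.execute("INSERT INTO results VALUES ('C-001', 70)")
        conn.execute("UPDATE results SET score = 85 WHERE competitor_id = 'C-001'")
        return conn.execute(
            "SELECT competitor_id, old_score, new_score FROM results_audit"
        ).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    rows = check_audit_trigger()
    print("trigger OK" if rows == [("C-001", 70, 85)] else f"unexpected: {rows}")
```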

User Documentation - The development team will review the documentation and validate it for completeness and accuracy.


2.2. Features to be Tested

The following are some of the features that will be tested for functionality and for whether they meet the requirements: the competitor registration interface, the login interface, search, entering results, viewing results, viewing sections of data, sorting, moving a competitor's location, importing information from the guidebook, the print function, and the edit details function.

2.3. Features Not to be Tested

The following will not be tested, because the testing team does not have the resources to check their limits: scalability limits, dataset size, and hardware availability.

2.4. Environmental Requirements

Hardware - Two computers with an internet connection, preferably running the Windows operating system, and one other computer running a UNIX operating system.

Software - A web browser such as Chrome, Mozilla Firefox, Safari, or Internet Explorer 5.0

Resin EE server

Oracle 9i

Microsoft Office Word and Excel for writing reports

Tools - In-house scripts will be the only testing tools. They will automate database configuration so that tests can be run manually, and prepare the environment for running security tests and automated Ali files (Graham & Fewster, 2012).

Risks and Assumptions

Resources for the test environment in this project are extremely limited. Time and personnel are scarce, and the hardware and software platforms differ from those of the target system. The assumption is that the implementation environment will be more powerful and stable than the test environment; therefore, only limited scalability and performance testing will be carried out in the test environment.


3.TEST CASE

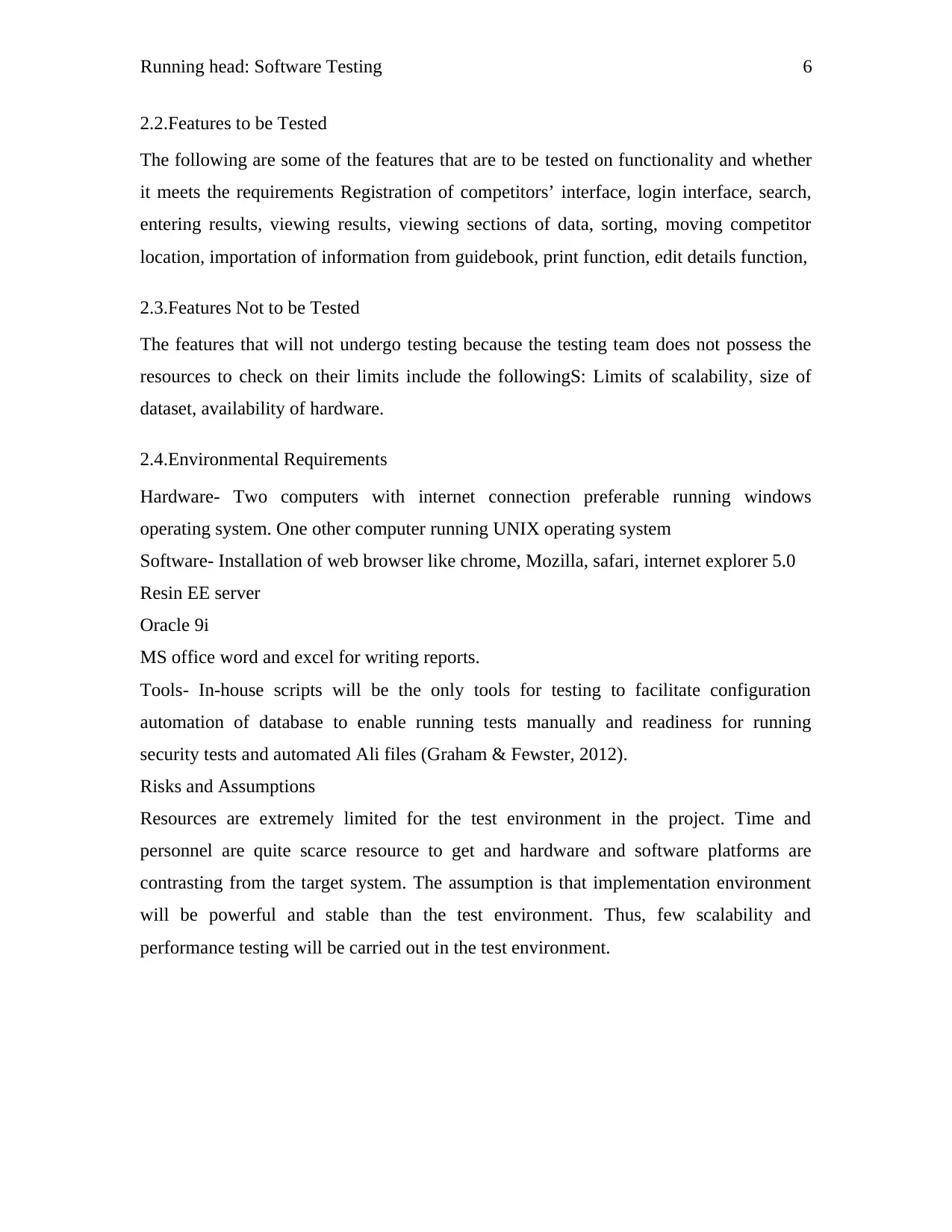

Title: New Registration Page – allow the competitors to register by keying in their details

on the registration form.

Description: the system should allow a competitor to register successfully with ease.

Condition: the competitor must have not registered earlier with the same credentials.

Assumption: the user has installed a supported web browser

Test Steps:

Navigate to the online backstage management system registration page and fill in the

details shown below:

Figure 1: New Registration Page

Then click ‘Save’


Expected Result: a page displaying the backstage system control panel will be loaded.
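The registration test's condition (no earlier registration with the same credentials) can also be checked at the service level. This sketch assumes a hypothetical `RegistrationService` keyed on the competitor's email address, compared case-insensitively; the real form fields are those shown in Figure 1.

```python
class RegistrationService:
    """Hypothetical registration back end keyed on the competitor's email."""

    def __init__(self):
        self._competitors = {}

    def register(self, email, name):
        # Assumption: emails are compared ignoring case and outer whitespace.
        key = email.strip().lower()
        if key in self._competitors:
            return "already_registered"
        self._competitors[key] = name
        return "registered"


def test_new_competitor_can_register():
    service = RegistrationService()
    assert service.register("ana@example.com", "Ana") == "registered"


def test_same_credentials_cannot_register_twice():
    service = RegistrationService()
    service.register("ana@example.com", "Ana")
    assert service.register("ANA@example.com ", "Ana") == "already_registered"


if __name__ == "__main__":
    test_new_competitor_can_register()
    test_same_credentials_cannot_register_twice()
    print("registration tests passed")
```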

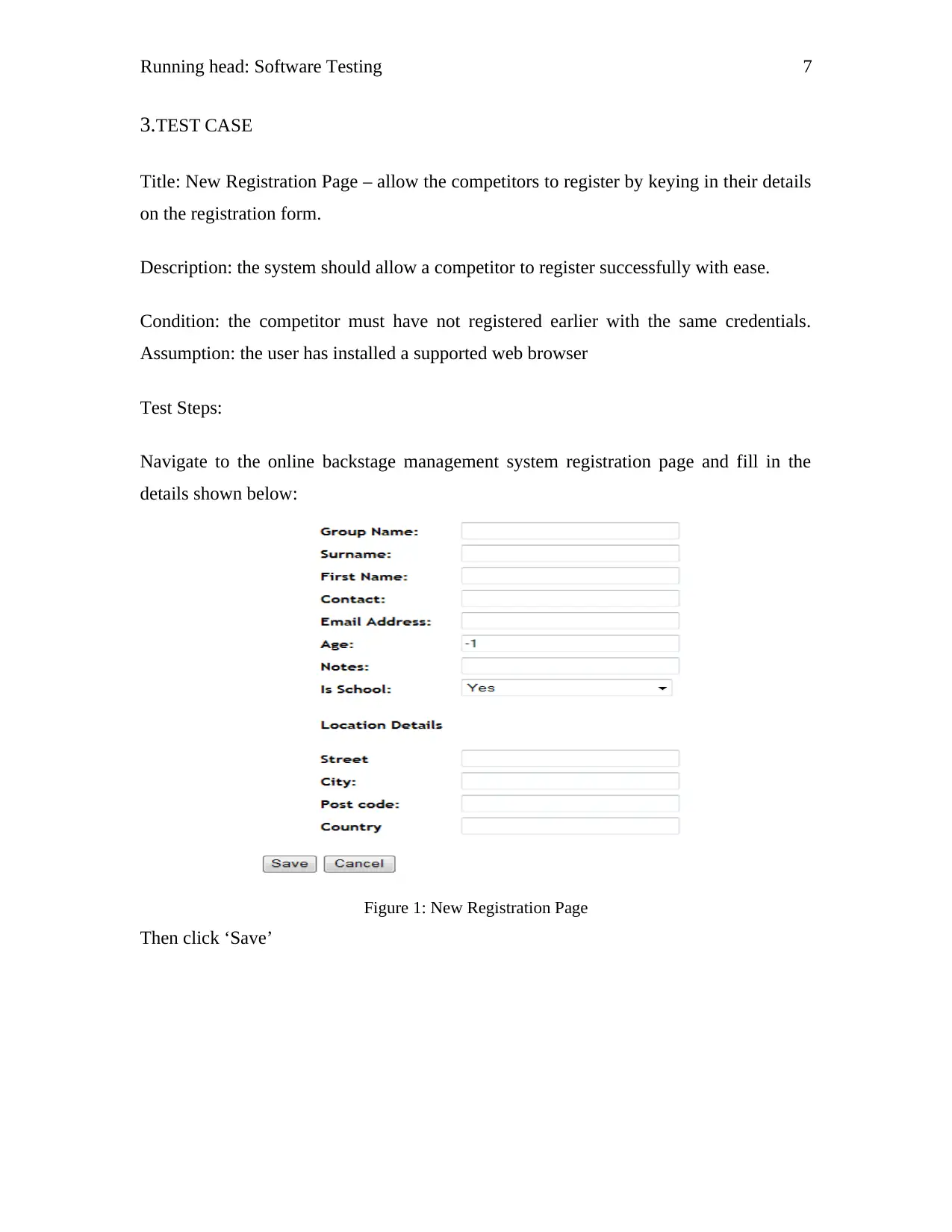

Title: Sign In Page – authenticates users on the online backstage management system.

Figure 2: Sign In Page

Description: the system should allow a user who has signed up to sign in successfully.

Condition: the user must have signed up with an email address or username and a password.

Assumption: the user has installed a supported web browser.

Test Steps:

Navigate to the online backstage management system sign-in page.

In the ‘Role’ drop-down list, select your role.

In the ‘Password’ field, enter the password you used to sign up.

Then click ‘Sign in’.


Expected Result: a page displaying the backstage system control panel will be loaded.
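Behind the sign-in form, the same test can be automated against the authentication logic. This sketch assumes a hypothetical `SignInService` that stores a salted PBKDF2 hash per user (using `hashlib.pbkdf2_hmac` and `hmac.compare_digest` from the Python standard library) and checks the selected role alongside the password. The username argument is an assumption, since the documented test steps list only the Role and Password fields.

```python
import hashlib
import hmac
import os


def hash_password(password, salt):
    """Derive a salted PBKDF2-SHA256 hash of the password."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


class SignInService:
    """Hypothetical sign-in back end mirroring the Role + Password form."""

    def __init__(self):
        self._users = {}  # username -> (role, salt, password hash)

    def sign_up(self, username, role, password):
        salt = os.urandom(16)
        self._users[username] = (role, salt, hash_password(password, salt))

    def sign_in(self, username, role, password):
        record = self._users.get(username)
        if record is None:
            return False
        stored_role, salt, stored_hash = record
        # Constant-time comparison avoids leaking how many bytes matched.
        password_ok = hmac.compare_digest(stored_hash, hash_password(password, salt))
        return password_ok and stored_role == role


if __name__ == "__main__":
    service = SignInService()
    service.sign_up("chair1", "Chair", "s3cret")
    print(service.sign_in("chair1", "Chair", "s3cret"))
```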

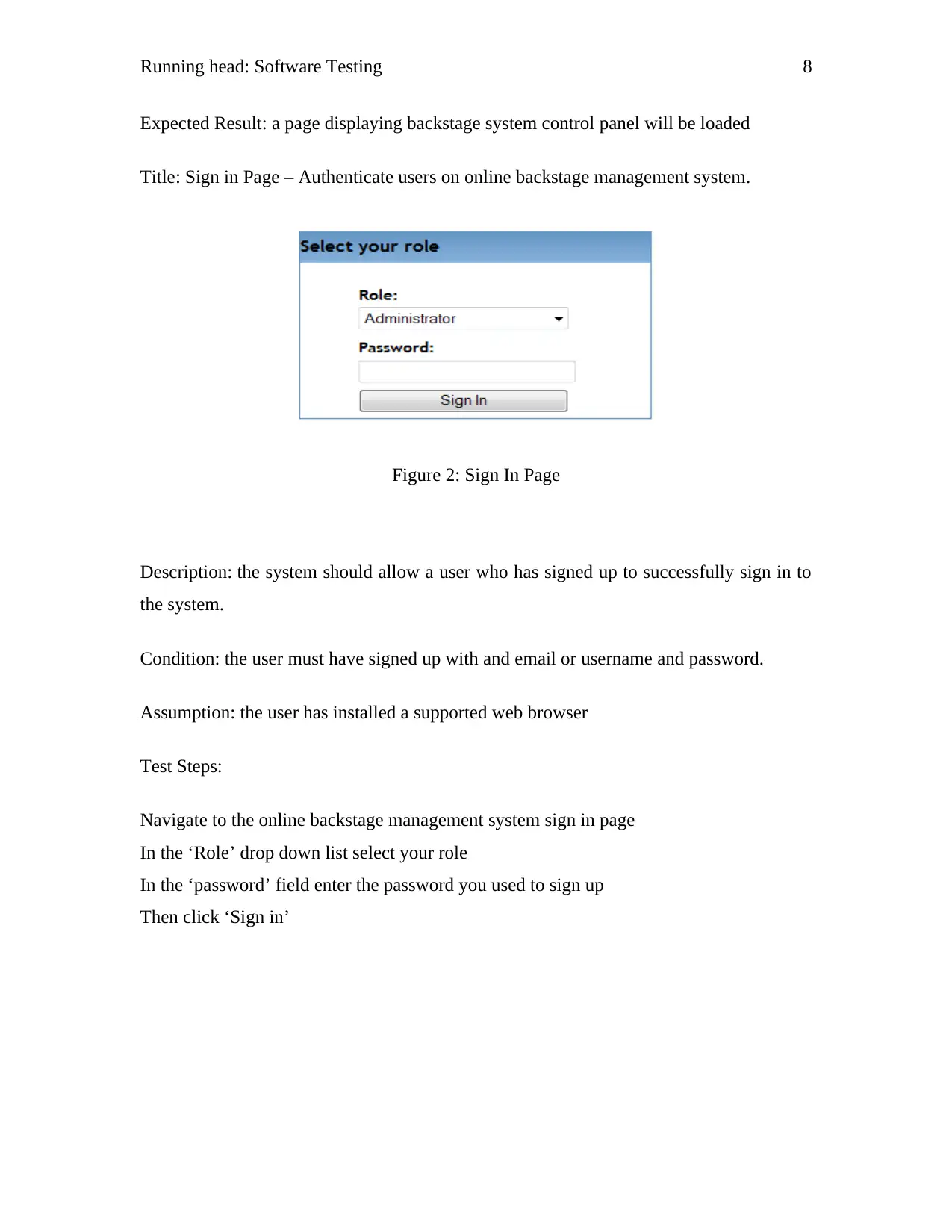

Title: Print – clicking the print icon opens a new window containing a PDF and prompts the user to select an installed printer.

Description: the system should allow the user to print the results or competitors currently displayed.

Condition: the user must be in the view panel of either results or competitors.

Assumption: the user has installed a printer.


Figure 3: Print Output

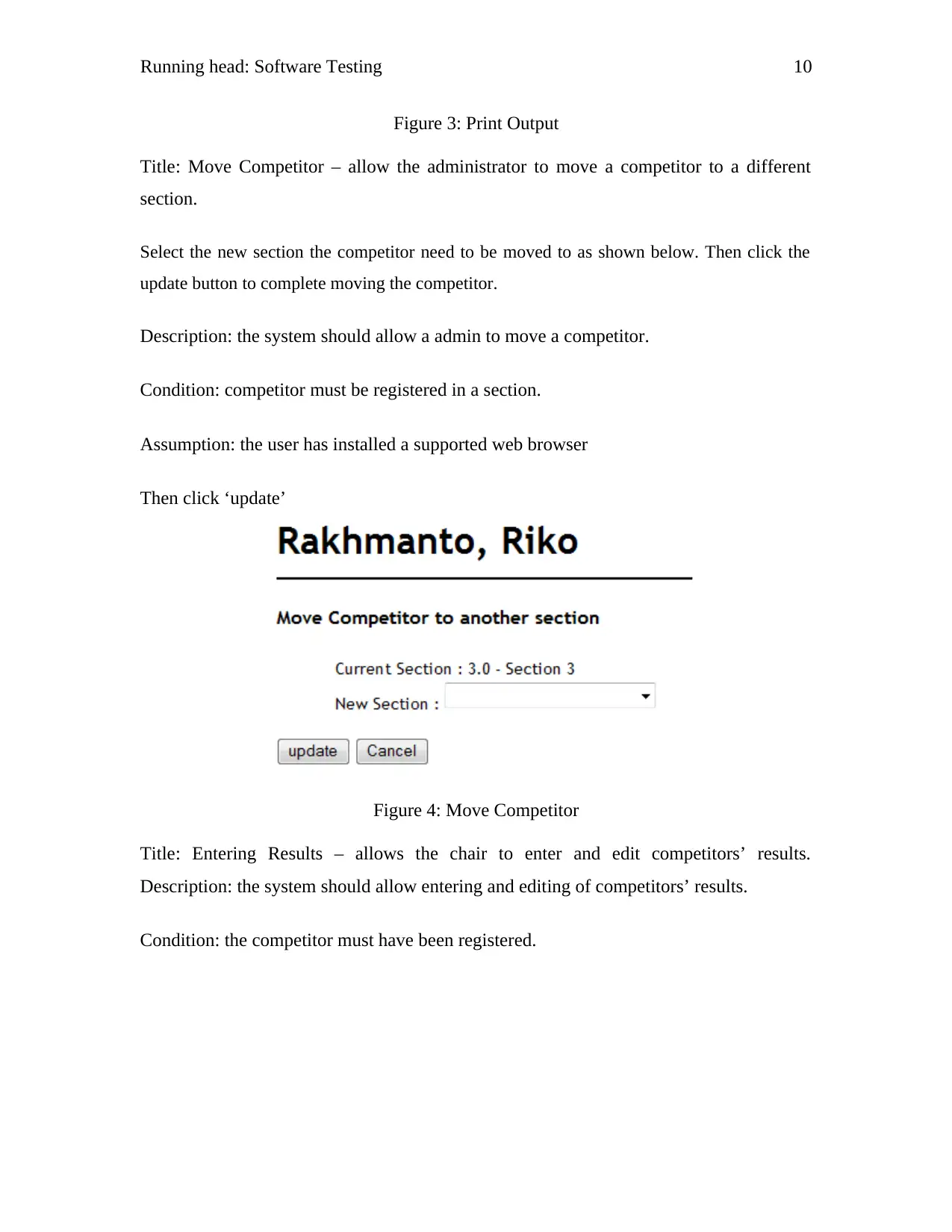

Title: Move Competitor – allows the administrator to move a competitor to a different section.

Description: the system should allow an administrator to move a competitor.

Condition: the competitor must be registered in a section.

Assumption: the user has installed a supported web browser.

Test Steps:

Select the new section the competitor needs to be moved to, as shown in Figure 4.

Then click ‘Update’ to complete moving the competitor.

Figure 4: Move Competitor
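The move operation and its pre-condition can be sketched as follows; `SectionRoster` and the section names are hypothetical.

```python
class SectionRoster:
    """Hypothetical model of the 'Move Competitor' update."""

    def __init__(self, assignments):
        self.assignments = dict(assignments)  # competitor id -> section

    def move(self, competitor_id, new_section):
        # Pre-condition from the test case: the competitor must be in a section.
        if competitor_id not in self.assignments:
            raise ValueError(f"{competitor_id} is not registered in any section")
        self.assignments[competitor_id] = new_section


def test_move_registered_competitor():
    roster = SectionRoster({"C-001": "Section A"})
    roster.move("C-001", "Section B")
    assert roster.assignments["C-001"] == "Section B"


def test_move_unregistered_competitor_is_rejected():
    roster = SectionRoster({})
    try:
        roster.move("C-404", "Section B")
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for an unregistered competitor")


if __name__ == "__main__":
    test_move_registered_competitor()
    test_move_unregistered_competitor_is_rejected()
    print("move competitor tests passed")
```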

Title: Entering Results – allows the chair to enter and edit competitors' results.

Description: the system should allow competitors' results to be entered and edited.

Condition: the competitor must have been registered.


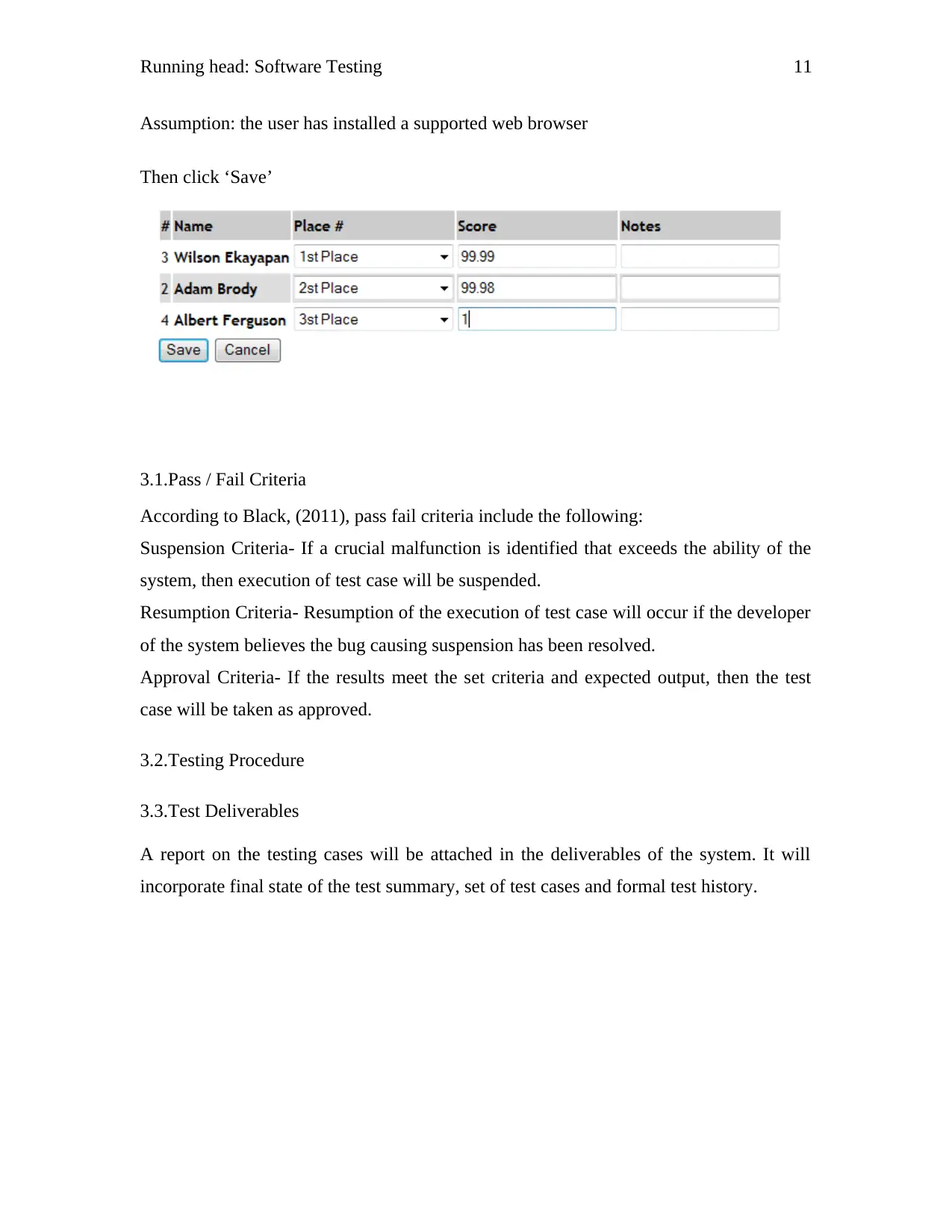

Assumption: the user has installed a supported web browser

Then click ‘Save’
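A sketch of the save behavior behind this screen, assuming a hypothetical `ResultsSheet` in which saving a result for a competitor who already has one counts as an edit. Only registered competitors are accepted, matching the test case's condition.

```python
class ResultsSheet:
    """Hypothetical back end for the 'Entering Results' screen."""

    def __init__(self, registered_ids):
        self._registered = set(registered_ids)
        self._results = {}

    def save(self, competitor_id, score):
        """Enter a new result, or overwrite (edit) an existing one."""
        if competitor_id not in self._registered:
            raise ValueError(f"{competitor_id} has not been registered")
        is_edit = competitor_id in self._results
        self._results[competitor_id] = score
        return "edited" if is_edit else "entered"

    def result_for(self, competitor_id):
        return self._results.get(competitor_id)


if __name__ == "__main__":
    sheet = ResultsSheet(["C-001"])
    print(sheet.save("C-001", 72), sheet.save("C-001", 75), sheet.result_for("C-001"))
```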

3.1. Pass / Fail Criteria

According to Black (2011), the pass/fail criteria include the following:

Suspension Criteria - If a critical defect is found that prevents the system from functioning, execution of the test cases will be suspended.

Resumption Criteria - Execution of the test cases will resume once the system's developer reports that the defect that caused the suspension has been resolved.

Approval Criteria - If the results meet the set criteria and match the expected output, the test case will be approved.
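The three criteria can be read as transitions on a test case's status. This sketch is one interpretation rather than part of the plan: the `Status` values and the `next_status` function are hypothetical, and a FAILED state is assumed for results that do not match the expected output.

```python
from enum import Enum


class Status(Enum):
    RUNNING = "running"
    SUSPENDED = "suspended"
    APPROVED = "approved"
    FAILED = "failed"  # assumed counterpart to approval


def next_status(status, *, critical_defect=False, fix_confirmed=False,
                result=None, expected=None):
    """Apply the suspension, resumption and approval criteria in order."""
    if status is Status.RUNNING and critical_defect:
        return Status.SUSPENDED  # suspension criterion
    if status is Status.SUSPENDED:
        # Resumption criterion: resume only once the developer reports a fix.
        return Status.RUNNING if fix_confirmed else Status.SUSPENDED
    if status is Status.RUNNING and result is not None:
        # Approval criterion: actual output must match the expected output.
        return Status.APPROVED if result == expected else Status.FAILED
    return status


if __name__ == "__main__":
    s = next_status(Status.RUNNING, critical_defect=True)
    s = next_status(s, fix_confirmed=True)
    print(next_status(s, result="control panel", expected="control panel"))
```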

3.2. Testing Procedure

3.3. Test Deliverables

A report on the test cases will be attached to the deliverables of the system. It will include the final test summary, the set of test cases, and the formal test history.


3.4. Testing Tasks

The testing tasks are to develop test cases, construct scripts for automated test cases, prepare the database for automated testing, run tests, report bugs, finalize the test report, and manage changes (Hambling, Morgan & British Computer Society, 2010).

3.5. Responsibilities

All developers are responsible for ensuring that component and integration testing has been carried out completely.

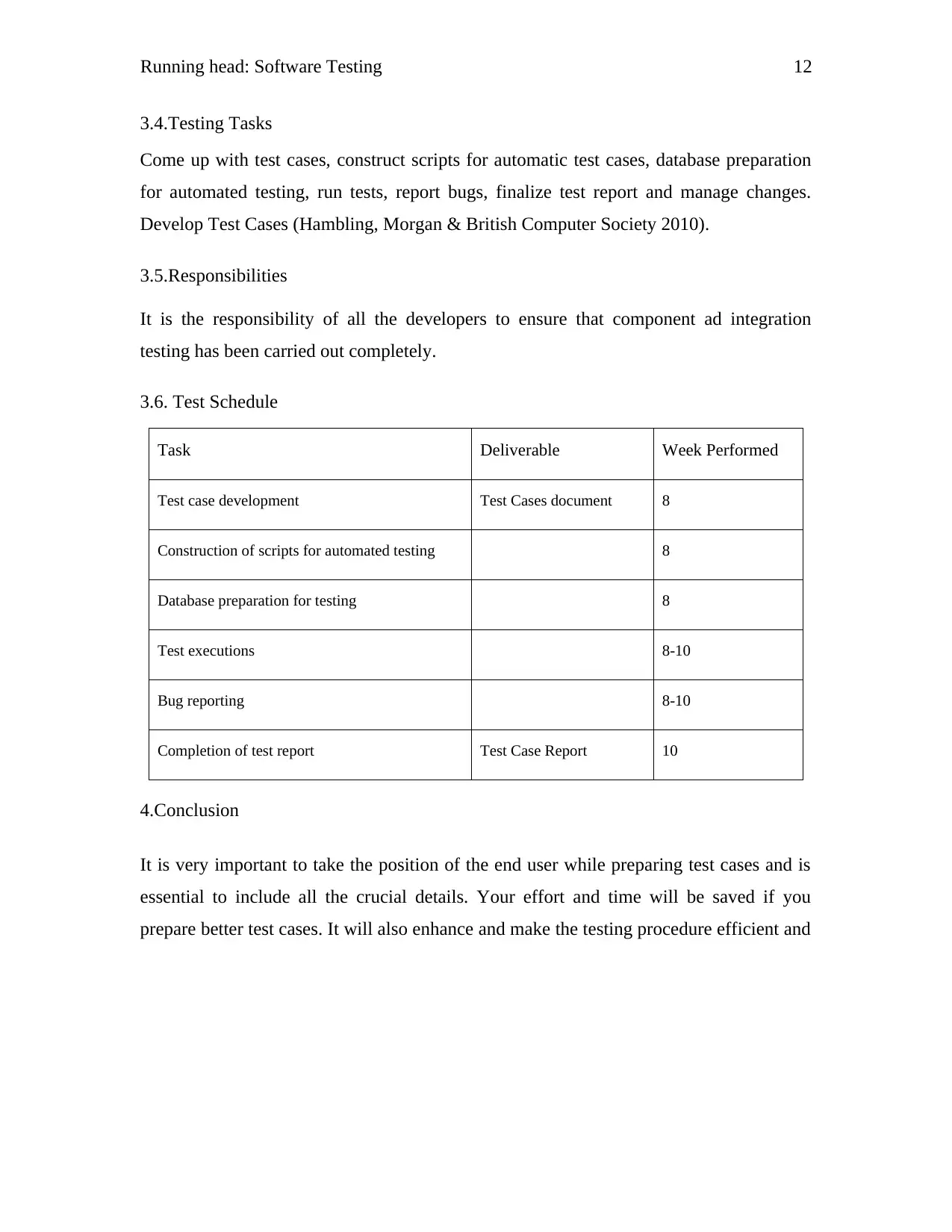

3.6. Test Schedule

| Task | Deliverable | Week Performed |
|---|---|---|
| Test case development | Test Cases document | 8 |
| Construction of scripts for automated testing | | 8 |
| Database preparation for testing | | 8 |
| Test executions | | 8-10 |
| Bug reporting | | 8-10 |
| Completion of test report | Test Case Report | 10 |

4. Conclusion

When preparing test cases, it is very important to take the position of the end user and to include all the crucial details. Preparing better test cases saves time and effort. It will also make the testing procedure more efficient and
