AI-Powered Speech Recognition for Parkinson's Disease Classification

Added on 2022/08/21

Project

AI Summary

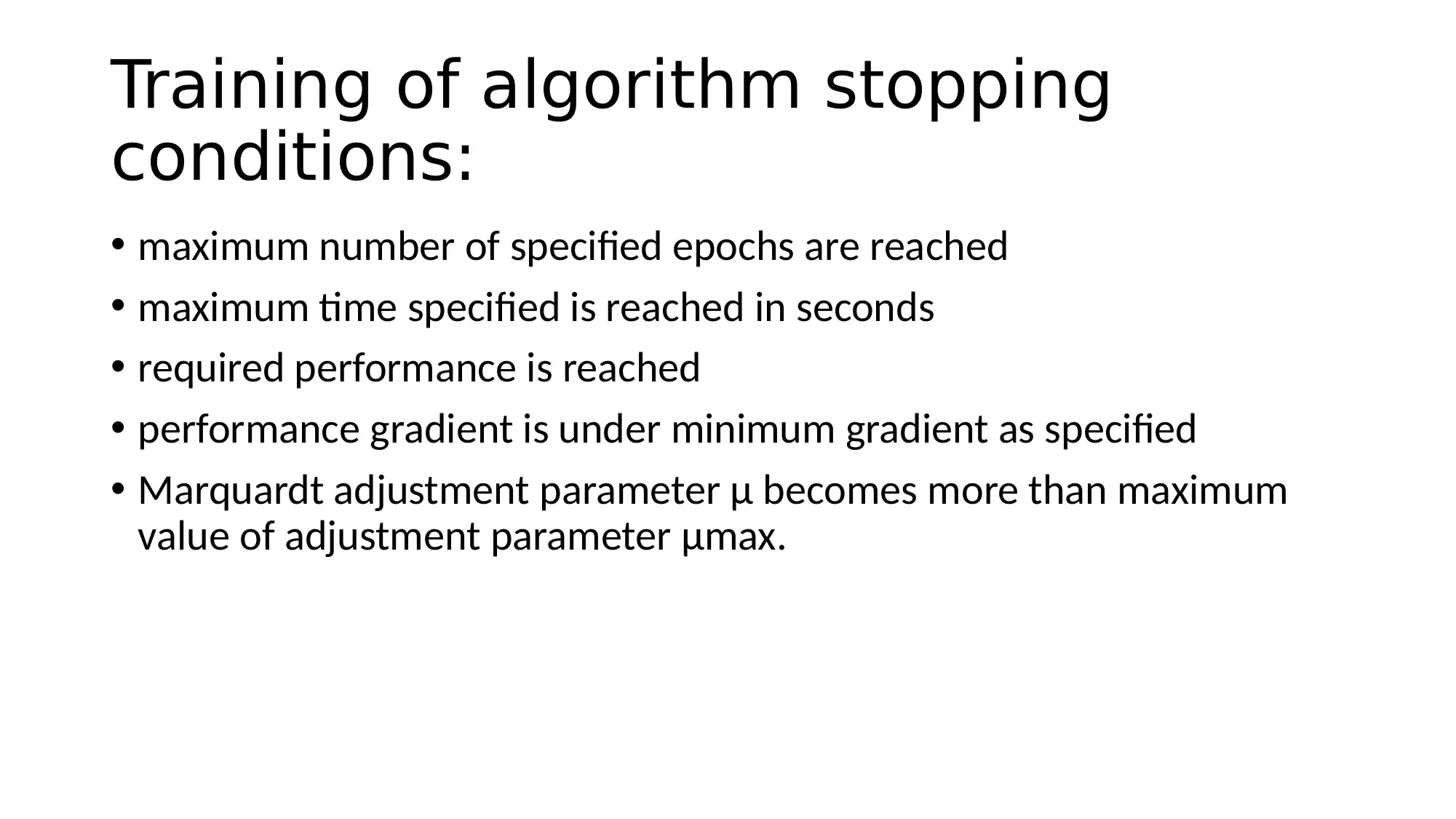

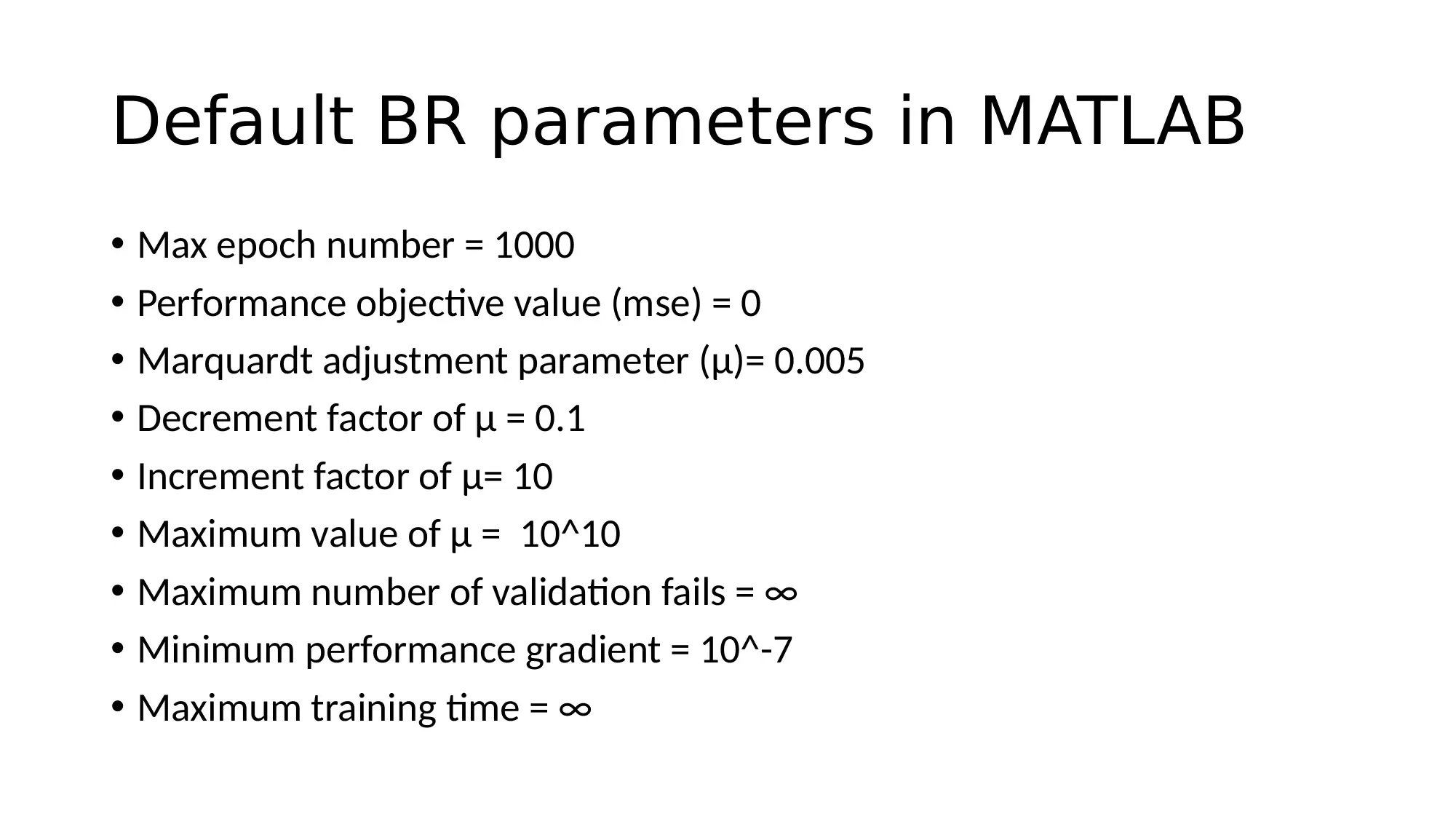

This project presents a speech recognition system that classifies a speaker as either healthy or affected by Parkinson's disease based on voice characteristics. It uses a dataset of speech recordings and a shallow neural network classifier implemented in MATLAB. The methodology consists of loading the dataset, training with several algorithms under varying parameters, and selecting the best performer, which was Bayesian regularization backpropagation. In the experiments, the system classified over 73% of cases correctly, a result the report considers satisfactory. The report also discusses the system's limitations: it cannot handle more than two classes, and it cannot process vocal samples directly without pre-processing. It includes details on the dataset, methodology, experimental results, and MATLAB code, along with plots of training state, error histograms, ROC curves, and confusion matrices. The project concludes that AI algorithms can be applied successfully to identify Parkinson's disease through speech analysis, while acknowledging areas for improvement and future research.
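The selection step described in the summary (compare several training algorithms, keep the one that classifies the most cases correctly) can be illustrated with a small sketch. This is not the report's MATLAB code: it is a Python illustration only, and the function names, the candidate names `algorithm_a` and `algorithm_b`, and the toy labels are all hypothetical values made up for the example. It assumes binary labels where 1 means Parkinson's and 0 means healthy.

```python
from typing import Dict, List, Tuple


def confusion_counts(y_true: List[int], y_pred: List[int]) -> Tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn), assuming 1 = Parkinson's and 0 = healthy."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def accuracy(y_true: List[int], y_pred: List[int]) -> float:
    """Fraction of cases classified correctly (the diagonal of the confusion matrix)."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + tn + fn)


def select_best(predictions: Dict[str, List[int]], y_true: List[int]) -> str:
    """Return the name of the candidate with the highest accuracy."""
    return max(predictions, key=lambda name: accuracy(y_true, predictions[name]))


# Hypothetical toy data, not taken from the report's dataset.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
candidates = {
    "algorithm_a": [1, 0, 1, 0, 1, 0, 1, 0],
    "algorithm_b": [1, 1, 1, 0, 0, 1, 1, 0],
}

best = select_best(candidates, y_true)
print(best, accuracy(y_true, candidates[best]))
```

In practice the comparison would be made on held-out data rather than on the training set, and accuracy alone may be misleading when the two classes are unbalanced, which is one reason the report also includes ROC curves and confusion matrices.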
