Homework 2: Statistical Analysis, Regression, Modeling, and R Code

Added on 2023/06/10

Homework Assignment

AI Summary

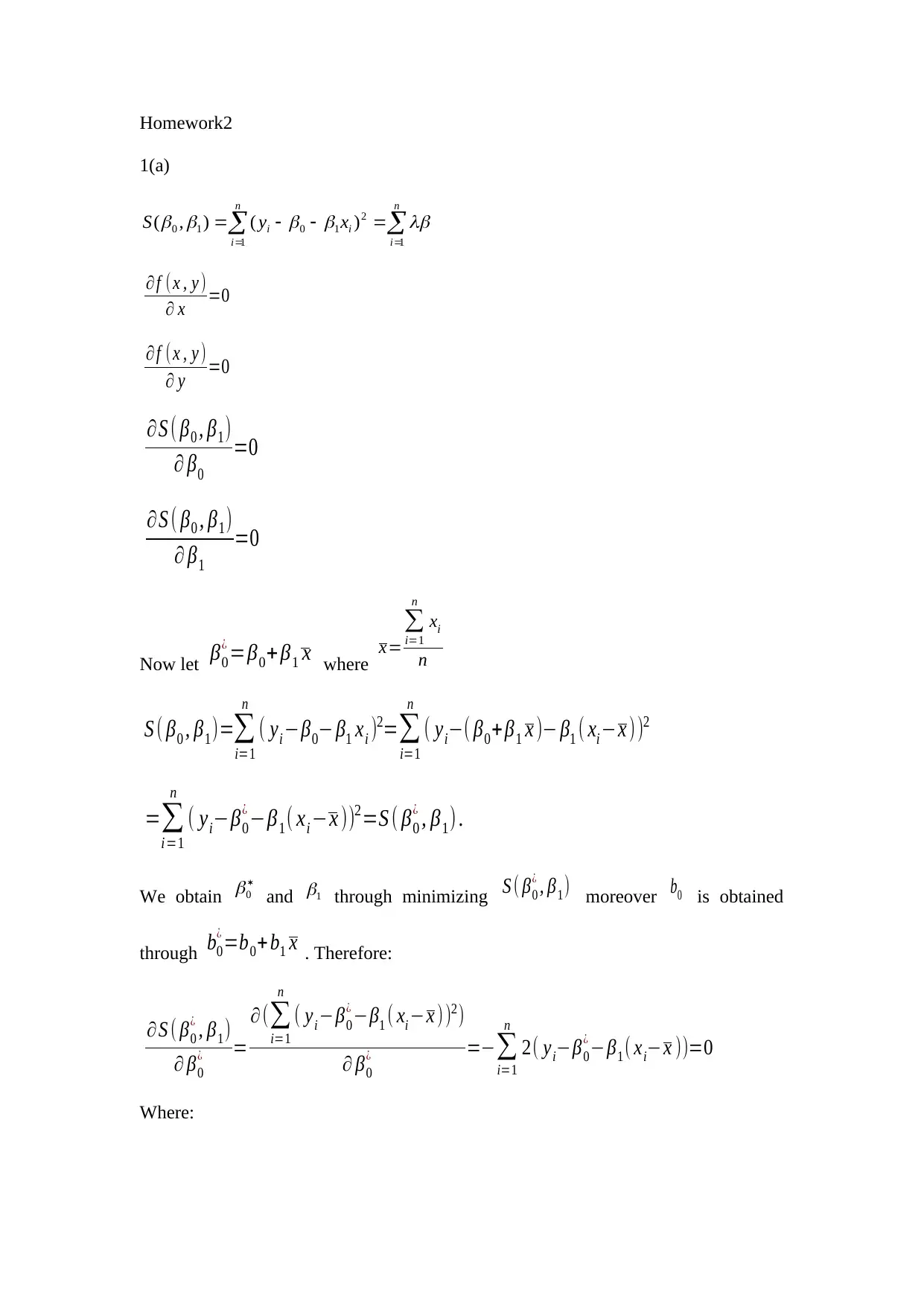

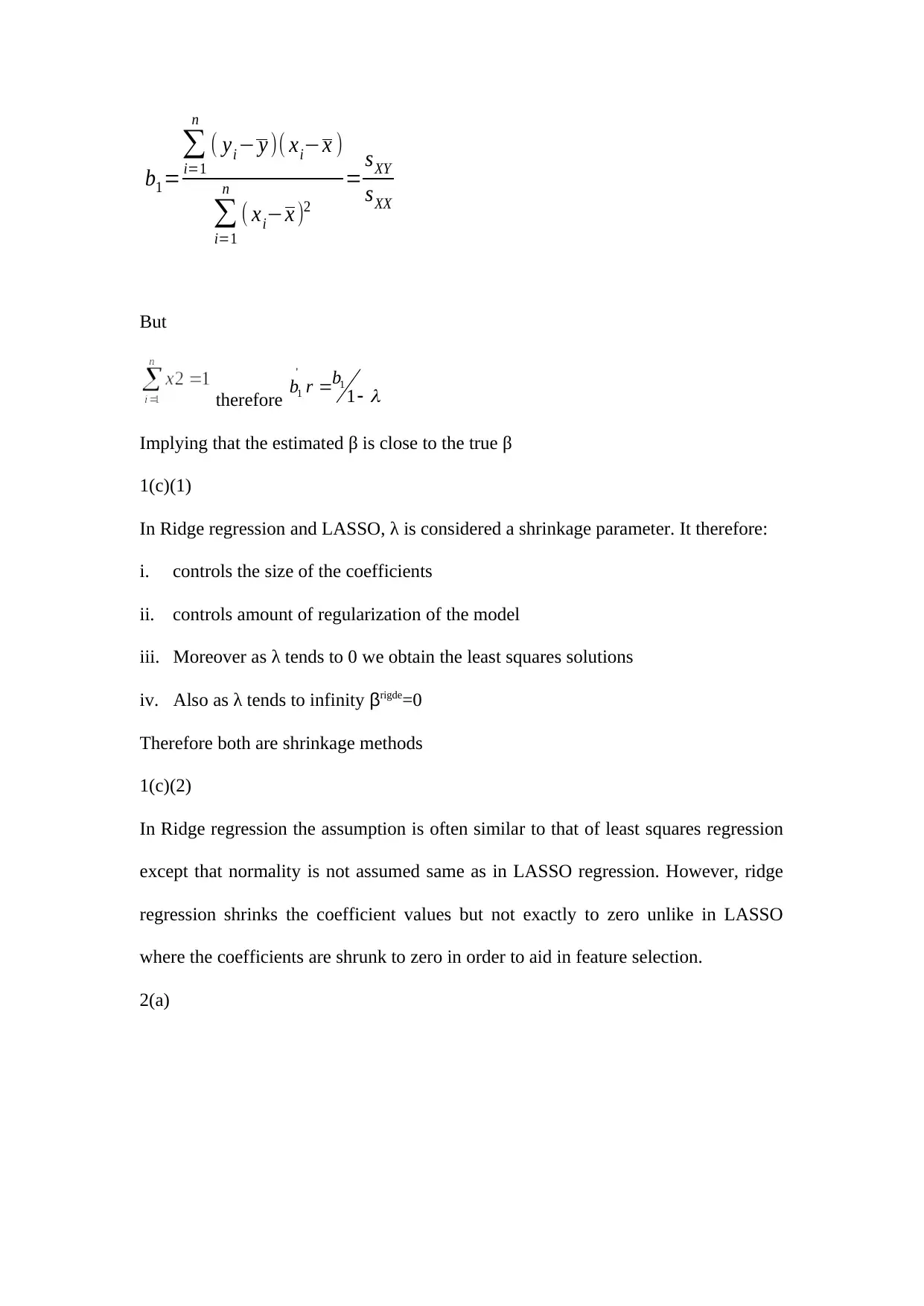

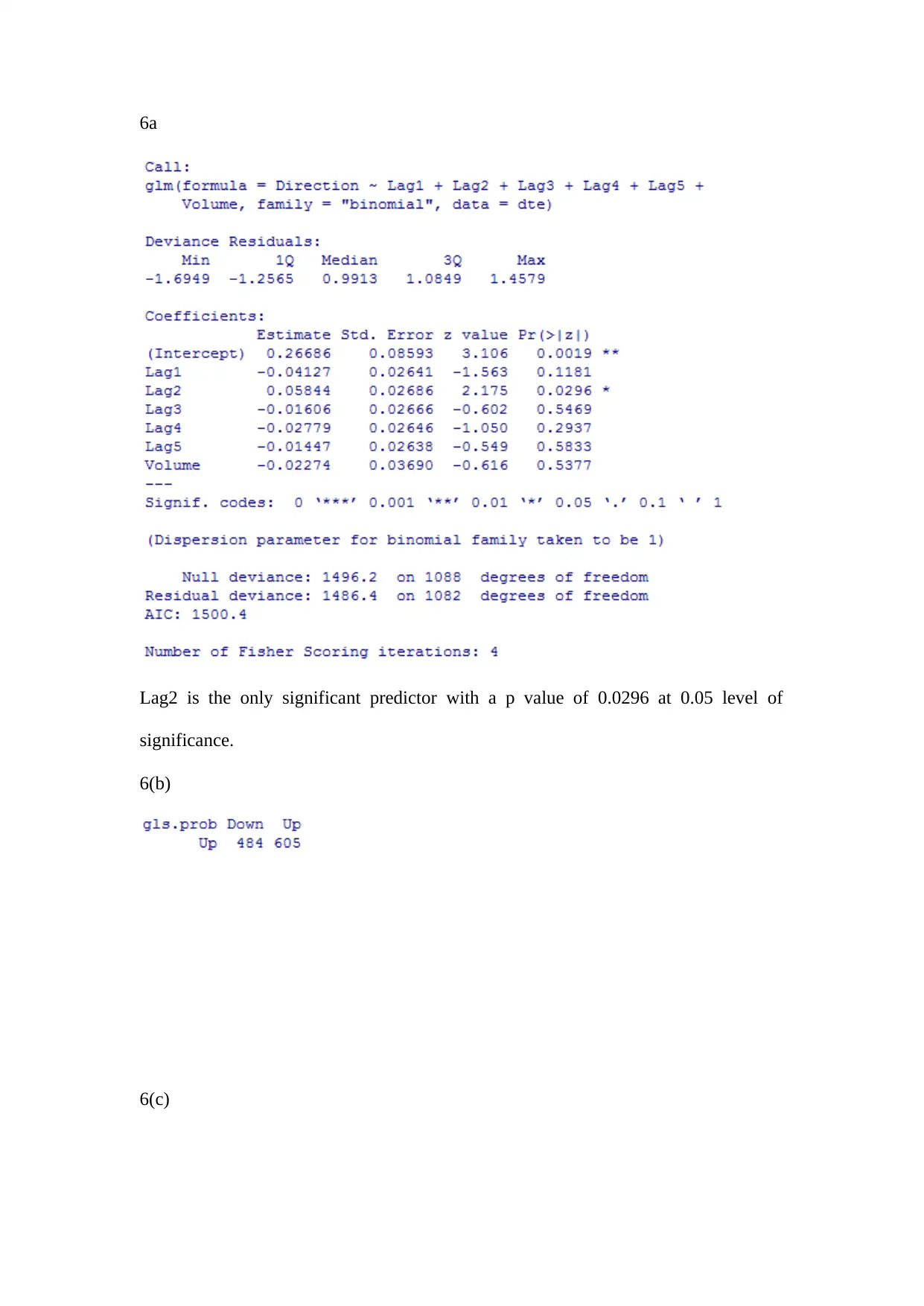

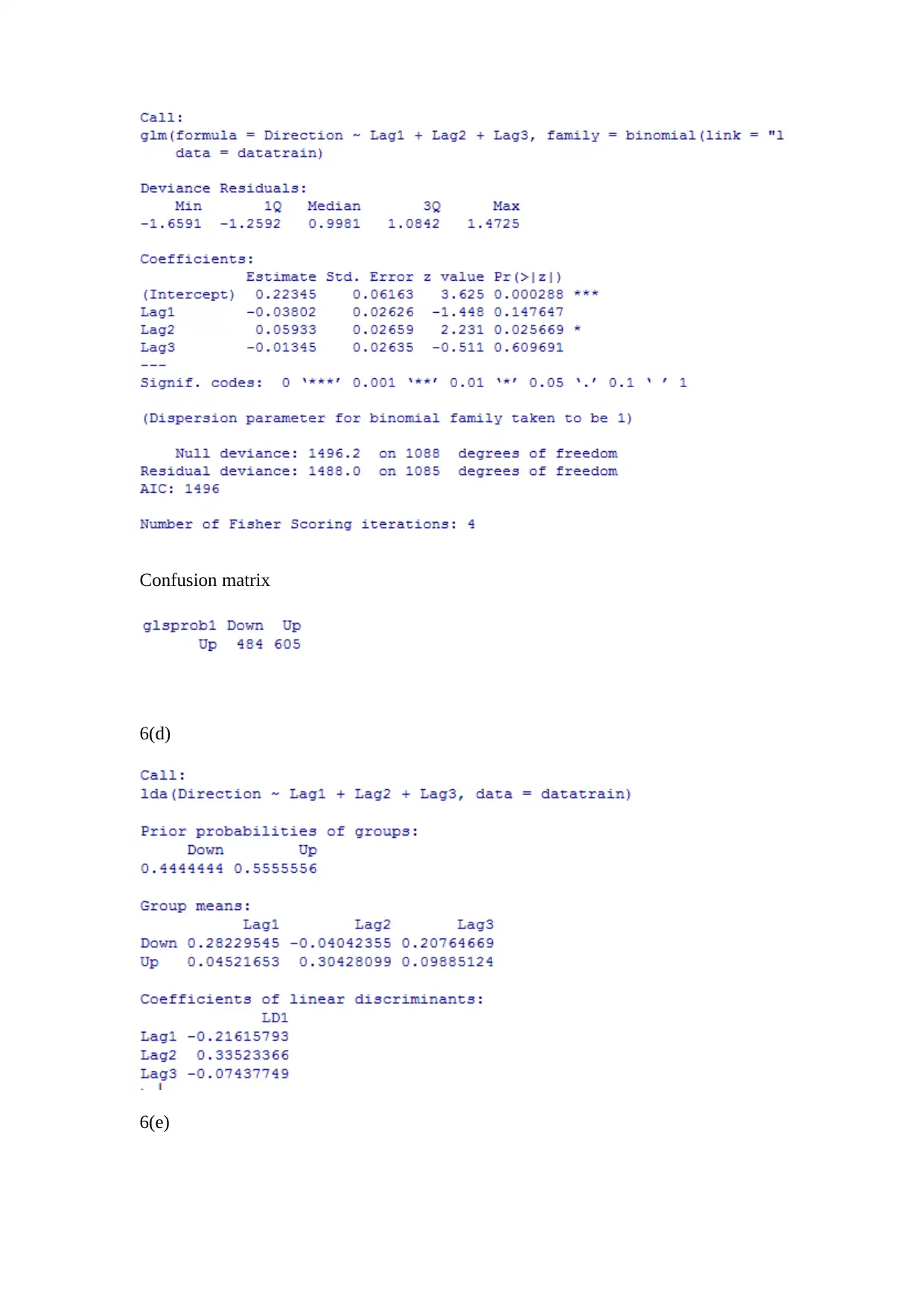

This document contains solutions to a statistics homework assignment on regression analysis and model evaluation. The assignment covers linear regression, ridge regression, and LASSO regression, with an emphasis on how the shrinkage parameter affects coefficient values. The solutions include R code, standard error calculations, and interpretations of model output such as confusion matrices and false negative rates. They also address what happens when particular lines of code are omitted and how the error metrics behave for the provided data and model, and they interpret statistical results such as p-values and significance levels along with the implications of different modeling choices.
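
For orientation, the quantities named in the summary can be written in their standard textbook form. The notation below (response $y_i$, predictors $x_{ij}$, coefficients $\beta_j$, shrinkage parameter $\lambda \ge 0$, and confusion-matrix counts $\mathrm{FN}$ and $\mathrm{TP}$) is assumed for illustration and is not taken from the assignment itself.

```latex
% Ridge regression: squared-error loss plus an L2 penalty on the coefficients
\hat{\beta}^{\text{ridge}} = \arg\min_{\beta}
  \sum_{i=1}^{n} \Bigl( y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j \Bigr)^2
  + \lambda \sum_{j=1}^{p} \beta_j^2

% LASSO: the same loss with an L1 penalty, which can set coefficients exactly to zero
\hat{\beta}^{\text{lasso}} = \arg\min_{\beta}
  \sum_{i=1}^{n} \Bigl( y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j \Bigr)^2
  + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert

% False negative rate from a confusion matrix
\mathrm{FNR} = \frac{\mathrm{FN}}{\mathrm{FN} + \mathrm{TP}}
```

In both penalized fits, $\lambda = 0$ recovers ordinary least squares, and larger values of $\lambda$ shrink the coefficients further toward zero.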
