Statistics 630 - Assignment 8: Probability & Statistics Solutions

VerifiedAdded on 2023/04/20

|8

|1323

|339

Homework Assignment

AI Summary

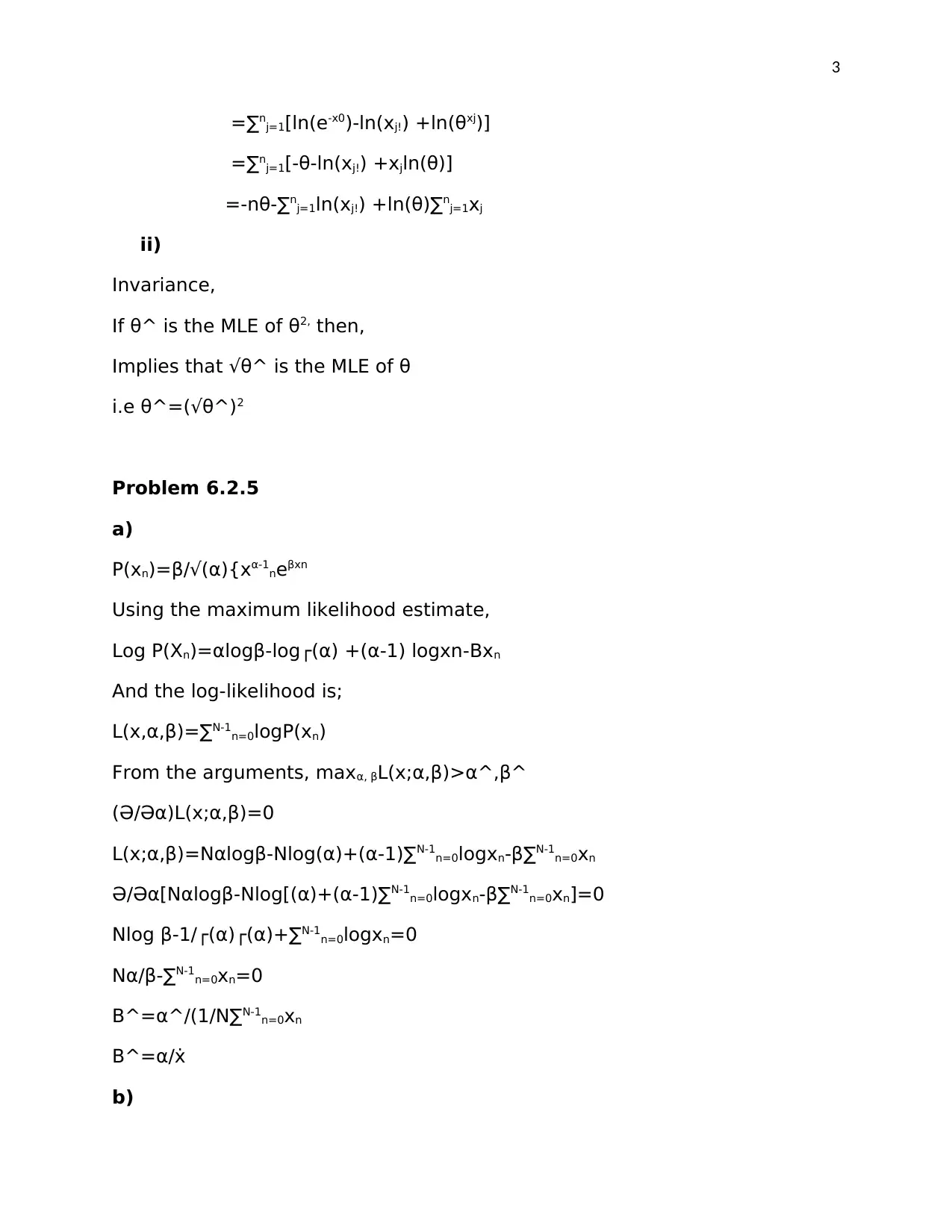

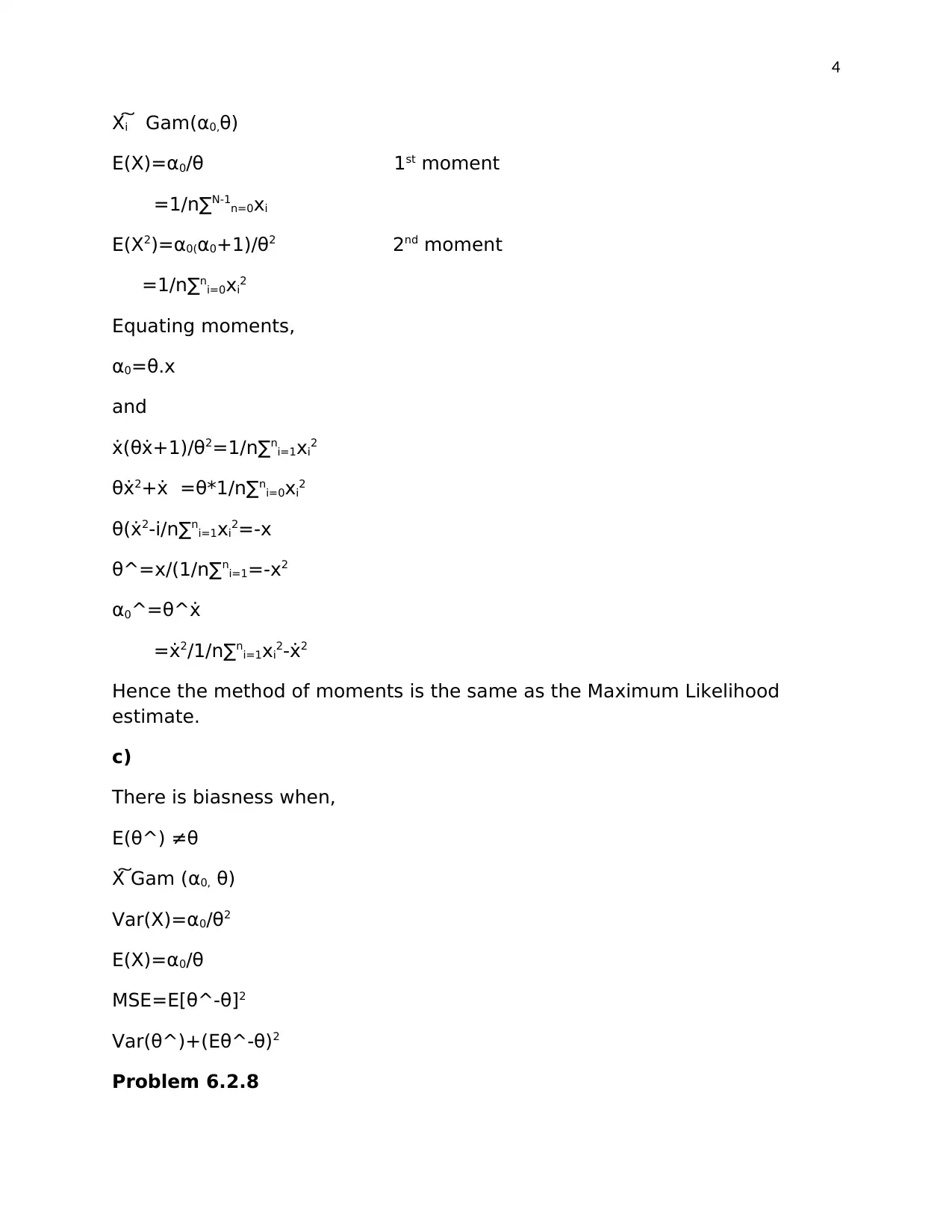

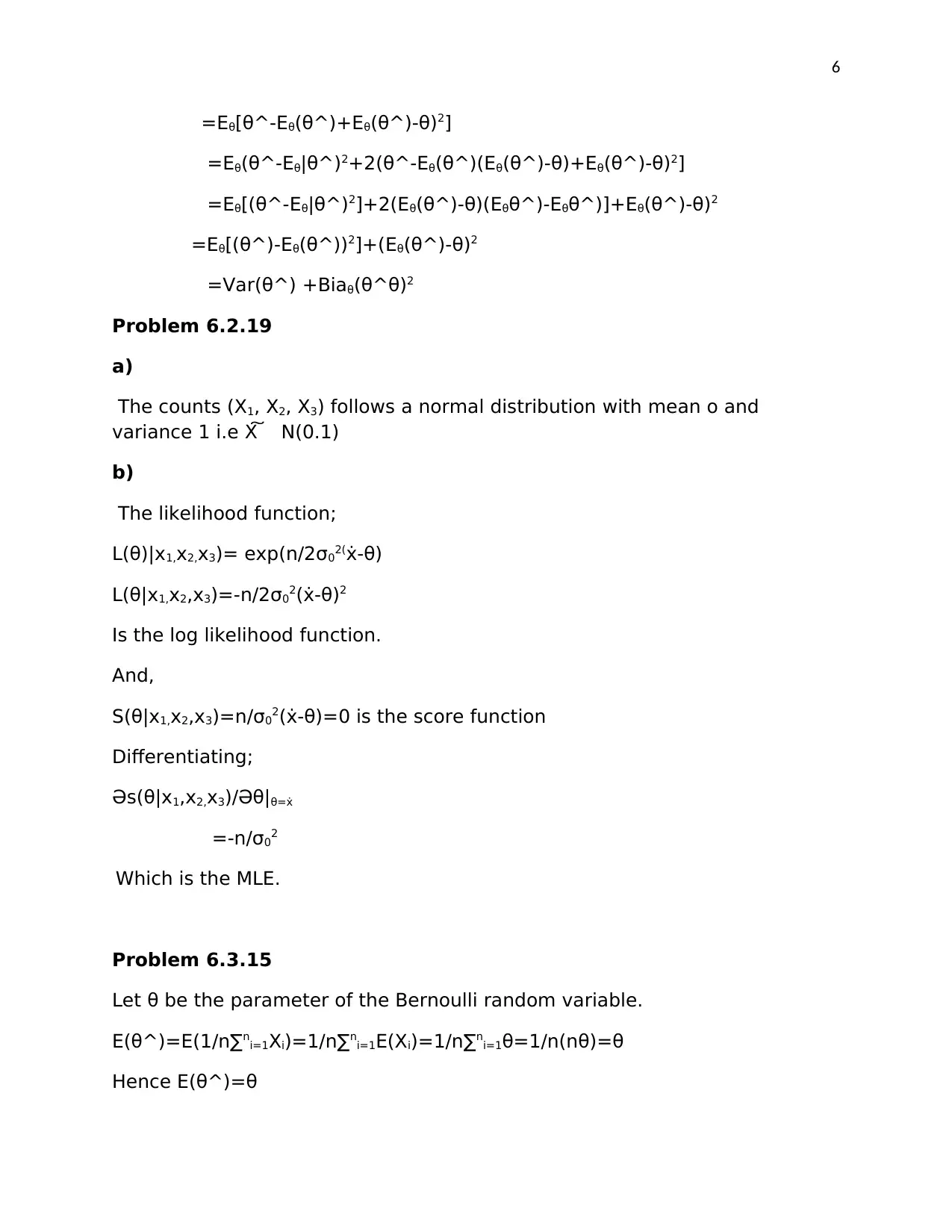

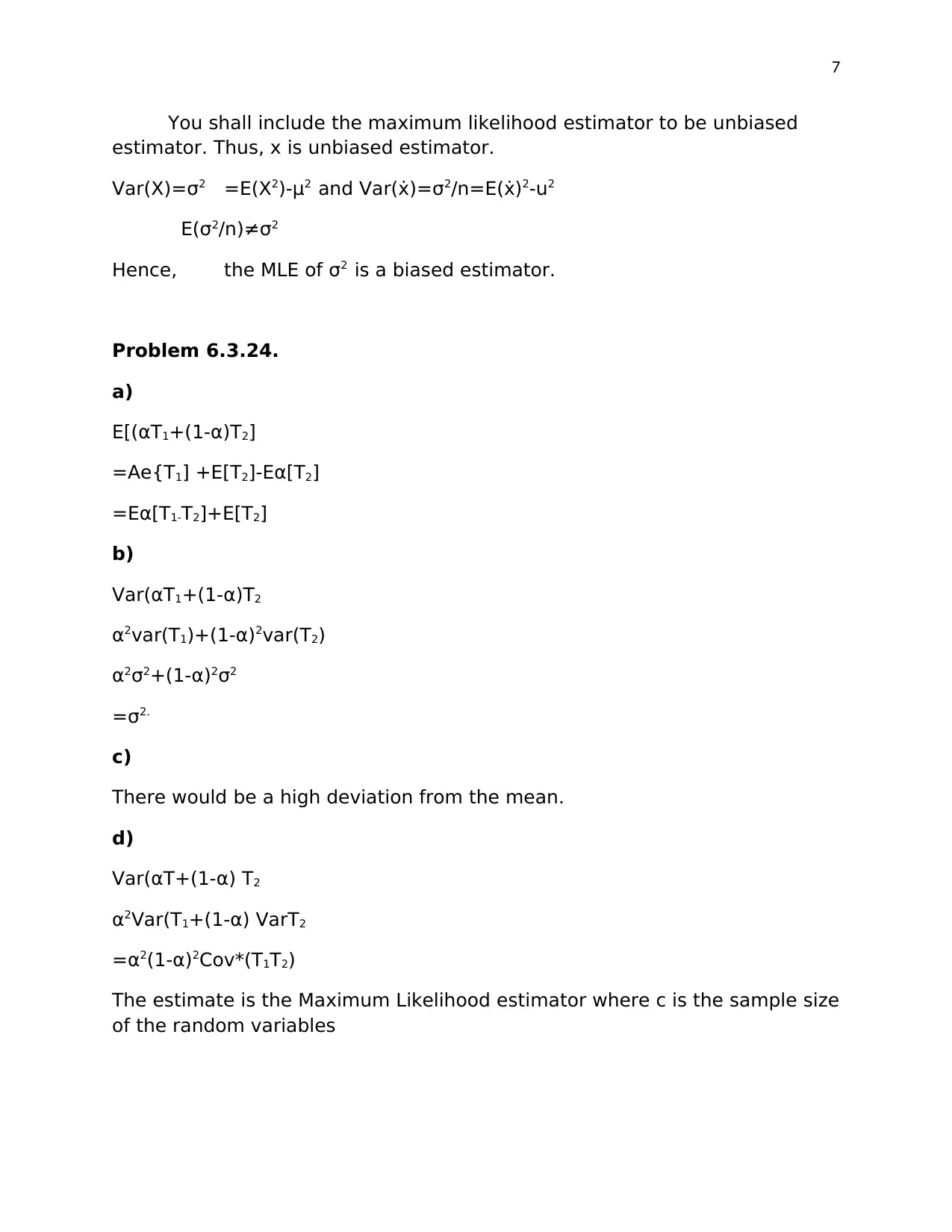

This document presents solutions to a set of probability and statistics problems, likely from a Statistics 630 course. The problems cover topics such as sufficient statistics, minimal sufficient statistics, maximum likelihood estimation (MLE), method of moments estimation, unbiased estimators, and properties of estimators like variance and bias. Specific problems address concepts related to Gamma distributions, Bernoulli random variables, and normal distributions. The solutions involve mathematical derivations, including likelihood functions, log-likelihood functions, score functions, and moment calculations. The document also references a paper on GMM estimators, suggesting a focus on advanced statistical methods. Desklib provides access to a wealth of student-contributed assignments and study resources to aid in understanding complex statistical concepts.

1 out of 8

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)