University Statistics: Bayesian Approach Assignment with R Code

Added on 2023/05/29

Homework Assignment

AI Summary

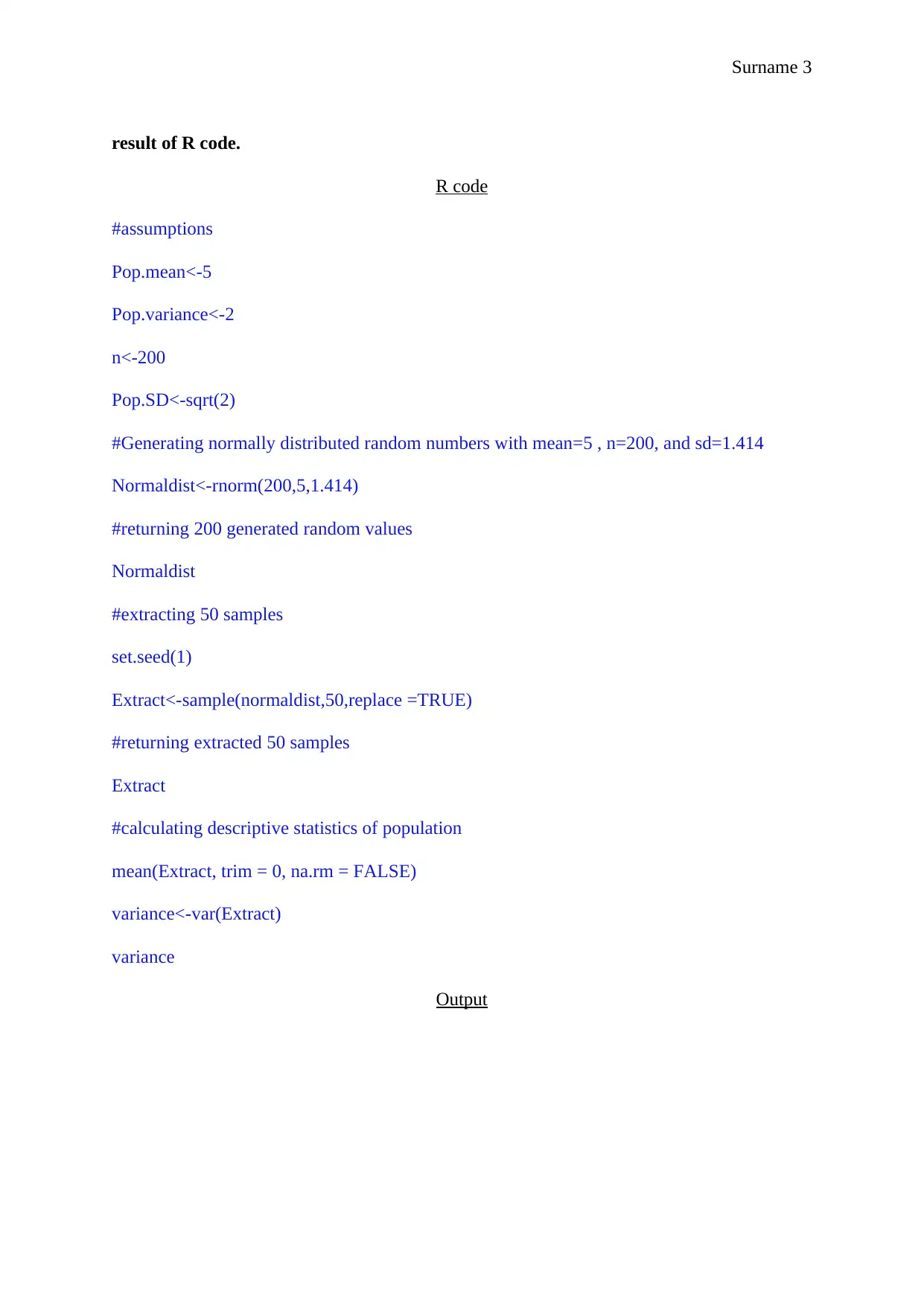

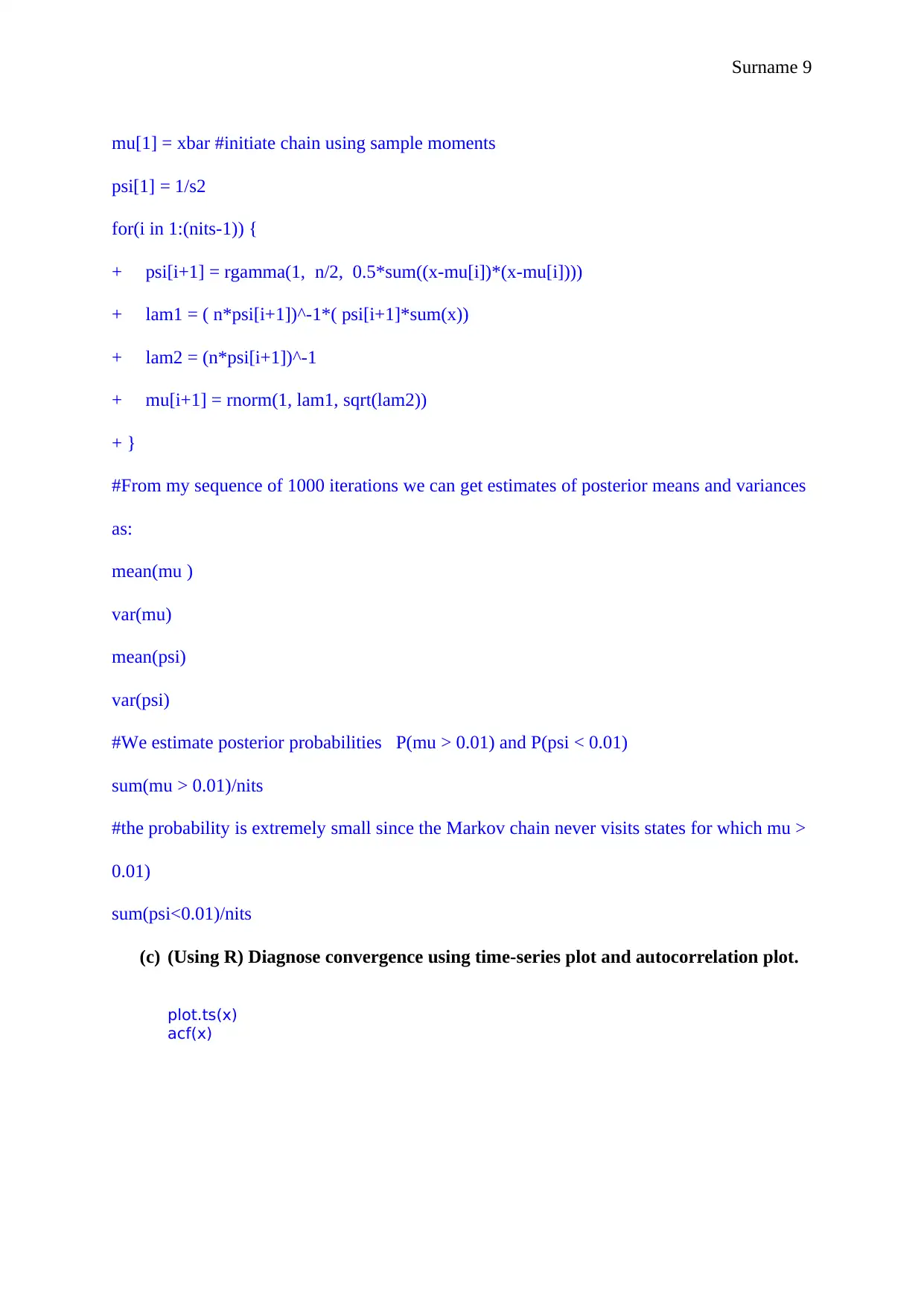

This assignment explores Bayesian statistics using R. It begins by estimating probabilities with plain Monte Carlo and with importance sampling. It then draws and analyzes samples from a normal distribution and compares the results against R implementations. Next, it considers non-informative and semi-conjugate prior distributions, derives the conditional posterior distributions, and implements a Gibbs sampler to draw from the posterior. Finally, it diagnoses the sampler's convergence with time-series (trace) and autocorrelation plots. Throughout, R is used to generate random variables, compute probabilities, and visualize the results.
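The techniques named in this summary can be sketched in R as follows. This is a minimal illustration under stated assumptions, not the student's submitted code. The target probability P(Z > 3), the N(3, 1) importance-sampling proposal, the prior hyperparameters, the simulated data, and the helper name `gibbs_normal` are all hypothetical choices made for the example. The Gibbs step uses the standard semi-conjugate normal model, where mu has a normal prior and the precision 1/sigma^2 has a gamma prior.

```r
set.seed(42)

## Part 1: estimate P(Z > 3) for Z ~ N(0, 1) (hypothetical target)
n_draws <- 1e5
z <- rnorm(n_draws)
p_mc <- mean(z > 3)                        # plain Monte Carlo estimate

# Importance sampling: draw from a proposal g = N(3, 1) centred on the tail,
# then reweight each draw by the density ratio f(x) / g(x).
x <- rnorm(n_draws, mean = 3, sd = 1)
w <- dnorm(x) / dnorm(x, mean = 3, sd = 1)
p_is <- mean((x > 3) * w)

p_exact <- pnorm(3, lower.tail = FALSE)    # reference value

## Part 2: Gibbs sampler for y_i ~ N(mu, sigma^2) with a semi-conjugate prior
## mu ~ N(mu0, tau0_sq), 1/sigma^2 ~ Gamma(nu0/2, rate = nu0 * sigma0_sq / 2)
## (hypothetical helper; hyperparameter defaults are illustrative assumptions)
gibbs_normal <- function(y, n_iter = 5000, mu0 = 0, tau0_sq = 100,
                         nu0 = 1, sigma0_sq = 1) {
  n <- length(y)
  ybar <- mean(y)
  sigma_sq <- var(y)                       # initial value for sigma^2
  out <- matrix(NA_real_, n_iter, 2,
                dimnames = list(NULL, c("mu", "sigma_sq")))
  for (s in seq_len(n_iter)) {
    # mu | sigma^2, y ~ N(mu_n, tau_n_sq)
    tau_n_sq <- 1 / (1 / tau0_sq + n / sigma_sq)
    mu_n <- tau_n_sq * (mu0 / tau0_sq + n * ybar / sigma_sq)
    mu <- rnorm(1, mu_n, sqrt(tau_n_sq))
    # 1/sigma^2 | mu, y ~ Gamma((nu0 + n)/2, rate = (nu0 * sigma0_sq + SSR)/2)
    ssr <- sum((y - mu)^2)
    sigma_sq <- 1 / rgamma(1, shape = (nu0 + n) / 2,
                           rate = (nu0 * sigma0_sq + ssr) / 2)
    out[s, ] <- c(mu, sigma_sq)
  }
  out
}

y <- rnorm(50, mean = 10, sd = 2)          # simulated data (hypothetical)
draws <- gibbs_normal(y)

# Convergence diagnostics; to draw the plots interactively, run:
#   plot(draws[, "mu"], type = "l")        # time-series (trace) plot
#   acf(draws[, "mu"])                     # autocorrelation plot
lag1_acf <- acf(draws[, "mu"], lag.max = 5, plot = FALSE)$acf[2]
```

Importance sampling places most proposal draws in the rare-event region, so `p_is` typically has a much smaller Monte Carlo error than `p_mc` for the same number of draws. In the Gibbs output, a trace that fluctuates around a stable level and a lag-1 autocorrelation near zero are the usual informal signs of good mixing.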
