Probability and Statistics Assignment Solution - University

Added on 2022/09/26


Homework Assignment

AI Summary

This document presents a comprehensive solution to a probability and statistics homework assignment. The solution covers a wide range of topics, including mutually exclusive events, indicator random variables, cumulative distribution functions, probability density functions, geometric and Poisson distributions, and maximum likelihood estimation. The assignment addresses concepts such as independence, joint probability, conditional probability, moment-generating functions, lognormal distributions, and method of moments estimation. The solutions involve detailed calculations and derivations, providing a thorough understanding of the statistical concepts presented in the assignment. The student has provided all the necessary steps to solve the problems. The document is intended to help students understand and solve similar problems.

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

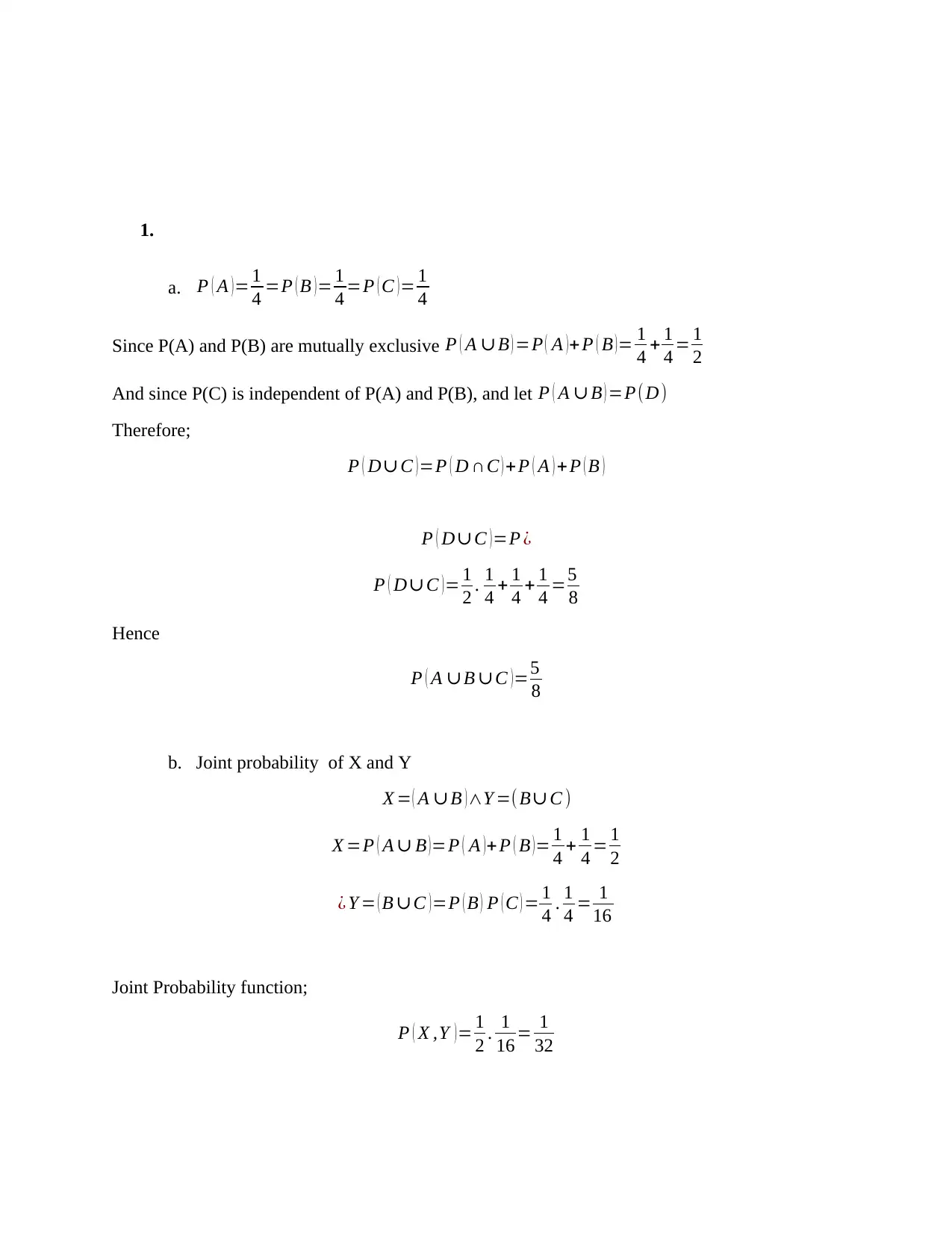

1.

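a. $P(A) = P(B) = P(C) = \tfrac{1}{4}$

Since A and B are mutually exclusive,

$$P(A \cup B) = P(A) + P(B) = \tfrac{1}{4} + \tfrac{1}{4} = \tfrac{1}{2}$$

C is independent of A and of B. Let $D = A \cup B$. Because A and B are disjoint, $P(D \cap C) = P(A \cap C) + P(B \cap C) = P(D)\,P(C)$.

Therefore,

$$P(D \cup C) = P(D) + P(C) - P(D \cap C)$$

$$P(D \cup C) = P(D) + P(C) - P(D)\,P(C)$$

$$P(D \cup C) = \tfrac{1}{2} + \tfrac{1}{4} - \tfrac{1}{2}\cdot\tfrac{1}{4} = \tfrac{5}{8}$$

Hence

$$P(A \cup B \cup C) = \tfrac{5}{8}$$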

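b. Joint probability of X and Y

Take X and Y to be the indicators of the events $A \cup B$ and $B \cup C$.

$$P(X = 1) = P(A \cup B) = P(A) + P(B) = \tfrac{1}{4} + \tfrac{1}{4} = \tfrac{1}{2}$$

B and C are independent rather than mutually exclusive, so

$$P(Y = 1) = P(B \cup C) = P(B) + P(C) - P(B)\,P(C) = \tfrac{1}{4} + \tfrac{1}{4} - \tfrac{1}{16} = \tfrac{7}{16}$$

Joint probability function: both indicators equal 1 on $(A \cup B) \cap (B \cup C) = B \cup (A \cap C)$, and B is disjoint from $A \cap C$, so

$$P(X = 1, Y = 1) = P(B) + P(A)\,P(C) = \tfrac{1}{4} + \tfrac{1}{16} = \tfrac{5}{16}$$

The remaining cells follow from the marginals: $P(X = 1, Y = 0) = \tfrac{1}{2} - \tfrac{5}{16} = \tfrac{3}{16}$, $P(X = 0, Y = 1) = \tfrac{7}{16} - \tfrac{5}{16} = \tfrac{1}{8}$, and $P(X = 0, Y = 0) = \tfrac{3}{8}$.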


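c. Independence of X and Y

X and Y are not independent. Both $A \cup B$ and $B \cup C$ contain B, so $P(X = 1, Y = 1) = P(B) + P(A \cap C) = \tfrac{5}{16}$, whereas $P(X = 1)\,P(Y = 1) = \tfrac{1}{2}\cdot\tfrac{7}{16} = \tfrac{7}{32}$. Independence would require these two values to be equal.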
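As a sanity check on Problem 1, the sketch below enumerates a small sample space and computes the probabilities exactly. The 4-by-4 grid of equally likely outcomes and the particular sets chosen for A, B and C are assumptions made only so that the stated conditions hold (A and B disjoint, C independent of each, all with probability 1/4); X and Y are taken to be the indicators of A ∪ B and B ∪ C.

```python
from fractions import Fraction
from itertools import product

# Hypothetical sample space: 16 equally likely pairs (i, j) with i, j in 0..3.
OMEGA = list(product(range(4), repeat=2))
A = {w for w in OMEGA if w[0] == 0}  # P(A) = 1/4
B = {w for w in OMEGA if w[0] == 1}  # P(B) = 1/4, disjoint from A
C = {w for w in OMEGA if w[1] == 0}  # P(C) = 1/4, independent of A and of B


def prob(event):
    """Probability of an event under the uniform measure on OMEGA."""
    return Fraction(len(event), len(OMEGA))


def joint_pmf():
    """Joint pmf of the indicators X = 1[A or B] and Y = 1[B or C]."""
    pmf = {}
    for w in OMEGA:
        key = (int(w in (A | B)), int(w in (B | C)))
        pmf[key] = pmf.get(key, Fraction(0)) + Fraction(1, len(OMEGA))
    return pmf


def indicators_independent():
    """True only if every joint cell equals the product of its marginals."""
    pmf = joint_pmf()
    px = {x: sum(v for (a, _), v in pmf.items() if a == x) for x in (0, 1)}
    py = {y: sum(v for (_, b), v in pmf.items() if b == y) for y in (0, 1)}
    return all(pmf.get((x, y), Fraction(0)) == px[x] * py[y]
               for x in (0, 1) for y in (0, 1))


if __name__ == "__main__":
    print("P(A or B or C) =", prob(A | B | C))
    print("joint pmf      =", joint_pmf())
    print("independent?   =", indicators_independent())
```

Exact fractions are used instead of floats so that the comparison of the joint cell with the product of the marginals is not affected by rounding.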

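2. CDF

$$F(x) = \begin{cases} c_1 e^{x} & \text{if } x < 0 \\ c_2 & \text{if } 0 \le x < 1 \\ c_3 - e^{-x} & \text{otherwise} \end{cases}$$

a.

A CDF must tend to 1 as $x \to \infty$, so $\lim_{x \to \infty} (c_3 - e^{-x}) = c_3 = 1$. It must also be non-decreasing with values in $[0, 1]$, which constrains the other two constants.

→ $c_3 = 1$

→ $0 \le c_1 \le c_2 \le 1 - e^{-1}$

b. $P(-1 < X \le \tfrac{1}{2})$

The probability of a half-open interval is the difference of the CDF at its endpoints:

$$P(-1 < X \le \tfrac{1}{2}) = F(\tfrac{1}{2}) - F(-1) = c_2 - c_1 e^{-1}$$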


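c. $P(X = 1)$

The probability of a single point is the size of the jump of the CDF at that point:

$$P(X = 1) = F(1) - \lim_{x \to 1^-} F(x) = (c_3 - e^{-1}) - c_2$$

With $c_3 = 1$ (forced by $F(x) \to 1$ as $x \to \infty$) this is $1 - e^{-1} - c_2$.

d. $E[\min(X, 1)]$

With F as defined above, $\min(X, 1) = X$ when $X < 1$ and $\min(X, 1) = 1$ when $X \ge 1$, so

$$E[\min(X, 1)] = \int_{-\infty}^{0} x\, c_1 e^{x}\, dx + 0 \cdot P(X = 0) + 1 \cdot P(X \ge 1)$$

F is constant on $(0, 1)$, so X has no mass there. Using $\int_{-\infty}^{0} x e^{x}\, dx = -1$ and $P(X \ge 1) = 1 - \lim_{x \to 1^-} F(x) = 1 - c_2$,

$$E[\min(X, 1)] = 1 - c_1 - c_2$$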


3.

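a. PDF

$$f(x) = k e^{-2x}, \quad x > 0$$

$$1 = \int_{0}^{\infty} k e^{-2x}\, dx$$

$$1 = \left[ -\tfrac{k}{2} e^{-2x} \right]_{0}^{\infty} = \tfrac{k}{2}$$

Thus $k = 2$

b. Probability

$$P(2 < X < 5) = \int_{2}^{5} 2 e^{-2x}\, dx$$

$$= \left[ -e^{-2x} \right]_{2}^{5}$$

$$= e^{-2 \times 2} - e^{-2 \times 5}$$

$$\approx 0.0183$$

c. PDF

$Y = \sqrt{X}$

$$F_Y(y) = P(Y \le y) = P(\sqrt{X} \le y) = P(X \le y^2) = F_X(y^2) \quad \text{for } y > 0$$

The derivative of the above equation will be: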


$$f_Y(y) = \frac{d}{dy} F_Y(y) = \frac{d}{dy} F_X(y^2) = f_X(y^2) \cdot 2y$$

With $f_X(x) = 2 e^{-2x}$ this gives $f_Y(y) = 4y\, e^{-2y^2}$ for $y > 0$.
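The three parts of Problem 3 can be checked numerically. The sketch below is only an illustration: the midpoint-rule helper `integrate`, the truncated upper limits, and the function names are assumptions, and `k = 2` is used as the value for which $k e^{-2x}$ integrates to one on $x > 0$.

```python
import math


def integrate(f, a, b, n=100_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))


def f_x(x, k=2.0):
    """Density k * exp(-2x) on x > 0."""
    return k * math.exp(-2.0 * x)


def f_y(y):
    """Density of Y = sqrt(X), obtained as 2y * f_X(y^2)."""
    return 2.0 * y * f_x(y * y)


if __name__ == "__main__":
    # The infinite upper limits are truncated where the tails are negligible.
    print("total mass of f_X:", integrate(f_x, 0.0, 40.0))
    print("P(2 < X < 5):     ", integrate(f_x, 2.0, 5.0))
    print("total mass of f_Y:", integrate(f_y, 0.0, 10.0))
```

If the transformed density were missing the chain-rule factor $2y$, its total mass would no longer come out as one, which makes this a quick way to catch that kind of slip.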

4.

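a. i.i.d random variables

$Y = \sum_i X_i$

Each $X_i$ is geometric with $P(X = k) = p\, q^{k}$ for $k = 0, 1, 2, \dots$, where $q = 1 - p$. By linearity $E(Y) = \sum_i E(X_i)$, so it suffices to find the moments of a single term.

$$E(X) = \sum_{k \ge 0} k\, P(X = k) = \sum_{k \ge 1} k\, p\, q^{k}$$

$$= pq \sum_{k \ge 1} k\, q^{k-1}$$

$$= pq \cdot \frac{1}{(1 - q)^2} = \frac{pq}{p^2}$$

$$= \frac{q}{p}$$

$$Var(X) = E(X^2) - [E(X)]^2$$

$$= E[X(X - 1)] + E(X) - [E(X)]^2$$

$$= \frac{2q^2}{p^2} + \frac{q}{p} - \frac{q^2}{p^2}$$

$$= \frac{q^2 + pq}{p^2} = \frac{q(q + p)}{p^2} = \frac{q}{p^2}$$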
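A quick numerical check of the geometric mean and variance is sketched below. It assumes the pmf $P(X = k) = p\,q^k$ on $k = 0, 1, 2, \dots$ with $q = 1 - p$; the function name and the truncation point of the series are illustrative choices.

```python
def geometric_moments(p, terms=2_000):
    """Mean and variance of P(X = k) = p * q**k, k = 0, 1, 2, ..., by truncated sums."""
    q = 1.0 - p
    mean = sum(k * p * q**k for k in range(terms))
    second_moment = sum(k * k * p * q**k for k in range(terms))
    return mean, second_moment - mean**2


if __name__ == "__main__":
    p = 0.3
    mean, var = geometric_moments(p)
    print(mean, (1 - p) / p)       # truncated sum vs q / p
    print(var, (1 - p) / p**2)     # truncated sum vs q / p^2
```

The truncation is harmless for moderate p because the terms decay geometrically; for p very close to 0 more terms would be needed.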


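b. P(Y=0)

Taking $P(Y = k) = p\, q^{k}$ for $k = 0, 1, 2, \dots$ with $q = 1 - p$,

$$P(Y = 0) = 1 - P(Y \ge 1)$$

$$= 1 - \sum_{k \ge 1} p\, q^{k}$$

$$= 1 - q = p$$

c. Cov(X, N)

$$Cov(X, N) = E[XN] - E(X)\,E(N)$$

Since X is a random variable while N is a fixed count (the number of variables in the data set) rather than a random quantity, $E[XN] = N\,E(X)$ and $E(N) = N$, so there is no covariance between X and N: $Cov(X, N) = 0$.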

5. Joint probability density function

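$$f(x, y) = e^{-y}, \quad 0 < x < y < \infty$$

The support is taken to be $0 < x < y$, the region on which $e^{-y}$ integrates to 1.

a.

$$P(Y \ge 2X) = \iint_{y \ge 2x} f_{XY}(x, y)\, dy\, dx$$

$$= \int_{0}^{\infty} \int_{2x}^{\infty} e^{-y}\, dy\, dx$$

$$= \int_{0}^{\infty} \left[ -e^{-y} \right]_{2x}^{\infty} dx$$

$$= \int_{0}^{\infty} e^{-2x}\, dx$$

$$= \left[ -\tfrac{1}{2} e^{-2x} \right]_{0}^{\infty}$$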


$$= \tfrac{1}{2}$$
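The value of $P(Y \ge 2X)$ can be checked by simulation. The sketch below assumes the support $0 < x < y$ for the density $e^{-y}$ and uses the fact that X ~ Exp(1) together with Y = X + E, E ~ Exp(1) independent of X, has exactly that joint density. The function names, sample size and seed are illustrative.

```python
import random


def sample_xy(rng):
    """Draw (X, Y) with joint density exp(-y) on 0 < x < y (assumed support).

    X ~ Exp(1) and Y = X + E, with E ~ Exp(1) independent of X, has density
    exp(-x) * exp(-(y - x)) = exp(-y) on that region.
    """
    x = rng.expovariate(1.0)
    return x, x + rng.expovariate(1.0)


def estimate_p_y_ge_2x(n=100_000, seed=0):
    """Monte Carlo estimate of P(Y >= 2X)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        x, y = sample_xy(rng)
        if y >= 2.0 * x:
            hits += 1
    return hits / n


if __name__ == "__main__":
    print(estimate_p_y_ge_2x())
```

A probability estimate from this routine must lie in [0, 1], which is also a useful guard against an analytic answer that falls outside that range.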

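b. PDF of U

Here we need two random variables U and W, where we define W = X.

Thus the transformation g is given by

$$g: \begin{cases} U = X + Y \\ W = X \end{cases}$$

Inverting the transformation, we get

$$g^{-1}: \begin{cases} X = W \\ Y = U - W \end{cases}$$

Using the Jacobian, we get

$$|J| = \left| \det \begin{bmatrix} 0 & 1 \\ 1 & -1 \end{bmatrix} \right| = |-1| = 1$$

Thus,

$$f_{UW}(u, w) = f_{XY}(w, u - w)\,|J| = e^{-(u - w)}$$

On the support $0 < x < y$ this holds for $0 < w < u/2$, and integrating out w gives the density of U:

$$f_U(u) = \int_{0}^{u/2} e^{-(u - w)}\, dw = e^{-u/2} - e^{-u}, \quad u > 0$$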

c. Correlation between X and Y

$$\rho_{XY} = \frac{\sigma_{XY}}{\sqrt{Var(X)\, Var(Y)}}$$
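To turn the correlation formula into a number, the sketch below evaluates the required moments in closed form. It assumes the support $0 < x < y$ for the density $e^{-y}$, which is not stated explicitly in the solution; the helper `moment` and its gamma-function shortcut are illustrative.

```python
import math


def moment(a, b):
    """E[X**a * Y**b] for the density exp(-y) on 0 < x < y (assumed support).

    Integrating x**a over (0, y) gives y**(a + 1) / (a + 1); the remaining
    integral of y**(a + b + 1) * exp(-y) over (0, inf) equals Gamma(a + b + 2).
    """
    return math.gamma(a + b + 2) / (a + 1)


def correlation():
    """Correlation of X and Y computed from first and second moments."""
    ex, ey = moment(1, 0), moment(0, 1)
    cov = moment(1, 1) - ex * ey
    var_x = moment(2, 0) - ex**2
    var_y = moment(0, 2) - ey**2
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    print("E[X], E[Y] =", moment(1, 0), moment(0, 1))
    print("rho        =", correlation())
```

Under this assumed support the moments are E[X] = 1, E[Y] = 2, E[XY] = 3, Var(X) = 1 and Var(Y) = 2, so the correlation is 1/√2.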

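d. Conditional probability density

$$f(x, y) = e^{-y}, \quad 0 < x < y$$

$$f(x \mid y) = \frac{f(x, y)}{f_Y(y)}, \quad \text{given } Y = y$$

The marginal density of Y is $f_Y(y) = \int_{0}^{y} e^{-y}\, dx = y\, e^{-y}$, so

$$f(x \mid y) = \frac{e^{-y}}{y\, e^{-y}} = \frac{1}{y}, \quad 0 < x < y$$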

6.

a. Moment Generating functions


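$$f_X(x) = \lambda e^{-\lambda x}, \quad x \ge 0$$

$$M_X(t) = E(e^{tX})$$

$$E(e^{tX}) = \int_{0}^{\infty} e^{tx} f_X(x)\, dx$$

$$= \int_{0}^{\infty} e^{tx} \lambda e^{-\lambda x}\, dx$$

$$= \int_{0}^{\infty} \lambda e^{x(t - \lambda)}\, dx$$

$$= \lambda \left[ \frac{e^{x(t - \lambda)}}{t - \lambda} \right]_{0}^{\infty}$$

$$= \lambda \left[ 0 - \frac{1}{t - \lambda} \right] \quad (t < \lambda)$$

$$= \frac{\lambda}{\lambda - t}$$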
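The moment generating function can be compared against a direct numerical integration. In the sketch below the function names, the truncated upper limit and the step count are assumptions; the closed form is $\lambda / (\lambda - t)$, which is finite only for $t < \lambda$.

```python
import math


def mgf_numeric(t, lam, upper=200.0, n=200_000):
    """Midpoint-rule estimate of E[exp(tX)] for X ~ Exponential(lam), t < lam."""
    h = upper / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * h
        total += lam * math.exp((t - lam) * x)
    return total * h


def mgf_closed_form(t, lam):
    """lam / (lam - t); the integral diverges for t >= lam."""
    if t >= lam:
        raise ValueError("the MGF is finite only for t < lam")
    return lam / (lam - t)


if __name__ == "__main__":
    print(mgf_numeric(0.5, 2.0), mgf_closed_form(0.5, 2.0))
```

Note that M(0) must equal 1 for any distribution, which gives a quick check on the sign of the denominator.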

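b. Probability density function

$$F_X(x) = \begin{cases} 1 - e^{-\lambda x} & \text{if } x \ge 0 \\ 0 & \text{if } x < 0 \end{cases}$$

$$f_X(x) = F_X'(x) = \lambda e^{-\lambda x}, \quad x > 0$$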

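c. Maximum likelihood estimation

$$f_X(x) = \lambda e^{-\lambda x}$$

$$f(x_1, x_2, \dots, x_n \mid \lambda) = \prod_{i=1}^{n} \lambda e^{-\lambda x_i}$$

$$L(x_1, x_2, \dots, x_n \mid \lambda) = \lambda^{n} e^{-\lambda \sum_{i=1}^{n} x_i}$$

Taking the log,

$$\log L(x_1, x_2, \dots, x_n \mid \lambda) = \log\left( \lambda^{n} e^{-\lambda \sum_{i=1}^{n} x_i} \right)$$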


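$$\log L(x_1, x_2, \dots, x_n \mid \lambda) = n \log \lambda - \lambda \sum_{i=1}^{n} x_i$$

Differentiating with respect to λ and setting the derivative to zero,

$$\frac{d}{d\lambda}\left( n \log \lambda - \lambda \sum_{i=1}^{n} x_i \right) = \frac{n}{\lambda} - \sum_{i=1}^{n} x_i = 0$$

Therefore,

$$\hat{\lambda} = \frac{n}{\sum_{i=1}^{n} x_i}$$

$\hat{\lambda}$ is therefore the maximum likelihood estimator of λ.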
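In code, the estimator is the reciprocal of the sample mean. The sketch below is a minimal illustration; the function name and the input checks are assumptions added for the example.

```python
def exponential_mle(samples):
    """MLE of the exponential rate: n / sum(x_i), the reciprocal of the sample mean."""
    if not samples or any(x < 0 for x in samples):
        raise ValueError("need a non-empty sample of non-negative values")
    total = sum(samples)
    if total == 0:
        raise ValueError("the estimate is undefined when every observation is 0")
    return len(samples) / total


if __name__ == "__main__":
    print(exponential_mle([1.0, 2.0, 3.0]))  # n = 3, sum = 6
```

The log-likelihood is concave in λ (its second derivative is $-n/\lambda^2$), so the stationary point is a maximum.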

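d. Normalizing constants

The density itself needs no extra constant, since it already integrates to 1:

$$\int_{0}^{\infty} f_X(x)\, dx = \int_{0}^{\infty} \lambda e^{-\lambda x}\, dx = 1$$

The constants that matter are those that normalize the estimator $\hat{\lambda} = n / \sum_{i=1}^{n} x_i$. Each $x_i$ has mean $1/\lambda$ and variance $1/\lambda^2$, so by the central limit theorem and the delta method the centering constant is λ and the scaling constant is $\sqrt{n}/\lambda$:

$$\frac{\sqrt{n}}{\lambda} \left( \frac{n}{\sum_{i=1}^{n} x_i} - \lambda \right) \text{ converges in distribution to a standard normal random variable}$$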


7. Lognormal distribution

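a.

Each $Y_i$ is lognormal, with density

$$f(x) = \frac{1}{\sqrt{2\pi}\,\sigma x} \exp\left( -\frac{(\ln(x) - \mu)^2}{2\sigma^2} \right), \quad x > 0$$

For $T = \prod_{i=1}^{n} Y_i$, the joint density of the observations $x_1, \dots, x_n$ is the product of the individual densities:

$$\prod_{i=1}^{n} \frac{1}{\sqrt{2\pi}\,\sigma x_i} \exp\left( -\frac{(\ln(x_i) - \mu)^2}{2\sigma^2} \right)$$

$$= \prod_{i=1}^{n} \left( (2\pi\sigma^2)^{-\frac{1}{2}}\, x_i^{-1} \exp\left( -\frac{(\ln(x_i) - \mu)^2}{2\sigma^2} \right) \right)$$

$$= (2\pi\sigma^2)^{-\frac{n}{2}} \prod_{i=1}^{n} x_i^{-1} \exp\left( -\sum_{i=1}^{n} \frac{(\ln(x_i) - \mu)^2}{2\sigma^2} \right)$$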

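b.

For a single lognormal variable,

$$E(Y_i) = e^{\mu + \frac{\sigma^2}{2}}$$

$$Var(Y_i) = e^{2\mu + \sigma^2} \left( e^{\sigma^2} - 1 \right)$$

For the product $T = \prod_{i=1}^{n} Y_i$ of independent terms, $\ln T \sim N(n\mu, n\sigma^2)$, so the same formulas apply with μ and σ² replaced by nμ and nσ²:

$$E(T) = e^{n\mu + \frac{n\sigma^2}{2}}, \qquad Var(T) = e^{2n\mu + n\sigma^2} \left( e^{n\sigma^2} - 1 \right)$$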
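The lognormal moment formulas are easy to mis-transcribe, so a small helper is sketched below. It assumes T is the product of n independent lognormal terms with common parameters μ and σ²; the function names are illustrative.

```python
import math


def lognormal_mean_var(mu, sigma2):
    """Mean and variance of a lognormal variable whose log is N(mu, sigma2)."""
    mean = math.exp(mu + sigma2 / 2.0)
    var = math.exp(2.0 * mu + sigma2) * (math.exp(sigma2) - 1.0)
    return mean, var


def product_mean_var(mu, sigma2, n):
    """Mean and variance of a product of n i.i.d. lognormal terms.

    The log of the product is N(n * mu, n * sigma2), so the product is
    itself lognormal with those parameters.
    """
    return lognormal_mean_var(n * mu, n * sigma2)


if __name__ == "__main__":
    print(lognormal_mean_var(0.0, 1.0))
    print(product_mean_var(0.1, 0.2, 3))
```

Because the terms are independent, E(T) must also equal E(Y)ⁿ and E(T²) must equal E(Y²)ⁿ, which gives an independent cross-check of the product formulas.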


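c.

The successive geometric average $G_n = \left( \prod_{i=1}^{n} Y_i \right)^{1/n}$ converges in probability to $e^{\mu}$.

Suppose $x_1, \dots, x_n$ is a sequence of i.i.d. draws with $E(X) = \mu$ and $Var(X) = \sigma^2 < \infty$ for all i. Then for any $\varepsilon > 0$ the sample mean $\bar{x}_n$ satisfies

$$\lim_{n \to \infty} P\left( |\bar{x}_n - \mu| > \varepsilon \right) = 0$$

Then $\bar{x}_n$ converges in probability to μ.

In our situation, $\ln Y_i \sim N(\mu, \sigma^2)$, and the log of the geometric average is the sample mean of the $\ln Y_i$:

$$\ln G_n = \frac{1}{n} \sum_{i=1}^{n} \ln Y_i, \qquad \lim_{n \to \infty} P\left( |\ln G_n - \mu| > \varepsilon \right) = 0$$

Since the exponential function is continuous, $G_n$ converges in probability to $e^{\mu}$. The arithmetic average of the $Y_i$, by contrast, converges in probability to $E(Y_i) = e^{\mu + \frac{\sigma^2}{2}}$.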
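The limits of the geometric and arithmetic averages of lognormal draws can be compared by simulation. The sketch below is illustrative only: the parameter values, sample size and seed are assumptions, and `random.lognormvariate(mu, sigma)` takes the standard deviation σ of the underlying normal, not the variance.

```python
import math
import random


def averages(mu, sigma, n, seed=0):
    """Return (geometric average, arithmetic average) of n lognormal draws."""
    rng = random.Random(seed)
    log_sum = 0.0
    total = 0.0
    for _ in range(n):
        y = rng.lognormvariate(mu, sigma)
        log_sum += math.log(y)  # the geometric average is exp(mean of logs)
        total += y
    return math.exp(log_sum / n), total / n


if __name__ == "__main__":
    mu, sigma = 0.5, 1.0
    geo, arith = averages(mu, sigma, 100_000)
    print("geometric average :", geo, " vs exp(mu)             =", math.exp(mu))
    print("arithmetic average:", arith, " vs exp(mu + sigma^2/2) =",
          math.exp(mu + sigma**2 / 2.0))
```

Working with the sum of logs rather than the raw product avoids overflow or underflow when n is large.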

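d. Maximum Likelihood Estimator

The likelihood of the sample is

$$L(\mu, \sigma^2) = (2\pi\sigma^2)^{-\frac{n}{2}} \prod_{i=1}^{n} x_i^{-1} \exp\left( -\sum_{i=1}^{n} \frac{(\ln(x_i) - \mu)^2}{2\sigma^2} \right)$$

Taking the log,

$$\ln L(\mu, \sigma^2) = \ln\left( (2\pi\sigma^2)^{-\frac{n}{2}} \prod_{i=1}^{n} x_i^{-1} \exp\left( -\sum_{i=1}^{n} \frac{(\ln(x_i) - \mu)^2}{2\sigma^2} \right) \right)$$

$$= -\frac{n}{2} \ln(2\pi\sigma^2) - \sum_{i=1}^{n} \ln(x_i) - \frac{\sum_{i=1}^{n} (\ln(x_i) - \mu)^2}{2\sigma^2}$$

$$= -\frac{n}{2} \ln(2\pi\sigma^2) - \sum_{i=1}^{n} \ln(x_i) - \frac{\sum_{i=1}^{n} \left[ (\ln(x_i))^2 - 2\mu \ln(x_i) + \mu^2 \right]}{2\sigma^2}$$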
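The derivation stops at the expanded log-likelihood. Setting its partial derivatives with respect to μ and σ² to zero gives the standard lognormal estimators, the mean and the (biased, divide-by-n) variance of the logged data. The sketch below computes them; the function name and input checks are assumptions for illustration.

```python
import math


def lognormal_mle(samples):
    """Return (mu_hat, sigma2_hat) maximizing the lognormal log-likelihood.

    mu_hat is the mean of ln(x_i); sigma2_hat is the average squared
    deviation of ln(x_i) from mu_hat (divisor n, not n - 1).
    """
    if not samples or any(x <= 0 for x in samples):
        raise ValueError("need a non-empty sample of strictly positive values")
    logs = [math.log(x) for x in samples]
    n = len(logs)
    mu_hat = sum(logs) / n
    sigma2_hat = sum((v - mu_hat) ** 2 for v in logs) / n
    return mu_hat, sigma2_hat


if __name__ == "__main__":
    data = [1.0, math.e, math.e**2]  # logs are 0, 1, 2
    print(lognormal_mle(data))
```

The term $-\sum \ln(x_i)$ in the log-likelihood does not involve μ or σ², which is why the estimators coincide with the normal-distribution estimators applied to the logs.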
