Stats315B Spring 2019 Homework 2: Random Forests and Regularization

Added on 2023/03/21


AI Summary

This assignment solution covers key concepts in statistical learning, focusing on random forests, regularization techniques, and boosting methods. It addresses the advantages and disadvantages of randomly selecting candidate split variables in random forests, explains why regularization is necessary in linear regression when the number of predictors exceeds the number of observations, and discusses the role of sparsity in boosting. The solution also includes C++ excerpts from the XGBoost source code that implement gradient boosting, in particular the logistic classification objective and the tree boosting algorithm. The document also references the 'gbm' package in R, highlighting its functionality for fitting and predicting with gradient boosting models, including parameter tuning, predictor importance assessment, and partial dependence analysis.

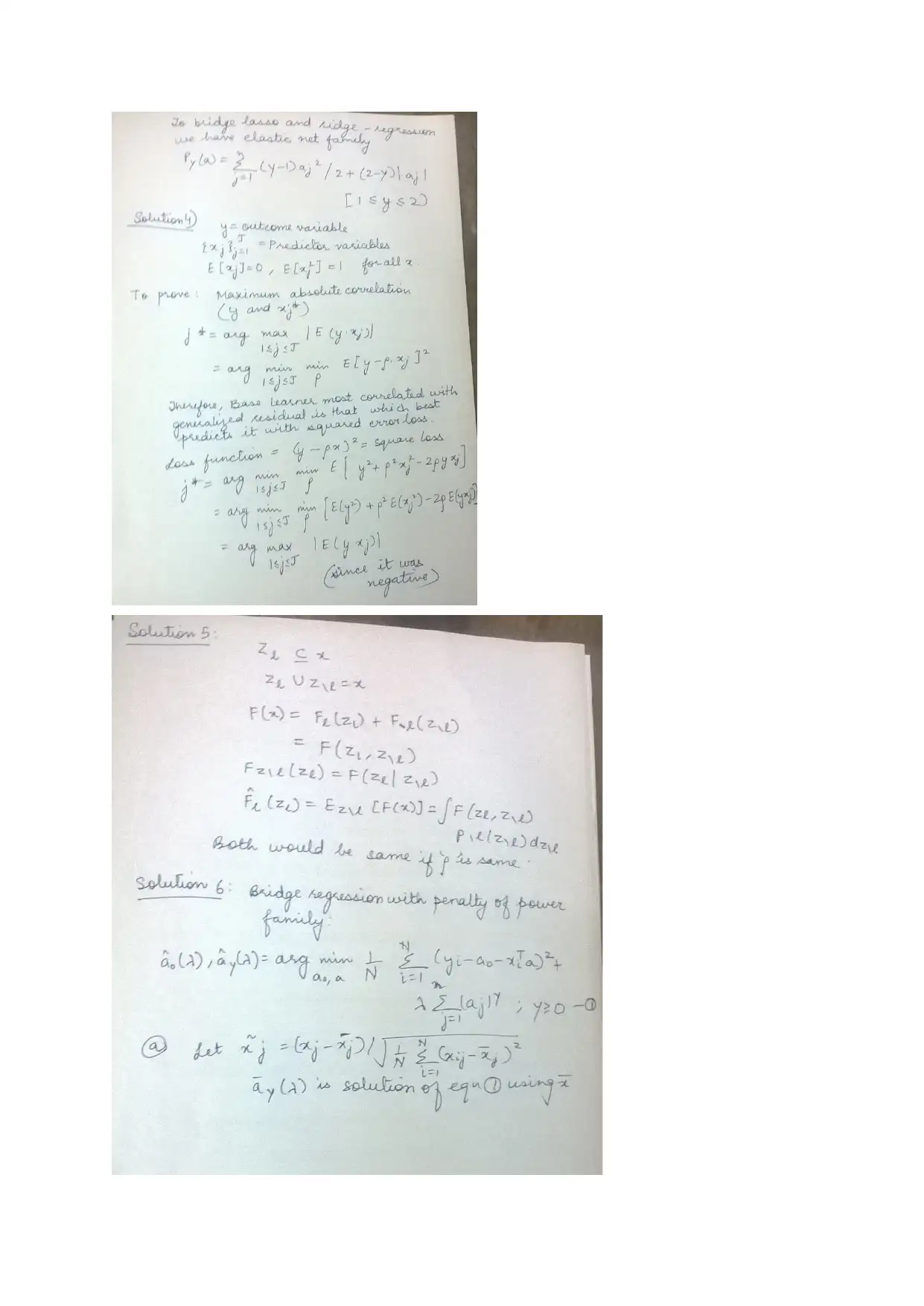

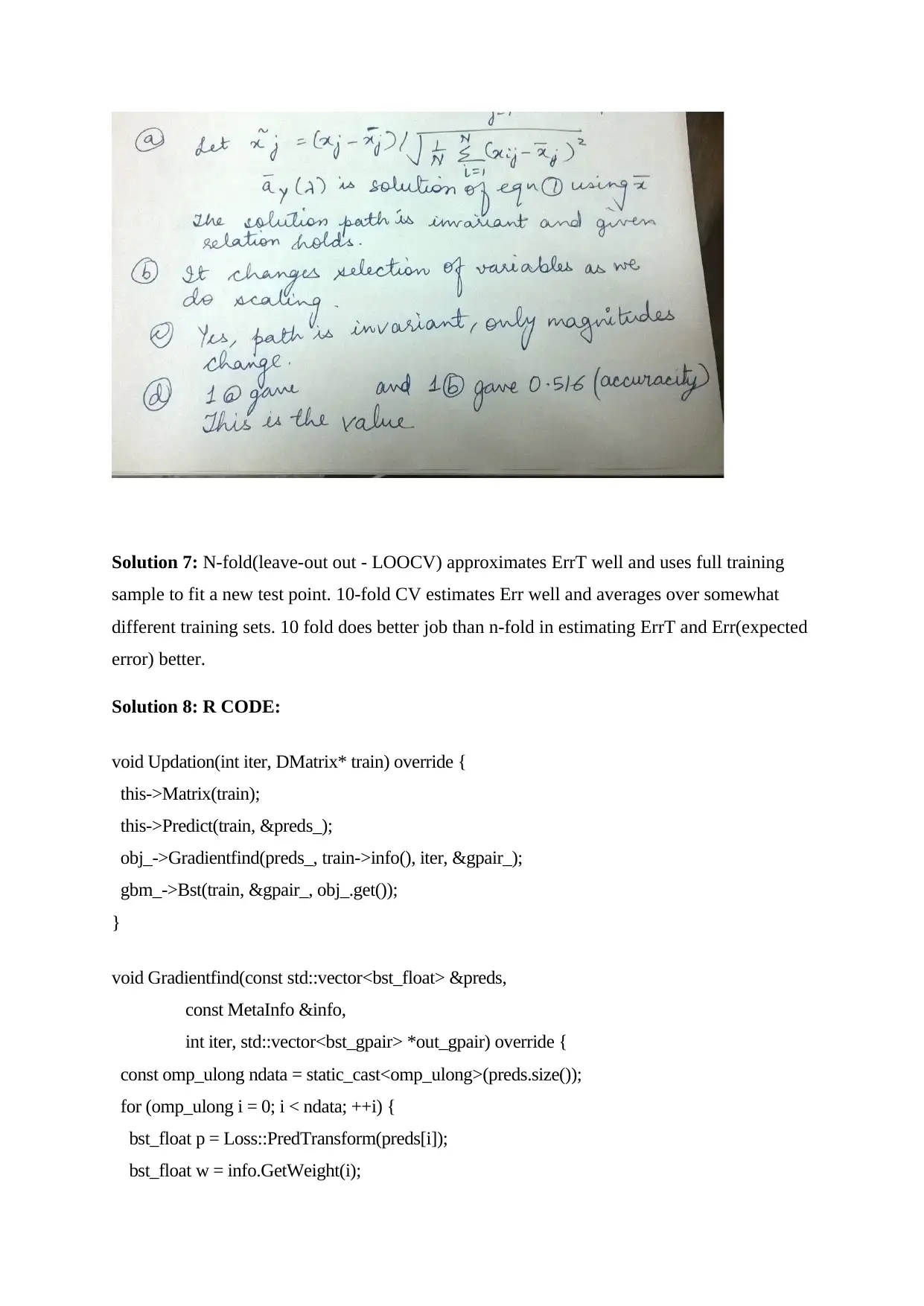

Solution 3:


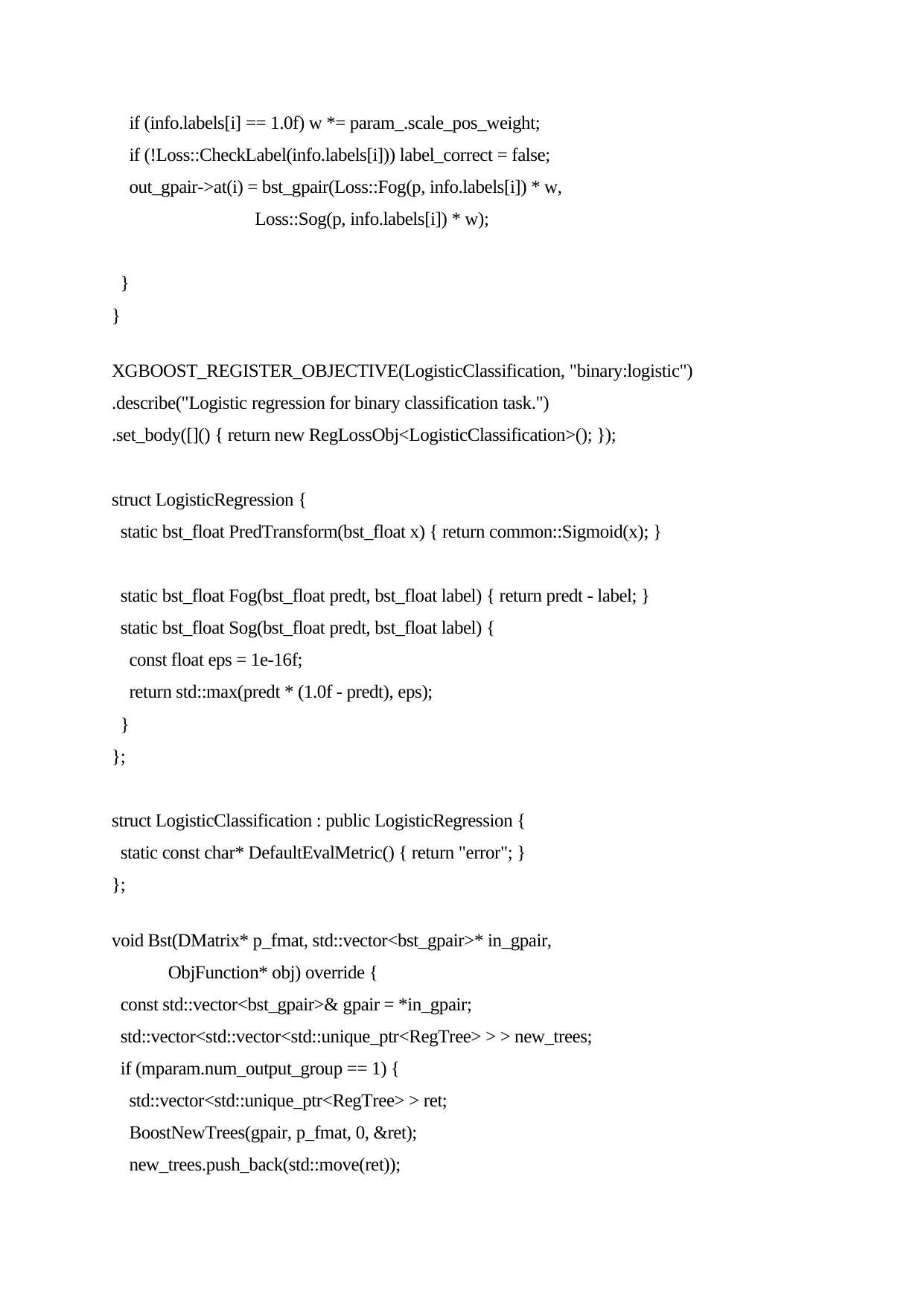

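Solution 7: N-fold cross-validation (leave-one-out, LOOCV, with N the number of training observations) might be expected to approximate the conditional test error ErrT well, because each fit uses almost the full training sample to predict the held-out point. 10-fold CV might be expected to estimate the expected error Err well, because it averages over somewhat different training sets. Even so, 10-fold CV does a better job than N-fold CV at estimating both ErrT and the expected error Err.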
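A minimal sketch of what a K-fold estimate actually computes may help make the N-fold versus 10-fold comparison concrete. It assumes a deliberately trivial model (each held-out response is predicted by the mean of the training responses) and interleaved folds (observation i is held out in fold i mod K); `KFoldCVError` is a hypothetical helper, and setting K equal to the sample size gives LOOCV.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// K-fold CV estimate of squared prediction error for a mean-only model.
// Observation i is held out in fold i % K, so K == y.size() is LOOCV.
double KFoldCVError(const std::vector<double>& y, std::size_t K) {
  const std::size_t n = y.size();
  assert(K >= 2 && K <= n);  // every fold must leave training data behind
  double total_sq_err = 0.0;
  for (std::size_t fold = 0; fold < K; ++fold) {
    // Fit: mean of the responses outside the current fold.
    double sum = 0.0;
    std::size_t count = 0;
    for (std::size_t i = 0; i < n; ++i) {
      if (i % K != fold) {
        sum += y[i];
        ++count;
      }
    }
    const double fitted = sum / static_cast<double>(count);
    // Evaluate: squared error on the held-out observations of this fold.
    for (std::size_t i = 0; i < n; ++i) {
      if (i % K == fold) total_sq_err += (y[i] - fitted) * (y[i] - fitted);
    }
  }
  return total_sq_err / static_cast<double>(n);  // average over all n points
}
```

For y = {1, 2, 3, 4}, `KFoldCVError(y, 4)` (LOOCV) gives 20/9, about 2.22, and `KFoldCVError(y, 2)` gives 2.0. A single small sample like this says nothing about which estimator tracks ErrT or Err more closely; that comparison requires repeating the computation over many training sets.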

Solution 8: C++ code (excerpts from the XGBoost source):

```cpp
// One boosting iteration: predict on the training data, turn the
// predictions into gradient pairs, then grow new trees from them.
void Updation(int iter, DMatrix* train) override {
  this->Matrix(train);
  this->Predict(train, &preds_);
  obj_->Gradientfind(preds_, train->info(), iter, &gpair_);
  gbm_->Bst(train, &gpair_, obj_.get());
}

// First- and second-order gradients of the loss for every row, multiplied
// by the instance weight (and by scale_pos_weight for positive labels).
void Gradientfind(const std::vector<bst_float> &preds,
                  const MetaInfo &info,
                  int iter, std::vector<bst_gpair> *out_gpair) override {
  const omp_ulong ndata = static_cast<omp_ulong>(preds.size());
  out_gpair->resize(ndata);   // at(i) below needs the vector already sized
  bool label_correct = true;  // cleared if any label is invalid for the loss
  for (omp_ulong i = 0; i < ndata; ++i) {
    bst_float p = Loss::PredTransform(preds[i]);  // margin -> prediction
    bst_float w = info.GetWeight(i);
    if (info.labels[i] == 1.0f) w *= param_.scale_pos_weight;
    if (!Loss::CheckLabel(info.labels[i])) label_correct = false;
    out_gpair->at(i) = bst_gpair(Loss::Fog(p, info.labels[i]) * w,
                                 Loss::Sog(p, info.labels[i]) * w);
  }
}
```
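The per-row loop in Gradientfind can be mirrored on plain vectors to see the numbers it produces for the logistic objective. This is a sketch assuming raw margins as input and 0/1 labels; `LogisticGradients` and `GradPair` are hypothetical names, and `std::vector` stands in for XGBoost's `MetaInfo` and `bst_gpair` types.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// (first-order gradient, second-order gradient) for one training row.
using GradPair = std::pair<float, float>;

// Logistic-loss gradient pairs from raw margins, 0/1 labels and row weights.
std::vector<GradPair> LogisticGradients(const std::vector<float>& margins,
                                        const std::vector<float>& labels,
                                        const std::vector<float>& weights,
                                        float scale_pos_weight) {
  assert(margins.size() == labels.size() && labels.size() == weights.size());
  std::vector<GradPair> out(margins.size());  // sized before filling
  for (std::size_t i = 0; i < margins.size(); ++i) {
    const float p = 1.0f / (1.0f + std::exp(-margins[i]));  // sigmoid
    float w = weights[i];
    if (labels[i] == 1.0f) w *= scale_pos_weight;  // up-weight positives
    const float grad = (p - labels[i]) * w;
    const float hess = std::max(p * (1.0f - p), 1e-16f) * w;  // kept > 0
    out[i] = {grad, hess};
  }
  return out;
}
```

With zero margins every probability is 0.5, so a positive row with weight 1 and `scale_pos_weight = 3` gets the pair (-1.5, 0.75), and a negative row with weight 2 gets (1.0, 0.5).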

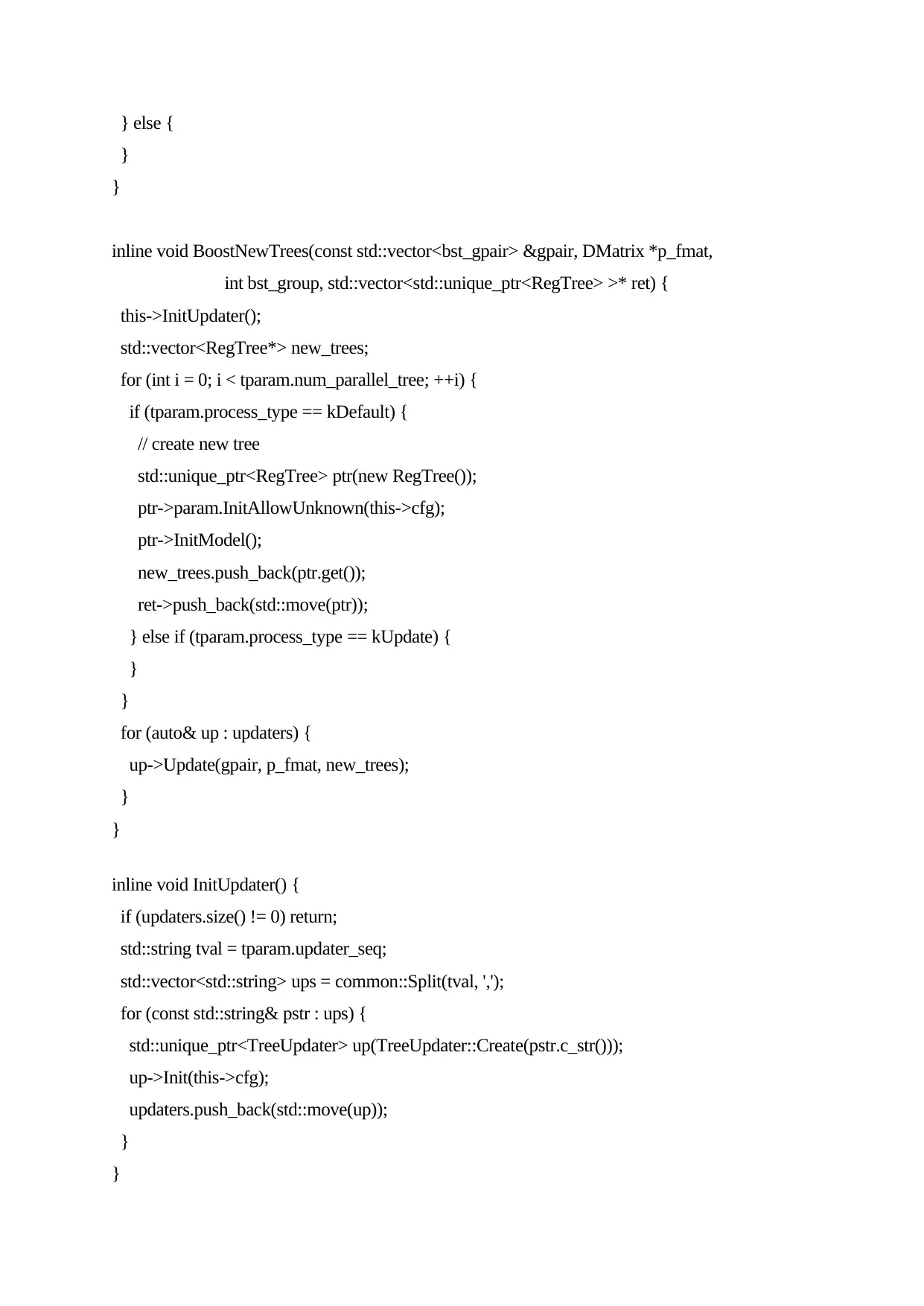

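```cpp
// Logistic loss on the margin: predictions are sigmoid(margin), Fog is the
// first-order gradient and Sog the second-order gradient of the loss.
struct LogisticRegression {
  static bst_float PredTransform(bst_float x) { return common::Sigmoid(x); }
  static bst_float Fog(bst_float predt, bst_float label) { return predt - label; }
  static bst_float Sog(bst_float predt, bst_float label) {
    const float eps = 1e-16f;  // keep the second-order gradient strictly positive
    return std::max(predt * (1.0f - predt), eps);
  }
};

// Same loss, evaluated by classification error by default.
struct LogisticClassification : public LogisticRegression {
  static const char* DefaultEvalMetric() { return "error"; }
};

// Register the objective under the name "binary:logistic"
// (after the types it uses have been defined).
XGBOOST_REGISTER_OBJECTIVE(LogisticClassification, "binary:logistic")
.describe("Logistic regression for binary classification task.")
.set_body([]() { return new RegLossObj<LogisticClassification>(); });
```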
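Fog and Sog above are the first and second derivatives of the log loss with respect to the margin, written in terms of p = sigmoid(margin). A short finite-difference check can confirm this numerically. The function names below are hypothetical, double precision is used instead of `bst_float` so the numerical derivatives stay accurate, and the `eps` floor from Sog is left out.

```cpp
#include <cassert>
#include <cmath>

// Log loss of a 0/1 label as a function of the raw margin m.
double LogLoss(double m, double label) {
  assert(label == 0.0 || label == 1.0);
  const double p = 1.0 / (1.0 + std::exp(-m));
  return -(label * std::log(p) + (1.0 - label) * std::log(1.0 - p));
}

// Analytic derivatives with respect to the margin (the Fog and Sog formulas).
double FirstOrder(double m, double label) {
  const double p = 1.0 / (1.0 + std::exp(-m));
  return p - label;
}
double SecondOrder(double m) {
  const double p = 1.0 / (1.0 + std::exp(-m));
  return p * (1.0 - p);
}

// Central finite differences of LogLoss, for comparison.
double NumericFirst(double m, double label, double h = 1e-5) {
  return (LogLoss(m + h, label) - LogLoss(m - h, label)) / (2.0 * h);
}
double NumericSecond(double m, double label, double h = 1e-4) {
  return (LogLoss(m + h, label) - 2.0 * LogLoss(m, label) + LogLoss(m - h, label)) /
         (h * h);
}
```

The second derivative does not depend on the label, which is why Sog ignores its `label` argument.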

```cpp
// Grow new trees from the gradient pairs. With a single output group
// (e.g. binary:logistic) all gradients feed one set of new trees.
void Bst(DMatrix* p_fmat, std::vector<bst_gpair>* in_gpair,
         ObjFunction* obj) override {
  const std::vector<bst_gpair>& gpair = *in_gpair;
  std::vector<std::vector<std::unique_ptr<RegTree> > > new_trees;
  if (mparam.num_output_group == 1) {
    std::vector<std::unique_ptr<RegTree> > ret;
    BoostNewTrees(gpair, p_fmat, 0, &ret);
    new_trees.push_back(std::move(ret));
  } else {
    // multi-group case (num_output_group > 1) elided in this excerpt
  }
}
```
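Updation, Gradientfind and Bst together form one boosting round: predict, compute gradient pairs, fit new trees to them, and add the trees to the model. The sketch below runs that cycle under simplifying assumptions: squared-error loss (so g = prediction - y and h = 1) instead of the logistic objective, a single feature, and depth-1 trees (stumps) whose split score and leaf weight use the G^2/(H + lambda) and -G/(H + lambda) forms from the CalcGain and CalcWeight excerpts with reg_alpha = 0. `Stump`, `FitStump`, `Boost` and `MSE` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Depth-1 regression tree on a single feature.
struct Stump {
  double threshold;
  double left_value;   // used when x < threshold
  double right_value;  // used otherwise
  double Predict(double x) const { return x < threshold ? left_value : right_value; }
};

// Pick the threshold maximising G_L^2/(H_L+lambda) + G_R^2/(H_R+lambda);
// each leaf gets the weight -G/(H+lambda).
Stump FitStump(const std::vector<double>& x, const std::vector<double>& g,
               const std::vector<double>& h, double lambda) {
  Stump best{0.0, 0.0, 0.0};
  double best_gain = -std::numeric_limits<double>::infinity();
  for (double t : x) {  // candidate thresholds: the observed feature values
    double GL = 0.0, HL = 0.0, GR = 0.0, HR = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
      if (x[i] < t) { GL += g[i]; HL += h[i]; } else { GR += g[i]; HR += h[i]; }
    }
    const double gain = GL * GL / (HL + lambda) + GR * GR / (HR + lambda);
    if (gain > best_gain) {
      best_gain = gain;
      best = Stump{t, -GL / (HL + lambda), -GR / (HR + lambda)};
    }
  }
  return best;
}

// Boosting rounds for squared error: g = pred - y, h = 1, shrinkage eta.
std::vector<double> Boost(const std::vector<double>& x, const std::vector<double>& y,
                          int rounds, double eta, double lambda) {
  assert(x.size() == y.size() && !x.empty() && lambda > 0.0);
  std::vector<double> pred(y.size(), 0.0), g(y.size()), h(y.size(), 1.0);
  for (int r = 0; r < rounds; ++r) {
    for (std::size_t i = 0; i < y.size(); ++i) g[i] = pred[i] - y[i];  // gradients
    const Stump s = FitStump(x, g, h, lambda);                          // new tree
    for (std::size_t i = 0; i < y.size(); ++i) pred[i] += eta * s.Predict(x[i]);
  }
  return pred;
}

// Mean squared error of predictions against responses.
double MSE(const std::vector<double>& pred, const std::vector<double>& y) {
  double s = 0.0;
  for (std::size_t i = 0; i < y.size(); ++i) s += (pred[i] - y[i]) * (pred[i] - y[i]);
  return s / static_cast<double>(y.size());
}
```

For x = {1, 2, 3, 4} and y = {1, 1, 5, 5}, with eta = 0.3 and lambda = 1, the first round splits at x < 3 and moves the predictions from zero to {0.2, 0.2, 1.0, 1.0}, cutting the training MSE from 13 to about 8.32.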

```cpp
// Create tparam.num_parallel_tree fresh trees (a value above 1 gives a
// boosted random forest) and run every configured updater on them.
inline void BoostNewTrees(const std::vector<bst_gpair> &gpair, DMatrix *p_fmat,
                          int bst_group, std::vector<std::unique_ptr<RegTree> >* ret) {
  this->InitUpdater();
  std::vector<RegTree*> new_trees;
  for (int i = 0; i < tparam.num_parallel_tree; ++i) {
    if (tparam.process_type == kDefault) {
      // create new tree
      std::unique_ptr<RegTree> ptr(new RegTree());
      ptr->param.InitAllowUnknown(this->cfg);
      ptr->InitModel();
      new_trees.push_back(ptr.get());
      ret->push_back(std::move(ptr));
    } else if (tparam.process_type == kUpdate) {
      // updating existing trees: elided in this excerpt
    }
  }
  // Each updater (e.g. grow, then prune) modifies the new trees in turn.
  for (auto& up : updaters) {
    up->Update(gpair, p_fmat, new_trees);
  }
}

// Build the updater pipeline once, from the comma-separated
// updater_seq string (for example "grow_colmaker,prune").
inline void InitUpdater() {
  if (updaters.size() != 0) return;
  std::string tval = tparam.updater_seq;
  std::vector<std::string> ups = common::Split(tval, ',');
  for (const std::string& pstr : ups) {
    std::unique_ptr<TreeUpdater> up(TreeUpdater::Create(pstr.c_str()));
    up->Init(this->cfg);
    updaters.push_back(std::move(up));
  }
}
```
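InitUpdater turns the comma-separated `updater_seq` string into an ordered list of updater objects, built once and then applied in sequence by BoostNewTrees. The sketch below imitates that pattern with the standard library only; `Split`, `Updater`, `NamedUpdater` and `MakePipeline` are hypothetical stand-ins for `common::Split`, `TreeUpdater` and `TreeUpdater::Create`, and each updater here only records its name instead of modifying a tree.

```cpp
#include <cassert>
#include <memory>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Split "a,b,c" into {"a", "b", "c"} (stand-in for common::Split).
std::vector<std::string> Split(const std::string& s, char delim) {
  std::vector<std::string> parts;
  std::istringstream in(s);
  std::string item;
  while (std::getline(in, item, delim)) parts.push_back(item);
  return parts;
}

// Stand-in for TreeUpdater: one step of the tree-building pipeline.
struct Updater {
  virtual ~Updater() = default;
  virtual void Update(std::vector<std::string>* trace) = 0;
};

// Toy updater that only records that it ran.
struct NamedUpdater : Updater {
  explicit NamedUpdater(std::string n) : name(std::move(n)) {}
  void Update(std::vector<std::string>* trace) override { trace->push_back(name); }
  std::string name;
};

// Build the pipeline in the order the names appear in the sequence string.
std::vector<std::unique_ptr<Updater>> MakePipeline(const std::string& updater_seq) {
  std::vector<std::unique_ptr<Updater>> updaters;
  for (const std::string& name : Split(updater_seq, ',')) {
    assert(!name.empty());  // a sequence like "a,,b" would yield an empty name
    updaters.push_back(std::make_unique<NamedUpdater>(name));
  }
  return updaters;
}
```

Keeping the order from the string is what lets a sequence such as "grow_colmaker,prune" grow a tree first and prune it afterwards.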

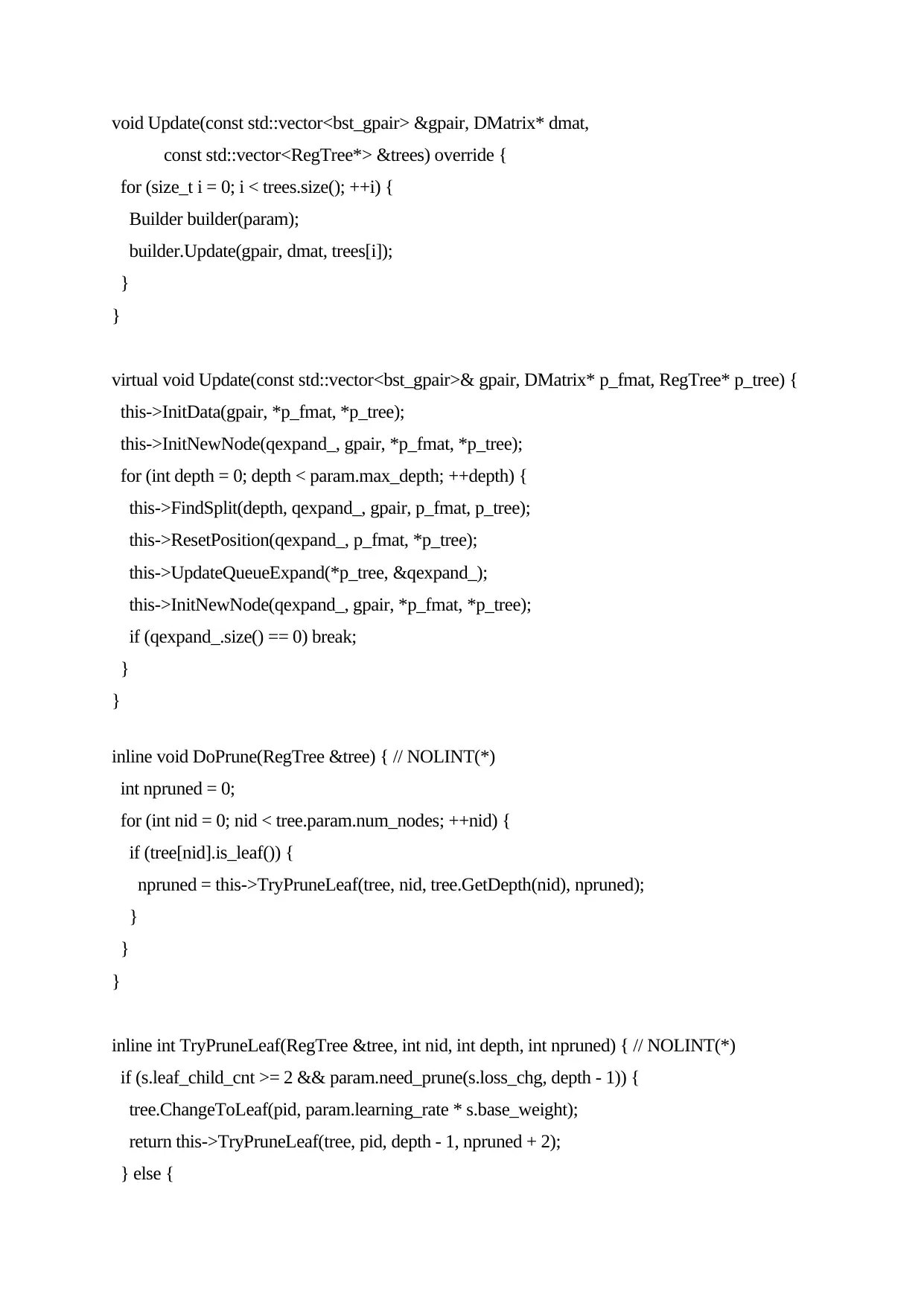

```cpp
// Grow each new tree with its own Builder.
void Update(const std::vector<bst_gpair> &gpair, DMatrix* dmat,
            const std::vector<RegTree*> &trees) override {
  for (size_t i = 0; i < trees.size(); ++i) {
    Builder builder(param);
    builder.Update(gpair, dmat, trees[i]);
  }
}

// Depth-wise growth: for each level up to max_depth, find the best split of
// every node in the expand queue, move rows to the new children, and queue
// those children; stop early once no node is left to expand.
virtual void Update(const std::vector<bst_gpair>& gpair, DMatrix* p_fmat, RegTree* p_tree) {
  this->InitData(gpair, *p_fmat, *p_tree);
  this->InitNewNode(qexpand_, gpair, *p_fmat, *p_tree);
  for (int depth = 0; depth < param.max_depth; ++depth) {
    this->FindSplit(depth, qexpand_, gpair, p_fmat, p_tree);
    this->ResetPosition(qexpand_, p_fmat, *p_tree);
    this->UpdateQueueExpand(*p_tree, &qexpand_);
    this->InitNewNode(qexpand_, gpair, *p_fmat, *p_tree);
    if (qexpand_.size() == 0) break;
  }
}

// Post-pruning: start from every leaf and try to collapse its parent.
inline void DoPrune(RegTree &tree) {  // NOLINT(*)
  int npruned = 0;
  for (int nid = 0; nid < tree.param.num_nodes; ++nid) {
    if (tree[nid].is_leaf()) {
      npruned = this->TryPruneLeaf(tree, nid, tree.GetDepth(nid), npruned);
    }
  }
}
```

```cpp
// If both children of the leaf's parent are now leaves and the parent's
// split gained less than min_split_loss, turn the parent into a leaf
// (value learning_rate * base_weight) and continue one level up.
inline int TryPruneLeaf(RegTree &tree, int nid, int depth, int npruned) {  // NOLINT(*)
  // Elided in this excerpt: pid (the parent of nid) and s (pid's node
  // statistics, including how many of its children are leaves) are set here.
  if (s.leaf_child_cnt >= 2 && param.need_prune(s.loss_chg, depth - 1)) {
    tree.ChangeToLeaf(pid, param.learning_rate * s.base_weight);
    return this->TryPruneLeaf(tree, pid, depth - 1, npruned + 2);
  } else {
    return npruned;
  }
}
```
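The recursion in TryPruneLeaf can be reproduced on a small array-based tree to see which splits get collapsed. The sketch assumes every child is stored at a larger index than its parent, so a parent is always visited before its children; `Node`, `TryPruneLeaf` and `DoPrune` here are hypothetical simplifications that drop leaf values and the unused depth argument.

```cpp
#include <cassert>
#include <vector>

// Array-based tree node; left == -1 marks a leaf, parent == -1 the root.
struct Node {
  int parent = -1;
  int left = -1;
  int right = -1;
  double loss_chg = 0.0;   // loss reduction of this node's split
  int leaf_child_cnt = 0;  // how many children have been seen as leaves
  bool IsLeaf() const { return left == -1; }
};

// Count nid as a leaf child of its parent; collapse the parent when both
// children are leaves and its split gained less than min_split_loss.
int TryPruneLeaf(std::vector<Node>& tree, int nid, double min_split_loss, int npruned) {
  if (tree[nid].parent == -1) return npruned;  // reached the root
  const int pid = tree[nid].parent;
  assert(pid < nid);  // children must be stored after their parent
  Node& s = tree[pid];
  ++s.leaf_child_cnt;
  if (s.leaf_child_cnt >= 2 && s.loss_chg < min_split_loss) {
    s.left = -1;  // collapse pid into a leaf
    s.right = -1;
    return TryPruneLeaf(tree, pid, min_split_loss, npruned + 2);
  }
  return npruned;
}

// Visit every leaf in index order; returns the number of nodes removed.
int DoPrune(std::vector<Node>& tree, double min_split_loss) {
  int npruned = 0;
  const int n = static_cast<int>(tree.size());
  for (int nid = 0; nid < n; ++nid) {
    if (tree[nid].IsLeaf()) {
      npruned = TryPruneLeaf(tree, nid, min_split_loss, npruned);
    }
  }
  return npruned;
}
```

In a tree whose root split gained 5.0 and whose left child split gained only 0.1, `min_split_loss = 1.0` collapses only the left child's split (2 nodes removed), while `min_split_loss = 10.0` collapses both splits (4 nodes removed).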

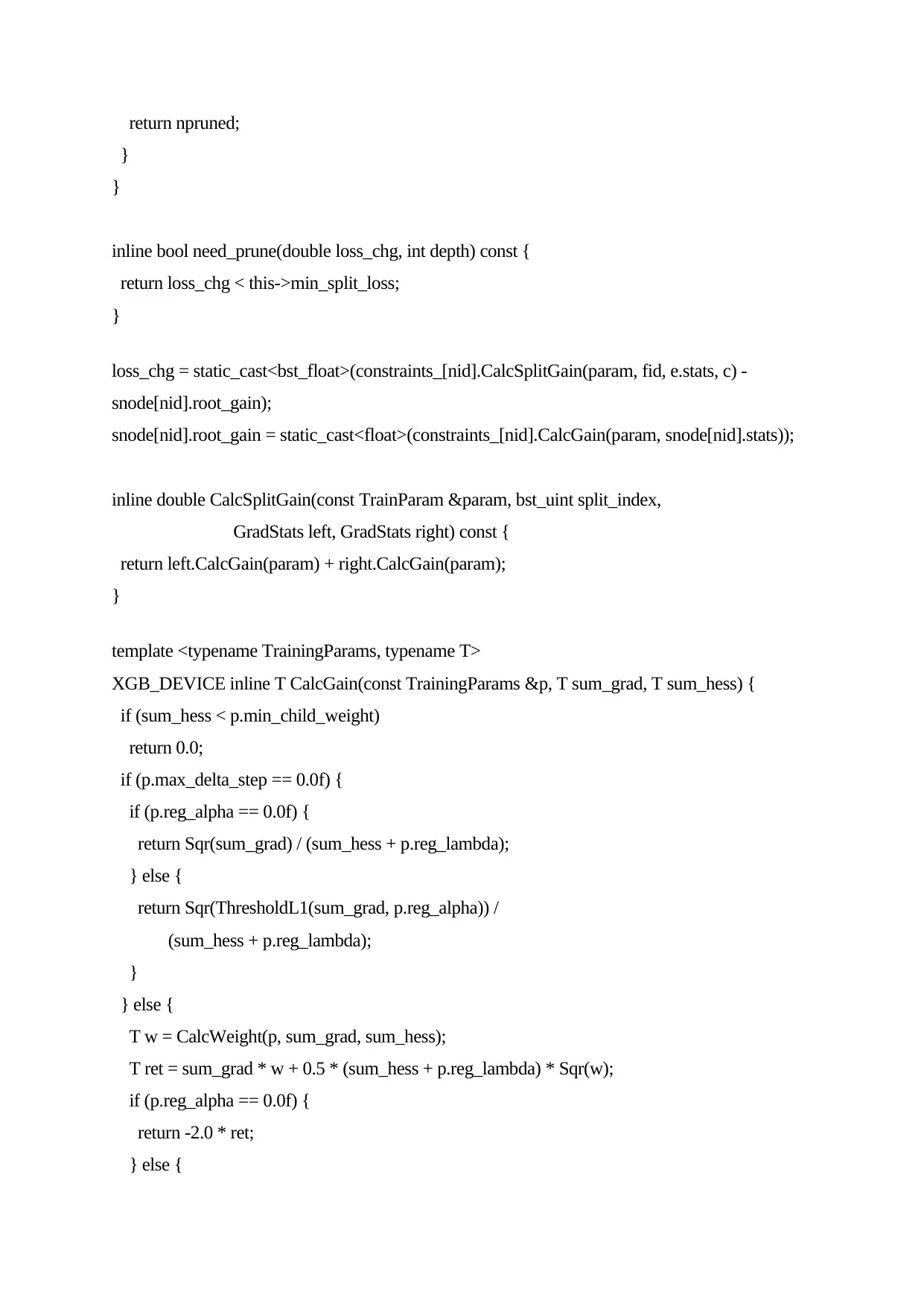

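```cpp
// A split is pruned when its loss reduction is below min_split_loss (gamma);
// depth is not used by this criterion.
inline bool need_prune(double loss_chg, int depth) const {
  return loss_chg < this->min_split_loss;
}

// Fragments from the split search: the loss reduction of a candidate split is
// the children's combined gain minus the gain of the unsplit node (root_gain).
loss_chg = static_cast<bst_float>(constraints_[nid].CalcSplitGain(param, fid, e.stats, c) -
                                  snode[nid].root_gain);
snode[nid].root_gain = static_cast<float>(constraints_[nid].CalcGain(param, snode[nid].stats));

inline double CalcSplitGain(const TrainParam &param, bst_uint split_index,
                            GradStats left, GradStats right) const {
  return left.CalcGain(param) + right.CalcGain(param);
}
```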
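The quantities in these fragments can be written out explicitly. The derivation below assumes reg_alpha = 0 and no max_delta_step, with G and H the sums of first- and second-order gradients in a node (sum_grad and sum_hess) and lambda = reg_lambda; it shows where the G^2/(H + lambda) returned by CalcGain comes from.

```latex
\[
  \mathrm{obj}(w) = G\,w + \tfrac{1}{2}(H + \lambda)\,w^{2},
  \qquad
  w^{*} = -\frac{G}{H + \lambda},
  \qquad
  \mathrm{obj}(w^{*}) = -\frac{1}{2}\,\frac{G^{2}}{H + \lambda}
\]
\[
  \texttt{CalcGain}(G, H) = -2\,\mathrm{obj}(w^{*}) = \frac{G^{2}}{H + \lambda},
  \qquad
  \texttt{loss\_chg} = \frac{G_L^{2}}{H_L + \lambda} + \frac{G_R^{2}}{H_R + \lambda}
                     - \frac{(G_L + G_R)^{2}}{H_L + H_R + \lambda}
\]
```

need_prune then marks the split for removal when loss_chg < min_split_loss. With reg_alpha > 0, G is replaced by its soft-thresholded value ThresholdL1(G, reg_alpha) in both w* and the gain.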

```cpp
// Gain (structure score) of a node with gradient sum G and Hessian sum H;
// CalcSplitGain adds this up over the left and right children.
template <typename TrainingParams, typename T>
XGB_DEVICE inline T CalcGain(const TrainingParams &p, T sum_grad, T sum_hess) {
  if (sum_hess < p.min_child_weight)
    return 0.0;
  if (p.max_delta_step == 0.0f) {
    // Closed form G^2 / (H + lambda), with G soft-thresholded when alpha > 0.
    if (p.reg_alpha == 0.0f) {
      return Sqr(sum_grad) / (sum_hess + p.reg_lambda);
    } else {
      return Sqr(ThresholdL1(sum_grad, p.reg_alpha)) /
             (sum_hess + p.reg_lambda);
    }
  } else {
    // The weight may be clipped, so evaluate -2 times the regularised objective
    // G*w + 0.5*(H + lambda)*w^2 (+ alpha*|w|) at the weight from CalcWeight.
    T w = CalcWeight(p, sum_grad, sum_hess);
    T ret = sum_grad * w + 0.5 * (sum_hess + p.reg_lambda) * Sqr(w);
    if (p.reg_alpha == 0.0f) {
      return -2.0 * ret;
    } else {
      return -2.0 * (ret + p.reg_alpha * std::abs(w));
    }
  }
}
```

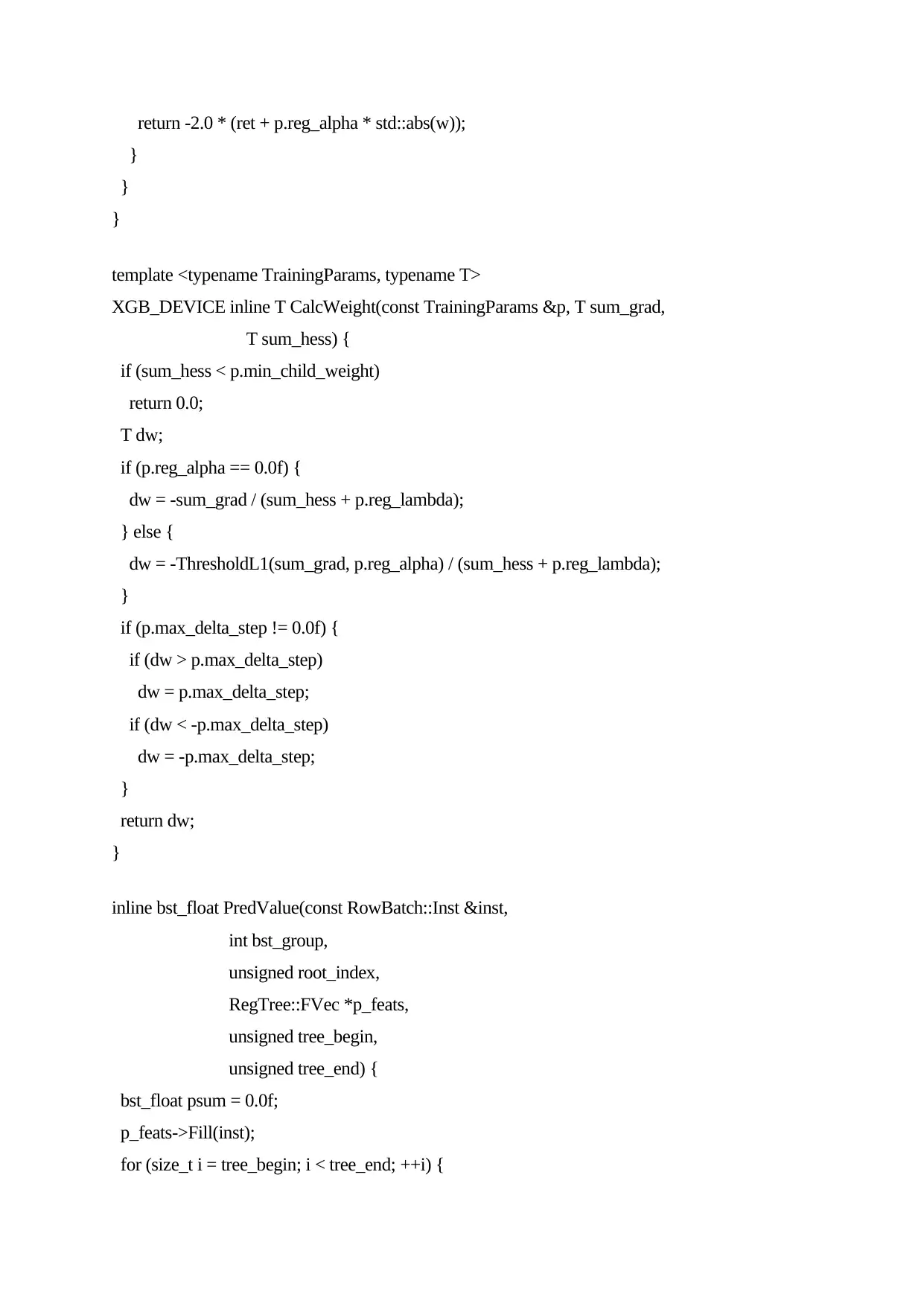

```cpp
// Optimal leaf weight -G / (H + lambda), with G soft-thresholded when
// reg_alpha > 0, then clipped to [-max_delta_step, max_delta_step] if that is set.
template <typename TrainingParams, typename T>
XGB_DEVICE inline T CalcWeight(const TrainingParams &p, T sum_grad,
                               T sum_hess) {
  if (sum_hess < p.min_child_weight)
    return 0.0;
  T dw;
  if (p.reg_alpha == 0.0f) {
    dw = -sum_grad / (sum_hess + p.reg_lambda);
  } else {
    dw = -ThresholdL1(sum_grad, p.reg_alpha) / (sum_hess + p.reg_lambda);
  }
  if (p.max_delta_step != 0.0f) {
    if (dw > p.max_delta_step)
      dw = p.max_delta_step;
    if (dw < -p.max_delta_step)
      dw = -p.max_delta_step;
  }
  return dw;
}
```
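CalcGain has two branches: the closed form G^2/(H + lambda) (with G soft-thresholded when reg_alpha > 0) and, when max_delta_step is set, -2 times the regularised objective at the weight returned by CalcWeight. A small standalone sketch can check that the two agree when no clipping happens and differ when it does. `Params`, `ClosedFormGain` and `ObjectiveGain` are hypothetical names, plain doubles replace the templated types, and the default field values are illustrative only.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical parameter bundle mirroring the fields used above.
struct Params {
  double min_child_weight = 1.0;
  double reg_lambda = 1.0;
  double reg_alpha = 0.0;
  double max_delta_step = 0.0;  // 0 disables clipping
};

// L1 soft-thresholding of the gradient sum.
double ThresholdL1(double g, double alpha) {
  if (g > alpha) return g - alpha;
  if (g < -alpha) return g + alpha;
  return 0.0;
}

// Optimal leaf weight, clipped to [-max_delta_step, max_delta_step] if set.
double CalcWeight(const Params& p, double G, double H) {
  if (H < p.min_child_weight) return 0.0;
  assert(H + p.reg_lambda > 0.0);
  double w = -ThresholdL1(G, p.reg_alpha) / (H + p.reg_lambda);
  if (p.max_delta_step != 0.0) {
    if (w > p.max_delta_step) w = p.max_delta_step;
    if (w < -p.max_delta_step) w = -p.max_delta_step;
  }
  return w;
}

// Closed-form gain T(G)^2 / (H + lambda); exact only when w is not clipped.
double ClosedFormGain(const Params& p, double G, double H) {
  if (H < p.min_child_weight) return 0.0;
  const double t = ThresholdL1(G, p.reg_alpha);
  return t * t / (H + p.reg_lambda);
}

// Gain as -2 times the regularised objective at the (possibly clipped) weight.
double ObjectiveGain(const Params& p, double G, double H) {
  if (H < p.min_child_weight) return 0.0;
  const double w = CalcWeight(p, G, H);
  const double obj = G * w + 0.5 * (H + p.reg_lambda) * w * w + p.reg_alpha * std::fabs(w);
  return -2.0 * obj;
}
```

With G = 4, H = 2 and lambda = 2, both forms give 4; setting reg_alpha = 1 gives 2.25 for both. With max_delta_step = 0.5 (and reg_alpha = 0) the weight is clipped from -1 to -0.5 and the objective-based gain drops to 3, which is why CalcGain cannot use the closed form in that case.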

inline bst_float PredValue(const RowBatch::Inst &inst,

int bst_group,

unsigned root_index,

RegTree::FVec *p_feats,

unsigned tree_begin,

unsigned tree_end) {

bst_float psum = 0.0f;

p_feats->Fill(inst);

for (size_t i = tree_begin; i < tree_end; ++i) {

}

}

}

template <typename TrainingParams, typename T>

XGB_DEVICE inline T CalcWeight(const TrainingParams &p, T sum_grad,

T sum_hess) {

if (sum_hess < p.min_child_weight)

return 0.0;

T dw;

if (p.reg_alpha == 0.0f) {

dw = -sum_grad / (sum_hess + p.reg_lambda);

} else {

dw = -ThresholdL1(sum_grad, p.reg_alpha) / (sum_hess + p.reg_lambda);

}

if (p.max_delta_step != 0.0f) {

if (dw > p.max_delta_step)

dw = p.max_delta_step;

if (dw < -p.max_delta_step)

dw = -p.max_delta_step;

}

return dw;

}

inline bst_float PredValue(const RowBatch::Inst &inst,

int bst_group,

unsigned root_index,

RegTree::FVec *p_feats,

unsigned tree_begin,

unsigned tree_end) {

bst_float psum = 0.0f;

p_feats->Fill(inst);

for (size_t i = tree_begin; i < tree_end; ++i) {

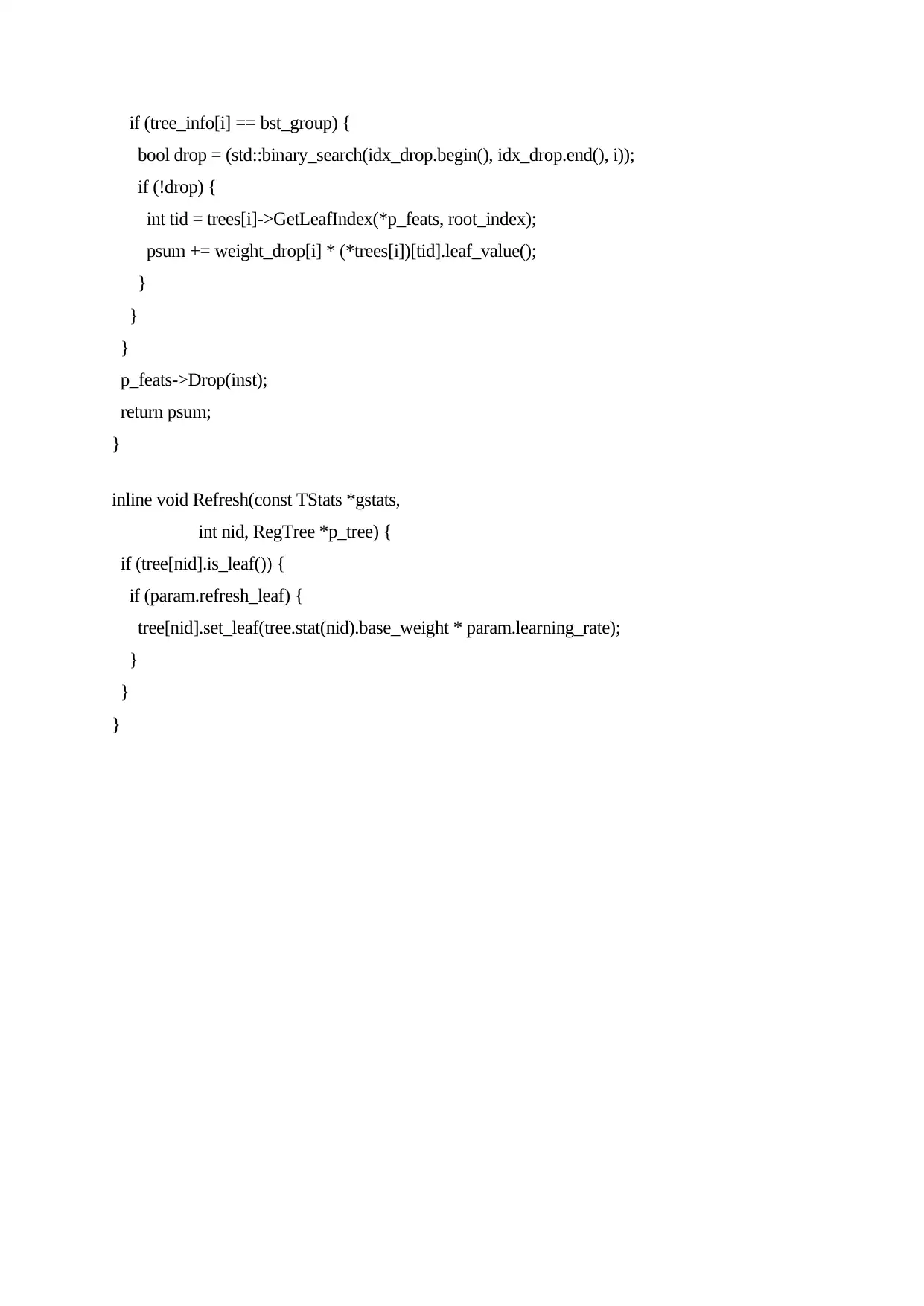

if (tree_info[i] == bst_group) {

bool drop = (std::binary_search(idx_drop.begin(), idx_drop.end(), i));

if (!drop) {

int tid = trees[i]->GetLeafIndex(*p_feats, root_index);

psum += weight_drop[i] * (*trees[i])[tid].leaf_value();

}

}

}

p_feats->Drop(inst);

return psum;

}
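PredValue appears to be the DART booster's prediction routine: trees listed in `idx_drop` are skipped and the remaining trees are scaled by `weight_drop`. The sketch below keeps only that summation logic; it assumes each tree's leaf value for the row has already been looked up (`leaf_values[i]`), and `DartPredict` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Sum per-tree leaf values for one row, restricted to output group bst_group,
// skipping dropped trees and scaling the rest by their DART weights.
double DartPredict(const std::vector<double>& leaf_values,
                   const std::vector<int>& tree_info,
                   const std::vector<double>& weight_drop,
                   const std::vector<std::size_t>& idx_drop,  // must be sorted
                   int bst_group, std::size_t tree_begin, std::size_t tree_end) {
  assert(std::is_sorted(idx_drop.begin(), idx_drop.end()));
  double psum = 0.0;
  for (std::size_t i = tree_begin; i < tree_end; ++i) {
    if (tree_info[i] != bst_group) continue;                                // other group
    if (std::binary_search(idx_drop.begin(), idx_drop.end(), i)) continue;  // dropped
    psum += weight_drop[i] * leaf_values[i];
  }
  return psum;
}
```

With leaf values {1, 2, 4, 8}, tree groups {0, 0, 1, 0}, weights {1, 0.5, 1, 1} and tree 3 dropped, the group-0 prediction over all four trees is 1 + 0.5 x 2 = 2; with no trees dropped it is 10.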

```cpp
// With refresh_leaf set, a leaf's value is reset to its node's
// base_weight scaled by the learning rate.
inline void Refresh(const TStats *gstats,
                    int nid, RegTree *p_tree) {
  RegTree &tree = *p_tree;  // the body below refers to the tree as `tree`
  if (tree[nid].is_leaf()) {
    if (param.refresh_leaf) {
      tree[nid].set_leaf(tree.stat(nid).base_weight * param.learning_rate);
    }
  }
}
```
