Demystifying Quantitative Research: A Step-by-Step Guide for Nurses


AI Summary

This article provides a comprehensive step-by-step guide to critiquing quantitative research, specifically tailored for nurses. It aims to demystify the research process and decode complex terminology, emphasizing the importance of critical appraisal in evidence-based practice. The authors, Michael Coughlan, Patricia Cronin, and Frances Ryan, discuss the significance of evaluating research to ensure the use of current best practices in patient care. The article differentiates between critiquing and criticism, highlighting the need for an objective evaluation of a study's strengths and limitations. It introduces credibility and integrity variables as key elements in the research process, providing a framework for nurses to assess the believability and robustness of quantitative studies. The guide covers essential aspects of research evaluation, including writing style, author qualifications, report titles, abstracts, purpose, logical consistency, literature reviews, theoretical frameworks, aims, sample selection, ethical considerations, and data analysis. The article encourages a systematic approach to evaluating each step of the research process, emphasizing the implications of the researcher's decisions on the study's overall strength and applicability to nursing practice. The authors provide a table with research questions to guide nurses in critiquing a quantitative research study.

Step-by-step guide to critiquing research. Part 1: quantitative research

Abstract

When caring for patients it is essential that nurses are using the current best practice. To determine what this is, nurses must be able to read research critically. But for many qualified and student nurses the terminology used in research can be difficult to understand, thus making critical reading even more daunting. It is imperative in nursing that care has its foundations in sound research and it is essential that all nurses have the ability to critically appraise research to identify what is best practice. This article is a step-by-step approach to critiquing quantitative research to help nurses demystify the process and decode the terminology.

Key words: Quantitative research • Review process • Research methodologies

For many qualified nurses and nursing students research is research, and it is often quite difficult to grasp what others are referring to when they discuss the limitations and/or strengths within a research study. Research texts and journals refer to critiquing the literature, critical analysis, reviewing the literature, evaluation and appraisal of the literature, which are in essence the same thing (Bassett and Bassett, 2003). Terminology in research can be confusing for the novice research reader where a term like ‘random’ refers to an organized manner of selecting items or participants, and the word ‘significance’ is applied to a degree of chance. Thus the aim of this article is to take a step-by-step approach to critiquing research in an attempt to help nurses demystify the process and decode the terminology.

When caring for patients it is essential that nurses are using the current best practice. To determine what this is nurses must be able to read research. The adage ‘All that glitters is not gold’ is also true in research. Not all research is of the same quality or of a high standard and therefore nurses should not take research at face value simply because it has been published (Cullum and Droogan, 1999; Polit and Beck, 2006). Critiquing is a systematic method of appraising the strengths and limitations of a piece of research in order to determine its credibility and/or its applicability to practice (Valente, 2003). Seeking only limitations in a study is criticism, and critiquing and criticism are not the same (Burns and Grove, 1997). A critique is an impersonal evaluation of the strengths and limitations of the research being reviewed and should not be seen as a disparagement of the researcher’s ability. Neither should it be regarded as a jousting match between the researcher and the reviewer. Burns and Grove (1999) call this an ‘intellectual critique’ in that it is not the creator but the creation that is being evaluated. The reviewer maintains objectivity throughout the critique. No personal views are expressed by the reviewer and the strengths and/or limitations of the study and the implications of these are highlighted with reference to research texts or journals. It is also important to remember that research works within the realms of probability where nothing is absolutely certain. It is therefore important to refer to the apparent strengths, limitations and findings of a piece of research (Burns and Grove, 1997). The use of personal pronouns is also avoided in order that an appearance of objectivity can be maintained.

Credibility and integrity

There are numerous tools available to help both novice a

advanced reviewers to critique research studies (Tan

2003). These tools generally ask questions that can help

reviewer to determine the degree to which the steps in t

research process were followed. However, some step

more important than others and very few tools acknowle

this. Ryan-Wenger(1992)suggeststhat questionsin a

critiquing tool can be subdivided in those that are u

for getting a feel for the study being presented which she

calls ‘credibility variables’ and those that are essenti

evaluating the research process called ‘integrity variable

Credibility variables concentrate on how believable

work appears and focus on the researcher’s qualification

ability to undertake and accurately present the stud

answers to these questions are important when criti

a piece of research as they can offer the reader an

into what to expectin the remainderof the study.

However, the reader should be aware that identified stre

and limitationswithin this sectionwill not necessarily

correspond with what will be found in the rest of the wor

Integrity questions, on the other hand, are interested in t

robustness of the research method, seeking to identify h

appropriately and accurately the researcher followed

steps in the research process. The answers to these ques

658 British Journal of Nursing, 2007, Vol 16, No 11

Michael Coughlan, Patricia Cronin and Frances Ryan are Lecturers,

School of Nursing and Midwifery, University of Dublin, Trinity

College, Dublin

Accepted for publication: March 2007

research. Part 1: quantitative r

Abstract

When caring for patients it is essential that nurses are using the

current best practice. To determine what this is, nurses must be able

to read research critically. But for many qualified and student nurses

the terminology used in research can be difficult to understand

thus making critical reading even more daunting. It is imperative

in nursing that care has its foundations in sound research and it is

essential that all nurses have the ability to critically appraise research

to identify what is best practice. This article is a step-by step-approach

to critiquing quantitative research to help nurses demystify the

process and decode the terminology.

Key words: Quantitative researchn Review processn Research

methodologies

For many qualifiednursesand nursingstudents

research is research, and it is often quite difficult

to grasp what others are referring to when they

discussthe limitationsand or strengthswithin

a researchstudy.Researchtextsand journalsrefer to

critiquing the literature, critical analysis, reviewing the

literature, evaluation and appraisal of the literature which

are in essence the same thing (Bassett and Bassett, 2003).

Terminology in research can be confusing for the novice

research reader where a term like ‘random’ refers to an

organized manner of selecting items or participants, and the

word ‘significance’ is applied to a degree of chance. Thus

the aim of this article is to take a step-by-step approach to

critiquing research in an attempt to help nurses demystify

the process and decode the terminology.

When caring for patients it is essential that nurses are

using the current best practice. To determine what this is

nurses must be able to read research. The adage ‘All that

glitters is not gold’ is also true in research. Not all research

is of the same quality or of a high standard and therefore

nurses should not simply take research at face value simply

because it has been published (Cullum and Droogan, 1999;

Polit and Beck, 2006). Critiquing is a systematic method of

Michael Coughlan, Patricia Cronin, Frances R

appraising the strengths and limitations of a piece of res

in order to determine its credibility and/or its applicabilit

to practice (Valente, 2003). Seeking only limitations

study is criticism and critiquing and criticism are not the

same (Burns and Grove, 1997). A critique is an imperson

evaluation of the strengths and limitations of the researc

being reviewed and should not be seen as a disparagem

of the researchers ability. Neither should it be regarded a

a jousting match between the researcher and the review

Burns and Grove (1999) call this an ‘intellectual criti

in that it is not the creator but the creation that is being

evaluated. The reviewer maintains objectivity through

the critique.No personalviews are expressedby the

reviewer and the strengths and/or limitations of the stud

and the implications of these are highlighted with referen

to research texts or journals. It is also important to reme

that research works within the realms of probability whe

nothing is absolutely certain. It is therefore importan

refer to the apparent strengths, limitations and findi

of a piece of research (Burns and Grove, 1997). The

of personal pronouns is also avoided in order that a

appearance of objectivity can be maintained.

Credibility and integrity

There are numerous tools available to help both novice a

advanced reviewers to critique research studies (Tan

2003). These tools generally ask questions that can help

reviewer to determine the degree to which the steps in t

research process were followed. However, some step

more important than others and very few tools acknowle

this. Ryan-Wenger(1992)suggeststhat questionsin a

critiquing tool can be subdivided in those that are u

for getting a feel for the study being presented which she

calls ‘credibility variables’ and those that are essenti

evaluating the research process called ‘integrity variable

Credibility variables concentrate on how believable

work appears and focus on the researcher’s qualification

ability to undertake and accurately present the stud

answers to these questions are important when criti

a piece of research as they can offer the reader an

into what to expectin the remainderof the study.

However, the reader should be aware that identified stre

and limitationswithin this sectionwill not necessarily

correspond with what will be found in the rest of the wor

Integrity questions, on the other hand, are interested in t

robustness of the research method, seeking to identify h

appropriately and accurately the researcher followed

steps in the research process. The answers to these ques

658 British Journal of Nursing, 2007, Vol 16, No 11

Michael Coughlan, Patricia Cronin and Frances Ryan are Lecturers,

School of Nursing and Midwifery, University of Dublin, Trinity

College, Dublin

Accepted for publication: March 2007

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Author(s)

The author(s’) qualifications and job title can be a useful

indicator into the researcher(s’) knowledge of the area

under investigation and ability to ask the appropriate

questions(Conkin Dale, 2005).Converselya research

study should be evaluated on its own merits and not

assumed to be valid and reliable simply based on the

author(s’) qualifications.

Report title

The title should be between 10 and 15 words long and

should clearly identify for the reader the purpose of the

study (Connell Meehan, 1999). Titles that are too long or

too short can be confusing or misleading (Parahoo, 2006).

Abstract

The abstract should provide a succinct overview of the

research and should include information regarding the

purpose of the study, method, sample size and selection,

will help to identify the trustworthiness of the study and its

applicability to nursing practice.

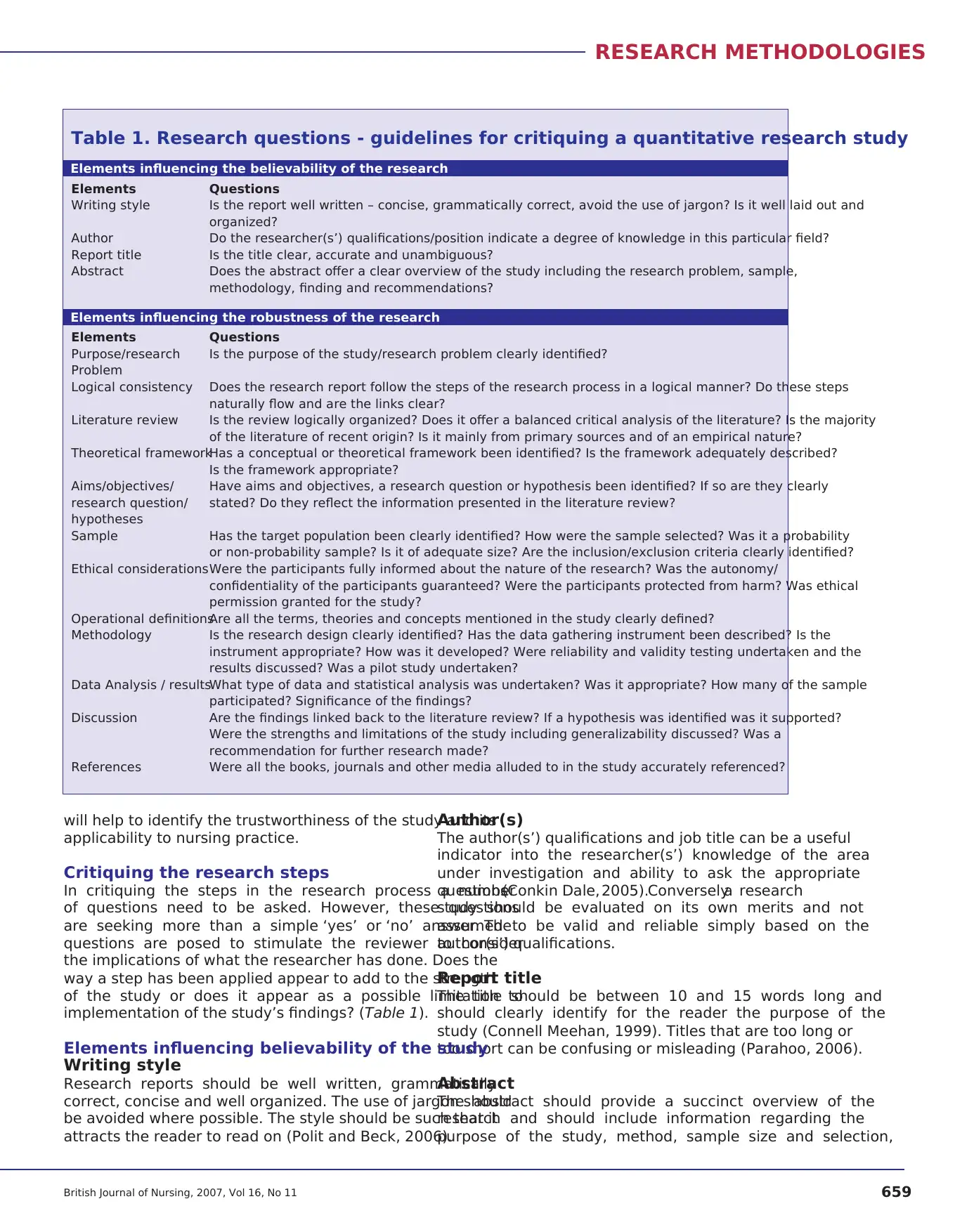

Critiquing the research steps

In critiquing the steps in the research process a number of questions need to be asked. However, these questions are seeking more than a simple ‘yes’ or ‘no’ answer. The questions are posed to stimulate the reviewer to consider the implications of what the researcher has done. Does the way a step has been applied appear to add to the strength of the study or does it appear as a possible limitation to implementation of the study’s findings (Table 1)?

Elements influencing believability of the study

Writing style

Research reports should be well written, grammatically correct, concise and well organized. The use of jargon should be avoided where possible. The style should be such that it attracts the reader to read on (Polit and Beck, 2006).

RESEARCH METHODOLOGIES

British Journal of Nursing, 2007, Vol 16, No 11 659

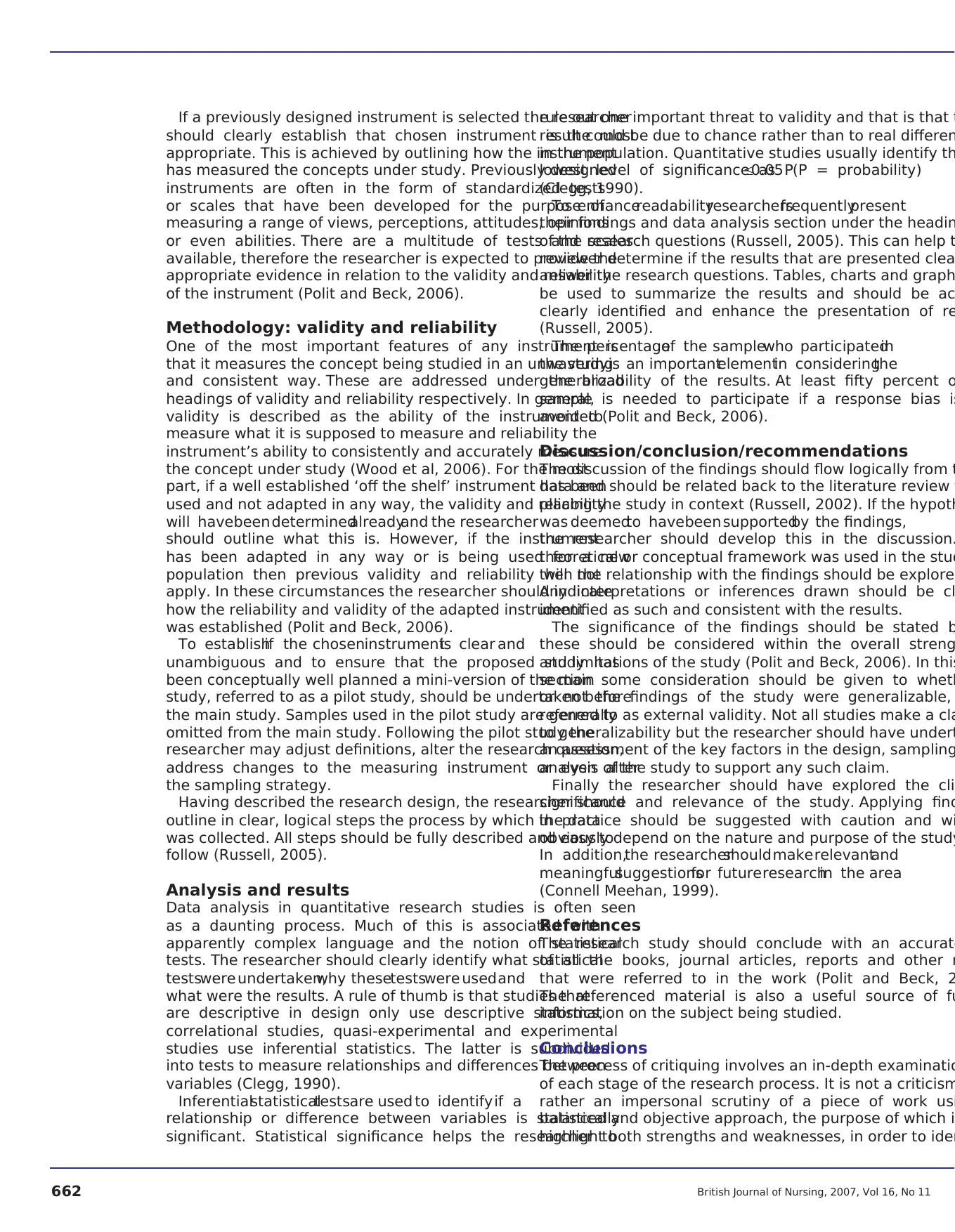

Table 1. Research questions - guidelines for critiquing a quantitative research study

Elements influencing the believability of the research

| Elements | Questions |
| --- | --- |
| Writing style | Is the report well written: concise, grammatically correct, avoiding the use of jargon? Is it well laid out and organized? |
| Author | Do the researcher(s’) qualifications/position indicate a degree of knowledge in this particular field? |
| Report title | Is the title clear, accurate and unambiguous? |
| Abstract | Does the abstract offer a clear overview of the study including the research problem, sample, methodology, findings and recommendations? |

Elements influencing the robustness of the research

| Elements | Questions |
| --- | --- |
| Purpose/research problem | Is the purpose of the study/research problem clearly identified? |
| Logical consistency | Does the research report follow the steps of the research process in a logical manner? Do these steps naturally flow and are the links clear? |
| Literature review | Is the review logically organized? Does it offer a balanced critical analysis of the literature? Is the majority of the literature of recent origin? Is it mainly from primary sources and of an empirical nature? |
| Theoretical framework | Has a conceptual or theoretical framework been identified? Is the framework adequately described? Is the framework appropriate? |
| Aims/objectives/research question/hypotheses | Have aims and objectives, a research question or hypothesis been identified? If so are they clearly stated? Do they reflect the information presented in the literature review? |
| Sample | Has the target population been clearly identified? How was the sample selected? Was it a probability or non-probability sample? Is it of adequate size? Are the inclusion/exclusion criteria clearly identified? |
| Ethical considerations | Were the participants fully informed about the nature of the research? Was the autonomy/confidentiality of the participants guaranteed? Were the participants protected from harm? Was ethical permission granted for the study? |
| Operational definitions | Are all the terms, theories and concepts mentioned in the study clearly defined? |
| Methodology | Is the research design clearly identified? Has the data gathering instrument been described? Is the instrument appropriate? How was it developed? Were reliability and validity testing undertaken and the results discussed? Was a pilot study undertaken? |
| Data analysis/results | What type of data and statistical analysis was undertaken? Was it appropriate? How many of the sample participated? Significance of the findings? |
| Discussion | Are the findings linked back to the literature review? If a hypothesis was identified was it supported? Were the strengths and limitations of the study including generalizability discussed? Was a recommendation for further research made? |
| References | Were all the books, journals and other media alluded to in the study accurately referenced? |

Author(s)

The author(s’) qualifications and job title can be a useful indicator of the researcher(s’) knowledge of the area under investigation and ability to ask the appropriate questions (Conkin Dale, 2005). Conversely, a research study should be evaluated on its own merits and not assumed to be valid and reliable simply based on the author(s’) qualifications.

Report title

The title should be between 10 and 15 words long and should clearly identify for the reader the purpose of the study (Connell Meehan, 1999). Titles that are too long or too short can be confusing or misleading (Parahoo, 2006).

Abstract

The abstract should provide a succinct overview of the research and should include information regarding the purpose of the study, method, sample size and selection, the main findings and conclusions and recommendations (Conkin Dale, 2005). From the abstract the reader should be able to determine if the study is of interest and whether or not to continue reading (Parahoo, 2006).

Elements influencing robustness

Purpose of the study/research problem

A research problem is often first presented to the reader in the introduction to the study (Bassett and Bassett, 2003). Depending on what is to be investigated some authors will refer to it as the purpose of the study. In either case the statement should at least broadly indicate to the reader what is to be studied (Polit and Beck, 2006). Broad problems are often multi-faceted and will need to become narrower and more focused before they can be researched. In this the literature review can play a major role (Parahoo, 2006).

Logical consistency

A research study needs to follow the steps in the process in a logical manner. There should also be a clear link between the steps beginning with the purpose of the study and following through the literature review, the theoretical framework, the research question, the methodology section, the data analysis, and the findings (Ryan-Wenger, 1992).

Literature review

The primary purpose of the literature review is to define or develop the research question while also identifying an appropriate method of data collection (Burns and Grove, 1997). It should also help to identify any gaps in the literature relating to the problem and to suggest how those gaps might be filled. The literature review should demonstrate an appropriate depth and breadth of reading around the topic in question. The majority of studies included should be of recent origin and ideally less than five years old. However, there may be exceptions to this, for example, in areas where there is a lack of research, or where a seminal or all-important piece of work is still relevant to current practice. It is important also that the review should include some historical as well as contemporary material in order to put the subject being studied into context. The depth of coverage will depend on the nature of the subject; for example, for a subject with a vast range of literature the review will need to concentrate on a very specific area (Carnwell, 1997). Another important consideration is the type and source of literature presented. Primary empirical data from the original source is more favourable than a secondary source or anecdotal information where the author relies on personal evidence or opinion that is not founded on research.

A good review usually begins with an introduction which identifies the key words used to conduct the search and information about which databases were used. The themes that emerged from the literature should then be presented and discussed (Carnwell, 1997). In presenting previous work it is important that the data is reviewed critically, highlighting both the strengths and limitations of the study. It should also be compared and contrasted with the findings of other studies (Burns and Grove, 1997).
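The recency and source-type questions raised in this section lend themselves to a simple tally. The following is a minimal sketch only, assuming the reviewer has already listed each cited source with its publication year and whether it is a primary empirical source. The `Source` record, the `recency_summary` function and the example entries are hypothetical and are not taken from the article; the five-year threshold follows the guideline stated above.

```python
from dataclasses import dataclass


@dataclass
class Source:
    """One entry from a study's reference list (hypothetical structure)."""
    label: str
    year: int
    primary: bool  # True if it is a primary empirical source


def recency_summary(sources, review_year, max_age=5):
    """Summarise how recent and how primary a list of sources is.

    A source counts as recent when it is less than `max_age` years old
    at the time the reviewed study was published (`review_year`).
    """
    if not sources:
        raise ValueError("no sources to summarise")
    recent = [s for s in sources if review_year - s.year < max_age]
    primary = [s for s in sources if s.primary]
    return {
        "recent_share": len(recent) / len(sources),
        "primary_share": len(primary) / len(sources),
        "majority_recent": len(recent) > len(sources) / 2,
    }


# Invented example entries, for illustration only.
example_sources = [
    Source("Study A", 2006, True),
    Source("Study B", 2005, True),
    Source("Textbook C", 1997, False),
    Source("Study D", 2004, True),
]
print(recency_summary(example_sources, review_year=2007))
```

A low share of recent sources is not automatically a limitation. As noted above, older seminal work and areas with little research are legitimate exceptions, so the tally is a prompt for judgement rather than a verdict.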

Theoretical framework

Following the identification of the research problem and the review of the literature the researcher should present the theoretical framework (Bassett and Bassett, 2003). Theoretical frameworks are a concept that novice and experienced researchers find confusing. It is initially important to note that not all research studies use a defined theoretical framework (Robson, 2002). A theoretical framework can be a conceptual model that is used as a guide for the study (Conkin Dale, 2005) or themes from the literature that are conceptually mapped and used to set boundaries for the research (Miles and Huberman, 1994). A sound framework also identifies the various concepts being studied and the relationship between those concepts (Burns and Grove, 1997). Such relationships should have been identified in the literature. The research study should then build on this theory through empirical observation. Some theoretical frameworks may include a hypothesis. Theoretical frameworks tend to be better developed in experimental and quasi-experimental studies and often poorly developed or non-existent in descriptive studies (Burns and Grove, 1999). The theoretical framework should be clearly identified and explained to the reader.

Aims and objectives/research question/research hypothesis

The purpose of the aims and objectives of a study, the research question and the research hypothesis is to form a link between the initially stated purpose of the study or research problem and how the study will be undertaken (Burns and Grove, 1999). They should be clearly stated and be congruent with the data presented in the literature review. The use of these items is dependent on the type of research being performed. Some descriptive studies may not identify any of these items but simply refer to the purpose of the study or the research problem; others will include either aims and objectives or research questions (Burns and Grove, 1999). Correlational designs study the relationships that exist between two or more variables and accordingly use either a research question or hypothesis. Experimental and quasi-experimental studies should clearly state a hypothesis identifying the variables to be manipulated, the population that is being studied and the predicted outcome (Burns and Grove, 1999).

Sample and sample size

The degree to which a sample reflects the population it was drawn from is known as representativeness and in quantitative research this is a decisive factor in determining the adequacy of a study (Polit and Beck, 2006). In order to select a sample that is likely to be representative and thus identify findings that are probably generalizable to the target population a probability sample should be used (Parahoo, 2006). The size of the sample is also important in quantitative research as small samples are at risk of being overly representative of small subgroups within the target population. For example, if, in a sample of general nurses, it was noticed that 40% of the respondents were males, then males would appear to be over represented in the sample, thereby creating a sampling error. The risk of sampling …
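The effect of sample size on representativeness can be illustrated with a short simulation. This is a sketch under stated assumptions rather than a method described in the article: the population of 10,000 nurses, the 10% male share and the function names are all hypothetical. Simple random samples (a probability sample, drawn without replacement) of two sizes are taken repeatedly, and the spread of the male share across samples is compared with the usual standard error of a proportion, sqrt(p(1 - p)/n).

```python
import math
import random
import statistics


def standard_error(p, n):
    """Approximate standard error of a sample proportion for a simple random sample."""
    return math.sqrt(p * (1 - p) / n)


def simulate_sample_shares(population, n, trials, seed=0):
    """Draw `trials` simple random samples of size `n` and return each sample's subgroup share."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    shares = []
    for _ in range(trials):
        sample = rng.sample(population, n)  # sampling without replacement
        shares.append(sum(sample) / n)
    return shares


# Hypothetical target population: 10,000 nurses, 10% male (1 = male, 0 = female).
# The 10% figure is an assumption for illustration, not a value from the article.
population = [1] * 1000 + [0] * 9000

for n in (20, 500):
    shares = simulate_sample_shares(population, n, trials=1000)
    print(
        f"n={n}: lowest share={min(shares):.2f}, highest share={max(shares):.2f}, "
        f"observed spread={statistics.pstdev(shares):.3f}, "
        f"theoretical SE={standard_error(0.10, n):.3f}"
    )
```

Under these assumptions, the small samples show a much wider range of male shares than the large ones, which is the sense in which small samples are at risk of over-representing a subgroup.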

(Conkin Dale, 2005). From the abstract the reader should

be able to determine if the study is of interest and whether

or not to continue reading (Parahoo, 2006).

Elements influencing robustness

Purpose of the study/research problem

A research problem is often first presented to the reader in

the introduction to the study (Bassett and Bassett, 2003).

Depending on what is to be investigated some authors will

refer to it as the purpose of the study. In either case the

statement should at least broadly indicate to the reader what

is to be studied (Polit and Beck, 2006). Broad problems are

often multi-faceted and will need to become narrower and

more focused before they can be researched. In this the

literature review can play a major role (Parahoo, 2006).

Logical consistency

A research study needs to follow the steps in the process in a

logical manner. There should also be a clear link between the

steps beginning with the purpose of the study and following

through the literature review, the theoretical framework, the

research question, the methodology section, the data analysis,

and the findings (Ryan-Wenger, 1992).

Literature review

The primary purpose of the literature review is to define

or develop the research question while also identifying

an appropriatemethodof data collection(Burns and

Grove, 1997). It should also help to identify any gaps in

the literature relating to the problem and to suggest how

those gaps might be filled. The literature review should

demonstrate an appropriate depth and breadth of reading

around the topic in question. The majority of studies

included should be of recent origin and ideally less than

five years old. However, there may be exceptions to this,

for example, in areas where there is a lack of research, or a

seminal or all-important piece of work that is still relevant to

current practice. It is important also that the review should

include some historical as well as contemporary material

in order to put the subject being studied into context. The

depth of coverage will depend on the nature of the subject,

for example, for a subject with a vast range of literature then

the review will need to concentrate on a very specific area

(Carnwell, 1997). Another important consideration is the

type and source of literature presented. Primary empirical

data from the original source is more favourable than a

secondarysourceor anecdotalinformationwhere the

author relies on personal evidence or opinion that is not

founded on research.

A good review usually begins with an introduction which

identifies the key words used to conduct the search and

information about which databases were used. The themes

that emerged from the literature should then be presented

and discussed(Carnwell,1997).In presentingprevious

work it is important that the data is reviewed critically,

highlighting both the strengths and limitations of the study.

It should also be compared and contrasted with the findings

of other studies (Burns and Grove, 1997).

Theoretical framework

Following the identificationof the researchproblem

and the review of the literature the researcher shou

present the theoretical framework (Bassett and Bass

2003). Theoretical frameworks are a concept that no

and experienced researchers find confusing. It is init

important to note that not all research studies use a defi

theoreticalframework(Robson, 2002).A theoretical

framework can be a conceptual model that is used

guide for the study (Conkin Dale, 2005) or themes from

the literature that are conceptually mapped and used to

boundaries for the research (Miles and Huberman, 1994)

A sound framework also identifies the various conce

being studied and the relationship between those concep

(Burns and Grove, 1997). Such relationships should h

been identified in the literature. The research study shou

then build on this theory through empirical observat

Some theoretical frameworks may include a hypothe

Theoretical frameworks tend to be better developed

experimental and quasi-experimental studies and ofte

poorly developed or non-existent in descriptive studi

(Burns and Grove, 1999). The theoretical framework sho

be clearly identified and explained to the reader.

Aims and objectives/research question/research hypothesis

The purpose of the aims and objectives of a study, the research
question and the research hypothesis is to form a link between
the initially stated purpose of the study or research problem
and how the study will be undertaken (Burns and Grove,
1999). They should be clearly stated and be congruent with
the data presented in the literature review. The use of these
items is dependent on the type of research being performed.
Some descriptive studies may not identify any of these items
but simply refer to the purpose of the study or the research
problem; others will include either aims and objectives or
research questions (Burns and Grove, 1999). Correlational
designs study the relationships that exist between two or
more variables and accordingly use either a research question
or hypothesis. Experimental and quasi-experimental studies
should clearly state a hypothesis identifying the variables to
be manipulated, the population that is being studied and the
predicted outcome (Burns and Grove, 1999).

Sample and sample size

The degree to which a sample reflects the population it
was drawn from is known as representativeness and in
quantitative research this is a decisive factor in determining
the adequacy of a study (Polit and Beck, 2006). In order
to select a sample that is likely to be representative and
thus identify findings that are probably generalizable to
the target population a probability sample should be used
(Parahoo, 2006). The size of the sample is also important in
quantitative research as small samples are at risk of being
overly representative of small subgroups within the target
population. For example, if, in a sample of general nurses, it
was noticed that 40% of the respondents were males, then
males would appear to be over-represented in the sample,
thereby creating a sampling error.

660 British Journal of Nursing, 2007, Vol 16, No 11

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

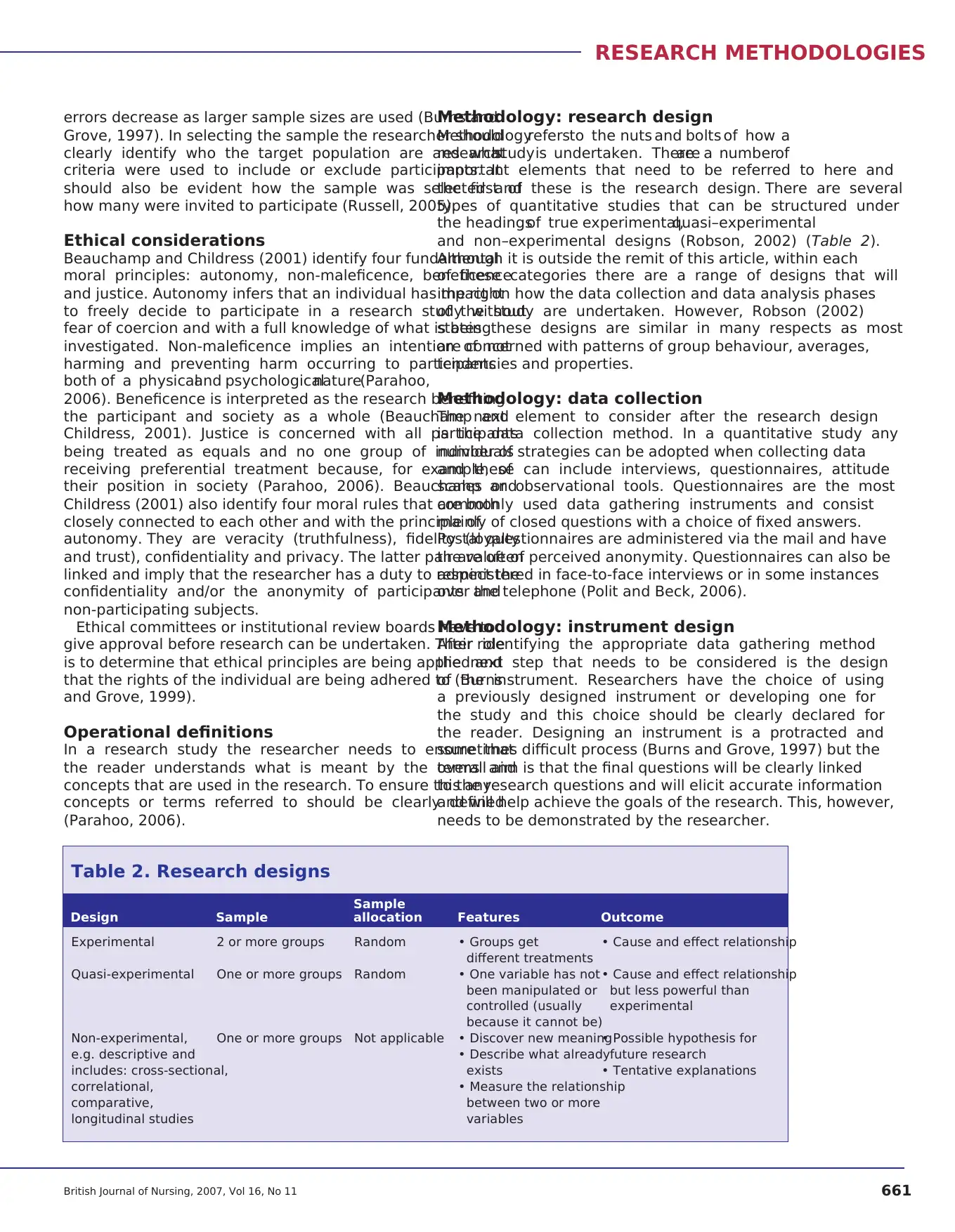

Methodology: research design

Methodology refers to the nuts and bolts of how a
research study is undertaken. There are a number of
important elements that need to be referred to here and
the first of these is the research design. There are several
types of quantitative studies that can be structured under
the headings of true experimental, quasi-experimental
and non-experimental designs (Robson, 2002) (Table 2).
Although it is outside the remit of this article, within each
of these categories there are a range of designs that will
impact on how the data collection and data analysis phases
of the study are undertaken. However, Robson (2002)
states these designs are similar in many respects as most
are concerned with patterns of group behaviour, averages,
tendencies and properties.

Methodology: data collection

The next element to consider after the research design

is the data collection method. In a quantitative study any

number of strategies can be adopted when collecting data

and these can include interviews, questionnaires, attitude

scales or observational tools. Questionnaires are the most

commonly used data gathering instruments and consist

mainly of closed questions with a choice of fixed answers.

Postal questionnaires are administered via the mail and have

the value of perceived anonymity. Questionnaires can also be

administered in face-to-face interviews or in some instances

over the telephone (Polit and Beck, 2006).

Methodology: instrument design

After identifying the appropriate data gathering method

the next step that needs to be considered is the design

of the instrument. Researchers have the choice of using

a previously designed instrument or developing one for

the study and this choice should be clearly declared for

the reader. Designing an instrument is a protracted and

sometimes difficult process (Burns and Grove, 1997) but the

overall aim is that the final questions will be clearly linked

to the research questions and will elicit accurate information

and will help achieve the goals of the research. This, however,

needs to be demonstrated by the researcher.


The risk of sampling errors decreases as larger sample sizes
are used (Burns and Grove, 1997). In selecting the sample the
researcher should clearly identify who the target population
are and what criteria were used to include or exclude
participants. It should also be evident how the sample was
selected and how many were invited to participate (Russell, 2005).
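
The point that sampling error shrinks as the sample grows can be illustrated with a short calculation. The Python sketch below is not taken from the article. It assumes a hypothetical population of 1,000 nurses of whom 10% are male (the article gives no population figure), draws simple random samples of increasing size, and reports the standard error of a sample proportion using the textbook formula sqrt(p(1 - p)/n). The function names are illustrative only.

```python
import math
import random


def proportion_standard_error(p: float, n: int) -> float:
    """Standard error of a sample proportion for a simple random sample."""
    return math.sqrt(p * (1 - p) / n)


def simple_random_sample(population, n, seed=None):
    """Draw n members without replacement; every member has an equal chance."""
    rng = random.Random(seed)
    return rng.sample(population, n)


# Hypothetical population (an assumption, not data from the article):
# 1,000 nurses, 10% of whom are male.
population = ["male"] * 100 + ["female"] * 900

for n in (25, 100, 400):
    sample = simple_random_sample(population, n, seed=1)
    observed = sample.count("male") / n
    se = proportion_standard_error(0.10, n)
    print(f"n={n:>3}  observed male share={observed:.2f}  standard error={se:.3f}")
```

Under these assumptions the standard error halves each time the sample size is quadrupled, which is one way to see why a small sample can over-represent a subgroup purely by chance.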

Ethical considerations

Beauchamp and Childress (2001) identify four fundamental

moral principles: autonomy, non-maleficence, beneficence

and justice. Autonomy implies that an individual has the right

to freely decide to participate in a research study without

fear of coercion and with a full knowledge of what is being

investigated. Non-maleficence implies an intention of not

harming and preventing harm occurring to participants

both of a physical and psychological nature (Parahoo,

2006). Beneficence is interpreted as the research benefiting

the participant and society as a whole (Beauchamp and

Childress, 2001). Justice is concerned with all participants

being treated as equals and no one group of individuals

receiving preferential treatment because, for example, of

their position in society (Parahoo, 2006). Beauchamp and

Childress (2001) also identify four moral rules that are both

closely connected to each other and with the principle of

autonomy. They are veracity (truthfulness), fidelity (loyalty

and trust), confidentiality and privacy. The latter pair are often

linked and imply that the researcher has a duty to respect the

confidentiality and/or the anonymity of participants and

non-participating subjects.

Ethical committees or institutional review boards have to

give approval before research can be undertaken. Their role

is to determine that ethical principles are being applied and

that the rights of the individual are being adhered to (Burns

and Grove, 1999).

Operational definitions

In a research study the researcher needs to ensure that

the reader understands what is meant by the terms and

concepts that are used in the research. To ensure this any

concepts or terms referred to should be clearly defined

(Parahoo, 2006).

Table 2. Research designs

| Design | Sample | Sample allocation | Features | Outcome |
|---|---|---|---|---|
| Experimental | 2 or more groups | Random | Groups get different treatments | Cause and effect relationship |
| Quasi-experimental | One or more groups | Random | One variable has not been manipulated or controlled (usually because it cannot be) | Cause and effect relationship but less powerful than experimental |
| Non-experimental, e.g. descriptive and includes: cross-sectional, correlational, comparative, longitudinal studies | One or more groups | Not applicable | Discover new meaning; describe what already exists; measure the relationship between two or more variables | Possible hypothesis for future research; tentative explanations |


If a previously designed instrument is selected the researcher

should clearly establish that the chosen instrument is the most

appropriate. This is achieved by outlining how the instrument

has measured the concepts under study. Previously designed

instruments are often in the form of standardized tests

or scales that have been developed for the purpose of

measuring a range of views, perceptions, attitudes, opinions

or even abilities. There are a multitude of tests and scales

available, therefore the researcher is expected to provide the

appropriate evidence in relation to the validity and reliability

of the instrument (Polit and Beck, 2006).

Methodology: validity and reliability

One of the most important features of any instrument is

that it measures the concept being studied in an unwavering

and consistent way. These are addressed under the broad

headings of validity and reliability respectively. In general,

validity is described as the ability of the instrument to

measure what it is supposed to measure and reliability the

instrument’s ability to consistently and accurately measure

the concept under study (Wood et al, 2006). For the most

part, if a well established ‘off the shelf’ instrument has been

used and not adapted in any way, the validity and reliability

will have been determined already and the researcher

should outline what this is. However, if the instrument

has been adapted in any way or is being used for a new

population then previous validity and reliability will not

apply. In these circumstances the researcher should indicate

how the reliability and validity of the adapted instrument

was established (Polit and Beck, 2006).
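
The article does not name a particular reliability statistic. As an illustration only, one widely used index of internal consistency for multi-item scales is Cronbach's alpha, and the Python sketch below shows how it could be computed. The scores are hypothetical and the function name is an assumption made for this example.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a multi-item scale.

    item_scores holds one list per questionnaire item; each list contains
    the scores of the same respondents in the same order.
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("at least two items are needed")
    # Total score per respondent across all items.
    totals = [sum(scores) for scores in zip(*item_scores)]
    sum_item_variances = sum(variance(scores) for scores in item_scores)
    return (k / (k - 1)) * (1 - sum_item_variances / variance(totals))


# Hypothetical data: 3 items answered by 5 respondents on a 1-5 scale.
items = [
    [4, 3, 5, 2, 4],
    [5, 3, 4, 2, 4],
    [4, 2, 5, 3, 5],
]
print(f"Cronbach's alpha = {cronbach_alpha(items):.2f}")
```

Values closer to 1 suggest that the items vary together. A reviewer would still expect the researcher to report how reliability was established for the population actually studied.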

To establish if the chosen instrument is clear and

unambiguous and to ensure that the proposed study has

been conceptually well planned a mini-version of the main

study, referred to as a pilot study, should be undertaken before

the main study. Samples used in the pilot study are generally

omitted from the main study. Following the pilot study the

researcher may adjust definitions, alter the research question,

address changes to the measuring instrument or even alter

the sampling strategy.

Having described the research design, the researcher should

outline in clear, logical steps the process by which the data

was collected. All steps should be fully described and easy to

follow (Russell, 2005).

Analysis and results

Data analysis in quantitative research studies is often seen

as a daunting process. Much of this is associated with

apparently complex language and the notion of statistical

tests. The researcher should clearly identify what statistical

tests were undertaken, why these tests were used and
what the results were. A rule of thumb is that studies that
are descriptive in design only use descriptive statistics,
whereas correlational, quasi-experimental and experimental
studies use inferential statistics. The latter is subdivided

into tests to measure relationships and differences between

variables (Clegg, 1990).
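
To make the term "descriptive statistics" concrete, the following Python sketch summarizes a small set of scores. The data are hypothetical (pain scores invented for this example) and the choice of summary measures is an assumption rather than a list prescribed by the article.

```python
from statistics import mean, median, stdev


def describe(values):
    """Return common descriptive statistics for a list of numbers."""
    return {
        "n": len(values),
        "mean": mean(values),
        "median": median(values),
        "sd": stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
    }


# Hypothetical data: pain scores (0-10) reported by eight patients.
pain_scores = [2, 3, 3, 4, 5, 5, 6, 8]
summary = describe(pain_scores)
for name, value in summary.items():
    print(f"{name}: {value}")
```

These figures describe only the sample at hand. They say nothing about whether a pattern would hold in the wider population, which is the job of inferential tests.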

Inferential statistical tests are used to identify if a
relationship or difference between variables is statistically
significant. Statistical significance helps the researcher to
rule out one important threat to validity and that is that the
result could be due to chance rather than to real differences
in the population. Quantitative studies usually identify the
lowest level of significance as P≤0.05 (P = probability)
(Clegg, 1990).
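
The idea of a P value can be sketched with an exact permutation test, which asks how often a difference at least as large as the observed one would arise if group labels were assigned purely by chance. This technique is chosen here for illustration and is not one the article names. The scores and the function name are hypothetical.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Two-sided exact permutation test for a difference in group means."""
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))
    total = 0
    as_extreme = 0
    # Enumerate every way of splitting the pooled scores into two groups.
    for indices in combinations(range(len(pooled)), n_a):
        chosen = set(indices)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # Small tolerance guards against floating-point rounding.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            as_extreme += 1
    return as_extreme / total


# Hypothetical outcome scores for two small groups.
intervention = [1, 2, 3, 4, 5]
control = [6, 7, 8, 9, 10]
p = permutation_p_value(intervention, control)
print(f"P = {p:.4f}; significant at the 0.05 level: {p <= 0.05}")
```

Enumerating every split is only practical for very small groups. Published studies normally rely on standard tests from statistical software, and the reviewer's task is to check that the chosen test is named and justified.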

To enhance readability researchers frequently present
their findings and data analysis section under the headings
of the research questions (Russell, 2005). This can help the
reviewer determine if the results that are presented clearly
answer the research questions. Tables, charts and graphs may
be used to summarize the results and should be accurate,
clearly identified and enhance the presentation of results
(Russell, 2005).

The percentage of the sample who participated in
the study is an important element in considering the
generalizability of the results. At least fifty percent of the
sample is needed to participate if a response bias is to be
avoided (Polit and Beck, 2006).
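
A reviewer can check this threshold directly when a report states how many people were invited and how many took part. The Python sketch below is a minimal illustration. The function names and the example figures are hypothetical, and the 0.50 cut-off simply restates the fifty percent figure quoted above.

```python
MIN_RESPONSE_RATE = 0.50  # fifty percent threshold quoted in the text


def response_rate(invited: int, participated: int) -> float:
    """Proportion of invited people who actually took part."""
    if invited <= 0:
        raise ValueError("invited must be a positive number")
    if not 0 <= participated <= invited:
        raise ValueError("participated must be between 0 and invited")
    return participated / invited


def meets_threshold(invited: int, participated: int,
                    threshold: float = MIN_RESPONSE_RATE) -> bool:
    """True when the response rate reaches the chosen threshold."""
    return response_rate(invited, participated) >= threshold


# Hypothetical study: 200 questionnaires sent, 90 returned.
rate = response_rate(200, 90)
print(f"Response rate: {rate:.0%}; meets threshold: {meets_threshold(200, 90)}")
```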

Discussion/conclusion/recommendations

The discussion of the findings should flow logically from the
data and should be related back to the literature review thus
placing the study in context (Russell, 2005). If the hypothesis
was deemed to have been supported by the findings,
the researcher should develop this in the discussion. If a
theoretical or conceptual framework was used in the study
then the relationship with the findings should be explored.
Any interpretations or inferences drawn should be clearly
identified as such and consistent with the results.

The significance of the findings should be stated but
these should be considered within the overall strengths
and limitations of the study (Polit and Beck, 2006). In this
section some consideration should be given to whether
or not the findings of the study were generalizable, also
referred to as external validity. Not all studies make a claim
to generalizability but the researcher should have undertaken
an assessment of the key factors in the design, sampling and
analysis of the study to support any such claim.

Finally the researcher should have explored the clinical
significance and relevance of the study. Applying findings
in practice should be suggested with caution and will
obviously depend on the nature and purpose of the study.
In addition, the researcher should make relevant and
meaningful suggestions for future research in the area
(Connell Meehan, 1999).

References

The research study should conclude with an accurate list
of all the books, journal articles, reports and other media
that were referred to in the work (Polit and Beck, 2006).
The referenced material is also a useful source of further
information on the subject being studied.

Conclusions

The process of critiquing involves an in-depth examination
of each stage of the research process. It is not a criticism but
rather an impersonal scrutiny of a piece of work using a
balanced and objective approach, the purpose of which is to
highlight both strengths and weaknesses, in order to identify


whether a piece of research is trustworthy and unbiased. As

nursing practice is becoming increasingly evidence
based, it is important that care has its foundations in sound

research. It is therefore important that all nurses have the

ability to critically appraise research in order to identify what

is best practice. BJN

Bassett C, Bassett J (2003) Reading and critiquing research. Br J Perioper Nurs 13(4): 162–4
Beauchamp T, Childress J (2001) Principles of Biomedical Ethics. 5th edn. Oxford University Press, Oxford
Burns N, Grove S (1997) The Practice of Nursing Research: Conduct, Critique and Utilization. 3rd edn. WB Saunders Company, Philadelphia
Burns N, Grove S (1999) Understanding Nursing Research. 2nd edn. WB Saunders Company, Philadelphia
Carnwell R (1997) Critiquing research. Nurs Pract 8(12): 16–21
Clegg F (1990) Simple Statistics: A Course Book for the Social Sciences. 2nd edn. Cambridge University Press, Cambridge
Conkin Dale J (2005) Critiquing research for use in practice. J Pediatr Health Care 19: 183–6
Connell Meehan T (1999) The research critique. In: Treacy P, Hyde A, eds. Nursing Research and Design. UCD Press, Dublin: 57–74
Cullum N, Droogan J (1999) Using research and the role of systematic reviews of the literature. In: Mulhall A, Le May A, eds. Nursing Research: Dissemination and Implementation. Churchill Livingstone, Edinburgh: 109–23
Miles M, Huberman A (1994) Qualitative Data Analysis. 2nd edn. Sage, Thousand Oaks, Ca
Parahoo K (2006) Nursing Research: Principles, Process and Issues. 2nd edn. Palgrave Macmillan, Houndmills Basingstoke
Polit D, Beck C (2006) Essentials of Nursing Care: Methods, Appraisal and Utilization. 6th edn. Lippincott Williams and Wilkins, Philadelphia
Robson C (2002) Real World Research. 2nd edn. Blackwell Publishing, Oxford
Russell C (2005) Evaluating quantitative research reports. Nephrol Nurs J 32(1): 61–4
Ryan-Wenger N (1992) Guidelines for critique of a research report. Heart Lung 21(4): 394–401
Tanner J (2003) Reading and critiquing research. Br J Perioper Nurs 13(4): 162–4
Valente S (2003) Research dissemination and utilization: Improving care at the bedside. J Nurs Care Quality 18(2): 114–21
Wood MJ, Ross-Kerr JC, Brink PJ (2006) Basic Steps in Planning Nursing Research: From Question to Proposal. 6th edn. Jones and Bartlett, Sudbury

KEY POINTS

■ Many qualified and student nurses have difficulty

understanding the concepts and terminology associated

with research and research critique.

■ The ability to critically read research is essential if the

profession is to achieve and maintain its goal to be

evidence based.

■ A critique of a piece of research is not a criticism of

the work, but an impersonal review to highlight the

strengths and limitations of the study.

■ It is important that all nurses have the ability to critically

appraise research in order to identify what is best

practice.
