Optimizing Support Vector Machines for Large-Scale Data

Added on 2020/05/16

AI Summary

Support Vector Machines (SVMs) are a fundamental tool in machine learning for classification and regression tasks. As datasets grow larger, the need for more efficient SVM training methods becomes crucial to maintain computational feasibility and accuracy. This paper examines various advancements in SVM training algorithms, focusing on techniques that enhance efficiency and performance. Key areas of exploration include linear programming approaches, decomposition methods, kernel optimization, and adaptive penalization strategies. The research highlights contributions from several studies, such as Rivas-Perea et al.'s algorithm for large-scale SVM regression using linear programming and decomposition, and Zhao & Sun's recursive reduced least squares support vector regression. Additionally, the paper discusses practical implementations of these algorithms in real-world applications, providing insights into their effectiveness and potential areas for further research. By analyzing these innovative techniques, this study aims to contribute to the ongoing development of more efficient SVM training methods that can handle large-scale data effectively.

TECHNIQUES TO IMPROVE EFFICIENCY OF LARGE SCALE SUPPORT VECTOR MACHINES

TECHNIQUES TO IMPROVE EFFICIENCY OF LARGE SCALE SUPPORT

VECTOR MACHINES

Nishanth Yalam

Harrisburg University

Abstract

The proposed research addresses a company's need to improve the efficiency of its large-scale support vector machines (LSVM) by reducing the amount of training data and shortening the testing phase. The research includes the development of an algorithm that improves LSVM efficiency, together with a training and testing methodology that selects minimal datasets for training and testing the LSVM. The scope of the research is limited to training samples, the LSVM algorithm, and the testing phase; it does not include LSVM implementation methods.

After analyzing the problem and the LSVM's needs, a methodology based on variable decomposition and constraint decomposition was chosen to address the training problem. This methodology avoids the need for high-end computational resources and also reduces training time. To improve generalization, a method is adopted that reduces the number of support vectors by optimizing the kernel parameters. A vector correlation principle and a greedy algorithm are also included in the algorithm to further reduce the support vectors involved in the testing phase.

A detailed list of research tasks and major milestones is included in this proposal. The research will require an available data generation system to obtain the potential training and testing data. Completing the proposed research will require the latest version of the programming software OCTAVE for developing and implementing the necessary algorithms.

The deliverables of this research will include an improved SVM algorithm with better generalization capability. On completion of the research, the LSVM algorithm will have been fully tested and installed on the client server.

1. Introduction

Machine learning is a branch of artificial intelligence that concentrates on developing and designing methods that let systems become more intelligent and independent by learning from data. For example, machine learning can be used to train a system to distinguish between spam and non-spam messages. The core of machine learning deals with representation and generalization. Representation is about analyzing the data instances and extracting the properties that are useful for learning. Generalization is the ability of the system to perform the desired task well on unseen data instances. Put another way, machine learning is about building a model and training it with examples drawn from an unknown probability distribution so that it can make accurate predictions on new instances. One approach to machine learning is the support vector machine (SVM). Support vector machines are supervised learning models, with associated learning algorithms, that analyze data and recognize patterns; they are used for classification and regression analysis.

Traditional SVM training algorithms such as chunking and SMO (sequential minimal optimization) scale superlinearly with the number of examples, which becomes infeasible for large training sets. Because dataset sizes have grown steadily over the past few years, training algorithms that can handle large datasets are needed. Large-scale datasets are defined here as datasets that cannot be stored in a modern computer's memory. Large-scale training algorithms use one of the following methods:

1. Variants of primal stochastic gradient descent (SGD)

2. Quadratic programming in the dual

SGD generalizes well even though it is a poor optimizer. Popular algorithms that use SGD are PEGASOS and FOLOS.
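As a rough illustration of the first family, the sketch below runs a Pegasos-style stochastic sub-gradient update on the primal objective of a linear SVM. It is a minimal sketch under stated assumptions, not the algorithm proposed in this research: the function names, the regularization value lam, the iteration count, and the toy data are all hypothetical, and the bias term and the optional projection step are left out.

```python
import random


def pegasos_train(X, y, lam=0.01, n_iters=2000, seed=0):
    """Pegasos-style SGD on the primal of a linear SVM (no bias term).

    X is a list of feature lists, y a list of labels in {-1, +1}.
    lam, n_iters and seed are illustrative defaults, not tuned values.
    """
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    for t in range(1, n_iters + 1):
        i = rng.randrange(len(X))      # one random example per step
        eta = 1.0 / (lam * t)          # decreasing step size 1 / (lambda * t)
        # Margin is evaluated with the weights from before this step.
        margin = y[i] * sum(wj * xj for wj, xj in zip(w, X[i]))
        # Shrink step: contribution of the L2 regularizer.
        w = [(1.0 - eta * lam) * wj for wj in w]
        if margin < 1.0:
            # Hinge loss is active: move towards the misclassified
            # or low-margin example.
            w = [wj + eta * y[i] * xj for wj, xj in zip(w, X[i])]
    return w


def predict(w, x):
    """Return +1 or -1 according to the sign of the linear score."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) >= 0 else -1


# Hypothetical, linearly separable toy data.
X = [[2.0, 1.0], [1.5, 2.0], [3.0, 2.5],
     [-2.0, -1.0], [-1.0, -2.5], [-3.0, -1.5]]
y = [1, 1, 1, -1, -1, -1]

w = pegasos_train(X, y)
predictions = [predict(w, x) for x in X]
```

Each step touches a single example, so the memory needed for one update does not grow with the size of the training set, which is why this family is attractive for data that does not fit in memory.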

In general, the LSVM model is used for linear classification, but with the help of the kernel trick it can also be applied to non-linear classification. LSVM classifiers are used in credit risk evaluation, text and hypertext categorization, image classification, and the medical sciences. For example, SVMs have been used to classify proteins, with up to 90% of the compounds classified correctly, and to recognize handwritten characters. In the past few years, LSVM has become a very prominent machine learning approach, drawing the attention of many researchers and leading companies to invest heavily in developing better algorithms.

1.2 Problem Description

A large training set poses a challenge for the computational complexity of a learning algorithm: it demands more sophisticated computing equipment, which increases the training cost drastically. Because large-scale support vector machines (LSVM) involve a huge training cost, small companies have to be very careful when deciding whether to use LSVM. Small companies' lack of access to high-performance computing equipment slows down the training process, thereby affecting the service time to customers. Many researchers working on LSVM try to find an optimal way to handle large datasets by applying different techniques that make training faster and more cost-effective.

The training of LSVM algorithms requires enormous memory space and considerable computational time because of the enormous amount of training data and the non-linear programming problem involved. In general, most LSVMs use a random selection of training samples, which results in long training times before the prominent properties of the training set are identified. The main drawback of random selection is the significant randomness it introduces into the training sets. On the other hand, randomness in the sample data is important for improving the generalization capability of the algorithm, so that it can be applied to a wide variety of data. Training an algorithm with random training sets requires high-performance computing equipment, thereby increasing the training cost as well as the total research cost. Apart from the computational complexity involved, it also requires a large amount of training time, which slows services to clients (Cheng & Shih, 2007).

After training, the LSVM algorithm produces a set of support vectors that are used to handle new data. The complexity of an LSVM depends mainly on the number of support vectors. In this view, the more support vectors there are, the better the SVM generalizes. On the other hand, processing time increases, because more support vectors slow down the decision speed, and accuracy is also affected. In some cases, companies have to use more than one LSVM algorithm to improve accuracy and generalization, which increases operational cost. Generalization and accuracy are two competing goals that need to be balanced with great care, without compromising on either of them. One way to address this issue is to generate the support vectors according to the data to be analyzed. This necessitates developing a better LSVM algorithm (Zhan & Shen, 2005).
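The link between decision speed and the number of support vectors can be seen in the standard kernel decision function, which sums one kernel evaluation per support vector. The sketch below is only an illustration under stated assumptions: the RBF kernel, the gamma value, the two support vectors, their coefficients, and the bias are hypothetical and do not come from a trained model.

```python
import math


def rbf_kernel(a, b, gamma=0.5):
    """Gaussian (RBF) kernel; gamma is an illustrative value."""
    squared_distance = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * squared_distance)


def decision_value(x, support_vectors, dual_coefs, bias, kernel=rbf_kernel):
    """Evaluate f(x) = sum_i (alpha_i * y_i) * K(sv_i, x) + b.

    The loop performs exactly one kernel evaluation per support vector,
    so the cost of classifying one sample grows linearly with their number.
    """
    total = 0.0
    for sv, coef in zip(support_vectors, dual_coefs):
        total += coef * kernel(sv, x)
    return total + bias


# Hypothetical model: one support vector per class.
support_vectors = [[1.0, 1.0], [-1.0, -1.0]]
dual_coefs = [1.0, -1.0]   # alpha_i * y_i for each support vector
bias = 0.0

value = decision_value([0.9, 1.2], support_vectors, dual_coefs, bias)
label = 1 if value >= 0 else -1
```

Halving the number of support vectors roughly halves the work per prediction, which is the motivation for the reduction techniques reviewed later in this proposal.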

Testing LSVMs has become a challenge in the last few years because of large testing datasets and large numbers of support vectors. The testing process is similar to training, because it involves selecting sample sets and validating the LSVM. When samples are initially collected for the training sets, one third is set aside for testing. The results of the LSVM on the testing data are then validated to assess its generalization and accuracy.
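The one-third holdout described above can be sketched as follows. This is a minimal sketch, assuming a simple random shuffle; the function name, the fixed seed, and the integer stand-in samples are hypothetical, and the proposal does not specify how the split is drawn.

```python
import random


def holdout_split(samples, test_fraction=1 / 3, seed=0):
    """Shuffle the samples and set aside test_fraction of them for testing."""
    rng = random.Random(seed)
    indices = list(range(len(samples)))
    rng.shuffle(indices)
    n_test = round(len(samples) * test_fraction)
    test = [samples[i] for i in indices[:n_test]]
    train = [samples[i] for i in indices[n_test:]]
    return train, test


# Thirty stand-in samples, represented here by integers.
train, test = holdout_split(list(range(30)))
```

Fixing the seed keeps the split reproducible, so different versions of the algorithm can be compared on the same training and testing data.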

Considering all these factors, a cost-effective and faster LSVM requires considerable improvement in the training, algorithm, and testing phases. The main purpose of this research is to improve the training, algorithm, and testing phases simultaneously.

1.3 Research Objectives and Scope

The primary goal of this research is to provide better and faster services to clients while minimizing the company's cost of training and maintaining large-scale support vector machines. This goal will be met through the following objectives:

1. Improve the generalization capability of the learning algorithms.

2. Improve algorithm training by using optimization techniques in the selection of training datasets.

The scope of the research includes training samples, the LSVM algorithm, and the testing phase. Algorithms related to other SVMs are not included in this paper. LSVM implementation methods are also not discussed in this paper.

1.4 Research Benefits and Deliverables

The proposed research has several benefits for companies that use SVMs, some of which are listed below:

1. An improved LSVM learning algorithm yields more accurate generalization capability, enabling the algorithm to be applied to a wide variety of datasets.

2. Faster services to clients, achieved by reducing training time through proper optimization techniques.

3. Reduced development and operational cost of LSVM.

The deliverables from this research are listed below:

1. An improved SVM algorithm with better generalization capability.

2. Installation of the SVM on the client server.

3. A detailed report that documents the research as well as the results.

2. Literature Review

The importance of support vector machines has been widely recognized in recent times. Although the roots of SVM concepts go back a long way, the limited electronic technology of the time restricted SVM implementation. Current advances in electronics support the implementation of SVMs. However, industry still strives to improve the SVM process to make services faster and cheaper.

The areas identified for improving the overall performance of SVMs are organized into the following broad categories:

1. Training Support Vector Machine

2. Improving efficiency of algorithm

3. Improving testing phase

Table 1 shows a summary of the literature collected on the three categories as part of this research, and the collected sources are discussed briefly in the following sections.

2.1 Training Support Vector Machine

Training an SVM on large datasets is a challenging and time-consuming task. Effective and quick training of the SVM is essential for profitability as well as effective performance. Rivas-Perea, Cota-Ruiz, & Rosiles (2013) propose an algorithm to train a large-scale Linear Programming Support Vector Machine (LP-SVM). This algorithm uses techniques such as variable decomposition and constraint decomposition, which result in a number of sub-LP-SVR structures. The resulting LP problems are solved sequentially. Cheng & Shih (2007) use active queries to detect the most useful samples for training the SVM rather than selecting samples randomly. The samples are selected according to weights calculated from the confidence factor and their distance to the hyperplane. Zhou, Zhang, & Jiao (2002) propose an effective method to train linear programming and non-linear programming SVMs by relaxing the constraints in order to reduce the dimension of the problem. This speeds up the training process with minimal generalization error. Zhan & Shen (2005) explain a training method to improve the efficiency of the SVM. Initially, support vectors are produced by training the SVM with all the training samples, and then the support vectors that make the hypersurface highly convoluted are excluded from the training set.

2.2 Improving efficiency of algorithm

An efficient algorithm is very important for better SVM performance, since the algorithm defines the character and capabilities of the SVM. Ayat, Cheriet, & Suen (2005) propose an algorithm that reduces the generalization error of the SVM by reducing the number of support vectors. Their paper presents techniques for reducing the number of support vectors by optimizing the kernel parameters. Adankon & Cheriet (2007) propose a method for approximating the gradient of the empirical error, combined with incremental learning, to reduce the resources required in terms of both processing time and storage space. Yajima (2005) discusses transforming the quadratic programming problems commonly used in SVMs into linear programming problems. That paper also explains linear programming formulations for multicategory classification problems. Zhan & Shen (2006) explain the inclusion of an adaptive penalty term in the objective function to suppress the effect of outliers, thereby simplifying the separation hypersurface and increasing the classification efficiency of the SVM. Zhao & Sun (2009) propose a recursive reduced least squares support vector regression. The main objective of the proposed algorithm is to reduce the number of support vectors without relaxing any of the constraints generated by the whole training set. This is achieved by generating support vectors from the data that contribute most to the target function.

2.3 Improving testing phase

Unfortunately, SVMs are currently considerably slower in the test phase because of the number of support vectors. Li, Jiao, & Hao (2007) use an adaptive algorithm named feature vector selection (FVS) to select support vectors based on the vector correlation principle and a greedy algorithm. This reduces the number of support vectors involved in the testing phase.
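To give a feel for how a correlation criterion and a greedy pass can thin out a set of vectors, the sketch below keeps a vector only if it is not too strongly correlated, in the kernel-induced feature space, with any vector already kept. This is a simplified illustration and not the FVS algorithm of Li, Jiao, & Hao (2007): the RBF kernel, the gamma value, the correlation threshold max_corr, the function names, and the candidate vectors are all hypothetical.

```python
import math


def rbf_kernel(a, b, gamma=0.5):
    """Gaussian (RBF) kernel; gamma is an illustrative value."""
    return math.exp(-gamma * sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def kernel_correlation(a, b, kernel):
    """Cosine of the angle between the feature-space images of a and b."""
    return kernel(a, b) / math.sqrt(kernel(a, a) * kernel(b, b))


def greedy_select(vectors, kernel=rbf_kernel, max_corr=0.9):
    """Single greedy pass: keep a vector only if its correlation with
    every vector kept so far stays below max_corr."""
    selected = []
    for v in vectors:
        if all(kernel_correlation(v, s, kernel) < max_corr for s in selected):
            selected.append(v)
    return selected


# Hypothetical candidates containing two near-duplicate pairs.
candidates = [[0.0, 0.0], [0.1, 0.0], [3.0, 3.0], [3.0, 3.1], [-2.0, 1.0]]
kept = greedy_select(candidates)
```

In this toy run the near-duplicates [0.1, 0.0] and [3.0, 3.1] are dropped, so fewer kernel evaluations are needed per test sample. A real reduction method would also have to adjust the coefficients of the remaining vectors, which this sketch does not attempt.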

2.4 Research Justification

Many techniques and methods are proposed in the reviewed papers in Table 1 to increase the efficiency of the SVM. The authors improve the SVM by addressing issues separately in each of the identified areas that determine SVM performance. None of the reviewed authors attempts to improve all the areas simultaneously to obtain a better SVM than the existing ones. The main objective of the proposed research is to develop an algorithm that simultaneously improves the training, algorithm efficiency, and testing phase of the SVM, in order to reduce the cost involved and to provide better client service.

3. Research Methodology

A research plan is essential to guide both research execution and research control. The main elements of the research plan are defining the research methodology for the research problem, scheduling tasks and milestones for the research, and identifying the resources based on the research methodology. The following subsections describe the elements of the research plan.

3.1 Research procedure

To achieve the research objectives, an appropriate methodology must be identified for each research problem. The training problem in large-scale support vector machines (LSVM) can be addressed by using algorithms that implement variable decomposition and constraint decomposition (Rivas-Perea, Cota-Ruiz, & Rosiles, 2013). Variable decomposition and constraint decomposition result in sub-LP-SVM structures that are solved sequentially. Implementing this algorithm avoids the need for high-end computational resources and also reduces training time.

TECHNIQUES TO IMPROVE EFFICIENCY OF LARGE SCALE SUPPORT VECTOR MACHINES

selection (FVS) to select support vectors based on the vector correlation principle and greedy

algorithm. This reduces the number of support vectors involved in testing phase.

2.4 Research Justification

Many techniques and methods are proposed in the reviewed papers in table 1 is used to

increase the efficiency of the SVM. The authors made their effort to improve SVM by

addressing issues separately in each of the identified potential areas that can determine the

performance of SVM. There is no effort made by an author to improve all the areas

simultaneously to have a better SVM than the existing ones. The main objective of the

proposed research is to develop an algorithm to simultaneously improve training, efficiency

of algorithm and testing phase of SVM in order to reduce the cost involved and for better

client service.

3. Research Methodology

Research plan is essential in order to guide both research execution and research control. The

main elements of research plan are to define research methodology for the research problem,

to schedule tasks and milestones for the research, and identifying the resources based on the

research methodology. The following sub-sections describe the elements of research plan.

3.1 Research Procedure

To achieve the research objectives, an appropriate methodology must be identified for each research problem. The training problem in large scale support vector machines (LSVM) can be addressed with algorithms that implement variable decomposition and constraint decomposition (Rivas-Perea, Cota-Ruiz, & Rosiles, 2013). Variable decomposition and constraint decomposition result in sub-LP-SVM structures that are solved sequentially. Implementing this algorithm avoids the need for high-end computational resources and also reduces training time.
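For orientation, a generic 1-norm (linear programming) SVM for classification can be written as follows. This is a textbook form given here as an assumption for illustration; it is not necessarily the exact formulation used by Rivas-Perea et al. (2013), and the symbols (training pairs $(x_i, y_i)$, kernel $k$, coefficients $\alpha_j^{+}, \alpha_j^{-}$, bias $b$, slacks $\xi_i$, penalty $C$) are introduced only for this sketch.

```latex
\begin{aligned}
\min_{\alpha^{+},\,\alpha^{-},\,b,\,\xi}\quad
  & \sum_{j=1}^{N} \left(\alpha_j^{+} + \alpha_j^{-}\right) + C \sum_{i=1}^{N} \xi_i \\
\text{subject to}\quad
  & y_i \left( \sum_{j=1}^{N} \left(\alpha_j^{+} - \alpha_j^{-}\right) k(x_i, x_j) + b \right) \ge 1 - \xi_i,
    \qquad i = 1, \dots, N, \\
  & \alpha_j^{+} \ge 0,\quad \alpha_j^{-} \ge 0,\quad \xi_i \ge 0 .
\end{aligned}
```

In this form, variable decomposition roughly corresponds to optimizing over only a working subset of the coefficients $\alpha_j$ at a time, and constraint decomposition to enforcing only a subset of the $N$ margin constraints at a time. Either restriction yields a smaller linear program, which is why the sub-problems can be solved sequentially on modest hardware.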

The objective of improving generalization can be achieved by reducing the number of support vectors. The number of support vectors can be reduced by optimizing the kernel parameters (Ayat, Cheriet, & Suen, 2005). In this methodology, support vectors are generated only after analysing the data sets, unlike in conventional algorithms, which increases the accuracy of the algorithm. A vector correlation principle and a greedy algorithm are also included in the algorithm to further reduce the number of support vectors involved in the testing phase. Generalization can also be improved by techniques such as an adaptive penalty term in the objective function or recursive reduced least squares support vector regression, but reducing the number of support vectors with the proposed methodology has the advantage of being more cost-effective and quicker.
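The idea of pruning support vectors by correlation with a greedy pass can be sketched as follows. This is a simplified illustration written in Python for readability, not the feature vector selection algorithm of the cited work: the function names, the RBF kernel, the `gamma` value, and the `threshold` are all assumptions made for this example. Because the RBF kernel satisfies k(x, x) = 1, the kernel value between two vectors acts as their normalized correlation in feature space.

```python
import math

def rbf_kernel(a, b, gamma=0.5):
    """Gaussian (RBF) kernel; k(a, a) == 1, so values behave like correlations."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * sq_dist)

def greedy_select(vectors, threshold=0.9, gamma=0.5):
    """Greedy pass: keep a vector only if its kernel correlation with every
    vector kept so far stays below `threshold` (hypothetical cut-off)."""
    kept = []
    for v in vectors:
        if all(rbf_kernel(v, s, gamma) < threshold for s in kept):
            kept.append(v)
    return kept

# Toy "support vectors": two near-duplicate pairs and one isolated point.
support_vectors = [(0.0, 0.0), (0.05, 0.0), (1.5, 1.5), (1.5, 1.55), (3.0, 0.0)]
reduced = greedy_select(support_vectors)
print(len(support_vectors), "->", len(reduced), "vectors kept:", reduced)
```

Fewer retained vectors mean fewer kernel evaluations per prediction, which is where the testing-phase saving would come from. A real implementation would also need to re-fit the coefficients of the retained vectors, which this sketch omits.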

3.2 Research Measures

The proposed research will require the following resources to be completed fully and in a timely manner.

1. The latest version of the programming software OCTAVE.

2. A personal computer with Windows 7 and Microsoft Office 2010 productivity tools.

The research will also require an available data generation system to obtain the potential training and testing data.

3.3 Research Analysis

The analysis is mainly quantitative. It involves exploratory data analysis, visualizations that show the performance trends of the different versions of the algorithm, and measurement of key metrics such as training and testing data preparation time. The performance of every improvement technique is measured against a standard data set.
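A measurement harness of the kind described above might look like the following sketch. It is written in Python purely for illustration (the proposal itself plans to use Octave), and `timed`, `train`, `predict`, and the tiny data sets are hypothetical stand-ins for one version of the algorithm and a standard data set.

```python
import time

def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start

# Hypothetical stand-in for one algorithm version: a 1-D threshold
# classifier placed midway between the two class means.
def train(samples):
    pos = [x for x, y in samples if y == 1]
    neg = [x for x, y in samples if y == -1]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def predict(threshold, xs):
    return [1 if x > threshold else -1 for x in xs]

# Made-up data standing in for the standard training and testing sets.
train_set = [(0.1, -1), (0.4, -1), (1.6, 1), (1.9, 1)]
test_set = [(0.2, -1), (1.8, 1), (0.3, -1), (1.2, 1)]

model, train_seconds = timed(train, train_set)
labels, test_seconds = timed(predict, model, [x for x, _ in test_set])
accuracy = sum(p == y for p, (_, y) in zip(labels, test_set)) / len(test_set)

print(f"train {train_seconds:.6f}s  test {test_seconds:.6f}s  accuracy {accuracy:.2f}")
```

Running the same harness on every version of the algorithm with the same data set gives directly comparable training time, testing time, and accuracy figures, which can then be plotted to show the performance trends.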

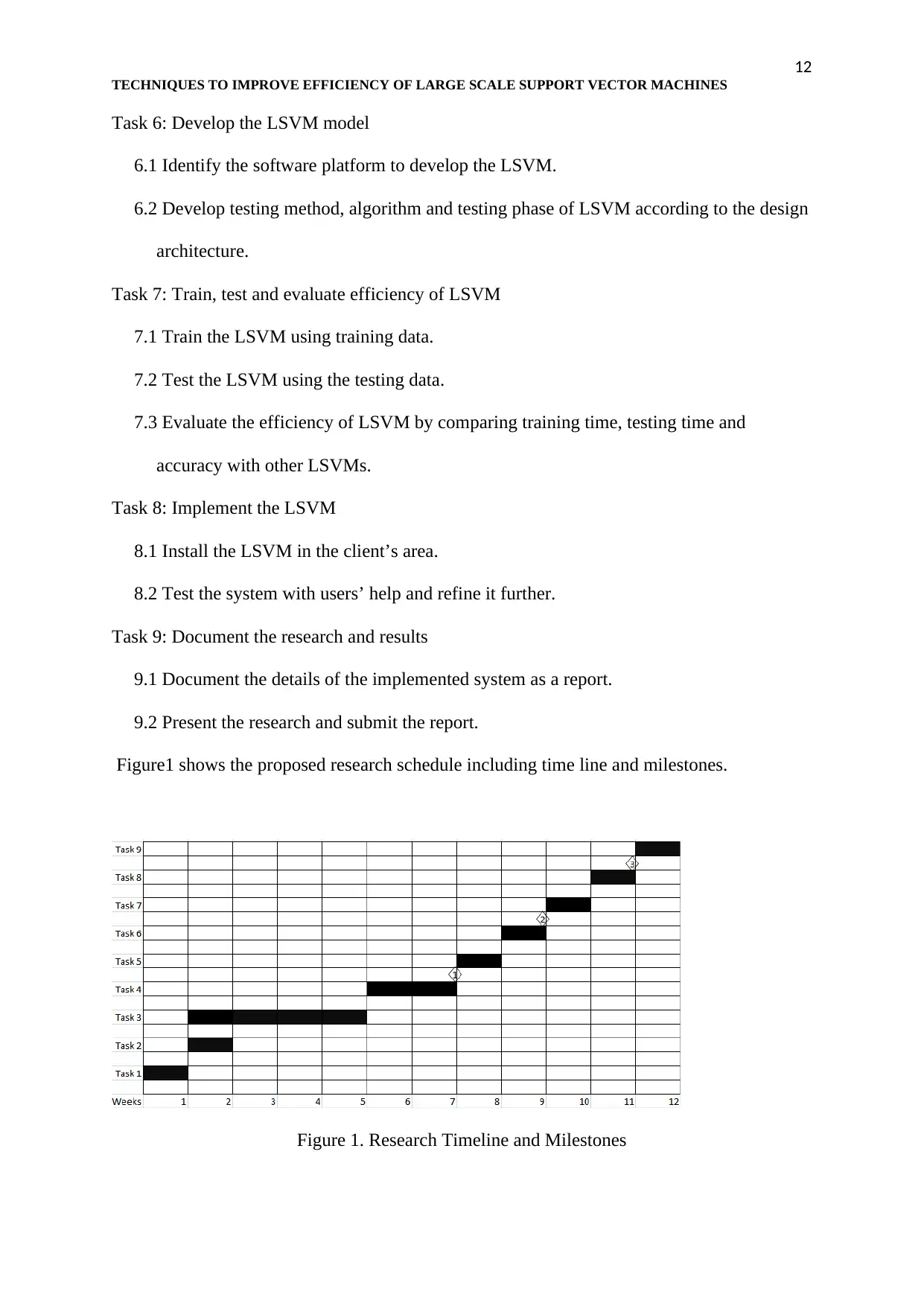

3.4 Research Schedule

The tasks that will be pursued to implement the proposed methodology are listed below.

Some tasks may proceed in parallel and some sequentially.

Task 1: Analyze research needs and define requirements

1.1 Conduct a needs analysis and identify Support Vector Machine (SVM) efficiency needs.

1.2 Define research objectives and scope.

1.3 Define research requirements.

Task 2: Plan for the research

2.1 Develop a detailed research plan and establish milestones.

2.2 Identify resources needed and obtain the resources.

Task 3: Review literature related to SVM efficiency

3.1 Identify literature sources related to SVM efficiency.

3.2 Collect relevant literature and analyze it.

3.3 Identify information from the literature applicable to improve SVM efficiency.

Task 4: Collect and analyze current SVM training and testing data.

4.1 Collect sample data that are used to train and test LSVM.

4.2 Analyze the sample data to identify the vital and necessary information required to develop training and testing methods.

Task 5: Design the LSVM training method, algorithm and testing phase

5.1 Design a training method of the system.

5.2 Design an algorithm of the system.

5.3 Design a testing method of the system.

5.4 Develop a preliminary design of the LSVM.

5.5 Validate the training and algorithm design of the LSVM.

Task 6: Develop the LSVM model

6.1 Identify the software platform to develop the LSVM.

6.2 Develop the training method, algorithm and testing phase of the LSVM according to the design architecture.

Task 7: Train, test and evaluate efficiency of LSVM

7.1 Train the LSVM using training data.

7.2 Test the LSVM using the testing data.

7.3 Evaluate the efficiency of the LSVM by comparing its training time, testing time and accuracy with those of other LSVMs.

Task 8: Implement the LSVM

8.1 Install the LSVM in the client’s area.

8.2 Test the system with users’ help and refine it further.

Task 9: Document the research and results

9.1 Document the details of the implemented system as a report.

9.2 Present the research and submit the report.

Figure 1 shows the proposed research schedule, including the timeline and milestones.

Figure 1. Research Timeline and Milestones
