TETC Research: Multimethod Approach in Teacher Education, Iowa State

Added on 2022/09/09


Report

AI Summary

This article discusses the Teacher Educator Technology Competencies (TETCs) research project, which aimed to define essential technology knowledge, skills, and attitudes for teacher educators. The study employed a highly collaborative, multimethod approach to gather input from various stakeholders. The research team utilized crowdsourcing, Delphi, and public comment methods to build consensus and refine the TETCs. The crowdsourcing method gathered literature on existing technology competencies. The Delphi method then distilled expert opinions. Finally, a public comment period allowed for further feedback and refinement. The article emphasizes the importance of continuous examination and improvement in teacher preparation programs, especially regarding technology integration. The authors encourage others to adopt similar collaborative research processes to promote change and build consensus in the field of teacher education, highlighting the need for teacher educators to model effective technology use and support teacher candidates in developing their technology integration skills.

Schmidt-Crawford, D. A., Foulger, T. S., Graziano, K. J., & Slykhuis, D. A. (2019). Research methods for the people, by the people, of the people: Using a highly collaborative, multimethod approach to promote change. Contemporary Issues in Technology and Teacher Education, 19(2), 240-255.


Research Methods for the People, by the People, of the People: Using a Highly Collaborative, Multimethod Approach to Promote Change

Denise A. Schmidt-Crawford

Iowa State University

Teresa S. Foulger

Arizona State University

Kevin J. Graziano

Nevada State College

David A. Slykhuis

University of Northern Colorado

This article highlights the highly collaborative, multimethod research approach

used to develop the Teacher Educator Technology Competencies (TETCs): a

specific list of knowledge, skills, and attitudes, developed with input from many

teacher educators in the field, to help guide the professional development of

teacher educators who strive to be more competent in the integration of

technology. The purpose of this article is to describe and critique the sequence of

three different collaborative research approaches (crowdsourcing, Delphi, and

public comment) used by the TETC research team to gather critical opinions and

input from a variety of stakeholders. Researchers who desire large-scale adoption

of their research outcomes may consider the multimethod approach described in

this article to be useful.

Contemporary Issues in Technology and Teacher Education, 19(2)


The theory and practice of preparing teacher candidates to teach with technology is

inconsistent at best and ineffective at worst (Angeli & Valanides, 2009; Ertmer &

Ottenbreit-Leftwich, 2010; Tondeur, Roblin, van Braak, Fisser, & Voogt, 2013). Some

researchers have noted that the quantity and quality of technology experiences that teacher

candidates encounter during their preparation programs influence their adoption of

technology (Agyei & Voogt, 2011; Tondeur et al., 2012), while others have identified a gap

between what teacher candidates are taught in preparation courses and how PK–12

teachers are actually using technology in classrooms (Ottenbreit-Leftwich, Glazewski,

Newby, & Ertmer, 2010; Tondeur et al., 2012).

To address this gap, the ways teacher candidates are being prepared to integrate technology

within the context of their preparation programs must be continually examined. Those who

are preparing teacher candidates — teacher educators — must begin to examine and reflect

on their own practices to determine whether they are, indeed, designing and modeling

instructional opportunities that are preparing teacher candidates to use technology

effectively in PK–12 classrooms.

The U.S. Department of Education (2017) has highlighted this concern, as well, and has

called for teacher certification programs to devise methods that address a technology

integration curriculum in a program-deep, program-wide manner. The challenge, then,

becomes determining what technology knowledge and skills all teacher educators would

need in order to design high-quality technology experiences for teacher candidates in their

courses.

With a goal of building consensus in the field of teacher education, our research team

embarked on an 18-month journey to bring focus and intentionality to efforts that prepare

teacher candidates to use technology for teaching and learning. This research process

solicited ideas from national and international experts on technology competencies that all

teacher educators should use; the resulting competencies were presented to the field for further

comment and refinement, all while the process was guided by an expert review panel.

The Teacher Educator Technology Competencies (TETCs) were developed using a unique

consensus-building and highly collaborative research methodology. Specific results from

this study are described in detail in Foulger, Graziano, Schmidt-Crawford, and Slykhuis

(2017; see also http://site.aace.org/tetc/). The purpose of this article is to focus on and

provide more detail around the three distinct collaborative research approaches

(crowdsourcing, Delphi, and public comment) used to develop the TETCs.

The development of the TETCs was motivated by a call from the 2017 National Education

Technology Plan authored by the U.S. Department of Education (2017), Office of

Educational Technology, which recommended that teacher preparation programs “develop

a common set of technology competency expectations for university professors and

candidates exiting teacher preparation programs for teaching in technologically enabled

schools and postsecondary education institutions” (p. 40).

The 2017 National Education Technology Plan purposefully shifted the idea of technology

integration from a PK–12 focus of the prior plan to one that included commitment from

every educational level, PK–20 (U.S. Department of Education, 2017). Specifically, the plan

called for teacher preparation institutions to assure their graduates know that “effective

use of technology is not an optional add-on or a skill that [they] can simply ... pick up once

they get into the classroom” (p. 32).

If all teacher preparation programs, in the United States and around the world, are charged

with the need to prepare teacher candidates to use technology in powerful ways, then all

teacher educators who are responsible for preparing these candidates must establish a

curriculum for teaching with technology, serve as role models for using technology in

teaching, and provide support to teacher candidates for developing their ability to teach

with technology (Borthwick & Hansen, 2017; Goktas, Yildirim & Yildirim, 2009; Tondeur

et al., 2012).

The technological pedagogical content knowledge framework (or technology, pedagogy,

and content knowledge [TPACK]; Mishra & Koehler, 2006) has been used extensively

across teacher education to guide and inform teacher preparation programs and to

measure teacher candidates’ learning outcomes (Mouza, 2016). Although this conceptual

framework identifies seven knowledge constructs teachers need to integrate technology

into instruction effectively, it does not offer specific solutions for developing TPACK among

teacher candidates (Mouza, 2016; Niess, 2012). Thus, ascertaining and defining the role all

teacher educators are expected to play in the process of preparing teacher candidates to

teach with technology is often difficult.

To address this challenge, four teacher education faculty members with educational

technology expertise from different teacher preparation programs across the United States

used a multimethod research approach to identify a set of technology competencies for

teacher educators in hopes of promoting and starting a paradigm shift in teacher education

on the ways teacher candidates are prepared to use technology. The result was an 18-

month, process-oriented approach that involved national and international experts in the

field providing input on the development of a set of TETCs (Foulger et al., 2017).

The goal of this article is to focus on and describe the research project’s multimethod

approach (Morse, 2003), which emphasized a highly collaborative and participatory set of

processes used to build consensus. By sharing our research process in more detail, we hope

to encourage others to consider applying similar collaborative and participatory research

processes in their own work.

Collectively, this article documents the methodological decisions made by the research

team in order to answer the call to develop a common set of technology competencies

specific for teacher educators (U.S. Department of Education, 2017). Teacher educators are

those individuals who “provide instruction or who give guidance and support to student

teachers [teacher candidates], and who thus render a substantial contribution to the

development of students into competent teachers” (Koster, Brekelmans, Korthagen, &

Wubbels, 2005, p. 157). Research decisions throughout the process were also framed and

guided by taking steps to include existing research to guide competency content, involve

educational technology experts who work in teacher preparation, and address varied

stakeholder needs.

The research team designed the project using a series of three highly collaborative research

methods for developing the TETCs. First, a crowdsourcing method was used to gather

literature on existing technology competencies specific to teacher educators. After an initial

list of technology competencies was extracted from the crowdsourced literature, a Delphi

method was used to elicit, distill, and determine the opinions of a panel of experts (Nworie,

2011).

Following six rounds of Delphi input and feedback from educational technology experts, a

list of 12 TETCs with related criteria was developed that represented the knowledge, skills,

and attitudes all teacher educators need in order to prepare teacher candidates who enter

PK–12 classrooms ready to integrate technology to support their teaching and student

learning (Foulger et al., 2017). Last, the TETCs were presented to the field at conferences

and a public comment period was used to gather additional feedback related to suitability,

allowing more teacher educators additional opportunity to critically appraise the TETCs

(Gopalakrishnan & Udayshankar, 2014).

Collectively, these research methods were carefully constructed, highly collaborative, and

contributed to building participant consensus throughout the entire 18-month research

process. The next sections of this article will discuss specific details that describe the

implementation of the multimethod research approach used for this project.

Implementing a Multimethod Research Approach

The TETCs project was intentionally designed to incorporate a multimethod approach that

fostered a high degree of collaboration among stakeholders during multiple points of data

collection and analysis. Because the overarching goal was to identify technology

competencies for all teacher educators, a multimethod research process was implemented

and included multiple opportunities for stakeholders’ input and feedback throughout the

project. As Morse (2003) noted, “Multiple methods are used in a research program when

a series of projects are interrelated within a broad topic and designed to solve an overall

research problem” (p. 196). A multimethod design can include separate projects that are

conducted sequentially in order to inform the research study as a comprehensive whole

(Morse, 2003).

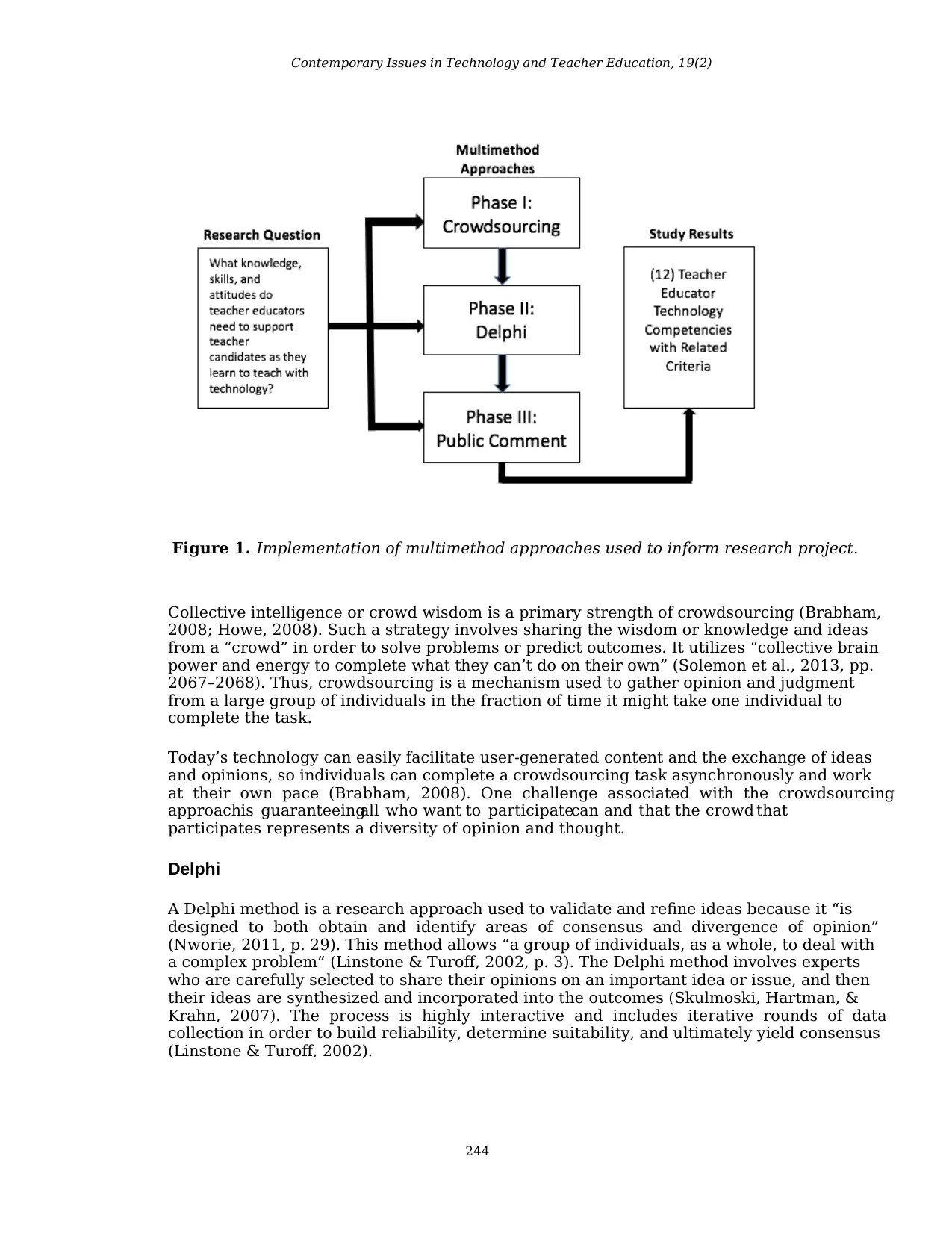

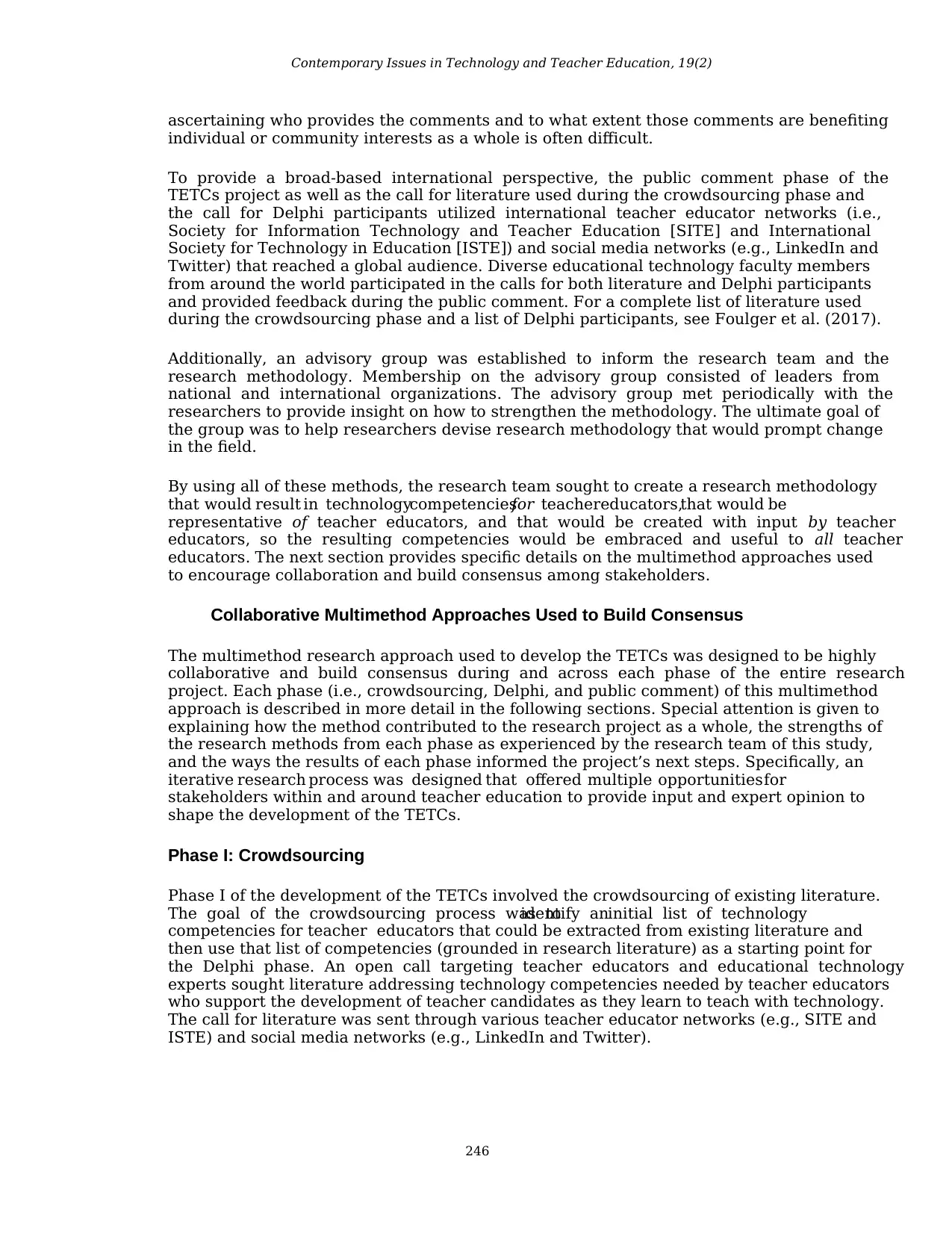

The described research project used the methods of crowdsourcing, Delphi, and public

comment to identify the TETCs (Figure 1). These multiple methods were conducted

sequentially because the crowdsourcing results were used to plan the Delphi process, while

the Delphi process findings informed the public comment phase of the research project.

Every member of the research team was highly involved with all phases of the research

project, compiling and interpreting feedback, while being active and continual facilitators

of the communication and feedback aspects of the project. Next, each research method is

described briefly, along with a summary of its major strengths and challenges.

Crowdsourcing

Crowdsourcing is a Web 2.0 form of outsourcing a task or function to an undefined group

of people in the form of an open call (Howe, 2006). Although crowdsourcing started in the

business world (Brabham, 2008), it has gained considerable attention and popularity in

the academic community (Solemon, Ariffin, Din, & Anwar, 2013). Crowdsourcing

facilitates the connectivity and collaboration of many individuals to participate in

knowledge generation, and seeks to mobilize competence and expertise, which are

distributed among the crowd (Zhao & Zhu, 2014).

In particular, technology enables a process that is highly collaborative and incorporates

research perspectives and opinions from individuals who work together across great

distances including across countries and continents. The product of a crowdsourcing

process is often shared freely and has strong agreement due to the participation of many

(Morris & McDuff, 2015).

Figure 1. Implementation of multimethod approaches used to inform research project.

Collective intelligence or crowd wisdom is a primary strength of crowdsourcing (Brabham,

2008; Howe, 2008). Such a strategy involves sharing the wisdom or knowledge and ideas

from a “crowd” in order to solve problems or predict outcomes. It utilizes “collective brain

power and energy to complete what they can’t do on their own” (Solemon et al., 2013, pp.

2067–2068). Thus, crowdsourcing is a mechanism used to gather opinion and judgment

from a large group of individuals in a fraction of the time it might take one individual to

complete the task.

Today’s technology can easily facilitate user-generated content and the exchange of ideas

and opinions, so individuals can complete a crowdsourcing task asynchronously and work

at their own pace (Brabham, 2008). One challenge associated with the crowdsourcing

approach is guaranteeing all who want to participate can and that the crowd that

participates represents a diversity of opinion and thought.

Delphi

A Delphi method is a research approach used to validate and refine ideas because it “is

designed to both obtain and identify areas of consensus and divergence of opinion”

(Nworie, 2011, p. 29). This method allows “a group of individuals, as a whole, to deal with

a complex problem” (Linstone & Turoff, 2002, p. 3). The Delphi method involves experts

who are carefully selected to share their opinions on an important idea or issue, and then

their ideas are synthesized and incorporated into the outcomes (Skulmoski, Hartman, &

Krahn, 2007). The process is highly interactive and includes iterative rounds of data

collection in order to build reliability, determine suitability, and ultimately yield consensus

(Linstone & Turoff, 2002).

Questionnaires are typically constructed for each round of the Delphi process to obtain

feedback from a panel of experts. Panelists’ responses from each round are analyzed and

then used to construct the questionnaire for the next round. This iterative process

continues until consensus among the panelists is reached. Consensus is achieved “when a

certain percentage of responses fall within a prescribed range for the value being

estimated” (Dajani, Sincoff, & Talley, 1979, p. 83).
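The consensus criterion quoted above can be illustrated with a short sketch. The Python function below is only an illustration: the name `has_consensus`, the 75% threshold, the 1-5 rating scale, and the sample ratings are all assumptions made for the example, not values reported for the TETC study.

```python
def has_consensus(ratings, low, high, threshold=0.75):
    """Return True when at least `threshold` of the ratings fall in [low, high].

    All parameter values are hypothetical; each Delphi study sets its own
    prescribed range and required percentage of responses.
    """
    if not ratings:
        # No responses means no evidence of consensus.
        return False
    in_range = sum(1 for r in ratings if low <= r <= high)
    return in_range / len(ratings) >= threshold


# Hypothetical panel ratings for one competency on a 1-5 scale.
round_one = [5, 4, 2, 3, 5, 4, 1, 4]
round_two = [5, 4, 4, 3, 5, 4, 4, 4]

# Check whether enough panelists rated the item 4 or 5 in each round.
print(has_consensus(round_one, low=4, high=5))
print(has_consensus(round_two, low=4, high=5))
```

In a sketch like this, an item that fails the check would be carried into the questionnaire for the next round, and the iteration would stop once every item passes or the researchers decide further rounds are no longer productive.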

The Delphi method offers a unique research approach for investigating critical issues,

defining problem areas, and identifying best practices and skill sets (Nworie, 2011).

Strengths for using the Delphi method include obtaining expert opinion, building

consensus, forecasting trends, and interacting with research subjects. The approach is

conducive to bringing geographically dispersed individuals together to serve as a panel of

experts who share their expertise about the topic under investigation. When using this

technique, researchers are able to analyze data based upon the panelists’ expert opinions.

There are also challenges associated with the Delphi method that are worth noting.

Delphi studies typically involve multiple rounds of data collection and feedback; therefore,

it can become a lengthy process and result in the attrition of participants (Nworie, 2011).

Slow or nonresponse by participants to a questionnaire during a Delphi round is also a

related concern. Another challenge relates to the assumptions that can be made about the

expertise and experience of individuals who are selected for the Delphi panel. It is assumed

that all individuals selected will have a thorough understanding of the topic under

investigation and that personal biases will not influence their responses.

Public Comment

Public comment is used in a variety of contexts to assure a goal will be met before

finalization of a product, document, or decision. Successful approaches to public comment

depend on information that is reliable or, in the case of human opinion, on people who are

well informed on the subject matter. Public comment processes are typically used in high-

stakes assessment practices, such as those employed in medical schools and government.

Public comment addressing questions posed by a review committee assures specified

criteria are met, potential flaw areas are identified, and possible edits are noted with the

goal of improving the validity of items.

Modifications are often adopted with the goal of making sure questions are correct, fair,

valid, and reliable (Gopalakrishnan & Udayshankar, 2014). The technology industry

frequently solicits public comment prior to establishing manufacturing and distribution, to

minimize any vulnerabilities, make known any unavoidable risks to consumers, and ensure

maximum security. This type of public comment requires both human analysis and

technology-based analysis (Quirolgico, Voas, & Kuhn, 2011).

The public comment approach was applied to this research project for the purpose of

increasing the visibility of the TETCs with yet another set of stakeholders before the final

version of the competencies was released. Thus, one strength of using public comment is

for gathering additional insight or thought about a topic, rule, or regulation with the

understanding that comments might “have substantial effect” on the final outcome of what

is being proposed (Balla, 2014, para. 1). Another strength associated with using public

comment involves bringing legitimacy to the process; the public is given a chance to

provide feedback so the process appears “democratic and legitimate” (Innes & Booher,

2004, p. 423). One challenge commonly associated with the public comment process is

determining whose voice is being heard. Although broad-based participation is typically encouraged,

ascertaining who provides the comments and to what extent those comments are benefiting

individual or community interests as a whole is often difficult.

To provide a broad-based international perspective, the public comment phase of the

TETCs project as well as the call for literature used during the crowdsourcing phase and

the call for Delphi participants utilized international teacher educator networks (i.e.,

Society for Information Technology and Teacher Education [SITE] and International

Society for Technology in Education [ISTE]) and social media networks (e.g., LinkedIn and

Twitter) that reached a global audience. Diverse educational technology faculty members

from around the world participated in the calls for both literature and Delphi participants

and provided feedback during the public comment. For a complete list of literature used

during the crowdsourcing phase and a list of Delphi participants, see Foulger et al. (2017).

Additionally, an advisory group was established to inform the research team and the

research methodology. Membership on the advisory group consisted of leaders from

national and international organizations. The advisory group met periodically with the

researchers to provide insight on how to strengthen the methodology. The ultimate goal of

the group was to help researchers devise research methodology that would prompt change

in the field.

By using all of these methods, the research team sought to create a research methodology

that would result in technology competencies for teacher educators, that would be

representative of teacher educators, and that would be created with input by teacher

educators, so the resulting competencies would be embraced and useful to all teacher

educators. The next section provides specific details on the multimethod approaches used

to encourage collaboration and build consensus among stakeholders.

Collaborative Multimethod Approaches Used to Build Consensus

The multimethod research approach used to develop the TETCs was designed to be highly

collaborative and build consensus during and across each phase of the entire research

project. Each phase (i.e., crowdsourcing, Delphi, and public comment) of this multimethod

approach is described in more detail in the following sections. Special attention is given to

explaining how the method contributed to the research project as a whole, the strengths of

the research methods from each phase as experienced by the research team of this study,

and the ways the results of each phase informed the project’s next steps. Specifically, an

iterative research process was designed that offered multiple opportunities for

stakeholders within and around teacher education to provide input and expert opinion to

shape the development of the TETCs.

Phase I: Crowdsourcing

Phase I of the development of the TETCs involved the crowdsourcing of existing literature.

The goal of the crowdsourcing process was to identify an initial list of technology

competencies for teacher educators that could be extracted from existing literature and

then use that list of competencies (grounded in research literature) as a starting point for

the Delphi phase. An open call targeting teacher educators and educational technology

experts sought literature addressing technology competencies needed by teacher educators

who support the development of teacher candidates as they learn to teach with technology.

The call for literature was sent through various teacher educator networks (e.g., SITE and

ISTE) and social media networks (e.g., LinkedIn and Twitter).

Respondents to the call uploaded 93 related articles and book chapters to a web portal,

which was developed and managed by the research team. To assure a comprehensive

review of the literature, the research team also searched for articles and uploaded

additional literature to the web portal. After a thorough review of the crowdsourced

literature by the research team, literature not specific to teacher educators was eliminated.

In the end, 43 articles were selected as a starting point to begin extracting a list of possible

technology competencies for teacher educators.

Guidelines for writing an effective competency statement (European Commission:

Education and Training, 2013; Sturgis, 2012; University of Texas School of Public Health,

2012) were utilized by the research team to draft a list of initial competencies that stemmed

from the crowdsourced literature. This list of technology competencies for teacher

educators from the crowdsourced literature included 31 competencies, related criteria

aligned with each competency, and references for each competency connected back to the

crowdsourced articles.

The research team carefully reviewed the 31 technology competencies with a focus on

relevancy, duplication, wording, and quality assurance, according to the guidelines used

for writing an effective competency. Several competencies were combined, while others

were revised. As a result, an initial list of 24 TETCs was extracted from the crowdsourced

literature.

A strength of using the crowdsourcing technique to begin this research project was the

ability to reach a large number of national and international experts with related

knowledge and research that would have been unknown or otherwise unavailable

(Brabham, 2008; Howe, 2008). One challenge the research team encountered with the

crowdsourcing phase was sourcing relevant literature and articles that focused on teacher

educators. More than half of the articles submitted to the open call were not used because

the content was not specific to teacher educators. Phase II of the research project involved

using a Delphi method that assisted with the identification and further refinement of the

24 competencies identified from the crowdsourced literature.

Phase II: Delphi Method

To identify participants for the Delphi phase of the research project, an application was

developed that included questions about participants’ educational organization affiliation,

department or college affiliation, role in preparing PK–12 teachers, and country of

residence. A broad-based call for participation was posted on the same online networks as

the call for literature during the crowdsourcing phase. Forty-six applications were received

from individuals who wanted to participate in the Delphi phase of the project. Nworie

(2011) recommended selecting divergent experts to help account for future developments

in technology, the rapid expansion of pedagogy due to technology use, and any potential or

probable changes in policy. Given that the Delphi process was conducted virtually and was

not limited to time and location of the experts, a divergence of content expertise,

geographic location, organizational affiliations, and college/university settings were

considered while selecting the panel participants.

Eighteen participants were selected with the intention of providing a broad perspective as

a team through complementary individual expertise, experience, and affiliation. Of the 18

participants selected, 17 agreed to participate in the Delphi phase and signed the

Institutional Review Board agreement. During this phase, participants were asked to

complete six rounds of data collection and were never made aware of the identity of the

other participants.

For each of the six rounds, the Delphi participants were sent a questionnaire with a

preamble to guide their thinking, and then a series of questions about the teacher educator

competencies or criteria asking them to either provide rankings or an open-ended response

to document their thoughts and ideas. The research team compiled and analyzed the

responses after each Delphi round, formed the next iteration of the TETCs, and then sent

another questionnaire to the participants. This iterative feedback loop allowed the research

team to build both quantitative and qualitative consensus on the content of the TETCs and

their associated criteria (Dajani et al., 1979).

One strength of the Delphi process used for this research project was the lack of attrition

of our Delphi participants. While not all 17 Delphi participants contributed to each of the

six rounds, no participant asked to be removed from the study, and all contributed

throughout the duration of the process. It is important for researchers to develop strategies

that encourage participation because a low response rate during the Delphi process can

impact the study’s validity (Hsu & Sandford, 2007). In addition, the research team

attributes the high participant retention during the Delphi phase to the perceived value of

the TETCs and related criteria by the panel participants.

The participants knew they were helping develop a list of competencies to address an

identified need within the teacher education community, and most expressed they planned

to use the TETCs within their universities to guide technology integration efforts at their

institutions. A related strength involved gaining six rounds of expert opinion specifically

on the competencies, while building consensus with the Delphi participants during and

after each round (Nworie, 2011). Delphi studies typically include three or four rounds

of expert opinion.

One clear challenge with the Delphi process was the extended time that was necessary to

complete this phase of the research project. Designing and sending the questionnaires,

allowing time for panel responses, compiling and analyzing the results, and changing the

competencies and criteria accordingly, took 4-6 weeks of elapsed time for each of the six

Delphi rounds. As noted by Nworie (2011), Delphi studies involving multiple rounds of data

collection and feedback can take a significant time to complete. Although the Delphi phase

of this research project took 9 months to complete, each round was deemed necessary and

important in providing the time needed for input. With the Delphi phase of the research

completed, the list of 12 TETCs was ready for public comment.

Phase III: Public Comment

Once the research team was assured the Delphi process had run its course and the Delphi

participants were in agreement that the TETCs were indicative of the knowledge, skills, and

attitudes all teacher educators needed to support the development of teacher candidates’

abilities to teach with technology, the multimethod research approach transitioned to

Phase III, public comment. The research-related purpose of using public comment was to

provide one final opportunity for additional stakeholders in educational technology and

teacher education to offer input on the TETCs. Thus, the research team sought to influence

change in the field by (a) distributing the TETCs to as many teacher educators as possible,

(b) increasing anticipation for the release of the final TETCs, (c) soliciting input for further

refinement, and (d) helping teacher educators begin to reflect on how the TETCs might be

used in their college/university.

A brief questionnaire designed by the research team gathered broad-based input from

additional stakeholders and organizations in the teacher education community about the

perceived usefulness and usability of the TETCs. The questionnaire was sent through the

same channels as were used for the crowdsourcing and Delphi phases. The questionnaire

included an explanation of the research project process, a draft copy of the TETCs for

participant review, and three questions:

1. What aspects of the TETCs do you/does your organization find most useful?

2. How would you/your organization make use of the TETCs?

3. What concerns do you/does your organization have about the TETCs?

A space for additional comments was also provided so participants could provide insight

and input beyond the questions listed on the questionnaire. In this process, anyone (the

public) could contribute comments about the TETCs; however, these comments were not

made available for other commenters to view. The comments were used by the research

team to further refine the TETCs.

Several national and international teacher educators and stakeholders viewed a draft copy

of the TETCs during the public comment phase. The public comment process increased

awareness in the field about the TETCs and justified the need for the TETCs. Providing a

draft copy of the TETCs to the public also allowed those in teacher education who were

anticipating the release to begin planning how they might use the TETCs in their colleges

and schools of education. In sum, 31 respondents completed the questionnaire on the TETCs

during the public comment phase of the project. Twenty-nine responses were from

individuals and two responses were from organizations. All responses originated from

either the United States or Australia.

Respondents during the public comment phase stated that the TETCs were targeted,

helpful, and fitting for the field. Several respondents noted that the TETCs were aligned

with the ISTE (2018) Standards for Educators, and one respondent said there was

redundancy with the ISTE standards. Some respondents commented they wanted to share

the TETCs with senior faculty and administrators at their institutions.

The TETCs seemed to overwhelm a few respondents, who noted concerns such as, “could

be misinterpreted as more standards” and “too many.” One respondent discussed fitting

terminology (e.g., considering "technology" to be an outdated term) and another noted lack of alignment

to other educational organizations such as libraries and museums. Because the TETCs are

specific to teacher educators who prepare teacher candidates for licensure positions, such

comments were noted to be outside the scope of the study and were not included for

analysis.

All told, the results and feedback collected from the public comment phase warranted no

significant changes to the TETCs; however, the research team opted to modify the initial

stem of each competency to include the words “teacher educator” to help clarify the

intended audience. The research team hoped this approach would continually remind

readers that the TETCs are intended for teacher educators specifically and not for PK–12

teachers.

The public comment phase of this research project provided the research team with

additional insight into the development process of the TETCs. Although the TETCs did not

change substantially because of any comments received, this phase provided another

chance for teacher educators and interested stakeholders to provide feedback about the

TETCs and their possible use in teacher education institutions. Because public comment

was allowed and considered, it brought more legitimacy and clarity to the development of the

final version of the TETCs (Innes & Booher, 2004).



Originally, the goal of the public comment phase was to obtain additional feedback from

the field to improve the TETCs before publication. However, once the process began, the

research team realized that this phase could be used to meet more far-reaching goals

concerning the individual and organizational usability of the TETCs.

Still, it was challenging to use public comment to promote the TETCs by encouraging

additional stakeholders to react and provide feedback on the competencies. Although the

research team constantly looked for ways to promote collaboration and solicit feedback

about the TETCs, only 31 comments were received during this phase of the research project.

It was unclear how many people viewed the draft TETCs but did not provide comments. Broad-based

participation during the public comment phase was encouraged, yet only a small

percentage of individuals chose to participate and provide comments (as was also the case in

Innes & Booher, 2004). For a list of the findings from the project including the 12 competencies

and related criteria and a more detailed description of the data collection and data analysis,

see Foulger et al. (2017).

Implications for Research

In order to respond to the need to develop a set of technology competencies for teacher

educators (U.S. Department of Education, 2017), the research team designed a research

project that used a highly collaborative, multimethod approach. Each method

(crowdsourcing, Delphi, and public comment) was conducted separately with a specific

purpose in mind, and each was planned sequentially as one approach informed the next

(Morse, 2003). Eventually, a list of technology competencies was developed identifying the

knowledge, skills, and attitudes all teacher educators need for preparing teacher candidates

to use and integrate technology for teaching and learning (Foulger et al., 2017).

Professional organizations have typically taken the lead in developing standards to guide

the professional development required for an organization’s membership (e.g., Association

of Mathematics Teacher Educators, 2017; ISTE, 2018; National Science Teachers

Association, 2012; Thomas & Knezek, 2008). Large projects like these are usually funded,

seek experts in the field to assist in the development of such standards, and go through

multiple iterations of draft documents to reach consensus.

Since this task was similar to what organizations have instituted in the past, the research

team carefully designed the project by replicating methods that would be highly inclusive

and collaborative, with multiple opportunities throughout the project for expert

opinion and comment. It was a process-oriented approach designed to include as many

experts (i.e., national and international teacher educators with expertise in educational

technology and educational technology experts) as possible in each phase of the research

project.

All three methods selected and incorporated into this multimethod design —

crowdsourcing, Delphi, and public comment — encouraged gathering collective wisdom

and knowledge from a crowd or panel of experts (Brabham, 2008; Howe, 2008; Nworie,

2011; Okoli & Pawlowski, 2004; Rice, 2009; Shelton & Creghan, 2015). As a result of these

efforts, other researchers may see the value of combining multiple methods for research

projects designed for investigating critical issues or developing skill sets requiring

divergence of opinion and the building of consensus.

In order to successfully develop the list of TETCs, the research team placed emphasis on

keeping the stakeholders actively involved and engaged in all research activities during

each phase and throughout the entire project. Since the target audience for the TETCs was
