Running head: MULTIMODAL INTERFACE IN TRANSFORMED LEARNING FOR DYSLEXIA

Multimodal Interface in Transformed Learning for Dyslexia

Name of the student:

Name of the university:

Author Note:

Introduction

The rapid growth of mobile technology, and developments in related fields, have opened new and powerful possibilities for mobile learning (m-learning). M-learning can be an influential tool in the learning process: it makes learning more accessible and flexible and can make a substantial, valuable contribution to society, particularly for students with dyslexia. Implementing a multimodal interface in m-learning could enhance the learning process for these students (Jackson, 2014). To meet the needs of students with dyslexia, the multimodal interface should be customized for each individual student.

Dyslexia is a language-based learning disability. Students with dyslexia have difficulties with reading, writing and speaking, and need extra support from society to continue their education (Ghisio et al., 2017). It is therefore necessary to adopt multimodal functions that both address students' needs and let them choose their preferred learning style.

Framework for the interface tool

A theoretical framework has been proposed for implementing a multimodal interface that enhances learning for students with dyslexia (Alghabban, Salama & Altalhi, 2017). The method for implementing the interface has three phases: data collection, data analysis and tool design.

The first phase, data collection, specifies the users' requirements; its aim is to identify the needs of students with dyslexia. When designing a multimodal interface, it is necessary to know both the student's learning needs and the learning style the student prefers. Surveys have been conducted in many countries to understand these needs. These
surveys helped experts customize the multimodal interface to the needs of individual students.
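
The needs identified in this first phase could be recorded as one profile per student. The Python sketch below is a minimal illustration under stated assumptions: `StudentProfile` and its fields are hypothetical, not a schema from the report, and the mode names reuse the input modes (text, pointer) and output modes (audio, text, images) that the tool description lists.

```python
from dataclasses import dataclass, field

# Mode names taken from the tool description; the profile fields are hypothetical.
INPUT_MODES = {"text", "pointer"}
OUTPUT_MODES = {"audio", "text", "images"}


@dataclass
class StudentProfile:
    """Illustrative record of one student's needs (not a schema from the report)."""
    student_id: str
    input_modes: set = field(default_factory=lambda: {"pointer"})
    output_modes: set = field(default_factory=lambda: {"audio", "images"})
    studies_outside_class: bool = True

    def __post_init__(self):
        # Reject any mode that the interface does not offer.
        unknown = (self.input_modes - INPUT_MODES) | (self.output_modes - OUTPUT_MODES)
        if unknown:
            raise ValueError(f"unsupported modes: {sorted(unknown)}")


profile = StudentProfile("s01", input_modes={"text", "pointer"}, output_modes={"audio"})
print(sorted(profile.output_modes))  # ['audio']
```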

The second phase is data analysis, in which the survey data are critically analysed. The surveys indicate that students with dyslexia prefer to study from anywhere and to access learning materials outside the classroom. The multimodal application should therefore address these real needs.
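
As a sketch of the analysis step, survey answers can be tallied to show which preferences dominate. The responses below are invented placeholders rather than data from the surveys mentioned above, and the question keys `location` and `style` are hypothetical.

```python
from collections import Counter

# Invented example responses; real survey data would replace these.
responses = [
    {"location": "anywhere", "style": "audio"},
    {"location": "classroom", "style": "images"},
    {"location": "anywhere", "style": "audio"},
    {"location": "anywhere", "style": "text"},
]


def tally(responses, question):
    """Count how often each answer was given to one survey question."""
    return Counter(r[question] for r in responses if question in r)


def share(counts, answer):
    """Fraction of answers equal to `answer` (0.0 when there are no answers)."""
    total = sum(counts.values())
    return counts[answer] / total if total else 0.0


locations = tally(responses, "location")
print(locations.most_common(1))      # [('anywhere', 3)]
print(share(locations, "anywhere"))  # 0.75
```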

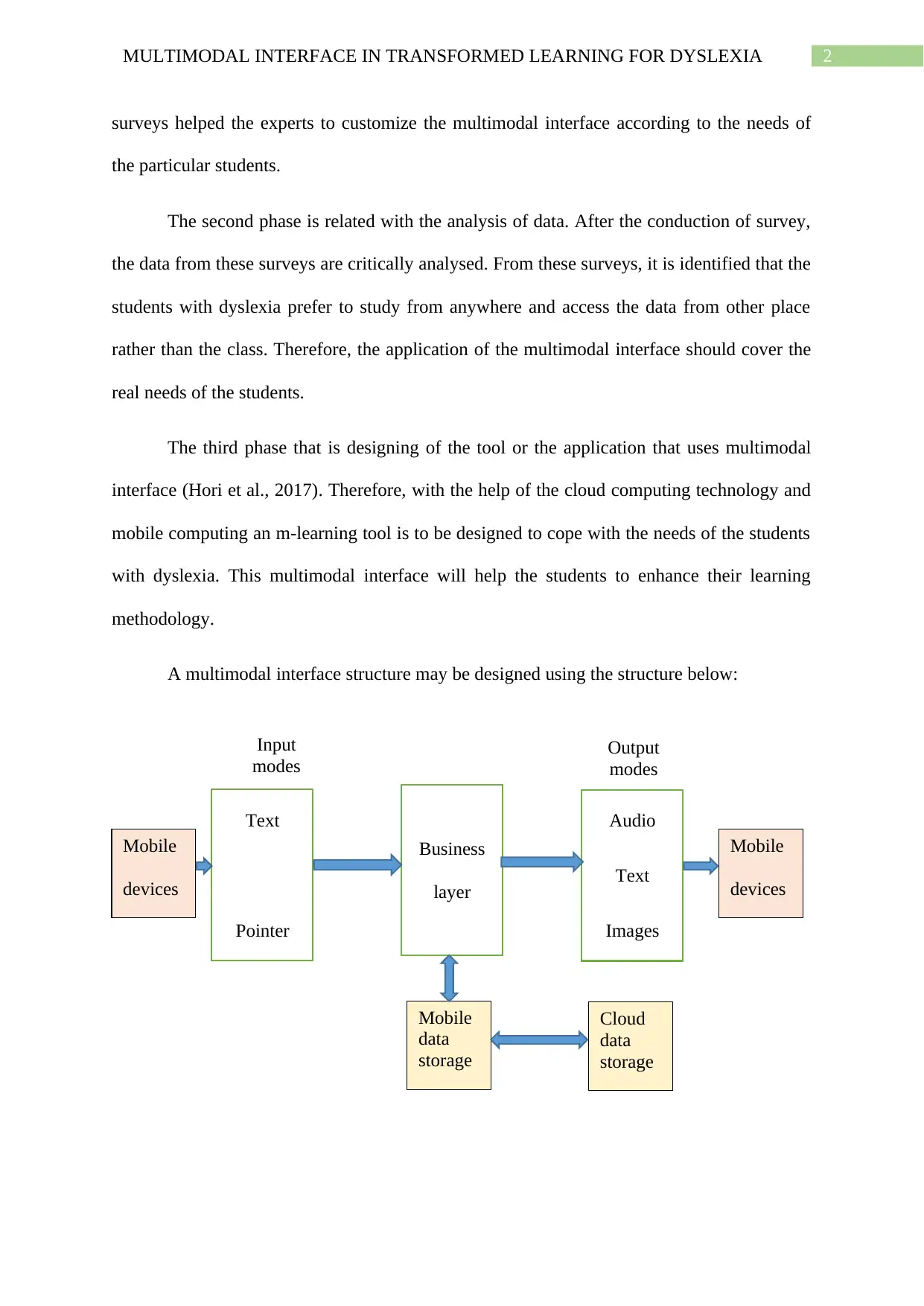

The third phase is the design of the tool, an application that uses a multimodal interface (Hori et al., 2017). Using cloud computing and mobile computing technologies, an m-learning tool is designed to meet the needs of students with dyslexia. This multimodal interface is intended to help students improve the way they learn.

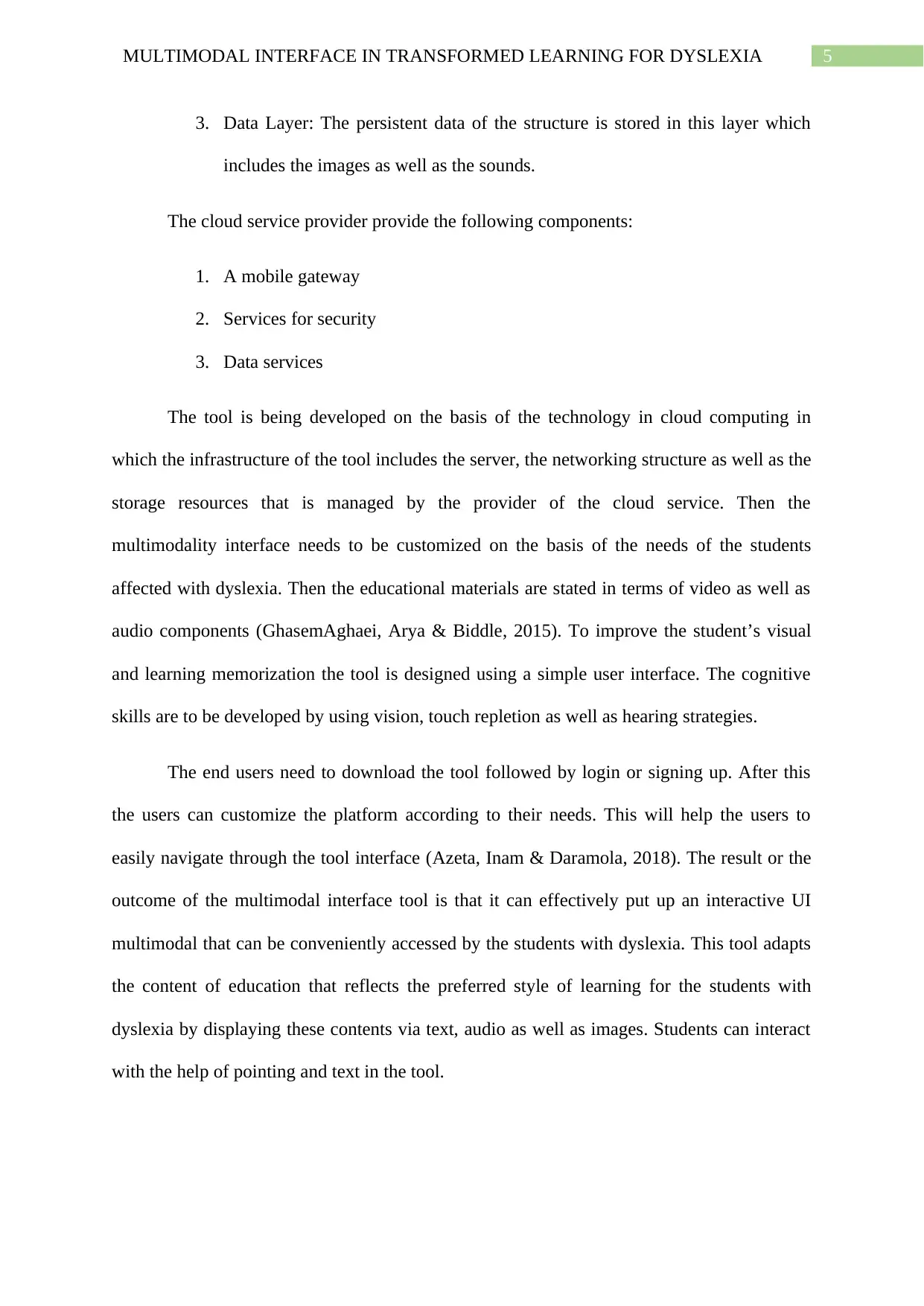

A multimodal interface may be structured as follows:

[Figure: Structure of the multimodal interface. Components: business layer; input modes (text, pointer); output modes (audio, text, images); mobile devices; mobile data storage; cloud data storage.]

With this multimodal interface, students with dyslexia gain free and natural ways to communicate and learn, interacting with the automated system through both input and output.

Multimodal systems let users learn flexibly and efficiently: users provide input through modalities such as speech, hand gestures and gaze, and receive information from the system through output modalities such as smart graphics and speech synthesis.

Multimodal input combines visual and voice modalities in the interface (Freeman et al., 2017). For students with dyslexia, its main advantage is that it makes the system more usable, and this improved usability helps students understand the learning process better.

Creating natural mappings between information, tasks and modalities is an important step in designing a multimodal interface (Marchetti & Valente, 2016). The process of integrating information from the various input modalities and combining it into a single, complete command is referred to as multimodal fusion.
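
Fusion can be illustrated with a spoken command and a pointing gesture that arrive close together in time and are merged into one command. This is a minimal sketch, assuming a hypothetical `InputEvent` type, the slot names `action` and `target`, and a 1.5-second window; real fusion engines use richer timing and confidence models.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class InputEvent:
    modality: str     # e.g. "speech" or "pointer" (illustrative labels)
    content: str      # recognized word(s) or the id of the item pointed at
    timestamp: float  # seconds since the session started


def fuse(events: List[InputEvent], window: float = 1.5) -> Optional[dict]:
    """Combine the latest speech and pointer events into one command.

    Returns None if either modality is missing or the two events are more
    than `window` seconds apart (too far apart to belong to one command).
    """
    speech = [e for e in events if e.modality == "speech"]
    pointer = [e for e in events if e.modality == "pointer"]
    if not speech or not pointer:
        return None
    s, p = speech[-1], pointer[-1]
    if abs(s.timestamp - p.timestamp) > window:
        return None
    # Speech fills the "action" slot, the pointer fills the "target" slot.
    return {"action": s.content, "target": p.content}


command = fuse([InputEvent("speech", "read aloud", 10.0),
                InputEvent("pointer", "paragraph-3", 10.4)])
print(command)  # {'action': 'read aloud', 'target': 'paragraph-3'}
```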

There are three main approaches to multimodal fusion (Zhuhadar et al., 2016). The first is recognition-based fusion, which merges the outputs of each modality's recognizer using integration mechanisms such as agent theory and statistical integration techniques. Input vectors and slots are common examples of the structures used in this type of fusion.
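
Recognition-based fusion with statistical integration can be sketched as follows: each recognizer returns scored hypotheses (an n-best list), every pairing fills the `action` and `target` slots, and pairings are ranked by a weighted sum of log-probabilities. With equal weights this ranks pairings the same way as multiplying their probabilities, which assumes the recognizers are independent. The recognizer outputs and weights below are hypothetical.

```python
import math
from itertools import product

# Hypothetical n-best lists: (hypothesis, probability) from each recognizer.
speech_nbest = [("open", 0.6), ("close", 0.4)]
pointer_nbest = [("lesson-1", 0.7), ("lesson-2", 0.3)]


def fuse_scores(speech, pointer, w_speech=0.5, w_pointer=0.5):
    """Fill the action/target slots with every hypothesis pair, best first."""
    ranked = []
    for (action, p_s), (target, p_p) in product(speech, pointer):
        # Weighted log-linear combination of the two recognizers' scores.
        score = w_speech * math.log(p_s) + w_pointer * math.log(p_p)
        ranked.append(({"action": action, "target": target}, score))
    ranked.sort(key=lambda item: item[1], reverse=True)
    return ranked


best, score = fuse_scores(speech_nbest, pointer_nbest)[0]
print(best)  # {'action': 'open', 'target': 'lesson-1'}
```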

The second is decision-based fusion, which merges semantic information extracted through specific dialogue-driven fusion procedures to achieve
a complete interaction (GhasemAghaei, 2017). Typed feature structures are an example of decision-based fusion.
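
Typed feature structures are normally combined by unification, which merges two partial interpretations and fails if they disagree. The sketch below is simplified: feature structures are nested Python dicts, the `type` key stands in for a type, and the type hierarchy that full typed-feature-structure systems use is omitted, so differing types simply fail to unify. The example structures are hypothetical.

```python
def unify(a: dict, b: dict):
    """Unify two feature structures given as nested dicts.

    Returns the merged structure, or None if any feature has conflicting values.
    """
    result = dict(a)  # copy so neither input is modified
    for key, b_val in b.items():
        if key not in result:
            result[key] = b_val
            continue
        a_val = result[key]
        if isinstance(a_val, dict) and isinstance(b_val, dict):
            merged = unify(a_val, b_val)
            if merged is None:
                return None
            result[key] = merged
        elif a_val != b_val:
            return None  # conflicting atomic values (including different "type"s)
    return result


# Hypothetical partial interpretations from speech and from a pointing gesture.
speech = {"type": "command", "action": "read", "object": {"type": "text"}}
pointing = {"type": "command", "object": {"type": "text", "id": "para-2"}}
print(unify(speech, pointing))
# {'type': 'command', 'action': 'read', 'object': {'type': 'text', 'id': 'para-2'}}

picture = {"type": "command", "object": {"type": "image", "id": "img-1"}}
print(unify(speech, picture))  # None
```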

The third is hybrid multi-level fusion, in which integration is distributed across both the recognition modules and the decision levels (Clayton & Hulme, 2018). Three methods are used in hybrid multi-level fusion: multimodal grammars, finite-state transducers and dialogue moves.
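
Of the three hybrid methods, a finite-state transducer is the simplest to sketch: it reads modality-tagged tokens and emits output on each transition, here accepting either speech-then-pointer or pointer-then-speech. This shows only the transducer idea, not a full multi-level architecture; the states, vocabulary and output format are hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical vocabulary of spoken commands; any other token is treated as an item id.
VERBS = {"read", "show", "repeat"}

# (state, (modality, token class)) -> (next state, output template)
TRANSITIONS = {
    ("start", ("speech", "verb")): ("have_action", "action={}"),
    ("have_action", ("pointer", "item")): ("done", "target={}"),
    ("start", ("pointer", "item")): ("have_target", "target={}"),
    ("have_target", ("speech", "verb")): ("done", "action={}"),
}


def token_class(token: str) -> str:
    return "verb" if token in VERBS else "item"


def transduce(inputs: List[Tuple[str, str]]) -> Optional[List[str]]:
    """Run the transducer over (modality, token) pairs.

    Returns the emitted outputs if the sequence ends in the accepting
    state "done", otherwise None.
    """
    state, outputs = "start", []
    for modality, token in inputs:
        key = (state, (modality, token_class(token)))
        if key not in TRANSITIONS:
            return None  # no transition: sequence rejected
        state, template = TRANSITIONS[key]
        outputs.append(template.format(token))
    return outputs if state == "done" else None


print(transduce([("speech", "read"), ("pointer", "word-7")]))
# ['action=read', 'target=word-7']
```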

Moreover, the main advantage of multiple input modalities is increased usability (Srivastava & Haider, 2017): the weaknesses of one modality can be offset by the strengths of the others.

Proposed development of the tool, its implementation and evaluation

The current research proposes a three-tier architecture for the tool:

1. A mobile client

2. A public network that connects the devices to the cloud services

3. A cloud environment in which the cloud services are provided
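
The three tiers can be sketched as objects that pass a request from the mobile client, over the public network, to the cloud environment. In this minimal sketch the class names, the request format and the offline cache on the client (standing in for mobile data storage) are all assumptions, and the network is simulated in memory rather than using real HTTP.

```python
from typing import Dict, Optional


class CloudEnvironment:
    """Tier 3: hosts the services (here, one in-memory lesson store)."""

    def __init__(self, lessons: Dict[str, str]) -> None:
        self._lessons = lessons

    def handle(self, request: dict) -> dict:
        body = self._lessons.get(request["lesson"])
        status = 200 if body is not None else 404
        return {"status": status, "body": body}


class PublicNetwork:
    """Tier 2: carries requests between devices and the cloud (simulated)."""

    def __init__(self, cloud: CloudEnvironment, online: bool = True) -> None:
        self.cloud = cloud
        self.online = online

    def send(self, request: dict) -> dict:
        if not self.online:
            raise ConnectionError("network unavailable")
        return self.cloud.handle(request)


class MobileClient:
    """Tier 1: the student's device, with a local cache used when offline."""

    def __init__(self, network: PublicNetwork) -> None:
        self.network = network
        self.cache: Dict[str, str] = {}

    def get_lesson(self, lesson: str) -> Optional[str]:
        try:
            response = self.network.send({"lesson": lesson})
        except ConnectionError:
            return self.cache.get(lesson)  # fall back to mobile data storage
        if response["status"] == 200:
            self.cache[lesson] = response["body"]
        return response["body"]


cloud = CloudEnvironment({"lesson-1": "Phonics: short vowels"})
network = PublicNetwork(cloud)
client = MobileClient(network)
print(client.get_lesson("lesson-1"))  # Phonics: short vowels
network.online = False
print(client.get_lesson("lesson-1"))  # Phonics: short vowels (served from the cache)
```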

The mobile client consists of the following layers:

1. Presentation Layer: This layer includes the user interfaces through which students access the various services within the environment. Students with dyslexia can access materials and perform exercises anytime and anywhere, interacting through the multimodal interface, in which the input and output modalities are clearly provided.

2. Business Layer: This layer represents the business logic of the multimodal tool.

3. Data Layer: This layer stores the persistent data of the system, including images and sounds.
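
A minimal sketch of the three client layers, assuming hypothetical class and method names: the data layer stores assets such as images and sounds, the business layer selects the assets that match a student's output modes, and the presentation layer renders them (as plain strings here, in place of a real user interface).

```python
from typing import Dict, List, Optional


class DataLayer:
    """Persistent data such as images and sounds (kept in memory in this sketch)."""

    def __init__(self) -> None:
        self._assets: Dict[str, dict] = {}

    def save(self, name: str, kind: str, payload: str) -> None:
        self._assets[name] = {"kind": kind, "payload": payload}

    def load(self, name: str) -> Optional[dict]:
        return self._assets.get(name)


class BusinessLayer:
    """Business logic: keep only the assets that match the student's output modes."""

    def __init__(self, data: DataLayer) -> None:
        self.data = data

    def lesson_assets(self, names: List[str], output_modes: set) -> List[dict]:
        assets = (self.data.load(n) for n in names)
        return [a for a in assets if a is not None and a["kind"] in output_modes]


class PresentationLayer:
    """User interface: here it just renders each asset as a labelled line."""

    def __init__(self, business: BusinessLayer) -> None:
        self.business = business

    def render(self, names: List[str], output_modes: set) -> List[str]:
        assets = self.business.lesson_assets(names, output_modes)
        return [f"[{a['kind']}] {a['payload']}" for a in assets]


data = DataLayer()
data.save("cat-image", "images", "cat.png")
data.save("cat-sound", "audio", "cat.mp3")
data.save("cat-word", "text", "cat")
ui = PresentationLayer(BusinessLayer(data))
print(ui.render(["cat-image", "cat-sound", "cat-word"], {"audio", "images"}))
# ['[images] cat.png', '[audio] cat.mp3']
```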

The cloud service provider provides the following components:

1. A mobile gateway

2. Services for security

3. Data services
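
The three cloud components could fit together as shown in this sketch: the mobile gateway is the single entry point for devices, it asks the security service to verify the caller's token, and only then routes the request to the data services. The HMAC token scheme and all class names are assumptions chosen for brevity; a production system would typically use an established authentication protocol instead.

```python
import hashlib
import hmac
from typing import Any, Optional


class SecurityService:
    """Issues and verifies HMAC-signed tokens (a simplified stand-in for real auth)."""

    def __init__(self, secret: bytes) -> None:
        self._secret = secret

    def _sign(self, user: str) -> str:
        return hmac.new(self._secret, user.encode(), hashlib.sha256).hexdigest()

    def issue(self, user: str) -> str:
        return f"{user}:{self._sign(user)}"

    def verify(self, token: str) -> Optional[str]:
        user, _, sig = token.rpartition(":")
        return user if user and hmac.compare_digest(sig, self._sign(user)) else None


class DataService:
    """Per-user key/value storage."""

    def __init__(self) -> None:
        self._store = {}

    def put(self, user: str, key: str, value: Any) -> None:
        self._store[(user, key)] = value

    def get(self, user: str, key: str) -> Any:
        return self._store.get((user, key))


class MobileGateway:
    """Single entry point for devices: authenticate first, then route to data services."""

    def __init__(self, security: SecurityService, data: DataService) -> None:
        self.security = security
        self.data = data

    def handle(self, token: str, action: str, key: str, value: Any = None) -> dict:
        user = self.security.verify(token)
        if user is None:
            return {"status": 401}
        if action == "put":
            self.data.put(user, key, value)
            return {"status": 200}
        if action == "get":
            return {"status": 200, "body": self.data.get(user, key)}
        return {"status": 400}


security = SecurityService(b"demo-secret")
gateway = MobileGateway(security, DataService())
token = security.issue("student-1")
gateway.handle(token, "put", "progress", 3)
print(gateway.handle(token, "get", "progress"))               # {'status': 200, 'body': 3}
print(gateway.handle("student-1:forged", "get", "progress"))  # {'status': 401}
```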

The tool is developed using cloud computing technology: its infrastructure, including servers, networking and storage resources, is managed by the cloud service provider. The multimodal interface is then customized to the needs of students with dyslexia, and the educational materials are presented as video and audio components (GhasemAghaei, Arya & Biddle, 2015). To improve students' visual memory and retention of what they learn, the tool uses a simple user interface. Cognitive skills are developed through strategies based on vision, touch, repetition and hearing.

End users download the tool and then log in or sign up. They can then customize the platform to their needs, which helps them navigate the tool's interface easily (Azeta, Inam & Daramola, 2018). The outcome is an interactive multimodal user interface that students with dyslexia can access conveniently. The tool adapts educational content to each student's preferred learning style by presenting it as text, audio and images, and students interact with the tool through pointing and text input.
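
The sign-up, customization and content-adaptation steps can be sketched as below. This is an illustration under assumptions: `AccountStore`, `present` and the setting names are hypothetical, passwords are stored as salted PBKDF2 hashes from Python's standard `hashlib`, and the default settings are arbitrary.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # PBKDF2 work factor chosen for illustration only


def _hash(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


class AccountStore:
    """Hypothetical sign-up / log-in store with per-user interface settings."""

    def __init__(self) -> None:
        self._users = {}

    def sign_up(self, username: str, password: str) -> None:
        if username in self._users:
            raise ValueError("username already taken")
        salt = os.urandom(16)
        self._users[username] = {
            "salt": salt,
            "hash": _hash(password, salt),
            # Defaults are assumptions; each student changes them via customize().
            "settings": {"input_modes": {"text"}, "output_modes": {"text"}},
        }

    def log_in(self, username: str, password: str) -> bool:
        user = self._users.get(username)
        if user is None:
            return False
        return hmac.compare_digest(_hash(password, user["salt"]), user["hash"])

    def customize(self, username: str, **settings) -> dict:
        current = self._users[username]["settings"]
        unknown = set(settings) - set(current)
        if unknown:
            raise KeyError(f"unknown settings: {sorted(unknown)}")
        current.update(settings)
        return dict(current)


def present(lesson: dict, settings: dict) -> dict:
    """Keep only the lesson versions (text, audio, images) the student asked for."""
    return {mode: content for mode, content in lesson.items()
            if mode in settings["output_modes"]}


store = AccountStore()
store.sign_up("student-1", "correct horse")
print(store.log_in("student-1", "correct horse"))  # True
settings = store.customize("student-1", output_modes={"audio", "images"})
lesson = {"text": "The cat sat.", "audio": "cat_sat.mp3", "images": "cat.png"}
print(present(lesson, settings))  # {'audio': 'cat_sat.mp3', 'images': 'cat.png'}
```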

VOICE

FACIAL

EXPRESSION

TOUCH

SCREEN

GESTURE

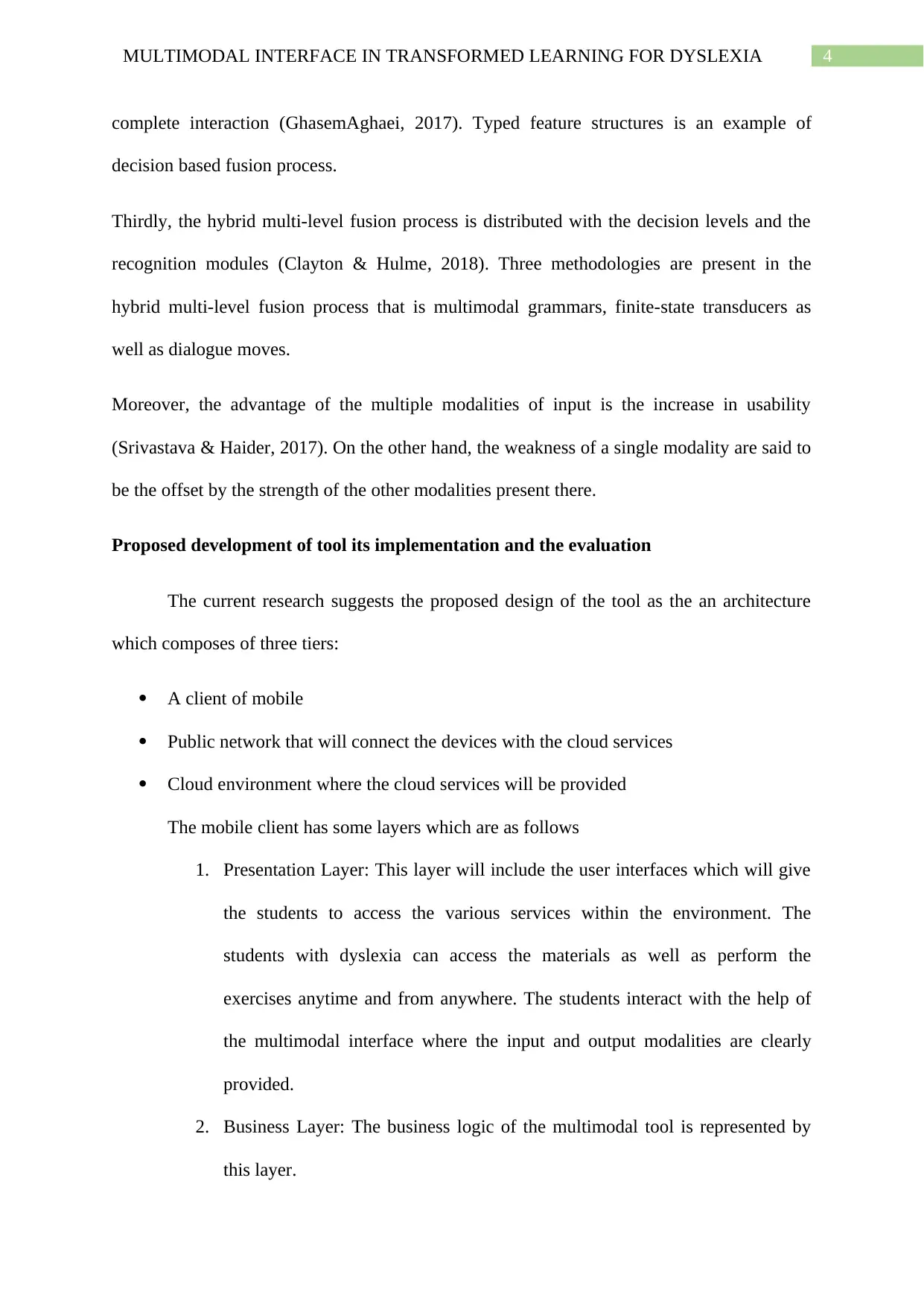

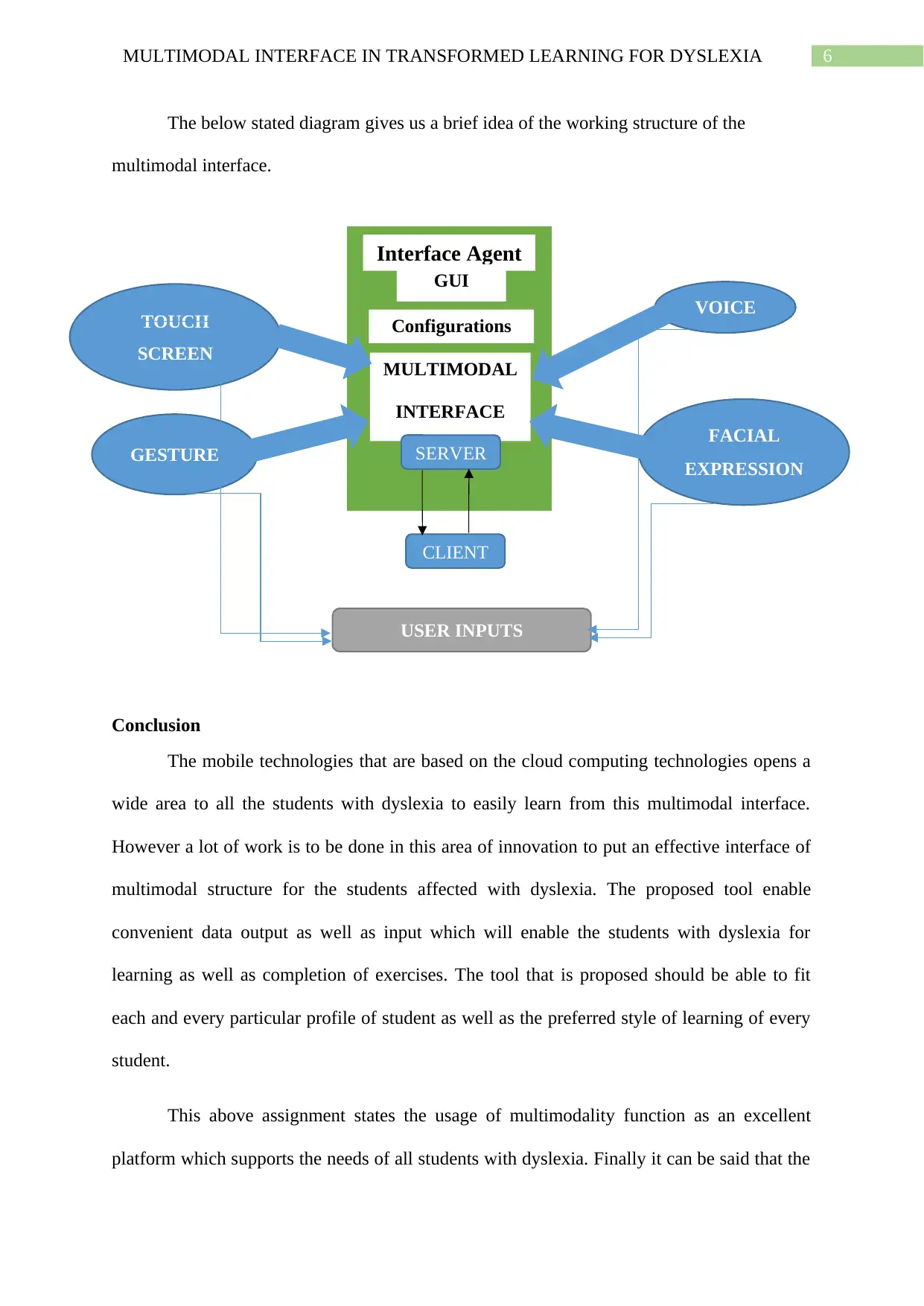

The below stated diagram gives us a brief idea of the working structure of the

multimodal interface.

Conclusion

Mobile technologies based on cloud computing open up wide opportunities for students with dyslexia to learn easily through a multimodal interface. However, much work remains to be done in this area before an effective multimodal interface for students with dyslexia can be delivered. The proposed tool enables convenient data input and output, allowing students with dyslexia to learn and complete exercises. The proposed tool should be able to fit each student's individual profile and preferred learning style.

This assignment has presented multimodality as an excellent platform for supporting the needs of students with dyslexia. Finally, it can be said that the
multimodal interface is a usable mode of learning for people with dyslexia, and the content of learning materials can be designed around students' requirements. Moreover, students' needs are critically assessed so that the tool can be customized to each student.

References

A. Jackson, S. (2014). Student reflections on multimodal course content delivery. Reference Services Review, 42(3), 467-483.

Alghabban, W. G., Salama, R. M., & Altalhi, A. H. (2017). Mobile cloud computing: An effective multimodal interface tool for students with dyslexia. Computers in Human Behavior, 75, 160-166.

Azeta, A. A., Inam, I. A., & Daramola, O. (2018). A Voice-Based E-Examination Framework for Visually Impaired Students in Open and Distance Learning. Turkish Online Journal of Distance Education, 19(2), 34-46.

Clayton, F. J., & Hulme, C. (2018). Automatic activation of sounds by letters occurs early in development but is not impaired in children with dyslexia. Scientific Studies of Reading, 22(2), 137-151.

Freeman, E., Wilson, G., Vo, D. B., Ng, A., Politis, I., & Brewster, S. (2017, April). Multimodal feedback in HCI: haptics, non-speech audio, and their applications. In The Handbook of Multimodal-Multisensor Interfaces (pp. 277-317). Association for Computing Machinery and Morgan & Claypool.

GhasemAghaei, R. (2017). Multimodal Software For Affective Education: User Interaction Design And Evaluation (Doctoral dissertation, Carleton University).

GhasemAghaei, R., Arya, A., & Biddle, R. (2015, June). Multimodal Software for Affective Education: UI Design. In EdMedia+ Innovate Learning (pp. 1844-1850). Association for the Advancement of Computing in Education (AACE).

Ghisio, S., Alborno, P., Volta, E., Gori, M., & Volpe, G. (2017, November). A multimodal serious-game to teach fractions in primary school. In Proceedings of
the 1st ACM SIGCHI International Workshop on Multimodal Interaction for Education (pp. 67-70). ACM.

Hori, C., Hori, T., Lee, T. Y., Zhang, Z., Harsham, B., Hershey, J. R., ... & Sumi, K. (2017). Attention-based multimodal fusion for video description. In Proceedings of the IEEE international conference on computer vision (pp. 4193-4202).

Marchetti, E., & Valente, A. (2016, July). The Many Voices of Audiobooks: Interactivity and Multimodality in Language Learning. In International Conference on Learning and Collaboration Technologies (pp. 165-176). Springer, Cham.

Srivastava, B., & Haider, M. T. U. (2017). Computer and Information Sciences.

Zhuhadar, L., Carson, B., Daday, J., Thrasher, E., & Nasraoui, O. (2016). Computer-assisted learning based on universal design, multimodal presentation and textual linkage. Journal of the Knowledge Economy, 7(2), 373-387.
