Solution: INFO411 Data Mining Assignment 1, University of Wollongong

VerifiedAdded on 2023/01/23

|3

|1135

|84

Homework Assignment

AI Summary

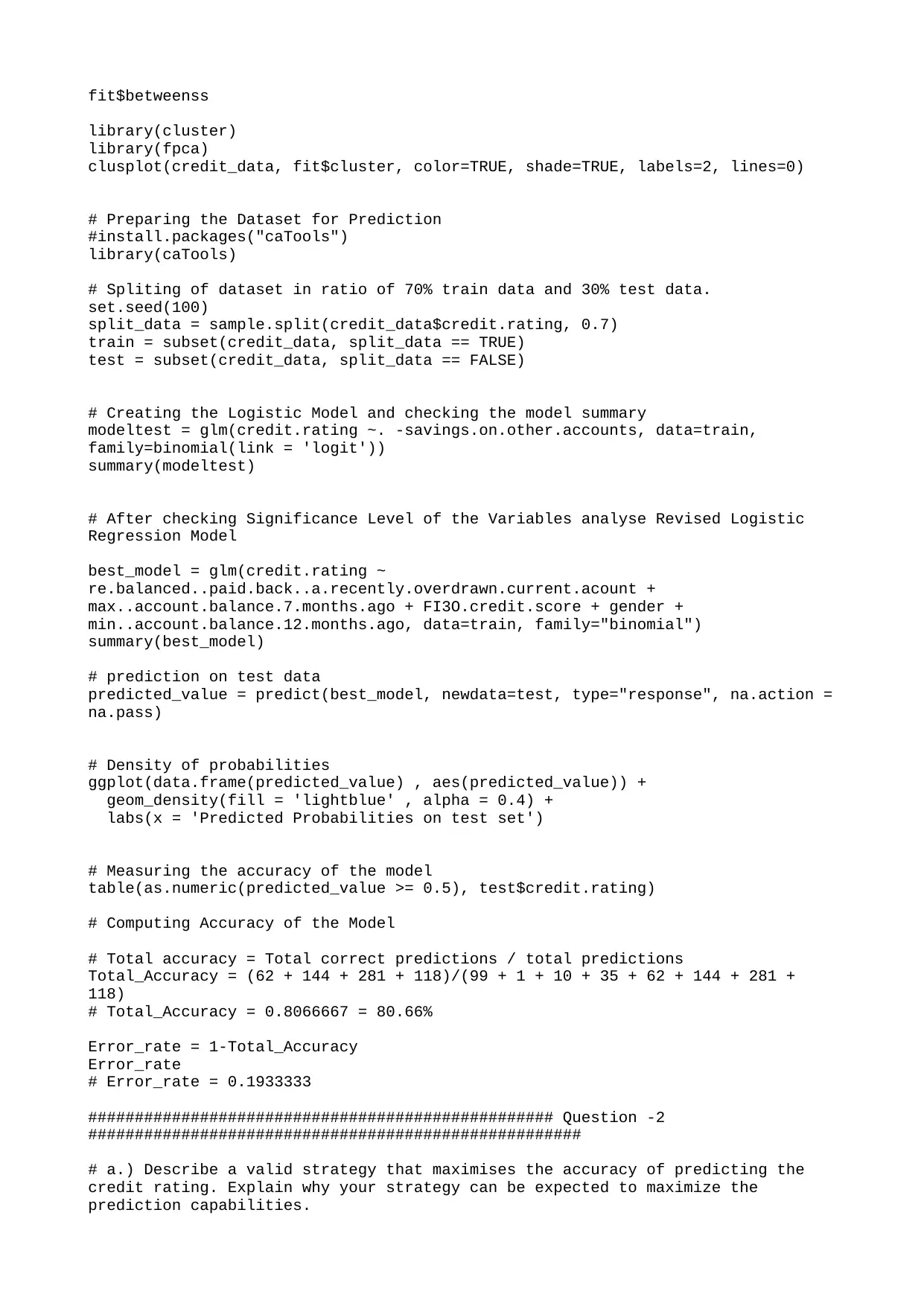

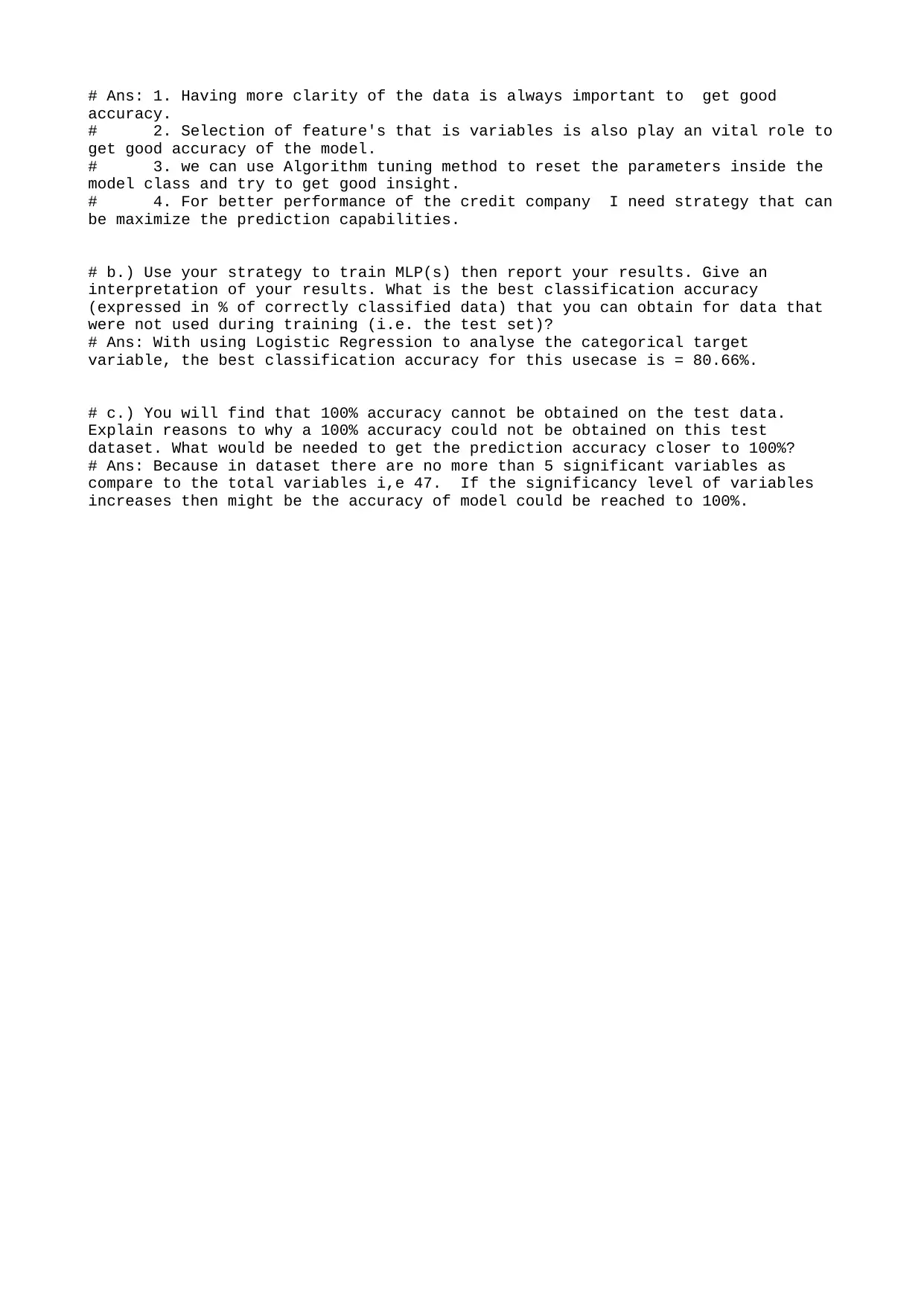

This document presents a comprehensive solution to a data mining assignment, focusing on credit rating prediction using the provided dataset. The solution begins with data import, cleaning (handling missing values and outliers), and exploratory data analysis. K-means clustering is applied for initial data exploration. The core of the solution involves building a logistic regression model to predict credit ratings, including feature selection and model evaluation. The document reports the accuracy achieved and discusses strategies to maximize prediction accuracy, including feature selection and algorithm tuning. The document also explains why 100% accuracy is not achievable in the given context, and provides insights on how to improve the model's performance. The solution provides a detailed analysis of the model's performance and provides a breakdown of the results and discusses strategies for improving model accuracy.

1 out of 3

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)