Usability Test Report: CI6310 User Experience, University, Semester 1


2017-2018

Faculty of Science, Engineering and Computing

Assessment Form

Module: CI 6310 User Experience

Title of Assignment: Usability Test Report

Submission details: see Hand-in of Reports (p1)

Module Learning Outcomes assessed in this piece of coursework

The learning outcomes for this piece of coursework are:

Research user needs and the implications of technology for work practice

Analyse users and their activities, and carry forward lessons learned

Design input modalities, output media and interactive content to appeal to an audience

Evaluate the quality of users’ experience

Reflect upon design practice and discuss the strengths and weaknesses of alternative techniques

The coursework is also an opportunity for you to develop as an individual and for employment (though this is not assessed):

Communicate and collaborate in a professional manner

Work in an ethical, social and security-conscious manner

Assignment Brief and assessment criteria (these will be discussed within a formally timetabled class)

This piece of coursework is to conduct and report a usability test of an existing system. The usability test should be based on the CIF standard method and reporting format described in the lectures. Typically, students ask their friends and family to participate in the usability test, or play ‘participant’ for each other – there is no need to approach strangers! A handful of participants is usually sufficient to cover a range of user personas and identify a range of usability issues. I know you do not have enough time to test a representative sample adequate for statistical analysis, but go about your work and analyse the results as if you were going to test many participants eventually.

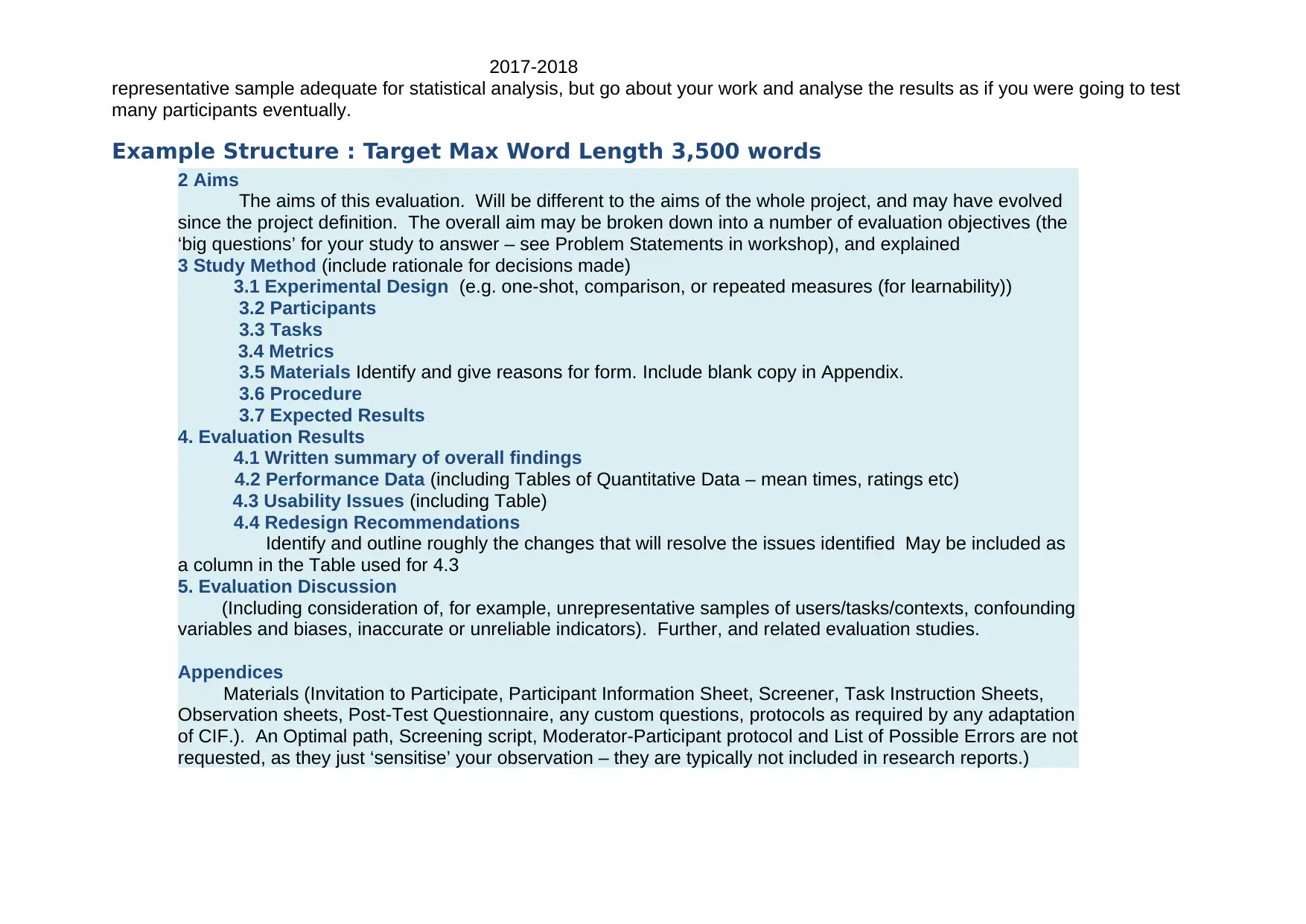

Example Structure (target maximum length: 3,500 words)

2 Aims

The aims of this evaluation. These will differ from the aims of the project as a whole, and may have evolved since the project was defined. The overall aim may be broken down into a number of evaluation objectives (the ‘big questions’ your study should answer – see Problem Statements in the workshop), each of which should be explained.

3 Study Method (include rationale for decisions made)

3.1 Experimental Design (e.g. one-shot, comparison, or repeated measures (for learnability))

3.2 Participants

3.3 Tasks

3.4 Metrics

3.5 Materials: identify the materials used and give reasons for their form. Include a blank copy of each in the Appendix.

3.6 Procedure

3.7 Expected Results

4. Evaluation Results

4.1 Written summary of overall findings

4.2 Performance Data (including tables of quantitative data – mean times, ratings, etc.)

4.3 Usability Issues (including Table)

4.4 Redesign Recommendations

Identify and roughly outline the changes that will resolve the issues identified. These may be included as a column in the table used for 4.3.

5. Evaluation Discussion

Including consideration of, for example, unrepresentative samples of users, tasks or contexts; confounding variables and biases; and inaccurate or unreliable indicators. Also discuss further and related evaluation studies.

Appendices

Materials (Invitation to Participate, Participant Information Sheet, Screener, Task Instruction Sheets, Observation Sheets, Post-Test Questionnaire, any custom questions, and protocols as required by any adaptation of CIF). An Optimal Path, Screening Script, Moderator-Participant Protocol and List of Possible Errors are not requested, as they merely ‘sensitise’ your observation; they are typically not included in research reports.
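Section 4.2 asks for tables of quantitative data such as mean times and ratings. As a minimal sketch, assuming the observation sheets have been transcribed into one record per participant per task, the per-task figures could be computed in Python as below. The `TaskObservation` fields, the `summarise` helper and the 1-7 rating scale are hypothetical illustrations, not part of the CIF method or the module materials.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskObservation:
    """One participant's attempt at one task (hypothetical record layout)."""
    participant: str  # anonymised ID, e.g. "P1"
    task: str         # task label from the Task Instruction Sheet
    seconds: float    # time on task
    completed: bool   # task completed without moderator assistance
    rating: int       # post-task ease rating (assumed 1-7 scale)


def summarise(observations):
    """Return {task: (mean_seconds, completion_rate, mean_rating)}."""
    by_task = {}
    for obs in observations:
        by_task.setdefault(obs.task, []).append(obs)
    summary = {}
    for task, rows in by_task.items():
        summary[task] = (
            mean(r.seconds for r in rows),               # mean time on task
            sum(r.completed for r in rows) / len(rows),  # share of successful attempts
            mean(r.rating for r in rows),                # mean ease rating
        )
    return summary


if __name__ == "__main__":
    # Placeholder values for illustration only, not real test results.
    data = [
        TaskObservation("P1", "T1", 42.0, True, 6),
        TaskObservation("P2", "T1", 58.0, True, 5),
        TaskObservation("P3", "T1", 95.0, False, 2),
        TaskObservation("P1", "T2", 30.0, True, 7),
    ]
    for task, (secs, rate, rating) in sorted(summarise(data).items()):
        print(f"{task}: mean time {secs:.1f}s, completion {rate:.0%}, mean rating {rating:.1f}")
```

With only a handful of participants, such means are descriptive rather than statistically representative, which is consistent with the brief's advice to analyse results as if more participants would be tested later.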


Illustrations

Follow the evaluation lectures carefully, and try to work systematically – a usability test is a little bit of science! A good usability test report is complete and follows the standard format. More advanced students will be able to adapt the standard method to accommodate the unique features of their project and the problem at hand – for example, to evaluate user experience, not just usability. It is important for usability test reports to be clear: human-computer interaction and user experiences can be difficult to describe fully and unambiguously, and we all need to understand the issues first, before we can think about how to resolve them.

Viva

You are asked to give a demo/viva of your raw data files (signed consent forms, completed questionnaires, observation sheets, video/audio recordings) to verify that you have indeed performed the usability test you claim to have done. The viva will take place in any timetabled workshop in January or February, i.e. immediately following the hand-in of the usability test report. Please bring your raw data files to any one of these workshops, and we can talk about how well your ‘test sessions’ ran. The marking scheme awards a few marks for a complete, well-organised and rich set of data. When a viva is requested, attendance and evidence are absolutely essential.

Feedback (including details of how and where feedback will be provided): as standard, see p4.

Further Guidance

Raw Data Is Private: Keep a Backup

Please keep your raw evaluation data (completed questionnaires, task instruction sheets, video recordings, etc.) as hard copy for use in the viva. Do not include raw data sets, or any software you may have created, in your report. To protect participants’ privacy, please do not include personal information in online submissions, as these are copied to servers, plagiarism databases, etc. all over the Internet. You may, however, include anonymised data extracts for illustration, such as quotes, video clips and screenshots with faces blurred out. Where possible, please place these figures next to the relevant paragraph in the report, though this may not always be possible.

Major mistakes

Conducting a heuristic evaluation, or some other kind of analytic inspection, rather than an empirical study.

Illustrations

Follow the evaluation lectures carefully, and try to work systematically – a usability test is a little bit of science!. A good usability

test report is complete, and follows the standard format. More advanced students will be able to adapt the standard method to

accommodate the unique features of their project and the problem at hand – for example, to evaluate user experience, not just

usability. It is important for usability test reports to be clear – human - computer interaction and user experiences can be difficult to

describe fully and unambiguously, and we need to all understand the issues first, before we can think about how to resolve them.

Viva

You are asked to give a demo/viva of raw data files (signed consent forms, completed questionnaires, observation sheets,

video/audio recordings) to verify you have indeed performed the usability test you claim to have done. The viva will occur during

any timetabled workshop during January and February i.e. immediately following the hand-in of the usability test report. Please

bring along your raw data files to any one of these workshops, and we can talk about how well your ‘test sessions’ ran. The

marking scheme awards a few marks for a complete, well organised and rich set of data. When a viva is requested, attendance

and evidence is absolutely essential.

Feedback (including details of how and where feedback will be provided) As standard see p4

Further Guidance

Raw Data is Private, Keep a BackUp

Please keep your raw evaluation data (completed questionnaires, task instruction sheets, video recordings etc) as hard copy for

use in the viva. Do not include raw data sets, or any software you may have created in your report. To ensure participants’

privacy, please do not include personal information in online submissions, as these are copied to servers and plagiarism databases

etc. all over the Internet. You may, however, include anonymised data extracts for illustration – quotes, video-clips and

screenshots with faces blurred out etc. Where possible, please place these figures next to the relevant paragraph in the report,

though this may not always be possible.

Major mistakes

Conducting heuristic evaluation, or some kind of analytic inspection, rather than an empirical study.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

2017-2018

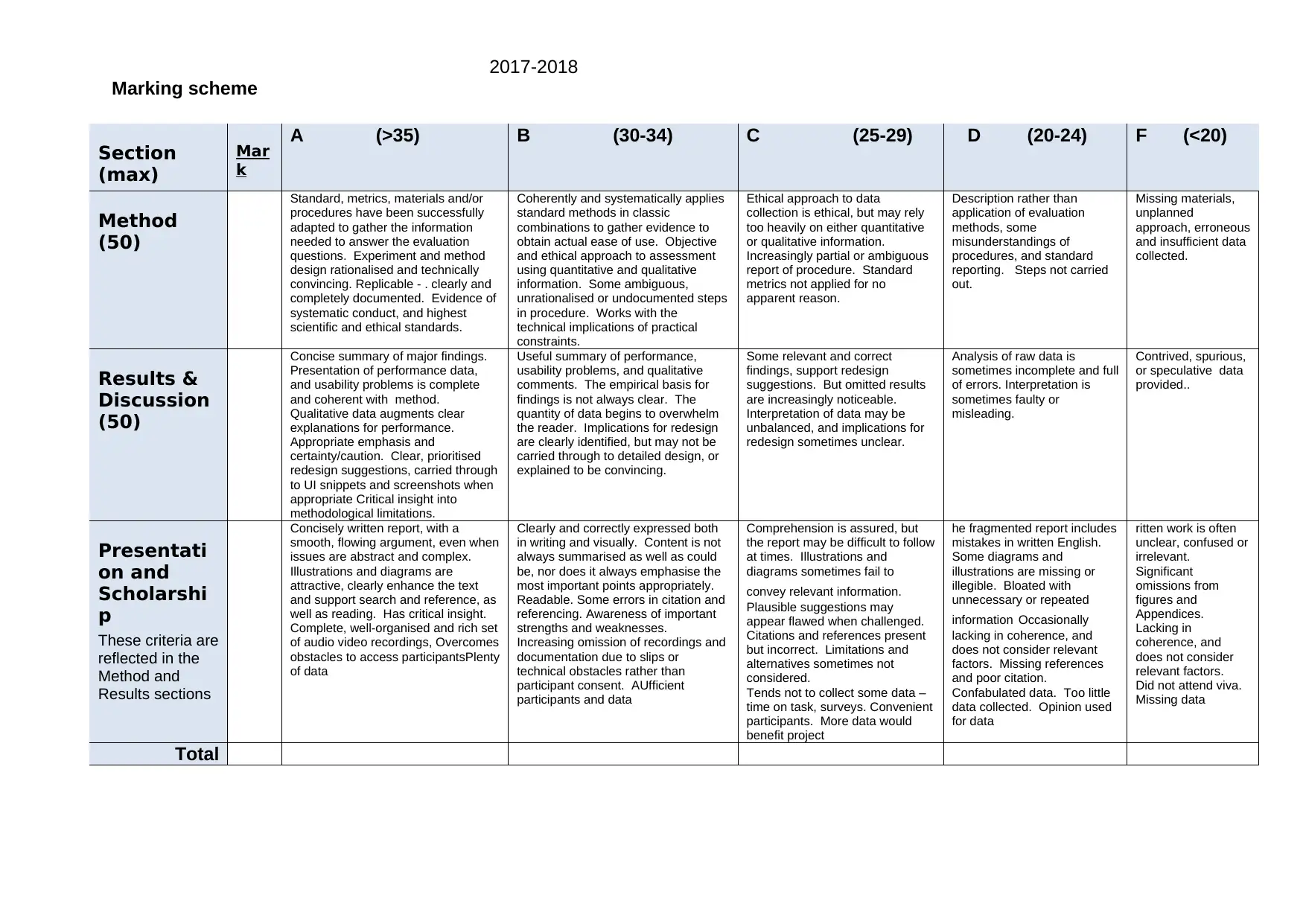

Marking scheme

Grade bands: A (>35), B (30-34), C (25-29), D (20-24), F (<20)

Method (maximum 50)
Mark:

A (>35): Standard, metrics, materials and/or procedures have been successfully adapted to gather the information needed to answer the evaluation questions. Experiment and method design rationalised and technically convincing. Replicable: clearly and completely documented. Evidence of systematic conduct, and of the highest scientific and ethical standards.

B (30-34): Coherently and systematically applies standard methods in classic combinations to gather evidence of actual ease of use. Objective and ethical approach to assessment using quantitative and qualitative information. Some ambiguous, unrationalised or undocumented steps in procedure. Works with the technical implications of practical constraints.

C (25-29): Approach to data collection is ethical, but may rely too heavily on either quantitative or qualitative information. Increasingly partial or ambiguous report of procedure. Standard metrics not applied for no apparent reason.

D (20-24): Description rather than application of evaluation methods; some misunderstandings of procedures and standard reporting. Steps not carried out.

F (<20): Missing materials, unplanned approach, erroneous and insufficient data collected.

Results & Discussion (maximum 50)
Mark:

A (>35): Concise summary of major findings. Presentation of performance data and usability problems is complete and coherent with the method. Qualitative data augments clear explanations for performance. Appropriate emphasis and certainty/caution. Clear, prioritised redesign suggestions, carried through to UI snippets and screenshots when appropriate. Critical insight into methodological limitations.

B (30-34): Useful summary of performance, usability problems, and qualitative comments. The empirical basis for findings is not always clear. The quantity of data begins to overwhelm the reader. Implications for redesign are clearly identified, but may not be carried through to detailed design, or explained convincingly.

C (25-29): Some relevant and correct findings support redesign suggestions, but omitted results are increasingly noticeable. Interpretation of data may be unbalanced, and implications for redesign are sometimes unclear.

D (20-24): Analysis of raw data is sometimes incomplete and full of errors. Interpretation is sometimes faulty or misleading.

F (<20): Contrived, spurious, or speculative data provided.

Presentation and Scholarship
Mark: these criteria are reflected in the Method and Results sections.

A (>35): Concisely written report, with a smooth, flowing argument, even when issues are abstract and complex. Illustrations and diagrams are attractive, clearly enhance the text, and support search and reference as well as reading. Has critical insight. Complete, well-organised and rich set of audio and video recordings. Overcomes obstacles to accessing participants. Plenty of data.

B (30-34): Clearly and correctly expressed, both in writing and visually. Content is not always summarised as well as it could be, nor does it always emphasise the most important points appropriately. Readable. Some errors in citation and referencing. Awareness of important strengths and weaknesses. Increasing omission of recordings and documentation due to slips or technical obstacles rather than participant consent. Sufficient participants and data.

C (25-29): Comprehension is assured, but the report may be difficult to follow at times. Illustrations and diagrams sometimes fail to convey relevant information. Plausible suggestions may appear flawed when challenged. Citations and references present but incorrect. Limitations and alternatives sometimes not considered. Tends not to collect some data (time on task, surveys). Convenient participants. More data would benefit the project.

D (20-24): The fragmented report includes mistakes in written English. Some diagrams and illustrations are missing or illegible. Bloated with unnecessary or repeated information. Occasionally lacking in coherence, and does not consider relevant factors. Missing references and poor citation. Confabulated data. Too little data collected. Opinion used as data.

F (<20): Written work is often unclear, confused or irrelevant. Significant omissions from figures and Appendices. Lacking in coherence, and does not consider relevant factors. Did not attend viva. Missing data.

Total
