Performance Analysis: Apriori Algorithm with Regression in Weka Tool

Added on 2020/03/07


This report presents an experimental analysis of the Apriori algorithm and regression techniques implemented within the Weka tool. The study focuses on optimizing execution time when generating frequent patterns, strong rules, and maximal rules. The implementation uses synthetic and real datasets, including a supermarket dataset, to evaluate the performance of the algorithms under varying support and confidence levels. The report includes implementation snapshots, result tables, and graphs comparing the execution times of Apriori and improved Apriori algorithms, along with Apriori with regression. Key findings highlight the impact of different support and confidence values on the execution time, with a specific emphasis on how linear regression can reduce the execution time for item sets with a predicted confidence of zero. Additionally, the report touches upon big data concepts like clustering and association rule mining, with a focus on minimizing costs associated with big data processing.

CHAPTER 5

EXPERIMENTAL RESULTS

5.1 IMPLEMENTATION OF PROPOSED WORK

In this thesis, the Apriori algorithm and the Apriori algorithm with a regression technique have been implemented in the Weka tool to analyze the execution time of generating frequent patterns, strong rules, closed rules and maximal rules. With this application we can measure the time taken to discover frequent patterns through the Apriori algorithm and through Apriori with the regression technique. In the Weka tool, frequent patterns were found for dynamically varied values of support and confidence, so the work shows directly which algorithm takes less execution time.
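To make "execution time of generating frequent patterns" concrete, the sketch below implements the classic level-wise Apriori algorithm (join, prune, count) and times one run for a given support threshold. It is a minimal pure-Python illustration, not the Weka implementation used in the thesis; the function names `apriori` and `timed_apriori` and the five-basket example are hypothetical.

```python
import time
from itertools import combinations


def apriori(transactions, min_sup):
    """Level-wise Apriori returning {k: {itemset: relative support}}.

    min_sup is a fraction of all transactions, like the min_sup = 0.15
    threshold used in the result tables.
    """
    n = len(transactions)
    baskets = [frozenset(t) for t in transactions]
    min_count = min_sup * n

    # L(1): count single items in one pass
    counts = {}
    for basket in baskets:
        for item in basket:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    current = {s: c / n for s, c in counts.items() if c >= min_count}

    levels = {}
    k = 1
    while current:
        levels[k] = current
        k += 1
        prev = list(current)
        candidates = set()
        # Join step: union two frequent (k-1)-itemsets that differ by one item
        for i in range(len(prev)):
            for j in range(i + 1, len(prev)):
                union = prev[i] | prev[j]
                # Prune step: every (k-1)-subset of a candidate must be frequent
                if len(union) == k and all(
                    frozenset(sub) in current for sub in combinations(union, k - 1)
                ):
                    candidates.add(union)
        # Count step: one pass over the transactions for this level
        counts = {c: 0 for c in candidates}
        for basket in baskets:
            for c in candidates:
                if c <= basket:
                    counts[c] += 1
        current = {s: c / n for s, c in counts.items() if c >= min_count}
    return levels


def timed_apriori(transactions, min_sup):
    """Return ({k: |L(k)|}, elapsed seconds) for one Apriori run."""
    start = time.perf_counter()
    levels = apriori(transactions, min_sup)
    elapsed = time.perf_counter() - start
    return {k: len(v) for k, v in levels.items()}, elapsed


# Rerun with different ("dynamic") support thresholds to compare timings
baskets = [["bread", "butter", "milk"], ["bread", "butter"], ["bread", "milk"],
           ["butter", "milk"], ["bread", "butter", "milk"]]
for sup in (0.4, 0.6):
    sizes, secs = timed_apriori(baskets, sup)
    print(sup, sizes, f"{secs:.6f}s")
```

On this toy data, min_sup = 0.4 yields L(1), L(2) and L(3) sizes of 3, 3 and 1, while min_sup = 0.6 stops after L(2). A higher support threshold generates fewer candidates, which is why execution time depends so strongly on the support value.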

5.1.1 .NET FRAMEWORK 4.5

.NET Framework 4.5 was released on 15 August 2012, and a number of new or enhanced features were added in this version. It runs only on Windows Vista or later and uses Common Language Runtime 4.0 with some additional runtime features. .NET Framework 4.5 is supported on Windows Vista, Server 2008, 7, Server 2008 R2, 8, Server 2012, 8.1 and Server 2012 R2. Applications built on .NET Framework 4.5 also keep running on computers with .NET Framework 4.6 installed, which supports additional operating systems.

.NET for Metro-style apps is available for building Metro-style apps using C# or Visual Basic.

CORE FEATURES

Ability to limit how long the regular expression engine will attempt to resolve a regular expression before it times out.

Ability to define the culture for an application domain.


Console support for Unicode (UTF-16) encoding.

Support for versioning of cultural string ordering and comparison data.

Better performance when retrieving resources.

Native support for Zip compression (previous versions supported the compression algorithm, but not the archive format).

Ability to customize a reflection context to override default reflection behavior through the CustomReflectionContext class.

New asynchronous features were added to the C# and Visual Basic languages. These features include a task-based model for performing asynchronous operations, implementing futures and promises.

5.1.2 IMPLEMENTATION SNAPSHOT

We created the framework in the Weka tool using the regression technique and the Apriori algorithm to generate frequent patterns from the dataset values.

In this framework we defined a selected set of items to build the synthetic dataset. We then compare the results of Apriori and of regression for different confidence and support values.
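The comparison over different support and confidence values can be illustrated with a small grid sweep that counts the strong rules obtained for each (min_sup, min_conf) pair. This is a brute-force sketch assuming short baskets (itemsets of up to three items). The names `support_table`, `strong_rules` and `rule_grid` are hypothetical, and this is not the Weka implementation.

```python
from itertools import combinations


def support_table(transactions, max_len=3):
    """Brute-force relative support of every itemset with up to max_len items."""
    n = len(transactions)
    counts = {}
    for t in transactions:
        items = sorted(set(t))
        for k in range(1, max_len + 1):
            for combo in combinations(items, k):
                key = frozenset(combo)
                counts[key] = counts.get(key, 0) + 1
    return {s: c / n for s, c in counts.items()}


def strong_rules(supports, min_sup, min_conf):
    """Rules X -> Y with support(X u Y) >= min_sup and confidence >= min_conf."""
    rules = []
    for itemset, sup in supports.items():
        if len(itemset) < 2 or sup < min_sup:
            continue
        for r in range(1, len(itemset)):
            for lhs in map(frozenset, combinations(sorted(itemset), r)):
                conf = sup / supports[lhs]  # support(X u Y) / support(X)
                if conf >= min_conf:
                    rules.append((lhs, itemset - lhs, sup, conf))
    return rules


def rule_grid(transactions, sup_values, conf_values):
    """Number of strong rules for every (min_sup, min_conf) pair."""
    supports = support_table(transactions)
    return {(s, c): len(strong_rules(supports, s, c))
            for s in sup_values for c in conf_values}


baskets = [["bread", "butter", "milk"], ["bread", "butter"], ["bread", "milk"],
           ["butter", "milk"], ["bread", "butter", "milk"]]
print(rule_grid(baskets, [0.4, 0.6], [0.6, 0.8]))
```

Raising either threshold shrinks the rule set. In this toy data every pair has confidence of about 0.75, so all pair rules disappear at min_conf = 0.8.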

Fig. 5.1 Regression implemented for optimization (Weka snapshot)


Fig. 5.1.1 Snapshot of regression implemented in the Weka tool for measuring execution time.

Fig. 5.2 Snapshot of time optimization through Apriori on support and confidence (Weka snapshot)


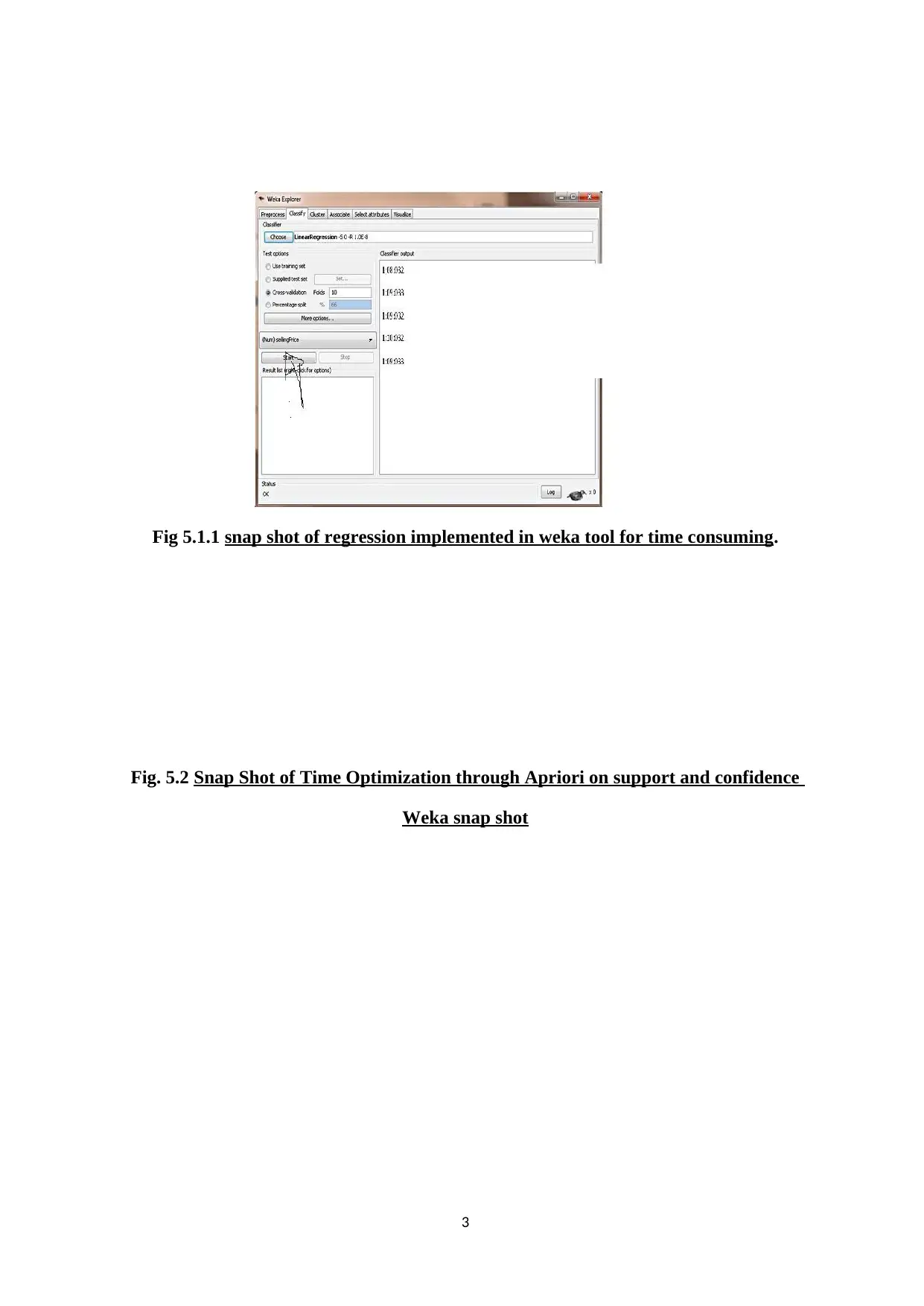

Fig. 5.3 Snapshot of time optimization through improved Apriori on support and confidence (Weka snapshot)


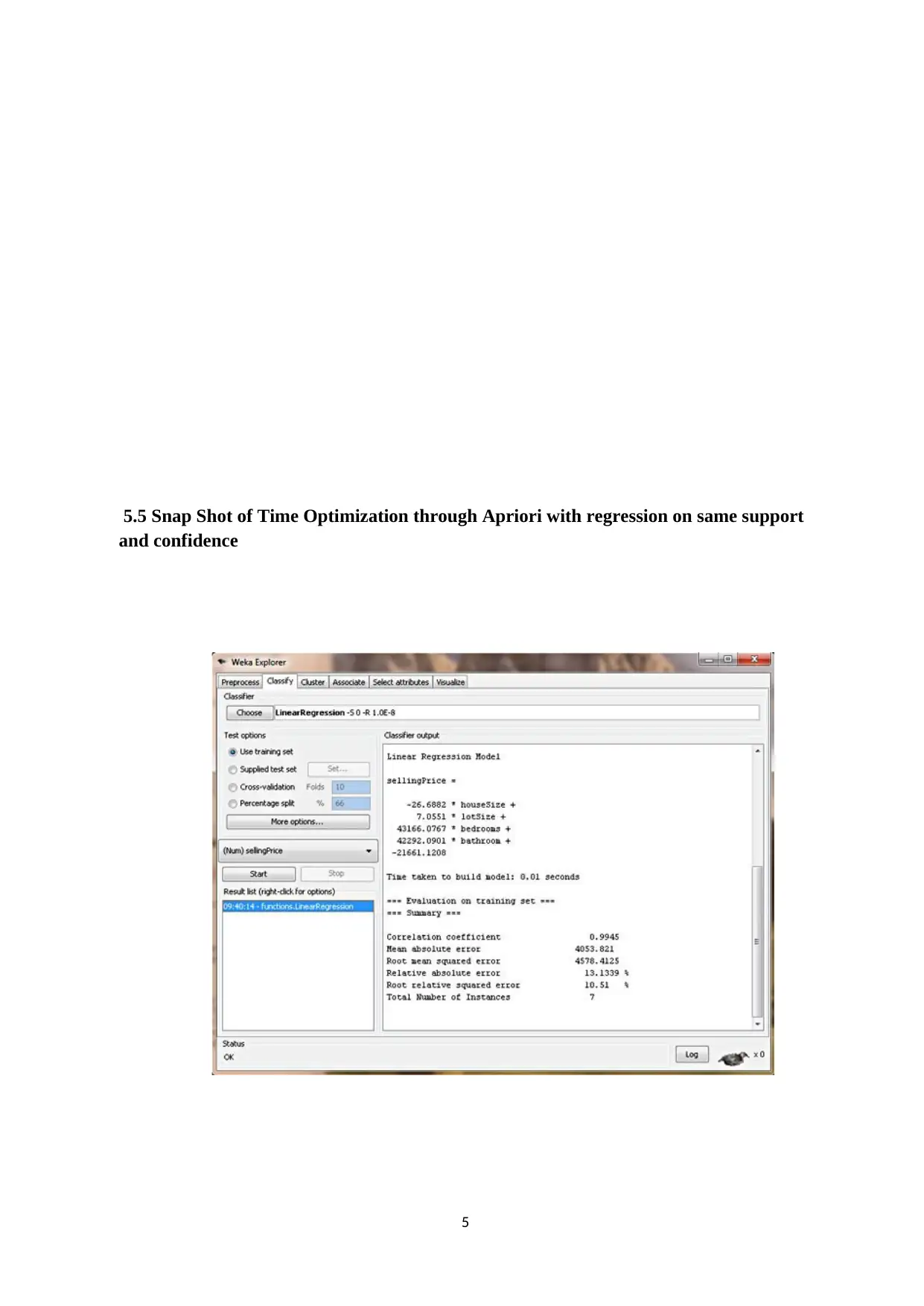

Fig. 5.5 Snapshot of time optimization through Apriori with regression on the same support and confidence


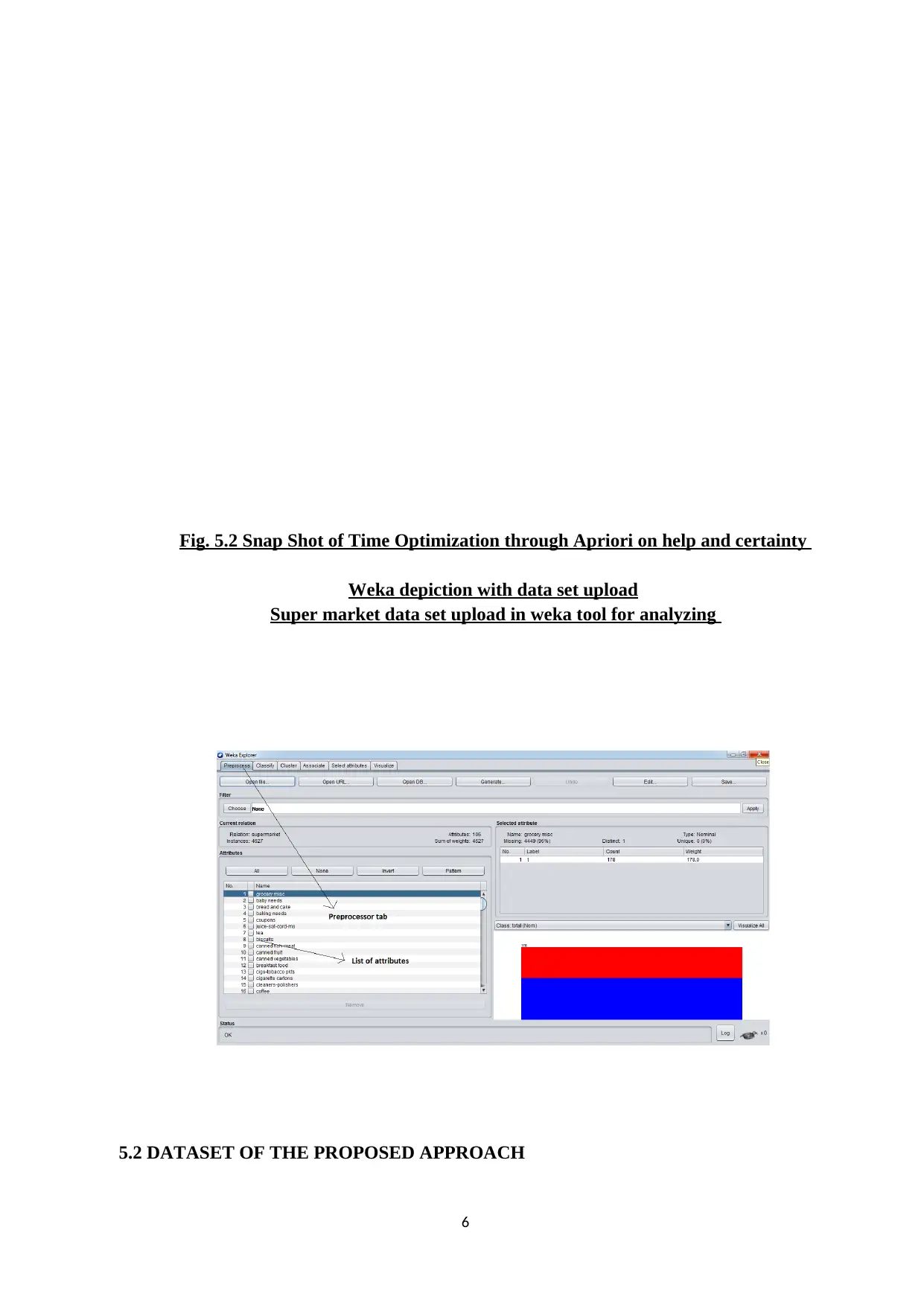

Fig. 5.2 Snapshot of time optimization through Apriori on support and confidence: Weka snapshot with dataset upload

The supermarket dataset is uploaded into the Weka tool for analysis.

5.2 DATASET OF THE PROPOSED APPROACH


To evaluate the three proposed approaches, the following datasets are used:

Synthetic dataset

Supermarket dataset

Real dataset

Synthetic Dataset

Synthetic databases were created using MS Excel. The data imitates transactions in a retail domain, and the performance of the algorithms is shown for these synthetic datasets. The following datasets were produced for the purpose of evaluating the three proposed approaches:

Supermarket Dataset

We used the supermarket dataset, which has 106 attributes and 4627 instances.

The real database can be converted into a synthetic database in the Apriori input format, where each item is represented by a letter value a, b, …, z. For example:

a = bread

b = butter, and so on.
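The letter-coding step can be sketched as a dictionary that assigns the next unused letter to each new item name. This is a minimal illustration with a hypothetical function name, assuming codes are assigned in order of first appearance. Because the a..z scheme has only 26 codes, the sketch raises an error beyond 26 distinct items. A dataset with 106 attributes, such as the supermarket data above, would need multi-letter codes instead.

```python
import string


def encode_items(transactions):
    """Map each distinct item name to a letter (a = first item seen, b = next, ...).

    Returns the encoded transactions and the item -> letter mapping.
    Assumes at most 26 distinct items, since a..z offers only 26 codes.
    """
    codes = {}
    encoded = []
    for basket in transactions:
        row = []
        for item in basket:
            if item not in codes:
                if len(codes) == len(string.ascii_lowercase):
                    raise ValueError("more than 26 distinct items; a..z codes exhausted")
                codes[item] = string.ascii_lowercase[len(codes)]
            row.append(codes[item])
        encoded.append(row)
    return encoded, codes


rows, mapping = encode_items([["bread", "butter"], ["butter", "milk"]])
print(rows)     # [['a', 'b'], ['b', 'c']]
print(mapping)  # {'bread': 'a', 'butter': 'b', 'milk': 'c'}
```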

REAL DATASET

Association rule mining also plays a significant role in finding frequent patterns in real databases, such as survey data from real research and data about items and their frequent patterns. This supports decision-making about the choice of items, and it does so in less time.

5.2.1 ASSESSMENT PARAMETERS


The performance of the proposed market basket methodology is assessed using the following parameter:

Execution time

Execution time

Execution time is the time taken by the standard Apriori procedure and by the regression strategy to run on each dataset. The technique that takes less time to execute is considered better. This parameter is critical in market basket analysis because it allows a decision about the relative performance of the proposed methods. These performance values can in turn guide improvements in supermarket operations.
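Because the comparison rests on wall-clock time, each technique should be timed on the same input over several runs. Keeping the smallest time reduces noise from other processes, a convention the Python `timeit` documentation also recommends over reporting the mean. The helpers `best_time` and `faster` below are a hypothetical sketch, not part of the thesis code.

```python
import time


def best_time(func, *args, repeat=5):
    """Smallest wall-clock time (seconds) over `repeat` runs of func(*args)."""
    timings = []
    for _ in range(repeat):
        start = time.perf_counter()
        func(*args)
        timings.append(time.perf_counter() - start)
    return min(timings)


def faster(candidates, *args, repeat=5):
    """Time each named technique on the same input; return (winner, {name: seconds})."""
    results = {name: best_time(f, *args, repeat=repeat) for name, f in candidates.items()}
    winner = min(results, key=results.get)
    return winner, results
```

For example, `faster({"apriori": run_apriori, "apriori_regression": run_regression}, data)` would report which technique finishes first. Both callables in this example are hypothetical placeholders for the two implementations being compared.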

Assessment Parameters

The proposed market basket analysis methodologies are assessed using the following parameters:

Accuracy

Area Under the Curve (AUC)

Sensitivity

Specificity

Execution time
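The text lists these metrics without defining them. The sketch below uses their standard definitions: accuracy = (TP + TN) / all cases, sensitivity = TP / (TP + FN), specificity = TN / (TN + FP), and AUC computed as the probability that a random positive case scores above a random negative one (ties count one half). How the thesis assigns positive and negative labels to association rules is not stated, so the labels and scores here are assumptions.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity (true-positive rate) and specificity from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return accuracy, sensitivity, specificity


def auc(scores, labels):
    """Area under the ROC curve via the rank (Mann-Whitney U) formulation.

    labels are 1 for positive and 0 for negative cases; ties count as one half.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

The pairwise loop is quadratic, which is fine for illustration. A sort-based rank computation gives the same value faster on large result sets.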

5.3 RESULT ANALYSIS

In this section, we examine the results, presented as tables and graphs, for different support and confidence values of the proposed work.

5.3.1 SIMULATION PARAMETERS


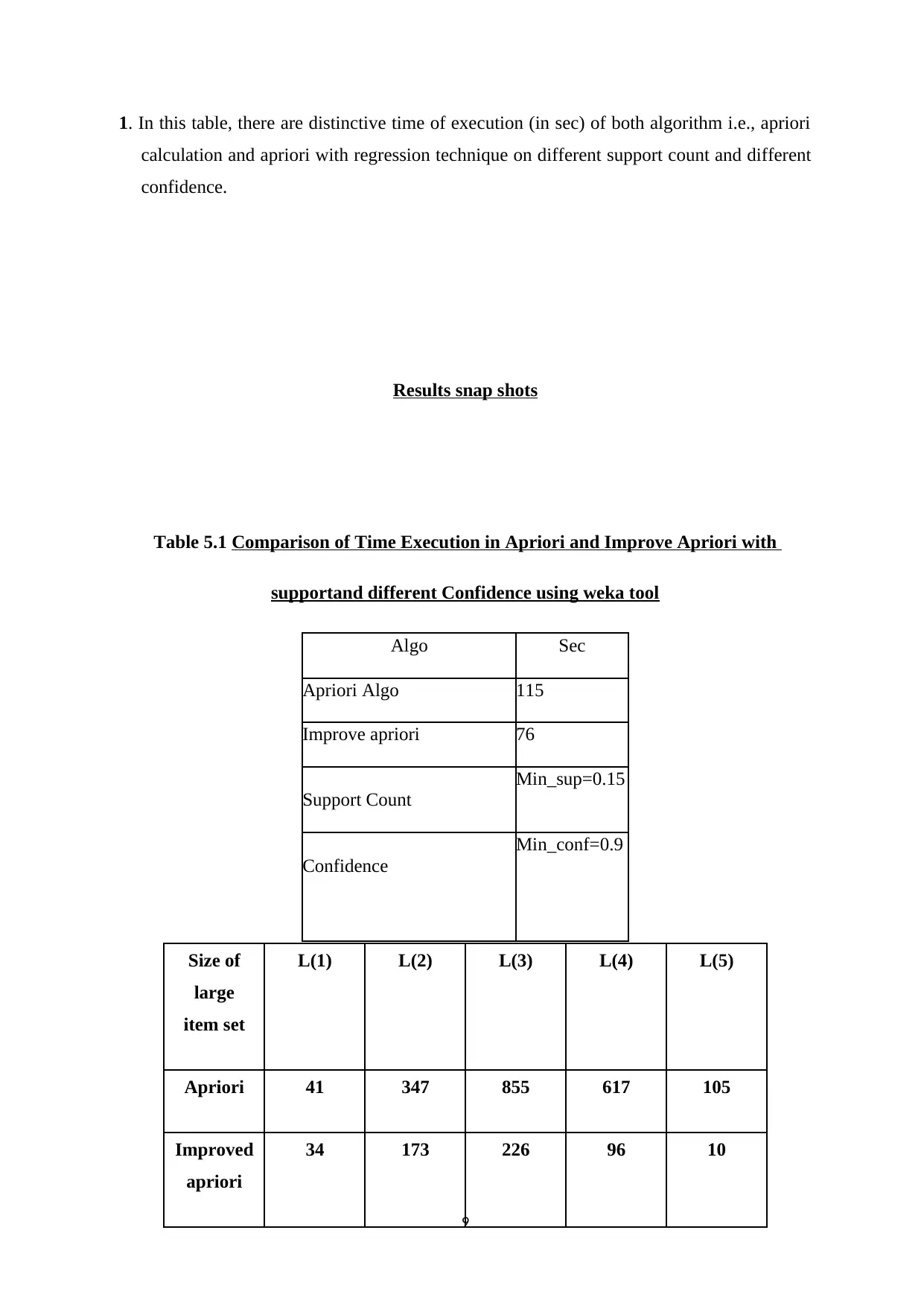

The table below lists the execution time (in seconds) of both algorithms, i.e., the Apriori algorithm and Apriori with the regression technique, for different support counts and confidence values.

Result snapshots

Table 5.1 Comparison of Execution Time of Apriori and Improved Apriori with Different Support and Different Confidence Using the Weka Tool

Support count: min_sup = 0.15; confidence: min_conf = 0.9

| Algorithm | Execution time (s) |
|---|---|
| Apriori | 115 |
| Improved Apriori | 76 |

| Size of large itemsets | L(1) | L(2) | L(3) | L(4) | L(5) |
|---|---|---|---|---|---|
| Apriori | 41 | 347 | 855 | 617 | 105 |
| Improved Apriori | 34 | 173 | 226 | 96 | 10 |


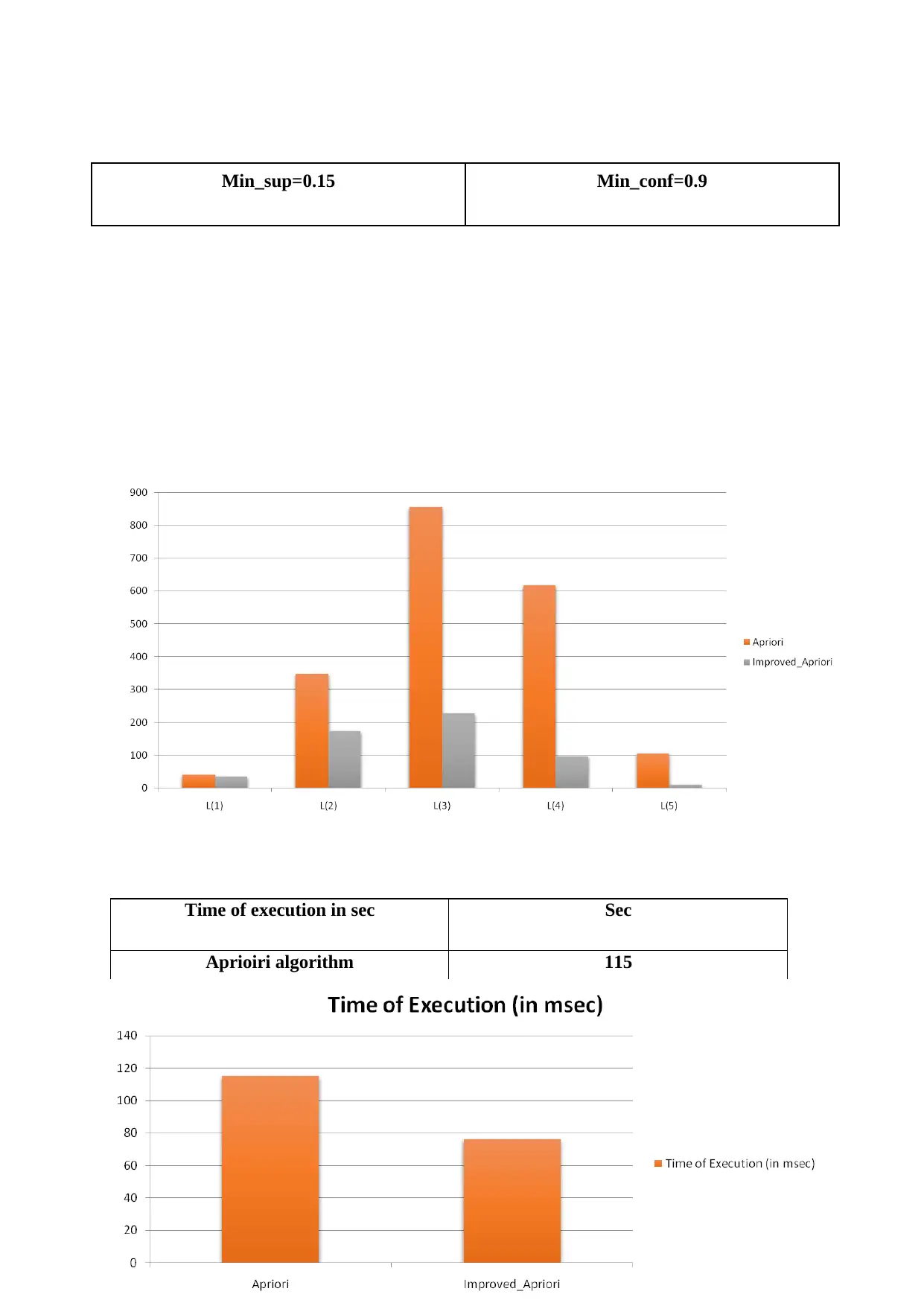

Fig. 5.6 Execution time with different support and different confidence (min_sup = 0.15, min_conf = 0.9).

In this result analysis, we plot the graph for different support and confidence values. The .NET Framework was used to implement this execution-time measurement. The analysis shows that the linear regression technique reduces the execution time for itemsets whose predicted confidence is zero.
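The text does not specify which input the regression uses or how it is trained. The sketch below therefore makes several assumptions. A one-variable ordinary least-squares line is fitted on rules whose confidence has already been computed, using a hypothetical cheap per-candidate feature. Candidates whose predicted confidence is zero or below are then skipped, so only the remaining ones need the expensive exact confidence count. The function names are illustrative only.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x with a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def prune_by_predicted_confidence(candidates, feature, a, b):
    """Split candidates into (kept, skipped); skip those predicted at confidence <= 0.

    `feature` is a hypothetical function giving one cheap numeric value per
    candidate. Only `kept` candidates go on to the exact confidence count.
    """
    kept, skipped = [], []
    for cand in candidates:
        predicted = a + b * feature(cand)
        (kept if predicted > 0 else skipped).append(cand)
    return kept, skipped


# Fit on already-evaluated rules, then screen new candidates
a, b = fit_line([0.1, 0.2, 0.3, 0.4], [0.0, 0.2, 0.4, 0.6])
feature_of = {"r1": 0.05, "r2": 0.08, "r3": 0.35}
print(prune_by_predicted_confidence(["r1", "r2", "r3"], feature_of.get, a, b))
```

The saving comes from not counting skipped candidates. The trade-off is that a misprediction can discard a rule that would actually have passed, so the screen is approximate.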

| Algorithm | Execution time (s) |
|---|---|
| Apriori algorithm | 115 |
| Improved Apriori | 76 |


Fig. 5.7 Execution time optimization of Apriori versus improved Apriori (in seconds)

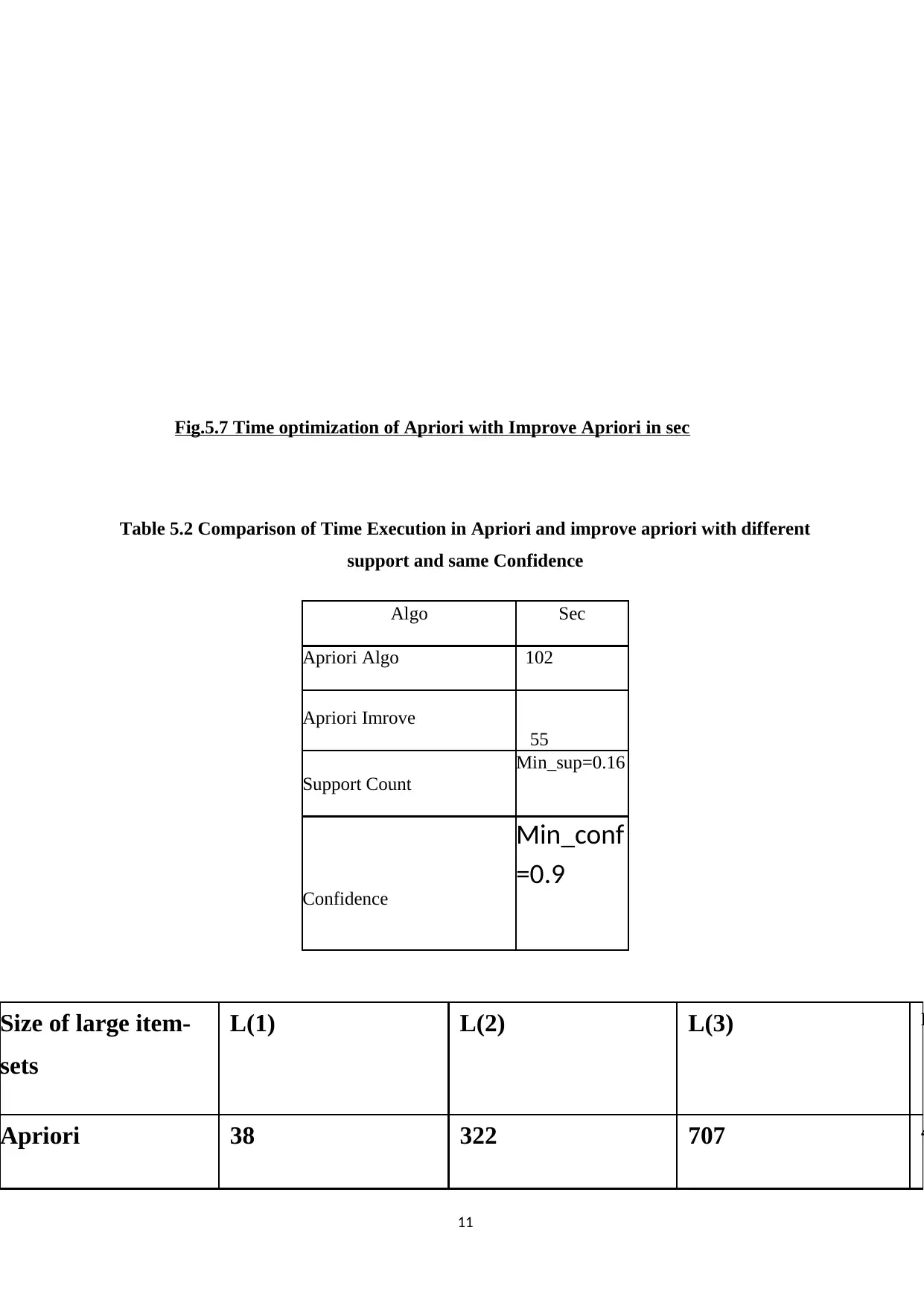

Table 5.2 Comparison of Execution Time of Apriori and Improved Apriori with Different Support and Same Confidence

Support count: min_sup = 0.16; confidence: min_conf = 0.9

| Algorithm | Execution time (s) |
|---|---|
| Apriori | 102 |
| Improved Apriori | 55 |

| Size of large itemsets | L(1) | L(2) | L(3) | L |
|---|---|---|---|---|
| Apriori | 38 | 322 | 707 | 4 |
| Improved Apriori | 31 | 148 | 78 | 5 |
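The size of the improvement is easier to judge as a relative figure. The short calculation below uses only numbers reported in Tables 5.1 and 5.2. The truncated last column of Table 5.2 is left out, and the variable names are illustrative.

```python
def reduction(before, after):
    """Percentage reduction going from `before` to `after`."""
    return 100.0 * (before - after) / before


# Execution times (seconds) as reported in Tables 5.1 and 5.2
table_5_1 = {"apriori": 115, "improved": 76}
table_5_2 = {"apriori": 102, "improved": 55}

# Large-itemset counts L(1)..L(5) from Table 5.1
apriori_sizes = [41, 347, 855, 617, 105]
improved_sizes = [34, 173, 226, 96, 10]

for name, t in (("Table 5.1", table_5_1), ("Table 5.2", table_5_2)):
    print(f"{name}: {reduction(t['apriori'], t['improved']):.1f}% less time")

total_a, total_i = sum(apriori_sizes), sum(improved_sizes)
print(f"Table 5.1 itemsets: {total_a} vs {total_i} "
      f"({reduction(total_a, total_i):.1f}% fewer)")
```

The improved variant cuts execution time by about 33.9% in Table 5.1 and 46.1% in Table 5.2. In Table 5.1 it also generates 539 large itemsets instead of 1965, about 72.6% fewer, which is consistent with the shorter run time.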

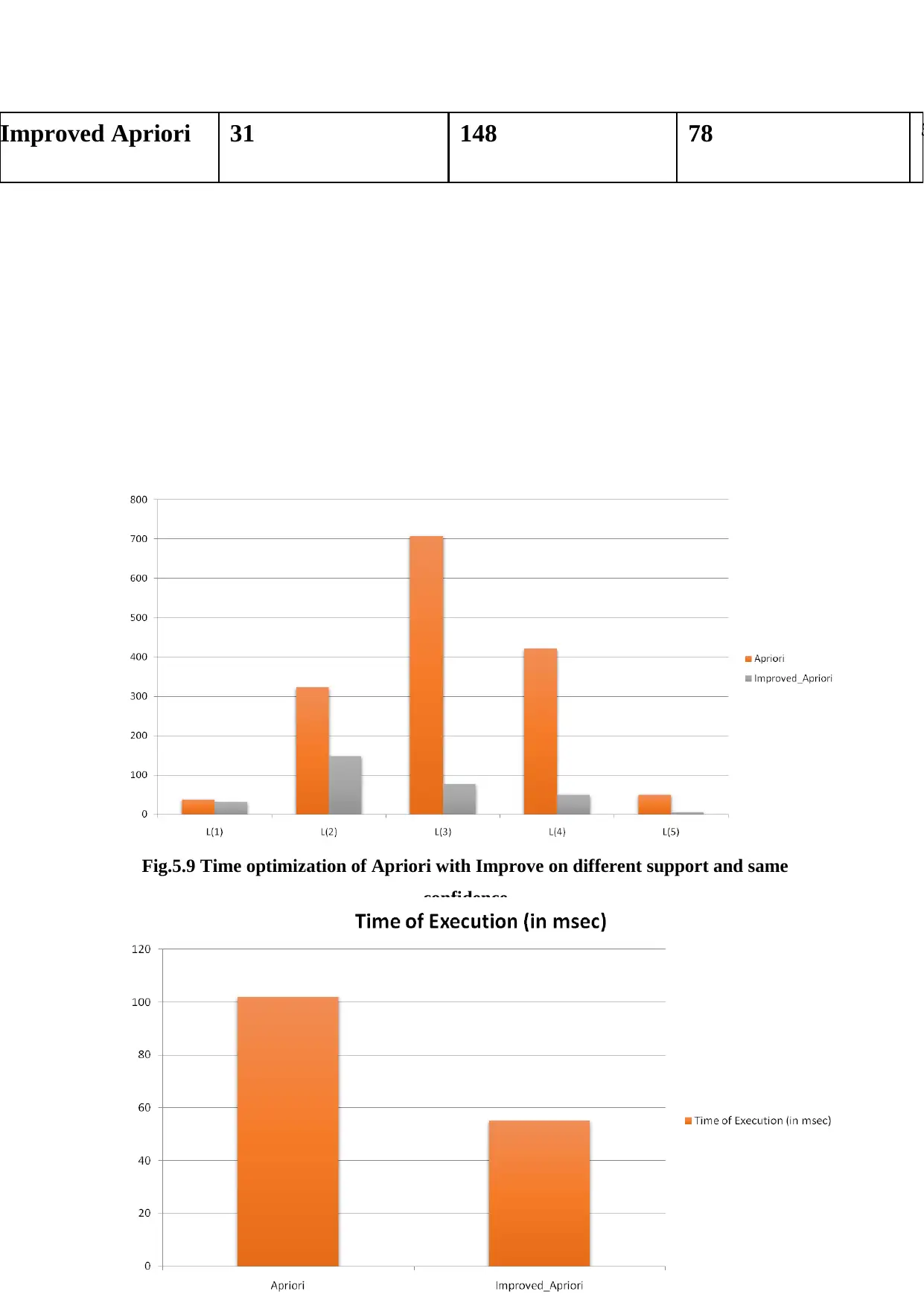

Fig. 5.8 Execution time with different support and the same confidence.

In this result analysis, we plot the graph for different support values with the same confidence. The .NET Framework was again used to implement this execution-time measurement. The analysis shows that the linear regression technique reduces the execution time for itemsets whose predicted confidence is zero.


Fig. 5.9 Execution time optimization of Apriori versus improved Apriori for different support and the same confidence
