Analysis of Strange Sum Algorithms

VerifiedAdded on 2019/09/21

|9

|2425

|481

Report

AI Summary

The provided content discusses the complexity of two recursive functions, `strange_sumA` and `strange_sumB`, which are used to calculate the sum of an array's elements. The analysis shows that `strange_sumA` has a time complexity of O(n log2 n) and `strange_sumB` has a time complexity of O(n). The functions' complexities differ due to the different number of operations performed before calling themselves recursively.

Contribute Materials

Your contribution can guide someone’s learning journey. Share your

documents today.

/*

Author : Yaman Kumar

For any further assistance contact : mr.yamankumar@gmail.com

*/

Ans .1 ) Ryan is not right. It is not the case that O(n log n)-time solution must always be faster than

Brandon’s O(n2 ) solution. This is so since big Oh notation hides constant factor, in this notation we just

take the upper bound on the number of operations as a function of n. The actual number of operations

includes not just this upper bound but a constant factor too. This constant factor though plays a trivial

role once n gets larger but it plays a significant role when n is small. This is the case that Brandon has

observed i.e. n must have been small and thus the overall running time depended largely on these

constant factors

Ans. 2)

a. (200 n^3) > (5nlogn + 4n) > (10n + 2n) >(4 logn)

b. (60000*n^6) > (7nlogn) > (8n+9) = (6n) (asymptotically and not actually)

c. (3^(n-1)) > (n^90) > (n^3 – 2000) > (2n^2 + 200n^2logn) >(2 ^100)

d. (2^(n+2)) > (10^logn) > (4n) > (3logn) > 63

Ans.3)

a) n^4 + 3n <= 4*n^4

This is true for all n>=1.

Thus the big Oh notation for the above is O(n^4)

b) 15n^16 + 3nlogn + 2n <= 17*n^16

This is true for all n>=1.

Thus the big Oh notation for the above is O(n^16)

c) 3nlogn + 2logn <= 4nlogn

This is true for all n>=1.

Thus the big Oh notation for the above is O(nlogn)

d) 12n*3^n -50n <= 12n*3^n

This is true for all n>=1.

Thus the big Oh notation for the above is O(n*3^n)

Ans.4)

a) 11*n^2 <= 5logn + 12n^2 <= 13*n^2

This is true for all n>=1.

Here k0 = 11 , k1 = 13, n0 = 1

Thus the theta notation for the above is Θ(n^2)

Author : Yaman Kumar

For any further assistance contact : mr.yamankumar@gmail.com

*/

Ans .1 ) Ryan is not right. It is not the case that O(n log n)-time solution must always be faster than

Brandon’s O(n2 ) solution. This is so since big Oh notation hides constant factor, in this notation we just

take the upper bound on the number of operations as a function of n. The actual number of operations

includes not just this upper bound but a constant factor too. This constant factor though plays a trivial

role once n gets larger but it plays a significant role when n is small. This is the case that Brandon has

observed i.e. n must have been small and thus the overall running time depended largely on these

constant factors

Ans. 2)

a. (200 n^3) > (5nlogn + 4n) > (10n + 2n) >(4 logn)

b. (60000*n^6) > (7nlogn) > (8n+9) = (6n) (asymptotically and not actually)

c. (3^(n-1)) > (n^90) > (n^3 – 2000) > (2n^2 + 200n^2logn) >(2 ^100)

d. (2^(n+2)) > (10^logn) > (4n) > (3logn) > 63

Ans.3)

a) n^4 + 3n <= 4*n^4

This is true for all n>=1.

Thus the big Oh notation for the above is O(n^4)

b) 15n^16 + 3nlogn + 2n <= 17*n^16

This is true for all n>=1.

Thus the big Oh notation for the above is O(n^16)

c) 3nlogn + 2logn <= 4nlogn

This is true for all n>=1.

Thus the big Oh notation for the above is O(nlogn)

d) 12n*3^n -50n <= 12n*3^n

This is true for all n>=1.

Thus the big Oh notation for the above is O(n*3^n)

Ans.4)

a) 11*n^2 <= 5logn + 12n^2 <= 13*n^2

This is true for all n>=1.

Here k0 = 11 , k1 = 13, n0 = 1

Thus the theta notation for the above is Θ(n^2)

Secure Best Marks with AI Grader

Need help grading? Try our AI Grader for instant feedback on your assignments.

b) 6nlogn <= 6nlogn + 4n <= 20nlogn

This is true for all n>=2.

Here k0 = 6 , k1 = 20, n0 = 2

Thus the theta notation for the above is Θ(nlogn)

Ans. 5)

a) 2^(n+5) ∈ O(2^n)

This is true since

If f(n) ∈ O(2^n), then this implies

0 <= f(n) <= k*(2^n) for all n >= n0

This is true for 2^(n+5), here

0 <= 2^(n+5) <= 32*2^n for all n >= 1

Thus n0 = 1 and k = 32 in the above formula

b) n^2 ∈ Ω(nlogn)

This is true since

If f(n) ∈ Ω (2^n), then this implies

0 <= k*(nlogn) <= f(n) for all n >= n0

This is true for n^2, here

0 <= nlogn <= n^2 for all n >= 1

Thus n0 = 1 and k = 1 in the above formula

Ans.6)

a)

This has order O(n^2). This is shown in the following justification:

Let n= arr.length

In the worst case, i.e. when arr[i] + arr[j] is never zero for any (i,j), then the following takes place

For i=0, the inner loop runs from j=0 to j=n, thus total operations executed = n

For i=1, the inner loop runs from j=1 to j=n, thus total operations executed = n-1

For i=2, the inner loop runs from j=2 to j=n, thus total operations executed = n-2

.

.

This is true for all n>=2.

Here k0 = 6 , k1 = 20, n0 = 2

Thus the theta notation for the above is Θ(nlogn)

Ans. 5)

a) 2^(n+5) ∈ O(2^n)

This is true since

If f(n) ∈ O(2^n), then this implies

0 <= f(n) <= k*(2^n) for all n >= n0

This is true for 2^(n+5), here

0 <= 2^(n+5) <= 32*2^n for all n >= 1

Thus n0 = 1 and k = 32 in the above formula

b) n^2 ∈ Ω(nlogn)

This is true since

If f(n) ∈ Ω (2^n), then this implies

0 <= k*(nlogn) <= f(n) for all n >= n0

This is true for n^2, here

0 <= nlogn <= n^2 for all n >= 1

Thus n0 = 1 and k = 1 in the above formula

Ans.6)

a)

This has order O(n^2). This is shown in the following justification:

Let n= arr.length

In the worst case, i.e. when arr[i] + arr[j] is never zero for any (i,j), then the following takes place

For i=0, the inner loop runs from j=0 to j=n, thus total operations executed = n

For i=1, the inner loop runs from j=1 to j=n, thus total operations executed = n-1

For i=2, the inner loop runs from j=2 to j=n, thus total operations executed = n-2

.

.

.

For i=n-1, the inner loop runs from j=n-1 to j=n, thus total operations executed = 1

Summing over all the operations,

Total number of operations executed = Sum of all the above steps

= n + n-1 + n-2 + …. + 1

= n*(n+1)/2

Thus total number of operations = n^2/2 + n/2

n^2/2 + n/2 ∈ O(n^2)

This is true since

If f(n) ∈ O(n^2), then this implies

0 <= f(n) <= k*(n^2) for all n >= n0

This is true for n^2/2 + n/2, here

0 <= n^2/2 + n/2 <= n^2 for all n >= 1

Thus n0 = 1 and k = 2 in the above formula

b)

The running time of this is O(1). This is so since:

For i = 1, the loop gets executed

For i=2, the condition i%2 == 0 becomes true, and hence it breaks out of loop

Then 1 is returned

Thus the number of operations carried out is independent of n and is a constant of 2 operations.

Thus the complexity is also O(1) which is a constant.

For i=n-1, the inner loop runs from j=n-1 to j=n, thus total operations executed = 1

Summing over all the operations,

Total number of operations executed = Sum of all the above steps

= n + n-1 + n-2 + …. + 1

= n*(n+1)/2

Thus total number of operations = n^2/2 + n/2

n^2/2 + n/2 ∈ O(n^2)

This is true since

If f(n) ∈ O(n^2), then this implies

0 <= f(n) <= k*(n^2) for all n >= n0

This is true for n^2/2 + n/2, here

0 <= n^2/2 + n/2 <= n^2 for all n >= 1

Thus n0 = 1 and k = 2 in the above formula

b)

The running time of this is O(1). This is so since:

For i = 1, the loop gets executed

For i=2, the condition i%2 == 0 becomes true, and hence it breaks out of loop

Then 1 is returned

Thus the number of operations carried out is independent of n and is a constant of 2 operations.

Thus the complexity is also O(1) which is a constant.

c)

The running time of inside is O(Na). This is so since

The number of operations carried out is a constant multiple of the number of times loop

gets executed

The loop gets executed equal to the length of array c

The length of array c is equal to the length of array a, which is equal to Na

Thus, the running time is O(Na)

l*Na ∈ O(Na) (for some constant l, this constant depends on which branch of

if else gets executed, but is nevertheless constant since it is never greater

than 3 operations.)

This is true since

If f(Na) ∈ O(Na), then this implies

0 <= f(Na) <= k*(Na) for all Na >= Na0

This is true for l*n, here

0 <= l*Na <= 4*Na for all n >= 1

Thus n0 = 1 and k = 4 in the above formula

The running time of inside is O(Na). This is so since

The number of operations carried out is a constant multiple of the number of times loop

gets executed

The loop gets executed equal to the length of array c

The length of array c is equal to the length of array a, which is equal to Na

Thus, the running time is O(Na)

l*Na ∈ O(Na) (for some constant l, this constant depends on which branch of

if else gets executed, but is nevertheless constant since it is never greater

than 3 operations.)

This is true since

If f(Na) ∈ O(Na), then this implies

0 <= f(Na) <= k*(Na) for all Na >= Na0

This is true for l*n, here

0 <= l*Na <= 4*Na for all n >= 1

Thus n0 = 1 and k = 4 in the above formula

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

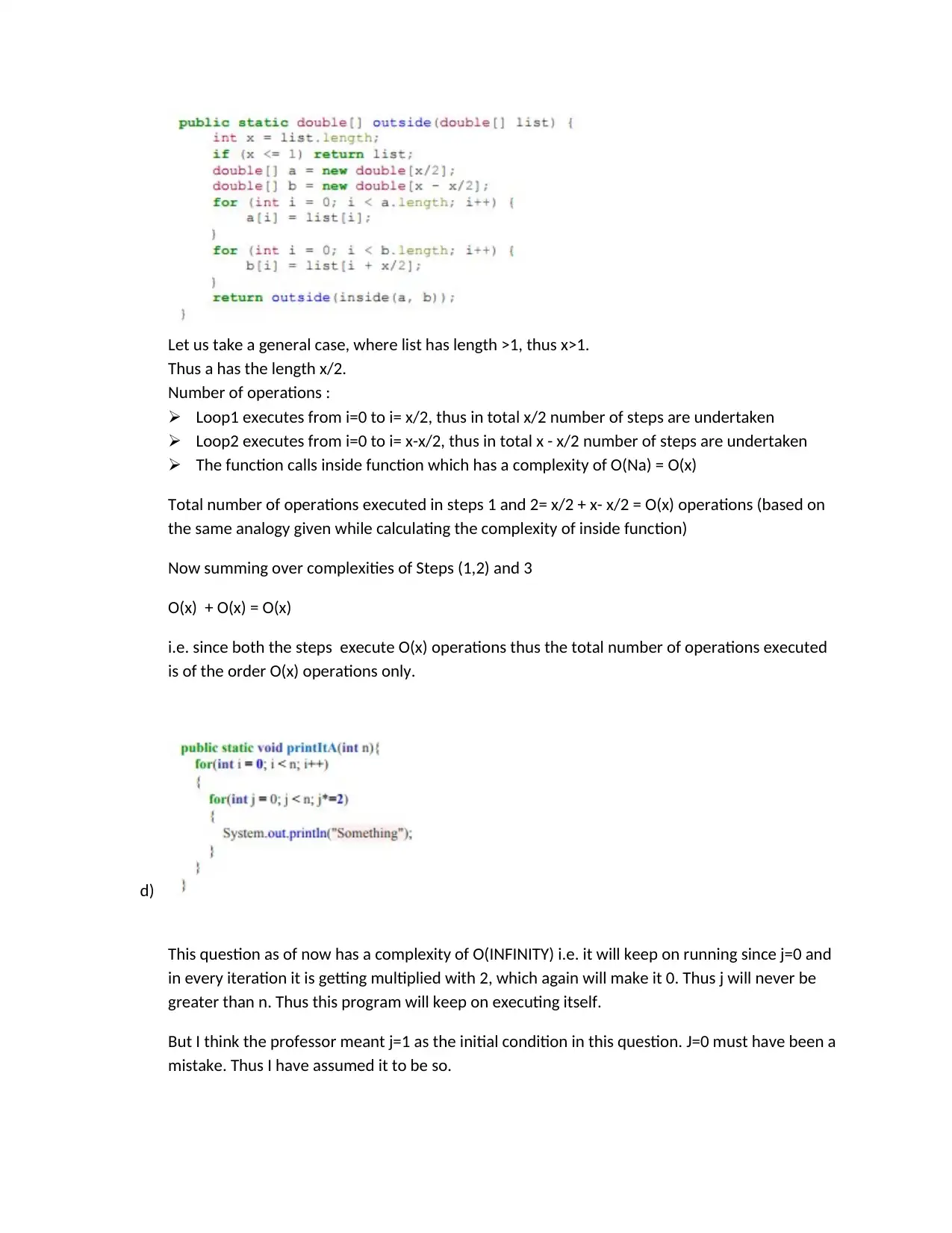

Let us take a general case, where list has length >1, thus x>1.

Thus a has the length x/2.

Number of operations :

Loop1 executes from i=0 to i= x/2, thus in total x/2 number of steps are undertaken

Loop2 executes from i=0 to i= x-x/2, thus in total x - x/2 number of steps are undertaken

The function calls inside function which has a complexity of O(Na) = O(x)

Total number of operations executed in steps 1 and 2= x/2 + x- x/2 = O(x) operations (based on

the same analogy given while calculating the complexity of inside function)

Now summing over complexities of Steps (1,2) and 3

O(x) + O(x) = O(x)

i.e. since both the steps execute O(x) operations thus the total number of operations executed

is of the order O(x) operations only.

d)

This question as of now has a complexity of O(INFINITY) i.e. it will keep on running since j=0 and

in every iteration it is getting multiplied with 2, which again will make it 0. Thus j will never be

greater than n. Thus this program will keep on executing itself.

But I think the professor meant j=1 as the initial condition in this question. J=0 must have been a

mistake. Thus I have assumed it to be so.

Thus a has the length x/2.

Number of operations :

Loop1 executes from i=0 to i= x/2, thus in total x/2 number of steps are undertaken

Loop2 executes from i=0 to i= x-x/2, thus in total x - x/2 number of steps are undertaken

The function calls inside function which has a complexity of O(Na) = O(x)

Total number of operations executed in steps 1 and 2= x/2 + x- x/2 = O(x) operations (based on

the same analogy given while calculating the complexity of inside function)

Now summing over complexities of Steps (1,2) and 3

O(x) + O(x) = O(x)

i.e. since both the steps execute O(x) operations thus the total number of operations executed

is of the order O(x) operations only.

d)

This question as of now has a complexity of O(INFINITY) i.e. it will keep on running since j=0 and

in every iteration it is getting multiplied with 2, which again will make it 0. Thus j will never be

greater than n. Thus this program will keep on executing itself.

But I think the professor meant j=1 as the initial condition in this question. J=0 must have been a

mistake. Thus I have assumed it to be so.

This has order O(nlog2(n)). This is shown in the following justification:

Total number of operations executed in the inner loop is log2(n). This is so since j is getting

multiplied by 2 in each iteration of the loop, thus it will be greater than n in log2(n)

iterations. Now this analysis will be used in the subsequent steps

For i=0, the inner loop runs from j=0 to j=n, thus total operations executed = log2(n)

For i=1, the inner loop runs from j=0 to j=n, thus total operations executed = log2(n)

For i=2, the inner loop runs from j=0 to j=n, thus total operations executed = log2(n)

.

.

.

For i=n-1, the inner loop runs from j=0 to j=n, thus total operations executed = log2(n)

Summing over all the operations,

Total number of operations executed = Sum of all the above steps

= log2(n) + log2(n) + log2(n) + …. + log2(n) (n times)

= n* log2(n)

Thus total number of operations = n log2(n)

n log2(n) ∈ O(n log2(n))

This is true since

If f(n) ∈ O(n log2(n)), then this implies

0 <= f(n) <= k*(n log2(n)) for all n >= n0

This is true for n log2(n), here

0 <= n log2(n) <= n log2(n) for all n >= 1

Thus n0 = 1 and k = 1 in the above formula

e)

This has order O(nlog2(n)). This is shown in the following justification:

Total number of operations executed in the inner loop is log2(n). This is so since j is getting

divided by 2 in each iteration of the loop, thus it will be equal to 0 in log2(n) iterations. Now

this analysis will be used in the subsequent steps

Total number of operations executed in the inner loop is log2(n). This is so since j is getting

multiplied by 2 in each iteration of the loop, thus it will be greater than n in log2(n)

iterations. Now this analysis will be used in the subsequent steps

For i=0, the inner loop runs from j=0 to j=n, thus total operations executed = log2(n)

For i=1, the inner loop runs from j=0 to j=n, thus total operations executed = log2(n)

For i=2, the inner loop runs from j=0 to j=n, thus total operations executed = log2(n)

.

.

.

For i=n-1, the inner loop runs from j=0 to j=n, thus total operations executed = log2(n)

Summing over all the operations,

Total number of operations executed = Sum of all the above steps

= log2(n) + log2(n) + log2(n) + …. + log2(n) (n times)

= n* log2(n)

Thus total number of operations = n log2(n)

n log2(n) ∈ O(n log2(n))

This is true since

If f(n) ∈ O(n log2(n)), then this implies

0 <= f(n) <= k*(n log2(n)) for all n >= n0

This is true for n log2(n), here

0 <= n log2(n) <= n log2(n) for all n >= 1

Thus n0 = 1 and k = 1 in the above formula

e)

This has order O(nlog2(n)). This is shown in the following justification:

Total number of operations executed in the inner loop is log2(n). This is so since j is getting

divided by 2 in each iteration of the loop, thus it will be equal to 0 in log2(n) iterations. Now

this analysis will be used in the subsequent steps

For i=0, the inner loop runs from j=n to j=0, thus total operations executed = log2(n)

For i=1, the inner loop runs from j=n to j=0, thus total operations executed = log2(n)

For i=2, the inner loop runs from j=n to j=0, thus total operations executed = log2(n)

.

.

.

For i=n-1, the inner loop runs from j=n to j=0, thus total operations executed = log2(n)

Summing over all the operations,

Total number of operations executed = Sum of all the above steps

= log2(n) + log2(n) + log2(n) + …. + log2(n) (n times)

= n* log2(n)

Thus total number of operations = n log2(n)

n log2(n) ∈ O(n log2(n))

This is true since

If f(n) ∈ O(n log2(n)), then this implies

0 <= f(n) <= k*(n log2(n)) for all n >= n0

This is true for n log2(n), here

0 <= n log2(n) <= n log2(n) for all n >= 1

Thus n0 = 1 and k = 1 in the above formula

f)

First calculating the number of operations executed before calling the strange_sumA recursively,

Let arr.length = n

newlen = arr.length/2 = n/2

For i=1, the inner loop runs from j=n to j=0, thus total operations executed = log2(n)

For i=2, the inner loop runs from j=n to j=0, thus total operations executed = log2(n)

.

.

.

For i=n-1, the inner loop runs from j=n to j=0, thus total operations executed = log2(n)

Summing over all the operations,

Total number of operations executed = Sum of all the above steps

= log2(n) + log2(n) + log2(n) + …. + log2(n) (n times)

= n* log2(n)

Thus total number of operations = n log2(n)

n log2(n) ∈ O(n log2(n))

This is true since

If f(n) ∈ O(n log2(n)), then this implies

0 <= f(n) <= k*(n log2(n)) for all n >= n0

This is true for n log2(n), here

0 <= n log2(n) <= n log2(n) for all n >= 1

Thus n0 = 1 and k = 1 in the above formula

f)

First calculating the number of operations executed before calling the strange_sumA recursively,

Let arr.length = n

newlen = arr.length/2 = n/2

Secure Best Marks with AI Grader

Need help grading? Try our AI Grader for instant feedback on your assignments.

Thus the two loops execute O(n) operations in total

Now , let T(n) be the complexity of strange_A

Thus, writing the recurrence relation, we get

T(n) = 2T(n/2) + n

I guess the solution as T(n) = O(nLogn). Now using

induction

to prove this guess.

T(n) <= cn/2Log(n/2) + n

= cnLogn - cnLog2 + n

= cnLogn - cn + n

<= cnLogn

g)

Again this question has a complexity of O(INFINITY). This is so since let left = 0, right =n, then

Strange_sumB(0,n) calls Strange_sumB(0,n/2) and Strange_sumB(n/2,n).

Lets take the case of Strange_sumB(n/2,n)

Strange_sumB(n/2,n) calls Strange_sumB(n/2, n/2) and Strange_sumB(n/2, n).

Thus it calls itself again and again until it hits stack overflow error.

But assuming this was not the intention:

Assuming that the length of arr is a power of 2

Let T(n) be the running time of Strange_sumB for a length of array as n

Now , let T(n) be the complexity of strange_A

Thus, writing the recurrence relation, we get

T(n) = 2T(n/2) + n

I guess the solution as T(n) = O(nLogn). Now using

induction

to prove this guess.

T(n) <= cn/2Log(n/2) + n

= cnLogn - cnLog2 + n

= cnLogn - cn + n

<= cnLogn

g)

Again this question has a complexity of O(INFINITY). This is so since let left = 0, right =n, then

Strange_sumB(0,n) calls Strange_sumB(0,n/2) and Strange_sumB(n/2,n).

Lets take the case of Strange_sumB(n/2,n)

Strange_sumB(n/2,n) calls Strange_sumB(n/2, n/2) and Strange_sumB(n/2, n).

Thus it calls itself again and again until it hits stack overflow error.

But assuming this was not the intention:

Assuming that the length of arr is a power of 2

Let T(n) be the running time of Strange_sumB for a length of array as n

Thus recurrence relation

T(n) = 2*T(n/2) + 1

1 operation has been incurred for checking the if condition.

I guess the solution as T(n) = O(n).

Now using induction to prove the guess.

T(n) = 2T(n/2) + 1

<= cn/2 + 1

<= cn + 1

<= c’n for some c’

Thus T(n) = O(n)

h) They have different asymptotic running times since the number of operations performed before

calling themselves recursively are different. Had the number of operations been the same then

their complexities would have been the same.

/*

Author : mr.yamankumar@gmail.com

For any further assistance and assignment contact : mr.yamankumar@gmail.com

*/

T(n) = 2*T(n/2) + 1

1 operation has been incurred for checking the if condition.

I guess the solution as T(n) = O(n).

Now using induction to prove the guess.

T(n) = 2T(n/2) + 1

<= cn/2 + 1

<= cn + 1

<= c’n for some c’

Thus T(n) = O(n)

h) They have different asymptotic running times since the number of operations performed before

calling themselves recursively are different. Had the number of operations been the same then

their complexities would have been the same.

/*

Author : mr.yamankumar@gmail.com

For any further assistance and assignment contact : mr.yamankumar@gmail.com

*/

1 out of 9

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

© 2024 | Zucol Services PVT LTD | All rights reserved.