Data Science Practice: Decision Tree, Gradient Boost Tree, and Linear Regression

Added on 2023/06/04


AI Summary

This project explores decision tree, gradient boost tree, and linear regression in data science. It covers datasets, regression models, and python implementation. The content includes decision tree categorical features, decision tree log, decision tree max bins, decision tree max depth, gradient boost tree iteration, gradient boost tree maximum bins, gradient boost tree maximum depth, linear regression cross validation, linear regression intercept, linear regression iteration, linear regression step size, linear regression L1 regularization, linear regression L2 regularization, and linear regression log.


Contents

Introduction

Decision Tree

Decision Tree categorical Features

Decision Tree Log

Decision Tree Max Bins

Decision Tree Max Depth

Gradient Boost Tree

Gradient Boost Tree Iteration

Gradient Boost Tree Maximum Bins

Gradient Boost Tree Maximum Depth

Linear Regression

Linear Regression Cross Validation

Linear Regression Intercept

Linear Regression Iteration

Linear Regression Step Size

Linear Regression L1 Regularization

Linear Regression L2 Regularization

Linear Regression Log

Conclusion

References


Introduction

This project develops decision tree, gradient boosted tree, and linear regression models in Python. We build the regression models from the given datasets, each of which is made up of rows and columns. A regression model predicts a real-valued target and is a form of supervised learning: the model is trained on the data and then used to make predictions. It involves two kinds of variable, independent and dependent (Ades et al., 2013), and the model is defined by the relationship between the dependent variable and one or more independent variables. There are many types of regression model. We consider only linear regression, decision trees, and gradient boosted trees, and implement them in Python.

Dataset

We use three datasets. The first two, the day and hour datasets, were given, and the third was taken from Kaggle. The day and hour datasets contain the columns instant, day, workingday, hour, weathersit, temp, atemp and windspeed. We fit a decision tree, a linear regression, and a gradient boost tree to each dataset. Every dataset is imported from its file and processed with Python code (Data science, 2015).
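As a rough illustration of the import step, the sketch below reads CSV rows with Python's standard `csv` module. The rows are made up for the example and only a subset of the columns listed above is shown; the helper name `load_rows` is hypothetical, and a real run would pass an opened dataset file instead of the in-memory text.

```python
import csv
import io

# Made-up sample rows for illustration only; a real run would open the
# dataset file instead, e.g. open("day.csv", newline="").
SAMPLE_CSV = """instant,workingday,weathersit,temp,atemp,windspeed
1,0,2,0.34,0.36,0.16
2,0,2,0.36,0.35,0.25
3,1,1,0.20,0.19,0.25
"""


def load_rows(handle):
    """Read CSV rows into dicts, converting every field to float."""
    reader = csv.DictReader(handle)
    return [{name: float(value) for name, value in row.items()} for row in reader]


rows = load_rows(io.StringIO(SAMPLE_CSV))
print(len(rows), "rows with columns", list(rows[0]))
```

Converting every field to a number up front keeps the later modelling code free of string handling; columns that are really categories can be treated as such afterwards.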

Decision Tree

A decision tree represents a decision-making process visually. It takes the given dataset and builds a tree for that data. A tree normally has a root, leaves, and edges (Goetz, 2011). Decision trees are a machine learning technique that covers both regression and classification. We implement the decision tree categorical features, decision tree max bins, decision tree max depth, and decision tree log.

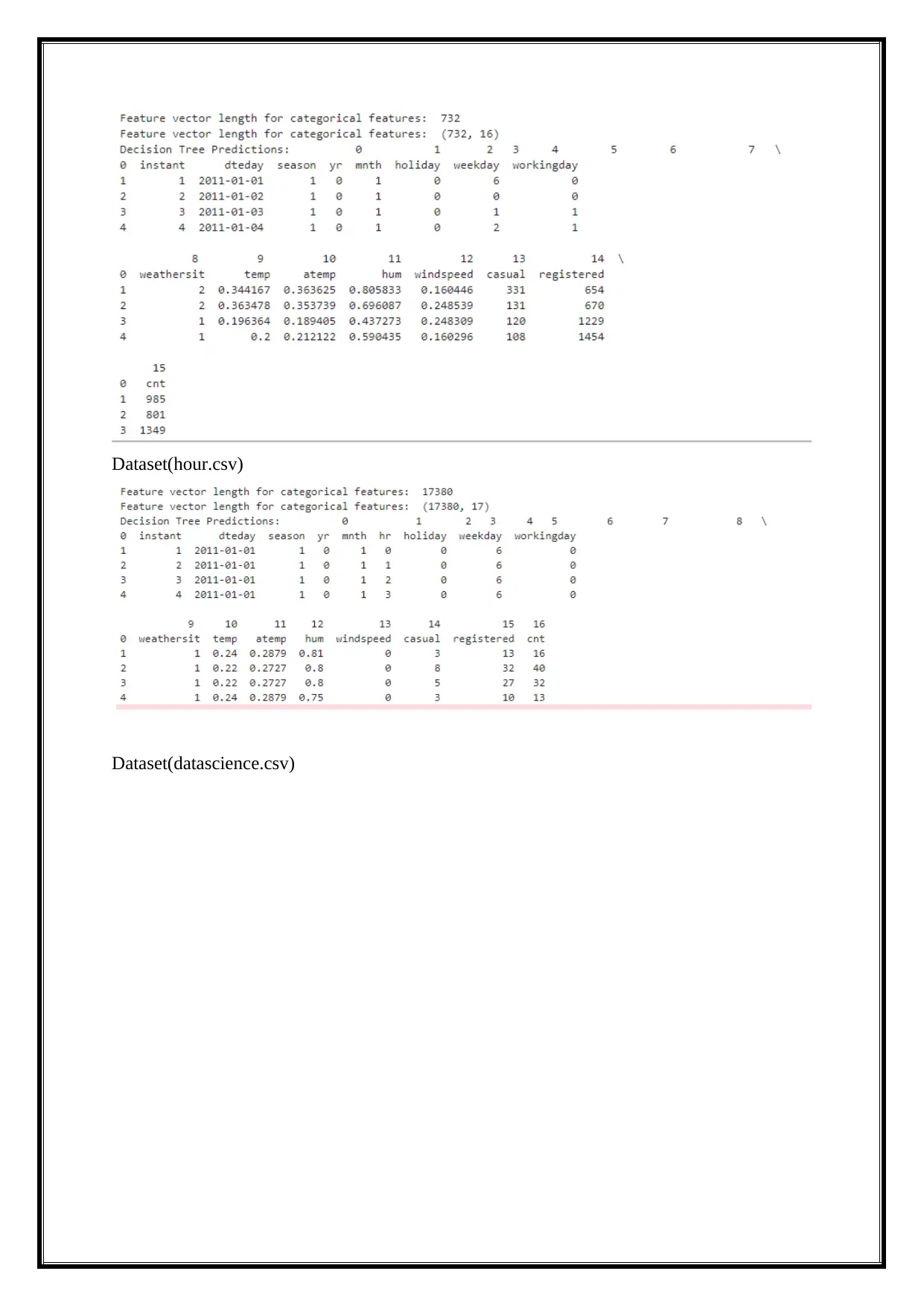

Decision Tree categorical Features

This step measures the decision tree's categorical and numerical features for the given datasets.

Dataset(day.csv)


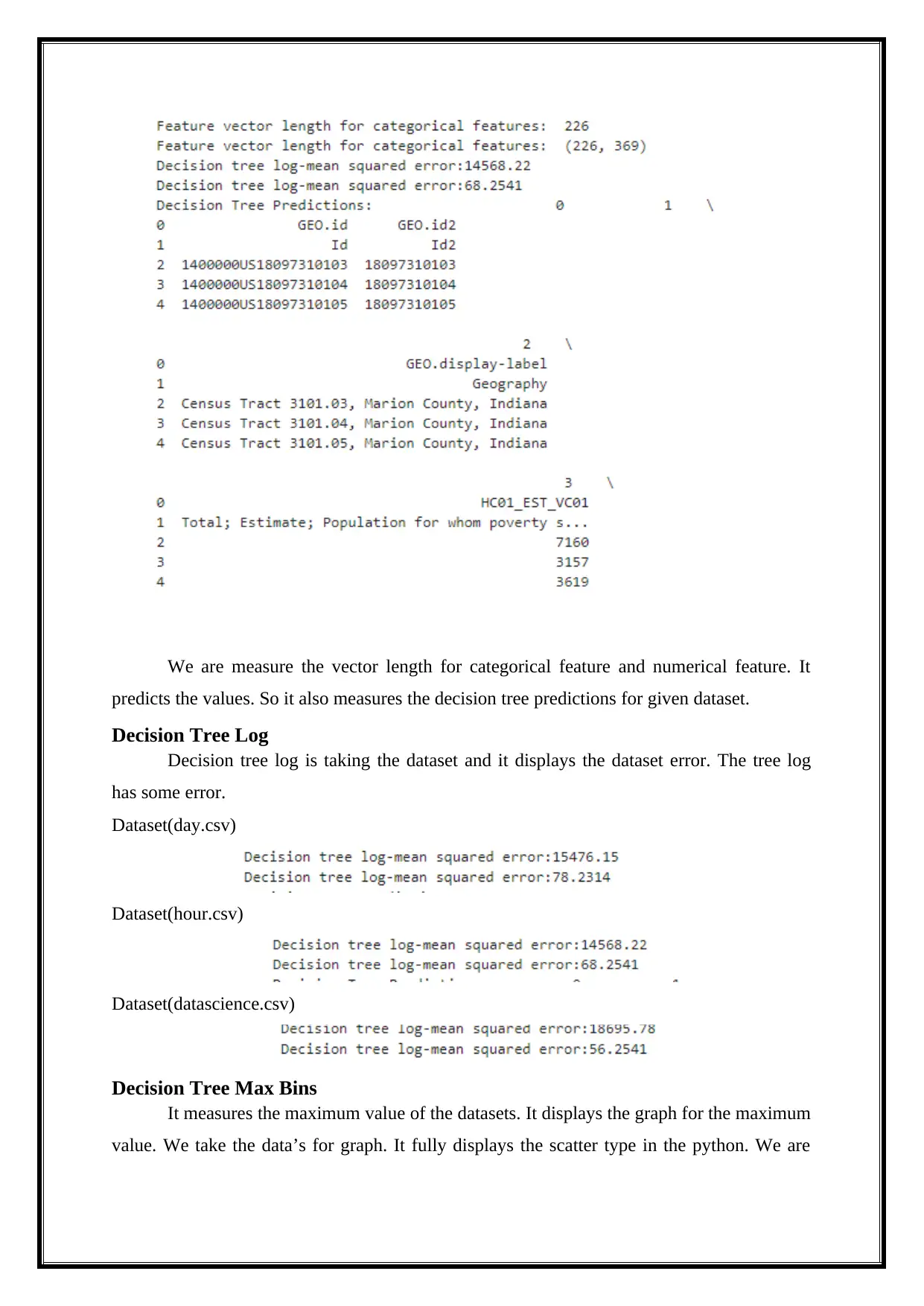


Dataset(hour.csv)

Dataset(datascience.csv)


We measure the length of the feature vector for the categorical features and for the numerical features. The decision tree then predicts values, so we also record the tree's predictions for each dataset.
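One common way to arrive at such vector lengths is one-hot encoding, where each distinct category gets its own slot in the vector. The sketch below assumes that approach, which the report does not confirm; the rows are made up and the helper names `build_category_index` and `encode_row` are hypothetical.

```python
def build_category_index(values):
    """Map each distinct category to a position in the one-hot vector."""
    return {category: i for i, category in enumerate(sorted(set(values)))}


def encode_row(categorical, numerical, indexes):
    """Concatenate one-hot encoded categorical values with numerical values."""
    vector = []
    for column, value in categorical.items():
        one_hot = [0.0] * len(indexes[column])
        one_hot[indexes[column][value]] = 1.0
        vector.extend(one_hot)
    vector.extend(numerical)
    return vector


# Made-up rows: (categorical columns, numerical columns).
rows = [
    ({"weathersit": 1, "workingday": 0}, [0.34, 0.16]),
    ({"weathersit": 2, "workingday": 1}, [0.20, 0.25]),
    ({"weathersit": 3, "workingday": 1}, [0.23, 0.19]),
]
indexes = {
    column: build_category_index(cat[column] for cat, _ in rows)
    for column in rows[0][0]
}
categorical_length = sum(len(index) for index in indexes.values())
numerical_length = len(rows[0][1])
vectors = [encode_row(cat, num, indexes) for cat, num in rows]
print("categorical:", categorical_length, "numerical:", numerical_length)
print("total vector length:", len(vectors[0]))
```

The categorical length is the sum of the number of distinct values per categorical column, so it grows with the data, while the numerical length is simply the number of numerical columns.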

Decision Tree Log

The decision tree log takes each dataset and displays its error. The log shows some error for every dataset.

Dataset(day.csv)

Dataset(hour.csv)

Dataset(datascience.csv)

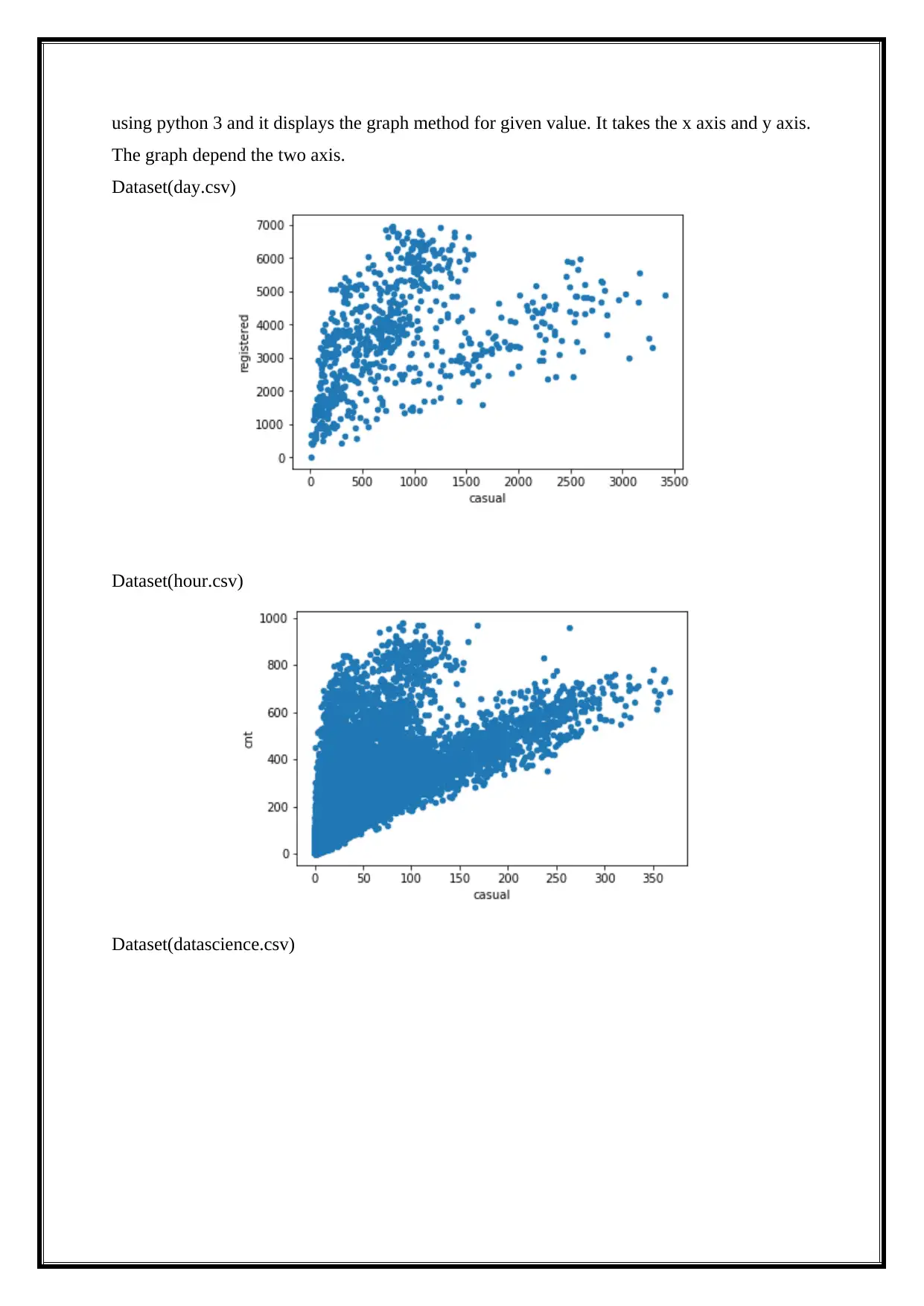

Decision Tree Max Bins

This experiment measures the effect of the maximum bins value on each dataset and displays a graph of the results. The data is drawn as a scatter plot using Python 3, with the values plotted along the x-axis and the y-axis.

Dataset(day.csv)

Dataset(hour.csv)

Dataset(datascience.csv)
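The report does not say how bins are used inside the tree. In tree learners that discretize continuous features before searching for splits (the `maxBins` setting in Spark MLlib is one example), the bin limit caps how many split thresholds are considered per feature. The sketch below is an assumption along those lines, with made-up feature values and a hypothetical helper `candidate_thresholds` that uses equal-frequency bins.

```python
def candidate_thresholds(values, max_bins):
    """Return at most max_bins - 1 split thresholds from equal-frequency bins."""
    distinct = sorted(set(values))
    if len(distinct) <= max_bins:
        # Few distinct values: every midpoint between neighbours is a candidate.
        return [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]
    ordered = sorted(values)
    thresholds = []
    for k in range(1, max_bins):
        cut = ordered[k * len(ordered) // max_bins]
        # Skip repeated cut points so thresholds stay strictly increasing.
        if not thresholds or cut > thresholds[-1]:
            thresholds.append(cut)
    return thresholds


temps = [0.05 * i for i in range(1, 21)]  # made-up feature values
for max_bins in (2, 4, 8):
    print(max_bins, "bins ->", len(candidate_thresholds(temps, max_bins)), "thresholds")
```

A larger bin limit lets the tree consider finer splits at the cost of more work per node, which is why the setting is usually tuned by plotting error against it.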


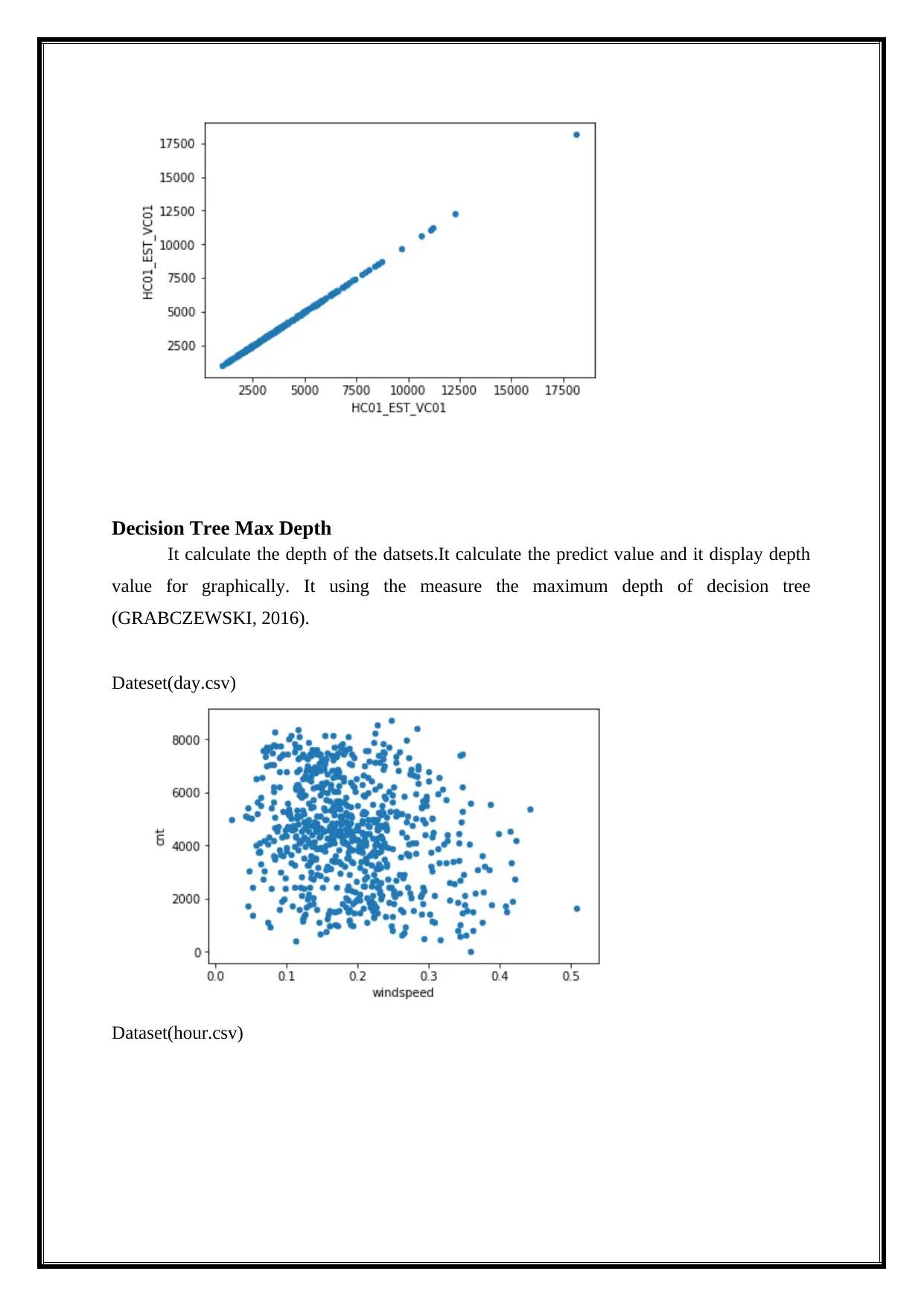

Decision Tree Max Depth

This experiment examines the depth of the decision tree for each dataset. It calculates the predicted values and displays them graphically against the depth, up to the maximum depth of the decision tree (Grabczewski, 2016).

Dataset(day.csv)

Dataset(hour.csv)


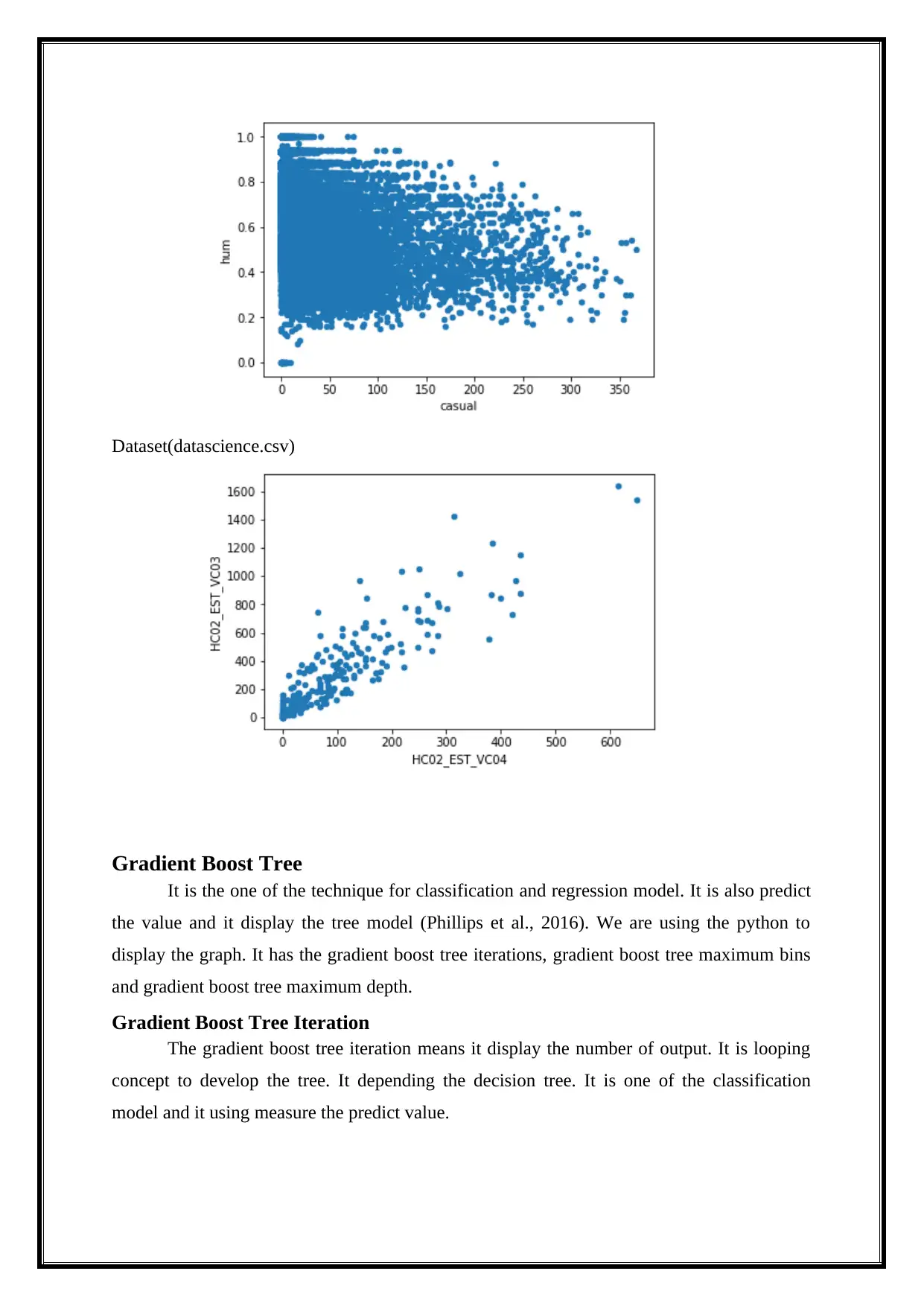

Dataset(datascience.csv)
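To make the role of the maximum depth concrete, here is a minimal sketch of a depth-limited regression tree on a single feature. It is not the implementation used in the project: the data is made up, the function names are hypothetical, and splits are chosen greedily by squared error.

```python
def mean(values):
    return sum(values) / len(values)


def sse(values):
    """Sum of squared errors around the mean."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def build_tree(xs, ys, max_depth):
    """Fit a one-feature regression tree with at most max_depth levels of splits."""
    if max_depth == 0 or len(set(xs)) < 2:
        return mean(ys)  # leaf: predict the mean target
    best = None
    distinct = sorted(set(xs))
    for a, b in zip(distinct, distinct[1:]):
        t = (a + b) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        cost = sse(left) + sse(right)
        if best is None or cost < best[0]:
            best = (cost, t)
    t = best[1]
    left = [(x, y) for x, y in zip(xs, ys) if x <= t]
    right = [(x, y) for x, y in zip(xs, ys) if x > t]
    return (
        t,
        build_tree([x for x, _ in left], [y for _, y in left], max_depth - 1),
        build_tree([x for x, _ in right], [y for _, y in right], max_depth - 1),
    )


def predict(tree, x):
    """Walk from the root to a leaf and return the leaf's value."""
    while isinstance(tree, tuple):
        t, left, right = tree
        tree = left if x <= t else right
    return tree


def training_mse(xs, ys, max_depth):
    tree = build_tree(xs, ys, max_depth)
    return mean([(predict(tree, x) - y) ** 2 for x, y in zip(xs, ys)])


xs = list(range(16))          # made-up feature values
ys = [x * x for x in xs]      # made-up targets
errors = [training_mse(xs, ys, depth) for depth in range(5)]
for depth, error in enumerate(errors):
    print("max depth", depth, "training MSE", round(error, 2))
```

Training error can only fall as the depth grows, so a plot like the ones in this section is most informative when the error is measured on data the tree was not trained on.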

Gradient Boost Tree

Gradient boosting is a technique for classification and regression. It also predicts values and displays the tree model (Phillips et al., 2016). We use Python to display the graphs. We examine the gradient boost tree iterations, gradient boost tree maximum bins, and gradient boost tree maximum depth.

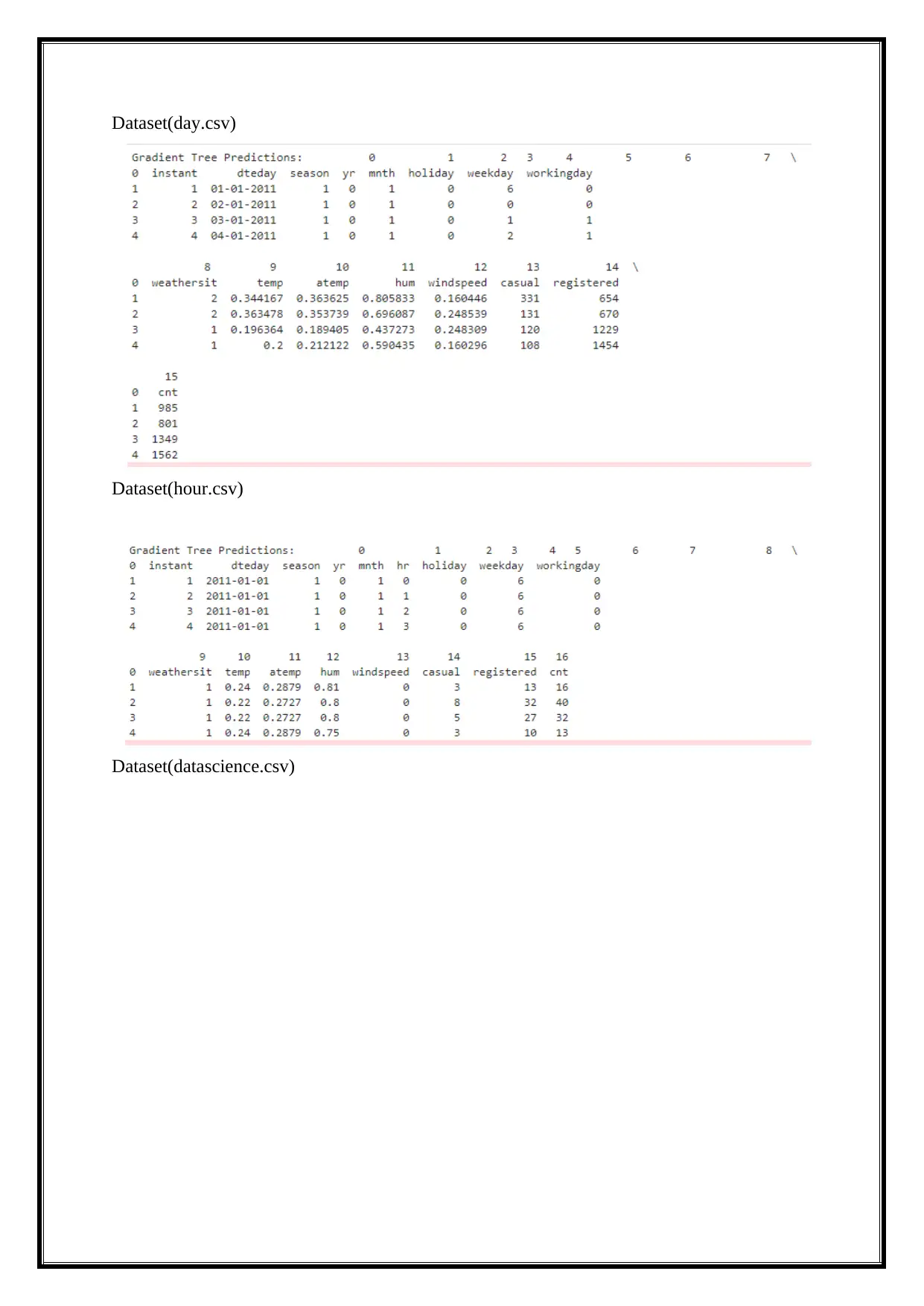

Gradient Boost Tree Iteration

The gradient boost tree iteration setting is the number of rounds the model runs. The model is built in a loop, and each round depends on a decision tree. Gradient boosting can serve as a classification model, and here we use it to measure the predicted values.


Dataset(day.csv)

Dataset(hour.csv)

Dataset(datascience.csv)
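The looping idea can be sketched as follows, assuming squared-error boosting in which each round fits a one-split tree (a stump) to the current residuals. This is an illustration rather than the project's code: the data is made up, and the `learning_rate` shrinkage parameter and all function names are assumptions.

```python
def fit_stump(xs, residuals):
    """Best single-split regression stump: (threshold, left_mean, right_mean)."""
    best = None
    distinct = sorted(set(xs))
    for a, b in zip(distinct, distinct[1:]):
        t = (a + b) / 2
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        cost = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or cost < best[0]:
            best = (cost, t, lm, rm)
    return best[1:]


def boost(xs, ys, iterations, learning_rate=0.5):
    """Gradient boosting for squared error: each round fits the residuals."""
    base = sum(ys) / len(ys)
    stumps = []
    predictions = [base] * len(xs)
    for _ in range(iterations):
        residuals = [y - p for y, p in zip(ys, predictions)]
        t, left_mean, right_mean = fit_stump(xs, residuals)
        # Store the shrunken leaf values so prediction is a plain sum.
        stump = (t, learning_rate * left_mean, learning_rate * right_mean)
        stumps.append(stump)
        predictions = [
            p + (stump[1] if x <= t else stump[2]) for x, p in zip(xs, predictions)
        ]
    return base, stumps


def predict(model, x):
    base, stumps = model
    return base + sum(left if x <= t else right for t, left, right in stumps)


def training_mse(xs, ys, iterations):
    model = boost(xs, ys, iterations)
    return sum((predict(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = list(range(12))          # made-up feature values
ys = [x * x for x in xs]      # made-up targets
errors = [training_mse(xs, ys, n) for n in (1, 5, 20)]
for n, error in zip((1, 5, 20), errors):
    print(n, "iterations -> training MSE", round(error, 2))
```

Each extra iteration adds one more tree, so the training error keeps shrinking; the useful number of iterations is the one where error on held-out data stops improving.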


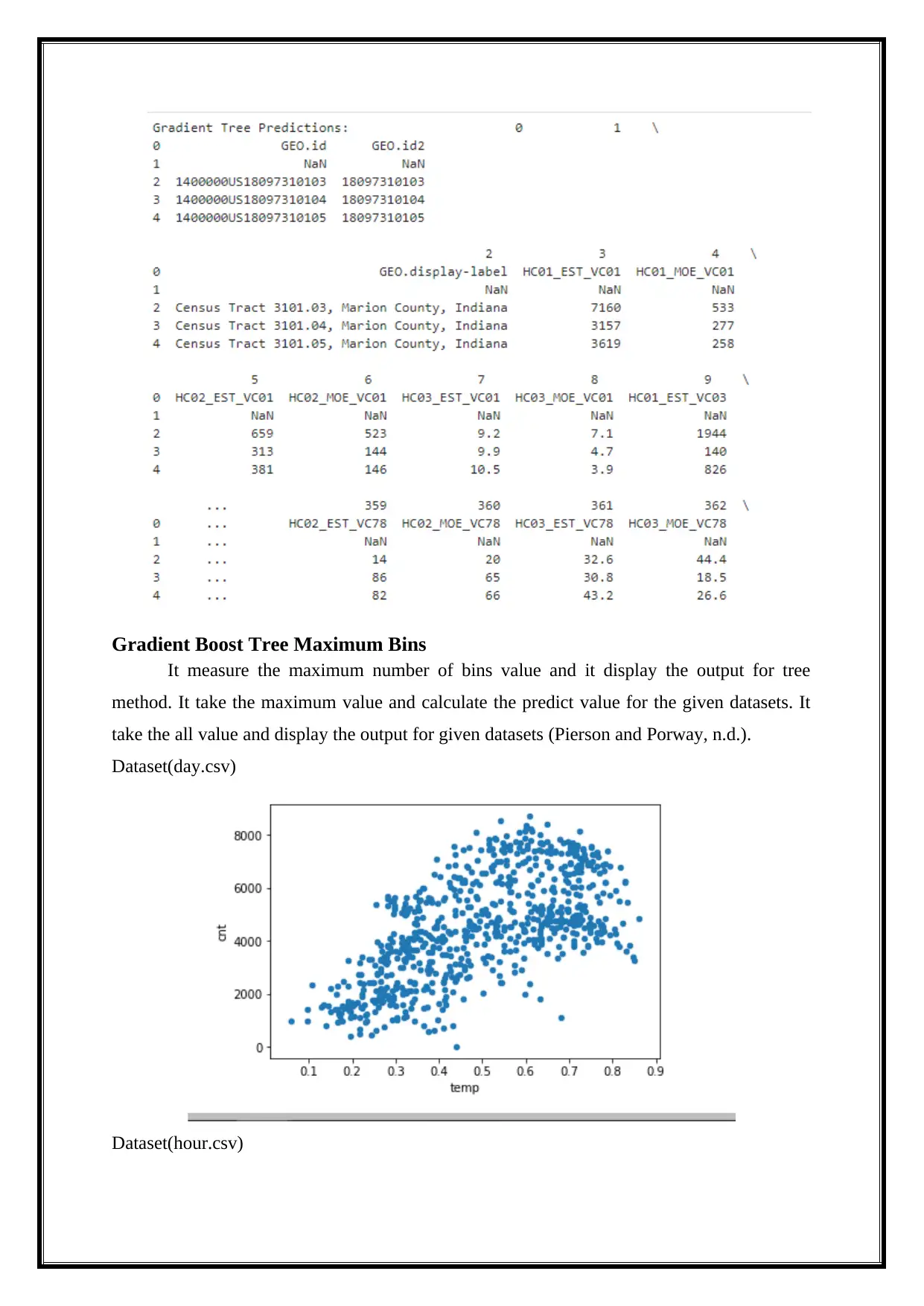

Gradient Boost Tree Maximum Bins

This experiment measures the effect of the maximum number of bins and displays the output for the tree method. For each maximum value, it calculates the predicted values for the given datasets, and it then displays the output across all values (Pierson and Porway, n.d.).

Dataset(day.csv)

Dataset(hour.csv)


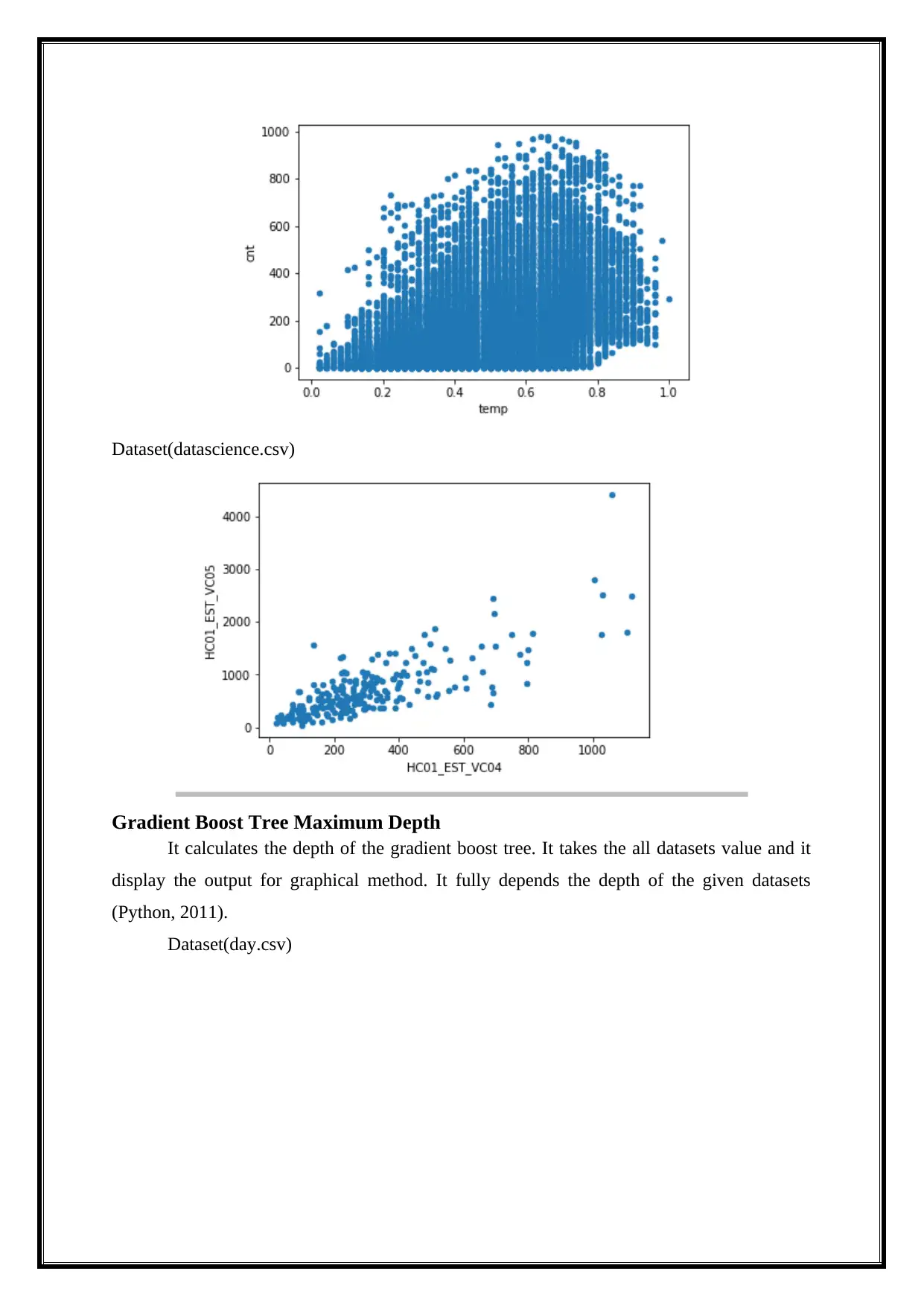

Dataset(datascience.csv)

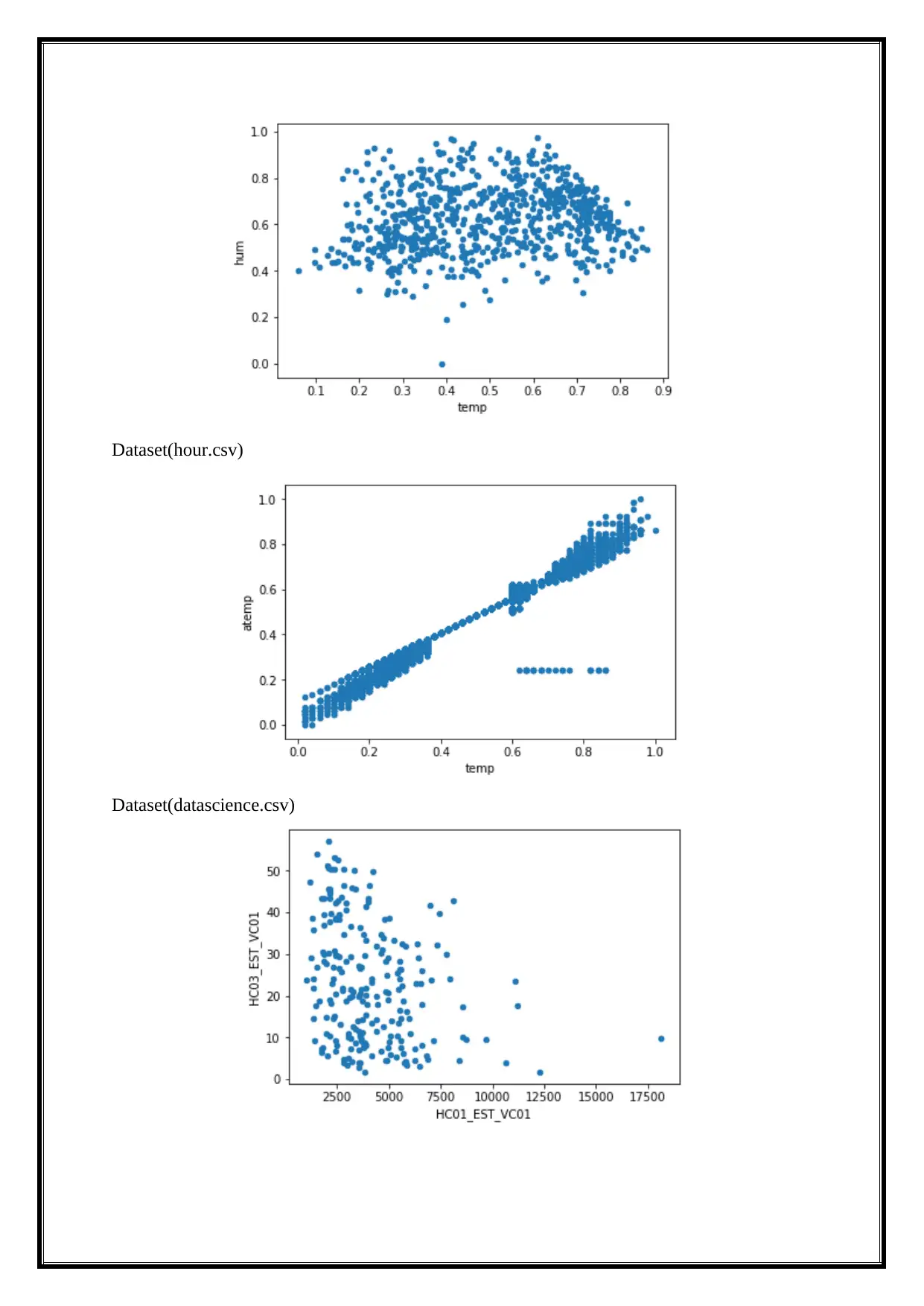

Gradient Boost Tree Maximum Depth

This experiment examines the depth of the gradient boost tree. It takes the values from all datasets and displays the output graphically. The result depends on the depth chosen for the given datasets (Python, 2011).

Dataset(day.csv)


Dataset(hour.csv)

Dataset(datascience.csv)


Linear Regression

Linear regression is one type of regression model, and we display its output graphically. It is used to predict the value of a variable. We import the relevant file for the implementation and display all the records of the given datasets. We implement linear regression cross validation and the linear regression log. The cross validation covers the linear regression iteration, intercept, step size, L1 regularization and L2 regularization (Somervill, 2010).

Linear Regression Cross Validation

Linear regression models the relationship between the dependent variable and the independent variables. We evaluate it using cross validation, which covers the intercept, the iteration, and the step size.
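The report does not state which cross validation scheme was used. A minimal sketch of one common scheme, k-fold cross validation around a one-feature least-squares fit, might look like this; the data is made up and the names `fit_line` and `k_fold_mse` are hypothetical.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for one feature."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return slope, my - slope * mx


def k_fold_mse(xs, ys, k):
    """Average held-out mean squared error over k folds."""
    fold_errors = []
    for fold in range(k):
        # Every k-th point (offset by the fold number) is held out for testing.
        train = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i % k != fold]
        test = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i % k == fold]
        slope, intercept = fit_line([x for x, _ in train], [y for _, y in train])
        fold_errors.append(
            sum((slope * x + intercept - y) ** 2 for x, y in test) / len(test)
        )
    return sum(fold_errors) / k


xs = [float(i) for i in range(10)]
ys = [2.0 * x + 1.0 for x in xs]  # made-up, exactly linear data
score = k_fold_mse(xs, ys, k=5)
print("5-fold MSE:", score)
```

Because every point is held out exactly once, the averaged error reflects how the model behaves on data it was not fitted to, which is what makes it suitable for comparing settings such as the intercept, iteration count, and step size.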

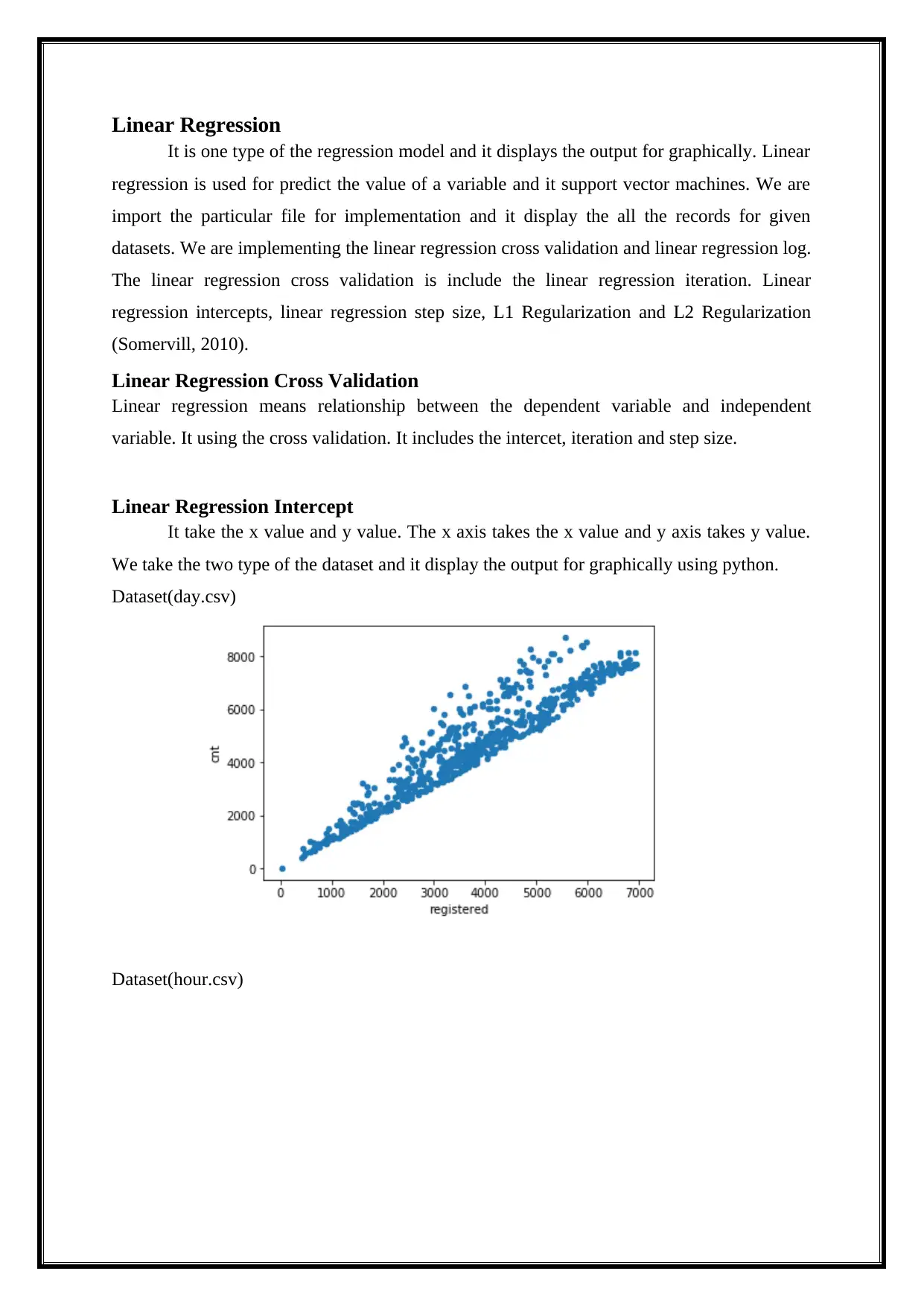

Linear Regression Intercept

This experiment takes the x values and the y values, with the x values on the x-axis and the y values on the y-axis. We run it on the datasets and display the output graphically using Python.

Dataset(day.csv)

Dataset(hour.csv)


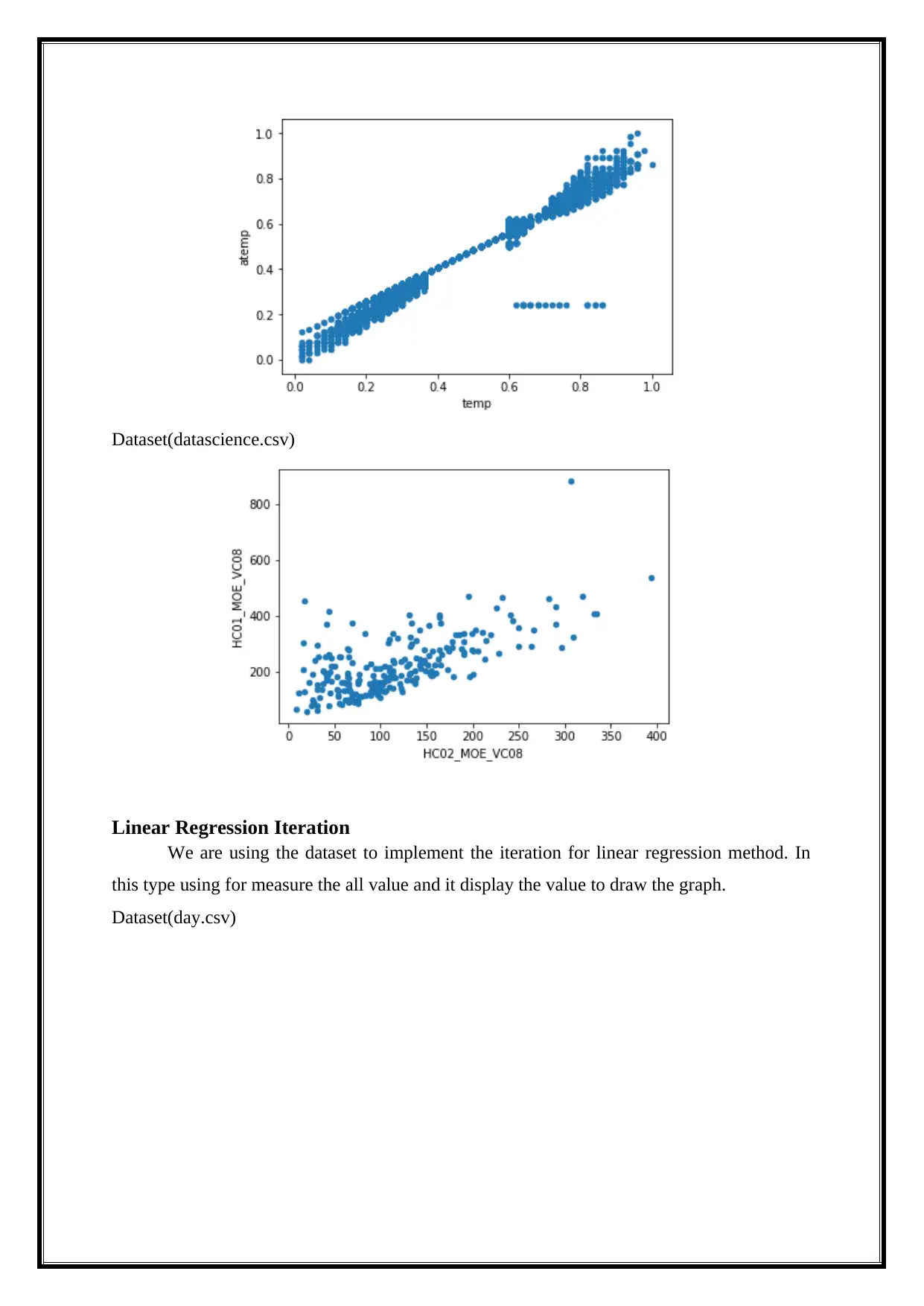

Dataset(datascience.csv)
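As a small worked example of what the intercept setting changes, the sketch below fits the same made-up points with and without an intercept using the closed-form least-squares solution. The function names and the data are assumptions for illustration only.

```python
def fit(xs, ys, use_intercept):
    """Least-squares line; the intercept is forced to 0 when use_intercept is False."""
    if not use_intercept:
        slope = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
        return slope, 0.0
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (
        sum((x - mx) * (y - my) for x, y in zip(xs, ys))
        / sum((x - mx) ** 2 for x in xs)
    )
    return slope, my - slope * mx


def mse(xs, ys, slope, intercept):
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [1.0, 2.0, 3.0, 4.0]
ys = [5.0, 7.0, 9.0, 11.0]  # made-up data following y = 2x + 3

with_intercept = fit(xs, ys, use_intercept=True)
without_intercept = fit(xs, ys, use_intercept=False)
print("with intercept:", with_intercept, mse(xs, ys, *with_intercept))
print("without intercept:", without_intercept, mse(xs, ys, *without_intercept))
```

On this data the fit with an intercept recovers the line exactly, while the fit through the origin has to tilt the slope to compensate and is left with a non-zero error.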

Linear Regression Iteration

We use each dataset to implement the iteration experiment for the linear regression method. It measures all the values and displays them as a graph.

Dataset(day.csv)

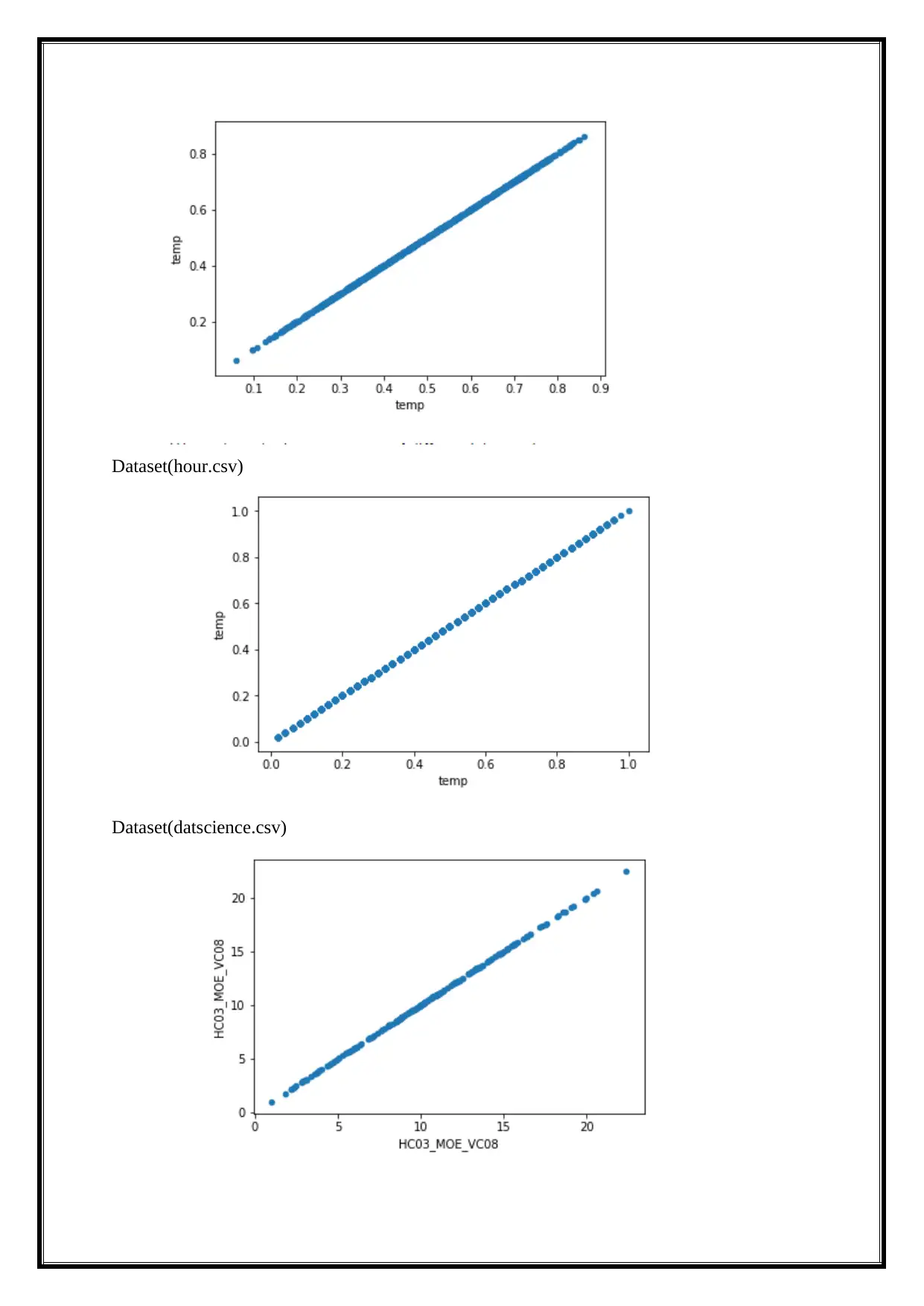


Dataset(hour.csv)

Dataset(datascience.csv)

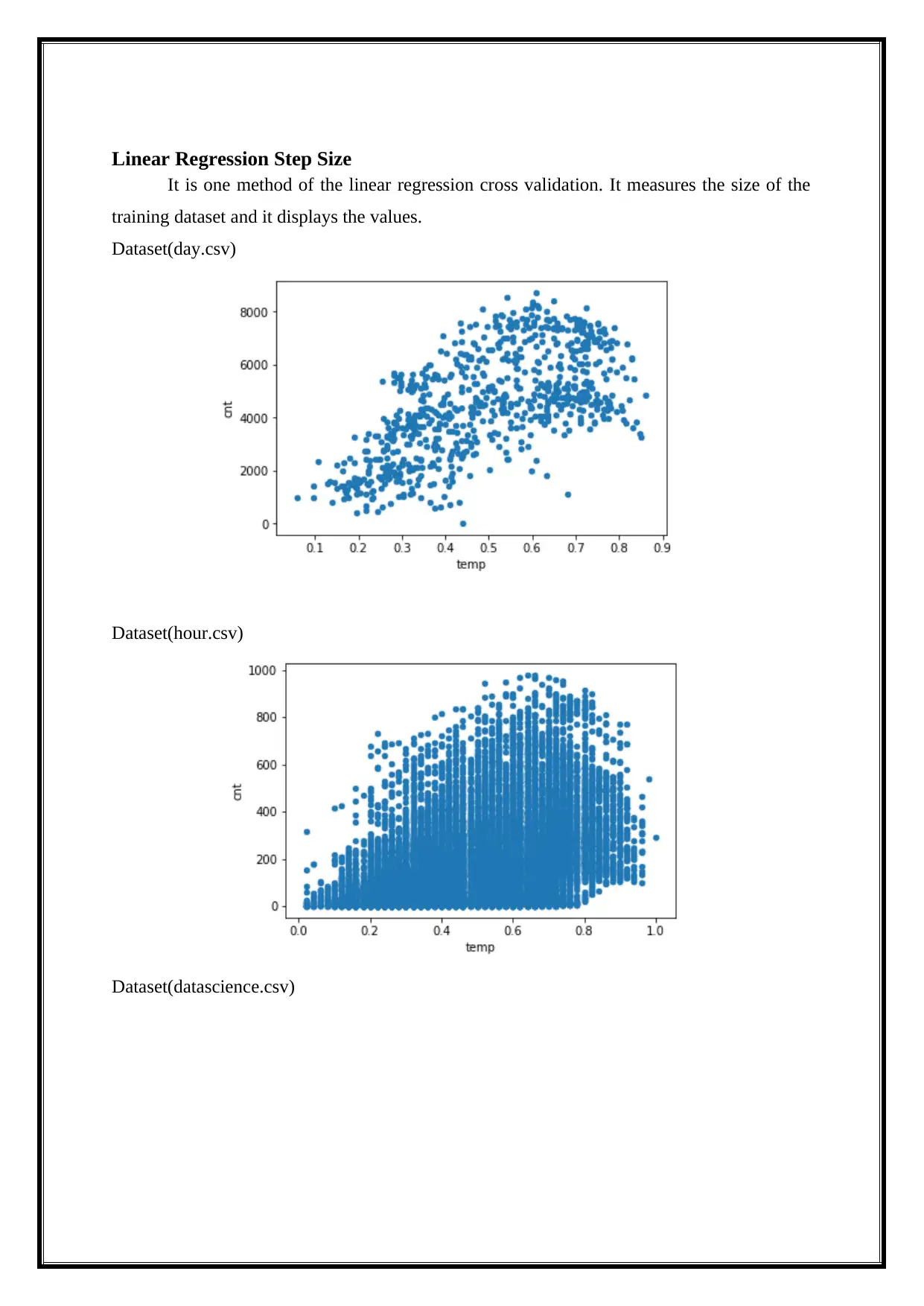

Linear Regression Step Size

The step size is another setting examined in the linear regression cross validation. We measure its effect on the training dataset and display the resulting values.

Dataset(day.csv)

Dataset(hour.csv)

Dataset(datascience.csv)


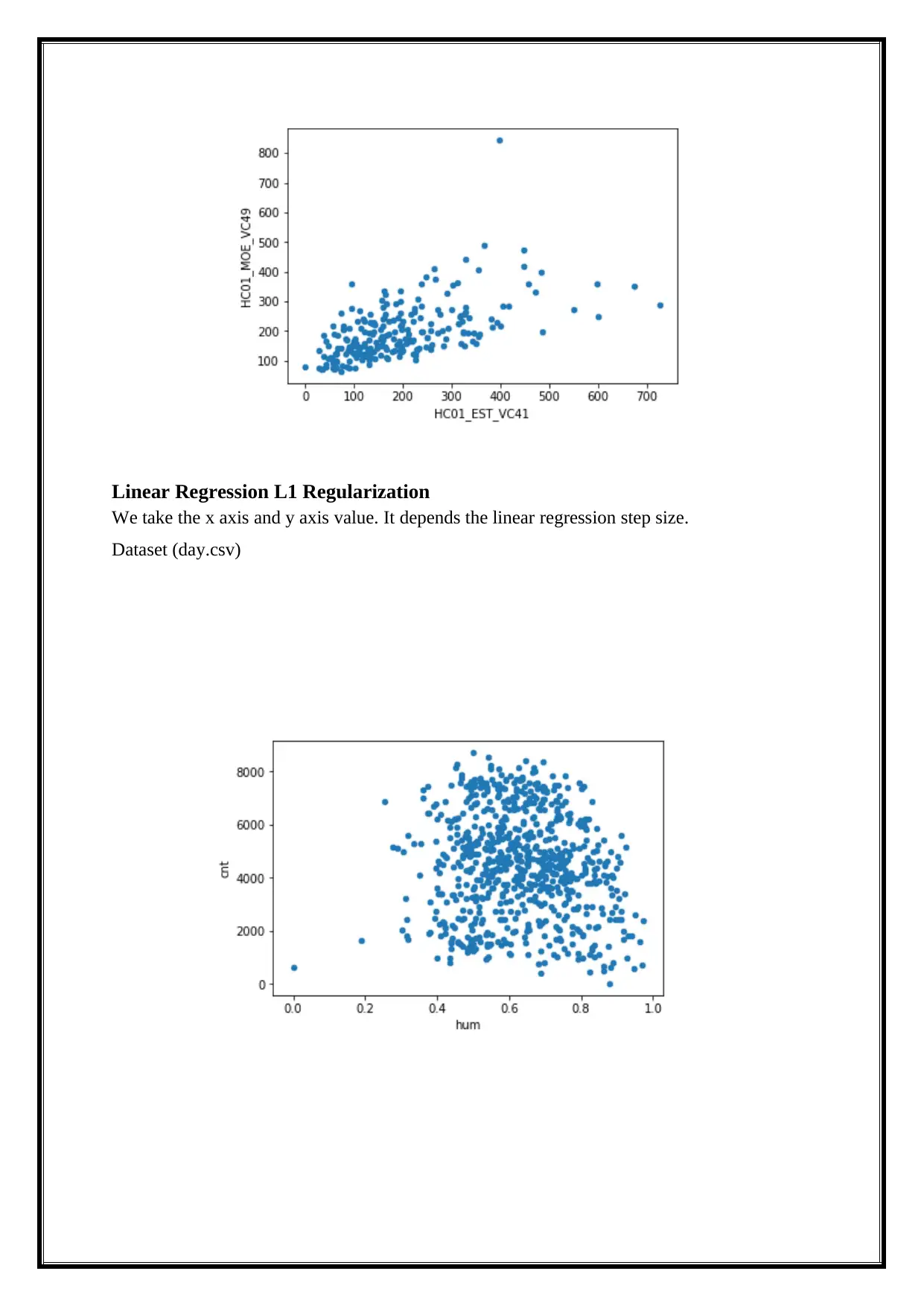

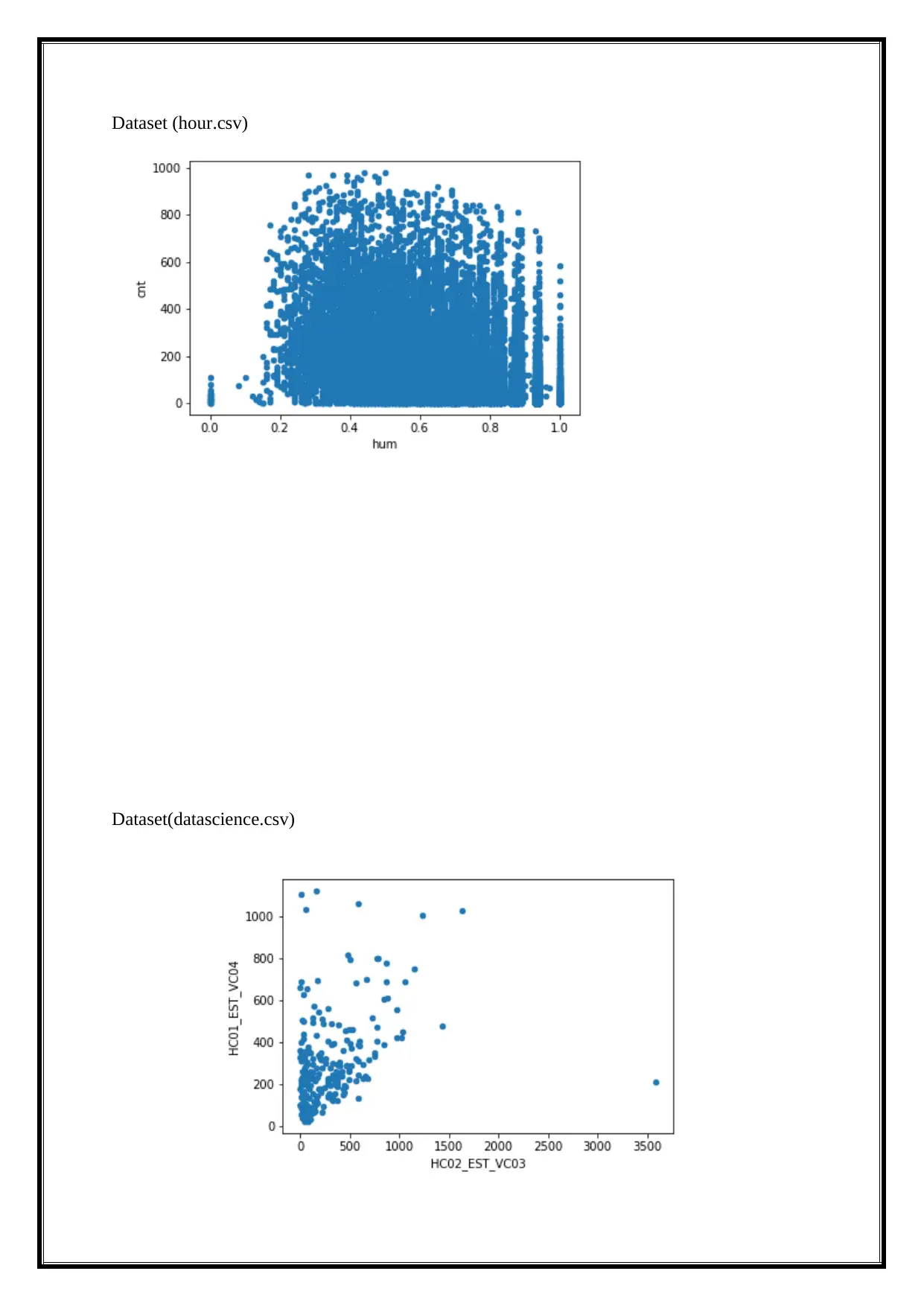
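Iteration and step size are the two settings of an iterative solver such as gradient descent, where the step size scales each update and the iteration count says how many updates are made. The sketch below assumes batch gradient descent on mean squared error, which the report does not confirm; the data and function names are made up.

```python
def gradient_descent(xs, ys, step_size, iterations):
    """Batch gradient descent on mean squared error for y = w * x + b."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(iterations):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= step_size * grad_w
        b -= step_size * grad_b
    return w, b


def mse(xs, ys, w, b):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [1.0 + 2.0 * x for x in xs]  # made-up data following y = 2x + 1

results = {}
for step_size in (0.01, 0.1, 0.5, 1.0):
    for iterations in (10, 100):
        w, b = gradient_descent(xs, ys, step_size, iterations)
        results[(step_size, iterations)] = mse(xs, ys, w, b)
        print("step", step_size, "iterations", iterations,
              "MSE", results[(step_size, iterations)])
```

On this data a small step size converges slowly, a moderate one reaches a low error within the same number of iterations, and the largest one overshoots so the error grows with every iteration. Plotting error against each setting, as in this section, makes that trade-off visible.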

Linear Regression L1 Regularization

We plot the x-axis and y-axis values for L1 regularization. The result depends on the linear regression step size.

Dataset (day.csv)


Dataset (hour.csv)

Dataset(datascience.csv)


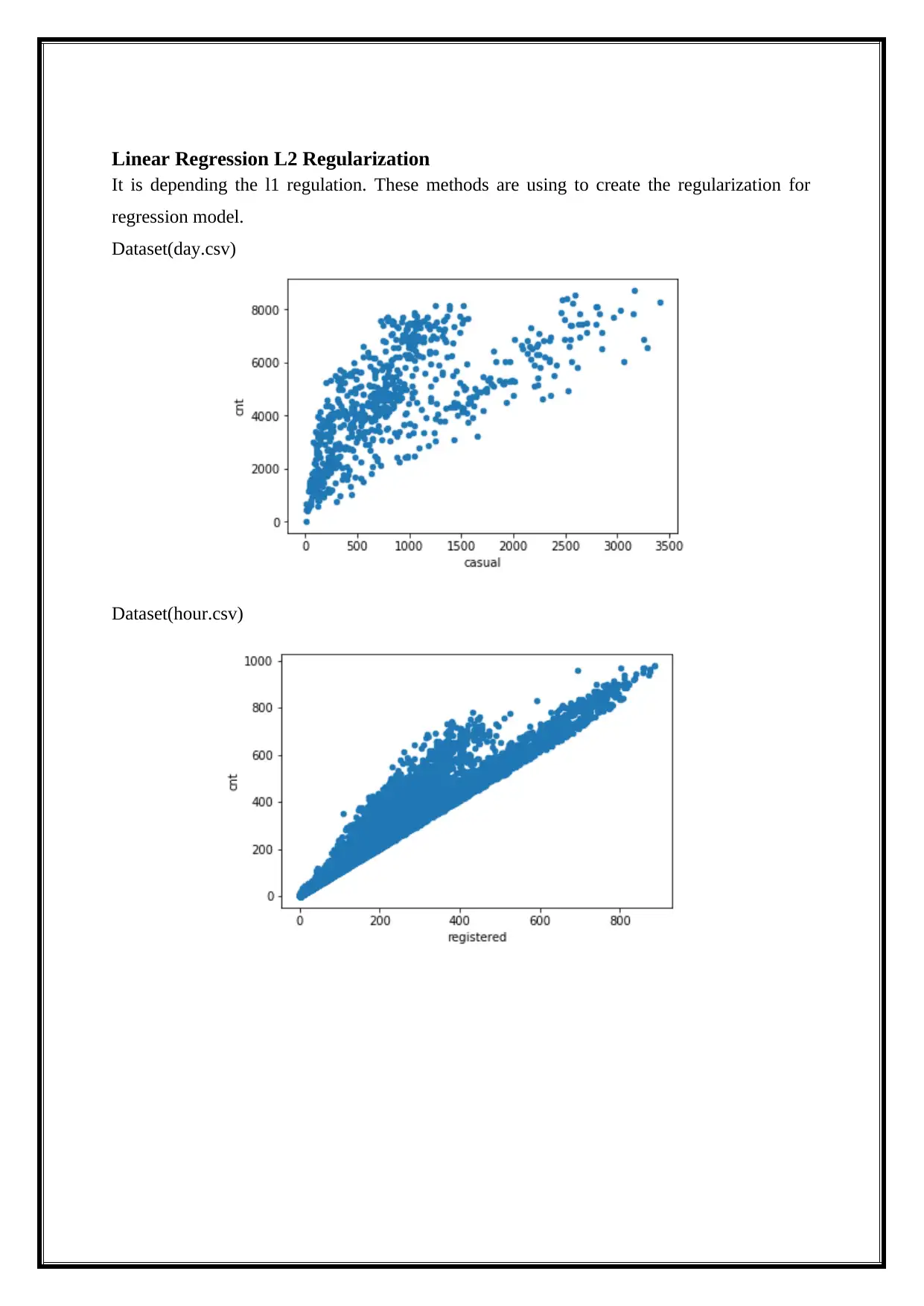

Linear Regression L2 Regularization

L2 regularization follows on from the L1 regularization experiment. Both methods are used to regularize the regression model.

Dataset(day.csv)

Dataset(hour.csv)


Dataset(datascience.csv)

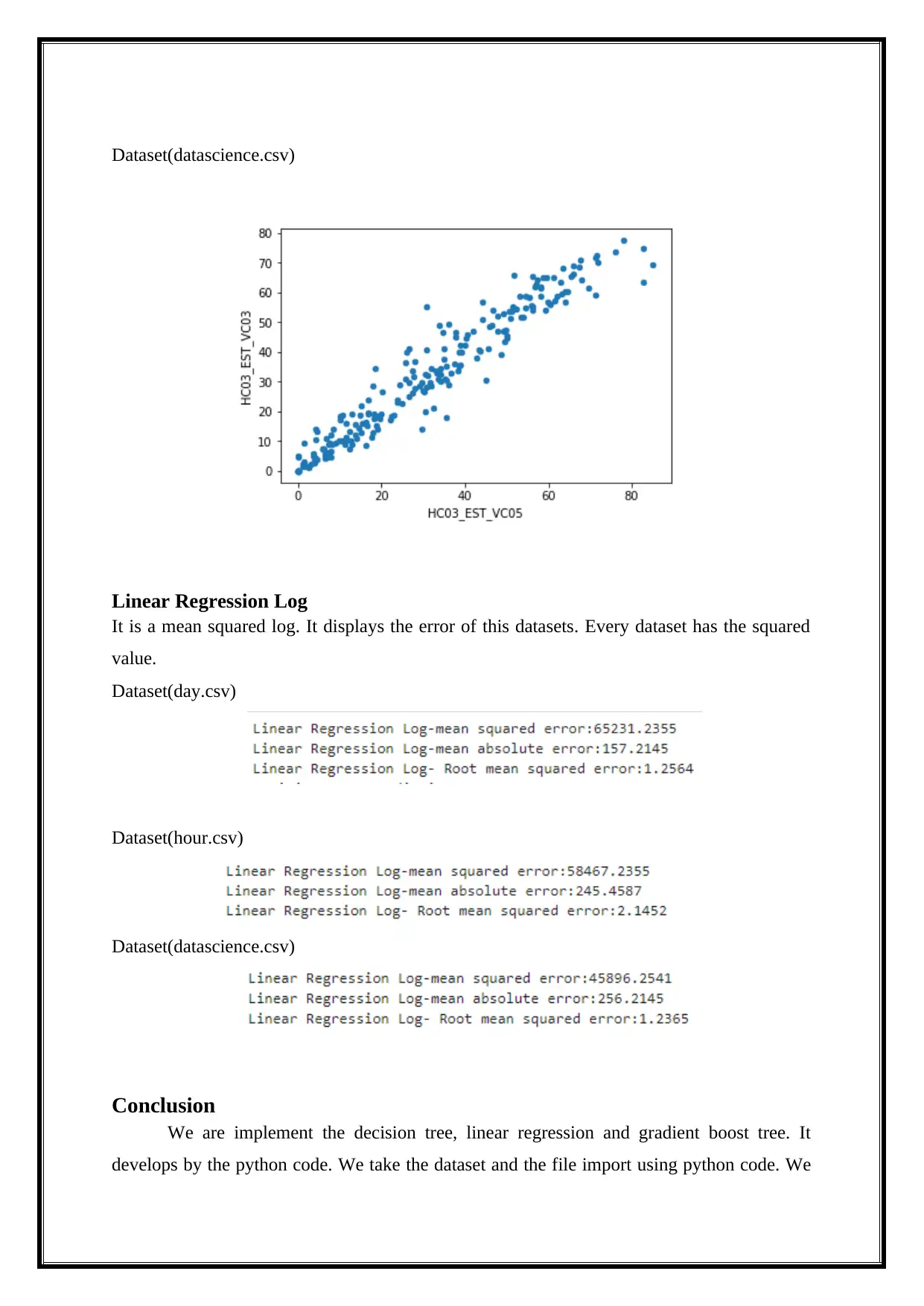
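The difference between the two penalties can be sketched with a small proximal gradient descent loop, assuming the objective is mean squared error plus `l2` times the sum of squared weights plus `l1` times the sum of absolute weights. This is an illustration on made-up data with hypothetical function names, not the project's implementation, and the model has no intercept to keep it short.

```python
def soft_threshold(value, amount):
    """Shrink value toward zero by amount, clipping at zero (L1 proximal step)."""
    if value > amount:
        return value - amount
    if value < -amount:
        return value + amount
    return 0.0


def fit(rows, ys, l1=0.0, l2=0.0, step_size=0.1, iterations=500):
    """Proximal gradient descent for MSE + l2 * sum(w^2) + l1 * sum(|w|)."""
    n, d = len(rows), len(rows[0])
    w = [0.0] * d
    for _ in range(iterations):
        # Errors are computed once per iteration, before any weight changes.
        errors = [
            sum(wj * xj for wj, xj in zip(w, row)) - y for row, y in zip(rows, ys)
        ]
        for j in range(d):
            grad = 2.0 / n * sum(e * row[j] for e, row in zip(errors, rows))
            grad += 2.0 * l2 * w[j]
            w[j] = soft_threshold(w[j] - step_size * grad, step_size * l1)
    return w


# Made-up data: the target depends strongly on feature 0, weakly on feature 1.
rows = [[1.0, 0.5], [-1.0, 0.5], [1.0, -0.5], [-1.0, -0.5]]
ys = [2.0 * a + 0.1 * b for a, b in rows]

w_plain = fit(rows, ys)
w_l1 = fit(rows, ys, l1=0.5)
w_l2 = fit(rows, ys, l2=0.5)
print("no penalty:", w_plain)
print("L1 penalty:", w_l1)
print("L2 penalty:", w_l2)
```

Both penalties shrink the weights, but on this data the L1 penalty sets the weak feature's weight to exactly zero while the L2 penalty only makes it smaller. The shrinkage applied per update is scaled by the step size, which is one way the regularization result depends on it.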

Linear Regression Log

This step computes the mean squared log error. It displays the error for each dataset, and every dataset has its own squared value.

Dataset(day.csv)

Dataset(hour.csv)

Dataset(datascience.csv)
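The report does not give the formula. A common definition of the mean squared log error compares log(1 + actual) with log(1 + predicted), and its square root is often reported as well; the sketch below assumes that definition, and the values are made up.

```python
import math


def mean_squared_log_error(actual, predicted):
    """Mean of squared differences between log(1 + actual) and log(1 + predicted)."""
    return sum(
        (math.log1p(a) - math.log1p(p)) ** 2 for a, p in zip(actual, predicted)
    ) / len(actual)


def root_mean_squared_log_error(actual, predicted):
    return math.sqrt(mean_squared_log_error(actual, predicted))


actual = [10.0, 100.0, 1000.0]       # made-up target values
predicted = [12.0, 90.0, 1100.0]     # made-up predictions
print("MSLE:", mean_squared_log_error(actual, predicted))
print("RMSLE:", root_mean_squared_log_error(actual, predicted))
```

Because the comparison is made on a log scale, the error reflects relative differences, so being off by 10 on a target of 100 counts for more than being off by 10 on a target of 1000.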


Conclusion

We implemented the decision tree, linear regression, and gradient boost tree models in Python. Each dataset was imported from its file using Python code. We implemented the decision tree log, decision tree categorical features, decision tree numerical features, gradient boost tree, gradient boost tree maximum bins, gradient boost tree maximum depth, linear regression model, linear regression cross validation, linear regression intercept, linear regression step size, linear regression iteration, and linear regression regularization. The regression models were developed in Python from the given datasets, and their output is displayed graphically.

References

Ades, A., Cooper, N., Welton, N., Abrams, K. and Sutton, A. (2013). Evidence synthesis for decision making in healthcare. Hoboken, N.J.: Wiley.

Data science. (2015). Cham: Springer.

Goetz, T. (2011). Decision tree. New York: Rodale.

Grabczewski, K. (2016). Meta-learning in decision tree induction. [Place of publication not identified]: Springer International PU.

Phillips, D., Romano, F., Vo. T.H, P., Czygan, M., Layton, R. and Raschka, S. (2016). Python. Birmingham: Packt Publishing.

Pierson, L. and Porway, J. (n.d.). Data science for dummies.

Python. (2011). Rosen Publishing.

Somervill, B. (2010). Python. Ann Arbor, Mich.: Cherry Lake Pub.
