BN104 Operating Systems: Memory and Process Management Report (2020)

VerifiedAdded on 2022/12/30

|10

|1843

|63

Report

AI Summary

This report provides a comprehensive overview of operating systems, focusing on memory management and process scheduling. It explains the necessity of address translation, differentiating between logical and physical addresses, and illustrates the process with an example. The report explores typical page sizes and the factors OS developers consider. It calculates total memory requirements in scenarios using segmentation, demonstrating internal fragmentation with different page sizes. Furthermore, the report analyzes CPU cycle times and arrival times, drawing timelines and calculating turnaround and waiting times for various scheduling algorithms, including FCFS, SJN, SRT, and Round Robin. The report concludes by summarizing the key aspects of memory allocation and scheduling algorithms, highlighting their importance in resource management within an operating system.

Operating System

Table of Contents

Table of Contents

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Part B...............................................................................................................................................1

Introduction......................................................................................................................................1

3. Explicate why operating system require address translation. Provide example for

illustrating this aspect.............................................................................................................1

4. Discover typical page sizes along with the factors that need to be taken into account by OS

developers...............................................................................................................................2

5. Total memory needed in case segmentation is used in different subroutines....................2

6. CPU cycle times and arrival time are given.......................................................................4

a. Draw a timeline for different scheduling algorithm......................................................4

b. Define turnaround time and waiting time. Furthermore calculate them for different

algorithms illustrated above...................................................................................................5

Conclusion.......................................................................................................................................7

References........................................................................................................................................8

Introduction......................................................................................................................................1

3. Explicate why operating system require address translation. Provide example for

illustrating this aspect.............................................................................................................1

4. Discover typical page sizes along with the factors that need to be taken into account by OS

developers...............................................................................................................................2

5. Total memory needed in case segmentation is used in different subroutines....................2

6. CPU cycle times and arrival time are given.......................................................................4

a. Draw a timeline for different scheduling algorithm......................................................4

b. Define turnaround time and waiting time. Furthermore calculate them for different

algorithms illustrated above...................................................................................................5

Conclusion.......................................................................................................................................7

References........................................................................................................................................8

Part B

Introduction

The functionality that is being provided by an OS that is further liable for handling as

well as managing primary memory along with move process among disk and main memory

while execution is defined as memory management. Basically, it provides the methods for

dynamic allocation of some portion of memory to certain programs as per the request and frees

them after utilisation (Dronamraju and et. al, 2020). For doing so distinct scheduling algorithms

are being used in order to make sure that memory and resources are allocated as per the

requirements. This report will provide an insight into address translation with the usage of

examples. Furthermore, total page size, memory required and distinct algorithms will be

illustrated with reference to ways in which resources will be allocated.

3. Explicate why operating system require address translation. Provide example for illustrating

this aspect

Logical address: This is generated via CPU when program is executing. This is basically

a virtual address that is not present physically but is utilised like a reference for accessing

physical memory location by the CPU. The space is utilised for setting logical addresses that are

generated by the perspectives of the program.

Physical address: This is liable for identification of physical location of needed data

within the memory. The user of the system is not directly involved in dealing with physical

address but they can only have access to correspondent logical address.

The respective user program will be liable for generation of logical address by

considering that program is being executing within the same but for this program needs to have

physical memory for executing this (Oldcorn and Paltashev, 2017). Therefore, logical address

have to be mapped with physical address via MMU (main memory unit) before they are being

utilised. Within the system, that have virtual memory can be seen like a cache for the entire disk

that will serve like a lower store.

For an example the logical address space is 8 pages each of 2kb and physical address

space with 4 frames. The physical address has to be computed when the given logical address is

2500.

1

Introduction

The functionality that is being provided by an OS that is further liable for handling as

well as managing primary memory along with move process among disk and main memory

while execution is defined as memory management. Basically, it provides the methods for

dynamic allocation of some portion of memory to certain programs as per the request and frees

them after utilisation (Dronamraju and et. al, 2020). For doing so distinct scheduling algorithms

are being used in order to make sure that memory and resources are allocated as per the

requirements. This report will provide an insight into address translation with the usage of

examples. Furthermore, total page size, memory required and distinct algorithms will be

illustrated with reference to ways in which resources will be allocated.

3. Explicate why operating system require address translation. Provide example for illustrating

this aspect

Logical address: This is generated via CPU when program is executing. This is basically

a virtual address that is not present physically but is utilised like a reference for accessing

physical memory location by the CPU. The space is utilised for setting logical addresses that are

generated by the perspectives of the program.

Physical address: This is liable for identification of physical location of needed data

within the memory. The user of the system is not directly involved in dealing with physical

address but they can only have access to correspondent logical address.

The respective user program will be liable for generation of logical address by

considering that program is being executing within the same but for this program needs to have

physical memory for executing this (Oldcorn and Paltashev, 2017). Therefore, logical address

have to be mapped with physical address via MMU (main memory unit) before they are being

utilised. Within the system, that have virtual memory can be seen like a cache for the entire disk

that will serve like a lower store.

For an example the logical address space is 8 pages each of 2kb and physical address

space with 4 frames. The physical address has to be computed when the given logical address is

2500.

1

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

Size of page = 2kb. (This means that 14 bits are required for offset)

Pages = 8 (Thus, 3 bits for each)

The given virtual address is 2500 in binary = 000 00100111000100

The initial three bits from left indicates page number and rest offset

Page number = 000

Offset = 00100111000100

Page 0 is mapped with frame 1. This means that physical address will be 0100100111000100 =

18884.

4. Discover typical page sizes along with the factors that need to be taken into account by OS

developers

It is important to divide jobs into equal parts before they are loaded, this is known as page

which will be loaded into the memory location referred to as page frame. The concept of paged

memory allocation is dependent on segregating the jobs that are coming into pages that are of

identical size (Silberschatz, Galvin and Gagne, 2014). It entirely depends upon the operating

system that is present within the computer. In some system the page size is identical memory

block size as well as this is alike to size of each section of the disk in which job is present or

stored.

The operating system developer, before execution of the program takes into account

certain aspects, they are as following:

Identification of number of pages present within the program

Determining empty page frames that are in the main memory

Load program pages within them

This will enable them to prepare program for loading where pages are present in the

logical sequence that is first page will comprise of initial instructions for the program and

similarly goes for the last.

5. Total memory needed in case segmentation is used in different subroutines

The wasted space in each of the allocated space due to rounding up from actual required

allocation to allocation granularity is defined as internal fragmentation. This takes place when

memory is segregated within fixed sized block. Whenever any request is for the memory by

process then fixed size blocks are allocated. In this context, the memory that is being provided is

2

Pages = 8 (Thus, 3 bits for each)

The given virtual address is 2500 in binary = 000 00100111000100

The initial three bits from left indicates page number and rest offset

Page number = 000

Offset = 00100111000100

Page 0 is mapped with frame 1. This means that physical address will be 0100100111000100 =

18884.

4. Discover typical page sizes along with the factors that need to be taken into account by OS

developers

It is important to divide jobs into equal parts before they are loaded, this is known as page

which will be loaded into the memory location referred to as page frame. The concept of paged

memory allocation is dependent on segregating the jobs that are coming into pages that are of

identical size (Silberschatz, Galvin and Gagne, 2014). It entirely depends upon the operating

system that is present within the computer. In some system the page size is identical memory

block size as well as this is alike to size of each section of the disk in which job is present or

stored.

The operating system developer, before execution of the program takes into account

certain aspects, they are as following:

Identification of number of pages present within the program

Determining empty page frames that are in the main memory

Load program pages within them

This will enable them to prepare program for loading where pages are present in the

logical sequence that is first page will comprise of initial instructions for the program and

similarly goes for the last.

5. Total memory needed in case segmentation is used in different subroutines

The wasted space in each of the allocated space due to rounding up from actual required

allocation to allocation granularity is defined as internal fragmentation. This takes place when

memory is segregated within fixed sized block. Whenever any request is for the memory by

process then fixed size blocks are allocated. In this context, the memory that is being provided is

2

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

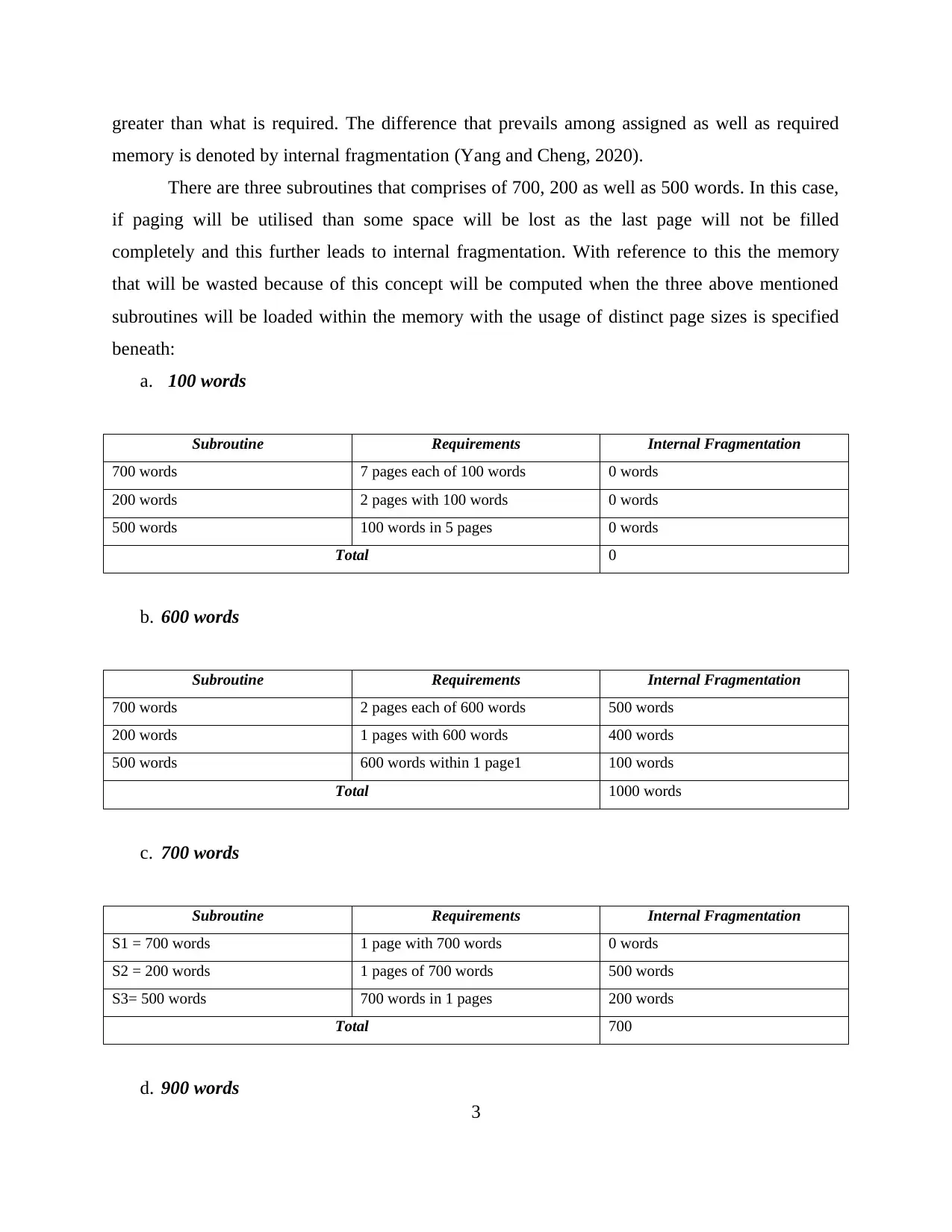

greater than what is required. The difference that prevails among assigned as well as required

memory is denoted by internal fragmentation (Yang and Cheng, 2020).

There are three subroutines that comprises of 700, 200 as well as 500 words. In this case,

if paging will be utilised than some space will be lost as the last page will not be filled

completely and this further leads to internal fragmentation. With reference to this the memory

that will be wasted because of this concept will be computed when the three above mentioned

subroutines will be loaded within the memory with the usage of distinct page sizes is specified

beneath:

a. 100 words

Subroutine Requirements Internal Fragmentation

700 words 7 pages each of 100 words 0 words

200 words 2 pages with 100 words 0 words

500 words 100 words in 5 pages 0 words

Total 0

b. 600 words

Subroutine Requirements Internal Fragmentation

700 words 2 pages each of 600 words 500 words

200 words 1 pages with 600 words 400 words

500 words 600 words within 1 page1 100 words

Total 1000 words

c. 700 words

Subroutine Requirements Internal Fragmentation

S1 = 700 words 1 page with 700 words 0 words

S2 = 200 words 1 pages of 700 words 500 words

S3= 500 words 700 words in 1 pages 200 words

Total 700

d. 900 words

3

memory is denoted by internal fragmentation (Yang and Cheng, 2020).

There are three subroutines that comprises of 700, 200 as well as 500 words. In this case,

if paging will be utilised than some space will be lost as the last page will not be filled

completely and this further leads to internal fragmentation. With reference to this the memory

that will be wasted because of this concept will be computed when the three above mentioned

subroutines will be loaded within the memory with the usage of distinct page sizes is specified

beneath:

a. 100 words

Subroutine Requirements Internal Fragmentation

700 words 7 pages each of 100 words 0 words

200 words 2 pages with 100 words 0 words

500 words 100 words in 5 pages 0 words

Total 0

b. 600 words

Subroutine Requirements Internal Fragmentation

700 words 2 pages each of 600 words 500 words

200 words 1 pages with 600 words 400 words

500 words 600 words within 1 page1 100 words

Total 1000 words

c. 700 words

Subroutine Requirements Internal Fragmentation

S1 = 700 words 1 page with 700 words 0 words

S2 = 200 words 1 pages of 700 words 500 words

S3= 500 words 700 words in 1 pages 200 words

Total 700

d. 900 words

3

Subroutine Requirements Internal Fragmentation

700 words 900 words within 1 page 200 words

200 words 1 page with 900 words 700 words

500 words 900 words in the 1 page 400 words

Total 1300

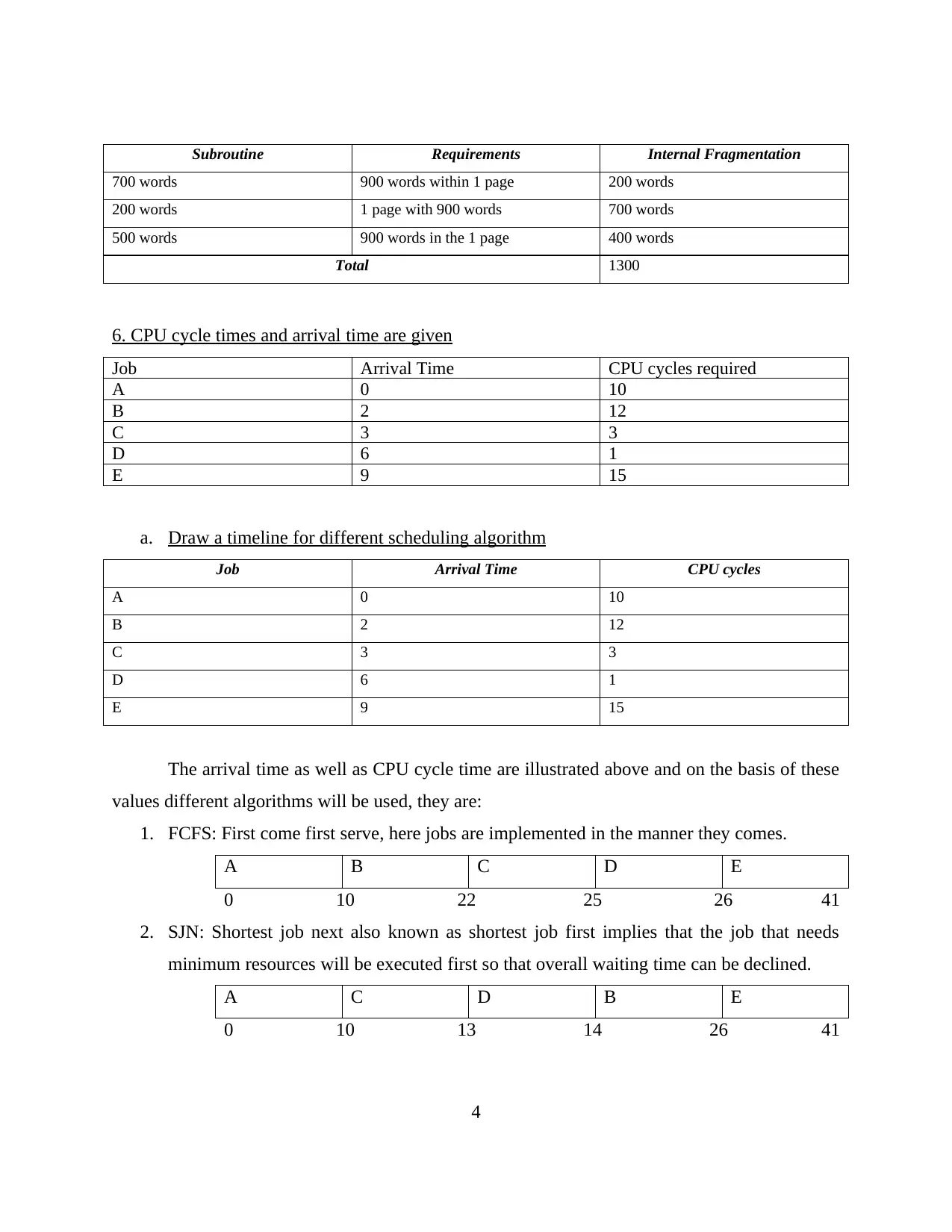

6. CPU cycle times and arrival time are given

Job Arrival Time CPU cycles required

A 0 10

B 2 12

C 3 3

D 6 1

E 9 15

a. Draw a timeline for different scheduling algorithm

Job Arrival Time CPU cycles

A 0 10

B 2 12

C 3 3

D 6 1

E 9 15

The arrival time as well as CPU cycle time are illustrated above and on the basis of these

values different algorithms will be used, they are:

1. FCFS: First come first serve, here jobs are implemented in the manner they comes.

A B C D E

0 10 22 25 26 41

2. SJN: Shortest job next also known as shortest job first implies that the job that needs

minimum resources will be executed first so that overall waiting time can be declined.

A C D B E

0 10 13 14 26 41

4

700 words 900 words within 1 page 200 words

200 words 1 page with 900 words 700 words

500 words 900 words in the 1 page 400 words

Total 1300

6. CPU cycle times and arrival time are given

Job Arrival Time CPU cycles required

A 0 10

B 2 12

C 3 3

D 6 1

E 9 15

a. Draw a timeline for different scheduling algorithm

Job Arrival Time CPU cycles

A 0 10

B 2 12

C 3 3

D 6 1

E 9 15

The arrival time as well as CPU cycle time are illustrated above and on the basis of these

values different algorithms will be used, they are:

1. FCFS: First come first serve, here jobs are implemented in the manner they comes.

A B C D E

0 10 22 25 26 41

2. SJN: Shortest job next also known as shortest job first implies that the job that needs

minimum resources will be executed first so that overall waiting time can be declined.

A C D B E

0 10 13 14 26 41

4

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

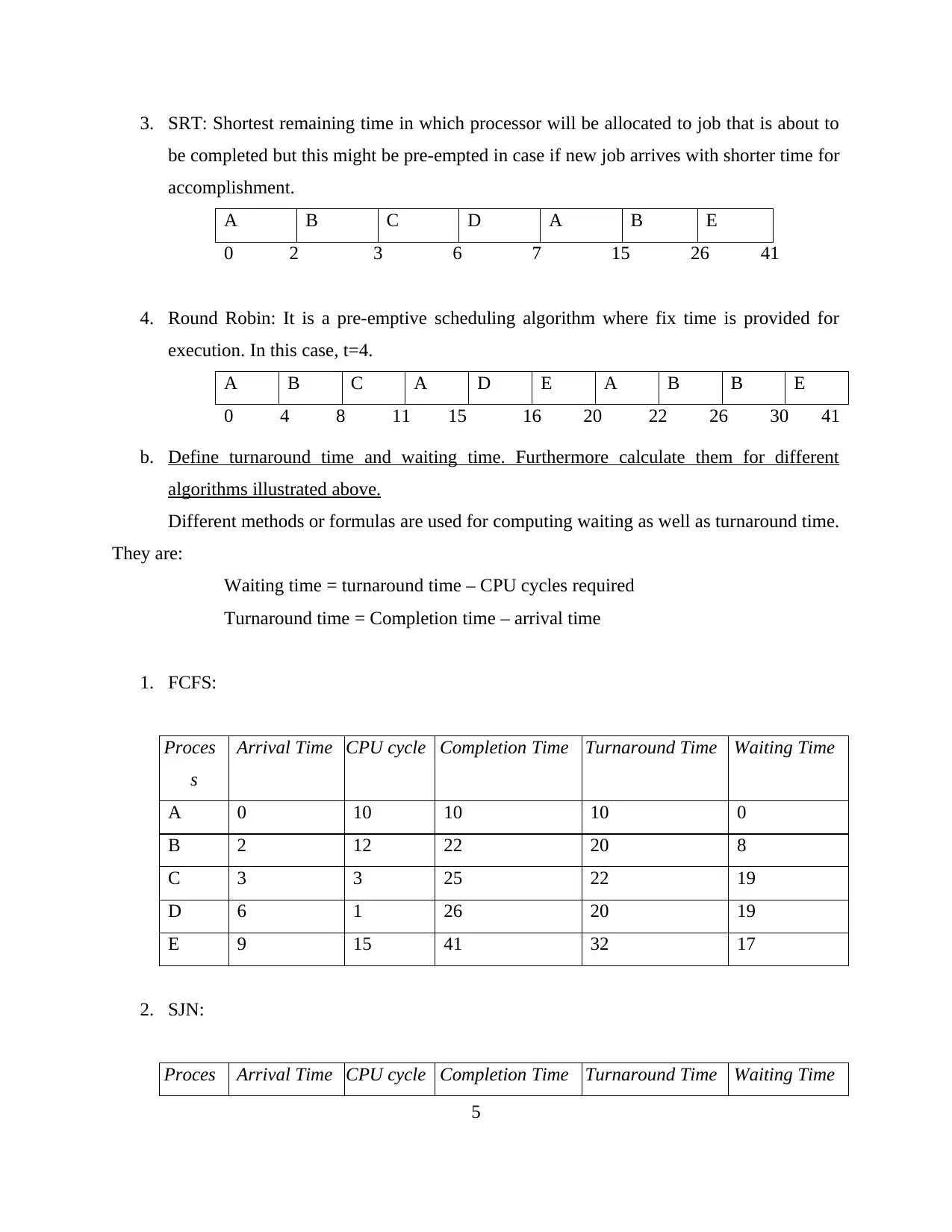

3. SRT: Shortest remaining time in which processor will be allocated to job that is about to

be completed but this might be pre-empted in case if new job arrives with shorter time for

accomplishment.

A B C D A B E

0 2 3 6 7 15 26 41

4. Round Robin: It is a pre-emptive scheduling algorithm where fix time is provided for

execution. In this case, t=4.

A B C A D E A B B E

0 4 8 11 15 16 20 22 26 30 41

b. Define turnaround time and waiting time. Furthermore calculate them for different

algorithms illustrated above.

Different methods or formulas are used for computing waiting as well as turnaround time.

They are:

Waiting time = turnaround time – CPU cycles required

Turnaround time = Completion time – arrival time

1. FCFS:

Proces

s

Arrival Time CPU cycle Completion Time Turnaround Time Waiting Time

A 0 10 10 10 0

B 2 12 22 20 8

C 3 3 25 22 19

D 6 1 26 20 19

E 9 15 41 32 17

2. SJN:

Proces Arrival Time CPU cycle Completion Time Turnaround Time Waiting Time

5

be completed but this might be pre-empted in case if new job arrives with shorter time for

accomplishment.

A B C D A B E

0 2 3 6 7 15 26 41

4. Round Robin: It is a pre-emptive scheduling algorithm where fix time is provided for

execution. In this case, t=4.

A B C A D E A B B E

0 4 8 11 15 16 20 22 26 30 41

b. Define turnaround time and waiting time. Furthermore calculate them for different

algorithms illustrated above.

Different methods or formulas are used for computing waiting as well as turnaround time.

They are:

Waiting time = turnaround time – CPU cycles required

Turnaround time = Completion time – arrival time

1. FCFS:

Proces

s

Arrival Time CPU cycle Completion Time Turnaround Time Waiting Time

A 0 10 10 10 0

B 2 12 22 20 8

C 3 3 25 22 19

D 6 1 26 20 19

E 9 15 41 32 17

2. SJN:

Proces Arrival Time CPU cycle Completion Time Turnaround Time Waiting Time

5

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

s

A 0 10 10 10 0

B 2 12 26 24 12

C 3 3 13 10 7

D 6 1 14 8 7

E 9 15 41 32 17

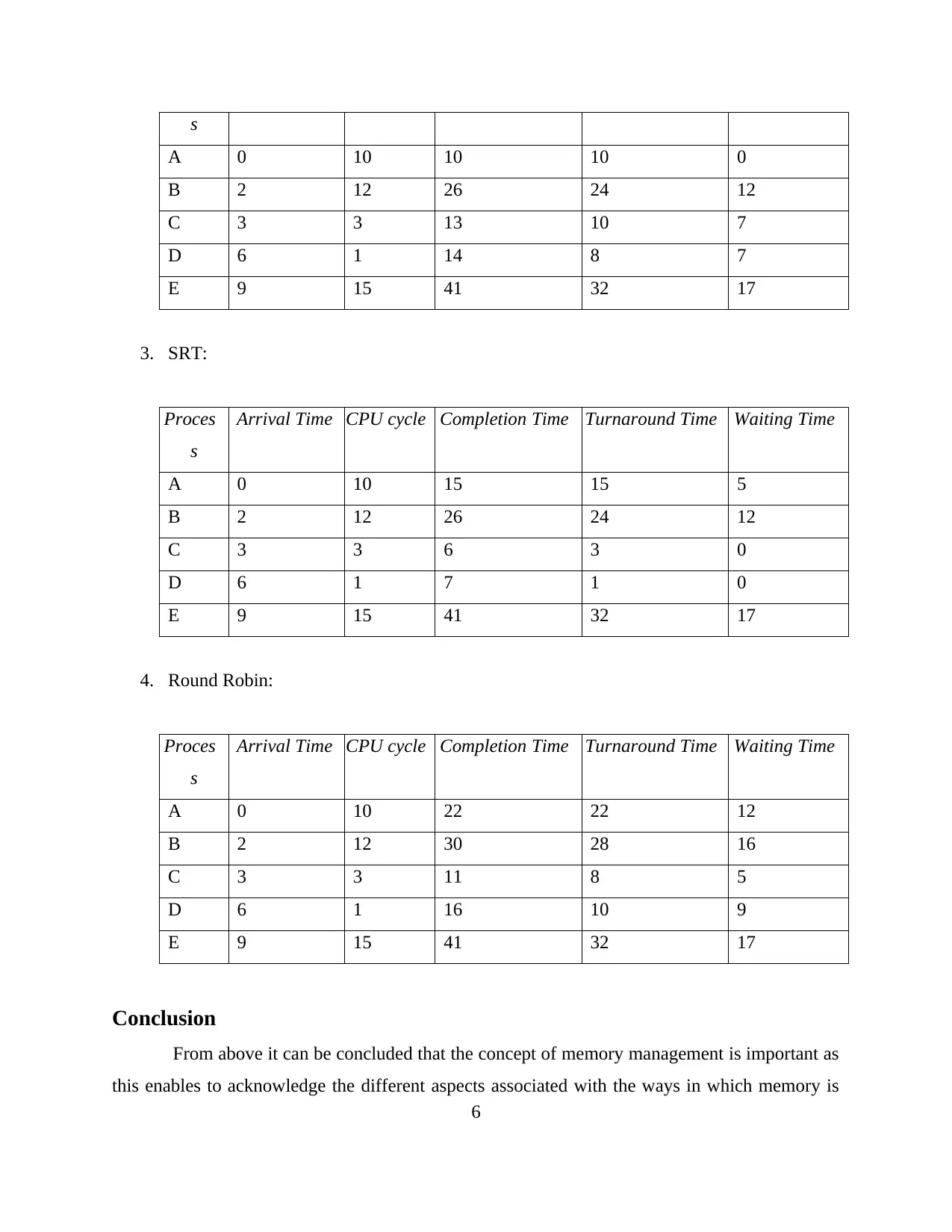

3. SRT:

Proces

s

Arrival Time CPU cycle Completion Time Turnaround Time Waiting Time

A 0 10 15 15 5

B 2 12 26 24 12

C 3 3 6 3 0

D 6 1 7 1 0

E 9 15 41 32 17

4. Round Robin:

Proces

s

Arrival Time CPU cycle Completion Time Turnaround Time Waiting Time

A 0 10 22 22 12

B 2 12 30 28 16

C 3 3 11 8 5

D 6 1 16 10 9

E 9 15 41 32 17

Conclusion

From above it can be concluded that the concept of memory management is important as

this enables to acknowledge the different aspects associated with the ways in which memory is

6

A 0 10 10 10 0

B 2 12 26 24 12

C 3 3 13 10 7

D 6 1 14 8 7

E 9 15 41 32 17

3. SRT:

Proces

s

Arrival Time CPU cycle Completion Time Turnaround Time Waiting Time

A 0 10 15 15 5

B 2 12 26 24 12

C 3 3 6 3 0

D 6 1 7 1 0

E 9 15 41 32 17

4. Round Robin:

Proces

s

Arrival Time CPU cycle Completion Time Turnaround Time Waiting Time

A 0 10 22 22 12

B 2 12 30 28 16

C 3 3 11 8 5

D 6 1 16 10 9

E 9 15 41 32 17

Conclusion

From above it can be concluded that the concept of memory management is important as

this enables to acknowledge the different aspects associated with the ways in which memory is

6

allocated to distinct resources. In addition to this, there are different algorithms that are being

designed for doing so depending upon the requirement of the system.

7

designed for doing so depending upon the requirement of the system.

7

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

References

Books and Journals

Dronamraju, R. and et. al, NetApp Inc, 2020. Methods for minimizing fragmentation in ssd

within a storage system and devices thereof. U.S. Patent Application 16/584,025.

Oldcorn, D. and Paltashev, T.T., Advanced Micro Devices Inc, 2017. Memory management with

reduced fragmentation. U.S. Patent Application 15/094,171.

Silberschatz, A., Galvin, P.B. and Gagne, G., 2014. Operating system concepts essentials (pp. I-

XX). Hoboken: Wiley.

Yang, D. and Cheng, D., 2020, June. Efficient gpu memory management for nonlinear dnns.

In Proceedings of the 29th International Symposium on High-Performance Parallel and

Distributed Computing (pp. 185-196).

8

Books and Journals

Dronamraju, R. and et. al, NetApp Inc, 2020. Methods for minimizing fragmentation in ssd

within a storage system and devices thereof. U.S. Patent Application 16/584,025.

Oldcorn, D. and Paltashev, T.T., Advanced Micro Devices Inc, 2017. Memory management with

reduced fragmentation. U.S. Patent Application 15/094,171.

Silberschatz, A., Galvin, P.B. and Gagne, G., 2014. Operating system concepts essentials (pp. I-

XX). Hoboken: Wiley.

Yang, D. and Cheng, D., 2020, June. Efficient gpu memory management for nonlinear dnns.

In Proceedings of the 29th International Symposium on High-Performance Parallel and

Distributed Computing (pp. 185-196).

8

1 out of 10

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2026 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.