MG6088 Software Project Management: Agile, RAD, SCRUM, and Estimation

Added on 2022/10/27


AI Summary

This document provides a comprehensive overview of software project management, covering key concepts and methodologies. It begins by defining SCRUM and its role in agile development, along with an explanation of the RAD model and its applications. The assignment then delves into the advantages of agile unified processes, the function of the spiral model, and the importance of activity models. It enumerates various software process models, including waterfall, spiral, and agile, and defines agile methods and their approaches. Furthermore, the document explores extreme programming (XP), the COCOMO model, and its types, along with the advantages of function point analysis. It also covers application composition, the stages of estimation in a software project, and provides examples of RAD tools. Finally, the document explains how cost estimation is done in agile projects, emphasizing the differences between agile and traditional methodologies and the importance of sprints in agile development. This assignment provides a detailed analysis of software project management techniques and methodologies.

VIVEKANANDHA COLLEGE OF TECHNOLOGY

FOR WOMEN

DEPARTMENT OF COMPUTER SCIENCE AND ENGINEERING

MG6088 SOFTWARE PROJECT MANAGEMENT

Question Bank

REGULATION -2013

IV- YEAR –CSE (VIII-SEMESTER)

STAFF INCHARGE HOD DEAN

UNIT- II


MG6088 Software Project Management

UNIT II

PROJECT LIFE CYCLE AND EFFORT ESTIMATION

Software process and Process Models – Choice of Process models – Incremental delivery – Rapid Application development – Agile methods – Extreme Programming – SCRUM – Managing iterative processes – Basics of Software estimation – Effort and Cost estimation techniques – COSMIC Full function points – COCOMO II: A Parametric Productivity Model – Staffing Pattern.

PART A

1. What is SCRUM? (Nov/Dec 2018) (APR/MAY 2018) (NOV/Dec 2019)

Answer:

• Scrum is a framework, based on agile principles, within which a team develops software collaboratively in short, time-boxed iterations called sprints.

• Scrum supports continuous collaboration among the customer, team members and relevant stakeholders.

2. Expand RAD. Is it an incremental model? Justify. (Nov/Dec 2018)

Answer:

• RAD stands for Rapid Application Development. Yes, it is a type of incremental model: in the RAD model, the components or functions are developed in parallel as if they were mini projects.

• These developments are time-boxed, delivered and then assembled into a working prototype.

3. Identify the uses of RAD model. (APR/MAY 2019)

Answer:

• Rapid application development (RAD) describes a method of software development that heavily emphasizes rapid prototyping and iterative delivery.

• The RAD model is therefore a sharp alternative to the typical waterfall development model, which focuses largely on up-front planning and sequential design practices.

4. Briefly describe two ways of setting objectives. (APR/MAY 2018)

Answer:


• For any project to succeed, its objectives should be clearly defined and known to the key people involved in it. Only then will they work with a single focus towards achieving the objectives.

• These key people or organisations are called stakeholders. A stakeholder is a person or organisation that is directly or indirectly affected by the project.

• A software project is normally complex in nature and involves many people drawn from various specialities.

• It is always advisable to set sub-objectives suitable for each individual or group of individuals, in line with the main objectives. Sub-objectives are more meaningful to a team because they are under the team's control and achievable by it.

• Workable goals should always be set using the SMART approach: objectives should be Specific, Measurable, Achievable, Relevant and Time-constrained.

5. What is rapid application development? (NOV/DEC 2017)

Answer:

RAD (Rapid Application Development) is an adaptation of the waterfall model that aims to develop software in a short span of time. RAD follows an iterative method.

The SDLC RAD model has the following phases:

• Business Modeling

• Data Modeling

• Process Modeling

• Application Generation

• Testing and Turnover

6. Outline the advantages of the agile unified process. (NOV/DEC 2017)

Answer:

1. Agile methodology has an adaptive approach that is able to respond to the changing requirements of clients.

2. Direct communication and constant feedback from the customer representative leave no room for guesswork in the system.


7. What is the function of the spiral model? (APR/MAY 2017)

Answer:

• The spiral model is similar to the incremental model, with more emphasis placed on risk analysis.

• A software project repeatedly passes through its phases (planning, risk analysis, engineering and evaluation) in iterations, called spirals in this model.

8. What is an activity model? (APR/MAY 2017)

Answer:

• The activity model indicates the set of activities needed to turn a set of inputs

(capital, raw materials and labour) into the firm’s value proposition (benefits

to customers).

• Examples of such activities include product development, purchasing,

manufacturing, marketing and sales and service delivery.

9. What is a software process model?

Answer:

• A process model describes the sequence of phases for the entire lifetime of a product.

• It is therefore sometimes also called the Product Life Cycle.

• It covers everything from the initial commercial idea until the final de-installation or disassembly of the product after its use.

10. What are the phases of a software process model?

Answer:

• There are three main phases:

∗ Concept phase

∗ Implementation phase

∗ Maintenance phase

• Each of these main phases usually has some sub-phases, such as a requirements engineering phase, a design phase, a build phase and a testing phase.

• A sub-phase may occur in more than one main phase, taking on a specific character depending on that main phase.


11. List various software process models.

Answer:

• Waterfall model

• Spiral model

• V-model

• Iterative model

• Agile model

• RAD model

12. Define Agile Methods.

Answer:

• The agile model is a combination of iterative and incremental process models, with a focus on process adaptability and on customer satisfaction through rapid delivery of a working software product.

• Agile methods break the product into small incremental builds, which are provided in iterations. Every iteration involves cross-functional teams working simultaneously on areas such as planning, requirements analysis, design, coding, unit testing and acceptance testing.

13. List out the various agile approaches.

Answer:

• Crystal Technologies

• Atern (formerly DSDM)

• Feature-Driven Development

• Scrum

• Extreme Programming (XP)

14. What is extreme programming?

Answer:

• Extreme programming (XP) is a software development methodology, which is

intended to improve software quality and responsiveness to changing

customer requirements.


• As a type of agile software development, it advocates frequent "releases" in

short development cycles, to improve productivity and introduce checkpoints

at which new customer requirements can be adopted.

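15. Write about the COCOMO model.

Answer:

• COCOMO stands for Constructive Cost Model.

• It refers to a group of models.

• The basic model was built around the equation:

$\text{effort} = c \times \text{size}^{k}$

where effort is measured in pm, the number of 'person-months'; size is measured in kdsi, thousands of delivered source instructions; and c and k are constants.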
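As a worked illustration of the equation above, the sketch below uses Boehm's published Basic COCOMO (COCOMO 81) constants for the organic, semidetached and embedded modes, together with the companion schedule equation. The function name and the 32 kdsi example size are assumptions for illustration.

```python
# Basic COCOMO 81 sketch: effort = c * size**k, with size in kdsi
# (thousands of delivered source instructions) and effort in person-months.
# Development time uses the companion Basic COCOMO schedule equation
# tdev = 2.5 * effort**d (months).
BASIC_COCOMO = {
    # mode: (c, k, d)
    "organic": (2.4, 1.05, 0.38),
    "semidetached": (3.0, 1.12, 0.35),
    "embedded": (3.6, 1.20, 0.32),
}


def basic_cocomo(kdsi: float, mode: str = "organic") -> tuple[float, float]:
    """Return (effort in person-months, development time in months)."""
    if kdsi <= 0:
        raise ValueError("size must be positive")
    c, k, d = BASIC_COCOMO[mode]
    effort = c * kdsi ** k
    tdev = 2.5 * effort ** d
    return effort, tdev


if __name__ == "__main__":
    for mode in BASIC_COCOMO:
        effort, tdev = basic_cocomo(32, mode)  # hypothetical 32 kdsi system
        print(f"{mode:12s} effort = {effort:6.1f} pm, schedule = {tdev:4.1f} months")
```

For the same size, the larger exponent of the embedded mode makes it the most expensive, reflecting its tighter constraints.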

16. Define organic mode.

Answer:

• Organic mode is the case in which relatively small teams develop software in a highly familiar, in-house environment, the system being developed is small, and the interface requirements are flexible.

17. Give an idea about parametric model?

Answer:

• Models that focus on task or system size. Eg.Function Points.

• FPs originally used to estimate Lines of Code, rather than effort

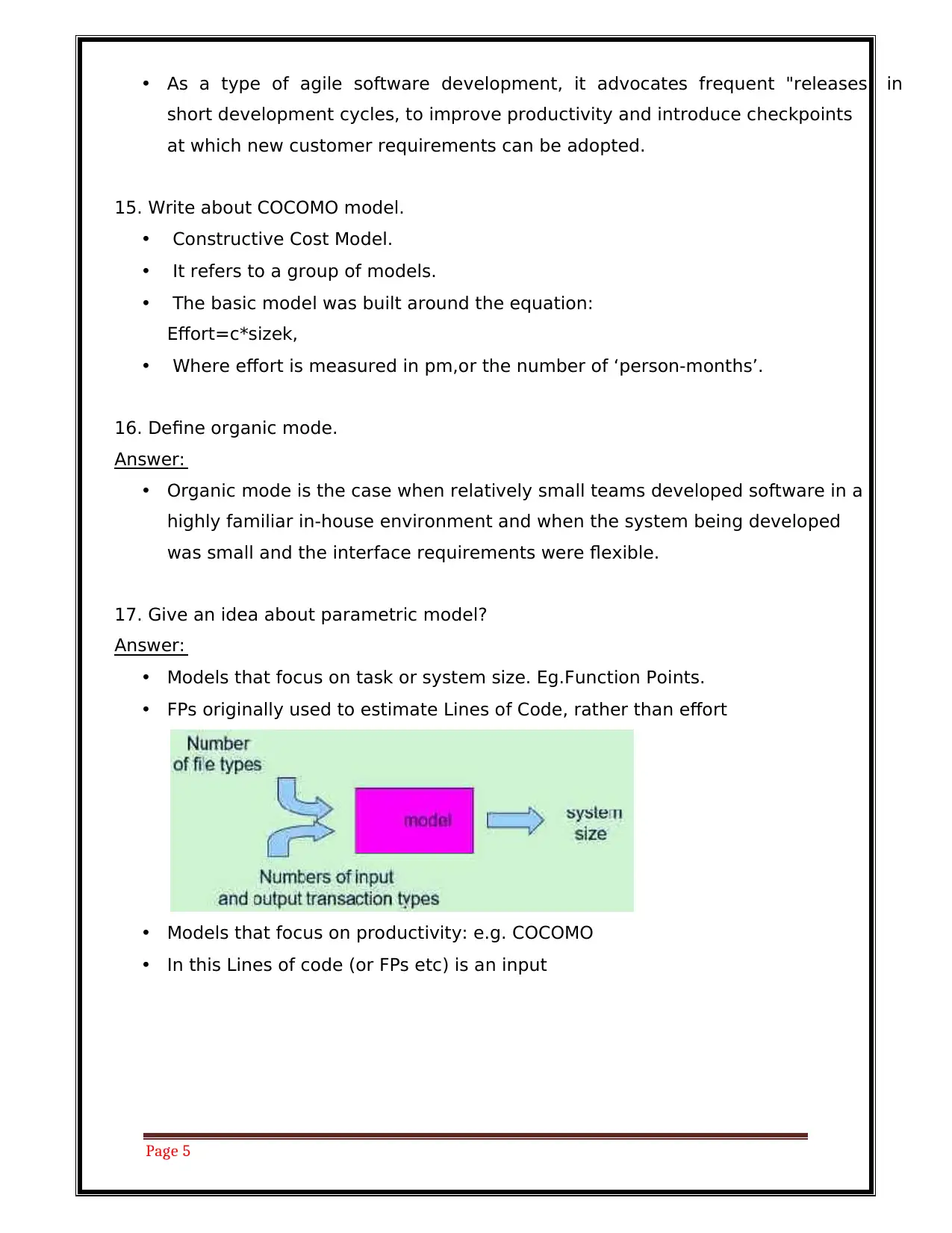

• Models that focus on productivity: e.g. COCOMO

• In this Lines of code (or FPs etc) is an input
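The two kinds of model can be chained: a size model turns function points into an estimated code size, and a productivity model such as COCOMO turns that size into effort. In the sketch below, the `LOC_PER_FP` conversion factor and the 400-FP input are hypothetical; real conversion ratios depend on the programming language and should come from calibrated data. The effort constants are the Basic COCOMO organic-mode values.

```python
# Sketch of chaining a size model into a productivity model.
LOC_PER_FP = 50  # hypothetical conversion factor, for illustration only


def size_model(function_points: float) -> float:
    """Size model: convert function points to thousands of lines of code."""
    return function_points * LOC_PER_FP / 1000


def productivity_model(kloc: float, c: float = 2.4, k: float = 1.05) -> float:
    """Productivity model: COCOMO-style effort in person-months.
    Defaults are the Basic COCOMO organic-mode constants."""
    return c * kloc ** k


fp = 400  # hypothetical function point count
kloc = size_model(fp)
print(f"{fp} FP -> {kloc:.1f} KLOC -> {productivity_model(kloc):.1f} person-months")
```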


18. What is the use of the COCOMO model, and what are its types?

Answer:

• COCOMO predicts the effort and schedule for a software product's development based on inputs relating to the size of the software and a number of cost drivers that affect productivity.

• COCOMO has three different models, reflecting increasing levels of detail and complexity:

• The Basic Model

• The Intermediate Model

• The Detailed Model
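The intermediate model keeps the basic equation but multiplies it by an effort adjustment factor (EAF), the product of ratings for fifteen cost drivers such as required reliability (RELY), product complexity (CPLX) and analyst capability (ACAP). The sketch below uses Boehm's intermediate constants for the three modes; the driver ratings passed in are illustrative assumptions, not values from the text.

```python
from math import prod

# Intermediate COCOMO 81 sketch: effort = a * kdsi**b * EAF.
# EAF is the product of the cost-driver multipliers; a nominal rating
# contributes 1.0, so omitted drivers leave the estimate unchanged.
INTERMEDIATE = {
    "organic": (3.2, 1.05),
    "semidetached": (3.0, 1.12),
    "embedded": (2.8, 1.20),
}


def intermediate_cocomo(kdsi: float, mode: str, multipliers: dict[str, float]) -> float:
    """Return effort in person-months."""
    a, b = INTERMEDIATE[mode]
    eaf = prod(multipliers.values())  # prod of an empty collection is 1
    return a * kdsi ** b * eaf


# Illustrative (assumed) ratings for three of the fifteen cost drivers.
drivers = {"RELY": 1.15, "CPLX": 1.15, "ACAP": 0.86}
print(round(intermediate_cocomo(32, "embedded", drivers), 1))
```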

19. Write any two advantages of function point analysis.

Answer:

• Improved project estimating

• Understanding project and maintenance productivity

• Managing changing project requirements

• Gathering user requirements.
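Function point analysis counts what the system does for its users rather than lines of code. The sketch below follows the IFPUG-style procedure: each external input, output, inquiry, internal logical file and external interface file is weighted by complexity, and the unadjusted total is scaled by a value adjustment factor built from 14 general system characteristics. The example counts and ratings are hypothetical.

```python
# Function point count sketch using IFPUG-style complexity weights.
WEIGHTS = {
    # component: (simple, average, complex)
    "EI": (3, 4, 6),     # external inputs
    "EO": (4, 5, 7),     # external outputs
    "EQ": (3, 4, 6),     # external inquiries
    "ILF": (7, 10, 15),  # internal logical files
    "EIF": (5, 7, 10),   # external interface files
}
LEVEL = {"simple": 0, "average": 1, "complex": 2}


def unadjusted_fp(counts):
    """counts maps (component, complexity) -> number of occurrences."""
    return sum(n * WEIGHTS[comp][LEVEL[cx]] for (comp, cx), n in counts.items())


def adjusted_fp(ufp, gsc_ratings):
    """Apply the value adjustment factor VAF = 0.65 + 0.01 * sum(GSC),
    where each of the 14 general system characteristics is rated 0..5."""
    if len(gsc_ratings) != 14 or not all(0 <= r <= 5 for r in gsc_ratings):
        raise ValueError("expected 14 ratings in the range 0..5")
    return ufp * (0.65 + 0.01 * sum(gsc_ratings))


counts = {("EI", "average"): 10, ("EO", "simple"): 5, ("ILF", "average"): 2}
ufp = unadjusted_fp(counts)
print(ufp, round(adjusted_fp(ufp, [3] * 14), 1))
```

Because the count depends only on user-visible functions, it can be made early from requirements, which is what supports the estimating and change-management advantages listed above.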

20. Define application composition.

Answer:

• In application composition, the external features of the system that users will experience are designed. Prototyping is typically employed to do this. With small applications that can be built using high-productivity application-building tools, development can stop at this point.
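In COCOMO II, effort for this stage is estimated in object points rather than lines of code. The sketch below follows the object-point procedure: weight each screen, report and 3GL component, discount reused work, and divide by a productivity rate chosen from developer experience and tool maturity. The function name and the example counts are hypothetical.

```python
# COCOMO II application-composition sketch (object points).
SCREEN = {"simple": 1, "medium": 2, "difficult": 3}
REPORT = {"simple": 2, "medium": 5, "difficult": 8}
THIRD_GL_COMPONENT = 10
# New object points per person-month, by developer experience/tool maturity.
PROD = {"very low": 4, "low": 7, "nominal": 13, "high": 25, "very high": 50}


def application_composition_effort(screens, reports, components, reuse_pct, capability):
    """Return estimated effort in person-months."""
    object_points = (
        sum(SCREEN[c] for c in screens)
        + sum(REPORT[c] for c in reports)
        + THIRD_GL_COMPONENT * components
    )
    new_object_points = object_points * (100 - reuse_pct) / 100
    return new_object_points / PROD[capability]


# Hypothetical prototype: 4 medium screens, 1 difficult report, 1 3GL component.
print(application_composition_effort(["medium"] * 4, ["difficult"], 1, 0, "nominal"))
```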

21. Determine the stages of estimation carried out in a software project. (APR/MAY 2019)

Answer:

1. Scoping.


2. Decomposition.

3. Sizing.

4. Expert and Peer Review.

5. Estimation Finalization.
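The answer only names the stages. One hypothetical way to see how decomposition, sizing and peer review feed the final figure is the bottom-up roll-up below: the scope is already decomposed into tasks, each task has two independent estimates, disagreements beyond a tolerance are flagged for review, and the averaged estimates are summed. The `Task` type, the averaging rule and the 25% tolerance are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    estimate_days: float  # sizing: the estimator's figure
    review_days: float    # expert/peer review: an independent second figure


def estimate(tasks: list[Task], tolerance: float = 0.25) -> tuple[float, list[str]]:
    """Roll up task estimates bottom-up and flag large disagreements."""
    total = 0.0
    flagged = []
    for task in tasks:  # decomposition: scope already split into tasks
        low, high = sorted((task.estimate_days, task.review_days))
        if high > low * (1 + tolerance):
            flagged.append(task.name)  # resolve before finalization
        total += (task.estimate_days + task.review_days) / 2
    return total, flagged


tasks = [
    Task("Login screen", 4, 5),
    Task("Report module", 6, 10),
    Task("Database schema", 3, 3),
]
total, flagged = estimate(tasks)
print(f"{total} days; review needed for: {flagged}")
```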

22. Give examples of rapid application development tools.

Answer:

Some of the tools that can be used in RAD are those that are strong in automated code generation, such as:

• Super Mojo by Penumbra

• Microsoft LightSwitch

• Visual Studio

• WaveMaker

• Delphi (RAD Studio)

• Lazarus IDE

PART B

1) How is cost estimation done for Agile projects? Explain in detail. (Nov/Dec 2018) (13m)

Answer:

• Agile software development methodology is a process for developing software, like other software development methodologies such as the Waterfall model, the V-Model and the Iterative model.

• However, Agile methodology differs significantly from other methodologies. In English, 'agile' means 'able to move quickly and easily', and responding swiftly to change is a key aspect of Agile software development as well.

• Cost estimation in software engineering is the process of predicting the resources (money, time and people) necessary to finish a project within the defined scope.

• Accurate estimates help everyone involved in the project; for example, project owners can use them to decide whether to take on the project.

Brief overview of Agile Methodology

Why agile estimates?

There are two generally accepted methodologies for developing software:

waterfall, also known as the traditional model, and agile. Their

approaches to estimating projects are quite different. At Steelkiwi, we follow


an agile development methodology. Why? Let’s explain using a real-world

example of two similar projects by the Federal Bureau of Investigation (FBI)

that were developed with two different methodologies and resulted in vastly

different outcomes.

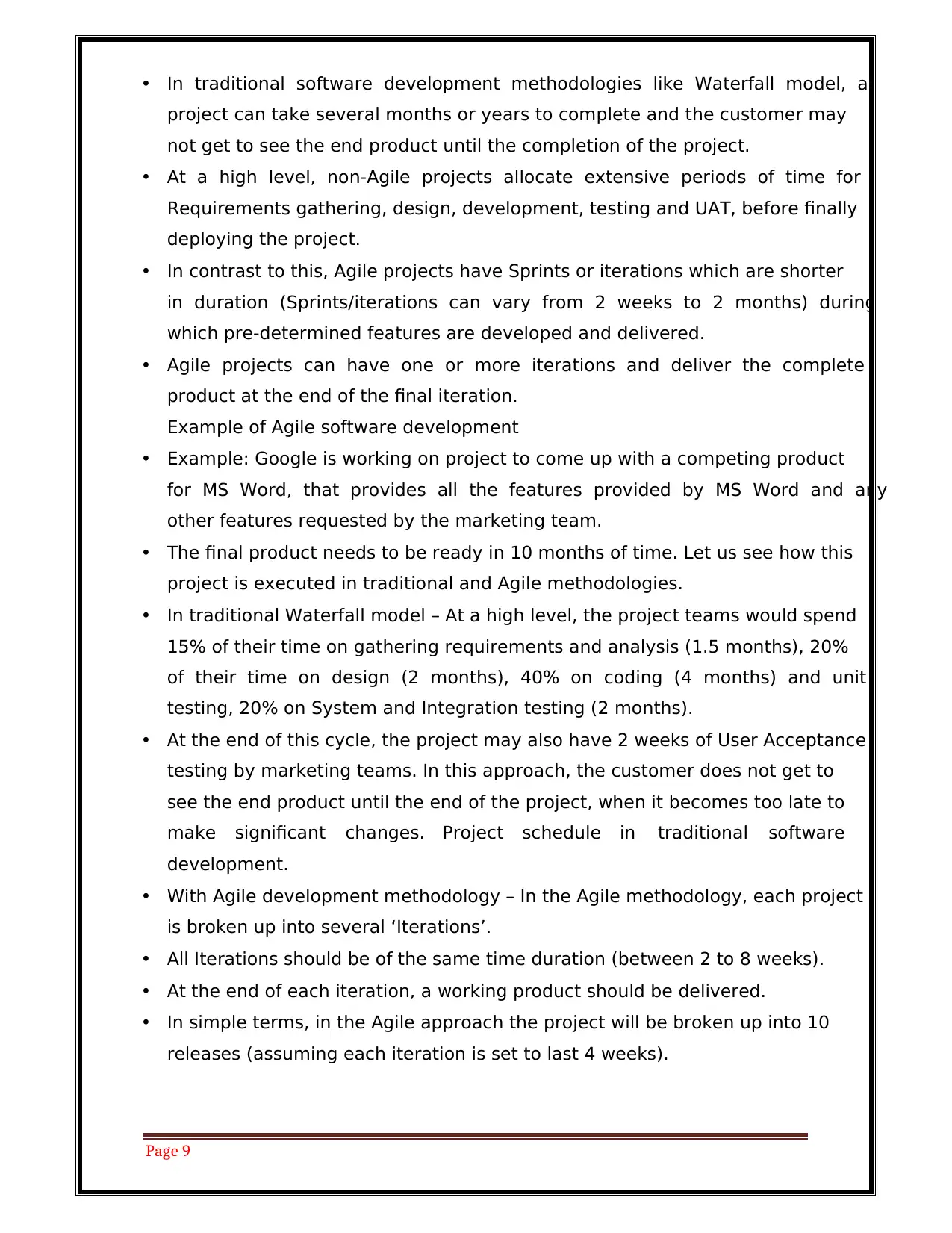

Waterfall vs Agile

The waterfall methodology is a sequential design process, meaning each

phase is started and completed before moving on to the next. Once a phase

is completed, developers can’t go back to a previous step.

• Project planning consists of three main building blocks: scope (requirements), resources (software development budget) and time. With waterfall, the scope is fixed while the budget and time are flexible, to make sure all required functionality is delivered. This means that the project isn't considered finished until it meets all requirements. Agile development, on the other hand, is quality-driven, meaning that developers aim at maximizing value while sticking to a fixed budget and schedule.
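Because agile planning fixes the budget and schedule and lets scope flex, a common way to estimate cost is per sprint: a stable team costs roughly the same every sprint, so cost follows from how many sprints the backlog needs. The sketch below assumes the backlog is sized in story points and that the team's velocity (points completed per sprint) is known from earlier sprints; story points and velocity are common agile conventions not described in the text, and all figures are hypothetical.

```python
import math


def agile_cost(backlog_points: int, velocity: int, sprint_weeks: int,
               team_size: int, weekly_rate: int) -> tuple[int, int, int]:
    """Return (sprints, calendar weeks, cost) for delivering the backlog.

    velocity    -- story points the team completes per sprint (from history)
    weekly_rate -- cost of one team member for one week (hypothetical unit)
    """
    if velocity <= 0:
        raise ValueError("velocity must be positive")
    sprints = math.ceil(backlog_points / velocity)  # a partial sprint rounds up
    cost_per_sprint = team_size * weekly_rate * sprint_weeks
    return sprints, sprints * sprint_weeks, sprints * cost_per_sprint


# Hypothetical project: 240 points, velocity 30, 2-week sprints, 5 people.
print(agile_cost(240, 30, 2, 5, 2000))
```

Re-running the estimate after each sprint with the observed velocity is one way to let the forecast tighten as the project proceeds.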


• In traditional software development methodologies like Waterfall model, a

project can take several months or years to complete and the customer may

not get to see the end product until the completion of the project.

• At a high level, non-Agile projects allocate extensive periods of time for

Requirements gathering, design, development, testing and UAT, before finally

deploying the project.

• In contrast to this, Agile projects have Sprints or iterations which are shorter

in duration (Sprints/iterations can vary from 2 weeks to 2 months) during

which pre-determined features are developed and delivered.

• Agile projects can have one or more iterations and deliver the complete

product at the end of the final iteration.

Example of Agile software development

• Example: Suppose Google is working on a project to build a product that competes with MS Word, providing all the features of MS Word plus any other features requested by the marketing team.

• The final product needs to be ready in 10 months. Let us see how this project is executed in the traditional and Agile methodologies.

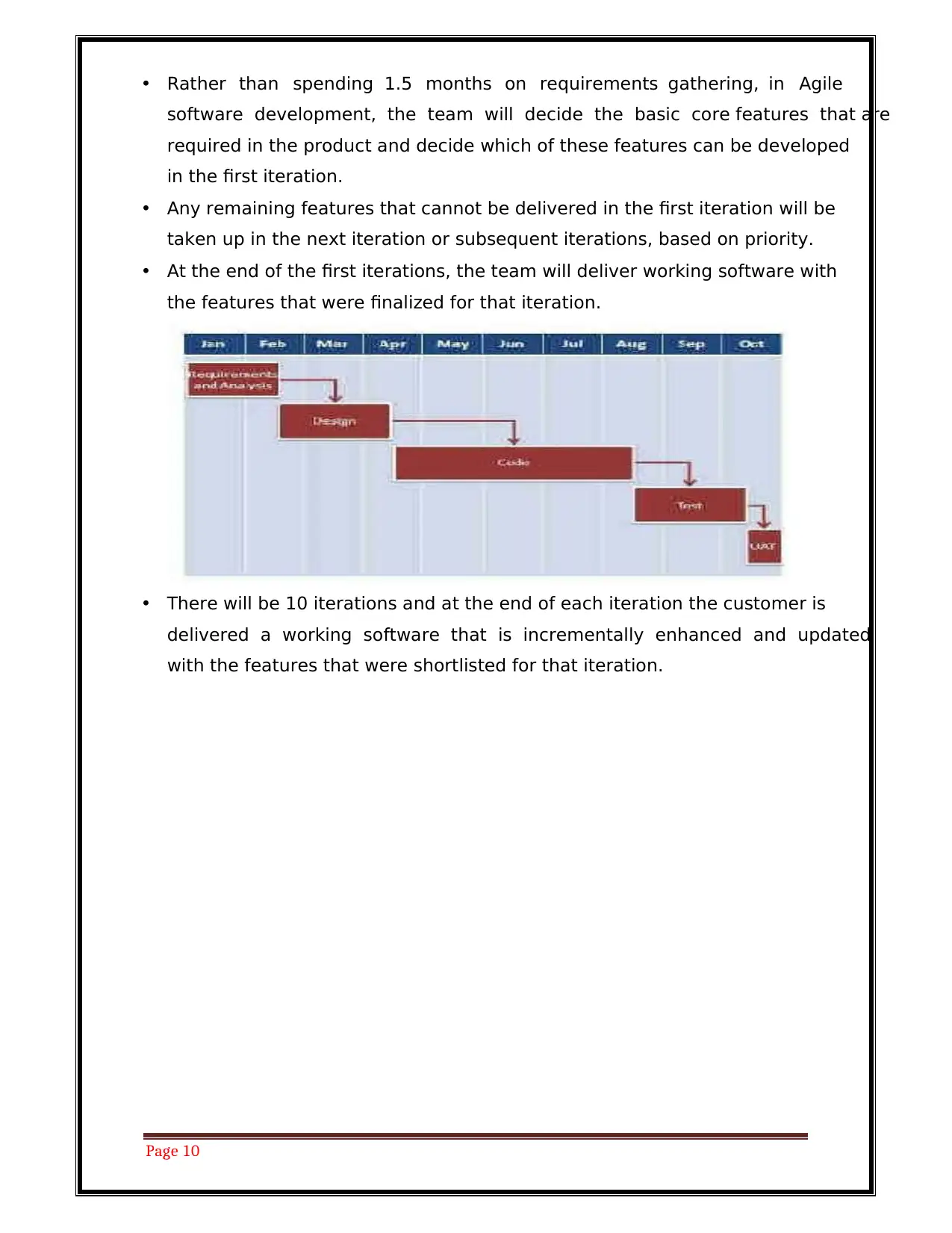

• In the traditional Waterfall model, at a high level, the project team would spend 15% of its time on requirements gathering and analysis (1.5 months), 20% on design (2 months), 40% on coding and unit testing (4 months) and 20% on system and integration testing (2 months).

• At the end of this cycle, the project may also have 2 weeks of user acceptance testing (UAT) by the marketing team. In this approach, the customer does not get to see the end product until the end of the project, when it is too late to make significant changes.

Project schedule in traditional software development.
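The percentages in the waterfall example can be checked against the 10-month deadline: the four phases take 95% of the time (9.5 months), and the 2 weeks of UAT fill the remaining half month, assuming a month of roughly four weeks.

```python
# Check the waterfall example: phase shares of a 10-month schedule.
TOTAL_MONTHS = 10
phases = {
    "requirements and analysis": 0.15,
    "design": 0.20,
    "coding and unit testing": 0.40,
    "system and integration testing": 0.20,
}
UAT_MONTHS = 0.5  # assumption: 2 weeks of UAT counted as half a month

durations = {name: share * TOTAL_MONTHS for name, share in phases.items()}
for name, months in durations.items():
    print(f"{name:32s} {months:4.1f} months")
total = sum(durations.values()) + UAT_MONTHS
print(f"{'total including UAT':32s} {total:4.1f} months")
```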

• In the Agile methodology, by contrast, the project is broken up into several iterations.

• All iterations should be of the same duration (between 2 and 8 weeks).

• At the end of each iteration, a working product should be delivered.

• In simple terms, in the Agile approach the project will be broken up into 10 releases, assuming each iteration is set to last 4 weeks.


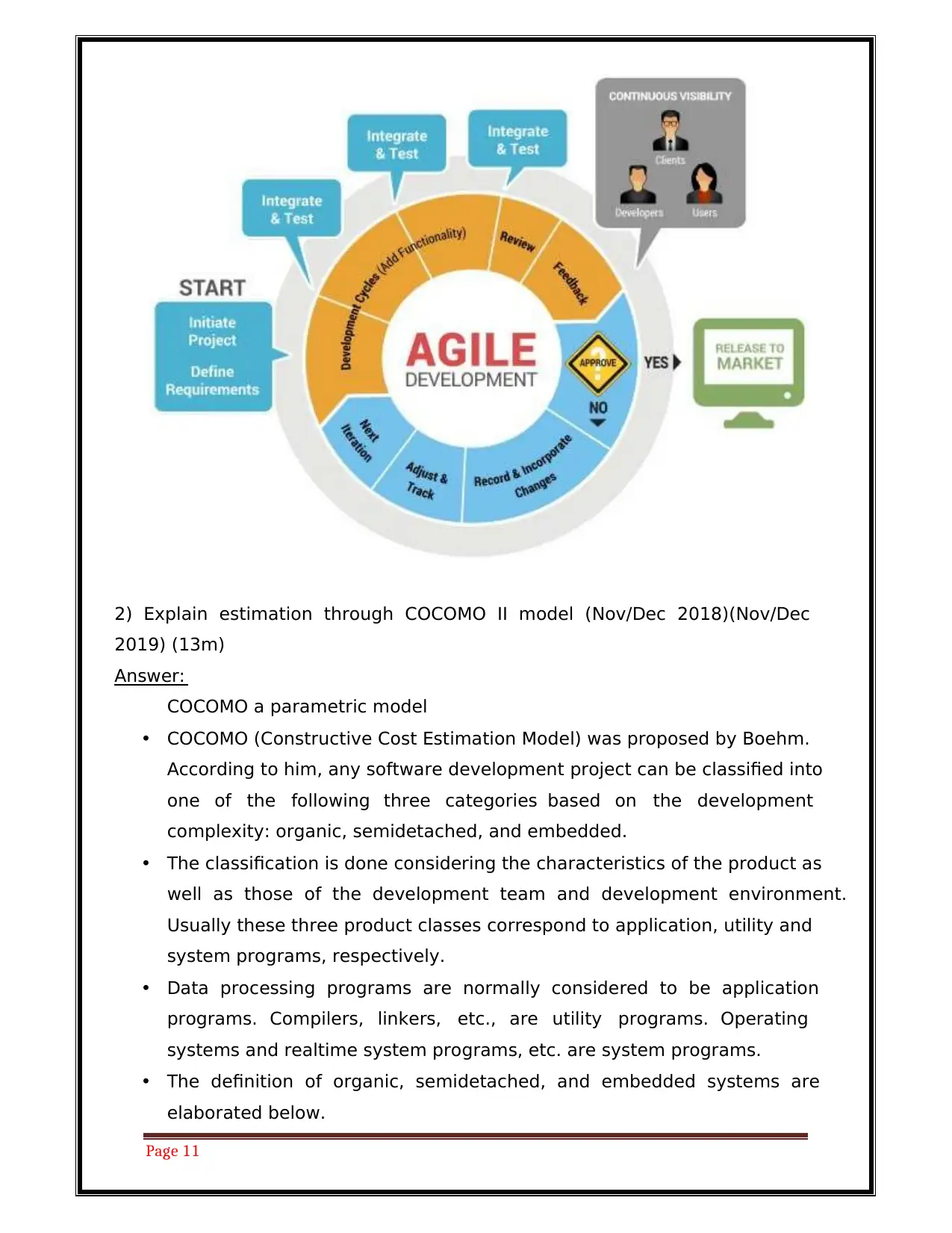

• Rather than spending 1.5 months on requirements gathering, in Agile

software development, the team will decide the basic core features that are

required in the product and decide which of these features can be developed

in the first iteration.

• Any remaining features that cannot be delivered in the first iteration will be

taken up in the next iteration or subsequent iterations, based on priority.

• At the end of the first iteration, the team will deliver working software with the features that were finalized for that iteration.

• There will be 10 iterations, and at the end of each iteration the customer is delivered working software that is incrementally enhanced and updated with the features shortlisted for that iteration.
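The prioritization described above can be sketched as a simple greedy planner: features are taken in priority order and packed into fixed-capacity iterations, and anything that does not fit moves to the next iteration. The feature names, sizes and capacity are hypothetical, and real iteration planning involves negotiation rather than a fixed rule.

```python
def plan_iterations(features, capacity):
    """Assign features to iterations in priority order.

    features -- list of (name, priority, size); a lower priority number
                means more important.
    capacity -- total size the team can complete in one iteration.
    """
    iterations, current, used = [], [], 0
    for name, _priority, size in sorted(features, key=lambda f: f[1]):
        if size > capacity:
            raise ValueError(f"{name!r} must be split: it exceeds one iteration")
        if used + size > capacity:  # no room left: start the next iteration
            iterations.append(current)
            current, used = [], 0
        current.append(name)
        used += size
    if current:
        iterations.append(current)
    return iterations


backlog = [
    ("spell check", 2, 5),
    ("open/save", 1, 4),
    ("text editing", 1, 6),
    ("comments", 3, 3),
    ("export PDF", 2, 4),
]
for number, names in enumerate(plan_iterations(backlog, capacity=10), start=1):
    print(f"Iteration {number}: {', '.join(names)}")
```

This planner keeps strict priority order, so it never pulls a small low-priority feature forward to fill a gap; that wastes some capacity but respects the ordering the customer asked for.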


2) Explain estimation through the COCOMO II model. (Nov/Dec 2018) (Nov/Dec 2019) (13m)

Answer:

COCOMO: a parametric model

• COCOMO (Constructive Cost Model) was proposed by Boehm. According to him, any software development project can be classified into one of three categories based on development complexity: organic, semidetached and embedded.

• The classification considers the characteristics of the product as well as those of the development team and development environment. Usually, these three product classes correspond to application, utility and system programs, respectively.

• Data processing programs are normally considered to be application programs. Compilers, linkers, etc., are utility programs. Operating systems, real-time system programs, etc., are system programs.

• The definitions of organic, semidetached and embedded systems are elaborated below.
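The three-mode classification belongs to the original COCOMO 81. COCOMO II, which the question names, replaces the fixed modes with an exponent computed from five scale factors (PREC, FLEX, RESL, TEAM, PMAT) and multiplies in effort multipliers for the cost drivers: $PM = A \times \text{Size}^{E} \times \prod EM_i$ with $E = B + 0.01 \sum SF_j$. The sketch below uses the published COCOMO II.2000 constants A = 2.94 and B = 0.91 and the nominal scale-factor ratings; the 100 KSLOC size and the function name are assumptions for illustration.

```python
from math import prod

A, B = 2.94, 0.91  # COCOMO II.2000 calibration constants


def cocomo2_effort(ksloc, scale_factors, effort_multipliers=()):
    """Post-architecture COCOMO II effort in person-months:
    PM = A * size**E * product(EM), where E = B + 0.01 * sum(SF)."""
    exponent = B + 0.01 * sum(scale_factors.values())
    return A * ksloc ** exponent * prod(effort_multipliers)


# Nominal ratings for the five scale factors.
nominal = {"PREC": 3.72, "FLEX": 3.04, "RESL": 4.24, "TEAM": 3.29, "PMAT": 4.68}
print(round(cocomo2_effort(100, nominal), 1))  # hypothetical 100 KSLOC project
```

Because the scale factors sit in the exponent, they matter more as the system grows, which is how COCOMO II models diseconomies of scale.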
