Regression Analysis and Statistical Inference: A Statistics Assignment

VerifiedAdded on 2023/04/26

|6

|1397

|479

Homework Assignment

AI Summary

This assignment explores a multiple regression analysis, examining the relationship between the heights of sons, fathers, and mothers. The student begins by interpreting key statistical outputs, including the standard error of estimate and the coefficient of determination, to assess the model's accura...

Running Head: STATISTICS 1

Topic: Statistics

By (Name of Student)

(Institutional Affiliation)

Topic: Statistics

By (Name of Student)

(Institutional Affiliation)

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

STATISTICS 2

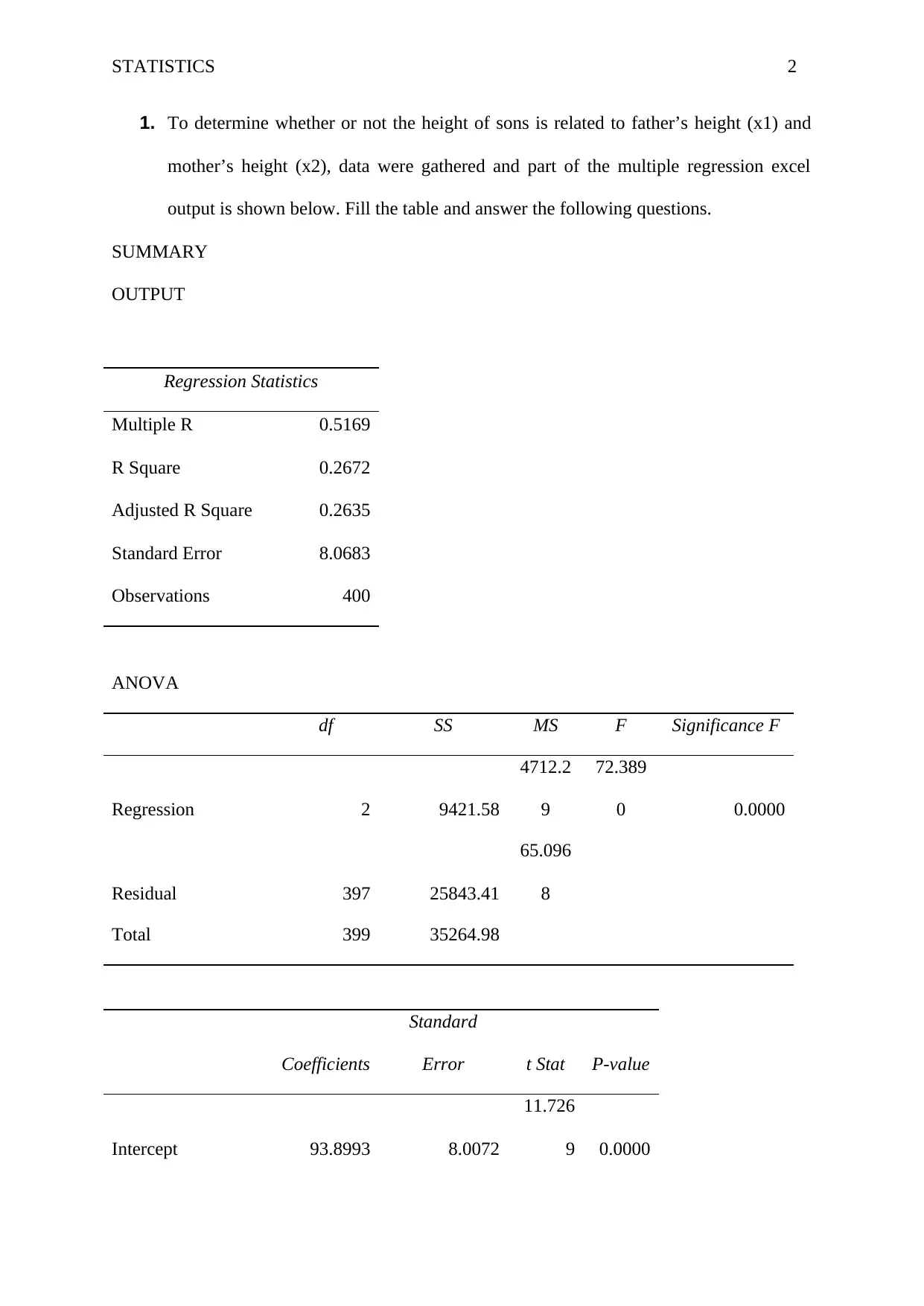

1. To determine whether or not the height of sons is related to father’s height (x1) and

mother’s height (x2), data were gathered and part of the multiple regression excel

output is shown below. Fill the table and answer the following questions.

SUMMARY

OUTPUT

Regression Statistics

Multiple R 0.5169

R Square 0.2672

Adjusted R Square 0.2635

Standard Error 8.0683

Observations 400

ANOVA

df SS MS F Significance F

Regression 2 9421.58

4712.2

9

72.389

0 0.0000

Residual 397 25843.41

65.096

8

Total 399 35264.98

Coefficients

Standard

Error t Stat P-value

Intercept 93.8993 8.0072

11.726

9 0.0000

1. To determine whether or not the height of sons is related to father’s height (x1) and

mother’s height (x2), data were gathered and part of the multiple regression excel

output is shown below. Fill the table and answer the following questions.

SUMMARY

OUTPUT

Regression Statistics

Multiple R 0.5169

R Square 0.2672

Adjusted R Square 0.2635

Standard Error 8.0683

Observations 400

ANOVA

df SS MS F Significance F

Regression 2 9421.58

4712.2

9

72.389

0 0.0000

Residual 397 25843.41

65.096

8

Total 399 35264.98

Coefficients

Standard

Error t Stat P-value

Intercept 93.8993 8.0072

11.726

9 0.0000

STATISTICS 3

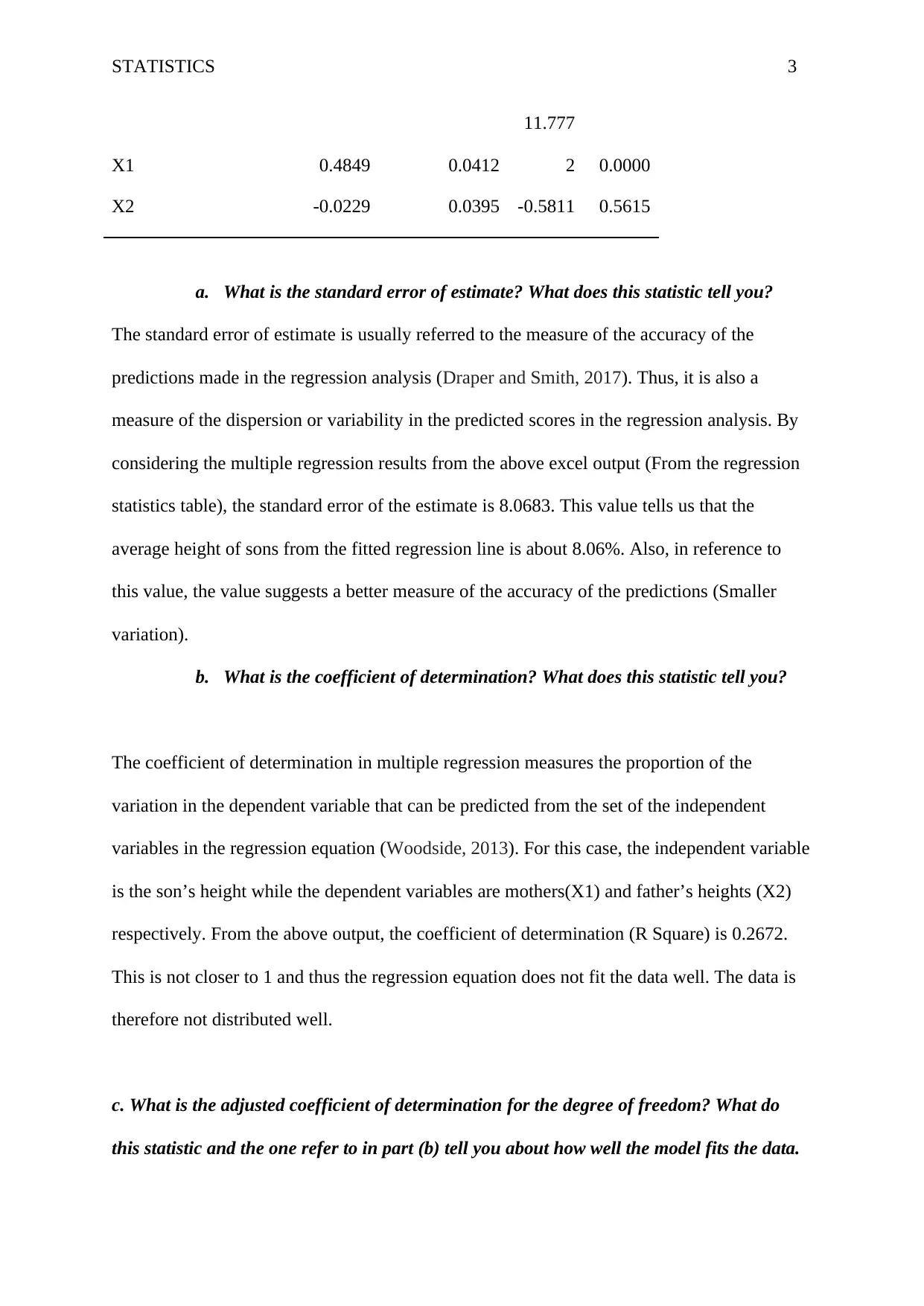

X1 0.4849 0.0412

11.777

2 0.0000

X2 -0.0229 0.0395 -0.5811 0.5615

a. What is the standard error of estimate? What does this statistic tell you?

The standard error of estimate is usually referred to the measure of the accuracy of the

predictions made in the regression analysis (Draper and Smith, 2017). Thus, it is also a

measure of the dispersion or variability in the predicted scores in the regression analysis. By

considering the multiple regression results from the above excel output (From the regression

statistics table), the standard error of the estimate is 8.0683. This value tells us that the

average height of sons from the fitted regression line is about 8.06%. Also, in reference to

this value, the value suggests a better measure of the accuracy of the predictions (Smaller

variation).

b. What is the coefficient of determination? What does this statistic tell you?

The coefficient of determination in multiple regression measures the proportion of the

variation in the dependent variable that can be predicted from the set of the independent

variables in the regression equation (Woodside, 2013). For this case, the independent variable

is the son’s height while the dependent variables are mothers(X1) and father’s heights (X2)

respectively. From the above output, the coefficient of determination (R Square) is 0.2672.

This is not closer to 1 and thus the regression equation does not fit the data well. The data is

therefore not distributed well.

c. What is the adjusted coefficient of determination for the degree of freedom? What do

this statistic and the one refer to in part (b) tell you about how well the model fits the data.

X1 0.4849 0.0412

11.777

2 0.0000

X2 -0.0229 0.0395 -0.5811 0.5615

a. What is the standard error of estimate? What does this statistic tell you?

The standard error of estimate is usually referred to the measure of the accuracy of the

predictions made in the regression analysis (Draper and Smith, 2017). Thus, it is also a

measure of the dispersion or variability in the predicted scores in the regression analysis. By

considering the multiple regression results from the above excel output (From the regression

statistics table), the standard error of the estimate is 8.0683. This value tells us that the

average height of sons from the fitted regression line is about 8.06%. Also, in reference to

this value, the value suggests a better measure of the accuracy of the predictions (Smaller

variation).

b. What is the coefficient of determination? What does this statistic tell you?

The coefficient of determination in multiple regression measures the proportion of the

variation in the dependent variable that can be predicted from the set of the independent

variables in the regression equation (Woodside, 2013). For this case, the independent variable

is the son’s height while the dependent variables are mothers(X1) and father’s heights (X2)

respectively. From the above output, the coefficient of determination (R Square) is 0.2672.

This is not closer to 1 and thus the regression equation does not fit the data well. The data is

therefore not distributed well.

c. What is the adjusted coefficient of determination for the degree of freedom? What do

this statistic and the one refer to in part (b) tell you about how well the model fits the data.

You're viewing a preview

Unlock full access by subscribing today!

STATISTICS 4

From the above output results (From the regression statistics table), the adjusted coefficient

of the determination for the degrees of freedom (Adjusted R Square) is 0.2635. Since both the

coefficient of determination and the adjusted coefficient of the determination of the degrees

of freedom are not close to one, the model thus does not fits the data well. Normally, a value

closer to 1 indicates a better fit of the data than a value not closer to 1 (Cohen et al., 2016).

d. Test the overall utility of the model. What does the test result tell you?

In testing the overall utility of the model, the F-test is applied since the F-test of the overall

significance indicates whether the linear regression model provides a better fit to the data

than a model that contains no independent variables (Todeschini et al., 2018). Thus to test the

overall utility of the model, we conduct hypothesis testing and examine the results as follows;

Stating the null and alternative hypotheses as;

Null Hypothesis (Ho): This model with independent variables fits the data well i.e.

β1 = 0 and β3 = 0

Alternative Hypothesis (Ha): This model with independent does not variables (fits

the data better than the intercept) i.e. β1 ≠ 0 and β3 ≠ 0

From the Analysis of Variance table (ANOVA table), the F-test statistics is 72.3890 with a P-

Value of 0.000. Since this P-value is less than 0.05, we reject the null hypothesis that the

regression parameters are zero at significance level 0.05. Thus it can be concluded that the

parameters are jointly statistically significant at a significance level of 0.05 and hence the

model does not fit the data well

e. Interpret each of the coefficients.

To interpret the coefficients, we consider the regression coefficient table;

The y-intercept of this regression model is 93.8993. This is the constant of the regression

model.

From the above output results (From the regression statistics table), the adjusted coefficient

of the determination for the degrees of freedom (Adjusted R Square) is 0.2635. Since both the

coefficient of determination and the adjusted coefficient of the determination of the degrees

of freedom are not close to one, the model thus does not fits the data well. Normally, a value

closer to 1 indicates a better fit of the data than a value not closer to 1 (Cohen et al., 2016).

d. Test the overall utility of the model. What does the test result tell you?

In testing the overall utility of the model, the F-test is applied since the F-test of the overall

significance indicates whether the linear regression model provides a better fit to the data

than a model that contains no independent variables (Todeschini et al., 2018). Thus to test the

overall utility of the model, we conduct hypothesis testing and examine the results as follows;

Stating the null and alternative hypotheses as;

Null Hypothesis (Ho): This model with independent variables fits the data well i.e.

β1 = 0 and β3 = 0

Alternative Hypothesis (Ha): This model with independent does not variables (fits

the data better than the intercept) i.e. β1 ≠ 0 and β3 ≠ 0

From the Analysis of Variance table (ANOVA table), the F-test statistics is 72.3890 with a P-

Value of 0.000. Since this P-value is less than 0.05, we reject the null hypothesis that the

regression parameters are zero at significance level 0.05. Thus it can be concluded that the

parameters are jointly statistically significant at a significance level of 0.05 and hence the

model does not fit the data well

e. Interpret each of the coefficients.

To interpret the coefficients, we consider the regression coefficient table;

The y-intercept of this regression model is 93.8993. This is the constant of the regression

model.

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

STATISTICS 5

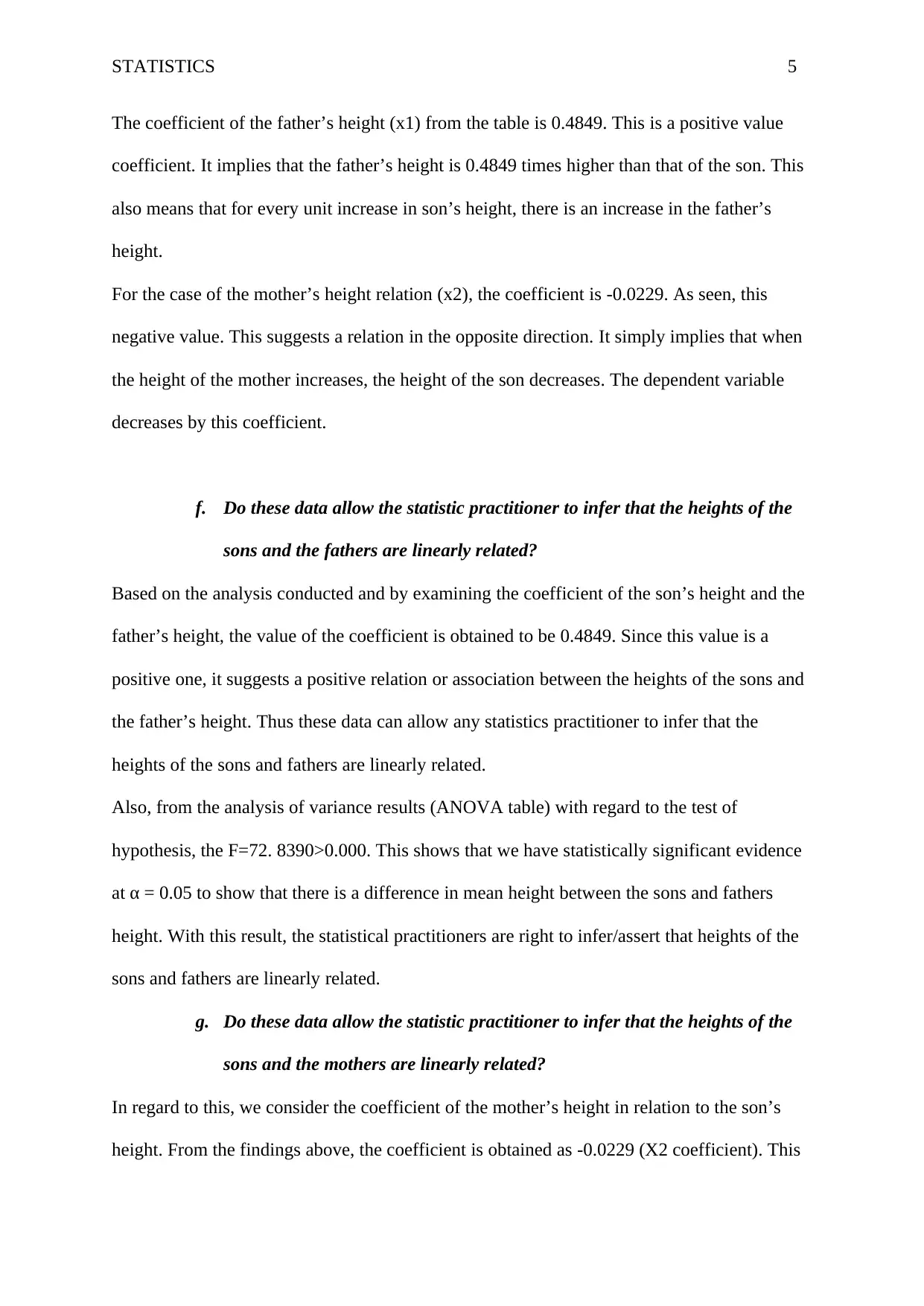

The coefficient of the father’s height (x1) from the table is 0.4849. This is a positive value

coefficient. It implies that the father’s height is 0.4849 times higher than that of the son. This

also means that for every unit increase in son’s height, there is an increase in the father’s

height.

For the case of the mother’s height relation (x2), the coefficient is -0.0229. As seen, this

negative value. This suggests a relation in the opposite direction. It simply implies that when

the height of the mother increases, the height of the son decreases. The dependent variable

decreases by this coefficient.

f. Do these data allow the statistic practitioner to infer that the heights of the

sons and the fathers are linearly related?

Based on the analysis conducted and by examining the coefficient of the son’s height and the

father’s height, the value of the coefficient is obtained to be 0.4849. Since this value is a

positive one, it suggests a positive relation or association between the heights of the sons and

the father’s height. Thus these data can allow any statistics practitioner to infer that the

heights of the sons and fathers are linearly related.

Also, from the analysis of variance results (ANOVA table) with regard to the test of

hypothesis, the F=72. 8390>0.000. This shows that we have statistically significant evidence

at α = 0.05 to show that there is a difference in mean height between the sons and fathers

height. With this result, the statistical practitioners are right to infer/assert that heights of the

sons and fathers are linearly related.

g. Do these data allow the statistic practitioner to infer that the heights of the

sons and the mothers are linearly related?

In regard to this, we consider the coefficient of the mother’s height in relation to the son’s

height. From the findings above, the coefficient is obtained as -0.0229 (X2 coefficient). This

The coefficient of the father’s height (x1) from the table is 0.4849. This is a positive value

coefficient. It implies that the father’s height is 0.4849 times higher than that of the son. This

also means that for every unit increase in son’s height, there is an increase in the father’s

height.

For the case of the mother’s height relation (x2), the coefficient is -0.0229. As seen, this

negative value. This suggests a relation in the opposite direction. It simply implies that when

the height of the mother increases, the height of the son decreases. The dependent variable

decreases by this coefficient.

f. Do these data allow the statistic practitioner to infer that the heights of the

sons and the fathers are linearly related?

Based on the analysis conducted and by examining the coefficient of the son’s height and the

father’s height, the value of the coefficient is obtained to be 0.4849. Since this value is a

positive one, it suggests a positive relation or association between the heights of the sons and

the father’s height. Thus these data can allow any statistics practitioner to infer that the

heights of the sons and fathers are linearly related.

Also, from the analysis of variance results (ANOVA table) with regard to the test of

hypothesis, the F=72. 8390>0.000. This shows that we have statistically significant evidence

at α = 0.05 to show that there is a difference in mean height between the sons and fathers

height. With this result, the statistical practitioners are right to infer/assert that heights of the

sons and fathers are linearly related.

g. Do these data allow the statistic practitioner to infer that the heights of the

sons and the mothers are linearly related?

In regard to this, we consider the coefficient of the mother’s height in relation to the son’s

height. From the findings above, the coefficient is obtained as -0.0229 (X2 coefficient). This

STATISTICS 6

is a negative coefficient. This suggests that there is a negative relationship between the

variables. This basically means that the height of the son is negatively related to the height of

the mother. Hence it would be appropriate for the statistics practitioner to infer that the

heights of the sons and the height of the mothers are linearly related or associated.

References

Draper, N. R., & Smith, H. (2017). Applied regression analysis (Vol. 326). John Wiley &

Sons.

Cohen, P., West, S. G., & Aiken, L. S. (2016). Applied multiple regression/correlation

analysis for the behavioral sciences. Psychology Press.

Todeschini, R., Consonni, V., Mauri, A., & Pavan, M. (2018). Detecting “bad” regression

models: multicriteria fitness functions in regression analysis. Analytica Chimica

Acta, 515(1), 199-208.

Woodside, A. G. (2013). Moving beyond multiple regression analysis to algorithms: Calling

for adoption of a paradigm shift from symmetric to asymmetric thinking in data

analysis and crafting theory. John Wiley & Sons.

is a negative coefficient. This suggests that there is a negative relationship between the

variables. This basically means that the height of the son is negatively related to the height of

the mother. Hence it would be appropriate for the statistics practitioner to infer that the

heights of the sons and the height of the mothers are linearly related or associated.

References

Draper, N. R., & Smith, H. (2017). Applied regression analysis (Vol. 326). John Wiley &

Sons.

Cohen, P., West, S. G., & Aiken, L. S. (2016). Applied multiple regression/correlation

analysis for the behavioral sciences. Psychology Press.

Todeschini, R., Consonni, V., Mauri, A., & Pavan, M. (2018). Detecting “bad” regression

models: multicriteria fitness functions in regression analysis. Analytica Chimica

Acta, 515(1), 199-208.

Woodside, A. G. (2013). Moving beyond multiple regression analysis to algorithms: Calling

for adoption of a paradigm shift from symmetric to asymmetric thinking in data

analysis and crafting theory. John Wiley & Sons.

You're viewing a preview

Unlock full access by subscribing today!

1 out of 6

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

© 2024 | Zucol Services PVT LTD | All rights reserved.