The advent of AWS to Data Management in the 24th Century

Added on 2023/01/11






Abstract

Data management refers to the administrative process of acquiring, validating, storing, protecting and processing data so that it is accessible, reliable and timely for its users. Enterprises use big data to inform business decisions. This gives them deeper insight into trends, opportunities and customer behaviour, which they can use to create exceptional customer experiences. Organisations face various challenges while delivering their services in the market, so data must be managed adequately if sound decisions are to be made. As technology has evolved, data management has also changed considerably, both in the issues it faces and in the solutions designed to address them.

Amazon Web Services (AWS) provides on-demand services to firms, supplying primitive distributed computing and technical infrastructure as building blocks and tools. AWS offers firms more than an option for data management. It also provides low cost, high elasticity, agility, flexibility, security, backup, application hosting, databases and many other features. In addition, its pay-per-use model helps firms expand their operations and functions in the global market. Organisations face several challenges while delivering their services, including integration, storage, infrastructure cost, security, migration, lack of training and skilled workforce, and analysis. Moreover, the volume of data is continuously increasing. Firms must therefore adopt adequate measures and techniques to analyse it and make knowledge-based decisions.


Table of Contents

Abstract............................................................................................................................................2

Title: “The advent of AWS to Data Management in the 24th Century”...........................................1

Chapter 1..........................................................................................................................................1

Introduction......................................................................................................................................1

Background of the research....................................................................................................3

Problem statement..................................................................................................................4

Research aim..........................................................................................................................4

Research Objectives...............................................................................................................5

Research Questions................................................................................................................5

Statement of Hypothesis.........................................................................................................5

Rationale of the study.............................................................................................................5

Significance of the study........................................................................................................6

Structure of the dissertation....................................................................................................6

Summary..........................................................................................................................................8

Chapter 2..........................................................................................................................................9

Literature Review.............................................................................................................................9

Introduction......................................................................................................................................9

Review...........................................................................................................................................10

History of data management...............................................................................................10

Identify challenges faced by Amazon in data management...............................................16

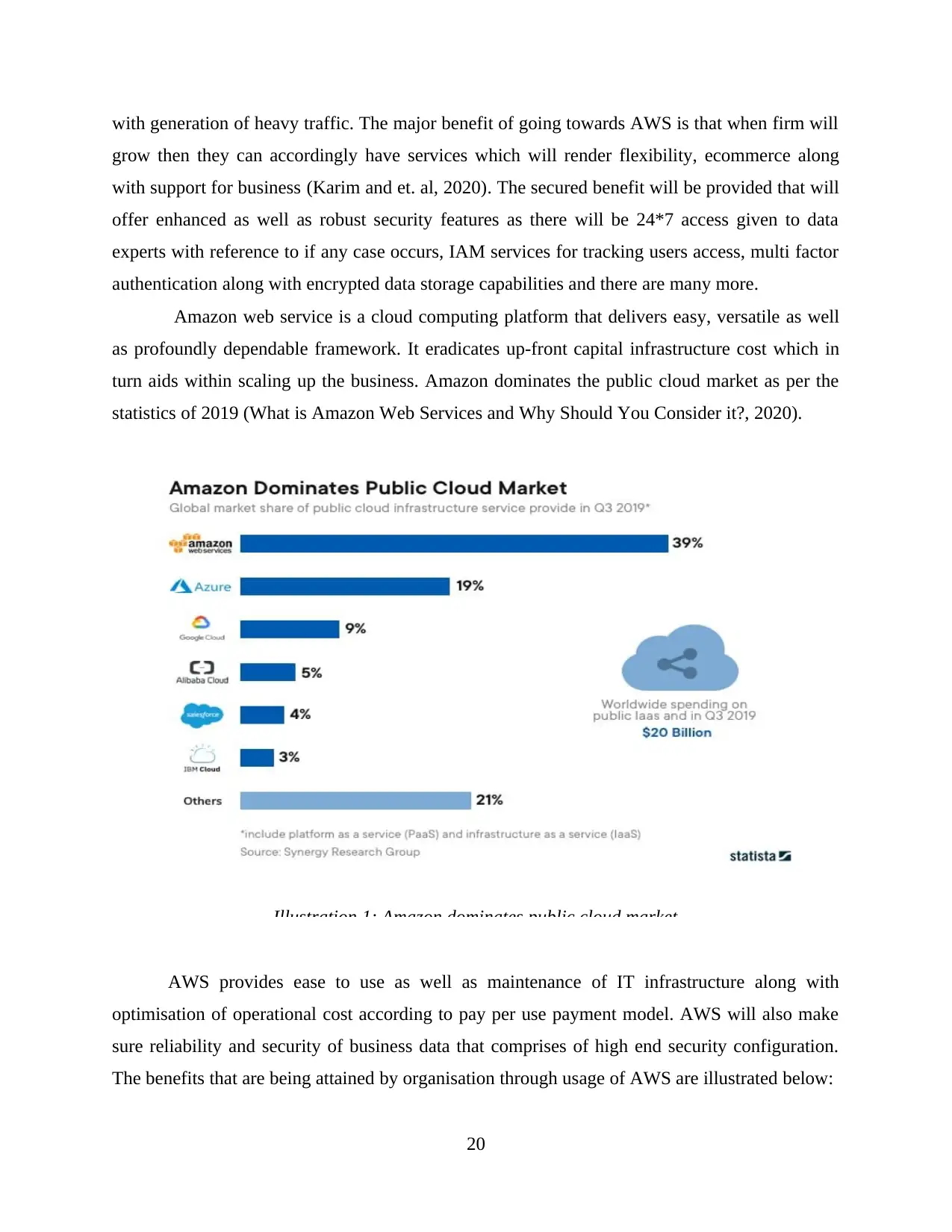

Benefits collected by Amazon by using AWS (cloud computing) in data management...19

Ways through which AWS help in business expansion by effective utilisation of data

management.........................................................................................................................23

Summary........................................................................................................................................27

Chapter 3........................................................................................................................................28

Research Methodology..................................................................................................................28

Introduction....................................................................................................................................28

Methodologies................................................................................................................................28

Summary........................................................................................................................................36


Chapter 4........................................................................................................................................37

Implementation..............................................................................................................................37

Introduction....................................................................................................................................37

Themes...........................................................................................................................................37

Theme 1: Illustrate the history of data management?..........................................................37

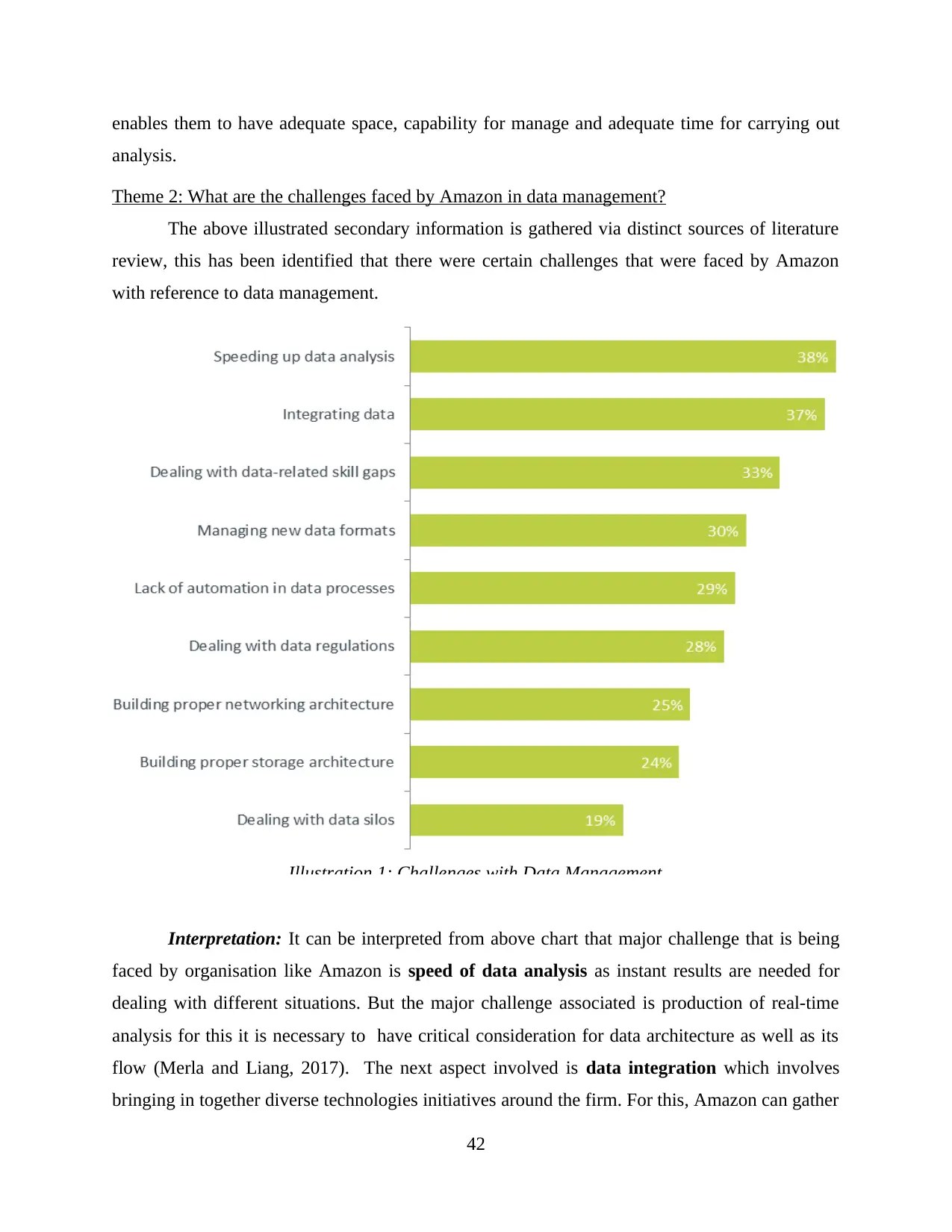

Theme 2: What are the challenges faced by Amazon in data management?.......................40

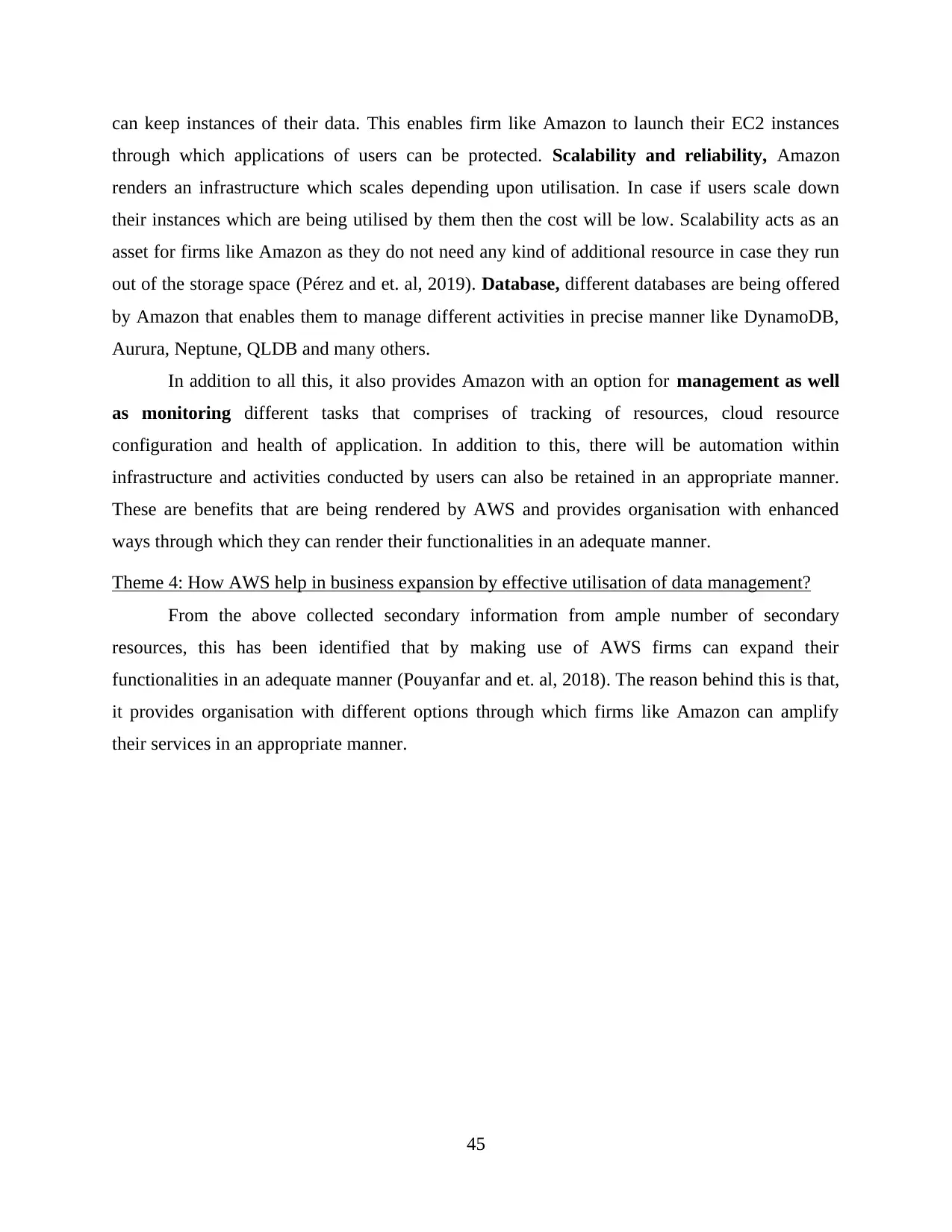

Theme 3: Depict benefits collected by Amazon through usage of AWS (cloud computing) in

data management?................................................................................................................41

Theme 4: How AWS help in business expansion by effective utilisation of data management?

..............................................................................................................................................43

Summary........................................................................................................................................45

Chapter 5........................................................................................................................................46

Conclusion and Recommendation.................................................................................................46

Introduction....................................................................................................................................46

Overview of dissertation.......................................................................................................46

Recommendation...........................................................................................................................47

Summary........................................................................................................................................47

References......................................................................................................................................49



Title: “The advent of AWS to Data Management in the 24th Century”

Chapter 1

Introduction

Data management is the administrative process of acquiring, validating, storing, protecting and processing the data that a firm creates and collects. It is a critical part of deploying the IT systems that run business applications. It also supplies the analytical information that business managers, corporate executives and other end users rely on for operational decision-making and strategic planning (Demirbas, 2020). The data management process brings together distinct functions that aim to ensure that data in corporate systems is accurate, accessible and available. As technology has evolved, many improvements have been made in the ways firms process and use data to deliver their functions. AWS (Amazon Web Services) provides on-demand cloud computing platforms and APIs to companies, governments and individuals. This cloud service furnishes an abstract technical infrastructure along with the building blocks and tools of distributed computing. This dissertation sets out the challenges the firm faced before cloud computing existed and the potential impact of cloud computing on its functions.

Data management can be defined as an administrative process in which data or information is stored, managed, protected and processed in order to improve its reliability, accessibility and timeliness for users. The term is broad and somewhat ambiguous, and denotes the development of policies, procedures, architectures and practices for managing the data lifecycle (Kaoudi, Manolescu and Zampetakis, 2020). Five areas of data management are described below:

Cloud data management: the process of integrating data from an ecosystem of cloud applications. Data intake, storage and processing all take place within cloud-based storage.

ETL and data integration: loading data from source systems into the data warehouse, then transforming, summarising and aggregating it into a format suitable for in-depth analysis.


Master data management: a method for managing an organisation's critical data, such as accounts, parties and customers involved in business transactions, in a standardised manner that prevents duplication across the organisation (Mbelli, 2019).

Reference data management: reference data denotes the permissible values used by other data fields, such as lists of countries, cities, postal codes, product serial numbers and regions. It may be provided externally or maintained within the organisation.

Data analytics and visualisation: the process of handling data from data warehouses or big data sources to carry out advanced analytics. It enables analysts to slice and dice the data and present it in visualisations and dashboards.
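The ETL, master data and reference data ideas above can be illustrated with a minimal Python sketch that uses only the standard library. Everything in it is a hypothetical assumption for illustration and is not part of any AWS service or of Amazon's own practice. This covers the CSV extract, the field names (`customer_id`, `email`, `country`, `order_total`), the allowed-country set, and the choice of a normalised email as the matching key. The load step is reduced to producing a per-country summary that a warehouse table could receive.

```python
import csv
import io
from collections import defaultdict

# Hypothetical reference data: the permissible values for the country field.
ALLOWED_COUNTRIES = {"GB", "IN", "US"}

# Hypothetical raw extract, e.g. a CSV export from a source system.
RAW_CSV = """customer_id,email,country,order_total
1,Alice@Example.com ,gb,120.50
2,bob@example.com,US,80.00
3,alice@example.com,GB,30.00
4,carol@example.com,XX,55.25
"""


def extract(text):
    """Extract: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: standardise fields and reject rows failing the reference-data check."""
    clean, rejected = [], []
    for row in rows:
        record = {
            "email": row["email"].strip().lower(),
            "country": row["country"].strip().upper(),
            "order_total": float(row["order_total"]),
        }
        if record["country"] in ALLOWED_COUNTRIES:
            clean.append(record)
        else:
            rejected.append(row)
    return clean, rejected


def master_customers(records):
    """Master data: keep one record per customer, keyed on the normalised email."""
    masters = {}
    for r in records:
        masters.setdefault(r["email"], {"email": r["email"], "country": r["country"]})
    return masters


def aggregate_for_load(records):
    """Aggregate order totals per country; writing them to a warehouse is omitted."""
    totals = defaultdict(float)
    for r in records:
        totals[r["country"]] += r["order_total"]
    return dict(totals)


rows = extract(RAW_CSV)
clean, rejected = transform(rows)
masters = master_customers(clean)
summary = aggregate_for_load(clean)
print(len(masters), len(rejected), summary)
```

In this sketch the reference-data check rejects the row with country `XX`. The two Alice rows collapse into one master record because their emails match after trimming and lower-casing. Real master data management tools use much richer matching rules.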

Given such massive quantities of data, suitable tools are needed for each of the areas described above. Firms that deal with large amounts of data must store it, sift through it and analyse it routinely, and managing it in the cloud supports this work (Nagpure et al., 2019). Tools that firms can use include Amazon Web Services, Microsoft Azure, Google Cloud and Panoply. This dissertation focuses on Amazon Web Services (AWS), which offers an ever-expanding set of tools that can be combined into an effective cloud data management stack. The key services are:

Amazon S3: the Simple Storage Service, delivered by AWS, provides object storage through a web service interface. It can store any kind of object and supports backup, internet applications, disaster recovery, data lakes for analytics, data archives and general cloud storage. It can also serve as temporary or intermediate (staging) storage.

Amazon Glacier: a secure, durable and low-cost storage class for data archiving and long-term backup. It is designed for long-term storage of infrequently accessed information, for which a retrieval latency of around 3 to 5 hours is acceptable.

AWS Glue: a fully managed extract, transform and load (ETL) service. It makes it simple and cost-effective to categorise data, clean it, enrich it and move it reliably between different data stores and data streams (Roe, 2020).
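The division of labour between S3 and Glacier described above is commonly automated with an S3 lifecycle rule, which moves objects to an archival storage class once they reach a set age. The sketch below only builds such a rule as a Python dictionary in the shape accepted by the S3 lifecycle API, and it never contacts AWS. The prefix `backups/`, the 90-day transition and the seven-year expiry are hypothetical values chosen for illustration. The `GLACIER` storage-class name is used for the Glacier tier.

```python
import json


def glacier_lifecycle_rule(prefix, days_to_glacier, expire_after_days=None):
    """Build one S3 lifecycle rule that moves objects under `prefix` to Glacier.

    Optionally the rule also deletes objects once they reach `expire_after_days`.
    """
    if days_to_glacier < 0:
        raise ValueError("days_to_glacier must be non-negative")
    rule = {
        "ID": f"archive-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        # After this many days, objects are transitioned to the Glacier storage class.
        "Transitions": [{"Days": days_to_glacier, "StorageClass": "GLACIER"}],
    }
    if expire_after_days is not None:
        # Sanity check of this sketch only: expiry should come after archiving.
        if expire_after_days <= days_to_glacier:
            raise ValueError("expiry must come after the Glacier transition")
        rule["Expiration"] = {"Days": expire_after_days}
    return rule


# Hypothetical policy: archive backups after 90 days, delete them after 7 years.
config = {"Rules": [glacier_lifecycle_rule("backups/", 90, 365 * 7)]}
print(json.dumps(config, indent=2))
```

With AWS credentials configured, a dictionary like this could be applied through boto3. An example is `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket="example-bucket", LifecycleConfiguration=config)`, where `example-bucket` is a placeholder name.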


In the present context, the researcher has considered Amazon, an American multinational technology company that focuses on e-commerce, digital streaming, artificial intelligence and cloud computing. It was founded on 5 July 1994 by Jeff Bezos and is headquartered in Seattle, US. It delivers its services worldwide and has approximately 840,400 employees. It is regarded as one of the most influential cultural and economic forces in the world and as one of its most valuable brands. It is also known for disruptive technological innovation. Amazon provides an AI assistant, live streaming, a cloud computing platform and an online marketplace. It is the world's largest provider of cloud computing services in the infrastructure-as-a-service (IaaS) and platform-as-a-service (PaaS) segments.

Background of the research

Data management plays an important role in how an organisation conducts its operations. Organisations use it to make sense of the vast quantities of information they have already collected, storing and managing that data on an effective platform with suitable data management solutions. With its help, an organisation can carry out essential functions in less time. Data management is the practice of collecting, keeping and using data in a secure, efficient and cost-effective manner (Roh, Heo and Whang, 2019). It aims to help organisations, individuals and connected things make optimal use of data within the bounds of regulations and policies. Decisions can then be made and acted upon appropriately, so that the organisation maximises its benefits. In the present scenario, firms need a suitable data management solution that lets them manage data efficiently across distinct tiers. Data management systems are solutions that help manage data more effectively and efficiently across a diverse but unified data tier. These systems are built on data management platforms that include databases, data warehouses, big data management systems, data analytics tools and so on. All these components work together like a data utility to deliver the required management capabilities. However, when manual interventions are carried out, the chances of error increase. Newer systems such as AWS, big data platforms and cloud computing aim to ensure that the information stored


within them is precise, is available as per the requirements and can accessed easily through

which overall functionalities of firms can be amplified. The basic reason behind utilising new

systems like big data, AWS including cloud computing is that it helps in storing the data in a

precise manner which improve the overall functionality of an organisation at the time of

conducting different range of operations that would help in profit maximization (Sudmanns and

et. al, 2019).

Problem statement

Information is a critical asset that enables every organisation to deliver its services accurately (Sanchez, Beak and Saxena, 2019). For instance, if an organisation does not have information about its clients' preferences, there is a high probability that clients will not regard its services as adequate, because their requirements may not have been addressed. It is therefore important to maintain information; otherwise the human resources, raw materials, costs and effort the organisation invests are wasted because they are directed the wrong way. Accurate results or outcomes therefore depend on adequate information. Firms delivered their services before the evolution of this technology as well, but they faced certain challenges in doing so (Sanchez, Beak and Saxena, 2019). For example, Amazon, like other organisations, previously relied on manual methods to deliver its services. These methods required considerable effort and carried a high probability of errors and redundancy, higher costs, and time-consuming cross-verification of transactions. Over time, the evolving services offered by Amazon Web Services are expected to furnish firms with precise tools for dealing with information.

Research aim

This is one of the most important sections of the dissertation, as it helps the researcher conduct the complete research and its associated activities in a systematic and planned manner so that the intended outcome can be attained. A research aim is a predetermined statement on which all activities in the research depend (SGIT, 2019). It guides the research project and helps the researcher analyse the research effectively, using distinct methods and measures, to accomplish its purpose. The researcher's goal is to achieve the aim by carrying out the objectives and to ensure that the targets of the research are adequately attained. The aim is a crucial element because it states the research issue in the form of a statement. In addition, it serves as a measurable reference point for identifying the exact issues that must be resolved to attain a particular outcome. This gives the researcher a clear direction for arriving at the final outcomes. The proposed aim of the research is stated below:

“To identify the challenges faced by a company in data management before cloud computing: a case study on Amazon.”

Research Objectives

1. To study the history of data management.

2. To identify the challenges faced by Amazon in data management.

3. To determine the benefits Amazon has gained by using AWS (cloud computing) for data management.

4. To classify the ways in which AWS helps business expansion through effective use of data management.

Research Questions

1. What is the history of data management?

2. What challenges has Amazon faced in data management?

3. What benefits has Amazon gained by using AWS (cloud computing) for data management?

4. How does AWS help business expansion through effective use of data management?

Statement of Hypothesis

H0: New data management methodologies and tools lead organisations to error-free results with minimal redundancy and high accuracy.

H1: New data management tools are not adequate compared with old tools in terms of errors and accuracy, and they also lead to duplication of information.

Rationale of the study

The present research will provide insight into the challenges organisations faced in delivering their services before the advent of this technology. Technology became necessary because manual delivery of services caused various problems, and the study is carried out to identify these problems. The dissertation also aims to provide insight into the different tools associated with AWS, so that readers gain in-depth knowledge and can identify the tool that suits them (Smith et al., 2019). The study will illustrate the challenges associated with data management and the ways in which AWS resolves them, which in turn enables firms to expand their operations. For example, an e-commerce firm that wants to increase its product portfolio needs additional storage space to match. AWS, a service provided by Amazon, can supply that storage, and the firm can obtain more space as its requirements grow. The benefits of using AWS will be analysed to determine whether they are suitable for dealing with data management problems.

Significance of the study

The present dissertation emphasises the challenges organisations faced when adequate data management tools were not available. The study will provide insight into the problems they faced, how these problems were eliminated through the use of AWS, and how firms expanded their operations through appropriate data management methods (Sudmanns et al., 2019). The research will provide knowledge about the advent of this technology in the 21st century. It will also illustrate the different data management tools provided by Amazon and the ways in which they minimise the associated problems. The research will help identify the exact need for such tools, so that organisations can deliver their services adequately. It will also help them maximise overall productivity, since they will have a platform that provides accurate details of the operations they conduct. This matters because such a platform minimises redundant information and the need to cross-verify data manually, stores transactions automatically and thereby reduces human effort (Sun and Scanlon, 2019). The study will also furnish knowledge about the technologies firms use to gain a competitive edge through specific tools.
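To make the points about redundancy and cross-verification more concrete, the following Python sketch shows how an automated system might flag duplicate transaction records and reconcile an order ledger against a payment ledger, a check that would otherwise be done by hand. It is a minimal illustration under stated assumptions: the record layout (transaction ID plus amount in cents), the sample data and the function names `find_duplicates` and `reconcile` are hypothetical, and this is not a description of how Amazon or AWS actually performs such checks.

```python
from collections import Counter

# Hypothetical transaction records: (transaction_id, amount_in_cents).
orders = [("T1", 2500), ("T2", 1299), ("T2", 1299), ("T3", 4000)]
payments = [("T1", 2500), ("T2", 1299), ("T3", 3900), ("T4", 500)]


def find_duplicates(records):
    """Return transaction IDs that appear more than once (redundant entries)."""
    counts = Counter(txn_id for txn_id, _ in records)
    return sorted(txn_id for txn_id, n in counts.items() if n > 1)


def reconcile(ledger_a, ledger_b):
    """Cross-verify two ledgers keyed by transaction ID.

    Returns the IDs missing from either ledger and the IDs whose amounts
    disagree. Duplicate IDs collapse to a single entry (the last one wins),
    so run find_duplicates first if redundancy itself must be reported.
    """
    a = dict(ledger_a)
    b = dict(ledger_b)
    return {
        "missing_in_b": sorted(a.keys() - b.keys()),
        "missing_in_a": sorted(b.keys() - a.keys()),
        "amount_mismatch": sorted(k for k in a.keys() & b.keys() if a[k] != b[k]),
    }


print(find_duplicates(orders))  # ['T2']
print(reconcile(orders, payments))
# {'missing_in_b': [], 'missing_in_a': ['T4'], 'amount_mismatch': ['T3']}
```

Keying both ledgers by transaction ID turns the manual cross-check into set operations, so a mismatch or missing entry is found in a single pass rather than by comparing records one at a time.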

Structure of the dissertation

The research depends on an adequate framework, which the researcher must follow to complete the research successfully. A suitable, well-designed structure is crucial for the effective implementation of the research activities and for the presentation of the work (Taori and Dasararaju, 2019). In the present case, the investigator will follow the structure illustrated below:

Chapter 1: Introduction

The research work begins with the introduction, which provides in-depth insight into the chosen area of study. The research variables are presented as dependent and independent variables, which must be clearly identified. The chapter also sets out the aims and objectives, which derive from the issue to be explored and give the investigator relevant guidance. It is the initial step through which the researcher identifies the rationale behind the research.

Chapter 2: Literature review

This chapter follows the introduction. As the second chapter of the dissertation, it benefits the researcher by gathering relevant data and information on the challenges organisations experienced in managing data before the advent of AWS (Wingerath, Ritter and Gessert, 2019). In this chapter, information is collected from sources such as journals, books, published articles and other published material. This provides a strong foundation for the dissertation and helps ensure that the proposed aims and objectives can be attained within the specified time span.

Chapter 3: Research Methodology

This is the next chapter of the dissertation and follows the literature review. It plays an important role in ensuring that the research methodologies are applied in the right direction. It covers the tools and techniques used to collect and analyse data so that valid outcomes can be produced. It is a primary concern for the researcher, since the chosen methodologies determine whether relevant results are produced on the topic.

Chapter 4: Data Analysis on Case Study

This is a critical part of the research work, because in this chapter the data gathered during the investigation will be analysed against the research questions and objectives. It presents the findings and analyses the perspectives of work conducted by different authors and researchers. The chapter will help the researcher present the results in a relevant format, so that readers gain a clear understanding of the particular study area.

Chapter 5: Conclusion and Recommendations

This is the last chapter of the dissertation, in which conclusions and implications will be drawn with reference to the research aim and objectives and the particular research issue studied. A suitable summary will set out the key understanding gained about data management and its associated challenges, followed by a summarised discussion of the likely consequences of the research. In addition, relevant recommendations will be given on how organisations can make improvements by using AWS tools effectively to manage data and deliver enhanced services.

Summary

This chapter has given a brief overview of the dissertation topic, namely the challenges firms faced before the advent of this technology. This is a critical aspect that an organisation must handle to ensure that it can deliver its services accurately. It is also important for firms to keep records of the activities and strategies they use, as these records assist them in making decisions. The chapter has also set out the aim and objectives on which the entire dissertation is based, together with an overview of what the other chapters will contain.

The next chapter is the literature review, in which the work of various authors is studied to identify gaps, so that the researcher can review the research topic adequately. This will give the investigator relevant information on what has already been studied and which critical aspects still need to be studied.


Chapter 2

Literature Review

Introduction

This chapter covers the views of different researchers and scholars that provide insight into the aim and objectives of the research. It draws on a range of secondary sources, including articles, the organisation's website, past reports, journals, newspapers and online sources, to identify, measure and evaluate the research questions and objectives (Xu et al., 2019). This section plays a critical role in any research, as it helps the researcher develop deeper knowledge of the selected area or topic. Because the section brings together the perspectives of diverse authors and researchers, it becomes easier for the researcher to draw valid results for the specific research problem. In this research, the researcher will use secondary sources such as journals, books, online articles and other published information to reach valid conclusions.

This section presents a detailed review of the literature on the data management challenges firms faced before the advent of cloud computing. It is divided into several parts, which are explained in turn (Demirbas, 2020). The first part covers the history of data management and shows how information was managed before the technological advances. The second part examines the challenges Amazon faced in dealing with huge amounts of information. The third part illustrates the benefits a firm gains from adopting Amazon Web Services for data management. The last part specifies how AWS helps firms expand their operations through effective data management. Analysing the information presented in this section will make it easier for the researcher to identify the challenges organisations faced before cloud computing reached the market (Kaoudi, Manolescu and Zampetakis, 2020), and thus to address the results of the research appropriately.


Review

History of data management

According to da Silva and Nascimento (2020), data management is the process of ingesting, storing, organising and maintaining the data that a firm creates and collects. It is the practice of collecting, keeping and using data efficiently, securely and cost-effectively. Its goal is to help people and firms make optimal use of data within the boundaries of their policies and regulations, so that they can formulate decisions and take precise actions that benefit the firm (da Silva and Nascimento, 2020). A robust data management strategy is becoming more essential as organisations increasingly rely on intangible assets to create value. Data management involves a wide range of tasks, practices, policies and procedures. These include creating, accessing and updating data across distinct data tiers; storing data across multiple clouds and on premises; providing high availability and disaster recovery; ensuring data privacy and the security of the data stored in the system; and destroying data in accordance with retention schedules and compliance requirements. These tasks have come to the fore because the privacy policies followed by organisations have not always been effective, which can result in damage to data when it is used to meet a particular aim or objective and, in turn, in negative outcomes.
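As an illustration of the retention-schedule task listed above, the following Python sketch decides which records are due for destruction on a given date. It is a minimal sketch under stated assumptions: the record categories, the retention periods in `RETENTION_SCHEDULE` and the function `records_due_for_destruction` are hypothetical examples, not requirements taken from the cited sources, Amazon or any regulation. On AWS, a comparable policy would more typically be expressed declaratively, for example as an expiration rule in an S3 Lifecycle configuration, rather than in application code.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical retention schedule: record category -> how long it is kept.
RETENTION_SCHEDULE = {
    "transaction": timedelta(days=7 * 365),
    "web_log": timedelta(days=90),
    "marketing_contact": timedelta(days=2 * 365),
}


@dataclass
class Record:
    record_id: str
    category: str
    created: date


def records_due_for_destruction(records, today):
    """Return the IDs of records whose retention period has elapsed by `today`."""
    due = []
    for record in records:
        period = RETENTION_SCHEDULE.get(record.category)
        if period is None:
            # Unknown category: keep the record and leave it for manual review.
            continue
        if record.created + period <= today:
            due.append(record.record_id)
    return due


if __name__ == "__main__":
    sample = [
        Record("t-1", "transaction", date(2012, 1, 1)),
        Record("w-1", "web_log", date(2019, 9, 1)),
        Record("w-2", "web_log", date(2019, 12, 20)),
        Record("x-1", "unclassified", date(2000, 1, 1)),
    ]
    print(records_due_for_destruction(sample, today=date(2020, 1, 15)))
    # prints ['t-1', 'w-1']
```

Records in an unrecognised category are deliberately kept rather than destroyed, since deleting data by mistake is usually harder to recover from than retaining it for a manual compliance review.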

Data management can also be defined as an organisation's management of its data and information to provide structured and secure access and storage. It aims to organise and control data resources so that they can be easily accessed, relied upon and made available as individuals need them (Mbelli, 2019). The associated tasks include formulating and analysing data governance policies, data architecture, database management system (DBMS) integration, identification and security of data sources, storage and segregation. The first mechanical advance in data management can be traced back to the 1890s, with the invention of punch cards that recorded information on thick cards. The concept of data management was not emphasised until the 1960s, when ADPSO (the Association of Data Processing Service Organisations) began advising professionals on it. In the 1950s, managing data had already become an issue, because computers were clumsy and slow and required a huge amount of manual labour to operate (Nagpure et al.,


2019). Many computer-oriented firms devoted whole floors to warehousing and managed their data by storing it on punch cards; they also kept a floor for tabulators, sorters and banks of punch cards. Programs of that period were set up in decimal or binary form and were read from on/off toggle switches at the front of the computer, from magnetic tape or from punch cards. This was referred to as first-generation programming language (absolute machine language).

Assembly languages, or second-generation programming languages, were used as an early method of organising and managing data. They became popular because they used letters for programming instead of cryptic strings of ones and zeros (Roe, 2020). Assembly mnemonics made codes easier to remember, made programs readable and freed programmers from tedious, error-prone calculations. High-level languages came later; they were easier for human beings to interpret and allowed programmers to compose generic programs that did not depend on a particular system. Their primary purpose in this context was the organisation and management of data. The main languages used for this were:

FORTRAN: Created by IBM in the 1950s for science and engineering applications, FORTRAN is still used in weather prediction, computational fluid dynamics, finite element analysis, crystallography, computational physics and chemistry.

LISP: Introduced in 1958, LISP became popular for artificial intelligence research and pioneered a wide range of ideas in computer science, such as automatic storage management, tree data structures and dynamic typing (Roh, Heo and Whang, 2019).
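The advantage that assembly mnemonics offered over first-generation machine code can be shown with a toy assembler. The three-instruction set, its 4-bit opcodes and the `assemble` function are hypothetical and do not correspond to any real processor; the sketch only shows that a mnemonic such as `ADD` can be translated mechanically into the ones and zeros that programmers previously had to set by hand.

```python
# Hypothetical 4-bit opcodes for a toy machine (not a real instruction set).
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011}


def assemble(source: str) -> list:
    """Translate lines like 'ADD 5' into 8-bit words: 4-bit opcode + 4-bit operand."""
    words = []
    for line in source.strip().splitlines():
        mnemonic, operand = line.split()
        value = int(operand)
        if not 0 <= value < 16:
            raise ValueError(f"operand out of range for 4 bits: {value}")
        word = (OPCODES[mnemonic] << 4) | value
        words.append(format(word, "08b"))  # zero-padded binary string
    return words


program = """
LOAD 3
ADD 5
STORE 9
"""

for line, word in zip(program.strip().splitlines(), assemble(program)):
    print(f"{line:<8} -> {word}")
# LOAD 3   -> 00010011
# ADD 5    -> 00100101
# STORE 9  -> 00111001
```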

Many other languages were also used, including COBOL (Common Business-Oriented Language), BASIC (Beginner's All-purpose Symbolic Instruction Code), C and C++. Online data management followed, supporting activities such as making travel reservations and stock market trading, which must be coordinated and must manage data quickly and efficiently. Various industries conduct online transactions using online data management systems (Sanchez, Beak and Saxena, 2019). In terms of productivity, around 7.5 million sessions involving healthcare information are conducted per day. These systems allow programs to read records or files, carry out updates, as well


as send updated information to online users. Several languages are used for this purpose:

Structured query language (SQL): Developed in the 1970s, SQL focused on relational databases and provided consistent data processing by minimising duplication of data, which helped to process large amounts of data quickly and efficiently. Relational models represent both entities and their relationships in a uniform manner, so a single language can be used to define, navigate and manipulate data instead of a separate language for each task (Sanchez, Beak and Saxena, 2019). Relational algebra processes records as sets, with operators applied to entire record sets. This supports client-server computing, parallel processing and graphical user interfaces, and it allows multiple users to access the same database simultaneously.

NoSQL: NoSQL databases were designed mainly for processing and researching big data, and they are not relational databases. They are used for their high storage capacity and their ability to filter large amounts of unstructured and structured data. They give firms horizontal scalability, which allows large data-driven organisations such as the CIA, Amazon and Google to process ever-growing amounts of information. The concept has gained popularity since 2005.
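The SQL entry above notes that relational algebra applies operators to entire record sets rather than to one record at a time. A minimal Python sketch of three such operators (selection, projection and natural join) over sets of tuples is given below. The `customers` and `orders` relations and the `select`, `project` and `natural_join` helpers are hypothetical illustrations of the relational-algebra operators, not the API of any particular database.

```python
# Each relation is a pair: (tuple of attribute names, frozenset of row tuples).
# Using a set of rows mirrors the relational model, where duplicate rows collapse.


def select(relation, predicate):
    """Selection: keep the rows for which predicate(row_as_dict) is true."""
    attrs, rows = relation
    return attrs, frozenset(row for row in rows if predicate(dict(zip(attrs, row))))


def project(relation, keep):
    """Projection: keep only the named attributes; duplicate rows disappear."""
    attrs, rows = relation
    positions = [attrs.index(name) for name in keep]
    return tuple(keep), frozenset(tuple(row[i] for i in positions) for row in rows)


def natural_join(left, right):
    """Natural join: combine rows that agree on every attribute both relations share."""
    left_attrs, left_rows = left
    right_attrs, right_rows = right
    shared = [name for name in left_attrs if name in right_attrs]
    extra = [name for name in right_attrs if name not in left_attrs]
    joined = set()
    for left_row in left_rows:
        left_values = dict(zip(left_attrs, left_row))
        for right_row in right_rows:
            right_values = dict(zip(right_attrs, right_row))
            if all(left_values[name] == right_values[name] for name in shared):
                joined.add(left_row + tuple(right_values[name] for name in extra))
    return tuple(left_attrs) + tuple(extra), frozenset(joined)


customers = (
    ("cust_id", "name", "city"),
    frozenset({(1, "Asha", "London"), (2, "Ben", "Leeds"), (3, "Chen", "London")}),
)
orders = (
    ("order_id", "cust_id", "amount"),
    frozenset({(10, 1, 250), (11, 3, 40), (12, 1, 75)}),
)

# Each operator takes whole relations and returns a new relation.
london = select(customers, lambda row: row["city"] == "London")
result = project(natural_join(london, orders), ["name", "amount"])
print(sorted(result[1]))  # [('Asha', 75), ('Asha', 250), ('Chen', 40)]
print(project(london, ["city"])[1])  # frozenset({('London',)})
```

The pipeline corresponds roughly to the SQL query `SELECT name, amount FROM customers NATURAL JOIN orders WHERE city = 'London'`, although SQL tables keep duplicate rows unless `DISTINCT` is used. The nested-loop join is chosen here only because it is the simplest correct strategy; database engines typically choose among several join algorithms.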

Enterprise data warehousing: In the 1990s, this concept was widely adopted by organisations around the world and was seen as a means of dealing with data chaos through integration and subject orientation. The emphasis was on creating a single version of the truth (SGIT, 2019). It was an important step in formalising data architecture and data management practices. It also helps to resolve inconsistencies between different data sources by providing a comprehensive source of data that users can access, which reduces the complexity of tangled and fragile point-to-point interfaces between applications.
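The "single version of the truth" that a data warehouse aims for can be illustrated by consolidating conflicting records from two source systems. The source names, field values and the rule that the most recently updated value wins are all hypothetical; in practice, a warehouse applies whatever consolidation rules the organisation defines during integration.

```python
from datetime import date

# Hypothetical extracts of the same customers from two operational systems.
crm_records = [
    {"customer_id": 1, "email": "asha@old.example", "updated": date(2021, 3, 1)},
    {"customer_id": 2, "email": "ben@example.com", "updated": date(2022, 5, 9)},
]
billing_records = [
    {"customer_id": 1, "email": "asha@new.example", "updated": date(2022, 8, 15)},
    {"customer_id": 3, "email": "chen@example.com", "updated": date(2022, 1, 20)},
]


def consolidate(*sources):
    """Merge records by customer_id, keeping the most recently updated version."""
    golden = {}
    for source in sources:
        for record in source:
            key = record["customer_id"]
            current = golden.get(key)
            # Strictly newer records replace older ones; ties keep the first seen.
            if current is None or record["updated"] > current["updated"]:
                golden[key] = record
    return golden


warehouse = consolidate(crm_records, billing_records)
for customer_id in sorted(warehouse):
    print(customer_id, warehouse[customer_id]["email"])
# 1 asha@new.example
# 2 ben@example.com
# 3 chen@example.com
```

Because ties keep the record seen first, the order in which sources are passed acts as a secondary precedence rule in this sketch.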

Data lakes: In the 1990s, a "data quake" shook the foundations of data management practices, and the field became volatile and dynamic. A data lake is a core architectural component that removes the restrictions imposed by dominant database technologies such as the RDBMS (relational database management system) (Smith et al., 2019). Data lakes assist in making affirmative contributions for the


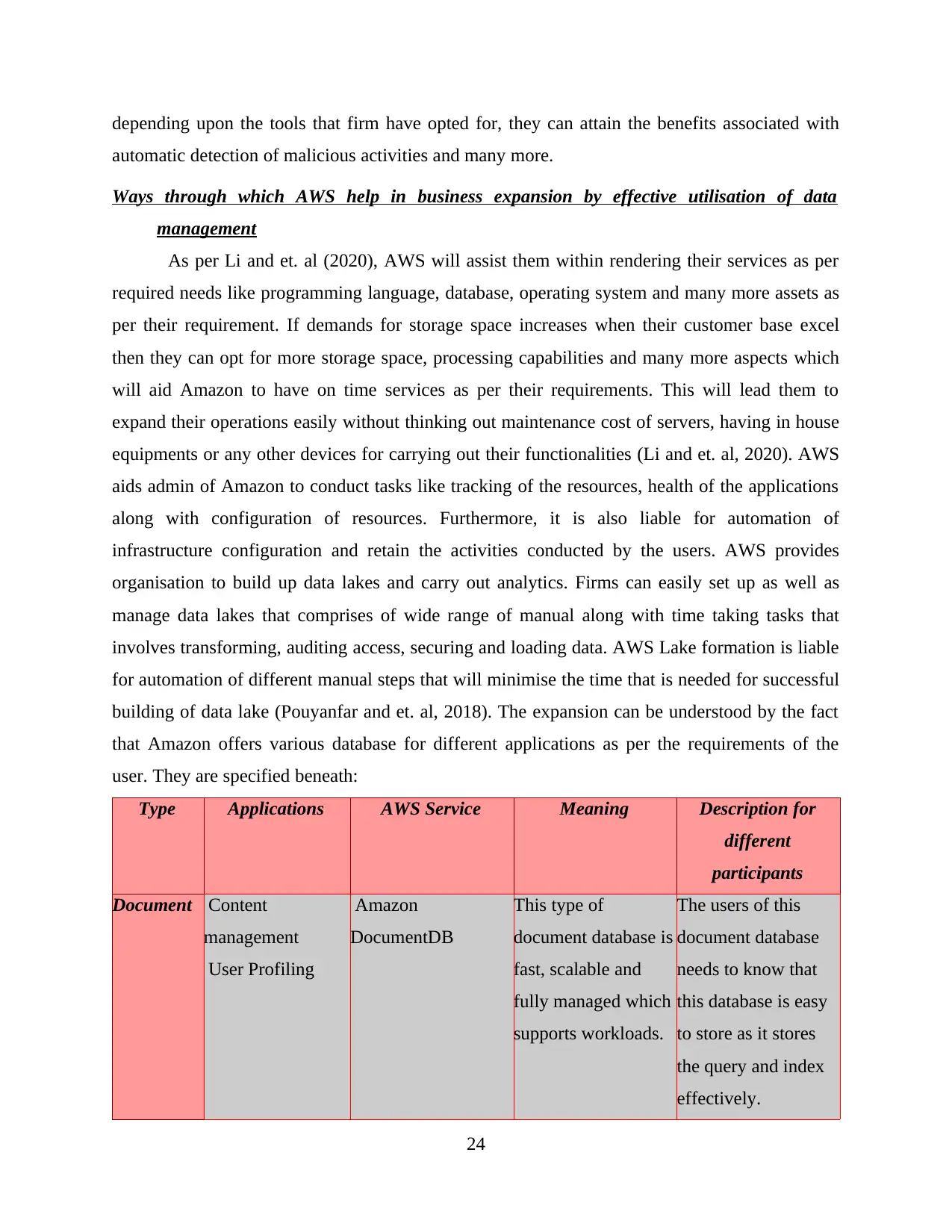

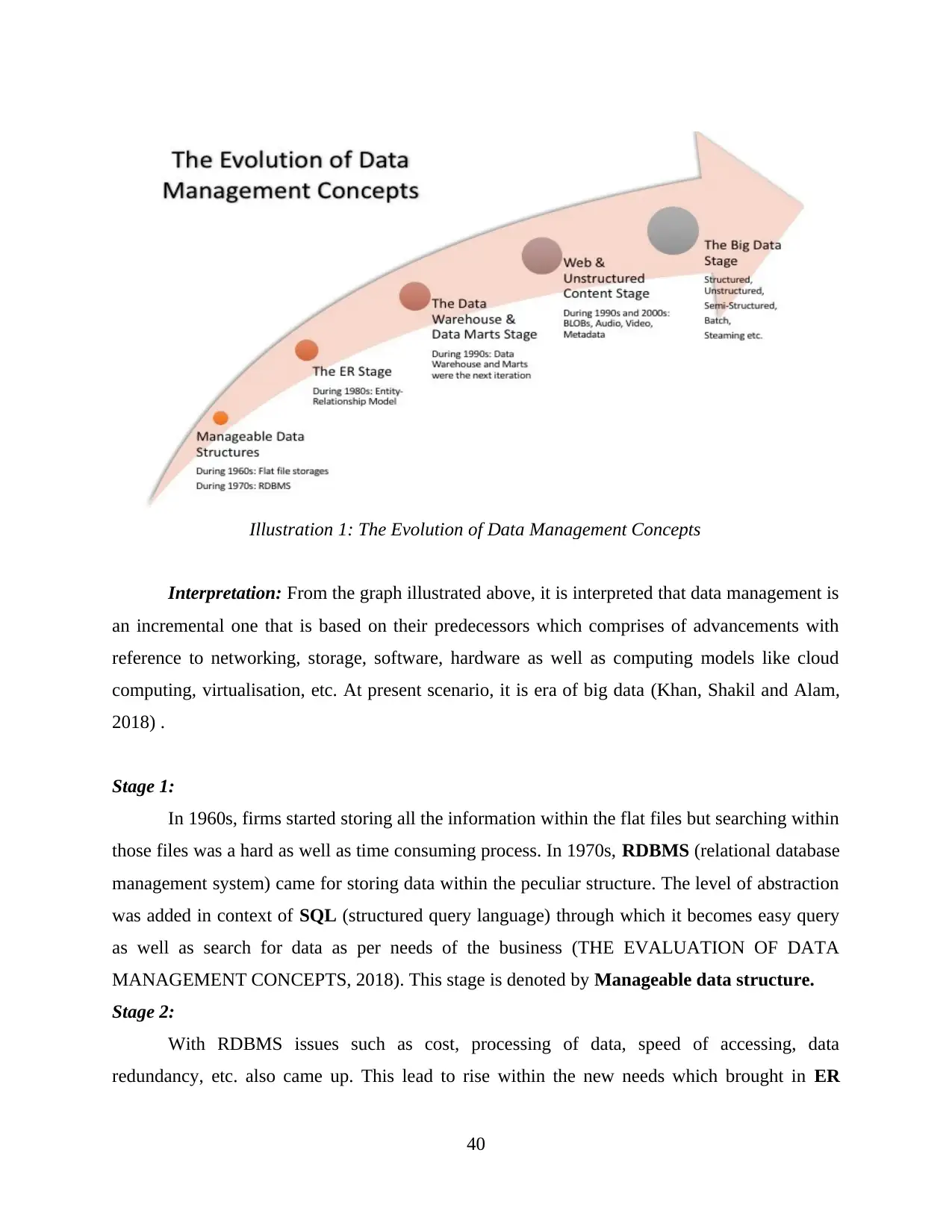
