E-Commerce User Behavior Analysis using Data Mining Techniques

Added on 2023/06/14


AI Summary

This report analyses customer behaviour on e-commerce websites, using data mining techniques to understand user preferences and patterns. It explores the evolution of e-commerce, contrasts online and offline shopping experiences, and uses Linear Temporal Logic, Computation Tree Logic and probabilistic models to evaluate website structure. An e-commerce website is developed using Java and Bootstrap to analyse customer behaviour based on factors such as product evaluation, buying behaviour and purchase decisions. The analysis involves data pre-processing with Weka, comparison with leading e-commerce sites, and application of the k-nearest neighbours (KNN) algorithm. The report also proposes a linear temporal logic model for analysing structured e-commerce web logs, converting them into event logs that capture user actions. Ultimately, the study aims to improve e-commerce efficiency by understanding and catering to user interests, contributing to faster and more reliable web services.

Users’ Behaviour Analysis in Structured E-Commerce Website

Student Name:

Register Number:

Supervisor:


Abstract

This project focuses on analysing customer behaviour on e-commerce websites. Online shopping has become extremely popular around the world because of the convenience it offers: modern e-commerce websites are efficient and powerful, and they provide a wide variety of products in one place. E-commerce is primarily focused on customer satisfaction, yet understanding the behaviour of each individual customer, which is what makes that satisfaction possible, is complicated. Users' sessions on websites are stored and can be used to identify their behavioural patterns; for example, what a customer is likely to buy can be inferred from the products they view most often. The web server stores this information in the form of web logs, and analysing these logs reveals the behaviour of the users.

This project therefore works on understanding customers and the products they are interested in, in order to satisfy them. To understand the fundamentals of e-commerce websites, an in-depth study was carried out covering how e-commerce emerged, why people prefer online shopping to offline shopping, and how existing systems work. To analyse customer behaviour on e-commerce websites, three model-checking approaches are used: a Linear Temporal Logic (LTL) model, a Computation Tree Logic (CTL) model and a probabilistic model, and the stability of each node's state is evaluated. An e-commerce website is developed to analyse customer behaviour based on the following parameters: evaluation of products and suppliers, consumer buying behaviour, the customer's needs, checking out alternative products and suppliers, and the purchase decision. The results obtained are discussed in detail. The website is developed in Java, and Bootstrap is used to make it user-friendly. Sample behavioural patterns are collected and pre-processed with the Weka tool. The analysis compares three leading e-commerce websites and examines how people perceive the look and feel of online shopping, using charts and tables to make the data easy to understand; effective comparison is therefore key to completing the analysis. To carry out the comparison, input from web analysts is used to focus on applying data mining techniques, such as the k-nearest neighbours (KNN) algorithm, to analyse user behaviour based on the sequence of actions each user performs. The resulting behavioural patterns help reveal users' interests so that they can be applied to e-commerce websites. This project proposes a linear temporal logic model to support the analysis of structured e-commerce web logs. Based on the structure of the e-commerce site, a common method for mapping log records converts them into event logs that capture users' behaviour, or actions. Predefined queries are then used to identify behavioural patterns from the actions a user performs during a session. Finally, the proposed model is applied to real case studies of different e-commerce websites. For the software development life cycle, the waterfall model is used, covering requirements gathering, planning, design, coding and testing. The results of the analysis are presented.

Keywords: E-Commerce; model; Web logs analysis; behavioural patterns; Data mining
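The abstract names the k-nearest neighbours (KNN) algorithm as one of the data mining techniques used to analyse user behaviour. The Java sketch below shows the basic idea under stated assumptions: each session is summarised as a numeric feature vector (the features pages viewed, products viewed and cart additions, and the labels "buyer" and "browser", are hypothetical), distance is Euclidean, and a new session takes the majority label of its k nearest labelled sessions. It is an illustration, not the project's implementation.

```java
import java.util.*;

/** A labelled session; features are hypothetical, e.g. {pagesViewed, productsViewed, cartAdds}. */
record LabelledSession(double[] features, String label) {}

class SessionKnn {
    // Euclidean distance between two feature vectors of equal length.
    static double distance(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }

    // Labels a new session by majority vote among its k nearest labelled sessions.
    // Ties in the vote are broken arbitrarily, so an odd k is preferable for two labels.
    static String classify(List<LabelledSession> training, double[] query, int k) {
        List<LabelledSession> sorted = new ArrayList<>(training);
        sorted.sort(Comparator.comparingDouble(s -> distance(s.features(), query)));
        Map<String, Integer> votes = new HashMap<>();
        for (LabelledSession s : sorted.subList(0, Math.min(k, sorted.size()))) {
            votes.merge(s.label(), 1, Integer::sum);
        }
        return Collections.max(votes.entrySet(), Map.Entry.comparingByValue()).getKey();
    }

    public static void main(String[] args) {
        // Toy, hand-made sessions for illustration only (not project data).
        List<LabelledSession> training = List.of(
            new LabelledSession(new double[]{12, 6, 2}, "buyer"),
            new LabelledSession(new double[]{15, 8, 3}, "buyer"),
            new LabelledSession(new double[]{3, 1, 0}, "browser"),
            new LabelledSession(new double[]{4, 2, 0}, "browser"));
        System.out.println(classify(training, new double[]{13, 7, 2}, 3)); // prints "buyer"
    }
}
```

In practice the features would need to be scaled to comparable ranges before computing distances, otherwise the feature with the largest range dominates the vote.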


Table of Contents

1. Introduction
1.1 Research Questions
1.2 Aim of the Project
1.3 Objectives
1.4 Literature Review
1.5 Research Content
1.6 Dissertation Overview
2. Background
2.1 Evolution of E-Commerce
2.2 Existing System
2.2.1 Disadvantages of Existing System
2.3 Hypothesis
2.4 External Interface Requirements
2.5 Summary
3. Related Work
3.1 Summary
4. Model Checking to Analyse Event Logs
4.1 Summary
5. Software Development Models
5.1 Waterfall Model
6. Up and Scrap
6.1 Summary
7. Implementation
8. Evaluation and Results
8.1 Data Pre-Processing
8.2 Results
8.3 Summary
9. Identifying Users’ Behavioural Patterns
9.1 Summary
10. Conclusions and Future Work


References
Appendix
List of Figures
List of Tables
List of Abbreviations


1. Introduction

Demand for buying all kinds of products on e-commerce websites keeps increasing. Despite its risks, online shopping offers customers benefits that outweigh them; the main advantages are that users can shop from the comfort of their homes and save a great deal of time. E-commerce websites offer an almost endless variety of products, including trending ones, and aim to satisfy their customers. However, understanding the behaviour of each customer is complicated, because it varies from one customer to another. Users' sessions are stored so that their behavioural patterns can be recognised, which helps answer the question: what is the user or customer likely to want to buy? This can be answered only on the basis of the products they view most often. The web server stores web logs that can be analysed to learn about user behaviour, so understanding customers' behaviour is central to this project.

This project uses Java to create the web pages and the Weka tool for data pre-processing. More than one model will be applied to several websites to support the analysis, which results in a comparison; effective comparison is therefore key to completing the analysis. To carry out the comparison, input from web analysts is used to focus on applying data mining techniques to model users' behaviour. By analysing the sequence of actions in each user session, more complex behavioural patterns can be identified, and these patterns help reveal users' interests so that they can be applied to e-commerce websites. This project proposes a linear temporal logic model to support the analysis of structured e-commerce web logs, and the proposed model is applied to real case studies of different e-commerce websites. For the software development life cycle, the waterfall model is used, covering requirements gathering, planning, design, coding and testing.
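As a rough illustration of how a linear temporal logic query can be checked against structured web logs, the sketch below evaluates one LTL-style pattern, G(addToCart -> F checkout) ("every add-to-cart is eventually followed by a checkout"), over a single session treated as a finite trace of action names. The action names are hypothetical, finite-trace semantics are assumed, and this small hand-written checker stands in for a real model checker; it is not the project's query set.

```java
import java.util.List;
import java.util.function.Predicate;

/** Minimal finite-trace evaluation of LTL-style patterns over one session's actions. */
class SessionLtl {
    // F p: p holds at some position i >= from in the trace.
    static boolean eventually(List<String> trace, int from, Predicate<String> p) {
        for (int i = from; i < trace.size(); i++) {
            if (p.test(trace.get(i))) return true;
        }
        return false;
    }

    // G (trigger -> F response): every trigger is followed, at the same or a later position, by a response.
    static boolean alwaysRespondedBy(List<String> trace, String trigger, String response) {
        for (int i = 0; i < trace.size(); i++) {
            if (trace.get(i).equals(trigger) && !eventually(trace, i, response::equals)) {
                return false;
            }
        }
        return true; // vacuously true when the trigger never occurs
    }

    public static void main(String[] args) {
        // Hypothetical session traces, for illustration only.
        List<String> converted = List.of("home", "view", "addToCart", "checkout");
        List<String> abandoned = List.of("home", "view", "addToCart", "view");
        System.out.println(alwaysRespondedBy(converted, "addToCart", "checkout")); // true
        System.out.println(alwaysRespondedBy(abandoned, "addToCart", "checkout")); // false
    }
}
```

Running the same check over every session trace and counting the failures gives one simple measure of cart abandonment.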

Improving the efficiency of the e-commerce services offered by company websites requires an in-depth understanding of response time when searching for products. Comparing our website's efficiency with that of other companies' websites is also important, as it leads to improvements in web services. Recent advances in programming languages and the internet have increased the number of e-commerce users, so reliable, fast and efficient web services are in high demand, and providing them helps attract more customers. This investigation aims to find an effective


model that increases the efficiency of product search for customers. Response time is used to compare how efficiently the websites search for products.
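Response time is the comparison measure here, so a minimal Java sketch of one way to take such a measurement may help. It assumes the search can be wrapped as a Runnable; a hypothetical in-memory catalogue search stands in for a real site's search request. Each call is timed with System.nanoTime over repeated runs, and the median is reported because it is less affected by occasional slow runs than the mean.

```java
import java.util.Arrays;
import java.util.List;

class ResponseTimer {
    // Median of the samples (sorts a copy; averages the two middle values for even lengths).
    static double median(long[] samples) {
        long[] s = samples.clone();
        Arrays.sort(s);
        int n = s.length;
        return n % 2 == 1 ? s[n / 2] : (s[n / 2 - 1] + s[n / 2]) / 2.0;
    }

    // Runs the action `runs` times and returns the median elapsed time in nanoseconds.
    static double medianNanos(Runnable action, int runs) {
        long[] samples = new long[runs];
        for (int i = 0; i < runs; i++) {
            long start = System.nanoTime();
            action.run();
            samples[i] = System.nanoTime() - start;
        }
        return median(samples);
    }

    public static void main(String[] args) {
        // Hypothetical catalogue and search, standing in for a real site's search endpoint.
        List<String> catalogue = List.of("phone case", "laptop bag", "usb cable", "phone charger");
        Runnable search = () -> catalogue.stream().filter(p -> p.contains("phone")).count();
        System.out.printf("median search time: %.0f ns%n", medianNanos(search, 101));
    }
}
```

To time a live website instead, the Runnable could send the search request with java.net.http.HttpClient (Java 11 and later); network latency would then dominate the measurements, so runs against different sites should be made from the same machine and network.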

The following factors were considered in choosing the programming language for this research:

1. Portability

2. Versatility

3. Efficiency

4. Java was designed for distributed computing, with networking capability built into the language; writing network programs in Java is much like sending and receiving data to and from a file. Security is a core part of Java's design: the language, compiler, interpreter and runtime environment were all developed with security in mind. Java is also robust, meaning reliable: it places strong emphasis on checking for possible errors early, so the Java compiler can detect many problems that in other languages would only show up during execution. Running several parts of a program simultaneously is known as multithreading; Java supports multithreaded programming directly, whereas in other languages operating-system-specific procedures have to be called to enable multithreading.

5. Java has become a language of choice for providing internet solutions worldwide because of properties such as robustness, ease of use, cross-platform capability and security.
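Item 4 notes that multithreading is built into Java instead of requiring operating-system-specific calls. As an illustration only, the sketch below uses the standard java.util.concurrent ExecutorService to count matching requests in several chunks of log lines concurrently and then sums the partial counts; the log lines and the idea of splitting logs into chunks are hypothetical examples, not part of the project.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ParallelLogCount {
    // Counts lines containing `needle` in each chunk on a thread pool, then sums the partial counts.
    static long countInParallel(List<List<String>> chunks, String needle) {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, chunks.size()));
        try {
            List<Future<Long>> parts = new ArrayList<>();
            for (List<String> chunk : chunks) {
                // Each chunk is counted as a separate task on its own worker thread.
                parts.add(pool.submit(() -> chunk.stream().filter(l -> l.contains(needle)).count()));
            }
            long total = 0;
            for (Future<Long> f : parts) total += f.get(); // get() waits for that task to finish
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<List<String>> chunks = List.of(
            List.of("GET /cart", "GET /home"),
            List.of("GET /cart", "POST /checkout"));
        System.out.println(countInParallel(chunks, "/cart")); // prints 2
    }
}
```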

1.1 Research Questions

The research questions of this dissertation are:

1) How do customers perceive the look and feel of e-commerce websites?

2) Do customers prefer online shopping to offline shopping?

1.2 Aim of the Project

The aim of this project is to analyse customer behaviour on e-commerce websites. Linear temporal logic, computation tree logic and probabilistic model checking will be used to analyse the structure of e-commerce websites with the help of web


logs, which helps in analysing the behaviour of website users. Three e-commerce websites are selected as real case studies to provide clear analysis results. Users' behaviour is captured in the stored web logs, which are then converted into event logs using a common method for mapping the log records. Various behavioural patterns are then identified using pre-defined queries over the actions users are likely to perform; these queries are defined by the researcher of this project.
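The conversion from stored web logs to event logs can be sketched in Java as follows. This is a minimal sketch under several assumptions that are not taken from the project: the logs use the Apache Common Log Format, the client IP address serves as the session key (real sessionisation usually also relies on cookies or an inactivity timeout), and a hypothetical table maps URL path prefixes to actions such as view, addToCart and checkout. Malformed lines, failed requests and unmapped paths (images, stylesheets) are dropped, and the remaining events are grouped into one ordered trace per session.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** One entry of the event log: which session performed which action, and when. */
record LogEvent(String sessionId, String timestamp, String action) {}

class LogToEvents {
    // Common Log Format: host ident user [time] "METHOD path PROTOCOL" status bytes
    private static final Pattern CLF =
        Pattern.compile("^(\\S+) \\S+ \\S+ \\[([^\\]]+)\\] \"(\\S+) (\\S+)[^\"]*\" (\\d{3}) \\S+");

    // Hypothetical mapping from URL path prefixes to user actions, checked in insertion order.
    private static final Map<String, String> ACTIONS = new LinkedHashMap<>();
    static {
        ACTIONS.put("/cart/add", "addToCart");
        ACTIONS.put("/checkout", "checkout");
        ACTIONS.put("/product", "view");
        ACTIONS.put("/search", "search");
    }

    // Maps one raw log line to an event, or returns null if it is malformed, failed or unmapped.
    static LogEvent toEvent(String line) {
        Matcher m = CLF.matcher(line);
        if (!m.find() || Integer.parseInt(m.group(5)) >= 400) return null; // drop 4xx/5xx requests
        String path = m.group(4);
        for (Map.Entry<String, String> e : ACTIONS.entrySet()) {
            if (path.startsWith(e.getKey())) return new LogEvent(m.group(1), m.group(2), e.getValue());
        }
        return null; // static assets and other paths are not user actions
    }

    // Groups mapped events into per-session action traces, preserving log order.
    static Map<String, List<String>> traces(List<String> lines) {
        Map<String, List<String>> bySession = new LinkedHashMap<>();
        for (String line : lines) {
            LogEvent ev = toEvent(line);
            if (ev != null) bySession.computeIfAbsent(ev.sessionId(), k -> new ArrayList<>()).add(ev.action());
        }
        return bySession;
    }

    public static void main(String[] args) {
        List<String> log = List.of(
            "10.0.0.1 - - [14/Jun/2023:10:00:01 +0000] \"GET /product/42 HTTP/1.1\" 200 512",
            "10.0.0.1 - - [14/Jun/2023:10:00:09 +0000] \"GET /logo.png HTTP/1.1\" 200 2048",
            "10.0.0.1 - - [14/Jun/2023:10:00:20 +0000] \"POST /cart/add HTTP/1.1\" 302 0",
            "10.0.0.2 - - [14/Jun/2023:10:01:00 +0000] \"GET /search?q=phone HTTP/1.1\" 200 900");
        System.out.println(traces(log)); // prints {10.0.0.1=[view, addToCart], 10.0.0.2=[search]}
    }
}
```

The per-session traces produced here are the kind of input that pre-defined behavioural queries would run over.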

1.3 Objectives

The objective is to analyse customers' behaviour while they shop online. This report provides an analysis of three case studies of e-commerce websites in which customers' behaviour is analysed. An online shopping website will be developed where users can view the list of products and add them to their carts. To achieve the objective, data mining will be applied, and the results will be compared by applying models such as a temporal logic model, a probabilistic decision-making model and a CLTL model. This model checking is used to analyse the efficiency of the website. Data pre-processing will then be carried out using the WEKA tool.

1.4 Literature Review

According to Bejju (2018), e-commerce is the most important catalyst in economic development. The rapid growth in the use of web-based and internet applications is reducing the operating costs of large enterprises, and many organisations have restructured their business strategies to achieve maximum value and customer satisfaction. E-commerce is not just the trading of products; it also lets businesses compete in the market in other ways, such as meeting people's needs within a short time. Organisations gain business knowledge through data mining, which extracts knowledge from the available information to help companies make correct, well-weighed decisions. E-commerce brings fundamental changes for customers. E-commerce marketing requires websites to develop a deeper understanding of e-commerce marketplaces such as Flipkart, Myntra and Amazon. This understanding is used to create interaction, reputation and trust


value in developing business strategies, which helps to build an effective user application. E-commerce websites are also used to establish a company's image and to sell and promote goods, and they make it possible to provide full customer support. In an electronic market, a successful e-commerce website is central to the success of the company, and that success depends on reducing user-perceived latency and improving quality. To reduce latency, path traversal patterns are extracted using data mining; this approach is used to predict user behaviour on e-commerce sites. The paper also uses a decision tree approach to predict the online rating of a product, and decision trees are used to visualize probabilistic business models built on attributes such as product name, online rating, quantity and product price. To retrieve knowledge from website data, classification rules are extracted. Data classification identifies the common characteristics in website data so that the data can be categorised into different groups.
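Bejju (2018) only names the extraction of path traversal patterns. As a minimal sketch of one common form of it, the maximal forward reference idea from the web usage mining literature (a swapped-in illustration, not necessarily the technique used in the paper), the Python below assumes each session is already a list of page identifiers. The function names and the single-letter page labels are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def maximal_forward_references(session: List[str]) -> List[List[str]]:
    """Split one user's page sequence into maximal forward references.

    Revisiting a page that is already on the current path counts as a
    backward move: the path is emitted if the previous move was forward,
    and is then cut back to the revisited page.
    """
    references: List[List[str]] = []
    path: List[str] = []
    moving_forward = False
    for page in session:
        if page in path:
            # Backward move: the forward path built so far is complete.
            if moving_forward:
                references.append(list(path))
            path = path[: path.index(page) + 1]
            moving_forward = False
        else:
            path.append(page)
            moving_forward = True
    # A session that ends on a forward move contributes its final path.
    if moving_forward:
        references.append(list(path))
    return references


def count_paths(sessions: List[List[str]], min_count: int) -> Dict[Tuple[str, ...], int]:
    """Count identical maximal forward references across sessions (simplified)."""
    counts = Counter(
        tuple(ref) for session in sessions for ref in maximal_forward_references(session)
    )
    return {path: n for path, n in counts.items() if n >= min_count}
```

For the session `list("ABCDCBEGHGWAOUOV")`, the first function returns the paths ABCD, ABEGH, ABEGW, AOU and AOV. `count_paths` only counts whole paths that repeat exactly; a fuller approach would also count frequent contiguous sub-paths.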

Sergio et al. (2018) describe online shopping as increasingly effective and common in daily life, and e-commerce websites as very useful to the e-commerce market. Understanding user interests and behaviour is essential for e-commerce, because it allows the website to be adapted to its users. On an e-commerce website, web server logs store information about user activity, and data mining techniques are applied to analyse this information. User behaviour, such as the number of actions performed, is described by static characterization, which is used to find more complex behavioural patterns. The paper addresses the analysis of structured e-commerce web logs using a linear temporal logic model checking approach. Data mining techniques that extract a process model usually produce either over-fitting spaghetti models or under-fitting flower models. The proposed approach represents event attributes and event types by considering the e-commerce web structure and product categorization, and it follows how users navigate the website according to its organization. The paper applies the approach to the Up and Scrap e-commerce website, where it is used to identify various issues and improve the organization's product value.
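The pipeline summarised from Sergio et al. (2018), in which web log records are mapped to event logs and then checked against temporal properties, can be sketched as follows. This is neither a model checker nor the authors' tool. It assumes a hypothetical whitespace-separated log format (`session timestamp url`) and a hypothetical URL-to-activity mapping, and it evaluates a few LTL patterns directly on finite traces.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# Hypothetical URL-prefix -> activity mapping; a real site would derive it
# from its own page structure and product categories.
ACTIVITY_RULES: List[Tuple[str, str]] = [
    ("/cart/add", "add_to_cart"),
    ("/checkout", "checkout"),
    ("/search", "search"),
    ("/product/", "view_product"),
]


def to_activity(url: str) -> str:
    """Map a requested URL to an event type using the first matching prefix."""
    for prefix, activity in ACTIVITY_RULES:
        if url.startswith(prefix):
            return activity
    return "browse"


def build_event_log(lines: List[str]) -> Dict[str, List[str]]:
    """Turn 'session timestamp url' records into one time-ordered trace per session."""
    events: Dict[str, List[Tuple[int, str]]] = defaultdict(list)
    for line in lines:
        session, timestamp, url = line.split()
        events[session].append((int(timestamp), to_activity(url)))
    return {
        session: [activity for _, activity in sorted(evs, key=lambda e: e[0])]
        for session, evs in events.items()
    }


def eventually(trace: List[str], a: str, start: int = 0) -> bool:
    """F a on a finite trace: a occurs at some position >= start."""
    return a in trace[start:]


def response(trace: List[str], a: str, b: str) -> bool:
    """G(a -> F b): b occurs at or after every position where a occurs."""
    return all(eventually(trace, b, i) for i, x in enumerate(trace) if x == a)


def precedence(trace: List[str], a: str, b: str) -> bool:
    """(not b) W a: b never occurs before the first a."""
    for x in trace:
        if x == a:
            return True
        if x == b:
            return False
    return True


def satisfaction_rate(traces: Dict[str, List[str]], prop: Callable[[List[str]], bool]) -> float:
    """Fraction of sessions whose trace satisfies the property."""
    if not traces:
        return 0.0
    return sum(1 for t in traces.values() if prop(t)) / len(traces)
```

A query such as `satisfaction_rate(traces, lambda t: response(t, "add_to_cart", "checkout"))` then gives the share of sessions in which every cart addition is eventually followed by a checkout, which is one way of expressing a pre-defined behavioural query.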

1.5 Research Content

The following research areas are required for this dissertation: developing the website, implementing the Weka tool, and analysing case studies of E-Commerce websites by comparing three other e-commerce websites to determine customer behaviour. Java servlets are required to develop the website, and working knowledge of the Weka tool is needed. In addition, the waterfall model is considered for software development, and the linear temporal logic model, the computational logic model and probabilistic model checking are also areas to be researched before proceeding with the development of the website.

1.6 Dissertation Overview

Chapter 1 introduces the whole project. It states the problem and the aim of the project, and it also contains the Literature Review, which reviews the work of various researchers that provides additional information about the topics discussed in this dissertation.

Chapter 2 briefly sketches the background for the Literature Review presented in the dissertation. This chapter forms the base of the project by explaining the concepts involved, and it presents the hypotheses of the project.

Chapter 3 provides related information for this project. The other areas covered in this chapter include data mining techniques, web usage mining, web transaction and web page clustering techniques, and an explanation of the Markov model.

Chapter 4 provides in-depth information on model checking for analysing the event logs.

Chapter 5 comprises the solution and its validation, together with a test plan for evaluating the solution.

Chapter 6 lists and discusses the strategies of Up and Scrap. It then presents the implementation of statistics mining strategies on access logs. As a whole, this chapter discusses web statistics mining.

Chapter 7 covers evaluation and results, where the output is analysed, and concludes with the results achieved.

Chapter 8 studies the identification of all the behavioural patterns of the users.

Chapter 9, the last part of this dissertation, contains the conclusion for this project. It discusses all the areas covered in order to express the purpose of this project.


2. Background

2.1 Evolution of E-Commerce

Retail business throughout the world has been revolutionized in the last two decades. It has evolved from scratch and passed through various significant milestones to reach today's E-Commerce. In its initial days, however, E-Commerce was neither popular nor widely used. People were afraid to adopt it and retailers were afraid to venture into it, because of numerous threats. The main factor affecting the E-Commerce business was that customers were not able to touch and feel the product they wished to purchase, which had a strong negative impact on E-commerce. The other major concern was a secure payment mode for purchases. Customers also doubted the trustworthiness of online shopping.

With the development of the internet and technology, website services have improved. The growth of E-Commerce is attributed to the connectivity that the internet provides between customers and the businesses that run websites. Customers have easy access to the products offered, where they can view and compare products and buy them with just a few clicks. To increase their number of customers, online retailers seek to improve their e-commerce capabilities in ways that maximize the website's responsiveness. Customers of this generation are quite savvy, because they have complete details about the products they are interested in, through the reviews and product details provided on websites.

Thus, purchasing from a particular website depends entirely on the customer. However, customers' online shopping behaviour can be studied to help them find the products they are interested in. In this internet-oriented world, customers generally browse the internet before purchasing any product, whether they then buy through online shopping or offline shopping. In terms of customer behaviour, online shopping is quite similar to offline shopping.


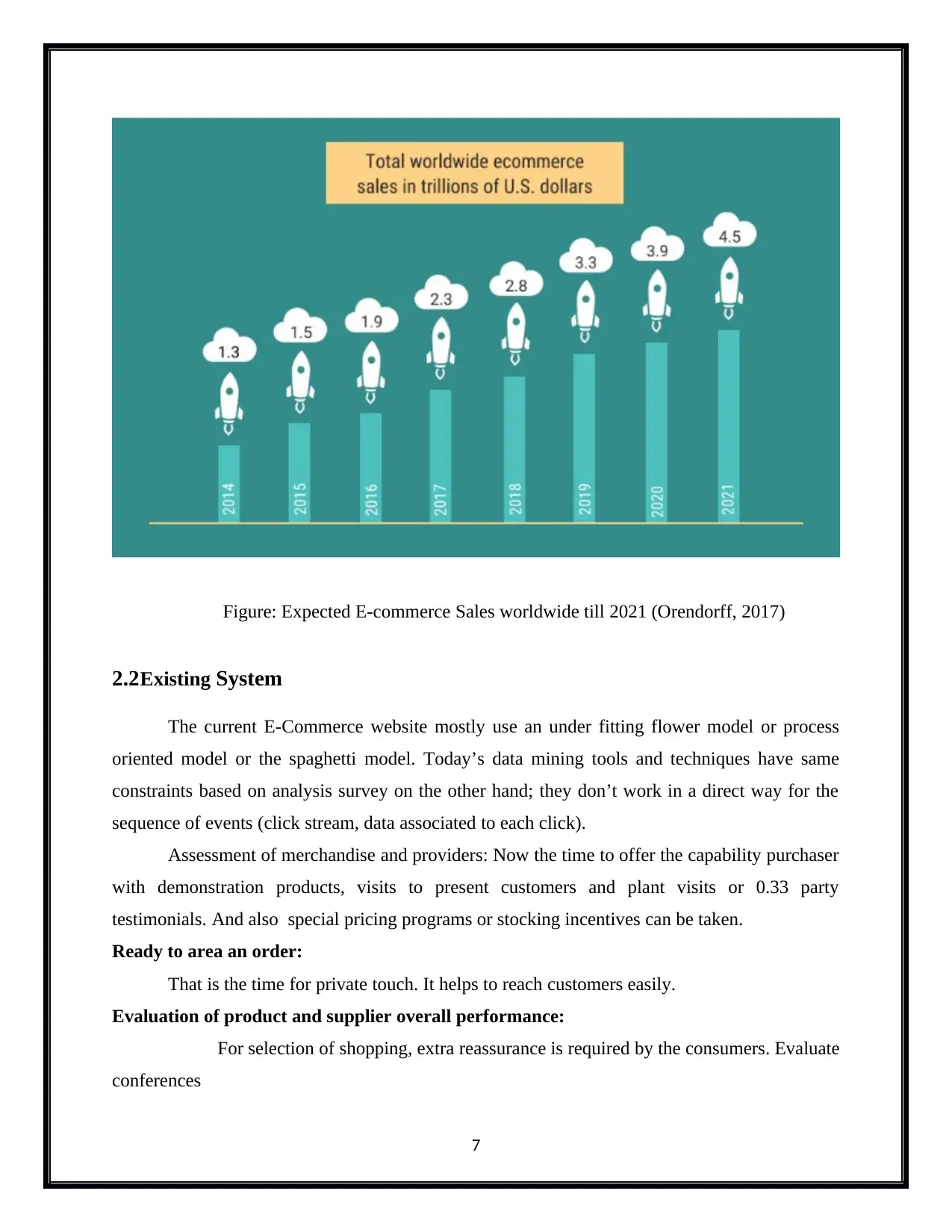

Figure: Expected E-commerce Sales worldwide till 2021 (Orendorff, 2017)

2.2 Existing System

Current analyses of E-Commerce websites mostly rely on an under-fitting flower model, a process-oriented model or a spaghetti model. Today's data mining tools and techniques have some constraints for this analysis; in particular, they do not work directly on the sequence of events (the click stream and the data associated with each click).
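To make the contrast between flower and spaghetti models concrete, process discovery often starts from a directly-follows relation over clickstream sessions. The sketch below assumes each session has already been turned into an ordered list of activity names (hypothetical here) and counts which activity immediately follows which; the `density` helper is an illustrative measure, not one defined in this document.

```python
from collections import Counter
from typing import Dict, List, Tuple

Edge = Tuple[str, str]


def directly_follows(traces: List[List[str]]) -> Counter:
    """Count how often activity a is immediately followed by activity b."""
    counts: Counter = Counter()
    for trace in traces:
        for a, b in zip(trace, trace[1:]):
            counts[(a, b)] += 1
    return counts


def filter_edges(counts: Counter, min_count: int) -> Dict[Edge, int]:
    """Keep only edges seen at least min_count times (a crude way to thin a spaghetti graph)."""
    return {edge: n for edge, n in counts.items() if n >= min_count}


def density(counts: Counter) -> float:
    """Distinct edges divided by all possible ordered pairs of activities.

    A value near 1.0 resembles a flower model, where anything can follow anything.
    """
    activities = {x for edge in counts for x in edge}
    possible = len(activities) ** 2
    return len(counts) / possible if possible else 0.0
```

On real click streams with many page types, the unfiltered graph tends to become dense and hard to read, while aggressive filtering hides genuine behaviour; this trade-off is one reason a query-based temporal logic approach is attractive.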

Assessment of products and suppliers:

This is the time to offer the potential buyer demonstration products, visits to existing customers, plant visits or third-party testimonials. Special pricing programmes or stocking incentives can also be offered.

Ready to place an order:

This is the time for a personal touch, which helps to reach customers easily.

Evaluation of product and supplier performance:

Consumers need extra reassurance about their purchase choice. Evaluation conferences and helpline support provide this reassurance, together with proper after-sales support and continued exposure to marketing and press coverage, which justify the purchase decision.

Follow up on the purchase:

The first purchase should not be seen as the end of the process, but as the start of a long-term business relationship.

Consumer buying behaviour:

There are numerous models of consumer buying behaviour, but the steps below are common to most of them.

The buyer identifies a need:

This is often initiated by PR coverage, including word of mouth. The consumer may have seen a friend or celebrity using a product or service, or awareness may have been sparked by advertising and marketing.

Searching for information:

At this stage, the customer wants to know more and looks for information. Advertising and PR are still essential, but product demonstrations, packaging and product displays play a significant role. This is the time to deploy your sales staff, and customers find videos and brochures useful.

Finding out about alternative products and suppliers:

The consumer is now choosing between products, or firming up the purchase decision. This is the stage for promoting product guarantees and warranties, and for making the most of packaging and product displays. Sales personnel strongly influence the consumer at this stage, and sales promotion offers become of interest. Independent sources of information, such as product test reviews, are still of interest.

Purchase decision:

This is the time to 'tip the balance'. Sales promotions come into their own and, if appropriate, sales force incentives help to ensure that your sales staff are motivated to close the deal.

Using the product:
