Comparative Study of KNN, J48, and Naive Bayes in Weka Environment

Added on 2023/06/07

Report

AI Summary

This report presents a comparative analysis of three classification algorithms: K-Nearest Neighbor (KNN), J48 (Decision Tree), and Naive Bayes, using the Weka data mining tool. The study utilizes the Vote.ARFF dataset and evaluates the performance of each algorithm based on metrics such as accuracy, precision, recall, and the confusion matrix. The report details the implementation of each classifier within the Weka environment, including the use of 10-fold cross-validation and the handling of missing values. The results section provides a comprehensive breakdown of each algorithm's performance, including correctly and incorrectly classified instances, precision for different classes, and cost analysis curves. The report concludes with a comparison of the algorithms, identifying J48 (Decision Tree) as the best-performing classifier for the given dataset and discussing the strengths and weaknesses of each approach, providing valuable insights for future research and application in data mining. The report highlights the importance of algorithm selection based on the specific dataset and application requirements.

Comparative Exploration of KNN, J48 and Lazy IBK Classifiers in Weka

Abstract

Classification is a data mining technique that predicts the group to which a data instance in a record belongs, subject to given constraints. The classification problem arises in several areas of data mining (Xhemali, Hinde, & Stone, 2009): given a number of feature variables and a target variable, the task is to predict the target from the features. Applications include customer targeting, medical diagnosis, social network analysis, and artificial intelligence. This article examines different classification techniques and their pros and cons. It uses the J48 (decision tree), k-nearest neighbor, and naive Bayes classification algorithms and compares them on voting preference data. The comparison covers classification accuracy and cost analysis, and the results show the efficacy and precision of the classifiers.

Table of Contents

Abstract

Introduction

K-Nearest Neighbor Classification

Naive Bayes Classification

Decision Tree

Comparison among K-NN (IBK), Decision Tree (J48) and Naive Bayes Techniques

Instrument for the Comparison

Data Exploration

Performance Investigation of the Classifiers

Classification by Naïve Bayes

K-Nearest Neighbor Classification

Decision Tree (J48) Classification

Conclusion

Reference

Introduction

In data classification, data are organized into categories according to similarity and specificity, so that objects in different groups are dissimilar and the algorithm assigns each instance in a way that minimizes the error (Brijain, Patel, Kushik, & Rana, 2014). The classification model is built from a data set and its associated class labels, and labels are then assigned according to the constraints of that model. Classification is a supervised approach that proceeds in two steps. First, a training phase, together with pre-processing, constructs the classification model. Second, the model is applied to a test data set with class variables (Jadhav & Channe, 2016). The current article explores three classification techniques in data mining. It compares K-NN classification, decision tree, and naive Bayes based on the precision of each algorithm. This comparative guide may help future researchers develop innovative algorithms in the field of data mining (Islam, Wu, Ahmadi, & Sid-Ahmed, 2007).

K-Nearest Neighbor Classification

The KNN algorithm is an instance-based learning method that assumes similar samples lie close to one another. Classifiers of this type are also known as lazy learners. KNN builds its classifier simply by storing all the training samples. Lazy learning algorithms therefore spend little computation time in the learning phase and rely on similarity at prediction time. That is, for a sample X to be classified, the algorithm searches for the K nearest neighbors of X and assigns X to the class to which most of those neighbors belong. The performance of the k-nearest neighbor algorithm is influenced by the choice of the value of k.
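The neighbor search and majority vote described above can be sketched in a few lines of Python. This is a minimal illustration, not the Weka IBk implementation: the Hamming distance, the function names, and the toy records are all assumptions made for the example.

```python
from collections import Counter

def hamming(a, b):
    """Count the attribute positions where two records differ (assumed distance)."""
    return sum(x != y for x, y in zip(a, b))

def knn_predict(train, query, k):
    """Classify `query` by majority vote among its k nearest training records.

    `train` is a list of (attributes, label) pairs. There is no training step:
    the stored samples are the model, which is why KNN is called a lazy learner.
    """
    neighbors = sorted(train, key=lambda rec: hamming(rec[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Toy records (hypothetical, not taken from the vote data): three yes/no votes each.
train = [
    (("y", "y", "n"), "democrat"),
    (("y", "n", "n"), "democrat"),
    (("n", "n", "y"), "republican"),
    (("n", "y", "y"), "republican"),
    (("y", "y", "y"), "republican"),
]
query = ("y", "y", "n")
print(knn_predict(train, query, k=1))
print(knn_predict(train, query, k=5))
```

On this toy data the prediction for the same query changes between k = 1 and k = 5, which is the sensitivity to k that the paragraph mentions.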

Naive Bayes Classification

The naive Bayes classification is based on the Bayesian statistical model. It is called naive because it assumes that every attribute contributes to the classification independently and that the attributes are not correlated with each other within a class. This assumption is known as class-conditional independence, and the method is also called simple Bayes. The classifier predicts the probability of class membership, that is, the probability that a particular element carries a given class label. In other words, a naive Bayesian classifier assumes that the presence of one attribute in a given class is unrelated to the presence of any other attribute. The technique is often used when the dimensionality of the inputs is high. Its basis is Bayes' theorem on conditional probability, which is used to compute the posterior probability p(c | x) from the likelihood p(x | c) and the prior p(c).
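As a rough sketch of how the posterior p(c | x) follows from the prior and the per-attribute likelihoods, the Python below counts frequencies on categorical data and applies Bayes' theorem. The function names, the Laplace smoothing, and the toy records are assumptions for illustration and do not reproduce Weka's NaiveBayes class.

```python
from collections import Counter, defaultdict

def train_naive_bayes(records):
    """Count class frequencies and per-attribute value frequencies within each class."""
    class_counts = Counter(label for _, label in records)
    value_counts = defaultdict(Counter)  # (class, attribute index) -> value counts
    for attrs, label in records:
        for i, value in enumerate(attrs):
            value_counts[(label, i)][value] += 1
    return class_counts, value_counts

def posterior(model, attrs, values_per_attr=2):
    """Return p(c | x) for each class c, treating attributes as independent given c."""
    class_counts, value_counts = model
    total = sum(class_counts.values())
    scores = {}
    for c, n_c in class_counts.items():
        score = n_c / total  # prior p(c)
        for i, value in enumerate(attrs):
            # Laplace-smoothed likelihood p(x_i | c); the smoothing is an assumption.
            score *= (value_counts[(c, i)][value] + 1) / (n_c + values_per_attr)
        scores[c] = score
    evidence = sum(scores.values())  # p(x), the normalizing constant
    return {c: s / evidence for c, s in scores.items()}

# Toy records (hypothetical): three yes/no votes and a party label.
records = [
    (("y", "y", "n"), "democrat"),
    (("y", "n", "n"), "democrat"),
    (("n", "n", "y"), "republican"),
    (("n", "y", "y"), "republican"),
    (("y", "y", "y"), "republican"),
]
model = train_naive_bayes(records)
print(posterior(model, ("y", "y", "n")))
```

The product over attributes is where the independence assumption enters: each attribute's likelihood is multiplied in without regard to the others.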

Decision Tree

The decision tree is a prediction model that maps observations about an item to the item's target value. The decision tree algorithm is a data mining induction technique that recursively partitions records using a depth-first or breadth-first approach. A decision tree consists of a root node, internal nodes, and leaf nodes. It resembles a flowchart, in which each internal node specifies a test condition on an attribute, each outcome of the test is represented as a branch, and each leaf node receives a class label. The root node is the topmost node of the tree. Each path from the root to a leaf corresponds to a decision rule. In general, the algorithm uses the depth-first approach in two phases, namely growth and pruning. Tree growth proceeds top-down: the data are recursively partitioned until the elements in each partition share the same class label. Pruning is then used to improve the prediction and generalization of the algorithm by reducing overfitting of the tree (Taruna & Pandey, 2014).
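The growth phase needs a rule for choosing which attribute to test at each node. The text does not name the criterion used, so the sketch below assumes entropy-based information gain, the idea underlying the C4.5 family of algorithms. The function names and toy records are hypothetical.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())

def information_gain(records, attr_index):
    """Entropy reduction obtained by splitting `records` on one attribute."""
    labels = [label for _, label in records]
    partitions = {}
    for attrs, label in records:
        partitions.setdefault(attrs[attr_index], []).append(label)
    remainder = sum(len(p) / len(records) * entropy(p) for p in partitions.values())
    return entropy(labels) - remainder

def best_split(records, n_attrs):
    """Index of the attribute a growing tree would test at this node."""
    return max(range(n_attrs), key=lambda i: information_gain(records, i))

# Toy records (hypothetical): attribute 0 separates the classes, attribute 1 does not.
records = [
    (("y", "n"), "democrat"),
    (("y", "y"), "democrat"),
    (("n", "n"), "republican"),
    (("n", "y"), "republican"),
]
print(best_split(records, n_attrs=2))
```

Applying `best_split` recursively to each partition until the labels are pure gives the top-down growth described above; pruning would then remove branches that do not improve prediction.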

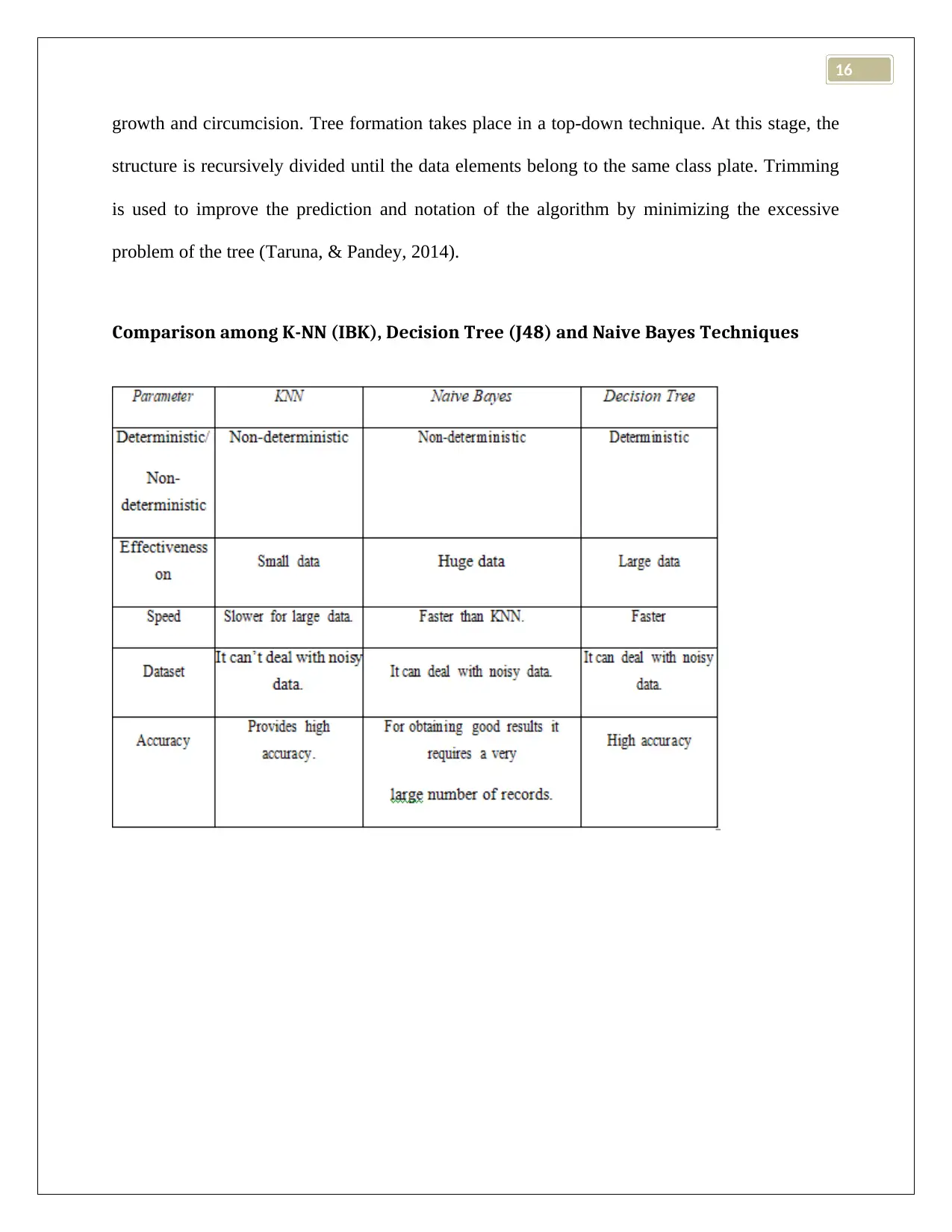

Comparison among K-NN (IBK), Decision Tree (J48) and Naive Bayes Techniques

Instrument for the Comparison

The Waikato Environment for Knowledge Analysis (WEKA) was used as the tool for exploring and analyzing the performance of the three classifiers. Its collection of visualization tools and classification algorithms was sufficient for the purpose of the current investigation (Dan, Lihua, & Zhaoxin, 2013). The Vote.ARFF file was loaded in the Weka Preprocess tab, and the classifiers were applied in the Classify tab.

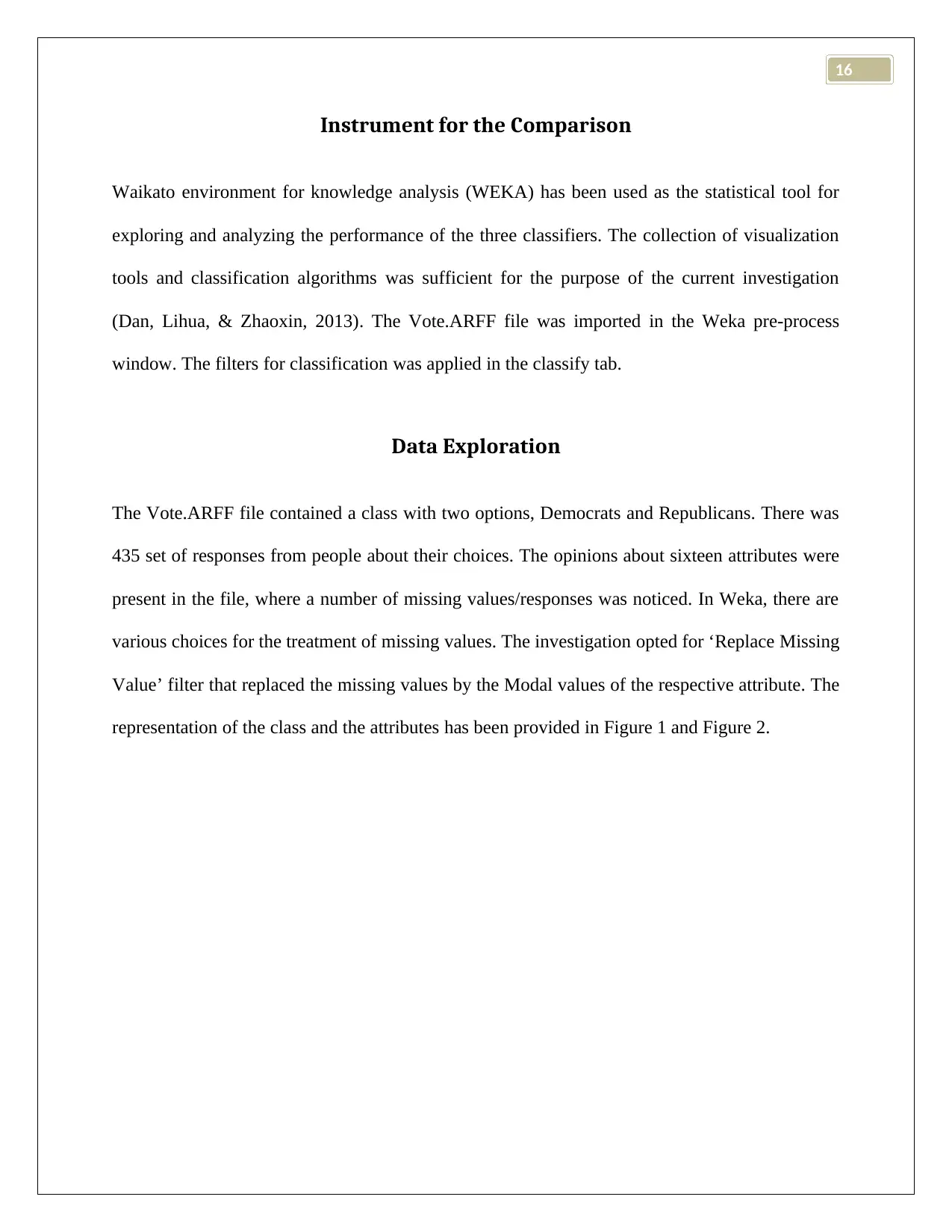

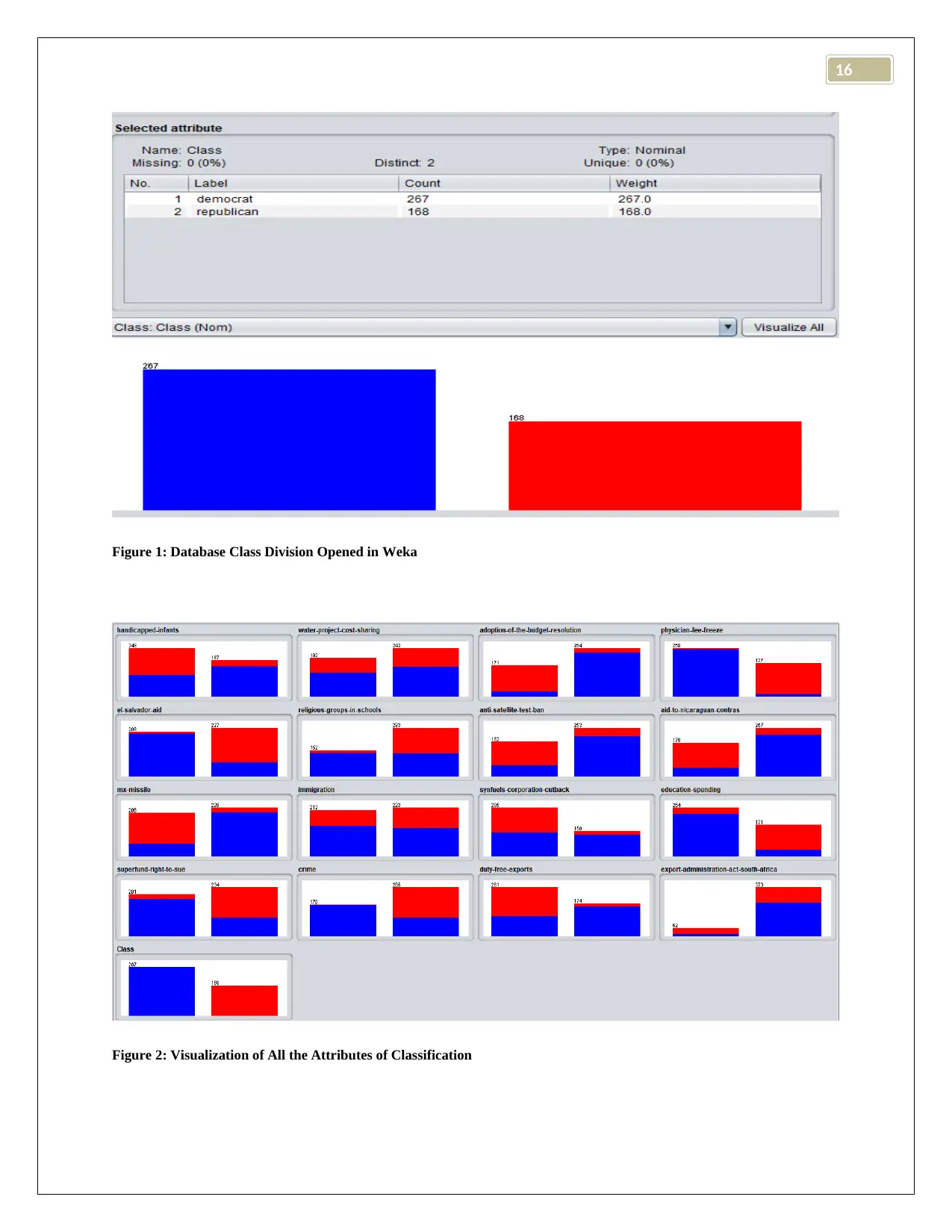

Data Exploration

The Vote.ARFF file contained a class attribute with two values, Democrat and Republican. There were 435 records of responses about their choices. The file held opinions on sixteen attributes, and a number of values were missing. Weka offers several options for treating missing values. This investigation used the ReplaceMissingValues filter, which replaced each missing value with the modal value of the respective attribute. The class and the attributes are shown in Figure 1 and Figure 2.
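Replacing missing values with the column mode can be illustrated as follows. This is a sketch of the idea only, not the Weka filter itself; the function name and sample rows are assumptions, and each column is assumed to contain at least one observed value.

```python
from collections import Counter

MISSING = "?"  # ARFF files mark a missing value with a question mark

def replace_missing_with_mode(rows):
    """Return a copy of `rows` where each missing value is replaced by its column's mode."""
    n_cols = len(rows[0])
    modes = []
    for col in range(n_cols):
        observed = [row[col] for row in rows if row[col] != MISSING]
        modes.append(Counter(observed).most_common(1)[0][0])
    return [
        tuple(modes[col] if value == MISSING else value for col, value in enumerate(row))
        for row in rows
    ]

# Hypothetical rows with two yes/no attributes each.
rows = [("y", "n"), ("y", "?"), ("?", "n"), ("n", "y")]
print(replace_missing_with_mode(rows))
```

Mode imputation keeps every record in the data set, at the cost of slightly inflating the frequency of the most common answer for each attribute.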

Figure 1: Database Class Division Opened in Weka

Figure 2: Visualization of All the Attributes of Classification

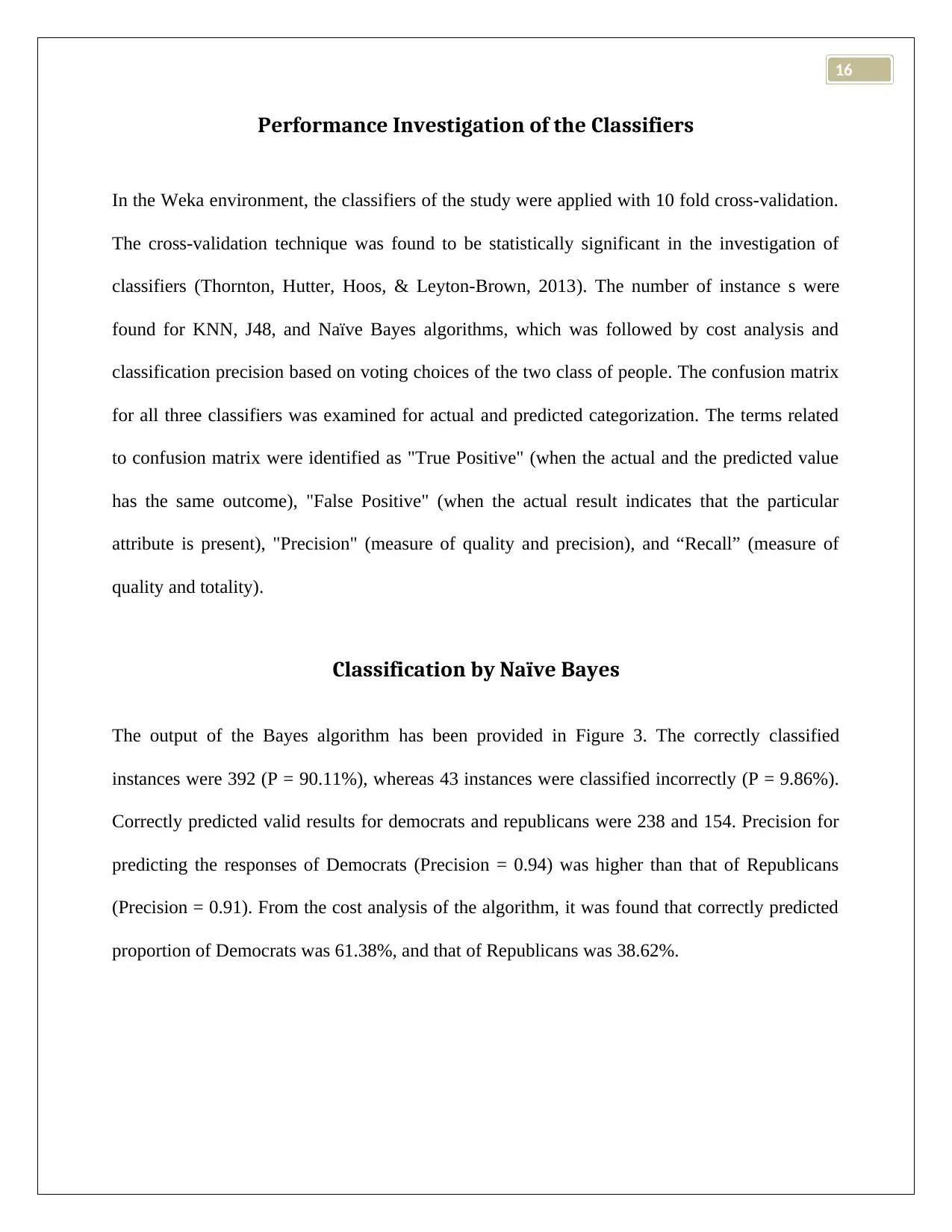

Performance Investigation of the Classifiers

In the Weka environment, the classifiers of the study were applied with 10-fold cross-validation. Cross-validation has been reported as a statistically sound technique for evaluating classifiers (Thornton, Hutter, Hoos, & Leyton-Brown, 2013). The numbers of correctly and incorrectly classified instances were obtained for the KNN, J48, and Naïve Bayes algorithms, followed by cost analysis and classification precision for the two classes of voters. The confusion matrix of each classifier was examined to compare actual and predicted categories. The terms related to the confusion matrix are "True Positive" (an instance of a class that is predicted as that class), "False Positive" (an instance predicted as a class that actually belongs to the other class), "Precision" (the fraction of instances predicted as a class that truly belong to it, a measure of exactness), and "Recall" (the fraction of instances of a class that are predicted correctly, a measure of completeness).
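The relationship between the confusion matrix and these measures can be made concrete with a short calculation. The counts below are hypothetical and are not the study's results; the function name is likewise an assumption.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall for one class from confusion-matrix counts."""
    precision = tp / (tp + fp)  # of the instances predicted as the class, the share that is right
    recall = tp / (tp + fn)     # of the instances in the class, the share that is found
    return precision, recall

# Hypothetical 2x2 confusion matrix keyed by (actual, predicted);
# these counts are illustrative only.
confusion = {
    ("democrat", "democrat"): 90, ("democrat", "republican"): 10,
    ("republican", "democrat"): 5, ("republican", "republican"): 45,
}
tp = confusion[("democrat", "democrat")]    # Democrats predicted as Democrats
fp = confusion[("republican", "democrat")]  # Republicans predicted as Democrats
fn = confusion[("democrat", "republican")]  # Democrats predicted as Republicans
precision, recall = precision_recall(tp, fp, fn)
print(f"precision={precision:.3f} recall={recall:.3f}")
```

Swapping the roles of the two classes gives the precision and recall for Republicans from the same four counts.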

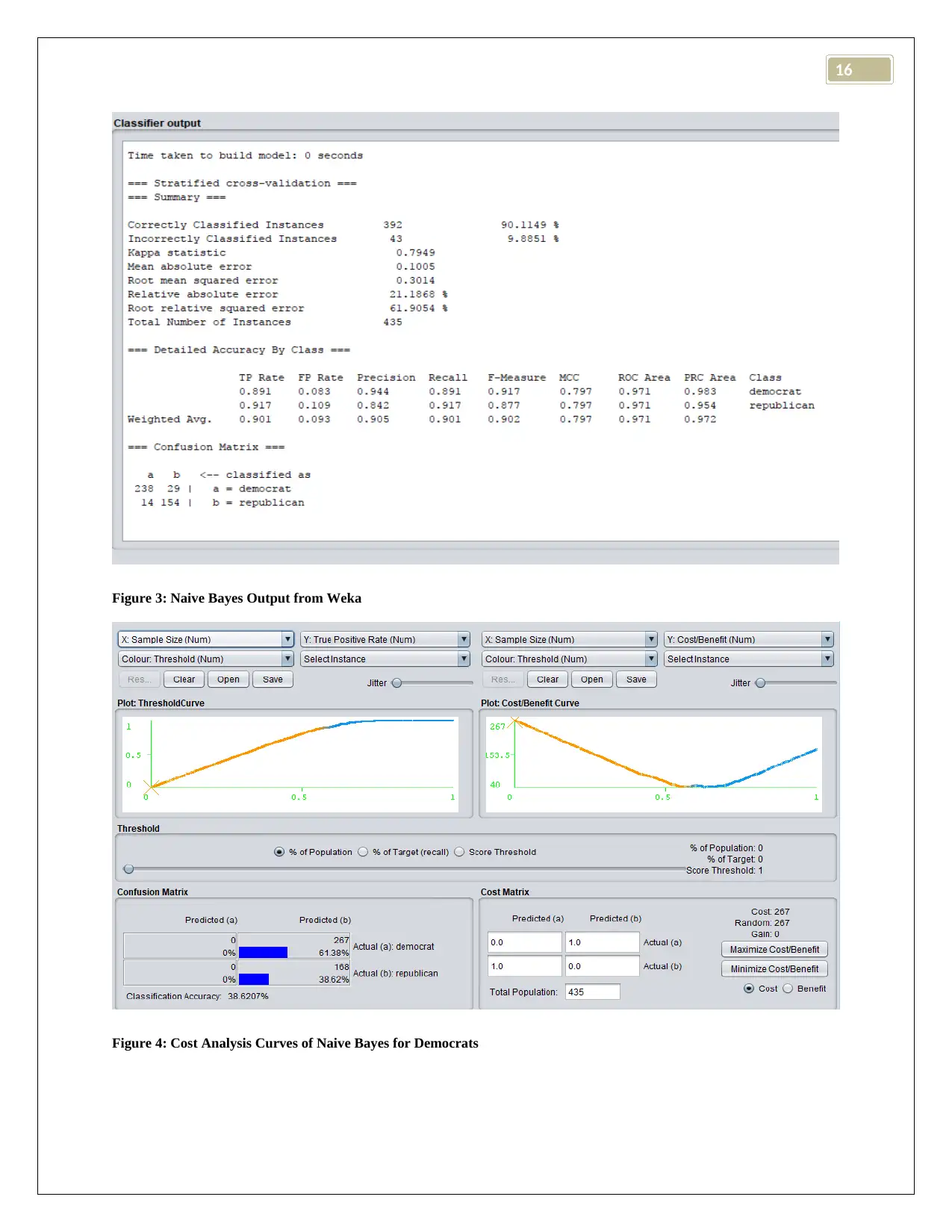

Classification by Naïve Bayes

The output of the naive Bayes algorithm is shown in Figure 3. It classified 392 instances correctly (90.11%) and 43 incorrectly (9.89%). The correctly predicted instances comprised 238 Democrats and 154 Republicans. Precision was higher for Democrats (0.94) than for Republicans (0.91). In the cost analysis, the reported proportions were 61.38% for Democrats and 38.62% for Republicans.

Figure 3: Naive Bayes Output from Weka

Figure 4: Cost Analysis Curves of Naive Bayes for Democrats

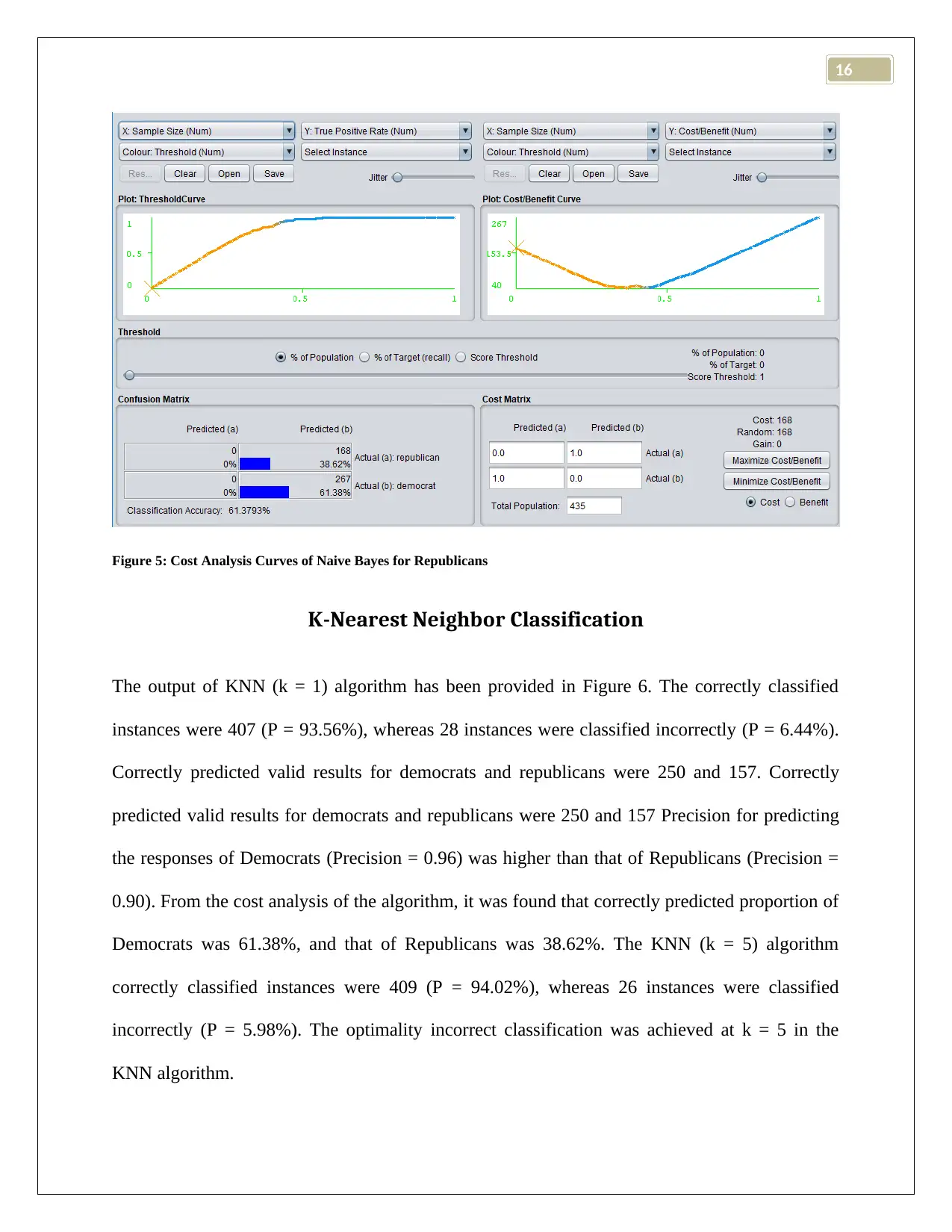

Figure 5: Cost Analysis Curves of Naive Bayes for Republicans

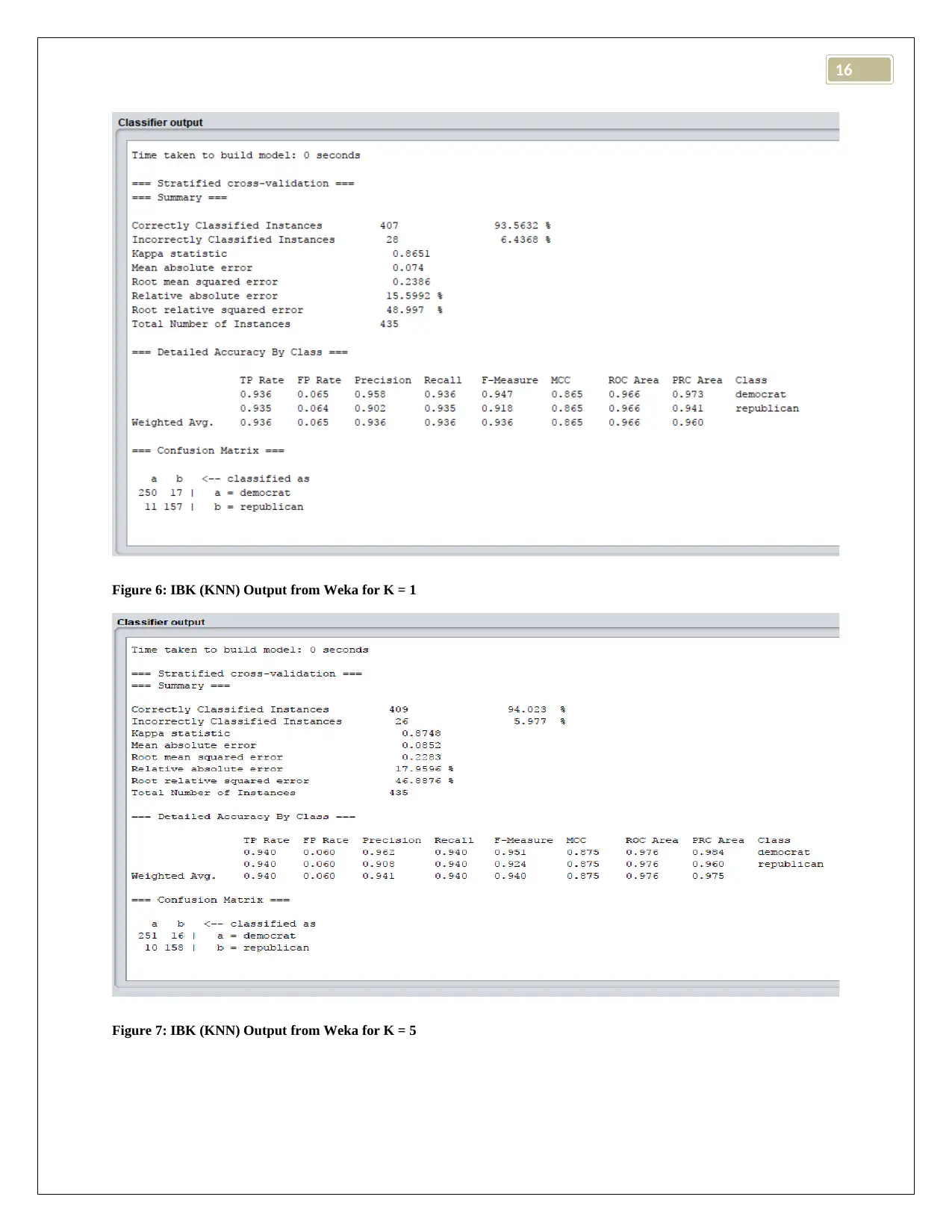

K-Nearest Neighbor Classification

The output of the KNN algorithm with k = 1 is shown in Figure 6. It classified 407 instances correctly (93.56%) and 28 incorrectly (6.44%). The correctly predicted instances comprised 250 Democrats and 157 Republicans. Precision was higher for Democrats (0.96) than for Republicans (0.90). In the cost analysis, the reported proportions were 61.38% for Democrats and 38.62% for Republicans. With k = 5 (Figure 7), KNN classified 409 instances correctly (94.02%) and 26 incorrectly (5.98%). Of the two values tested, k = 5 gave the lower misclassification rate.
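The percentages reported for naive Bayes and KNN follow directly from the counts of correctly classified instances out of 435. The short check below re-derives them; the counts come from the text, while the variable and function names are illustrative.

```python
TOTAL = 435  # instances in the data set, as reported in the text

# Correctly classified instances reported in the text for each run.
reported_correct = {
    "Naive Bayes": 392,
    "KNN (k = 1)": 407,
    "KNN (k = 5)": 409,
}

def accuracy_percent(correct, total=TOTAL):
    """Share of correctly classified instances, as a percentage."""
    return 100 * correct / total

for name, correct in reported_correct.items():
    acc = accuracy_percent(correct)
    print(f"{name}: {acc:.2f}% correct, {100 - acc:.2f}% incorrect")
```

Run side by side, the three figures make the ranking among these runs easy to read: KNN with k = 5 is ahead of KNN with k = 1, which is ahead of naive Bayes.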

Figure 6: IBK (KNN) Output from Weka for K = 1

Figure 7: IBK (KNN) Output from Weka for K = 5
