Bayesian Approach Assignment

Added on 2023/05/29

AI Summary

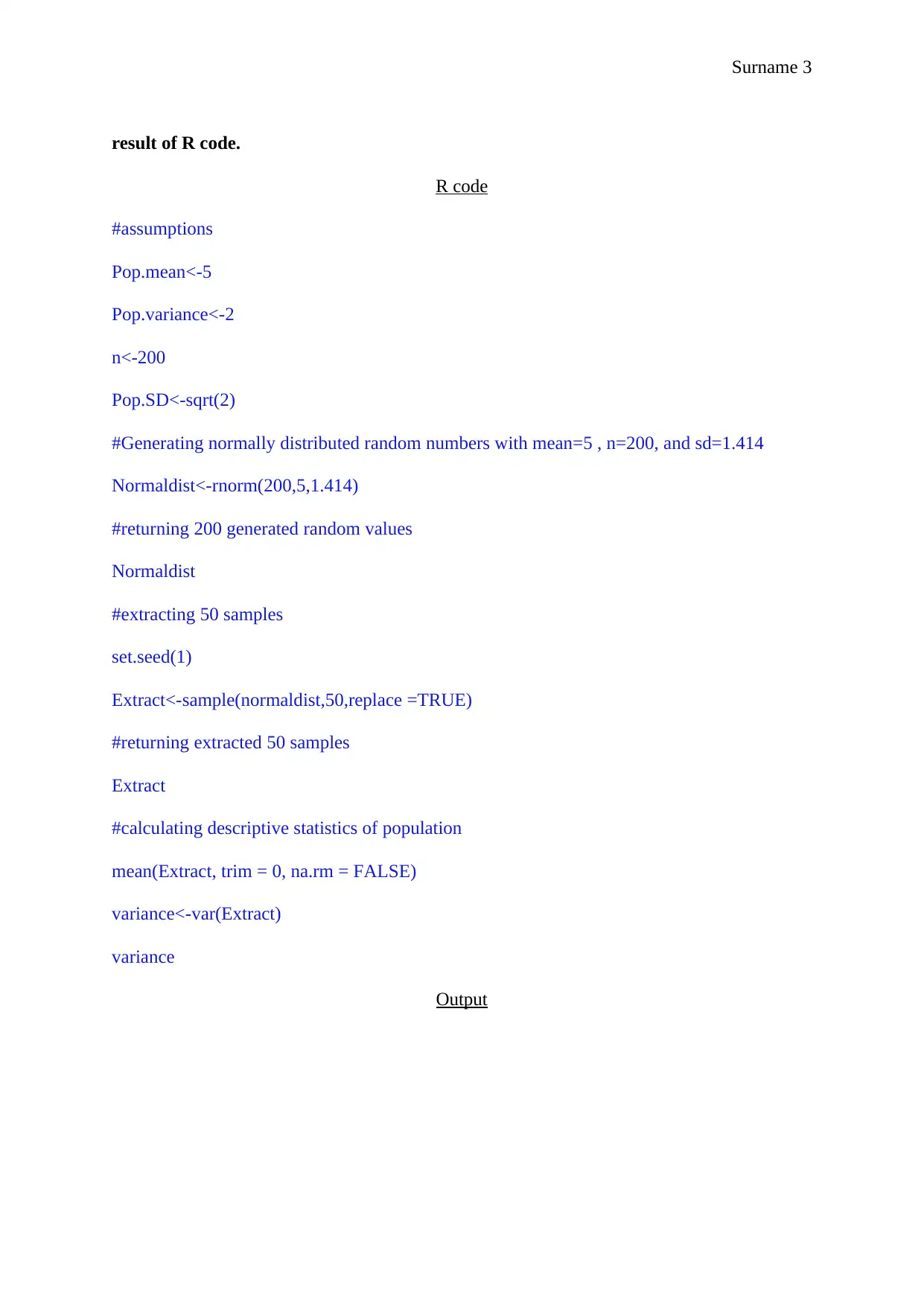

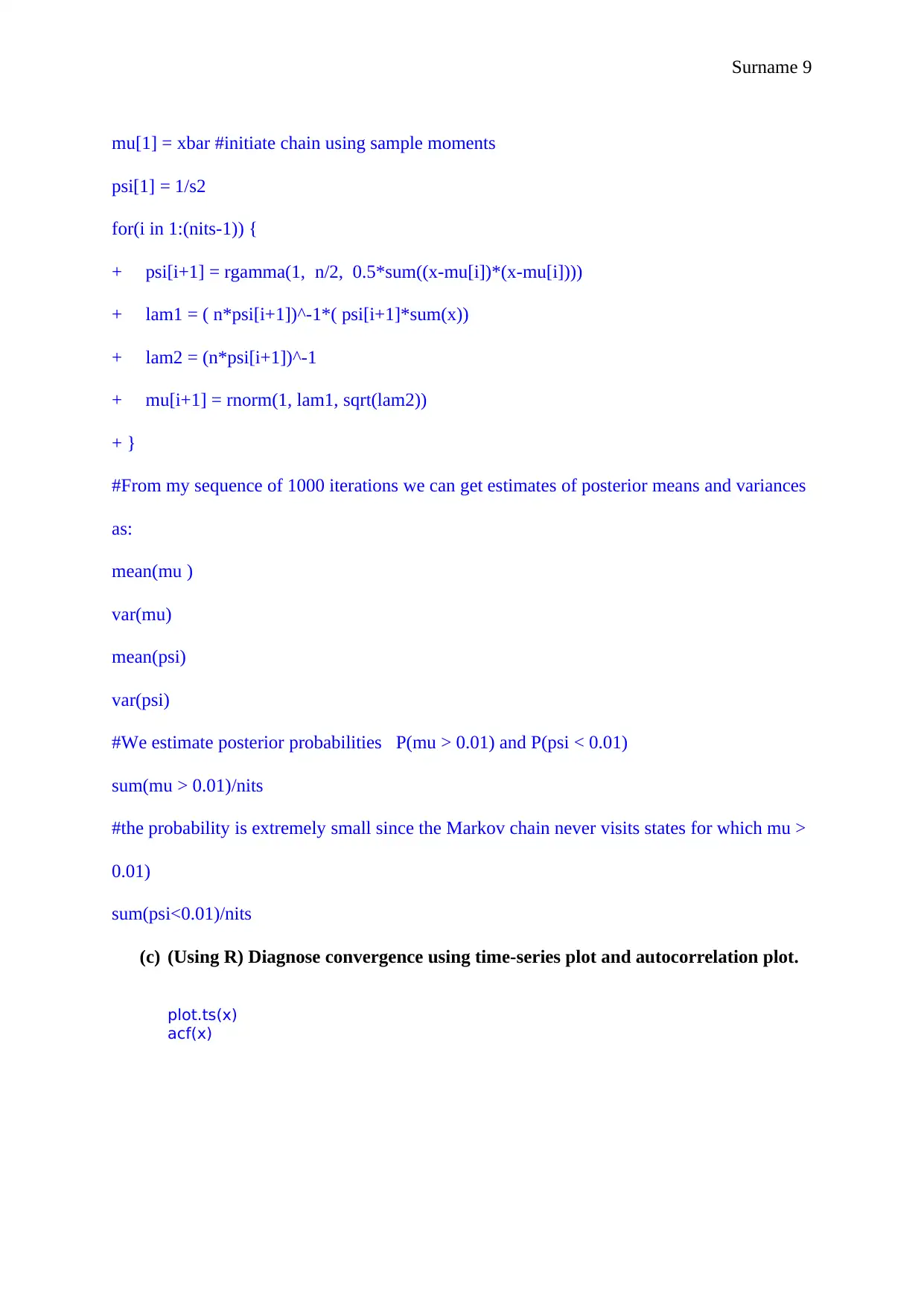

This assignment works through the Bayesian approach with solved examples and R code. It covers estimating a probability with Monte Carlo and importance sampling, finding the conditional posterior distributions of sigma and beta for use in a Gibbs sampler, and diagnosing the sampler's convergence with time-series (trace) plots and autocorrelation plots.
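The assignment's R code is not reproduced on this page. What follows is only a minimal Python sketch of the three techniques listed above, written under illustrative assumptions rather than the assignment's actual models.

- **Probability.** The target is P(Z > 3) for Z ~ N(0, 1), and the importance-sampling proposal is N(3, 1).
- **Regression and priors.** The model is y_i = beta * x_i + e_i with e_i ~ N(0, sigma^2). The priors are flat on beta and p(sigma^2) proportional to 1 / sigma^2. These give the conditionals beta | sigma^2, y ~ N(Sxy / Sxx, sigma^2 / Sxx) and sigma^2 | beta, y ~ Inv-Gamma(n / 2, SSR / 2), and the Gibbs sampler alternates between them.
- **Convergence.** Convergence is checked only numerically, through the sample autocorrelation. No time-series plot is drawn.

All function names and the simulated data are hypothetical.

```python
import math
import random


def tail_prob_mc(n, threshold=3.0, seed=1):
    """Plain Monte Carlo estimate of P(Z > threshold) for Z ~ N(0, 1)."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n) if rng.gauss(0.0, 1.0) > threshold)
    return hits / n


def tail_prob_is(n, threshold=3.0, seed=1):
    """Importance sampling estimate of P(Z > threshold), proposal N(threshold, 1)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.gauss(threshold, 1.0)
        if x > threshold:
            # weight phi(x) / phi(x - threshold) simplifies to exp(-threshold*x + threshold^2/2)
            total += math.exp(-threshold * x + threshold ** 2 / 2)
    return total / n


def gibbs_regression(x, y, n_iter=5000, seed=2):
    """Gibbs sampler for y_i = beta * x_i + e_i, e_i ~ N(0, sigma^2).

    Assumed priors (illustrative): flat on beta, p(sigma^2) proportional to 1 / sigma^2.
    """
    rng = random.Random(seed)
    n = len(x)
    sxx = sum(xi * xi for xi in x)
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    beta_hat = sxy / sxx
    sigma2 = 1.0  # arbitrary starting value
    betas, sigma2s = [], []
    for _ in range(n_iter):
        # beta | sigma^2, y ~ N(beta_hat, sigma^2 / sxx)
        beta = rng.gauss(beta_hat, math.sqrt(sigma2 / sxx))
        # sigma^2 | beta, y ~ Inv-Gamma(n/2, SSR/2): draw 1 / Gamma(shape=n/2, scale=2/SSR)
        ssr = sum((yi - beta * xi) ** 2 for xi, yi in zip(x, y))
        sigma2 = 1.0 / rng.gammavariate(n / 2, 2.0 / ssr)
        betas.append(beta)
        sigma2s.append(sigma2)
    return betas, sigma2s


def autocorr(chain, lag):
    """Sample autocorrelation of an MCMC chain at the given lag."""
    m = sum(chain) / len(chain)
    var = sum((c - m) ** 2 for c in chain)
    cov = sum((chain[i] - m) * (chain[i + lag] - m) for i in range(len(chain) - lag))
    return cov / var


if __name__ == "__main__":
    # exact P(Z > 3) is about 0.00135
    print(tail_prob_mc(100_000), tail_prob_is(100_000))
    # hypothetical data with true beta = 2 and sigma = 1.5
    data_rng = random.Random(0)
    xs = [data_rng.uniform(0, 10) for _ in range(50)]
    ys = [2.0 * xi + data_rng.gauss(0, 1.5) for xi in xs]
    betas, sigma2s = gibbs_regression(xs, ys)
    kept = betas[1000:]  # discard the first 1000 draws as burn-in
    print(sum(kept) / len(kept), autocorr(kept, 1))
```

Importance sampling places every proposal draw near the tail, so it estimates this small probability with far lower variance than plain Monte Carlo, where only about 0.135% of draws land past 3. A lag-1 autocorrelation near 0 for the retained beta draws suggests the chain mixes well. Values close to 1 would call for a longer run or thinning.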
